Abstract

Background

Accurate accelerometer-based methods are required for assessment of 24-h physical behavior in young children. We aimed to summarize evidence on measurement properties of accelerometer-based methods for assessing 24-h physical behavior in young children.

Methods

We searched PubMed (MEDLINE) up to June 2021 for studies evaluating reliability or validity of accelerometer-based methods for assessing physical activity (PA), sedentary behavior (SB), or sleep in 0–5-year-olds. Studies using a subjective comparison measure or an accelerometer-based device that did not directly output time series data were excluded. We developed a Checklist for Assessing the Methodological Quality of studies using Accelerometer-based Methods (CAMQAM) inspired by COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN).

Results

Sixty-two studies were included, examining conventional cut-point-based methods or multi-parameter methods. For infants (0–12 months), several multi-parameter methods proved valid for classifying SB and PA. From three months of age, methods were valid for identifying sleep. In toddlers (1–3 years), cut-points appeared valid for distinguishing SB and light PA (LPA) from moderate-to-vigorous PA (MVPA). One multi-parameter method distinguished toddler-specific SB. For sleep, no studies were found in toddlers. In preschoolers (3–5 years), valid hip and wrist cut-points for assessing SB, LPA, and MVPA, and wrist cut-points for sleep, were identified. Several multi-parameter methods proved valid for identifying SB, LPA, MVPA, and sleep.

Despite promising results of multi-parameter methods, few models were open-source. While most studies used a single device or axis to measure physical behavior, more promising results were found when combining data derived from different sensor placements or multiple axes.

Conclusions

Up to age three, valid cut-points to assess 24-h physical behavior were lacking, while multi-parameter methods proved valid for distinguishing some waking behaviors. For preschoolers, valid cut-points and algorithms were identified for all physical behaviors. Overall, we recommend more high-quality studies evaluating 24-h accelerometer data from multiple sensor placements and axes for physical behavior assessment. Standardized protocols that include well-defined physical behaviors in different settings representative of children’s developmental stage are required. Using our CAMQAM checklist may further improve methodological study quality.

PROSPERO Registration number

CRD42020184751.

Introduction

Accurate assessment of 24-h physical behavior in young children is crucial as it provides the basis for examining the health benefits of these behaviors and thereby evidence for establishing 24-h movement guidelines. Recent studies indicated the importance of an integrated approach to all 24-h physical behaviors for health, encompassing sleep, sedentary behavior (SB), and physical activity (PA) [1,2,3,4,5]. These behaviors are distributed along an intensity continuum ranging from low energy expenditure, such as sleep, to vigorous PA requiring high energy expenditure [6, 7].

Currently, a wide variety of direct measurement instruments are used to assess physical behaviors of children, such as doubly labelled water, (in)direct calorimetry, polysomnography, direct (video) observation, and accelerometry [8]. Polysomnography is considered a “gold standard” for sleep; however, it can only be applied in a laboratory setting. Doubly labelled water is considered a “gold standard” for total energy expenditure; however, it cannot distinguish frequency, type, and intensity of specific physical behaviors [9, 10]. Direct calorimetry accurately measures metabolic rate in confinement, and indirect calorimetry allows for this assessment in free-living situations; however, both methods come with relatively high costs and likewise cannot distinguish frequency, type, and intensity of specific physical behaviors [11]. While direct (video) observation is considered a suitable comparator measure for assessing different types of physical behaviors, it is less suitable for assessing activity intensity because this can only be derived by assigning a metabolic equivalent to represent energy cost, which is unknown for the youngest age groups (i.e., infants and toddlers) [6, 7]. In addition, direct observation is very time consuming and requires trained observers scoring a specified protocol [9]. Given these limitations, these methods might not be feasible for measuring young children’s 24-h physical behaviors in free-living situations. Accelerometers can capture data on body movement, or lack thereof, continuously over extended periods of time, and are therefore widely considered the most promising method for physical behavior assessment.

Current reviews on reliability and validity of accelerometer-based methods for measuring physical behaviors in young children were limited to evaluation of only one measurement property [12] or one physical behavior [13]. Lynch and colleagues (2019) reviewed studies that evaluated criterion validity of accelerometers against indirect calorimetry, concluding that accelerometers can accurately assess SB and PA in children 3 to 18 years old [12]. De Vries and colleagues (2006) reviewed criterion and convergent validity, and test–retest and inter-device reliability, of accelerometers. They found that accelerometers provide reasonable estimates for assessing PA; however, no evidence on reliability was found in 2- to 4-year-old children [13]. Moreover, evidence on these measurement properties for infants (0–12 months) and toddlers (1–3 years) is lacking [14, 15]. Bruijns and colleagues (2020) reviewed estimates of PA and SB derived from accelerometer data in infants and toddlers and found that PA estimates were inconclusive and largely heterogeneous [14]. Additionally, no studies in children under three years old were found in a review on the evidence for methodological accelerometer decisions (e.g., epoch length, wear location, data analysis approach) for assessing PA in children aged 0–5 years [15].

While accelerometer-based methods provide reasonable estimates of time spent in SB, PA, and sleep in school-aged children [12, 13, 16,17,18,19], this cannot be generalized to young children due to major differences in types and intensity of their physical behaviors [20]. Physical activity types differ between children depending on their developmental stage, e.g., daytime naps, crawling, and being carried in the youngest age groups [15, 21]. Moreover, the intensity of activities might differ between children depending on the efficiency of motor skills. For instance, toddlers start walking around one year of age and then develop locomotor (e.g., running, jumping, hopping), stability (e.g., balancing, climbing), and object-control skills (e.g., kicking, catching, rolling) [22]. Preschoolers (3–5 years) further develop these skills and often participate in modified sports [23]. These differences in physical behaviors and motor development require age-group-specific studies on the validity and reliability of measurement instruments and analysis techniques, adapted to the child’s developmental stage.

For assessment of 24-h physical behavior in young children a complete overview of measurement properties of accelerometer-based methods is unavailable and there is no consensus about the optimal measurement protocol (e.g., epoch length, wear location) and accelerometer processing (e.g., cut-points, algorithms, machine learning methods) decisions for the use of accelerometer-based methods in young children [15]. To be able to select the most appropriate method for the child’s developmental stage, an overview of current accelerometer processing and study designs, and measurement properties of the available accelerometer-based methods is warranted. Therefore, we aimed to comprehensively review all studies examining the measurement properties test–retest, inter-device reliability, criterion- and convergent validity of accelerometer-based methods assessing 24-h physical behavior in young children aged 0–5 years, including an evaluation of the quality of evidence.

Methods

We registered this review on PROSPERO (international prospective register of ongoing systematic reviews; registration number: CRD42020184751) and followed the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines [24].

Search strategy

We systematically searched the electronic database PubMed (MEDLINE) up until 26th June 2021. The search strategy focused on terms related to young children (e.g., infant, toddler, preschooler), accelerometer-based methods (e.g., accelerometry/methods, actigraphy), and measurement properties (e.g., validity, reliability). These terms were used in AND-combination with terms related to physical behavior: SB (e.g., inactive behavior, stationary behavior, sitting), PA (e.g., motor activity, tummy time, cycling), OR sleep (e.g., nap, bedtime, night rest). Articles related to animals, a variety of disorders (e.g., autism, attention deficit disorder), and diseases were excluded using the NOT-combination. Medical Subject Heading (MeSH), title and abstract (TIAB), and free-text search terms were used, and a variety of publication types were excluded (e.g., book sections, thesis). The full search strategy can be found in Additional File 1.
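The Boolean structure described above can be sketched as follows. This is only an illustration of how the AND-, OR-, and NOT-combinations fit together; the term lists are limited to a few of the examples named in the text, the helper `any_of` is hypothetical, and the resulting string is not the actual search strategy (which is given in Additional File 1).

```python
def any_of(terms):
    """Join the terms of one concept group with OR (hypothetical helper)."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"

# Example terms taken from the text; the real strategy contains many more.
population = ["infant", "toddler", "preschooler"]
method = ["accelerometry", "actigraphy"]
properties = ["validity", "reliability"]
behavior = ["sedentary behavior", "motor activity", "sleep"]
excluded = ["animals", "autism"]

# Concept groups are AND-combined; unwanted topics are removed with NOT.
query = " AND ".join(any_of(g) for g in [population, method, properties, behavior])
query += " NOT " + any_of(excluded)

print(query)
```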

Eligibility criteria

Studies were eligible for inclusion when the study: 1) used an accelerometer-based method to monitor at least one physical behavior: SB, PA, or sleep; 2) evaluated at least one measurement property of an accelerometer-based method: test–retest or inter-device reliability, criterion- or convergent validity; 3) included a (sub)sample of apparently healthy children, born term (> 37 weeks), with a mean age < 5 years or a wider range with the results for 0–5-year-olds reported separately; 4) was published in English in a peer-reviewed journal; and 5) full-text was available.

Studies were excluded when the study: 1) used a diary, parent- or proxy-report, or relied on parents for direct observation as comparison measure; 2) evaluated measurement properties in a clinical population, e.g., focused on only children with overweight or obesity; or 3) relied on accelerometer-based devices that do not directly output acceleration time series data or magnitude of acceleration, e.g., Fitbit.

Selection procedures

We imported articles into reference manager software (EndNote X 9.1), and subsequently removed duplicate articles. Two researchers (AL and TA) independently screened titles and abstracts for eligibility using Rayyan and subsequently screened full-text articles. For four publications, the mean age of the study population was missing. To resolve this missing information, we contacted the authors. In addition, the reference lists of all relevant full-text articles were screened for possible inclusion of additional studies. A third researcher (MC) was consulted to resolve discrepancies.

Data extraction

For all eligible studies, two researchers (AL and JA) extracted data using a structured form. Disagreements were resolved through discussion. Extracted data included the evaluated measurement properties, study and target population, accelerometer specifications (i.e., device and model, placement location and site, epoch length, axis), accelerometer data analysis approach used, outcome(s) and setting, comparison method (in case of validity), time interval (in case of test–retest reliability), and results of the evaluated measurement properties. The variety of accelerometer-based methods was described using code combinations of the following four elements: accelerometer brand, analysis approach, axis, and epoch length (see Additional File 3).

Methodological quality assessment

Two researchers (AL and either TA or MC) rated the methodological quality of the included studies independently using a newly developed checklist to assess risk of bias. Risk of bias refers to whether the results for evaluating a measurement property are trustworthy based on the methodological study quality. In case of disagreement, all three researchers discussed the rating until consensus was reached.

Checklist development

We developed a new Checklist for Assessing the Methodological Quality of studies using Accelerometer-based Methods (CAMQAM), as there was no standardized checklist available. The CAMQAM was inspired by quality assessment of patient reported outcomes, the standardized COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) risk of bias checklist [25,26,27], and by a previous review by Terwee and colleagues [28]. To fit accelerometer-based methods, we used the following relevant parts and made minimal adjustments (e.g., wording): ‘Box 6 Reliability’ for test–retest and inter-device reliability, ‘Box 8 Criterion Validity’ for criterion validity, and ‘Box 9 Hypothesis testing for construct validity’ part ‘9a Comparison with other outcome measurement instruments’ for convergent validity. Moreover, we developed two boxes with additional items to rate methodological quality of studies assessing the criterion or convergent validity of a specific accelerometer data analysis approach to categorize physical behavior: 1) conventional cut-points based method using a single value, or 2) multi-parameter method using more than one parameter, e.g., sleep algorithm, machine learning method. Two of the authors (TA and MC) independently rated the most diverse included studies for each measurement property. Thereafter, we added examples or explanations to clarify the items and ensure studies were scored using the correct box. The CAMQAM was used as a modular tool; only the boxes for the measurement properties evaluated in the study were completed.

For each examined measurement property, the study design requirements were rated as either very good, adequate, doubtful, or inadequate quality [25]. To rate the final methodological study quality, a worst score method was adopted, i.e., using the lowest rating of any item in a box. Additional File 2 presents the complete checklist and scoring manual.
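As a minimal sketch of the worst score method, assuming each item in a box has already been given one of the four ratings named above, the box rating is simply the lowest item rating. The function name and the example item ratings are hypothetical and only serve as illustration.

```python
# Ordered from best to worst, following the four rating levels in the text.
RATING_ORDER = ["very good", "adequate", "doubtful", "inadequate"]

def worst_score(item_ratings):
    """Return the lowest (worst) rating among the items of one checklist box."""
    if not item_ratings:
        raise ValueError("a box needs at least one rated item")
    # A higher index in RATING_ORDER means a worse rating.
    return max(item_ratings, key=RATING_ORDER.index)

# Hypothetical item ratings for one box of one study.
box_items = ["very good", "adequate", "very good", "doubtful"]
print(worst_score(box_items))  # doubtful
```

A single doubtful item therefore determines the rating of the whole box, regardless of how many items were rated very good.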

In the appraisal of methodological study quality, the following study aspects were considered: study design (e.g., sample, epoch length, measurement duration, comparison instrument and their measurement properties in the study population) and the performed statistical analysis to evaluate the measurement property of the accelerometer-based method (see Table 1 in Additional File 2 for a summary of the definitions).

Rating study results

The result of each study on a measurement property was rated against the criteria for good measurement properties proposed in the COSMIN guideline, i.e., sufficient (+), insufficient (-), inconsistent (±) or intermediate (?) [26]. The subsections below indicate, for each measurement property, which outcomes were considered sufficient (+). Outcomes were considered insufficient (-) when these criteria were not met, and intermediate (?) when not all necessary information was reported. Due to the great variety of accelerometer-based methods adopted in the studies, quantitative pooling or summarizing of the results was not feasible.

Reliability

Reliability results were considered acceptable under the following conditions: 1) Intraclass Correlation Coefficients (ICC) or Kappa values (κ) were ≥ 0.70 [28]; or 2) Pearson (rp), Spearman rank (rsp) or unspecified (r) correlation coefficients were ≥ 0.80 [25]. Some studies reported multiple correlations per accelerometer-based method for reliability, e.g., separate correlations for different physical intensities. Therefore, we applied a rating per physical behavior (i.e., incorporating correlations separately for PA, SB, and/or sleep), and an overall rating (i.e., incorporating all correlations) to obtain a final reliability rating for each study. A study received a sufficient rating when ≥ 75% of reliability outcomes were acceptable, an inconsistent rating when ≥ 50% and < 75% of reliability outcomes were acceptable, and an insufficient rating when < 50% of reliability outcomes were acceptable.
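The rating rule above can be sketched as follows, assuming each reliability outcome is recorded as a (statistic, value) pair. The function names, the pair format, and the example values are illustrative assumptions; only the thresholds and percentage bands come from the text.

```python
# Thresholds as stated in the text: ICC or kappa >= 0.70, correlations >= 0.80.
RELIABILITY_THRESHOLDS = {"icc": 0.70, "kappa": 0.70, "correlation": 0.80}

def is_acceptable(statistic, value):
    """Check one outcome against the threshold for its statistic."""
    return value >= RELIABILITY_THRESHOLDS[statistic]

def rate_study(outcomes):
    """Rate a list of (statistic, value) pairs as '+', '±', or '-'."""
    if not outcomes:
        raise ValueError("no outcomes to rate")
    n_ok = sum(is_acceptable(s, v) for s, v in outcomes)
    n = len(outcomes)
    if 4 * n_ok >= 3 * n:  # at least 75% acceptable: sufficient
        return "+"
    if 2 * n_ok >= n:      # at least 50% but below 75%: inconsistent
        return "±"
    return "-"             # below 50%: insufficient

# Hypothetical outcomes: three of four meet their threshold.
outcomes = [("icc", 0.82), ("icc", 0.74), ("correlation", 0.78), ("kappa", 0.71)]
print(rate_study(outcomes))  # +
```

The same function can be applied once per physical behavior and once to all outcomes together to obtain the per-behavior and overall ratings.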

Validity

Criterion validity was considered acceptable when: 1) correlations or κ with the ‘gold standard’ were ≥ 0.70 (Table 1; e.g., the comparison measure was polysomnography for accelerometer-based methods aiming to assess sleep, or indirect calorimetry to score energy expenditure); or 2) diagnostic test results (i.e., area under the receiver operating characteristic curve, accuracy, sensitivity, or specificity) were ≥ 0.80.

To rate the results of studies that evaluated convergent validity, we formulated criteria for acceptable results regarding the confidence in the comparison instrument to accurately measure the relevant physical behavior (i.e., level of evidence) (Table 1). We first assessed the level of evidence using these criteria, where level 1 indicated strong evidence, level 2 indicated moderate evidence, and level 3 indicated weak evidence. Thereafter, subdivided by the level of evidence, we rated study results as acceptable when: 1a) correlations (i.e., rp, rsp, r) with the comparison measure were ≥ 0.70 (level 1); 1b) correlations with the comparison measure were ≥ 0.60 (level 2 or level 3) [13]; 1c) ICC, Concordance Correlation Coefficients (CCC), or κ with the comparison measure were ≥ 0.70; or 2) diagnostic test results were ≥ 0.80. As most studies reported multiple results, we applied a rating per physical behavior (i.e., incorporating results separately for SB, PA, and/or sleep), and an overall rating for each study. A study received a sufficient rating when ≥ 75% of the validity outcomes were rated as acceptable, an inconsistent rating when ≥ 50% and < 75% of validity outcomes were rated as acceptable, and an insufficient rating when < 50% of validity outcomes were acceptable.
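The acceptability criteria for convergent validity depend on both the type of statistic and the level of evidence for the comparison measure. A minimal sketch of that lookup, with hypothetical function and argument names, could look like this:

```python
def convergent_result_acceptable(statistic, value, evidence_level=None):
    """Apply the convergent validity criteria from the text to one result.

    statistic: 'correlation', 'icc', 'ccc', 'kappa', or 'diagnostic'
    evidence_level: 1, 2, or 3; only needed for correlations
    """
    if statistic == "correlation":
        if evidence_level not in (1, 2, 3):
            raise ValueError("correlations need an evidence level of 1, 2, or 3")
        # Criterion 1a (level 1) versus criterion 1b (level 2 or 3).
        threshold = 0.70 if evidence_level == 1 else 0.60
    elif statistic in ("icc", "ccc", "kappa"):
        threshold = 0.70  # criterion 1c
    elif statistic == "diagnostic":
        threshold = 0.80  # criterion 2: AUC, accuracy, sensitivity, specificity
    else:
        raise ValueError(f"unknown statistic: {statistic}")
    return value >= threshold

# The same correlation is judged differently depending on the evidence level.
print(convergent_result_acceptable("correlation", 0.65, evidence_level=1))  # False
print(convergent_result_acceptable("correlation", 0.65, evidence_level=2))  # True
```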

Quality of evidence grading

Quality of evidence was graded using the Grading of Recommendations Assessment, Development and Evaluation (GRADE) approach as proposed in the COSMIN guideline, i.e., high, moderate, low, or very low [26], to indicate trustworthiness of the measurement property results. To derive the grading, the methodological study quality (i.e., risk of bias) was weighted with relevant risk factors: 1) inconsistency, i.e., unexplained inconsistency of results across studies, 2) imprecision, i.e., total sample size of the available studies, and 3) indirectness, i.e., evidence from different populations than the population of interest in this review [26]. The evidence grading was subsequently downgraded by one, two, or three levels for each risk factor, to moderate, low, or very low quality of evidence. The quality of evidence grading was performed for each measurement property and each accelerometer-based method separately.
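The downgrading logic can be illustrated with a short sketch. It assumes that grading starts at high and that the downgrades for each factor are simply summed, with very low as the floor; the dictionary keys, the function name, and the example downgrades are hypothetical and do not reproduce any specific grading decision from this review.

```python
GRADES = ["high", "moderate", "low", "very low"]

def grade_evidence(downgrades, start="high"):
    """Lower the starting grade by the summed downgrade levels per factor."""
    for factor, levels in downgrades.items():
        if levels not in (0, 1, 2, 3):
            raise ValueError(f"{factor}: downgrade must be 0, 1, 2, or 3 levels")
    position = GRADES.index(start) + sum(downgrades.values())
    # The grade cannot drop below "very low".
    return GRADES[min(position, len(GRADES) - 1)]

# Hypothetical example: no concerns except a small total sample size.
example = {"risk_of_bias": 0, "inconsistency": 0, "imprecision": 2, "indirectness": 0}
print(grade_evidence(example))  # low
```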

Results

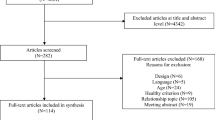

The systematic literature search yielded a total of 1,673 unique articles. After title and abstract screening, 82 full-texts were screened. Additionally, 16 articles were found through cross-reference searches. Therefore, a total of 98 full-text articles were assessed for eligibility, of which 62 were included (see Fig. 1 for the full selection process). Thirteen of the included studies evaluated the measurement properties of accelerometer-based methods for assessing SB, PA, and/or sleep in infants [29,30,31,32,33,34,35,36,37,38,39,40,41], nine in toddlers [42,43,44,45,46,47,48,49,50], and forty in preschoolers [51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90].

The included studies evaluated measurement properties for time series data or magnitude of acceleration directly [42, 45, 51,52,53,54,55,56,57,58,59,60, 62, 63, 70] or applied one of the following data analysis approaches to categorize physical behavior: a conventional cut-points based method using a single value [29,30,31,32,33,34, 42,43,44,45,46,47, 51,52,53, 56, 61, 64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81, 89], or a multi-parameter method (e.g., algorithm, machine learning) [30, 35,36,37,38,39,40,41, 48,49,50, 55, 66, 75, 82,83,84,85,86,87,88,89,90].

Reliability

Table 2 summarizes the results for reliability, of which two studies examined test–retest reliability [29, 65], and one study examined inter-device reliability [42]. Test–retest reliability of an accelerometer-based method using a cut-points based method was evaluated by measuring SB and PA in preschool aged children [65]. Total PA, SB, light PA (LPA) and moderate-to-vigorous PA (MVPA) were considered reliable across all wear time criteria, except for absolute values of SB. For absolute values of SB, results were sufficient if data was collected for ≥ 5 days/week of ≥ 10 h. Despite adequate methodological study quality, these results received low quality of evidence as they were retrieved in a sample of 91 preschoolers. Inter-device reliability for epoch level activity counts (60 s) was rated as sufficient for activity counts in toddlers wearing two Actical devices side-by-side on the non-dominant ankle [42]. Despite adequate methodological study quality, these results received low quality of evidence as they were retrieved in a limited sample of 24 toddlers.

Validity

The following subsections present the results for validity by age group. Notably, most studies used the vertical axis (VA). In studies among infants the accelerometer was predominantly worn on the ankle, while for studies among toddlers or preschoolers the devices were mainly placed on the hip. Unless otherwise specified, we report study results based on this majority placement and axis.

Infants

Table 3 summarizes the results for validity, of which four studies examined criterion validity [30,31,32, 35], and nine studies examined convergent validity [29, 33, 34, 36,37,38,39,40,41] in infants.

No studies assessed validity of cut-points for SB, LPA and MVPA in infants, while for sleep no cut-points (i.e., wake thresholds) were evaluated as valid [30,31,32,33]. Quality of evidence was low for studies evaluating criterion validity, as results were retrieved in limited samples of 22 to 31 infants, despite very good methodological quality [30,31,32]. The results of the study that evaluated convergent validity were insufficient, despite moderate quality of evidence [33].

In contrast, multi-parameter methods were more suitable for assessing the physical behavior of infants than a conventional cut-points based method. Infant leg movements could sufficiently be distinguished from non-infant produced movement using an algorithm describing velocity and acceleration magnitude for this activity [41]. However, these results received very low quality of evidence as methodological study quality was doubtful and the results were retrieved in a sample of only 12 infants.

For posture and movement classification, using arm and leg data, validity of convolutional neural networks was rated as sufficient [39]. The performance of convolutional neural networks and support vector machines was comparable for classification of infant-specific postures, e.g., tummy time and crawl posture. However, for movement in prone positions (e.g., crawl, turn and pivot) the performance of the convolutional neural networks was consistently 5 to 10% higher than the performance of support vector machines, resulting in a sufficient study result rating for the former and an inconsistent rating for the latter. Despite adequate methodological study quality, these results received low quality of evidence as the results were retrieved in a sample of 22 infants. Another neural network using chest data was rated as sufficient for sleep and movement classification [40]. Despite adequate methodological study quality, the results received very low quality of evidence as the results were retrieved in a sample of only nine infants.

Sleep could be distinguished from wake from 3 months of age using different multi-parameter methods [30, 36, 38]. Convergent validity of the Sadeh algorithm, which calculates the probability of sleep, was rated as sufficient in free-living situations for infants [38]. However, it was less suitable to distinguish active and quiet sleep. These results received very low quality of evidence as the results were retrieved in a limited sample of 41 infants and methodological study quality was doubtful. Similarly, convergent validity of the automatic sleep–wake scoring algorithm developed for raw data was rated as sufficient to distinguish sleep from wake, despite low accuracy for distinguishing active from quiet sleep [36]. However, these results received very low quality of evidence as they were retrieved in a sample of only 10 infants and methodological study quality was inadequate. Galland and colleagues (2012) determined the accuracy of three algorithms for distinguishing sleep from wake states using 15-, 30-, and 60-s epochs in infants with a mean age around 3 months [30]. In line with previous results, criterion validity of the Sadeh and the Cole-Kripke (computing the weighted sum activity) algorithm was rated as insufficient. However, criterion validity of an algorithm similar to the Cole-Kripke algorithm that uses count-scaled data (leg placement) was rated as sufficient in infants of around 3 months of age using 15 s or 30 s epochs. The best performing algorithm used a sampling epoch of 15 s. Sleep agreement of the other algorithms was highest using the 15- or 30 s epoch, however, at the expense of wake agreement. Notably, correspondence with polysomnography was poorest for the number of wake time after sleep onset using 60 s epochs. Despite very good methodological study quality, these results received low quality of evidence due to the limited sample size of 31 infants.
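To illustrate what a weighted-sum sleep–wake algorithm of the Cole-Kripke type does in general terms, the sketch below scores each epoch from a weighted sum of the activity counts in a window around it. The weights, window, scale factor, and counts are purely hypothetical placeholders chosen for readability; they are not the published coefficients of any algorithm evaluated in the included studies.

```python
def score_sleep_wake(counts, weights, lags, scale):
    """Score each epoch as 'sleep' or 'wake' from a weighted sum of counts.

    For epoch t the index is scale * sum(w * counts[t + lag]); epochs outside
    the recording are skipped. An index below 1 is scored as sleep.
    """
    labels = []
    for t in range(len(counts)):
        total = 0.0
        for w, lag in zip(weights, lags):
            i = t + lag
            if 0 <= i < len(counts):
                total += w * counts[i]
        labels.append("sleep" if scale * total < 1.0 else "wake")
    return labels

# Hypothetical parameters, NOT published coefficients.
HYPOTHETICAL_WEIGHTS = [0.1, 0.2, 0.4, 0.2, 0.1]
LAGS = [-2, -1, 0, 1, 2]
HYPOTHETICAL_SCALE = 0.01

counts = [0, 0, 5, 300, 400, 250, 0, 0]  # made-up activity counts per epoch
labels = score_sleep_wake(counts, HYPOTHETICAL_WEIGHTS, LAGS, HYPOTHETICAL_SCALE)
print(labels)
```

Because neighboring epochs contribute to the index, an epoch with few counts can still be scored as wake when it is surrounded by movement, which is one reason epoch length can shift the balance between sleep and wake agreement.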

Toddlers

Table 4 summarizes the results of nine studies in toddlers that examined convergent validity [42,43,44,45,46,47,48,49,50]. No studies evaluated methods to distinguish sleep from wake.

For the assessment of SB, LPA and MVPA no valid cut-point sets were found. Four studies evaluated the convergent validity of cut-points based methods for accelerometers using direct (video) observation as comparison measure [43, 44, 46, 47]. These studies suggested that cut-points can be used to distinguish SB [46, 47] or low intensity [43, 44] from high intensity PA. Cut-points to distinguish SB and LPA from MVPA were rated as sufficient, with MVPA for the vector magnitude (VM) ≥ 208 counts/15 s (Mage = 1.42 ± 0.05 years) [43], or for the VA ≥ 418 counts/15 s (Mage = 2.30 ± 0.40 years) [44]. In contrast, cut-points to distinguish SB from total PA were rated as sufficient, for the VM with SB < 6 counts/5 s (Mage = 2.99 ± 0.48 years) [46], or < 40 counts/5 s [47]. These results seemed promising as high agreement and low bias were found, but the results of these studies received low [44, 46] to very low [43, 47] quality of evidence due to small sample sizes (10 ≤ n ≤ 40), despite very good methodological quality of two studies [44, 46].
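A cut-points based method reduces to comparing each epoch's count against a single threshold. The sketch below applies the VM cut-point of ≥ 208 counts/15 s reported for MVPA in [43]; the function names and the epoch counts are made up for illustration, and epochs below the cut-point are labeled as combined SB/LPA because this cut-point does not separate the two.

```python
MVPA_VM_CUTPOINT = 208  # vector magnitude counts per 15-s epoch, from [43]

def classify_epochs(vm_counts, cutpoint=MVPA_VM_CUTPOINT):
    """Label each 15-s epoch as MVPA or as combined SB/LPA."""
    return ["MVPA" if c >= cutpoint else "SB/LPA" for c in vm_counts]

def minutes_in(label, labels, epoch_seconds=15):
    """Total time in minutes spent in epochs carrying the given label."""
    return labels.count(label) * epoch_seconds / 60

# Made-up VM counts for eight consecutive 15-s epochs.
vm_counts = [0, 12, 150, 208, 530, 207, 900, 3]
labels = classify_epochs(vm_counts)
print(labels)
print(minutes_in("MVPA", labels))  # 0.75
```

Note that a cut-point is tied to its epoch length and axis, so a threshold defined per 15 s cannot be applied directly to 5-s epochs or to vertical-axis counts.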

Using a multi-parameter method, SB (e.g., carrying) could be sufficiently distinguished from ambulation PA (e.g., running, crawling, and climbing) using time- and frequency-domain acceleration signal features. Convergent validity of this random forest model was rated as sufficient [49]. However, these results received low quality of evidence as the results were retrieved in a sample of only 21 toddlers, despite very good methodological study quality. In another study, compared to other multi-parameter methods, random forests provided the best classification of activity type (i.e., running/walking, crawling, climbing, standing, sitting, lying down, carried and stroller) [48]. To improve accuracy, the models were augmented by a hidden Markov model by providing the predictions of the models as observations. Despite small improvements, study results were rated as insufficient.

Preschoolers

Table 5 summarizes the results for validity, of which ten studies examined criterion validity [51,52,53,54,55, 66, 67, 75, 84, 88] and thirty studies examined convergent validity [51, 56,57,58,59,60,61,62,63,64, 68,69,70,71,72,73,74, 76,77,78,79,80,81,82,83, 85,86,87, 89, 90] in preschoolers.

For the assessment of SB, LPA and MVPA, hip [81] and wrist [78] cut-points were evaluated as valid. Sirard and colleagues (2005) evaluated convergent validity of age specific cut-points [81]. Compared to observational scores using the Children’s Activity Rating Scale (CARS) agreement was high. Highest correlation between predicted and observed scores was found for SB, whereas lowest correlation between predicted and observed scores was found for MVPA. These results received high quality of evidence as the methodological study quality was very good and results were retrieved in a sample of 269 preschoolers. Similar results for convergent validity of Sirard’s SB and MVPA cut-points were found, using the Observational System for Recording physical Activity in Children-Preschool (OSRAC-P) as comparison measure [71]. Highest sensitivity was found for SB, while sensitivity was lowest for VPA. However, specificity was lowest for SB and highest for MVPA. Compared to other cut-points based methods, Sirard’s cut-points were most sensitive in detecting SB and converged best with direct observation [45, 52, 71, 91]. However, convergent validity of Sirard’s cut-points was rated as inconsistent with moderate quality of evidence, as these results were retrieved in a sample of 56 preschoolers. Another study evaluated convergent validity of the Sirard MVPA cut-point compared to the same direct observation scheme, i.e., CARS [61]. Bias was lowest when applying the Sirard MVPA cut-point versus other cut-points based methods in a sample of only 32 preschoolers [45, 52, 97]. When applied to accelerometer-derived data in toddlers, results of Sirard’s cut-points were rated as insufficient [45].

Johansson and colleagues (2016) evaluated convergent validity of wrist cut-points (both VA and VM) [78]. Agreement with direct observation (i.e., CARS) was high [78]. However, these results received very low quality of evidence, as the methodological study quality was inadequate, and results were retrieved in a limited sample of 30 preschoolers.

Some multi-parameter methods were also suitable for assessing SB, LPA and MVPA. Convergent validity of a support vector machine was evaluated as sufficient for distinguishing SB, LPA and MVPA, walking and running in laboratory setting [89] but not in free-living settings [83, 85]. Trost and colleagues (2018) developed random forest and support vector machine models to categorize SB, light activities and games (i.e., LPA), moderate-to-vigorous activities and games (i.e., MVPA), walking and running [89]. Almost perfect agreement with direct observation was found (hip and combination hip and wrist), while cut-points for this sample resulted in only moderate to substantial agreement. Using hip or wrist only data, convergent validity of support vector machines was rated as sufficient, while both random forests and support vector machines were rated as sufficient when hip and wrist data were combined. In free-living settings accuracy of these models decreased by 11 to 15% [85]. Hip classifiers had moderate agreement with direct video observation, while agreement of wrist classifiers was lower in free-living. In free-living settings, accuracy of random forests combining hip and wrist data was comparable. However, study results were rated as insufficient. In addition, support vector machine models have not been tested while combining hip and wrist data. Despite very good [85] and adequate [83, 89] methodological study quality, these results received low quality of evidence as the results were retrieved in limited samples of 11 [89] and 31 [83, 85] preschoolers. Convergent validity of a simple decision table was evaluated as sufficient for distinguishing comparable activity types (i.e., sit, stand, run, walk and bike) in a free-living setting [86]. These study results received very low quality of evidence as methodological study quality was doubtful and the results were retrieved in a sample of only 29 preschoolers.

Criterion validity of support vector machine models, random forests, and artificial neural networks was evaluated [84, 88]. Despite intermediate study results, random forests and artificial neural networks seemed to result in equal prediction accuracy of energy expenditure [88]. Results of the other study indicated that predicted energy expenditure of existing lab, free-living and retrained random forests (wrist and hip) and support vector machines (wrist) was within ± 6% of measured energy expenditure, but this was not the case for artificial neural networks (hip) [84]. Despite adequate methodological study quality, these study results received very low quality of evidence as they were retrieved in limited samples of 31 [84] and 41 [88] preschoolers. Criterion validity of cross-sectional time series models [55, 75] and multivariate adaptive regression splines [55] was evaluated as sufficient for predicting minute-by-minute energy expenditure. Results indicated a lack of bias and acceptable limits of agreement for these models; however, bias was slightly lower for multivariate adaptive regression splines. These results received moderate [55] and very low [75] quality of evidence.
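
The bias and limits of agreement referred to above are commonly computed with a Bland-Altman analysis. The following is a minimal sketch of that general technique, not the analysis code of any included study; the function names and the 1.96 multiplier for 95% limits are assumptions introduced here for illustration.

```python
import statistics


def bland_altman(predicted, measured):
    """Return (bias, lower_limit, upper_limit) for paired values.

    Bias is the mean of the paired differences (predicted - measured);
    the 95% limits of agreement are bias +/- 1.96 * SD of the differences.
    """
    if len(predicted) != len(measured) or len(predicted) < 2:
        raise ValueError("need two equal-length sequences with at least 2 pairs")
    diffs = [p - m for p, m in zip(predicted, measured)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample standard deviation
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def percent_error(predicted, measured):
    """Difference between mean predicted and mean measured value, in percent."""
    mean_measured = statistics.mean(measured)
    return 100.0 * (statistics.mean(predicted) - mean_measured) / mean_measured
```

With such helpers, a statement like "within ± 6% of measured energy expenditure" would correspond to `abs(percent_error(predicted, measured)) <= 6`, assuming the percentage is taken at group level; the included studies may have defined it differently.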

For sleep assessment, different cut-points based methods (i.e., wake threshold values) were evaluated to distinguish sleep from wake. Moreover, the multi-parameter method AlgoSmooth was evaluated, which smooths data derived from the wake threshold value of 40 before scoring sleep or wake [100]. Criterion validity of wake threshold values of 40 and 80 applied to adjusted wrist data (i.e., regressed using lower activity counts of the ankle device) was rated as sufficient for wake time but poor for wake time after onset, whereas results were rated as inconsistent when these thresholds were applied to raw data [66]. In contrast, when the raw wrist data were smoothed using the AlgoSmooth algorithm, criterion validity was evaluated as sufficient [66]. Correlations between an Actiwatch and polysomnography were high, except for wake time after onset. Results were worse for ankle data. Despite very good methodological study quality, these results received low quality of evidence as the results were retrieved in a limited sample of 12 preschoolers [66].

Convergence between devices

Few studies examined the convergence between different accelerometers [56, 64, 72, 74]. No studies evaluated convergence between accelerometer-based methods in infants and toddlers. Convergence of time spent in SB derived from ActiGraph and activPAL (hip vs. thigh) [72, 74], Actical and activPAL (wrist vs. thigh) [64], and ActiGraph and Actiwatch (waist vs. wrist) count data [56] was rated as insufficient as low to moderate agreement was found. Despite very good methodological study quality of all studies, these results received moderate [74] to low [56, 64, 72] quality of evidence due to sample sizes of 23 to 60 preschoolers.

Combining data

Combining data derived from multiple sensors [39, 85, 89] or multiple axes [34, 43, 47, 49, 77, 78] resulted in more valid predictions. Sensors at a single placement resulted in lower performance and insufficient data for posture and movement classification, compared to combining data from two or four different locations [39]. In line with these results, the combination of hip and wrist data in models for activity intensity prediction resulted in higher performance compared to using hip or wrist data only [85, 89]. Despite very good [85] and adequate [39, 89] methodological study quality, these results received (very) low quality of evidence due to limited sample sizes of 31 [85], 22 [39], and 11 [89] children.

Generally, using data from the VM to categorize activity intensity provided better results (higher agreement between accelerometry and comparison measure) than the VA (y-axis) [43, 47, 77, 78]. In line with these results, for activity type classification (i.e., running, walking, climbing up/down, crawling, riding on a ride-on toy, standing, sitting, stroller, being carried), feature importance was highest for the VM standard deviation versus features of the different axes or other VM features [49]. In addition, combined cut-points for the VA (y-axis) and horizontal axis (x-axis) were used to classify the postures of infants as prone and non-prone [34]. Despite the inconsistent results, the study indicated that combining data from these axes is required to accurately assess horizontal movement behaviors such as tummy time (time spent prone on floor). The combination of time-domain and frequency-domain features of the acceleration signal also resulted in better activity type classification [49, 50]. When comparing feature sets, accuracy was higher when frequency-domain features were included in addition to time-domain features. Moreover, activity intensity classification improved when adding temporal features to this base set (i.e., time-domain and frequency-domain features) [85].
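
To illustrate why the VM can capture movement that the VA misses, the sketch below computes the vector magnitude from three axes and derives the VM mean and standard deviation over a window. It is a minimal illustration under stated assumptions: the tuple order (x, y, z) with y as the vertical axis follows the axis naming used above, while the function names, the window, and the sample values are hypothetical and not taken from any included study.

```python
import math
import statistics


def vector_magnitude(x, y, z):
    """Euclidean norm of the three axis values."""
    return math.sqrt(x * x + y * y + z * z)


def vm_features(samples):
    """Mean and (population) standard deviation of the VM over one window.

    `samples` is a list of (x, y, z) tuples; y is assumed to be the
    vertical axis (VA).
    """
    vm = [vector_magnitude(x, y, z) for x, y, z in samples]
    return {"vm_mean": statistics.mean(vm), "vm_sd": statistics.pstdev(vm)}


# Hypothetical sample with purely horizontal movement (not study data):
# the vertical axis reads 0, so a VA-only method sees no movement,
# whereas the VM still reflects it.
x, y, z = 3.0, 0.0, 4.0
va_only = abs(y)
vm = vector_magnitude(x, y, z)
```

In this example `va_only` is 0 while `vm` is 5, which mirrors the situation of horizontal behaviors such as crawling or tummy time.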

Discussion

This review summarizes studies that evaluated the measurement properties of accelerometer-based methods for assessing physical behavior in young children (< 5 years old). To assess the methodological quality of the 62 included studies, we developed a new checklist inspired by COSMIN [25, 26, 27]. Despite very good to adequate methodological study quality of 58% of the studies, only ten percent of the study results received high or moderate quality of evidence, due to limited sample sizes.

Validated cut-points for the youngest age group (i.e., infants) are still lacking, while multi-parameter methods were evaluated as sufficient to distinguish posture, SB and PA using multiple sensors [39], movement and sleep [40], leg movements [41], and sleep–wake [30, 36, 38]. Despite very good [30] or adequate [39, 40] methodological study quality of some of these studies, quality of evidence was rated as low [30] to very low [36, 38, 39, 40, 41].

In toddlers, hip cut-points were considered valid for distinguishing SB and LPA [46, 47] from high intensity PA [43, 44], despite large differences between cut-points based methods. No studies were found for identifying sleep in toddlers using cut-points based or multi-parameter methods. For SB, VM cut-points varied between < 40 counts/5 s [47] and < 97 counts/5 s [46]. For MVPA, cut-points were VM ≥ 208 counts/15 s [43] and VA ≥ 418 counts/15 s [44]. The difference between the SB cut-points is probably due to inconsistency in the definition of SB adopted by the different observational schemes used. Specifically, in the study by Costa and colleagues, SB was defined as “stationary with no movement and stationary with movement of the limbs”, resulting in a higher cut-point [46] than Oftedal and colleagues who did not include limb movement [47]. Notably, the MVPA cut-points were derived in study samples with divergent characteristics. Trost and colleagues included children from an urban area that were around one year older than the children from a rural area included by Pulakka and colleagues [43, 44]. Conversely, cut-points could not identify toddler specific behaviors such as “being carried” as SB. A random forest model was considered valid to distinguish SB (including this toddler specific behavior) from PA [49]. Despite very good methodological study quality of some studies [44, 46, 49], quality of evidence for these cut-points based and multi-parameter methods was low.
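
The effect of the diverging SB cut-points can be sketched as follows. The two thresholds (< 40 and < 97 counts/5 s) are those reported above [46, 47]; the function name and the epoch counts are hypothetical and serve only to show how the same data are labeled differently.

```python
def classify_sedentary(counts_per_epoch, sb_cut_point):
    """Label an epoch 'SB' if its count is below the cut-point, else 'non-SB'."""
    return ["SB" if c < sb_cut_point else "non-SB" for c in counts_per_epoch]


# Hypothetical VM counts for three 5-s epochs (not study data).
epochs = [30, 60, 120]

strict = classify_sedentary(epochs, 40)   # SB: VM < 40 counts/5 s [47]
lenient = classify_sedentary(epochs, 97)  # SB: VM < 97 counts/5 s [46]
```

Here the middle epoch is labeled non-SB under the lower cut-point and SB under the higher one, so estimates of sedentary time depend directly on which definition of SB the cut-point was calibrated against.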

For distinguishing different physical intensities (i.e., SB, LPA, and MVPA), we found the strongest evidence in preschoolers. Next to age-specific hip cut-points [81], wrist cut-points were also evaluated as valid [78]. For assessing sleep, adjusted wrist cut-points [66] were evaluated as valid. Quality of evidence for hip cut-points was high [81], and these cut-points were also positively evaluated in cross-validation studies [61, 71], but not when applied in toddlers [45]. The wrist cut-points, however, have not been cross-validated and despite very good methodological study quality of the study assessing sleep cut-points [66], quality of evidence was low [66, 78]. Conversely, for identifying SB, LPA, and MVPA, using multi-parameter methods resulted in more promising results compared to cut-points based methods [83, 85, 89]. Although random forest and support vector machine models were rated as sufficient in a laboratory setting [89], these were rated as insufficient in a free-living setting [83, 85]. Activity type could be distinguished using a decision table in a free-living setting [86]. In addition, sleep could be distinguished from wake using the AlgoSmooth algorithm applied to (raw) wrist data [66]. Despite very good [66, 85] and adequate [83, 86, 89] methodological study quality, quality of evidence was low [66, 85] or very low [83, 86, 89].

Despite the promising results of multi-parameter methods, only a few models were accessible as open-source software [84, 85, 86, 88]. This hampers the replication of study results, as closed-source models cannot be reused. When software is available, users should take care to use the same version, configuration, and implementation (e.g., brand, axis, placement, parameters, target group). It is not recommended to reuse cut-points based methods when deviating from accelerometer specifications (i.e., brand, axis, and placement) or target population (age group), as cut-points need to be re-evaluated. Moreover, a drawback of cut-points based methods is related to the derivation of the magnitude of acceleration (count data), which is kept closed-source by most manufacturers.

Most studies used a single device or axis to measure physical behavior, while more promising results were found when combining data derived from different sensor placements [39, 85, 89] or multiple axes [34, 43, 47, 49, 50, 90]. Movement patterns of young children are sporadic, omnidirectional, and unique per developmental stage (e.g., lying on back or tummy, crawling or walking), and an accelerometer captures only the movement of the body segment it is attached to [15, 20, 23]. This requires accelerometers that can capture movement in multiple planes, and placement of accelerometers on different sites (e.g., wrist and hip, legs, and arms).

Several factors contributed to the (very) low quality of evidence of the studies. Firstly, the quality of evidence was mostly downgraded due to limited sample sizes of < 100 children included in studies, i.e., imprecision [26]. If more studies had used the same measurement and analysis protocol (i.e., accelerometer brand, accelerometer data analysis approach, axis, and epoch length), this sample size issue could have been resolved by pooling the results. Besides sample size, another important aspect for generalizability is that the variation of the performed physical behaviors in the target population is captured. Secondly, the doubtful methodological study quality contributed to the downgrading of the quality of evidence, i.e., risk of bias [26]. Common methodological limitations varied per measurement property. Regarding convergent validity, both the unknown or insufficient measurement properties of the comparison instrument and overly long epoch lengths [101] resulted in low methodological study quality. For most studies that used direct observation as comparison measure, interrater agreement was insufficient, or a non-validated observation scheme was adopted. In some studies, physical behavior of toddlers was assessed using observation schemes developed and validated in preschoolers, thereby disregarding developmentally specific physical behavior. Thus, physical activities and their intensities may have been misinterpreted. In addition, an epoch length < 60 s is preferred to measure the sporadic and intermittent nature of physical behavior in young children. However, some studies reintegrated the epochs up to three minutes without providing a valid reason, e.g., to align the epochs with the comparison instrument.
For studies evaluating validity of cut-points based methods, low methodological study quality was mostly due to validation using data derived under the same circumstances as for the cut-point calibration, e.g., using the same sample instead of an independent sample. Studies that assessed validity of multi-parameter methods mainly received low methodological study quality due to not reporting statistics suitable for comparing the performance of prediction models.
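
The loss of temporal detail caused by reintegrating epochs can be sketched as follows. This is a minimal illustration that assumes counts are simply summed over consecutive short epochs; the function name and the count values are hypothetical and not taken from any included study.

```python
def reintegrate(counts, factor):
    """Sum consecutive short-epoch counts into longer epochs.

    `factor` is the number of short epochs per long epoch (e.g., 12 to go
    from 5-s to 60-s epochs). An incomplete trailing epoch is dropped.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    n_long = len(counts) // factor
    return [sum(counts[i * factor:(i + 1) * factor]) for i in range(n_long)]


# Hypothetical minute of 5-s epochs: 55 s of stillness and one 5-s burst.
short_epochs = [0] * 11 + [600]
long_epochs = reintegrate(short_epochs, 12)

# Number of short epochs in which any movement was recorded.
active_short = sum(1 for c in short_epochs if c > 0)
```

After reintegration, the single 60-s epoch carries the same total count, but it can no longer be seen that the movement occurred in one short burst (`active_short` is 1 of 12 epochs), which is the sporadic pattern that shorter epochs are meant to preserve.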

Next to the low quality of evidence, there were some general study limitations. For instance, in the reliability studies, differences between accelerometer recordings might be due to slightly different placement of the accelerometer, or actual different physical behaviors during the repeated measurements. Differences based on mechanical shaker experiments, on the other hand, are purely device related. Regarding validity studies using observation as comparison measure, it is difficult to estimate what observation time would be sufficient for validation of the targeted physical intensities and representative for physical behavior. This is also dependent on the observed activities and setting. Observation durations of included studies varied from 8 to 180 min. For instance, if observation took place in the childcare setting, this might not be representative for daily life physical behavior. Another general limitation is that activity types were not described in detail, resulting in different activity intensity ranges between studies.

Strengths and limitations

A strength of this review is that the methodological study quality was systematically assessed using the newly developed CAMQAM checklist. Another strength is that our search had no publication date limit. Although this resulted in including studies on devices that are no longer available on the market, this review includes all available studies examining measurement properties of accelerometer-based methods in 0–5-year-olds. A limitation of this review is that due to the great variability of accelerometer-based methods, it was not feasible to pool the study results, resulting in low quality of evidence ratings due to small sample sizes. Appropriate sample sizes are important for precision but also in order to capture adequate variation in physical activities. Another limitation is that we were unable to rate study results of measurement errors, as the minimal important difference or minimal important change is needed to conclude on the magnitude of measurement error. Since this information was not available for 0–5-year-olds, we only described these results. A limitation of the study result rating is that the ratings were not weighted for the number of reported results. Lastly, our focus was limited to the evaluation and quality of measurement properties and did not include feasibility. Feasibility is context-specific because studies differ in available expertise and computational resources to perform the data analysis. Further, feasibility may change over time as software is subject to ongoing development and maintenance, or lack thereof, and accelerometers may change in price or dimensions as newer models enter the market.

Recommendations for future studies

High-quality studies and standardized protocols are required to assess measurement properties (including feasibility) of these accelerometer-based methods and enable pooling of data. To improve methodological study quality of future studies, we recommend using our CAMQAM checklist (Checklist for Assessing the Methodological Quality of studies using Accelerometer-based Methods). Future studies should incorporate more precise definitions for physical activity types, adapted to the child’s developmental stage. For example, activity types such as crawling can be more precisely defined using inclination angles in video observation or derived from accelerometer-based methods. Additionally, for accelerometer-based methods to be generalizable to young children, ideally in a free-living setting, validation studies should include a variety of physical activity types representative for the target population. Moreover, we recommend that future studies transparently share methods by making these available as open source. Making methods accessible supports the sustained impact of research investments. Given the lack of reliable and/or valid accelerometer-based methods and the lack of 24-h studies on physical behavior, especially in the youngest age groups (i.e., infants and toddlers), future studies should develop and evaluate methods targeted at these young age groups, including all 24-h physical behaviors, and exploring different sensor placements and axes using raw acceleration data of modern accelerometers.

Conclusions

Validated cut-points are lacking in infants and toddlers, while multi-parameter methods proved valid to distinguish SB and LPA from more vigorous activities. For preschoolers, both valid cut-points based and valid multi-parameter methods were identified, where multi-parameter methods appeared to have better measurement properties. Large heterogeneity and methodological limitations impede our ability to draw definitive conclusions about the best available accelerometer-based methods assessing all 24-h physical behaviors combined in young children.

Availability of data and materials

The data that support the findings of this review are available from the corresponding author upon reasonable request.

References

Chaput JP, Carson V, Gray CE, Tremblay MS. Importance of all movement behaviors in a 24 hour period for overall health. Int J Environ Res Public Health. 2014;11(12):12575–81. https://doi.org/10.3390/ijerph111212575.

Kuzik N, Poitras VJ, Tremblay MS, Lee EY, Hunter S, Carson V. Systematic review of the relationships between combinations of movement behaviours and health indicators in the early years (0–4 years). BMC Public Health. 2017;17(5):849. https://doi.org/10.1186/s12889-017-4851-1.

Rollo S, Antsygina O, Tremblay MS. The whole day matters: understanding 24-hour movement guideline adherence and relationships with health indicators across the lifespan. J Sport Health Sci. 2020;9(6):493–510. https://doi.org/10.1016/j.jshs.2020.07.004.

Tremblay MS, Carson V, Chaput J-P, Connor Gorber S, Dinh T, Duggan M, et al. Canadian 24-hour movement guidelines for children and youth: an integration of physical activity, sedentary behaviour, and sleep. Appl Physiol Nutr Metab. 2016;41(6):S311–27. https://doi.org/10.1139/apnm-2016-0151.

Tremblay MS, Chaput JP, Adamo KB, Aubert S, Barnes JD, Choquette L, et al. Canadian 24-hour movement guidelines for the early years (0–4 years): an integration of physical activity, sedentary behaviour, and sleep. BMC Public Health. 2017;17(5):874. https://doi.org/10.1186/s12889-017-4859-6.

Ainsworth BE, Haskell WL, Herrmann SD, Meckes N, Bassett DR, Tudor-Locke C, et al. 2011 Compendium of physical activities: a second update of codes and MET values. Med Sci Sports Exerc. 2011;43(8):1575–81. https://doi.org/10.1249/MSS.0b013e31821ece12.

Butte NF, Watson KB, Ridley K, Zakeri IF, McMurray RG, Pfeiffer KA, et al. A youth compendium of physical activities: activity codes and metabolic intensities. Med Sci Sports Exerc. 2018;50(2):246–56. https://doi.org/10.1249/mss.0000000000001430.

Hills AP, Mokhtar N, Byrne NM. Assessment of physical activity and energy expenditure: an overview of objective measures. Front Nutr. 2014;1:5. https://doi.org/10.3389/fnut.2014.00005.

Loprinzi PD, Cardinal BJ. Measuring children’s physical activity and sedentary behaviors. J Exerc Sci Fit. 2011;9(1):15–23. https://doi.org/10.1016/s1728-869x(11)60002-6.

Park J, Kazuko IT, Kim E, Kim J, Yoon J. Estimating free-living human energy expenditure: practical aspects of the doubly labeled water method and its applications. Nutr Res Pract. 2014;8(3):241–8. https://doi.org/10.4162/nrp.2014.8.3.241.

Ndahimana D, Kim EK. Measurement methods for physical activity and energy expenditure: a review. Clin Nutr Res. 2017;6(2):68–80. https://doi.org/10.7762/cnr.2017.6.2.68.

Lynch BA, Kaufman TK, Rajjo TI, Mohammed K, Kumar S, Murad MH, et al. Accuracy of accelerometers for measuring physical activity and levels of sedentary behavior in children: a systematic review. J Prim Care Community Health. 2019;10:1–8. https://doi.org/10.1177/2150132719874252.

de Vries SI, Bakker I, Hopman-Rock M, Hirasing RA, van Mechelen W. Clinimetric review of motion sensors in children and adolescents. J Clin Epidemiol. 2006;59(7):670–80. https://doi.org/10.1016/j.jclinepi.2005.11.020.

Bruijns BA, Truelove S, Johnson AM, Gilliland J, Tucker P. Infants’ and toddlers’ physical activity and sedentary time as measured by accelerometry: a systematic review and meta-analysis. Int J Behav Nutrition Physical Act. 2020;17(1):14. https://doi.org/10.1186/s12966-020-0912-4.

Cliff DP, Reilly JJ, Okely AD. Methodological considerations in using accelerometers to assess habitual physical activity in children aged 0–5 years. J Sci Med Sport. 2009;12(5):557–67. https://doi.org/10.1016/j.jsams.2008.10.008.

Sarker H, Anderson LN, Borkhoff CM, Abreo K, Tremblay MS, Lebovic G, et al. Validation of parent-reported physical activity and sedentary time by accelerometry in young children. BMC Research Notes. 2015;8(1):735. https://doi.org/10.1186/s13104-015-1648-0.

Sasaki JE, John D, Freedson PS. Validation and comparison of ActiGraph activity monitors. J Sci Med Sport. 2011;14(5):411–6. https://doi.org/10.1016/j.jsams.2011.04.003.

Weiss AR, Johnson NL, Berger NA, Redline S. Validity of activity-based devices to estimate sleep. J Clin Sleep Med. 2010;6(4):336–42.

Welk GJ, Schaben JA, Morrow JR. Reliability of accelerometry-based activity monitors: a generalizability study. Med Sci Sports Exerc. 2004;36(9):1637–45.

Oliver M, Schofield GM, Kolt GS. Physical activity in preschoolers. Sports Med. 2007;37(12):1045–70. https://doi.org/10.2165/00007256-200737120-00004.

Galland BC, Taylor BJ, Elder DE, Herbison P. Normal sleep patterns in infants and children: a systematic review of observational studies. Sleep Med Rev. 2012;16(3):213–22. https://doi.org/10.1016/j.smrv.2011.06.001.

Adolph KE, Franchak JM. The development of motor behavior. Wiley Interdiscip Rev Cog Sci. 2017;8(1–2):e1430. https://doi.org/10.1002/wcs.1430.

Gerber RJ, Wilks T, Erdie-Lalena C. Developmental milestones: motor development. Pediatr Rev. 2010;31(7):267–77. https://doi.org/10.1542/pir.31-7-267.

Page M, McKenzie J, Bossuyt P, Boutron I, Hoffmann T, Mulrow C, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. PLoS Med. 2021;18(3):e1003583. https://doi.org/10.1371/journal.pmed.1003583.

Mokkink LB, De Vet HC, Prinsen CA, Patrick DL, Alonso J, Bouter LM, et al. COSMIN risk of bias checklist for systematic reviews of patient-reported outcome measures. Qual Life Res. 2018;27(5):1171–9. https://doi.org/10.1007/s11136-017-1765-4.

Prinsen CA, Mokkink LB, Bouter LM, Alonso J, Patrick DL, De Vet HC, et al. COSMIN guideline for systematic reviews of patient-reported outcome measures. Qual Life Res. 2018;27(5):1147–57. https://doi.org/10.1007/s11136-018-1798-3.

Terwee CB, Prinsen CA, Chiarotto A, Westerman MJ, Patrick DL, Alonso J, et al. COSMIN methodology for evaluating the content validity of patient-reported outcome measures: a Delphi study. Qual Life Res. 2018;27(5):1159–70. https://doi.org/10.1007/s11136-018-1829-0.

Terwee CB, Mokkink LB, van Poppel MN, Chinapaw MJ, van Mechelen W, de Vet HC. Qualitative attributes and measurement properties of physical activity questionnaires. Sports Med. 2010;40(7):525–37. https://doi.org/10.2165/11531370-000000000-00000.

Greenspan B, Cunha AB, Lobo MA. Design and validation of a smart garment to measure positioning practices of parents with young infants. Infant Behav Dev. 2021;62:101530. https://doi.org/10.1016/j.infbeh.2021.101530.

Galland BC, Kennedy GJ, Mitchell EA, Taylor BJ. Algorithms for using an activity-based accelerometer for identification of infant sleep-wake states during nap studies. Sleep Med. 2012;13(6):743–51. https://doi.org/10.1016/j.sleep.2012.01.018.

Insana SP, Gozal D, Montgomery-Downs HE. Invalidity of one actigraphy brand for identifying sleep and wake among infants. Sleep Med. 2010;11(2):191–6. https://doi.org/10.1016/j.sleep.2009.08.010.

Rioualen S, Roué JM, Lefranc J, Gouillou M, Nowak E, Alavi Z, et al. Actigraphy is not a reliable method for measuring sleep patterns in neonates. Acta Paediatr. 2015;104(11):e478–82. https://doi.org/10.1111/apa.13088.

Camerota M, Tully KP, Grimes M, Gueron-Sela N, Propper CB. Assessment of infant sleep: how well do multiple methods compare? Sleep. 2018;41(10):zsy146. https://doi.org/10.1093/sleep/zsy146.

Hewitt L, Stanley RM, Cliff D, Okely AD. Objective measurement of tummy time in infants (0–6 months): a validation study. PLoS One. 2019;14(2):e0210977. https://doi.org/10.1371/journal.pone.0210977.

Lewicke AT, Sazonov ES, Schuckers SA. Sleep-wake identification in infants: heart rate variability compared to actigraphy. Conf Proc IEEE Eng Med Biol Soc. 2004;1:442–5. https://doi.org/10.1109/IEMBS.2004.1403189.

Gnidovec B, Neubauer D, Zidar J. Actigraphic assessment of sleep-wake rhythm during the first 6 months of life. Clin Neurophysiol. 2002;113(11):1815–21. https://doi.org/10.1016/s1388-2457(02)00287-0.

Horger MN, Marsiliani R, DeMasi A, Allia A, Berger SE. Researcher choices for infant sleep assessment: parent report, actigraphy, and a novel video system. J Genet Psychol. 2021;182(4):218–35. https://doi.org/10.1080/00221325.2021.1905600.

Sadeh A, Acebo C, Seifer R, Aytur S, Carskadon MA. Activity-based assessment of sleep-wake patterns during 1st year of life. Infant Behav Dev. 1995;18(3):329–37. https://doi.org/10.1016/0163-6383(95)90021-7.

Airaksinen M, Räsänen O, Ilén E, Häyrinen T, Kivi A, Marchi V, et al. Automatic posture and movement tracking of infants with wearable movement sensors. Sci Rep. 2020;10(1):169. https://doi.org/10.1038/s41598-019-56862-5.

Jun K, Choi S. Unsupervised end-to-end deep model for newborn and infant activity recognition. Sensors (Basel). 2020;20(22):6467. https://doi.org/10.3390/s20226467.

Smith BA, Trujillo-Priego IA, Lane CJ, Finley JM, Horak FB. Daily quantity of infant leg movement: wearable sensor algorithm and relationship to walking onset. Sensors (Basel). 2015;15(8):19006–20. https://doi.org/10.3390/s150819006.

Hager ER, Gormley CE, Latta LW, Treuth MS, Caulfield LE, Black MM. Toddler physical activity study: laboratory and community studies to evaluate accelerometer validity and correlates. BMC Public Health. 2016;16(6):936. https://doi.org/10.1186/s12889-016-3569-9.

Pulakka A, Cheung YB, Ashorn U, Penpraze V, Maleta K, Phuka JC, et al. Feasibility and validity of the ActiGraph GT3X accelerometer in measuring physical activity of Malawian toddlers. Acta Paediatr. 2013;102(12):1192–8. https://doi.org/10.1111/apa.12412.

Trost SG, Fees BS, Haar SJ, Murray AD, Crowe LK. Identification and validity of accelerometer cut-points for toddlers. Obesity (Silver Spring). 2012;20(11):2317–9. https://doi.org/10.1038/oby.2011.364.

van Cauwenberghe E, Gubbels J, De Bourdeaudhuij I, Cardon G. Feasibility and validity of accelerometer measurements to assess physical activity in toddlers. Int J Behav Nutr Phys Act. 2011;8:67. https://doi.org/10.1186/1479-5868-8-67.

Costa S, Barber SE, Cameron N, Clemes SA. Calibration and validation of the ActiGraph GT3X+ in 2–3 year olds. J Sci Med Sport. 2014;17(6):617–22. https://doi.org/10.1016/j.jsams.2013.11.005.

Oftedal S, Bell KL, Davies PS, Ware RS, Boyd RN. Validation of accelerometer cut points in toddlers with and without cerebral palsy. Med Sci Sports Exerc. 2014;46(9):1808–15. https://doi.org/10.1249/MSS.0000000000000299.

Albert MV, Sugianto A, Nickele K, Zavos P, Sindu P, Ali M, et al. Hidden Markov model-based activity recognition for toddlers. Physiol Meas. 2020;41(2):025003. https://doi.org/10.1088/1361-6579/ab6ebb.

Kwon S, Zavos P, Nickele K, Sugianto A, Albert MV. Hip and wrist-worn accelerometer data analysis for toddler activities. Int J Environ Res Public Health. 2019;16(14). https://doi.org/10.3390/ijerph16142598.

Nam Y, Park JW. Child activity recognition based on cooperative fusion model of a triaxial accelerometer and a barometric pressure sensor. IEEE J Biomed Health Inform. 2013;17(2):420–6. https://doi.org/10.1109/JBHI.2012.2235075.

Adolph AL, Puyau MR, Vohra FA, Nicklas TA, Zakeri IF, Butte NF. Validation of uniaxial and triaxial accelerometers for the assessment of physical activity in preschool children. J Phys Act Health. 2012;9(7):944–53. https://doi.org/10.1123/jpah.9.7.944.

Pate RR, Almeida MJ, McIver KL, Pfeiffer KA, Dowda M. Validation and calibration of an accelerometer in preschool children. Obesity (Silver Spring). 2006;14(11):2000–6. https://doi.org/10.1038/oby.2006.234.

Pfeiffer KA, McIver KL, Dowda M, Almeida MJ, Pate RR. Validation and calibration of the Actical accelerometer in preschool children. Med Sci Sports Exerc. 2006;38(1):152–7. https://doi.org/10.1249/01.mss.0000183219.44127.e7.

Sijtsma A, Schierbeek H, Goris AH, Joosten KF, van Kessel I, Corpeleijn E, et al. Validation of the TracmorD triaxial accelerometer to assess physical activity in preschool children. Obesity (Silver Spring). 2013;21(9):1877–83. https://doi.org/10.1002/oby.20401.

Zakeri IF, Adolph AL, Puyau MR, Vohra FA, Butte NF. Cross-sectional time series and multivariate adaptive regression splines models using accelerometry and heart rate predict energy expenditure of preschoolers. J Nutr. 2013;143(1):114–22. https://doi.org/10.3945/jn.112.168542.

Alhassan S, Sirard JR, Kurdziel LBF, Merrigan S, Greever C, Spencer RMC. Cross-validation of two accelerometers for assessment of physical activity and sedentary time in preschool children. Pediatr Exerc Sci. 2017;29(2):268–77. https://doi.org/10.1123/pes.2016-0074.

Djafarian K, Speakman JR, Stewart J, Jackson DM. Comparison of activity levels measured by a wrist worn accelerometer and direct observation in young children. Open J Pediatr. 2013;3(04):422–7. https://doi.org/10.4236/ojped.2013.34076.

Dobell AP, Eyre ELJ, Tallis J, Chinapaw MJM, Altenburg TM, Duncan MJ. Examining accelerometer validity for estimating physical activity in pre-schoolers during free-living activity. Scand J Med Sci Sports. 2019;29(10):1618–28. https://doi.org/10.1111/sms.13496.

Fairweather SC, Reilly J, Grant S, Whittaker A, Paton JY. Using the Computer Science and Applications (CSA) activity monitor in preschool children. Pediatr Exerc Sci. 1999;11(4):413–20. https://doi.org/10.1123/pes.11.4.413.

Finn KJ, Specker B. Comparison of Actiwatch activity and Children’s Activity Rating scale in children. Med Sci Sports Exerc. 2000;32(10):1794–7. https://doi.org/10.1097/00005768-200010000-00021.

Hislop JF, Bulley C, Mercer TH, Reilly JJ. Comparison of accelerometry cut points for physical activity and sedentary behavior in preschool children: a validation study. Pediatr Exerc Sci. 2012;24(4):563–76. https://doi.org/10.1123/pes.24.4.563.

Klesges LM, Klesges RC. The assessment of children’s physical activity: a comparison of methods. Med Sci Sports Exerc. 1987;19(5):511–7.

Klesges RC, Klesges LM, Swenson AM, Pheley AM. A validation of two motion sensors in the prediction of child and adult physical activity levels. Am J Epidemiol. 1985;122(3):400–10. https://doi.org/10.1093/oxfordjournals.aje.a114121.

van Cauwenberghe E, Wooller L, Mackay L, Cardon G, Oliver M. Comparison of Actical and activPAL measures of sedentary behaviour in preschool children. J Sci Med Sport. 2012;15(6):526–31. https://doi.org/10.1016/j.jsams.2012.03.014.

Aadland E, Johannessen K. Agreement of objectively measured physical activity and sedentary time in preschool children. Prev Med Rep. 2015;2:635–9. https://doi.org/10.1016/j.pmedr.2015.07.009.

Bélanger ME, Bernier A, Paquet J, Simard V, Carrier J. Validating actigraphy as a measure of sleep for preschool children. J Clin Sleep Med. 2013;9(7):701–6. https://doi.org/10.5664/jcsm.2844.

Roscoe CMP, James RS, Duncan MJ. Calibration of GENEActiv accelerometer wrist cut-points for the assessment of physical activity intensity of preschool aged children. Eur J Pediatr. 2017;176(8):1093–8. https://doi.org/10.1007/s00431-017-2948-2.

de Bock F, Menze J, Becker S, Litaker D, Fischer J, Seidel I. Combining accelerometry and HR for assessing preschoolers’ physical activity. Med Sci Sports Exerc. 2010;42(12):2237–43. https://doi.org/10.1249/MSS.0b013e3181e27b5d.

Ettienne R, Nigg CR, Li F, Su Y, McGlone K, Luick B, et al. Validation of the Actical accelerometer in multiethnic preschoolers: The Children’s Healthy Living (CHL) program. Hawai’i J Med Public Health. 2016;75(4):95–100.

Hislop JF, Bulley C, Mercer TH, Reilly JJ. Comparison of epoch and uniaxial versus triaxial accelerometers in the measurement of physical activity in preschool children: a validation study. Pediatr Exerc Sci. 2012;24(3):450–60. https://doi.org/10.1123/pes.24.3.450.

Kahan D, Nicaise V, Reuben K. Convergent validity of four accelerometer cutpoints with direct observation of preschool children’s outdoor physical activity. Res Q Exerc Sport. 2013;84(1):59–67. https://doi.org/10.1080/02701367.2013.762294.

Martin A, McNeil M, Penpraze V, Dall P, Granat M, Paton JY, et al. Objective measurement of habitual sedentary behavior in pre-school children: comparison of activPAL with Actigraph monitors. Pediatr Exerc Sci. 2011;23(4):468–76. https://doi.org/10.1123/pes.23.4.468.

Pagels P, Boldemann C, Raustorp A. Comparison of pedometer and accelerometer measures of physical activity during preschool time on 3- to 5-year-old children. Acta Paediatr. 2011;100(1):116–20. https://doi.org/10.1111/j.1651-2227.2010.01962.x.

Pereira JR, Sousa-Sá E, Zhang Z, Cliff DP, Santos R. Concurrent validity of the ActiGraph GT3X+ and activPAL for assessing sedentary behaviour in 2–3-year-old children under free-living conditions. J Sci Med Sport. 2020;23(2):151–6. https://doi.org/10.1016/j.jsams.2019.08.009.

Butte NF, Wong WW, Lee JS, Adolph AL, Puyau MR, Zakeri IF. Prediction of energy expenditure and physical activity in preschoolers. Med Sci Sports Exerc. 2014;46(6):1216–26. https://doi.org/10.1249/mss.0000000000000209.

Hislop J, Palmer N, Anand P, Aldin T. Validity of wrist worn accelerometers and comparability between hip and wrist placement sites in estimating physical activity behaviour in preschool children. Physiol Meas. 2016;37(10):1701–14. https://doi.org/10.1088/0967-3334/37/10/1701.

Johansson E, Ekelund U, Nero H, Marcus C, Hagströmer M. Calibration and cross-validation of a wrist-worn Actigraph in young preschoolers. Pediatr Obes. 2015;10(1):1–6. https://doi.org/10.1111/j.2047-6310.2013.00213.x.

Johansson E, Larisch LM, Marcus C, Hagströmer M. Calibration and validation of a wrist- and hip-worn Actigraph accelerometer in 4-year-old children. PLoS One. 2016;11(9):e0162436. https://doi.org/10.1371/journal.pone.0162436.

Li S, Howard JT, Sosa ET, Cordova A, Parra-Medina D, Yin Z. Calibrating wrist-worn accelerometers for physical activity assessment in preschoolers: machine learning approaches. JMIR Form Res. 2020;4(8):e16727. https://doi.org/10.2196/16727.

Reilly JJ, Coyle J, Kelly L, Burke G, Grant S, Paton JY. An objective method for measurement of sedentary behavior in 3- to 4-year olds. Obes Res. 2003;11(10):1155–8. https://doi.org/10.1038/oby.2003.158.

Sirard J, Trost S, Pfeiffer K, Dowda M, Pate R. Calibration and evaluation of an objective measure of physical activity in preschool children. J Phys Act Health. 2005;2(3):345–57. https://doi.org/10.1123/jpah.2.3.345.

Davies G, Reilly J, McGowan AJ, Dall P, Granat M, Paton JY. Validity, practical utility, and reliability of the activPAL in preschool children. Med Sci Sports Exerc. 2012;44(4):761–8. https://doi.org/10.1249/MSS.0b013e31823b1dc7.

Ahmadi MN, Brookes D, Chowdhury A, Pavey T, Trost SG. Free-living evaluation of laboratory-based activity classifiers in preschoolers. Med Sci Sports Exerc. 2020;52(5):1227–34. https://doi.org/10.1249/mss.0000000000002221.

Ahmadi MN, Chowdhury A, Pavey T, Trost SG. Laboratory-based and free-living algorithms for energy expenditure estimation in preschool children: a free-living evaluation. PLoS One. 2020;15(5):e0233229. https://doi.org/10.1371/journal.pone.0233229.

Ahmadi MN, Pavey TG, Trost SG. Machine learning models for classifying physical activity in free-living preschool children. Sensors (Basel). 2020;20(16). https://doi.org/10.3390/s20164364.

Brønd JC, Grøntved A, Andersen LB, Arvidsson D, Olesen LG. Simple method for the objective activity type assessment with preschoolers, children and adolescents. Children (Basel). 2020;7(7). https://doi.org/10.3390/children7070072.

Hagenbuchner M, Cliff DP, Trost SG, Van Tuc N, Peoples GE. Prediction of activity type in preschool children using machine learning techniques. J Sci Med Sport. 2015;18(4):426–31. https://doi.org/10.1016/j.jsams.2014.06.003.

Steenbock B, Wright MN, Wirsik N, Brandes M. Accelerometry-based prediction of energy expenditure in preschoolers. J Meas Phys Behav. 2019;2(2):94–102. https://doi.org/10.1123/jmpb.2018-0032.

Trost SG, Cliff DP, Ahmadi MN, Tuc NV, Hagenbuchner M. Sensor-enabled activity class recognition in preschoolers: hip versus wrist data. Med Sci Sports Exerc. 2018;50(3):634–41. https://doi.org/10.1249/MSS.0000000000001460.

Zhao W, Adolph AL, Puyau MR, Vohra FA, Butte NF, Zakeri IF. Support vector machines classifiers of physical activities in preschoolers. Physiol Rep. 2013;1(1):e00006. https://doi.org/10.1002/phy2.6.

Evenson KR, Catellier DJ, Gill K, Ondrak KS, McMurray RG. Calibration of two objective measures of physical activity for children. J Sports Sci. 2008;26(14):1557–65. https://doi.org/10.1080/02640410802334196.

Sadeh A, Lavie P, Scher A, Tirosh E, Epstein R. Actigraphic home-monitoring sleep-disturbed and control infants and young children: a new method for pediatric assessment of sleep-wake patterns. Pediatrics. 1991;87(4):494–9.

Redmond DP, Hegge FW. Observations on the design and specification of a wrist-worn human activity monitoring system. Behav Res Methods Instrum Comput. 1985;17(6):659–69. https://doi.org/10.3758/BF03200979.

Sitnick SL, Goodlin-Jones BL, Anders TF. The use of actigraphy to study sleep disorders in preschoolers: some concerns about detection of nighttime awakenings. Sleep. 2008;31(3):395–401. https://doi.org/10.1093/sleep/31.3.395.

Ekblom O, Nyberg G, Bak EE, Ekelund U, Marcus C. Validity and comparability of a wrist-worn accelerometer in children. J Phys Act Health. 2012;9(3):389–93. https://doi.org/10.1123/jpah.9.3.389.

Kelly LA, Villalpando J, Carney B, Wendt S, Haas R, Ranieri BJ, et al. Development of Actigraph GT1M accelerometer cut-points for young children aged 12–36 months. J Athl Enhanc. 2016;5(4):1–4. https://doi.org/10.4172/2324-9080.1000235.

Puyau MR, Adolph AL, Vohra FA, Butte NF. Validation and calibration of physical activity monitors in children. Obes Res. 2002;10(3):150–7. https://doi.org/10.1038/oby.2002.24.

Schaefer CA, Nigg CR, Hill JO, Brink LA, Browning RC. Establishing and evaluating wrist cutpoints for the GENEActiv accelerometer in youth. Med Sci Sports Exerc. 2014;46(4):826–33. https://doi.org/10.1249/MSS.0000000000000150.

Sun DX, Schmidt G, Teo-Koh SM. Validation of the RT3 accelerometer for measuring physical activity of children in simulated free-living conditions. Pediatr Exerc Sci. 2008;20(2):181–97. https://doi.org/10.1123/pes.20.2.181.

Sitnick SL, Goodlin-Jones BL, Anders TF. The use of actigraphy to study sleep disorders in preschoolers: some concerns about detection of nighttime awakenings. Sleep. 2008;31(3):395–401. https://doi.org/10.1093/sleep/31.3.395.

Altenburg TM, Wang X, van Ekris E, Andersen LB, Møller NC, Wedderkopp N, et al. The consequences of using different epoch lengths on the classification of accelerometer based sedentary behaviour and physical activity. PLoS One. 2021;16(7):e0254721. https://doi.org/10.1371/journal.pone.0254721.

Acknowledgements

Not applicable.

Funding

This review is part of the “My Little Moves” project that is funded by ZonMw (546003008) and the Bernard van Leer foundation. The funding bodies had no role in the design of the study; in the collection, analysis, and interpretation of the data; or in the writing of the manuscript.

Author information

Contributions

AL, MC, TA, and VH conceived the study. AL and TA screened all articles for eligibility. AL and JA extracted the data. AL, MC and TA rated the methodological study quality. AL completed data analysis. AL drafted the manuscript. MC, TA, and VH revised and edited significant sections of the manuscript. All authors reviewed and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

Annelinde Lettink, Teatske M. Altenburg, Jelle Arts, Vincent T. van Hees, and Mai J. M. Chinapaw declare that they have no competing interests.

Supplementary Information

Additional file 1. Search strategy using MEDLINE for studies evaluating accelerometer-based methods.

Additional file 2. CAMQAM: Checklist for Assessing the Methodological Quality of studies using Accelerometer-based Methods.

Additional file 3. Description of the elements and the corresponding codes to describe accelerometer-based methods.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Lettink, A., Altenburg, T.M., Arts, J. et al. Systematic review of accelerometer-based methods for 24-h physical behavior assessment in young children (0–5 years old). Int J Behav Nutr Phys Act 19, 116 (2022). https://doi.org/10.1186/s12966-022-01296-y

DOI: https://doi.org/10.1186/s12966-022-01296-y