Abstract

Background

This systematic review and meta-analysis identified early evidence quantifying the disruption of health worker education by the COVID-19 pandemic, the ensuing policy responses, and their outcomes.

Methods

Following a pre-registered protocol and PRISMA/AMSTAR-2 guidelines, we systematically screened MEDLINE, EMBASE, Web of Science, CENTRAL, clinicaltrials.gov and Google Scholar from January 2020 to July 2022. We pooled proportion estimates via random-effects meta-analyses and explored subgroup differences by gender, occupational group, training stage, WHO regions/continents, and study end-year. We assessed risk of bias (Newcastle–Ottawa scale for observational studies, RoB2 for randomized controlled trials [RCTs]) and rated evidence certainty using GRADE.

Results

Of the 171 489 publications screened, 2 249 were eligible, comprising 2 212 observational studies and 37 RCTs and representing feedback from 1 109 818 learners and 22 204 faculty. The sample consisted mostly of undergraduates, medical doctors, and studies from institutions in Asia. Perceived training disruption was estimated at 71.1% (95% confidence interval 67.9–74.2) and learner redeployment at 29.2% (25.3–33.2). About one in three learners screened positive for anxiety (32.3%, 28.5–36.2), depression (32.0%, 27.9–36.2), burnout (38.8%, 33.4–44.3) or insomnia (30.9%, 20.8–41.9). Policy responses included shifting to online learning, innovations in assessment, COVID-19-specific courses, volunteerism, and measures for learner safety. Most of the literature on the outcomes of policy responses concerned perceptions and preferences. About three-quarters of learners (75.9%, 74.2–77.7) were satisfied with online learning (postgraduates more than undergraduates), while faculty satisfaction was slightly lower (71.8%, 66.7–76.7). Learners preferred an in-person component: blended learning 56.0% (51.2–60.7), face-to-face 48.8% (45.4–52.1), and online-only 32.0% (29.3–34.8). They supported continuation of the virtual format as part of a blended system (68.1%, 64.6–71.5). Subgroup analyses provided useful insights but did not resolve the considerable heterogeneity. All outcomes were assessed as very-low-certainty evidence.

Conclusion

The COVID-19 pandemic has severely disrupted health worker education, inflicting a substantial mental health burden on learners. Its impacts on career choices, volunteerism, pedagogical approaches and mental health of learners have implications for educational design, measures to protect and support learners, faculty and health workers, and workforce planning. Online learning may achieve learner satisfaction as part of a short-term solution or integrated into a blended model in the post-pandemic future.


Background

The Coronavirus Disease 2019 (COVID-19) pandemic has affected human health to an unprecedented degree: more than 569 million cases had been reported by July 2022, and an estimated 14.9 million excess deaths were reported in May 2022 [1]. This has been accompanied by profound disruption to health worker education, due to physical distancing, restricted access to learning facilities and clinical sites, or learner and faculty infection or illness [2, 3]. In response, many institutions rapidly embraced digital innovation and other policy responses to support continued learning [4].

Building on an earlier review by the same authors [5], this paper seeks to quantify the educational innovations and their outcomes since the start of the pandemic, as documented in published studies [6, 7], capturing different regions, levels of training, and occupations [8]. The pertinent challenge is how to translate this evidence into enduring policies, strategy and regulation on the instruction, assessment and well-being of health worker learners [9], in accordance with the WHO Global Strategy on Human Resources for Health: Workforce 2030 [10].

The aim of this systematic review and meta-analysis is to identify and quantify the impact of COVID-19 on the education of health workers worldwide, the resulting policy responses, and their outcomes, providing evidence on emerging good practices to inform policy change.

A graphical abstract summarizing our systematic review and meta-analysis is presented in Fig. 1.

Methods

Study design

We conducted a systematic review and meta-analysis in accordance with the A Measurement Tool to Assess Systematic Reviews 2 (AMSTAR-2) checklist [11] and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 statement [12], based on a predefined protocol registered with PROSPERO (CRD42021256629) [13].

Search strategy

We searched the MEDLINE (via PubMed), EMBASE, Web of Science, and CENTRAL databases, as well as ClinicalTrials.gov and Google Scholar (first 300 records) for randomized controlled trials (RCTs) or observational studies published from 1/1/2020 to 31/07/2022 in English, French or German (full search strategy available in Additional file 1). A snowball approach was also employed.

Eligibility criteria and outcomes

Our eligible population included health worker (HW) learners or faculty, as defined by the health professionals group of the International Standard Classification of Occupations (ISCO-08) [14], excluding veterinarians. Health care settings per the Classification of Health Care Providers (International Classification for Health Accounts, ICHA-HP) [15] and relevant educational settings (e.g., universities, colleges) were considered eligible. The included population was divided into undergraduate learners, postgraduate learners (e.g., residents or fellows) and learners in continuing (in-service) education [16]. Any changes or innovations implemented in health worker education in response to the COVID-19 pandemic were considered eligible; changes introduced before the pandemic or in response to other pandemics were not. Online training methods were subdivided into predominantly theoretical courses, courses with a practical component (e.g., practical skills, simulation-based training), congresses/meetings, interviews, and clinical experience with patients (e.g., clinical rotations/electives, telehealth-based training). Comparators included conventional practices in place before the pandemic.

The study outcomes are organized along three axes (Table 1): (1) the impact of the COVID-19 pandemic on the educational process and on learners' mental health; (2) policy responses (not included in the meta-analysis); and (3) outcomes of those policy responses. The meta-analyzed outcomes were, for axis 1, clinical training disruption, mental health (i.e., anxiety, depression, insomnia and burnout), and disruption of learners' career plans (e.g., redeployment); and, for axis 3, satisfaction with, preference for and performance in new training and assessment modalities, and volunteerism, including any social, community or institutional work. For anxiety and depression, individuals whose symptom severity was moderate or higher on validated measurement scales were considered affected. For the Generalized Anxiety Disorder-7 (GAD-7) and Patient Health Questionnaire-9 (PHQ-9) screening tools, this corresponded to a score of 10 or higher.
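As an illustration of the case definition above, the sketch below counts a respondent as affected when their GAD-7 or PHQ-9 total reaches 10. The band labels follow the commonly published scoring bands for these instruments; the function and constant names are hypothetical, and the included studies generally reported aggregate counts rather than individual scores.

```python
# Commonly published severity bands (lower bound of each band) for the two screeners.
SEVERITY_BANDS = {
    "GAD-7": [(0, "minimal"), (5, "mild"), (10, "moderate"), (15, "severe")],
    "PHQ-9": [(0, "minimal"), (5, "mild"), (10, "moderate"),
              (15, "moderately severe"), (20, "severe")],
}
MAX_SCORE = {"GAD-7": 21, "PHQ-9": 27}
AFFECTED_CUTOFF = 10  # "moderate or higher" on either scale


def severity(score, scale):
    """Return the severity band label for a total score on the given scale."""
    if not 0 <= score <= MAX_SCORE[scale]:
        raise ValueError(f"{scale} total must lie in 0..{MAX_SCORE[scale]}")
    label = None
    for lower, name in SEVERITY_BANDS[scale]:
        if score >= lower:
            label = name  # keep the highest band whose lower bound is reached
    return label


def proportion_affected(scores, scale):
    """Share of respondents with at least moderate symptoms (total score >= 10)."""
    for s in scores:
        severity(s, scale)  # validates that every score is in range
    return sum(s >= AFFECTED_CUTOFF for s in scores) / len(scores)
```

For example, GAD-7 totals of 3, 10, 14 and 21 give a proportion affected of 0.75.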

Literature search and data extraction

All retrieved records underwent semi-automatic deduplication in EndNote 20 (Clarivate Analytics) [17] and were then transferred to a Covidence library (Veritas Health Innovation, Melbourne, Australia) for title and abstract screening. Pairs of authors independently and blindly screened a random 15% sample of records. After an absolute agreement rate > 95% was achieved (Fleiss’ kappa, 1st phase: 0.872, 99% confidence interval (CI) [0.846–0.898]; 2nd phase: 0.840, 99% CI [0.814–0.866]), the remaining records were screened by a single reviewer, in accordance with the AMSTAR-2 criteria [11]. Pairs of independent reviewers then screened the full texts of the selected studies for eligibility and, for eligible studies, extracted the required data into a predetermined Excel spreadsheet. Screening and data extraction were carried out in two phases: the initial phase (1/1/2020 to 31/8/2021, by AD, ANP, M. Papapanou and MGS) and the updated living phase (1/9/2021 to 31/7/2022, by NRK, AA, DM, MN, CK, M. Papageorgakopoulou). After discussion with the WHO technical partner, we amended the extraction spreadsheet in the updated living phase to also capture descriptions of policies. Satisfaction was extracted either from the authors' direct reports of participants’ satisfaction or from survey questions on participants’ perceptions of their satisfaction with, or the success, usefulness or effectiveness of, the learning activity. Conflicts were resolved by team consensus. Study investigators were contacted for missing data. Studies whose full text or missing data could not be retrieved were categorized as “reports not retrieved”. Studies on overlapping populations were also considered duplicates and removed if they related to the same study period and institution(s) and involved similar populations and author lines; the study with the most comprehensive report was retained.
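The agreement statistic reported above can be illustrated with a minimal implementation of Fleiss' kappa for a table of include/exclude decisions. This is a sketch, not the review's tooling: the function name is hypothetical, the toy counts are invented, and the confidence interval calculation is omitted.

```python
def fleiss_kappa(counts):
    """Fleiss' kappa from a subjects x categories table of rating counts.

    counts[i][j] is the number of raters who assigned record i to category j
    (e.g. j = 0 for "include", j = 1 for "exclude"). Every record must be
    rated by the same number of raters.
    """
    n_subjects = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2 or any(sum(row) != n_raters for row in counts):
        raise ValueError("each record needs the same number (>= 2) of ratings")
    # Observed agreement: share of agreeing rater pairs per record, averaged
    p_bar = sum(
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ) / n_subjects
    # Chance agreement from the marginal proportion of each category
    n_categories = len(counts[0])
    p_j = [sum(row[j] for row in counts) / (n_subjects * n_raters)
           for j in range(n_categories)]
    p_e = sum(p * p for p in p_j)
    return (p_bar - p_e) / (1 - p_e)
```

With two reviewers and four records, `fleiss_kappa([[2, 0], [2, 0], [1, 1], [0, 2]])` gives 7/15 (about 0.467): three of four records show full agreement, but "include" decisions dominate, so chance agreement is also high.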

Risk of bias, publication bias and certainty of evidence

Pairs of the aforementioned reviewers performed the risk-of-bias assessment, and conflicts were resolved by team consensus. Quality was assessed using an adapted version of the Newcastle–Ottawa Scale (NOS) for cross-sectional studies (Additional file 1), the original NOS for cohort and case–control studies, and the revised Cochrane risk-of-bias tool (RoB 2) for RCTs. Publication bias was explored with funnel plots and Egger’s test [18]. Certainty of evidence was assessed using the Grading of Recommendations, Assessment, Development and Evaluations (GRADE) approach [19].
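Egger's test regresses each study's standardized effect (effect divided by its standard error) on its precision (the reciprocal of the standard error); an intercept far from zero suggests funnel-plot asymmetry. The sketch below fits this ordinary least squares regression in plain Python. It is an illustration rather than the review's Stata code: the function name is hypothetical, and it returns the t statistic for the intercept without a p-value, which would come from a t distribution with k − 2 degrees of freedom.

```python
import math


def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses standardized effects (effect / SE) on precision (1 / SE) by
    ordinary least squares; returns (intercept, slope, se_intercept, t_intercept).
    """
    k = len(effects)
    if k < 3:
        raise ValueError("Egger's test needs at least three studies")
    z = [e / s for e, s in zip(effects, std_errors)]  # standardized effects
    x = [1 / s for s in std_errors]                   # precisions
    x_bar, z_bar = sum(x) / k, sum(z) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (zi - z_bar) for xi, zi in zip(x, z)) / sxx
    intercept = z_bar - slope * x_bar                 # asymmetry (bias) coefficient
    rss = sum((zi - intercept - slope * xi) ** 2 for xi, zi in zip(x, z))
    se_int = math.sqrt(rss / (k - 2) * (1 / k + x_bar ** 2 / sxx))
    t_int = intercept / se_int if se_int > 0 else float("nan")
    return intercept, slope, se_int, t_int
```

For the constructed (hypothetical) inputs `effects=[0.4, 0.5, 0.55, 0.8]` and `std_errors=[0.1, 0.2, 0.25, 0.5]`, the standardized effects lie exactly on the line 1 + 0.3 × precision, so the intercept is 1.0 and the slope 0.3.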

Data synthesis

Categorical variables were presented as frequencies (%) and continuous variables as mean (standard deviation [SD]). To dichotomize ordinal data (e.g., Likert-type scales), we used author-provided cut-offs for the respective scales or, if none were provided, the 60th percentile (40th if the scale was reversed). For mental health outcomes, scale-specific cut-offs were derived from the literature.
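The dichotomization rule above can be read in more than one way. The sketch below assumes, as one plausible reading, that the 60th percentile refers to the point 60% of the way along the response scale, so that on a 5-point scale responses of 4 or 5 count as positive (1 or 2 on a reversed scale, using the 40% point). Both this reading and the function names are assumptions; an author-provided cut-off can be passed explicitly instead.

```python
def likert_cutoff(points, percentile=60.0):
    """Assumed rule: the scale value lying `percentile`% of the way from 1 to `points`."""
    return 1 + (points - 1) * percentile / 100


def proportion_positive(counts, cutoff=None, reversed_scale=False):
    """Dichotomize Likert responses into a proportion of "positive" answers.

    counts[i] is the number of respondents choosing value i + 1 on a
    1..len(counts) scale. Positive means value >= cutoff (value <= cutoff for a
    reversed scale). Without an author-provided cutoff, the assumed 60% point
    (40% if reversed) is used.
    """
    points = len(counts)
    if cutoff is None:
        cutoff = likert_cutoff(points, 40.0 if reversed_scale else 60.0)
    if reversed_scale:
        positive = sum(c for value, c in enumerate(counts, start=1) if value <= cutoff)
    else:
        positive = sum(c for value, c in enumerate(counts, start=1) if value >= cutoff)
    return positive / sum(counts)
```

With hypothetical counts `[10, 10, 20, 30, 30]` on a 5-point scale, the default rule gives 0.6 (values 4 and 5), the reversed rule 0.2, and an author-provided cut-off of 3 gives 0.8.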

Analyses were carried out separately for learner and faculty populations. We meta-analyzed Freeman–Tukey (FT) double-arcsine transformed proportions using the DerSimonian and Laird (DL) random-effects model [20,21,22] and used the harmonic mean of the study sample sizes in the back-transformation of pooled FT estimates to proportions [23]. For each meta-analyzed outcome, we report the raw proportion (%), the pooled proportion (%) with its 95% CI, the number of studies (n) and the number of included individuals (N). When applicable, we pooled standardized mean differences (SMDs) using Cohen's method [24]. Statistical heterogeneity was quantified with the I² statistic [25] and classified as substantial (I² = 50–90%) or considerable (I² > 90%) [26].
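To make the pooling steps above concrete, the sketch below applies the Freeman–Tukey double-arcsine transform to each study, pools the transformed values with the DerSimonian–Laird estimator, computes I², and back-transforms with Miller's inversion formula using the harmonic mean of the study sample sizes. It is a simplified illustration under stated assumptions (approximate FT variance 1/(n + 0.5), a normal-based CI, and our own boundary handling in the back-transformation); the function names are hypothetical, and the review's analyses were run in Stata.

```python
import math
from statistics import NormalDist, harmonic_mean


def ft_transform(events, n):
    """Freeman-Tukey double-arcsine value and its approximate variance for one study."""
    t = (math.asin(math.sqrt(events / (n + 1)))
         + math.asin(math.sqrt((events + 1) / (n + 1))))
    return t, 1.0 / (n + 0.5)


def ft_back_transform(t, n):
    """Miller's inversion of a double-arcsine value t for sample size n."""
    # Assumed boundary handling: values at or beyond the transforms of 0/n and n/n
    # are mapped to 0 and 1, where the inversion formula breaks down.
    if t <= math.asin(math.sqrt(1 / (n + 1))):
        return 0.0
    if t >= math.asin(math.sqrt(n / (n + 1))) + math.pi / 2:
        return 1.0
    s = math.sin(t)
    inner = 1 - (s + (s - 1 / s) / n) ** 2
    return 0.5 * (1 - math.copysign(math.sqrt(max(inner, 0.0)), math.cos(t)))


def pool_proportions_dl(events, sizes, level=0.95):
    """DerSimonian-Laird random-effects pooling of FT-transformed proportions."""
    ys, vs = zip(*(ft_transform(e, n) for e, n in zip(events, sizes)))
    k = len(ys)
    w = [1 / v for v in vs]                                  # inverse-variance weights
    sw = sum(w)
    y_fe = sum(wi * yi for wi, yi in zip(w, ys)) / sw
    q = sum(wi * (yi - y_fe) ** 2 for wi, yi in zip(w, ys))  # Cochran's Q
    df = k - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0          # DL between-study variance
    w_re = [1 / (v + tau2) for v in vs]                      # random-effects weights
    mu = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    n_h = harmonic_mean(sizes)                               # used for back-transformation
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return {
        "pooled": ft_back_transform(mu, n_h),
        "ci": (ft_back_transform(mu - z * se, n_h), ft_back_transform(mu + z * se, n_h)),
        "tau2": tau2,
        "I2": i2,
    }
```

For instance, three hypothetical studies with 20 events out of 100 each give a pooled proportion of about 0.20 with τ² = 0 and I² = 0. Transforming before pooling stabilizes the variance of proportions near 0 or 1, which is the usual motivation for the double-arcsine approach.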

Subgroup and sensitivity analyses

We performed subgroup analyses stratified by gender, continent, WHO geographical region, ISCO-08 occupational group, stage of training, and year of undergraduate studies, and computed p-values for subgroup differences, with p < 0.10 indicating statistically significant between-subgroup differences [26]. For outcomes likely to change as the pandemic evolved, such as satisfaction with and preference for learning formats and mental health outcomes, we explored the effect of time through additional subgroup analyses by the year in which data collection was completed (2020 vs 2021 vs 2022). Only subgroups comprising three or more studies are presented and included in the calculation of subgroup-difference p-values; consequently, no subgroup analysis is presented for the 2022 study end-year.
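A common way to obtain a p-value for subgroup differences is the between-subgroup Q test: the pooled subgroup estimates are compared around their inverse-variance weighted mean, and Q is referred to a chi-square distribution with (number of subgroups − 1) degrees of freedom. The sketch below assumes this test, since the text does not name the exact procedure. It drops subgroups with fewer than three studies as described above and uses closed-form chi-square tail probabilities for integer degrees of freedom to avoid external dependencies. The function names and subgroup values are hypothetical.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square variable with integer df (closed forms)."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k < df/2} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return math.exp(-x / 2) * total
    # Odd df: normal tail plus a finite series in odd powers of sqrt(x)
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for k in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * k + 1)
    return total


def subgroup_difference(subgroups, min_studies=3):
    """Between-subgroup Q test.

    subgroups maps name -> (pooled_estimate, standard_error, n_studies), all on the
    same (e.g. transformed) scale. Returns (Q_between, df, p) or None if fewer than
    two subgroups remain after dropping those with < min_studies studies.
    """
    kept = {g: (est, se) for g, (est, se, k) in subgroups.items() if k >= min_studies}
    if len(kept) < 2:
        return None
    w = {g: 1 / se ** 2 for g, (_, se) in kept.items()}
    overall = sum(w[g] * est for g, (est, _) in kept.items()) / sum(w.values())
    q_between = sum(w[g] * (est - overall) ** 2 for g, (est, _) in kept.items())
    df = len(kept) - 1
    return q_between, df, chi2_sf(q_between, df)
```

For example, `subgroup_difference({"2020": (0.0, 1.0, 10), "2021": (2.0, 1.0, 8), "2022": (5.0, 1.0, 2)})` excludes the 2022 subgroup and returns Q = 2 on 1 df with p ≈ 0.157, which would not meet the p < 0.10 threshold.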

Sensitivity analyses excluding studies with N > 25 000 were performed to minimize the risk of duplicate populations introduced by large-scale nationwide studies. For anxiety, depression and burnout, further sensitivity analyses were restricted to studies using the GAD-7, PHQ-9 and Maslach Burnout Inventory (MBI, including its variants), respectively, and, further still, to the low-risk-of-bias subsets of those studies.

To better account for the anticipated substantial heterogeneity, two additional meta-analytical approaches were used: (i) the Paule–Mandel estimator to calculate the between-study variance [27]; and (ii) the Hartung–Knapp method for the CI calculation [28].
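The two alternative approaches can be sketched as follows. The Paule–Mandel estimator chooses τ² so that the generalized Q statistic equals its expected value, k − 1; here the root is found by bisection. The Hartung–Knapp interval rescales the variance of the pooled estimate and uses a t critical value with k − 1 degrees of freedom, which the caller passes in to avoid a SciPy dependency. Combining the two in one example is our choice for illustration; inputs are effect estimates and within-study variances on any scale (e.g., FT-transformed proportions), and the function names are hypothetical.

```python
import math


def generalized_q(y, v, tau2):
    """Weighted squared deviations from the weighted mean for a given tau^2."""
    w = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y))
    return q, mu, w


def paule_mandel_tau2(y, v, tol=1e-10, max_iter=500):
    """Paule-Mandel tau^2: solve generalized Q(tau^2) = k - 1 by bisection."""
    df = len(y) - 1
    if generalized_q(y, v, 0.0)[0] <= df:
        return 0.0                 # no excess dispersion: truncate at zero
    lo, hi = 0.0, 1.0
    while generalized_q(y, v, hi)[0] > df:
        hi *= 2                    # Q decreases in tau^2, so expand until bracketed
    for _ in range(max_iter):
        mid = (lo + hi) / 2
        if generalized_q(y, v, mid)[0] > df:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return (lo + hi) / 2


def hartung_knapp_ci(y, v, tau2, t_crit):
    """Pooled estimate with a Hartung-Knapp interval; t_crit is the t quantile on k - 1 df."""
    q, mu, w = generalized_q(y, v, tau2)
    var_hk = q / ((len(y) - 1) * sum(w))
    half_width = t_crit * math.sqrt(var_hk)
    return mu, mu - half_width, mu + half_width
```

With hypothetical inputs y = [−1, 1] and v = [1, 1], the Paule–Mandel estimate is τ² = 1, and with t = 12.706 (the 97.5th percentile of t on 1 df) the Hartung–Knapp interval is (−12.706, 12.706), illustrating how wide such intervals become when few studies are pooled.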

Unless otherwise stated, statistical significance was set at a two-sided p < 0.05. All analyses were conducted on aggregate data using Stata version 16.1 (Stata Corporation, College Station, TX, USA). Further details of the statistical approaches are provided in Additional file 1.

Results

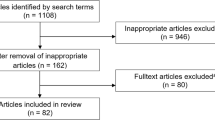

The literature search yielded a total of 171 489 publications (168 102 from databases and 3 387 from snowball and Google Scholar). Following deduplication and title-abstract screening, a total of 10 525 publications (7 214 from database/register search, and 3 311 from snowball/Google Scholar) were assessed for eligibility, of which a total of 2 249 were included in the systematic review. Of these, 2 212 were observational studies (2 079 cross-sectional), and 37 RCTs. The PRISMA 2020 flow diagram is available in Fig. 2. All our included studies are cited in Additional file 2.

Overall, 1 149 073 individuals (1 109 818 learners [96.6%], 22 204 faculty [1.9%], 12 544 combined learner and faculty participants [1.1%], and 4 507 education leaders representing institutions [0.4%]) across 109 countries from 6 continents/WHO regions were included. The total number of women was 468 966 (63.4%) out of 739 127 participants whose gender was reported. Of the studies included in the meta-analysis and pertaining to the impact of the pandemic, 314 focused on training disruption, 193 on career plans disruption, and 287 on the mental health of learners; regarding the outcomes of policy responses, 1013 studies focused on innovations in learning, 121 on online assessment methods and 48 on volunteerism.

Characteristics of included individuals and settings per outcome are available in Table 2A, B, Additional file 3 and Additional file 4. The sample mostly represented undergraduate learners (81.4%), within the field of medicine (86.5%), in studies originating from institutions in Asia (59.9%) and the Western Pacific WHO Region (WPR, 40.7%).

Thirty-seven RCTs were included: 20 were assessed as at high risk of bias, 12 at low risk of bias, and 5 as having some concerns. Most compared newly developed virtual, gamified or in-person learning for medical or nursing students during the COVID-19 pandemic with previously established teaching methods, and most showed better learning outcomes with the innovative modalities, although some found no significant difference. More details are available in Additional file 5. Based on the NOS and adapted NOS, the median (Q1–Q3) quality score of all observational studies was 6 (4–7) [5 (4–7) for cross-sectional studies; 6 (5–7) for retrospective cohorts; 5 (4–7) for prospective cohorts; and 7 (6–7) for case–control studies] (Additional file 3).

The main results of our systematic review and meta-analysis are presented below, along with the most noteworthy subgroup results. Figures 3 and 4 depict the main meta-analysis outcomes for axes 1 and 3 (i.e., impact of the pandemic on health worker education and outcomes of policy responses; Table 1). All subgroup analyses by gender, ISCO-08 group, continent, WHO region, training level and undergraduate year of studies are detailed in Tables 3–15, and the full set of analyses is available in Additional file 6.

Fig. 3 Meta-analysis of the impact of COVID-19 on health worker education. Random-effects meta-analyses of proportions reflecting the impact of the pandemic on health worker education. A Disruption of learning, redeployment, changes of career plans and potential prolongation of studies. B Mental health effects of the pandemic on learners. Each analysis is depicted as a circular data marker; the horizontal lines indicate the 95% confidence intervals (CI). The “raw proportion (%)” is derived from simple weighted division. I² quantifies heterogeneity, which is statistically significant (p < 0.01) in all cases (p-values not shown).

Fig. 4 Meta-analysis of outcomes of policy responses. Random-effects meta-analyses of proportions reflecting the outcomes of policy and management responses to the pandemic. A Learner and faculty perceptions of online and blended learning. B Satisfaction with online assessments and volunteerism initiatives. Each analysis is depicted as a circular data marker; the horizontal lines indicate the 95% confidence intervals (CI). The “raw proportion (%)” is derived from simple weighted division. I² quantifies heterogeneity, which is statistically significant (p < 0.01) in all cases (p-values not shown).

Impact of the pandemic on health worker education

The widespread disruption of undergraduate, graduate and continuing education of health workers due to closures and physical distancing has been clearly reported since the start of the pandemic [5]. Studies reported the complete or temporary cessation of in-person educational activities, including classes and patient contact [29, 30], in many cases affecting both pre-clinical and clinical face-to-face learning. For undergraduate learners in particular, bedside education was initially halted to protect learners [31]. During residency training, the main disruptions identified were reduced case volumes [32, 33], especially in surgical training [34, 35], less time available for learners to spend in the hospital [36], or, conversely, increased workload, especially in COVID-related specialties. Other activities, including in-person scientific conferences, were discontinued [37]. Timely graduation was jeopardized [38], required examinations were canceled [39] and graduates were unable to apply for their next steps [40].

Disruption to clinical training

Most studies surveying training disruption focused on learners in clinical settings. Overall, self-perceived disruption of training during the pandemic was estimated at 71.1% (95% confidence interval: 67.9–74.2) and varied by WHO region, with the highest disruption observed in the South-East Asia Region (SEAR) (Table 3). When surveyed, 75.8% (71.4–79.9) of learners reported decreased exposure to invasive procedures, such as surgeries or endoscopies, whereas disruption of outpatient or inpatient clinical activity and of non-invasive procedures was somewhat lower (69.7%, 64.4–74.9). Because of the disruption, 44.7% (39.2–50.2) of learners wanted to prolong their training, presumably to cover educational gaps.

Disruption of career plans

Learners were sometimes redeployed from their training programs to support the COVID-19 response [41,42,43]. An estimated 29.2% (25.3–33.2) of clinical learners were redeployed during the pandemic to fulfill new roles, either caring for COVID-19 patients or meeting other clinical needs associated with the pandemic response (e.g., covering a non-COVID-19 unit because of health worker shortages). Redeployment was more common among learners in the WHO European Region (EUR) (35.2%, 28.8–41.8) than among those in the Region of the Americas (AMR) (24.7%, 19.5–30.3) (Table 4). In addition, 21.5% (16.9–26.1) of learners reported reevaluating their future career plans because of the pandemic.

Mental health of learners: anxiety, depression, burnout, and insomnia

At least moderate anxiety, measured with validated scales, was estimated at 32.3% (28.4–36.1). Notably, pharmacy learners reported higher anxiety than any other occupational group, undergraduate learners scored higher than graduate learners, female learners scored higher than males, and learners in the WPR scored lower than those in any other WHO region. Learners surveyed in 2021 also showed higher anxiety rates than those surveyed in 2020 (Table 5).

Based on validated instruments, at least moderate depression was prevalent in 32.0% of learners (27.8–36.2), with undergraduates showing higher rates than graduate learners, learners in South America and Africa showing higher rates than those on other continents, and learners in the WPR showing lower rates than those in any other WHO region (Table 5). Sensitivity analyses restricted to studies using the GAD-7 or PHQ-9 yielded similar estimates (32.1% for anxiety, 32.8% for depression). Pooled mean GAD-7 and PHQ-9 learner scores were 7.00 (6.22–7.79) and 6.83 (5.72–7.95), respectively.

Burnout was prevalent in 38.8% of learners (33.4–44.2); a sensitivity analysis restricted to studies using the MBI yielded 46.8% (28.5–55.0). Finally, insomnia was estimated at 30.9% (20.3–41.5), with significantly higher rates reported in 2021 than in 2020 (Table 5).

Policy and management responses to those impacts

Several policy and management responses by governments, regulatory and accreditation bodies, schools, hospitals, clinical departments, health systems and student organizations were identified. A commonly cited response was the transition of face-to-face learning to online formats [44], including online videos [45], game-based learning [46], virtual clinical placements [34, 47,48,49], virtual simulations [50], remote teaching of practical skills as well as augmented reality [51, 52]. Interviews also transitioned to virtual format after guidance by accreditation bodies [53, 54], and face-to-face conferences were replaced with large-scale virtual conferences [55]. There were also responses relating to online assessment [56].

COVID-19-specific learning was introduced in particular for in-service and postgraduate learners [57], such as workshops on the use of personal protective equipment (PPE) [58,59,60] and simulations for COVID-specific protocols [61, 62]. Institutions published regulations and recommendations safeguarding learners’ health and continued learning [57, 63, 64], while there were interventions to specifically support learners’ mental health [65, 66]. Undergraduate learners were also involved in volunteering towards supporting the COVID-19 response [67, 68]. Another policy response was early graduation of final-year students who could work in a clinical capacity [69]. An overview of the institutions enacting these responses and policies as identified in the second phase of our systematic review is summarized in Table 6.

Outcomes of policy responses

Online and blended learning approaches

Overall, 75.9% (74.2–77.7) of learners were satisfied with online learning. Learners appeared more satisfied with online clinical exposure, such as fully virtual clinical rotations and real patient encounters (86.9%, 79.5–93.1), or online practical courses (85.4%, 82.3–88.2) than with predominantly theoretical courses (67.5%, 64.7–70.3). Satisfaction with virtual congresses was also high (84.1%, 71.0–94.0). Learner satisfaction with virtual methods was lower in the Eastern Mediterranean Region (EMR) and SEAR (Table 7).

Overall, 32.0% (29.3–34.8) of learners preferred fully online learning, fewer than those preferring fully in-person learning (48.8%, 45.4–52.1) or blended learning (56.0%, 51.2–60.7). When asked whether they wished to retain an online-only format, or a blended online and in-person format, 34.7% (30.7–38.8) and 68.1% (64.6–71.5), respectively, responded positively.

With increasing training level (undergraduates vs graduates vs continuing education), preference for online learning rose (29.5% vs 39.7% vs 39.9%) and preference for in-person learning declined (50.9% vs 47.6% vs 30.7%). Learners in the AMR and EUR also expressed greater willingness to keep blended learning after the pandemic (Tables 8, 9, 10, 11, and 12).

Among faculty, 71.8% (66.8–76.8) expressed satisfaction with online methods. Their preferences for online-only, in-person and blended teaching were 25.5% (15.5–35.5), 58.7% (51.6–65.8) and 64.5% (47.8–81.2), respectively, and their willingness to maintain online-only or blended online and in-person teaching after the pandemic was 36.7% (22.3–51.2) and 65.6% (57.1–74.0), respectively.

Overall, the responses were effective, significantly increasing learners' skills scores compared with scores before the response or with those achieved using pre-pandemic comparators (Table 15).

Assessment

Learner satisfaction with online assessments was 68.8% (60.7–76.3). Postgraduate learners were significantly more inclined towards online assessments than undergraduates (86.6% vs 62.5%), and female learners were less satisfied than males (38.7% vs 58.1%). Learners in the EMR and SEAR were less satisfied with online assessment than their colleagues in the EUR and AMR (Table 13). Candidates also achieved significantly higher mean scores in online assessments than in previous in-person assessments, and with innovative assessment formats than with traditional ones [pre vs post: SMD = −0.68 (95% CI −0.96 to −0.40)].
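The SMD above is reported as pre minus post, so a negative value means higher post-response scores. A minimal sketch of Cohen's d with a pooled standard deviation, together with a commonly used large-sample approximation to its sampling variance, is shown below. It treats the two sets of scores as independent groups, which is an assumption: paired pre/post designs would also need the within-person correlation. The function name and the numbers in the usage example are hypothetical.

```python
import math


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Cohen's d (group 1 minus group 2) and an approximate sampling variance."""
    # Pooled standard deviation across the two groups
    pooled_sd = math.sqrt(((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2))
    d = (mean_1 - mean_2) / pooled_sd
    # Common large-sample approximation to var(d) for independent groups
    var_d = (n_1 + n_2) / (n_1 * n_2) + d ** 2 / (2 * (n_1 + n_2))
    return d, var_d
```

For example, `cohens_d(70, 10, 50, 80, 10, 50)` (pre mean 70, post mean 80, SD 10, 50 learners each) returns d = −1.0 with variance 0.045; the negative sign mirrors the pre-minus-post convention of the pooled SMD.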

Volunteerism

Studies investigating learners' willingness to volunteer in the COVID-19 response were also included. Although 62.2% (49.6–74.8) of learners expressed an intention to volunteer, only 27.7% (18.6–36.8) reported engaging in volunteer activity, with undergraduate learners volunteering much more often (pooled estimate 32.4%) than their graduate colleagues (pooled estimate 9.1%) (Table 14, Fig. 4).

A full list of outcomes, forest plots (which show the variation around the pooled estimates more clearly) and funnel plots are available in Additional file 6 and Additional file 7. Publication bias was evident in about one quarter of the analyses. The GRADE certainty of evidence was rated “very low” for all meta-analyzed outcomes. Finally, the alternative meta-analytical approaches applied to our main analyses did not materially change our findings (Additional file 8).

A summary of our main findings can be found in Table 15, with additional interpretation in “Discussion”.

Discussion

A summary and interpretation of our main findings can be found in Table 15.

Impacts of the pandemic on health worker education

Our meta-analysis showed that 71% of learners reported that their clinical training was adversely affected by the pandemic. In a large study surveying medical students from South America, Japan and Europe, 93% reported a suspension of bedside teaching [70]. Trainees in surgical and procedural fields were severely affected: 96% of surgery residents and early-career surgeons in the US reported a disruption in their clinical experience, with an overall 84% reduction in operative volume in the early phases of the pandemic [71]. Most included studies did not provide separate data by type of surgery. In future large-scale disruptions, striking the difficult but crucial balance between patient and trainee safety and the necessary clinical training of health workers should be a policy priority.

The extent of the impact on the mental health of learners is concerning and highlights the need for sufficient resources to support learners and faculty. Our meta-analysis found that about one in three learners had at least moderate anxiety, depression, insomnia, or burnout. These rates appear higher than those reported among health workers, and similar to those in the general population during the pandemic and in pre-pandemic studies of health worker learners. In an umbrella review of depression and anxiety among health workers (not learners) during the pandemic, anxiety and depression were estimated at 24.9% and 24.8%, respectively [72], although most of the included meta-analyses also counted mild anxiety and depression. A subgroup analysis in another meta-analysis estimated moderate or higher anxiety and depression among health workers at 6.88% (4.4–9.9) and 16.2% (12.8–19.9) [73], much lower than our estimates. In the general population, one meta-analysis estimated anxiety and depression at 31.9% (27.5–36.7) and 33.7% (27.5–40.6) [74], similar to our estimates for health worker learners. Lastly, a 2018 meta-analysis reported anxiety (33.7%, 10.1–58.9) and depression (39.2%, 29.0–49.5) among health worker learners [75], suggesting that prevalence may have been similar before and during the COVID-19 pandemic; this warrants further study and policy interventions. Anxiety was significantly higher in 2021 studies than in 2020 studies, suggesting an effect of persisting stressors on mental health and underscoring the need for early intervention to prevent anxiety. Pharmacy learners were significantly more anxious, which may relate to differences in background and in familiarity with the intense clinical environment when hospitals were operating at capacity, compared with their medical and nursing colleagues.

Multiple studies showed that female gender was a risk factor for increased anxiety and depression among health worker learners [71, 76,77,78,79]. In studies that investigated underlying stressors, learners reported high levels of anxiety about their relatives’ health [41, 80,81,82,83], becoming infected with COVID-19 themselves [41, 80, 84, 85], lack of PPE [86, 87], failing their clinical obligations [88], the disruption of educational activities [89, 90], or financial concerns [88, 91, 92]. A UK study on the psychological well-being of health worker learners during the pandemic associated educational disruption with a negative impact on mental health, estimating low well-being at 61.9%, moderate to high perceived stressfulness of training at 83.3% and high presenteeism at 50%, despite high satisfaction with training (90%) [93]. Learners in some disciplines reported a lack of mental health resources and support [93]. A US study found that the lack of a wellness framework and of personal protective equipment predicted increased depression and burnout in surgery residents and early-career surgeons, highlighting the importance of well-designed wellness initiatives and appropriate protection for learners [71]. A summary of protective and exacerbating factors identified in the included studies is available in Table 16. An international study of medical students identified high rates of insomnia (57%) and depressed mood (40%), as well as multiple physical symptoms including headache (36%), eye fatigue (57%) and back pain (49%) [70]. These physical complaints, although important, were not included in our systematic review. Interestingly, daily screen time correlated positively with depression, insomnia and headache. Alcohol consumption declined during the pandemic, whereas cigarette and marijuana use remained unchanged.
Taken together, these findings suggest that trainees’ mental and physical health is associated with multiple factors that policy interventions should target: gender disparities, lack of well-designed wellness frameworks, stressful training, lack of protective equipment and the potential effects of increased screen time. Variants of the MBI also tend to overestimate burnout rates [94], so true burnout rates may be lower than our estimates.

Outcomes of policy responses

Learners’ satisfaction with the rapidly implemented online learning was relatively high (76%), especially when it included patient contact or practical training rather than a purely theoretical approach. However, although learners were relatively satisfied when the alternative was no education, their opinions seemed to change when they were presented with options for the future. Learners preferred face-to-face (49%) and blended (56%) over fully online education (32%). In addition, only about a third of learners (35%) were willing to continue an exclusively online format in the post-pandemic era, whereas most (68%) favored a blended model. The “Best Evidence in Medical Education” series and other systematic reviews, which included only studies published in 2020, showed that the rapid shift to online learning provided an easily accessible tool that minimized the impact of early lockdowns in both undergraduate and graduate education [105,106,107]. Adaptations included telesimulation, live-streaming of surgical procedures and the integration of students to support clinical services remotely. Challenges included the lack of personal interaction and of standardized curricula. All studies showed a high risk of bias and poor reporting of the educational setting and theory [105]. Our meta-analysis of relevant studies from 2020 to mid-2022 showed that integrating practical skills training into online courses led to higher satisfaction rates, consistent with a well-known preference for active learning among health workers. Satisfaction with and preference for online learning were significantly higher among postgraduate and continuing education learners than among undergraduates, suggesting that online learning may be better suited to advanced learners with busy schedules. Greater convenience and the ability to manage one’s time more flexibly and efficiently were frequently reported reasons for satisfaction with and preference for online education [108,109,110,111].
In synchronous learning, interactive formats such as interactive lectures or courses, quizzes, case-based discussions, social media, breakout rooms or journal clubs were associated with increased satisfaction [112,113,114,115,116]. Conversely, in asynchronous learning, the opportunity for self-paced study and more detailed review of study material increased satisfaction [117,118,119]. Limitations of online education included difficulty comprehending material in courses such as anatomy [120,121], as well as a lack of motivation among learners [122,123,124,125]. Another systematic review found that medical students appreciated the ability to interact with patients from home and easier remote access to experts and peer mentoring, whereas they viewed technical issues, reduced engagement and worldwide inequality as negative attributes of online learning [126]. Interestingly, one study comparing medical and nursing student satisfaction across India found high dissatisfaction (42%, compared with 37% satisfaction), which did not differ significantly between the two fields and was higher among first-year students. Supportive faculty were important in increasing satisfaction [121].

We found that learners performed better in online assessments than in prior in-person ones. It is unknown whether this reflects lower demands, inadequate supervision, or changes in the constructive alignment between learning outcomes (e.g., theoretical knowledge) and assessment modality (e.g., multiple-choice questions) [127]. However, online assessment has significant limitations in evaluating hands-on skills. Learners perceived online assessments as less fair, because cheating can be easier [128,129,130], or felt unable to showcase their skills online [131]. Learners preferred open-book assessments that focus on thinking rather than memorization [132], and these may be more appropriate for online assessment. Another systematic review, including studies up to October 2021, examined adaptations of in-person and online clinical examinations for medical students. Overall, online or modified in-person clinical assessment was deemed feasible, yielded scores similar to prior in-person iterations, and was well received by trainees [133].

Although 62% of learners reported a willingness to volunteer, only about one in three actually did. This gap could be due to health risks, lockdowns, lack of opportunity or time, or other factors. As expected, undergraduates, who had more available time, actually volunteered more often than other groups; however, willingness to volunteer was comparable across training levels. Volunteering activities made heavy use of technology and frequently involved telephone outreach and counseling of patients and the public [134,135,136,137]. Students were also employed clinically in hospitals or other settings [138] and assisted with food and PPE donations and other nonclinical activities such as babysitting [139]. Some accrediting institutions responded by recommending that volunteering activities be rewarded with academic credit and adequately supervised [140].

Strengths of our study

To our knowledge, this is the largest systematic review and meta-analysis exploring the impact of the pandemic on the education and mental health of health worker learners. The large volume of data allowed us to perform multiple subgroup analyses and explore potential differences in training disruption, mental health and perceptions of educational innovations. We included health worker learners from all regions of the world, all occupations, and all levels of training. We also undertook sensitivity analyses restricted to a homogeneous sample of higher-quality studies (e.g., pooling only low-risk-of-bias studies that used the GAD-7, PHQ-9 or Maslach Burnout Inventory (MBI) for anxiety, depression or burnout, respectively). These approaches supported the robustness of our findings. Finally, given the dynamic character of the pandemic, we explored the effect of time on outcomes.

Limitations of our study

First, although we excluded duplicate publications, there is still a risk of overlap, as learners may have participated anonymously in multiple cross-sectional studies. We attempted to minimize this with sensitivity analyses excluding very large datasets. Second, satisfaction was extracted from studies using a variety of definitions, leading to considerable heterogeneity. While prior experience with virtual learning might have affected learners’ or faculty members’ perceptions, its inconsistent reporting did not allow us to account for it. For similar reasons, we could not quantify mild anxiety and depression. Although multiple significant subgroup differences emerged, heterogeneity remained largely unresolved. Heterogeneity is inherently high in meta-analyses of proportions, and the large number of studies, along with the subjective nature of many outcomes, is partly responsible. The precision of the point estimates (i.e., the narrow observed CIs) is therefore mainly a consequence of the large sample rather than of truly low variation. We therefore advise cautious interpretation and rated the certainty of evidence for all outcomes as very low. Our sample mainly represented undergraduate students, learners in medicine, and institutions in Asia, with reduced representation from Africa, South America and Oceania, so our results should be generalized with caution. However, subgroup analyses provide some insight into intra-group differences. Last, we were unable to include studies published in Spanish, which may partly explain the scarcity of included studies from South America. We did, however, include studies in German and French.
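The distinction between precision and heterogeneity can be illustrated numerically. The sketch below assumes, based on the FT and DL entries in the abbreviation list, a Freeman–Tukey double arcsine transformation pooled with the DerSimonian–Laird random-effects estimator and back-transformed with Miller's formula using the harmonic mean sample size. It is not the authors' analysis code (the review cites Stata's metaprop), and the 400 studies and all function names are hypothetical.

```python
import math


def ft_double_arcsine(events, n):
    """Freeman-Tukey double arcsine transform of a proportion and its variance."""
    t = math.asin(math.sqrt(events / (n + 1))) + math.asin(math.sqrt((events + 1) / (n + 1)))
    return t, 1.0 / (n + 0.5)


def miller_inverse(t, n_harmonic):
    """Miller (1978) back-transformation from the double arcsine scale to a proportion."""
    s, c = math.sin(t), math.cos(t)
    inner = 1 - (s + (s - 1 / s) / n_harmonic) ** 2
    sign = 0.0 if c == 0 else math.copysign(1.0, c)
    return 0.5 * (1 - sign * math.sqrt(max(inner, 0.0)))


def dersimonian_laird(ys, vs):
    """DerSimonian-Laird random-effects pooling; returns estimate, SE, tau^2 and I^2 (0-1)."""
    w = [1 / v for v in vs]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, ys))  # Cochran's Q
    df = len(ys) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c)  # between-study variance
    w_re = [1 / (v + tau2) for v in vs]
    pooled = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    se = math.sqrt(1 / sum(w_re))
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return pooled, se, tau2, i2


def pool_proportions(events, sizes, z=1.96):
    ts, vs = zip(*(ft_double_arcsine(x, n) for x, n in zip(events, sizes)))
    pooled, se, tau2, i2 = dersimonian_laird(ts, vs)
    n_h = len(sizes) / sum(1 / n for n in sizes)  # harmonic mean sample size

    def back(t):
        return miller_inverse(t, n_h)

    return {
        "proportion": back(pooled),
        "ci": (back(pooled - z * se), back(pooled + z * se)),
        # Rough spread of true study proportions (ignores uncertainty in the mean).
        "spread": (back(pooled - z * math.sqrt(tau2)), back(pooled + z * math.sqrt(tau2))),
        "i2_percent": 100 * i2,
    }


# Hypothetical data: 400 studies of 2,000 learners each,
# with observed proportions spread evenly from 20% to 45%.
k, n = 400, 2000
sizes = [n] * k
events = [round((0.20 + 0.25 * i / (k - 1)) * n) for i in range(k)]

result = pool_proportions(events, sizes)
lo, hi = result["ci"]
print(f"pooled {result['proportion']:.3f} (95% CI {lo:.3f}-{hi:.3f}), I^2 = {result['i2_percent']:.1f}%")
print("approximate spread of true proportions: {:.3f}-{:.3f}".format(*result["spread"]))
```

With this many hypothetical studies, the confidence interval around the pooled proportion is very narrow while I² is close to 100% and the implied spread of true proportions is wide, which is the pattern described above: a narrow CI reflects the number of studies, not agreement between them.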

Quality assessment revealed mostly observational studies and self-reported outcomes. RCTs were scarce, and a considerable subset of them was at high risk of bias. Publication bias was also evident in one-fourth of our analyses, potentially leading to overestimated proportions (e.g., higher satisfaction may be reported more eagerly). These findings reflect a broader challenge in the education literature, which tends to capture mostly Kirkpatrick Level 1 data (learner reaction) [141] rather than objective learning assessments or behavioral changes. Moreover, in the early stages of the pandemic, the literature was more likely to report lower-level, immediate outcomes. Future studies will likely capture more objective outcomes, and similar reviews should be repeated. Educational experiences are difficult to standardize and measure, making strict evidence-informed practice difficult [142]. Nevertheless, quantitative evidence of any form can contribute significantly to policy change.
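The reference list cites Egger's regression test, a common way to flag funnel-plot asymmetry and possible publication bias. The following is a minimal, generic sketch assuming that test was the one applied; it is not the review's analysis, the data are hypothetical, and instead of computing a p-value it compares the intercept's t statistic with the two-sided 5% critical value of Student's t on k − 2 degrees of freedom (2.101 for the 20 hypothetical studies).

```python
import math


def egger_test(effects, ses):
    """Egger's regression test for funnel-plot asymmetry.

    Regresses the standardized effect (effect / SE) on precision (1 / SE) by
    ordinary least squares. An intercept far from zero suggests small-study
    effects. Returns (intercept, SE of intercept, t statistic on k - 2 df).
    """
    k = len(effects)
    ys = [e / s for e, s in zip(effects, ses)]  # standardized effects
    xs = [1 / s for s in ses]  # precision
    mx, my = sum(xs) / k, sum(ys) / k
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    rss = sum((y - intercept - slope * x) ** 2 for x, y in zip(xs, ys))
    sigma2 = rss / (k - 2)  # residual variance
    se_intercept = math.sqrt(sigma2 * (1 / k + mx ** 2 / sxx))
    return intercept, se_intercept, intercept / se_intercept


# Hypothetical data: 20 studies with standard errors from 0.05 to 0.24.
ses = [0.05 + 0.01 * i for i in range(20)]
scatter = [0.1 if i % 2 else -0.1 for i in range(20)]  # deterministic noise on the standardized scale
symmetric = [0.3 + d * s for d, s in zip(scatter, ses)]
# Smaller studies (larger SE) report inflated effects.
asymmetric = [0.3 + 0.8 * s + d * s for d, s in zip(scatter, ses)]

T_CRIT_18_DF = 2.101  # two-sided 5% critical value of Student's t with 18 df
for label, effects in (("symmetric", symmetric), ("asymmetric", asymmetric)):
    b0, se_b0, t = egger_test(effects, ses)
    flag = "asymmetry suggested" if abs(t) > T_CRIT_18_DF else "no clear asymmetry"
    print(f"{label}: intercept {b0:.2f} (SE {se_b0:.2f}), t = {t:.1f}: {flag}")
```

The critical value is hard-coded only for this 20-study example; with real data it must match k − 2. A significant intercept signals small-study effects, of which publication bias is only one possible cause.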

Conclusion

Our systematic review and meta-analysis quantified the widespread disruption of health worker education during the early phases of the COVID-19 pandemic. Clinical training was severely disrupted, with many learners redeployed and some expressing a need to prolong their training. About one in three learners screened positive for anxiety, depression, burnout or insomnia. Although learners from all occupations and countries were overall satisfied with new educational experiences, including online learning, indicating a cultural shift towards the acceptability of online learning, they ultimately preferred in-person or blended formats. Learners in regions with lower satisfaction with online learning (e.g., Asian countries, especially in the EMR or SEAR) may need further support and resources to maximize learning opportunities. Our evidence supports the acceptability of a shift to blended learning, especially for postgraduate learners. Blended learning can combine the adaptability and personalization of online learning with in-person consolidation of interpersonal and practical skills, which both learners and educators agree is necessary. Policies should also prioritize prevention, screening, and interventions for anxiety, depression, insomnia, and burnout not only among health workers but also among undergraduate and graduate learners, who are significantly affected. A repeated large-scale review in a few years will be able to capture a more representative sample of countries, occupations and experiences. Our review aspires to inform future studies that will objectively evaluate the effectiveness of ensuing policy and management responses.

Availability of data and materials

The datasets generated and analyzed during the current study are available from the corresponding author upon reasonable request.

Abbreviations

- HW: Health worker
- COVID-19: Coronavirus disease 2019
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- AMSTAR-2: Measurement Tool to Assess Systematic Reviews-2
- AMR: Region of the Americas
- CI: Confidence interval
- EMR: Eastern Mediterranean Region
- EUR: European Region
- FT: Freeman–Tukey
- GAD-7: Generalized Anxiety Disorder-7
- GRADE: Grading of Recommendations Assessment, Development and Evaluation
- ICHA: International Classification for Health Accounts
- ISCO: International Standard Classification of Occupations
- NOS: Newcastle–Ottawa Scale
- PHQ-9: Patient Health Questionnaire-9
- PPE: Personal Protective Equipment
- RoB-2: Risk of Bias 2
- SEAR: South-East Asia Region
- WHO: World Health Organization
- WPR: Western Pacific Region
- SD: Standard deviation
- SMD: Standardized mean difference
- IQR: Interquartile range
- N: Number of individuals
- n: Number of studies
- DL: DerSimonian and Laird
- RCT: Randomized controlled trial

References

COVID-19 coronavirus pandemic. https://www.worldometers.info/coronavirus/#page-top.

Robles-Pérez E, González-Díaz B, Miranda-García M, Borja-Aburto VH. Infection and death by COVID-19 in a cohort of healthcare workers in Mexico. Scand J Work Environ Health. 2021;47:349–55.

Bandyopadhyay S, Baticulon RE, Kadhum M, Alser M, Ojuka DK, Badereddin Y, Kamath A, Parepalli SA, Brown G, Iharchane S, et al. Infection and mortality of healthcare workers worldwide from COVID-19: a systematic review. BMJ Glob Health. 2020;5: e003097.

Stoller JK. A perspective on the educational “SWOT” of the coronavirus pandemic. Chest. 2021;159:743–8.

Dedeilia A, Sotiropoulos MG, Hanrahan JG, Janga D, Dedeilias P, Sideris M. Medical and surgical education challenges and innovations in the COVID-19 era: a systematic review. In Vivo. 2020;34:1603–11.

Daniel M, Gordon M, Patricio M, Hider A, Pawlik C, Bhagdev R, Ahmad S, Alston S, Park S, Pawlikowska T, et al. An update on developments in medical education in response to the COVID-19 pandemic: a BEME scoping review: BEME guide no. 64. Med Teach. 2021;43:253–71.

Papapanou M, Routsi E, Tsamakis K, Fotis L, Marinos G, Lidoriki I, Karamanou M, Papaioannou TG, Tsiptsios D, Smyrnis N, et al. Medical education challenges and innovations during COVID-19 pandemic. Postgrad Med J. 2021;98(1159):321–7.

Seymour-Walsh AE, Bell A, Weber A, Smith T. Adapting to a new reality: COVID-19 coronavirus and online education in the health professions. Rural Remote Health. 2020;20:6000.

Hays RB, Ramani S, Hassell A. Healthcare systems and the sciences of health professional education. Adv Health Sci Educ Theory Pract. 2020;25:1149–62.

World Health Organization. Global strategy on human resources for health: workforce 2030. Geneva: World Health Organization; 2016.

Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, Moher D, Tugwell P, Welch V, Kristjansson E, Henry DA. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358: j4008.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, Shamseer L, Tetzlaff JM, Akl EA, Brennan SE, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372: n71.

Health Worker Education during the COVID-19 pandemic: challenges, responses and lessons for the future—a living systematic review. PROSPERO 2021 CRD42021256629. https://www.crd.york.ac.uk/prospero/display_record.php?ID=CRD42021256629.

International Labour Organization. International standard classification of occupations 2008 (ISCO-08): structure, group definitions and correspondence tables: 2012. Geneva: International Labour Organization; 2016.

World Health Organization. A system of health accounts, 2011 edition. OECD Publishing; 2011.

Sheikh A, Donaldson L, Dhingra-Kumar N, Bates D, Kelley E, Larizgoitia I, Panesar S, Singh C, de Silva D, Markuns J, et al. Education and training: technical series on safer primary care. Geneva: World Health Organization; 2016. Licence: CC BY-NC-SA 3.0 IGO.

Bramer WM, Giustini D, de Jonge GB, Holland L, Bekhuis T. De-duplication of database search results for systematic reviews in EndNote. J Med Libr Assoc. 2016;104:240–3.

Egger M, Smith GD, Schneider M, Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. 1997;315:629–34.

Balshem H, Helfand M, Schunemann HJ, Oxman AD, Kunz R, Brozek J, Vist GE, Falck-Ytter Y, Meerpohl J, Norris S, Guyatt GH. GRADE guidelines: 3. Rating the quality of evidence. J Clin Epidemiol. 2011;64:401–6.

Nyaga VN, Arbyn M, Aerts M. Metaprop: a Stata command to perform meta-analysis of binomial data. Arch Public Health. 2014;72:39.

Barendregt JJ, Doi SA, Lee YY, Norman RE, Vos T. Meta-analysis of prevalence. J Epidemiol Community Health. 2013;67:974–8.

DerSimonian R, Laird N. Meta-analysis in clinical trials. Control Clin Trials. 1986;7:177–88.

Miller JJ. The inverse of the Freeman–Tukey double arcsine transformation. Am Stat. 1978;32:138.

StataCorp LLC. Stata meta-analysis reference manual release 17. College Station: Stata Press; 2021.

Higgins JP, Thompson SG, Deeks JJ, Altman DG. Measuring inconsistency in meta-analyses. BMJ. 2003;327:557–60.

Higgins JP, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA. Cochrane handbook for systematic reviews of interventions. Chichester: Wiley; 2019.

Veroniki AA, Jackson D, Viechtbauer W, Bender R, Bowden J, Knapp G, Kuss O, Higgins JP, Langan D, Salanti G. Methods to estimate the between-study variance and its uncertainty in meta-analysis. Res Synth Methods. 2016;7:55–79.

IntHout J, Ioannidis JPA, Borm GF. The Hartung–Knapp–Sidik–Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian–Laird method. BMC Med Res Methodol. 2014;14:25.

Aghakhani K, Shalbafan M. What COVID-19 outbreak in Iran teaches us about virtual medical education. Med Educ Online. 2020;25:1770567.

Elengickal JA, Delgado AM, Jain SP, Diller ER, Valli CE, Dhillon KK, Lee HK, Baskar R, MacArthur RD. Adapting education at the medical college of Georgia at Augusta university in response to the COVID-19 pandemic: the pandemic medicine elective. Med Sci Educ. 2021;31:1–8.

De Ponti R, Marazzato J, Maresca AM, Rovera F, Carcano G, Ferrario MM. Pre-graduation medical training including virtual reality during COVID-19 pandemic: a report on students’ perception. BMC Med Educ. 2020;20:332.

Kilcoyne MF, Coyan GN, Aranda-Michel E, Kilic A, Morell VO, Sultan I. The impact of coronavirus 2019 on general surgery residency: a national survey of program directors. Ann Med Surg. 2021;65: 102285.

Veerasuri S, Vekeria M, Davies SE, Graham R, Rodrigues JCL. Impact of COVID-19 on UK radiology training: a questionnaire study. Clin Radiol. 2020;75:877.e7–877.e14.

Mallick R, Odejinmi F, Sideris M, Egbase E, Kaler M. The impact of COVID-19 on obstetrics and gynaecology trainees; how do we move on? Facts Views Vis Obgyn. 2021;13:9–14.

Sahin B, Hanalioglu S. The continuing impact of coronavirus disease 2019 on neurosurgical training at the 1-year mark: results of a nationwide survey of neurosurgery residents in Turkey. World Neurosurg. 2021;151:e857–70.

Liles JL, Danilkowicz R, Dugas JR, Safran M, Taylor D, Amendola AN, Herzog M, Provencher MT, Lau BC. In response to COVID-19: current trends in orthopaedic surgery sports medicine fellowships. Orthop J Sports Med. 2021;9:2325967120987004.

Fero KE, Weinberger JM, Lerman S, Bergman J. Perceived impact of urologic surgery training program modifications due to COVID-19 in the United States. Urology. 2020;143:62–7.

Brondani M, Donnelly L. COVID-19 pandemic: students’ perspectives on dental geriatric care and education. J Dent Educ. 2020;84:1237–44.

Escalon MX, Raum G, Tieppo Francio V, Eubanks JE, Verduzco-Gutierrez M. The immediate impact of the coronavirus pandemic and resulting adaptations in physical medicine and rehabilitation medical education and practice. Pm & R. 2020;12:1015–23.

Harries AJ, Lee C, Jones L, Rodriguez RM, Davis JA, Boysen-Osborn M, Kashima KJ, Krane NK, Rae G, Kman N, et al. Effects of the COVID-19 pandemic on medical students: a multicenter quantitative study. BMC Med Educ. 2021;21:14.

Eweida RS, Rashwan ZI, Desoky GM, Khonji LM. Mental strain and changes in psychological health hub among intern-nursing students at pediatric and medical-surgical units amid ambience of COVID-19 pandemic: a comprehensive survey. Nurse Educ Pract. 2020;49: 102915.

Kessler RA, Oermann EK, Dangayach NS, Bederson J, Mocco J, Shrivastava RK. Letter to the Editor: Changes in neurosurgery resident education during the COVID-19 pandemic: an institutional experience from a global epicenter. World Neurosurg. 2020;140:439–40.

Juprasert JM, Gray KD, Moore MD, Obeid L, Peters AW, Fehling D, Fahey TJ 3rd, Yeo HL. Restructuring of a general surgery residency program in an epicenter of the coronavirus disease 2019 pandemic: lessons from New York City. JAMA Surg. 2020;155:870–5.

Hadie SNH, Tan VPS, Omar N, Nik Mohd Alwi NA, Lim HL, Ku Marsilla KI. COVID-19 disruptions in health professional education: use of cognitive load theory on students’ comprehension, cognitive load, engagement, and motivation. Front Med. 2021;8: 739238.

Nomura O, Irie J, Park Y, Nonogi H, Hanada H. Evaluating effectiveness of YouTube videos for teaching medical students CPR: solution to optimizing clinician educator workload during the COVID-19 pandemic. Int J Environ Res Public Health. 2021;18:7113.

Chang CY, Chung MH, Yang JC. Facilitating nursing students’ skill training in distance education via online game-based learning with the watch-summarize-question approach during the COVID-19 pandemic: a quasi-experimental study. Nurse Educ Today. 2022;109: 105256.

Redinger KE, Greene JD. Virtual emergency medicine clerkship curriculum during the COVID-19 pandemic: development, application, and outcomes. West J Emerg Med. 2021;22:792–8.

Luck J, Gosling N, Saour S. Undergraduate surgical education during COVID-19: could augmented reality provide a solution? Br J Surg. 2021;108:e129–30.

Almohammed OA, Alotaibi LH, Ibn Malik SA. Student and educator perspectives on virtual institutional introductory pharmacy practice experience (IPPE). BMC Med Educ. 2021;21:257.

Bourgault A, Mayerson E, Nai M, Orsini-Garry A, Alexander IM. Implications of the COVID-19 pandemic: virtual nursing education for delirium care. J Prof Nurs. 2022;38:54–64.

Mladenovic R, Matvijenko V, Subaric L, Mladenovic K. Augmented reality as e-learning tool for intraoral examination and dental charting during COVID-19 era. J Dent Educ. 2021;86:862–4.

Miles S, Donnellan N. Learning fundamentals of laparoscopic surgery manual skills: an institutional experience with remote coaching and assessment. Mil Med. 2021;187:e1281–5.

D’Angelo AD, D’Angelo JD, Beaty JS, Cleary RK, Hoedema RE, Mathis KL, Dozois EJ, Kelley SR. Virtual interviews—utilizing technological affordances as a predictor of applicant confidence. Am J Surg. 2021;222:1085–92.

Romano R, Mukherjee D, Michael LM, Huang J, Snyder MH, Reddy VP, Guzman K, Lane P, Johnson JN, Selden NR, et al. Optimizing the residency application process: insights from neurological surgery during the pandemic virtual application cycle. J Neurosurg. 2022;137:1–9.

Stamelou M, Struhal W, Ten Cate O, Matczak M, Caliskan SA, Soffietti R, Marson A, Zis P, di Lorenzo F, Sander A, et al. Evaluation of the 2020 European academy of neurology virtual congress: transition from a face-to-face to a virtual meeting. Eur J Neurol. 2021;28:2523–32.

Fitzgerald N, Moylett E, Gaffney G, McCarthy G, Fapohunda O, Murphy AW, Geoghegan R, Hallahan B. Undertaking a face-to-face objective structured clinical examination for medical students during the COVID-19 pandemic. Ir J Psychol Med. 2022. https://doi.org/10.1017/ipm.2022.19.

Findyartini A, Greviana N, Hanum C, Husin JM, Sudarsono NC, Krisnamurti DGB, Rahadiani P. Supporting newly graduated medical doctors in managing COVID-19: an evaluation of a massive open online course in a limited-resource setting. PLoS ONE. 2021;16: e0257039.

Díaz-Guio DA, et al. Cognitive load and performance of health care professionals in donning and doffing PPE before and after a simulation-based educational intervention and its implications during the COVID-19 pandemic for biosafety. Infez Med. 2020;28:111–7.

Engberg M, Bonde J, Sigurdsson ST, Moller K, Nayahangan LJ, Berntsen M, Eschen CT, Haase N, Bache S, Konge L, Russell L. Training non-intensivist doctors to work with COVID-19 patients in intensive care units. Acta Anaesthesiol Scand. 2021;65:664–73.

Li Y, Wang Y, Li Y, Zhong M, Liu H, Wu C, Gao X, Xia Z, Ma W. Comparison of repeated video display vs combined video display and live demonstration as training methods to healthcare providers for donning and doffing personal protective equipment: a randomized controlled trial. Risk Manag Healthc Policy. 2020;13:2325–35.

Trembley LL, Tobias AZ, Schillo G, von Foerster N, Singer J, Pavelka SL, Phrampus P. A multidisciplinary intubation algorithm for suspected COVID-19 patients in the emergency department. West J Emerg Med. 2020;21:764–70.

Santos TM, Pedrosa R, Carvalho D, Franco MH, Silva JLG, Franci D, de Jorge B, Munhoz D, Calderan T, Grangeia TAG, Cecilio-Fernandes D. Implementing healthcare professionals’ training during COVID-19: a pre and post-test design for simulation training. Sao Paulo Med J. 2021;139:514–9.

Dempsey L, Gaffney L, Bracken S, Tully A, Corcoran O, McDonnell-Naughton M, Sweeney L, McDonnell D. Experiences of undergraduate nursing students who worked clinically during the COVID-19 pandemic. Nurs Open. 2022;10(1):142–55.

Mann S, Duffy J, Muffly T, Tilva K, Gray S, Hetzler L, Kraft S, Malekzadeh S, Pletcher S, Cabrera-Muffly C. Effect of the COVID-19 pandemic on otolaryngology trainee surgical case numbers: a multi-institutional review. Otolaryngol Head Neck Surg. 2022;168(1):26–31.

Brazier A, Larson E, Xu Y, Judah G, Egan M, Burd H, Darzi A. ‘Dear Doctor’: a randomised controlled trial of a text message intervention to reduce burnout in trainee anaesthetists. Anaesthesia. 2022;77:405–15.

Eraydin C, Alpar SE. The effect of laughter therapy on nursing students’ anxiety, satisfaction with life, and psychological well-being during the COVID-19 pandemic: randomized controlled study. Adv Integr Med. 2022;9:173–9.

Diamond-Caravella M, Fox A, Clark M, Goodstone L, Glaser C. Alternative capstone nursing experience to scale up testing and case investigation. Public Health Nurs. 2022;39:664–9.

Passemard S, Faye A, Dubertret C, Peyre H, Vorms C, Boimare V, Auvin S, Flamant M, Ruszniewski P, Ricard JD. Covid-19 crisis impact on the next generation of physicians: a survey of 800 medical students. BMC Med Educ. 2021;21:529.

Flotte TR, Larkin AC, Fischer MA, Chimienti SN, DeMarco DM, Fan PY, Collins MF. Accelerated graduation and the deployment of new physicians during the COVID-19 pandemic. Acad Med. 2020;95:1492–4.

Michaeli D, Keough G, Perez-Dominguez F, Polanco-Ilabaca F, Pinto-Toledo F, Michaeli J, Albers S, Achiardi J, Santana V, Urnelli C, et al. Medical education and mental health during COVID-19: a survey across 9 countries. Int J Med Educ. 2022;13:35–46.

Coleman JR, Abdelsattar JM, Glocker RJ, RAS-ACS COVID-19 Task Force. COVID-19 pandemic and the lived experience of surgical residents, fellows, and early-career surgeons in the American college of surgeons. J Am Coll Surg. 2021;232:119–135.e20.

Sahebi A, Nejati-Zarnaqi B, Moayedi S, Yousefi K, Torres M, Golitaleb M. The prevalence of anxiety and depression among healthcare workers during the COVID-19 pandemic: an umbrella review of meta-analyses. Prog Neuropsychopharmacol Biol Psychiatry. 2021;107: 110247.

Pappa S, Ntella V, Giannakas T, Giannakoulis VG, Papoutsi E, Katsaounou P. Prevalence of depression, anxiety, and insomnia among healthcare workers during the COVID-19 pandemic: a systematic review and meta-analysis. Brain Behav Immun. 2020;88:901–7.

Salari N, Hosseinian-Far A, Jalali R, Vaisi-Raygani A, Rasoulpoor S, Mohammadi M, Rasoulpoor S, Khaledi-Paveh B. Prevalence of stress, anxiety, depression among the general population during the COVID-19 pandemic: a systematic review and meta-analysis. Glob Health. 2020;16:57.

Sarkar S, Gupta R, Menon V. A systematic review of depression, anxiety, and stress among medical students in India. J Ment Health Hum Behav. 2017;22:88–96.

Alsairafi Z, Naser AY, Alsaleh FM, Awad A, Jalal Z. Mental health status of healthcare professionals and students of health sciences faculties in Kuwait during the COVID-19 pandemic. Int J Environ Res Public Health. 2021;18:2203.

Almufarriji R, Elarjani T, Abdullah J, Alobaid A, Alturki AY, Aldakkan A, Ajlan A, Lary A, Al Jehani H, Algahtany M, et al. Impact of COVID-19 on Saudi neurosurgery residency: trainers’ and trainees’ perspectives. World Neurosurg. 2021;154:e547–54.

Amin D, Austin TM, Roser SM, Abramowicz S. A cross-sectional survey of anxiety levels of oral and maxillofacial surgery residents during the early COVID-19 pandemic. Oral Surg Oral Med Oral Pathol Oral Radiol. 2021;132:137–44.

Yildirim TT, Atas O. The evaluation of psychological state of dental students during the COVID-19 pandemic. Braz Oral Res. 2021;35: e069.

Michno DA, Tan J, Adelekan A, Konczalik W, Woollard ACS. How can we help? Medical students’ views on their role in the COVID-19 pandemic. J Public Health. 2021;43:479–89.

Palacios Huatuco RM, Liano JE, Moreno LB, Ponce Beti MS. Analysis of the impact of the pandemic on surgical residency programs during the first wave in Argentina: a cross-sectional study. Ann Med Surg. 2021;62:455–9.

Rainford LA, Zanardo M, Buissink C, Decoster R, Hennessy W, Knapp K, Kraus B, Lanca L, Lewis S, Mahlaola TB, et al. The impact of COVID-19 upon student radiographers and clinical training. Radiography. 2021;27:464–74.

Shih G, Deer JD, Lau J, Loveland Baptist L, Lim DJ, Lockman JL. The impact of the COVID-19 pandemic on the education and wellness of US pediatric anesthesiology fellows. Paediatr Anaesth. 2021;31:268–74.

Agius AM, Gatt G, Vento Zahra E, Busuttil A, Gainza-Cirauqui ML, Cortes ARG, Attard NJ. Self-reported dental student stressors and experiences during the COVID-19 pandemic. J Dent Educ. 2021;85:208–15.

Alawia R, Riad A, Kateeb E. Risk perception and readiness of dental students to treat patients amid COVID-19: implication for dental education. Oral Dis. 2020;28(Suppl 1):975.

Osama M, Zaheer F, Saeed H, Anees K, Jawed Q, Syed SH, Sheikh BA. Impact of COVID-19 on surgical residency programs in Pakistan; a residents’ perspective. Do programs need formal restructuring to adjust with the “new normal”? A cross-sectional survey study. Int J Surg. 2020;79:252–6.

Faderani R, Monks M, Peprah D, Colori A, Allen L, Amphlett A, Edwards M. Improving wellbeing among UK doctors redeployed during the COVID-19 pandemic. Future Healthc J. 2020;7:e71–6.

Perrone MA, Youssefzadeh K, Serrano B, Limpisvasti O, Banffy M. The impact of COVID-19 on the sports medicine fellowship class of 2020. Orthop J Sports Med. 2020;8:2325967120939901.

Ostapenko A, McPeck S, Liechty S, Kleiner D. Impacts on surgery resident education at a first wave COVID-19 epicenter. J Med Educ Curric Dev. 2020;7:2382120520975022.

Sanghavi PB, Au Yeung K, Sosa CE, Veesenmeyer AF, Limon JA, Vijayan V. Effect of the coronavirus disease 2019 (COVID-19) pandemic on pediatric resident well-being. J Med Educ Curric Dev. 2020;7:2382120520947062.

Domaradzki J, Walkowiak D. Medical students’ voluntary service during the COVID-19 pandemic in Poland. Front Public Health. 2021;9: 618608.

Ferreira LC, Amorim RS, Melo Campos FM, Cipolotti R. Mental health and illness of medical students and newly graduated doctors during the pandemic of SARS-Cov-2/COVID-19. PLoS ONE. 2021;16: e0251525.

Blake H, Mahmood I, Dushi G, Yildirim M, Gay E. Psychological impacts of COVID-19 on healthcare trainees and perceptions towards a digital wellbeing support package. Int J Environ Res Public Health. 2021;18:10647.

Lim WY, Ong J, Ong S, Hao Y, Abdullah HR, Koh DL, Mok USM. The abbreviated Maslach Burnout Inventory can overestimate burnout: a study of anesthesiology residents. J Clin Med. 2019;9:61.

Mehrzad R, Akiki R, Crozier J, Schmidt S. Mental health outcomes in plastic surgery residents during the COVID-19 pandemic. Plast Reconstr Surg. 2021;148:349e–50e.

Fekih-Romdhane F, Snene H, Jebri A, Ben Rhouma M, Cheour M. Psychological impact of the pandemic COVID-19 outbreak among medical residents in Tunisia. Asian J Psychiatr. 2020;53: 102349.

Aftab M, Abadi AM, Nahar S, Ahmed RA, Mahmood SE, Madaan M, Ahmad A. COVID-19 pandemic affects the medical students’ learning process and assaults their psychological wellbeing. Int J Environ Res Public Health. 2021;18:5792.

Alkhamees AA, Assiri H, Alharbi HY, Nasser A, Alkhamees MA. Burnout and depression among psychiatry residents during COVID-19 pandemic. Hum Resour Health. 2021;19:46.

Basheti IA, Mhaidat QN, Mhaidat HN. Prevalence of anxiety and depression during COVID-19 pandemic among healthcare students in Jordan and its effect on their learning process: a national survey. PLoS ONE. 2021;16: e0249716.

Cao W, Fang Z, Hou G, Han M, Xu X, Dong J, Zheng J. The psychological impact of the COVID-19 epidemic on college students in China. Psychiatry Res. 2020;287: 112934.

Abdessater M, Roupret M, Misrai V, Matillon X, Gondran-Tellier B, Freton L, Vallee M, Dominique I, Felber M, Khene ZE, et al. COVID19 pandemic impacts on anxiety of French urologist in training: outcomes from a national survey. Prog Urol. 2020;30:448–55.

Yadav RK, Baral S, Khatri E, Pandey S, Pandeya P, Neupane R, Yadav DK, Marahatta SB, Kaphle HP, Poudyal JK, Adhikari C. Anxiety and depression among health sciences students in home quarantine during the COVID-19 pandemic in selected provinces of Nepal. Front Public Health. 2021;9: 580561.

Awoke M, Mamo G, Abdu S, Terefe B. Perceived stress and coping strategies among undergraduate health science students of Jimma university amid the COVID-19 outbreak: online cross-sectional survey. Front Psychol. 2021;12: 639955.

Lee CM, Juarez M, Rae G, Jones L, Rodriguez RM, Davis JA, Boysen-Osborn M, Kashima KJ, Krane NK, Kman N, et al. Anxiety, PTSD, and stressors in medical students during the initial peak of the COVID-19 pandemic. PLoS ONE. 2021;16: e0255013.

Grafton-Clarke C, Uraiby H, Gordon M, Clarke N, Rees E, Park S, Pammi M, Alston S, Khamees D, Peterson W, et al. Pivot to online learning for adapting or continuing workplace-based clinical learning in medical education following the COVID-19 pandemic: a BEME systematic review: BEME guide no. 70. Med Teach. 2022;44:227–43.

Khamees D, Peterson W, Patricio M, Pawlikowska T, Commissaris C, Austin A, Davis M, Spadafore M, Griffith M, Hider A, et al. Remote learning developments in postgraduate medical education in response to the COVID-19 pandemic—a BEME systematic review: BEME guide no. 71. Med Teach. 2022;44:466–85.

Lee IR, Kim HW, Lee Y, Koyanagi A, Jacob L, An S, Shin JI, Smith L. Changes in undergraduate medical education due to COVID-19: a systematic review. Eur Rev Med Pharmacol Sci. 2021;25:4426–34.

Miguel C, Castro L, Marques Dos Santos JP, Serrao C, Duarte I. Impact of COVID-19 on medicine lecturers’ mental health and emergency remote teaching challenges. Int J Environ Res Public Health. 2021;18:6792.

Dost S, Hossain A, Shehab M, Abdelwahed A, Al-Nusair L. Perceptions of medical students towards online teaching during the COVID-19 pandemic: a national cross-sectional survey of 2721 UK medical students. BMJ Open. 2020;10: e042378.

Findyartini A, Anggraeni D, Husin JM, Greviana N. Exploring medical students’ professional identity formation through written reflections during the COVID-19 pandemic. J Public Health Res. 2020;9:1918.

Michel A, Ryan N, Mattheus D, Knopf A, Abuelezam NN, Stamp K, Branson S, Hekel B, Fontenot HB. Undergraduate nursing students’ perceptions on nursing education during the 2020 COVID-19 pandemic: a national sample. Nurs Outlook. 2021;69:903–12.

Newall N, Smith BG, Burton O, Chari A, Kolias AG, Hutchinson PJ, Alamri A, Uff C, Brainbook. Improving neurosurgery education using social media case-based discussions: a pilot study. World Neurosurg X. 2021;11: 100103.

Yoo H, Kim D, Lee YM, Rhyu IJ. Adaptations in anatomy education during COVID-19. J Korean Med Sci. 2021;36: e13.

Fabiani MA, Gonzalez-Urquijo M, Cassagne G, Dominguez A, Hinojosa-Gonzalez DE, Lozano-Balderas G, Cisneros Tinoco MA, Escotto Sanchez I, Esperon Percovich A, Vegas DH, et al. Thirty-three vascular residency programs among 13 countries joining forces to improve surgical education in times of COVID-19: a survey-based assessment. Vascular. 2021;30:146–50.

Fatani TH. Student satisfaction with videoconferencing teaching quality during the COVID-19 pandemic. BMC Med Educ. 2020;20:396.

Guo T, Kiong KL, Yao C, Windon M, Zebda D, Jozaghi Y, Zhao X, Hessel AC, Hanna EY. Impact of the COVID-19 pandemic on otolaryngology trainee education. Head Neck. 2020;42:2782–90.

Khalil R, Mansour AE, Fadda WA, Almisnid K, Aldamegh M, Al-Nafeesah A, Alkhalifah A, Al-Wutayd O. The sudden transition to synchronized online learning during the COVID-19 pandemic in Saudi Arabia: a qualitative study exploring medical students’ perspectives. BMC Med Educ. 2020;20:285.

Al-Husban N, Alkhayat A, Aljweesri M, Alharbi R, Aljazzaf Z, Al-Husban N, Elmuhtaseb MS, Al Oweidat K, Obeidat N. Effects of COVID-19 pandemic on medical students in Jordanian universities: a multi-center cross-sectional study. Ann Med Surg. 2021;67: 102466.

Kovacs E, Kallai A, Frituz G, Ivanyi Z, Miko V, Valko L, Hauser B, Gal J. The efficacy of virtual distance training of intensive therapy and anaesthesiology among fifth-year medical students during the COVID-19 pandemic: a cross-sectional study. BMC Med Educ. 2021;21:393.

Thom ML, Kimble BA, Qua K, Wish-Baratz S. Is remote near-peer anatomy teaching an effective teaching strategy? Lessons learned from the transition to online learning during the Covid-19 pandemic. Anat Sci Educ. 2021;14:552–61.

Dutta S, Ambwani S, Lal H, Ram K, Mishra G, Kumar T, Varthya SB. The satisfaction level of undergraduate medical and nursing students regarding distant preclinical and clinical teaching amidst COVID-19 across India. Adv Med Educ Pract. 2021;12:113–22.

Lahon J, Shukla P, Singh A, Jain A, Brahma D, Adhaulia G, Kamal P, Abbas A. Coronavirus disease 2019 pandemic and medical students’ knowledge, attitude, and practice on online teaching-learning process in a medical institute in North India. Natl J Physiol Pharm Pharmacol. 2021;11:774.

Larocque N, Shenoy-Bhangle A, Brook A, Eisenberg R, Chang YM, Mehta P. Resident experiences with virtual radiology learning during the COVID-19 pandemic. Acad Radiol. 2021;28:704–10.

Joshi H, Bhardwaj R, Hathila S, Vaniya VH. Pre-COVID conventional offline teaching v/s intra-COVID online teaching: a descriptive map of preference patterns among first year MBBS students. Acta Med Int. 2021;8:28.

Chugh A, Jain G, Gaur S, Gigi PG, Kumar P, Singh S. Transformational effect of COVID pandemic on postgraduate programme in oral and maxillofacial surgery in India—a trainee perspective. J Maxillofac Oral Surg. 2021;21:1–6.

Wilcha RJ. Effectiveness of virtual medical teaching during the COVID-19 crisis: systematic review. JMIR Med Educ. 2020;6: e20963.

Vishwanathan K, Patel GM, Patel DJ. Medical faculty perception toward digital teaching methods during COVID-19 pandemic: experience from India. J Educ Health Promot. 2021;10:95.

Yu-Fong Chang J, Wang LH, Lin TC, Cheng FC, Chiang CP. Comparison of learning effectiveness between physical classroom and online learning for dental education during the COVID-19 pandemic. J Dent Sci. 2021;16:1281–9.

Zemela MS, Malgor RD, Smith BK, Smeds MR. Feasibility and acceptability of virtual mock oral examinations for senior vascular surgery trainees and implications for the certifying exam. Ann Vasc Surg. 2021;76:28–37.

Jaap A, Dewar A, Duncan C, Fairhurst K, Hope D, Kluth D. Effect of remote online exam delivery on student experience and performance in applied knowledge tests. BMC Med Educ. 2021;21:86.

Alqurshi A. Investigating the impact of COVID-19 lockdown on pharmaceutical education in Saudi Arabia—a call for a remote teaching contingency strategy. Saudi Pharm J. 2020;28:1075–83.

Dave M, Dixon C, Patel N. An educational evaluation of learner experiences in dentistry open-book examinations. Br Dent J. 2021;231:243–8.

Cartledge S, Ward D, Stack R, Terry E. Adaptations in clinical examinations of medical students in response to the COVID-19 pandemic: a systematic review. BMC Med Educ. 2022;22:607.

Bautista CA, Huang I, Stebbins M, Floren LC, Wamsley M, Youmans SL, Hsia SL. Development of an interprofessional rotation for pharmacy and medical students to perform telehealth outreach to vulnerable patients in the COVID-19 pandemic. J Interprof Care. 2020;34:694–7.

Carson S, Peraza LR, Pucci M, Huynh J. Student hotline improves remote clinical skills and access to rural care. PRiMER. 2020;4:22.

Coe TM, McBroom TJ, Brownlee SA, Regan K, Bartels S, Saillant N, Yeh H, Petrusa E, Dageforde LA. Medical students and patients benefit from virtual non-medical interactions due to COVID-19. J Med Educ Curric Dev. 2021;8:23821205211028344.

Hughes T, Beard E, Bowman A, Chan J, Gadsby K, Hughes M, Humphries M, Johnston A, King G, Knock M, et al. Medical student support for vulnerable patients during COVID-19—a convergent mixed-methods study. BMC Med Educ. 2020;20:377.

Gómez-Ibáñez R, Watson C, Leyva-Moral JM, Aguayo-González M, Granel N. Final-year nursing students called to work: experiences of a rushed labour insertion during the COVID-19 pandemic. Nurse Educ Pract. 2020;49: 102920.

Appelbaum NP, Misra SM, Welch J, Humphries MH, Sivam S, Ismail N. Variations in medical students’ educational preferences, attitudes and volunteerism during the COVID-19 global pandemic. J Community Health. 2021;46:1204–12.

Whelan A, Prescott J, Young G, Catanese V, McKinney R. Guidance on medical students’ participation in direct in-person patient contact activities. Washington: Association of American Medical Colleges; 2020.

Kirkpatrick DL. Evaluating training programs: the four levels. 1st ed. San Francisco: Berrett-Koehler; 1994.

Biesta GJJ. Why ‘what works’ still won’t work: from evidence-based education to value-based education. Stud Philos Educ. 2010;29:491–503.

Acknowledgements

We thank Mathieu Boniol, Michelle McIsaac, Amani Siyam and Pascal Zurn from the WHO Health Workforce Department for their thoughtful critical review.

Funding

This review was funded by the Government of Canada (ACT-A Health Workers’ fund) through two grants administered by the World Health Organization (2021/1110248-0, 2022/1270903-0).

Author information

Contributions

AD and MGS conceptualized the study, designed the search strategy, and led the review process. AD, MGS, ANP and MPapapanou developed the protocol, performed the first phase of the literature search and data extraction, performed the data analysis, designed the figures and tables, and drafted the manuscript. ANP and MPapapanou performed the meta-analysis. NRK, AA, DM, MN, CK and MPapageorgakopoulou performed the literature search and data extraction for the second part of the project. MS, EOJ, SF, GC and JC offered guidance on the search strategy, participated in the interpretation of the results, and revised the manuscript for intellectual content. All authors read and approved the final manuscript.

Authors’ information

AD is a medical doctor researching general and pediatric surgery, and medical and surgical education. M. Papapanou is a medical doctor and educator pursuing a career in obstetrics and gynecology, with interests in clinical epidemiology, statistics and data analysis. ANP is a medical doctor currently receiving a scholarship from the Onassis Foundation to pursue postgraduate studies in epidemiology and research methodology, with a special interest in meta-analytical approaches. NRK, AA, DM, MN, CK and M. Papageorgakopoulou are medical students and junior doctors in Greece with prior experience in systematic reviews. MS is a UK-based doctor specializing in gynecological oncology, with a PhD and extensive experience in surgical curriculum development. EOJ is Professor of Anatomy and Dean of the European University Cyprus School of Medicine. She has longstanding experience in creating and assessing medical school curricula in the US, Greece and Cyprus. GC, JC and SF are affiliated with the Health Workforce Department, World Health Organization. MGS is a neurology resident doctor and medical educator with research interests in neuroscience, educational reform and curriculum development.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

MGS has received research funding from Mallinckrodt Pharmaceuticals and the United States Department of Defense, unrelated to the present review. All other authors report no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Additional information on the study methods: full search strategy, extracted variables in a predetermined Excel table, the modified Newcastle–Ottawa Scale (NOS) for cross-sectional studies, and further explanations of the statistical analyses.

Additional file 2.

Citations of all studies included in the systematic review.

Additional file 3.

Descriptive graphs and figures for the demographics and descriptive characteristics of the included individuals.

Additional file 4.

Demographics for each outcome quantitatively synthesized.

Additional file 5.

Characteristics of eligible randomized controlled trials: PICO, findings and Risk of Bias 2 (RoB 2) quality assessment of the 37 included randomized controlled trials.

Additional file 6.

Summarized results of all meta-analyses.

Additional file 7.

Forest plots and funnel plots of all meta-analyses.

Additional file 8.

Sensitivity analyses: alternative data synthesis methods and meta-analytical approaches for the main analyses.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Dedeilia, A., Papapanou, M., Papadopoulos, A.N. et al. Health worker education during the COVID-19 pandemic: global disruption, responses and lessons for the future—a systematic review and meta-analysis. Hum Resour Health 21, 13 (2023). https://doi.org/10.1186/s12960-023-00799-4

DOI: https://doi.org/10.1186/s12960-023-00799-4