Abstract

As the most common examination tool in medical practice, chest radiography has important clinical value in the diagnosis of disease. Thus, the automatic detection of chest disease based on chest radiography has become one of the hot topics in medical imaging research. Based on the clinical applications, the study conducts a comprehensive survey on computer-aided detection (CAD) systems, and especially focuses on the artificial intelligence technology applied in chest radiography. The paper presents several common chest X-ray datasets and briefly introduces general image preprocessing procedures, such as contrast enhancement and segmentation, and bone suppression techniques that are applied to chest radiography. Then, the CAD system in the detection of specific disease (pulmonary nodules, tuberculosis, and interstitial lung diseases) and multiple diseases is described, focusing on the basic principles of the algorithm, the data used in the study, the evaluation measures, and the results. Finally, the paper summarizes the CAD system in chest radiography based on artificial intelligence and discusses the existing problems and trends.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Background

Chest radiography (chest X-ray or CXR) is an economical and easy-to-use medical imaging and diagnostic technique. The technique is the most commonly used diagnostic tool in medical practice and has an important role in the diagnosis of the lung disease [1]. Well-trained radiologists use chest X-rays to detect illnesses, such as pneumonia, tuberculosis, interstitial lung disease, and early lung cancer.

The great advantages of chest X-rays include their low cost and easy operation. Even in underdeveloped areas, modern digital radiography (DR) machines are very affordable. Therefore, chest radiographs are widely used in the detection and diagnosis of the lung diseases, such as pulmonary nodules, tuberculosis, and interstitial lung disease. Chest radiography contains a large amount of information about a patient’s health. However, correctly interpreting the information is always a major challenge for the doctor. The overlapping of the tissue structures in the chest X-ray greatly increases the complexity of the interpretation. For example, detection is challenging when the contrast between the lesion and the surrounding tissue is very low or when the lesion overlaps the ribs or large pulmonary blood vessels. Even for an experienced doctor, it is sometimes not easy to distinguish between similar lesions or to find very obscure nodules. Therefore, the examination of the lung disease in chest X-ray will cause a certain degree of missed detection. The wide application of chest X-rays and the complexity of reading them make computer-aided detection (CAD) systems a hot research topic since the system can help doctors to detect suspicious lesions that are easily missed, thus improving the accuracy of their detection.

The first attempt to establish a computer-aided detection system was in the 1960s [2], and studies have shown that the detection accuracy for the chest disease is improved with a X-ray CAD system as an assistant. Many commercial products have been developed for the clinical applications, including CAD4 TB, Riverain, and Delft imaging systems [3]. However, because of the complexity of the chest X-rays, the automatic detection of the diseases remains unresolved, and most of the existing CAD systems are aimed at the early detection of the lung cancer. A relatively small number of studies are devoted to the automatic detection of the other types of the pathologies [4].

The CAD systems are mainly divided into the following steps: image preprocessing, extracting ROI regions, extracting ROI features, and classifying disease according to the features. The recent development of artificial intelligence (AI) combined with the accumulation of large volumes of medical images opens up new opportunities for building CAD systems in the medical applications. Artificial intelligence methods (including shallow learning and deep learning, etc.), especially deep learning, mainly replace the process of feature extraction and disease classification in the traditional CAD systems. Artificial intelligence methods have also been widely used in image segmentation and bone suppression of chest X-ray. The shallow learning methods are widely used as classifiers to detect diseases, but their performance depends strongly on the extracted hand-crafted features. For the complex chest X-ray images, it takes a long time for researchers to find a good set of features that will be helpful of the CAD performance. Recently, due to the extensive and successful application of deep learning in different image recognition tasks (such as image classification [5,6,7,8] and semantic segmentation [9,10,11,12]), interest has been stimulated in reapplying deep learning to medical images. In particular, advances in deep learning and large database construction have made the algorithm “go beyond” the performance of medical professionals in a variety of medical imaging tasks, including pneumonia diagnosis [13], diabetic retinopathy detection [14], skin cancer classification [15], arrhythmia detection [16], and bleeding identification [17]. Therefore, deep learning methods (especially CNN), which automatically learn image features to classify chest diseases, have become a mainstream trend.

This article reviews the common methods of computer-aided detection of the chest radiographs based on AI. The second section provides commonly used CXRs datasets and general image preprocessing techniques applied to chest radiographs. The third section discusses the detection of single diseases, including tuberculosis, pulmonary nodules, interstitial lung disease and other diseases. The fourth section provides the detection of multiple diseases. The fifth section summarizes the CAD of chest radiography and discusses existing problems and development trends, finally, we conclude the paper in the sixth section.

Datasets and image preprocessing techniques

Datasets

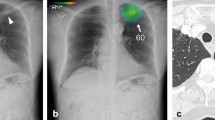

CAD systems can be used to detect various diseases in the chest X-rays. Figure 1 shows the eight most common types of diseases observed in the chest radiograph [23], which are the disease of infiltration, atelectasis, cardiac hypertrophy, effusion, lumps, nodules, pneumonia, and pneumothorax, respectively. The training, validation, testing, and performance comparisons of CAD systems require many chest radiographs. Since creating a large, annotated medical image dataset is not easy, most researchers rely on the following publicly available CXR datasets.

Indiana dataset [18]

The dataset was collected from various hospitals affiliated to the Indiana University School of Medicine. It consists of 7470 chest radiographs including the frontal and lateral images of disease annotations, such as cardiac hypertrophy, pulmonary edema, opacity, or pleural effusion.

KIT dataset [19]

The dataset consists of 10,848 DICOM cases from the Korea Tuberculosis Institute under the Korea Association of Tuberculosis, including 7020 cases of normal and 3828 cases of abnormalities (tuberculosis).

MC dataset [20]

The dataset was collected from the Department of Health and Human Services in partnership with Montgomery County, Maryland in the United States. The group consisted of 138 frontal chest radiographs from the Montgomery County Tuberculosis Screening Program, of which 80 were normal and 58 were tuberculosis with the image sizes as 4020 × 4892 or 4892 × 4020 pixels.

JSRT dataset [21, 22]

The dataset was compiled by the Japanese Society of Radiological Technology (JSRT) and includes 247 chest radiographs, of which 154 have pulmonary nodules (100 malignant and 54 benign), and 93 have no nodules. All of the X-ray images are 2048 × 2048 pixels in size, while the color depth of the grayscale is 12 bits.

Shenzhen dataset [20]

The dataset was collected in collaboration with Shenzhen No. 3 People’s Hospital, Guangdong Medical College, Shenzhen, China. It contains 662 cases of chest X-rays, including 326 normal cases and 336 tuberculosis cases.

Chest X-ray14 dataset [23]

The dataset is extracted from the clinical PACS databases in the hospitals affiliated to National Institutes of Health Clinical Center and consisted of about 60% of all frontal chest X-rays in the hospitals. The dataset contains the X-ray images of 112,120 frontal views of 30,805 patients and the image labels of 14 diseases (each image can have multiple labels) that can be mined from related radiology reports using natural language processing (NLP). The dataset contains 14 common chest pathologies, including atelectasis, consolidation, infiltration, pneumothorax, edema, emphysema, fibrosis, effusion, pneumonia, pleural thickening, cardiomegaly, nodule, mass, and hernia.

Image preprocessing techniques

Computer-aided detection systems usually take input images for a series of preprocessing steps. The main purpose of preprocessing is to enhance the quality of the images and make the ROI (region of interest) more obvious. Thus, the quality of the preprocessing has a large influence on the performance of the subsequent procedures. Typical preprocessing techniques include image enhancement, image segmentation and bone suppression for specific applications in chest X-rays. This section briefly describes these techniques.

Enhancement

Contrast, edge features, and noise in images have a large influence on the classification and identification of lesions. To obtain more details in obscure and low-contrast areas of chest X-ray images, chest radiographs should be enhanced to highlight the structural information and suppress noise. The enhancement of chest X-rays includes contrast enhancement, noise suppression, edge sharpening, and filtering [24,25,26,27]. Contrast enhancement is the process of stretching the brightness value range in an image, which improves the overall or local contrast of the image and makes the image clear. Image sharpening compensates the contour of the image, enhances the edge of the image and the part of the grayscale jump, that is, enhances the image detail information. Noise suppression is the process of image denoising while preserving the details of the image as much as possible. In the image enhancement process, the filtering operation may be used, which can be carried out either in the real domain or in the frequency domain. Filtering is a neighborhood operator that uses the value of the pixels around a given pixel to determine the final output value of that pixel. In general, as a preprocessing step, the image enhancement can help reduce the rate of misdiagnosis without losing image details, introducing excessive noise and causing detail distortions.

Segmentation

In chest radiographs, it is usually necessary to segment the anatomy to obtain the ROI. Due to the different purpose (for detection of pulmonary nodules, cardiomegaly and abnormal asymmetry, etc.) of the tasks in chest radiography, there are many different studies focus on segmentation. Some studies segment the lung fields [28, 29]; others detect the contours of the lung fields [30] or ribs [31]; and a few try to directly detect the diaphragm or the costophrenic angle [32]. Lung field segmentation is the most important because it accurately defines the ROIs of the lung fields, where specific radiological signs, such as lung opacities, cavities, consolidation, and nodules, can be searched. Segmentation methods can be divided into image progressing-based methods and machine learning-based methods. Table 1 summarizes the segmentation methods which are evaluated by the used data, the assessment measures, and the corresponding segmentation results.

-

Image progressing-based methods. The category can be subdivided into rule-based methods and deformable model-based methods. Rule-based algorithms segment the lung region using rules based on the location, intensity, texture, shape, and relationships with other anatomies [33], including thresholding, edge detection, region growth, mathematical morphology operations, geometric models matching methods, etc. [34,35,36,37,38]. Typical examples based on deformable model segmentation are the active shape model (ASM) [39], the active appearance model (AAM) [40], and improvements to both [41,42,43,44].

-

Machine learning-based methods. The category can also be referred to as pixel-based methods. For chest radiographs, each pixel is assigned to a corresponding anatomical structure, such as lung, heart, mediastinum, diaphragm and so on. The classifier can use various features, such as the gray value of the pixel, spatial location information, and texture statistical information. There features are inputted into some classifier, e.g., a k-nearest neighbor (KNN) classifier, support vector machine (SVM), Markov random field (MRF) model, or neural network (NN), to train the classifier. The method can be subdivided into shallow machine learning-based methods and deep learning-based methods.

-

In shallow machine learning-based methods, the feature extraction process is intuitive, and the main challenge is to determine the appropriate categories of the features and extract them in a robust way. Mcnittgray et al. [45] first proposed a method of lung field segmentation using features. The features used were grayscale, a measure of the local difference, and a measure of the local texture. Using KNN, linear discriminant analysis (LDA), and feedforward backpropagation neural network (NN), the method classifies each pixel of a CXR into one of several anatomical categories (heart, sub diaphragm, upper mediastinum, lungs, armpit, and background). The correct percentages were 70%, 70%, and 76% for each of classifier, respectively. Similar to the literature [45], Vittitoe et al. [46] used spatial and texture information to segment CXRs into lungs or non-lungs. They used Markov Random Field to build a model that had high sensitivity, specificity, and accuracy. Shi et al. [47] used an unsupervised approach to segment the lung region in CXRs. They segmented lung fields using fuzzy C-means (FCM) clustering based on Gaussian kernels and space constraints. The method was tested on 52 CXRs of the JSRT dataset and achieved an accuracy of 0.978 ± 0.0213.

-

Shallow learning-based approaches rely on hand-crafted features that can become vulnerable when applied to different patient groups and image qualities. Because the traditional lung segmentation method requires human intervention and a priori knowledge of the dependence of the problems, the deep learning extractor has effectively replaced manual feature extraction. The current application of a more semantic segmentation method is the fully convolutional network (FCN) [48], which retains the advantageous features of SegNet [49], accepts input of any size, and produces output of the same size. Ronneberger et al. [50] improved the FCN to create a U-net structure consisting of a context-grabbing path and a symmetric extension path, allowing for precise positioning and reducing the number of images required for training. Subsequently, U-net was used for biomedical segmentation with good performance. For example, Novikov et al. [51] proposed a multiple image segmentation method based on U-net to segment the lung region and solve the data imbalance problem by associating the a priori class data distribution with a loss function. Additionally, this method goes beyond the advanced methods in the clavicle and heart segmentation tasks. Dai et al. [52] proposed a structure correcting adversarial network (SCAN) framework that uses a confrontational process to develop an accurate semantic segmentation model for segmenting lung fields and the heart in chest X-ray images. This method improves the FCN and achieves segmentation performance comparable to human experts.

-

Bone suppression

Bone suppression is a unique preprocessing technique in chest radiography and is an important preprocessing step in lung segmentation and feature extraction. The ribs and clavicle can block lung abnormalities, which complicates the feature extraction phase of a CAD system. Therefore, there is a need to remove skeletal structures, especially the posterior ribs and clavicle structures, to increase the visibility of the soft tissue density. Suzuki et al. [53] and Loog et al. [54] first proposed the bone suppression technique in 2006. Subsequent research has shown that using bone suppression techniques can improve the performance of pulmonary nodule detection [55,56,57] and can also be used to detect other abnormalities. For example, Li et al. [58] found that bone suppression could increase the performance on recognition of local pneumonia significantly.

A method of removing the skeletal structure of CXRs is mainly applied to dual-energy subtraction (DES) imaging [59]. DES radiography involves the use of X-ray radiation to take two radiographs at high energy and low energy. The two radiographs are then combined using a specific weighting factor to form a subtracted image that highlights soft tissue or skeletal components. However, the use of this technology requires specialized equipment, and only a few hospitals use the DES system.

A better solution is to automatically detect or remove bone structures in chest X-rays based on image processing techniques. Suzuki et al. [53] developed a method to suppress the contrast between ribs and clavicles in a chest X-ray with a multiresolution, large-scale training artificial neural network (MTANN). Subtracting a bone image from the corresponding chest radiograph produces a “soft tissue image”, where the rib and clavicle are substantially suppressed. Nguyen et al. [60] used independent component analysis (ICA) to separate the ribs and other parts of lung images. The results showed that 90% of the ribs could be completely and partially inhibited, and 85% of the cases increased the nodule visibility. Yang et al. [61] used deep convolution neural networks (ConvNets) as the basic prediction unit and proposed an effective deep learning method for single conventional CXR skeletal suppression. The results showed that this method can produce high-quality and high-resolution images of bone and soft tissue. Gordienko et al. [62] detected lung cancer using a deep learning method, which demonstrated the efficiency of the bone suppression technique. The study found that the pretreatment dataset without bones showed better accuracy and loss results.

Specific disease detection

Chest X-rays contain the main respiratory and circulatory organs, maintaining some of the body’s vital life activities. Millions of people suffer from chest disease each year. Tuberculosis, interstitial lung disease (LID), pneumonia, lung cancer, and other diseases are the most common diseases in the world [4]. In chest radiographs, there are three main types of anomalies: texture abnormalities, which are characterized by diffuse changes in the appearance and structure of the area, such as interstitial lesions; focal abnormalities, which are manifested as isolated changes in density, such as pulmonary nodules; and abnormal shape, in which disease processes change the outline of the normal anatomy, such as cardiomegaly. Sometimes, the texture and shape of the chest changes at the same time as a certain disease, such as tuberculosis [63]. The section describes common abnormalities in chest radiographs mainly caused by pulmonary nodules, tuberculosis, interstitial lesions, cardiomegaly, etc.

Pulmonary nodule detection

According to the World Cancer Report, lung cancer is the most common cancer in men and the third most common in women. It is one of the most aggressive human cancers, with a 5-year overall survival of 10–15% [64]. Pulmonary nodules are early manifestations of lung cancer; thus, the early detection and diagnosis of pulmonary nodules is very important for the early diagnosis and treatment of lung cancer. Nodules often appear on chest radiographs as small circular or oval low-contrast tissue masses in the lung region. They are characterized by several features, e.g., large changes in size, large changes in density, uncertainty of location in the lung area, etc. [65]. Creating a lung nodules automatic detection algorithm has always been a difficult but important aspect in the field of medical image CAD. Table 2 summarizes the main methods of pulmonary nodule detection, assessment measures, and results.

To prove that CAD is clinically useful for radiologists in detecting pulmonary nodules on chest radiographs, Kobayashi et al. [66] conducted observer performance studies. In this trial, 60 cases of chest radiographs that contained pulmonary nodules and 60 cases of non-nodular chest radiographs were used. The 16 radiologists who participated in the trial explained chest radiographs without computer-aided and with computer-aided interventions. Radiologists were evaluated for the performance of distinguishing the lung nodule using receiver operating characteristic curves (ROCs). The results showed that CAD systems increased the accuracy of the radiologist’s detection of pulmonary nodules from 0.894 to 0.940.

Traditional pulmonary nodule CAD systems include image preprocessing (enhancement and lung segmentation), candidate nodule detection, and extraction of features to reduce false positives [4, 67]. The purpose of image preprocessing is to enhance nodules, partition lung tissue, remove other tissue areas, and reduce data noise. Candidate nodule detection uses a variety of algorithms to identify as many of the nodules in the image as possible. To enhance the sensitivity of the algorithm to the nodules, this step does not strictly require a false alarm rate. False positive reduction in the suspected concentration removes the non-nodules and reduces the false positive false alarm rate of the system. At present, many algorithms focus on how to improve the detection rate of the nodules while reducing the false positives in the detection results.

To reduce the false positive nodules, the traditional algorithm extracts the features of the candidate nodules and classifies the nodules and non-nodules by the features. These features have a significant impact on the CAD performance [68, 69]. Early studies of lung nodule detection used differences in the candidate shapes at different thresholds as a feature to identify the candidate nodules. However, these methods consider only the intensity and shape of the lung nodule candidates, and they cannot achieve high sensitivity and low false positive rates. Recent studies on pulmonary nodule detection have added gradient features (including intensity and direction) and texture features to identify lung nodules from pre-detected candidates. For example, Wei et al. [70] determined the optimal feature set of 210 features using the forward-step selection method. This method achieved good lung nodule sensitivity. However, with too many features, robustness cannot be guaranteed. Schiham et al. [67] used a multiscale Gaussian filter to extract 96 texture features as well as two location features and 11 lung nodule characterization detector features and classified them using the KNN classifier. Shiraishi et al. [71] extracted 57 image features from original and nodule-enhanced images based on the geometry, grayscale, background texture, and edge gradient features. Fourteen image features were extracted from the corresponding locations in the subtracted images, and three consecutive artificial neural networks (ANNs) were used to reduce the number of false positive candidates. Chen et al. [65] enhanced the nodules in images and used a clustering watershed algorithm to extract the initial candidate nodules. Thirty-one features, including morphology, gray intensity, area, and gradient, were extracted to identify pulmonary nodules using a nonlinear SVM with a Gaussian kernel. Hardie et al. [72] calculated a set of 114 features for each candidate nodule. The final test was performed on a subset of 46 features using the Fisher linear discriminant (FLD) classifier. Ogul et al. [73] used supervised methods to distinguish nodules and non-nodules via a set of representative image features. However, inaccurate feature calculations and segmentation of complex objects can introduce new errors in their performance because hand-crafted features do not adequately represent the nodules; moreover, the design of these features requires specialized prior knowledge.

With the development of convolution neural network (CNN) in recent years, CNN model has proved its performance in image classification and detection. However, the chest radiograph datasets used for lung nodule detection are relatively small, making it might not be very successful to train a complicated pulmonary nodule image neural network from scratch. To accomplish this goal, the following studies explored and used transfer learning [74]. Transfer learning is considered to be an efficient learning technique, especially when faced with relatively limited medical datasets. Compared with the “starting from scratch” way of most other learning models, transfer learning helps train new models by transferring the learned model parameters trained on a large datasets to the new model. Considering that some data or tasks are related, we can share the model parameters (also known as the knowledge) learned from the model into the new model to improve the model performance. Concretely, in the detection of chest X-ray diseases, it is to learn general semantic features (such as edge information, color information, etc.) from classification tasks (such as natural image classification) related to disease detection to improve the generalization of disease detection. The network learns the advanced semantic classification features by self-adjusting on the chest dataset to achieve the purpose of distinguishing specific types of diseases. Bar et al. [75] explored the feasibility of training a CNN with ImageNet, a well-known large scale non-medical image database, and finally the trained CNN model was applied to distinguish the diseases in chest radiograph. The best performance was achieved using a combination of features extracted from the CNN and a set of low-level features including: SIFT, GIST, PHOG and SSIM. This is the first-of-its-kind experiment that shows that deep learning with large scale non-medical image databases may be sufficient for general medical image recognition tasks. Based on this approach, Bush [76] explored the use of the RESNET CNN model by transfer learning the pre-training weights extracted from the ImageNet to classify pulmonary nodules, with a sensitivity of 92% and a specificity of 86%. The model can determine the general nodular area but cannot determine the exact locations of the nodules. Although advanced features can be derived from classical deep learning models that used transfer learning, they are not related to medical image analysis tasks. The greater the gap between features extracted from natural images and those from medical images implies lower transferability of the feature. Wang et al. [77] fused the deep feature obtained from the CNN model used transfer learning and hand-crafted features (geometric features, intensity and contrast features, etc.) to reduce the false positive results. With the guidance of the specific false positives, i.e., 1.19 false positives per image, the sensitivity is 69.27% and the specificity is 96.02%. The low sensitivity is likely due to hand-crafted features being not superior.

Tuberculosis detection

Tuberculosis (TB) is the ninth leading cause of death worldwide and the leading cause from a single infectious agent, ranking above HIV/AIDS. Globally, in 2016, the proportion of people who developed TB and died from the disease (case fatality ratio, CFR) was 16%, which meant that an estimated 10.4 million people (90% adults; 65% male; 10% people living with HIV) fell ill with TB [78]. Detecting tuberculosis in CXRs is a difficult task since it has different manifestations. Abnormal tuberculosis manifestations often affect the lungs’ texture and geometry in CXRs, such as consolidations, infiltrates and cavitation, ranging from subtle miliary patterns to obvious effusions [63, 79]. Table 3 summarizes the methods of tuberculosis detection, assessment measures, and results.

Some studies detected tuberculosis based on the shape, texture, and local characteristics of the lungs, focusing on the general performance. To imitate radiologists for visual detection and diagnosis of the texture features of chest X-ray images, Rohmah et al. [80] used texture features as descriptors to classify images as tuberculosis or non-tuberculosis. The results showed that tuberculosis can be detected based on the statistical features in the image histogram. Tan et al. [81] proposed a tuberculosis index (TI) based on the segmented pulmonary regional texture features and classified the normal and abnormal CXR using a decision tree, and obtained an accuracy rate of 94.9%. Noor et al. [82] proposed a statistical interpretation technique to detect tuberculosis in CXR images. They first applied the wavelet transform to the CXR image, calculated 12 texture measures from the wavelet coefficients, reduced the dimensions with PCA, and estimated the probability of misclassification using the probability ellipsoid and discriminant functions. In addition to extracting texture features, some studies applied bone suppression to pretreat chest radiographs for improving the classification performance. Leibstein et al. [83] used a DES rib block to pretreat chest radiographs and proposed a method based on the local binary pattern (LBP) and Laplacian of Gaussian (LoG) to detect tuberculosis and improve the classification performance. Maduskar et al. [84] compared the automatic tuberculosis detection of conventional CXRs and bone suppression CXRs and found that bone suppression was better than the conventional classification performance due to the diversity of pulmonary tuberculosis manifestations in the chest. Hogeweg et al. [85] improved the detection performance with help of an anomaly detection system and normal anatomy. The combination of texture anomaly detection and clavicle detection reduced false positives. In a study [86], the authors fused the supervisory subsystems for detecting the texture, shape, and focal abnormalities and developed a generic framework for tuberculosis detection.

Another portion of the literature has focused on the detection of specific manifestations, such as diffuse opacity, effusion, cavities, and nodule lesions. Song et al. [87] proposed a method to locate focal opacities in tuberculosis. These investigators studied the initial extraction of rib threads. After locating the ribs, morphological opening operations and seed growth methods were used to automatically locate the focal opacity. However, handling images that have blots or have no visible features on the border is not sufficient and can even lead to misjudgment. Shen et al. [88] proposed a Bayesian classification method based on hybrid knowledge to automatically detect tuberculosis cavities in CXRs. The gradient inverse coefficient of variation (GICOV) describes the texture (area boundary), and the circular measure describes the shape of the latent cavity. This method is the first automatic algorithm that detects tuberculosis accurately but uses a global adaptive threshold in such a way that automatic initialization cannot place the initial contour within the cavity, leaving a cavity. Xu et al. [89] classified tuberculosis cavities by combining texture and geometric features. First, rough feature classification was performed using Gaussian model-based template matching (GTM), LBP, and directional gradient histogram (HOG) methods to extract cavity candidates from CXR images. These candidates were then further refined using Hessian matrix eigenvalues and snake-based techniques by means of active contouring. In the final phase, SVM was used to reduce the false positives by further narrowing the enhanced cavity candidates at finer scales.

Most prior CAD algorithms used well-designed morphological features to distinguish different types of lesions and to improve the screening performance. However, such manual features do not guarantee the best description of tuberculosis classification. Recently, the role of deep learning in tuberculosis classification has proven to be effective. Hwang et al. [90] proposed the first CNN-based automatic tuberculosis detection system. To overcome the difficulty of training deep NNs, the author adopted a transfer learning strategy to improve the system’s performance. Lakhani et al. [91] used radiologist-enhanced methods to further improve the accuracy in cases of ambiguous classification, and they obtained an AUC of 0.99.

Interstitial lung disease detection

The interstitial lung is support tissue outside the alveolar and terminal airway epithelium. When the interstitial lung is damaged, the chest radiograph indicates changes in the texture of the lung [92], such as linear, reticular, nodular, honeycomb, etc. [93]. Interstitial lung disease (ILD) is a group of basic pathological lesions with diffuse pulmonary parenchyma, alveolar inflammation, and interstitial fibrosis, including interstitial pulmonary edema, allergic pneumonia, idiopathic pulmonary interstitial fibrosis, sarcoidosis, and lung lymphatic cancer [94, 95]. Cases with different interstitial lesions behave very similarly on light sheets, even for professionals, it is difficult to distinguish between normal and non-normal tissue based on texture. Therefore, the detection of ILD in chest radiography is one of the most difficult tasks for radiologists.

Earlier articles used CAD systems to detect ILD in chest radiographs through texture analysis [96,97,98,99]. For example, the CAD system of the Kun Rossman Laboratory in Chicago [100] divided the lung into multiple regions of interest and analyzed the lungs’ ROI to determine whether there was any abnormalities. Then, pretrained NNs were used to classify suspicious areas to be detected. This system can help doctors improve the accuracy of interstitial lesion detection.

Plankis et al. [94] developed a flexible scheme for CAD of ILD. This approach can detect a variety of pathological features of interstitial lung tissue based on an active contour algorithm which can select the lung region. The region is then divided into 40 different regions of interest. Then, a two-dimensional Daubechies wavelet transform is performed on the ROI to calculate the texture measure. However, with the extensive application of deep learning in the detection of lung diseases, there is little literature on the detection of interstitial lung disease in the absence of a large chest X-ray dataset on ILD. Most of the literature used CT datasets to detect ILD.

Other diseases

In chest X-rays, in addition to pulmonary nodules, tuberculosis, and ILD, there are other diseases that can be detected, such as cardiomegaly, pneumonia, pulmonary edema, and emphysema. There is less literature on these diseases, and a brief discussion is given here.

Detecting cardiomegaly usually requires analyzing the heart size and calculating the cardiothoracic ratio (CTR) and developing a cardiac tumor screening system. Candemir et al. [30] used 1D-CTR, 2D-CTR, and CTAR as features, and they used SVM to classify 250 cardiomegaly images and 250 normal images, obtaining an accuracy of 76.5%. Islam et al. [101] used multiple CNNs to detect cardiomegaly. The network was accurately adjusted on 560 image samples and validated on 100 images, and they obtained a maximum accuracy of 93%, which is 17% points higher than in the literature [30].

Pneumonia and pulmonary edema can be classified by extracting texture features. Parveen et al. [102] used an FCM clustering algorithm to detect pneumonia. The results showed that the lung area of the chest was low in black or dark gray. When a patient has pneumonia, the lungs are full of water or sputum. Thus, there will be more absorbed radiation, and the lung areas will be white or light gray. This approach can help doctors detect the degree of infection easily and accurately. Kumar et al. [103] used a machine learning algorithm to perform texture analysis of chest X-rays. They used a Gabor filter and SVM to distinguish normal chest and pulmonary edema in chest radiographs, and they obtained an AUC of 0.96. Here, they did not use large datasets and validate other lung conditions. Islam et al. [101] used a CNN to detect and locate pulmonary edema, which manifested as a reticular white structure in the lung area with no anatomical changes.

Multiple disease detection

There may be one or more diseases in the chest radiographs. However, when using CAD methods to output the normal/abnormal classification of each image, i.e., when they are applied to only detect one disease, this approach cannot meet the demand. This section discusses the methods of using CAD technique to detect multiple diseases. Table 4 summarizes the conditions, methods, assessment measures, and results.

Avni et al. [104] used the “bag of visual words” to represent the image content and used a nonlinear, multiple SVM to classify the left and right pulmonary pleural effusion, cardiomegaly, and septum enlargement, and they obtained AUCs of 80%, 79.2%, and 88.2%, respectively. However, their algorithm was designed only for global representation and could identify the diseases manifested in the locally or relatively small regions.

Noor et al. [105] proposed a new texture-based statistical method for the detection of lobar pneumonia, tuberculosis, and lung cancer. Each ROI was transformed into four subsets using a two-dimensional Daubechies wavelet transform, which represented the trend, horizontal, vertical, and diagonal detail coefficients. Twelve types of texture measurements, such as the mean energy, entropy, contrast, and maximum column total energy, were calculated. The modified principal component (ModPC) method was used to generate the feature vectors for the discrimination process. For all three diseases, the texture measurement of maximum column total energy produced a 98% correct classification rate. The two diseases were then compared in pairs, and the correct classification rates for lobar, tuberculosis, and lung cancer using mean energy and maximum texture measurements were 70%, 97%, and 79%, respectively. This algorithm is different from other semi-automatic methods in that the ROI choice does not involve the usual segmentation problem, and the proposed statistical-based CAD algorithm does not rely on establishing precise boundaries and avoids the possibility of losing information from the original image. However, this method still requires further work that involves larger samples for validation studies.

Bar et al. [75] used a combination of features extracted from a CNN, which were trained on ImageNet, and a set of low-level features to detect right pleural effusion, cardiomegaly, and health versus abnormal disease; they obtained AUC values of 93%, 89%, and 79%, respectively. However, the detection was performed with features learned from non-medical datasets, and it was inaccurate.

Cicero et al. [106] used the GoogLeNet CNN to classify normal, cardiomegaly, pleural effusion, pulmonary edema, and pneumothorax on a moderate size dataset automatically. It was shown that the current CNN architecture can be trained with a medical dataset of moderate size, which solves the problem of simultaneous prediction of multiple labels for the detection and removal of common diseases in chest radiographs.

NIH [23] published a large-scale chest X-ray dataset and used a weak-supervised multi-label method to classify and locate eight diseases, which validated the usability of deep learning on this dataset. Based on this result, Yao et al. [107] used DENSENET to extract disease features on this dataset and proposed LSTM-based approach to simulate label dependency, which improved the classification performance. Rajpurkar et al. [13] proposed the ChexNet method, which used dense connections [108] and batch normalization [109] to make the optimization of such a deep network tractable. The AUC of pneumonia on the Chest-Xray14 dataset was 76.4%, reaching the human level, and greatly improved the accuracy of detection of these 14 diseases. Kumar et al. [110] used a cascade deep learning network to classify 14 diseases on this dataset, and the performance of classification of cardiomegaly improved upon previous methods. These methods of detecting multiple labels in chest radiographs all input the global image into the network. However, the lesion area can be very small compared to the global image, and using a global image for classification could result in a significant amount of noise outside the lesion area. In response to this problem, Guan et al. [111] proposed the attention guided convolutional neural network (AG-CNN), which has three branches, i.e., global branch, local branch, and fusion branch. This network combines global and local information to improve the recognition performance.

Discussion

This study discussed various CAD algorithms for detecting abnormal chest radiographs. CXR CAD algorithms have wide applications in detecting various diseases, and they are playing a vital role as a second opinion for medical experts. In addition, CAD algorithms also reduce the workload of medical experts by reviewing many CXRs quickly. In this section, we will discuss and compare the CAD algorithms in detection of chest abnormalities, and will state some problems and the future works in this field.

-

1.

From the literatures, it can be found that there are many CAD methods currently used to detect abnormalities in chest radiographs. Most of these methods belong to the field of artificial intelligence [112] and they dedicate to computer-aid detection based on the chest radiograph. However, it is proved that the deep learning methods are more accurate in classification from the comparison and laboratory experiments shown in Table 5. Furthermore, previous methods can only detect one or several diseases from the chest radiograph, while Table 6 shows that the deep learning method can read the appearance of various types of suspected diseases simultaneously and directly from the chest radiograph, which conforms to the principles of radiologists’ interpretation of the film. This should be a main direction of computer-assisted detection of chest radiographs in the future. We think that deep learning has very far-reaching development potential, while at the same time it needs further improvement for playing a greater role. At present, deep learning methods classify diseases by extracting the features from the limited datasets. However, the problems with these methods are that limited datasets have limitations, such as unbalanced sample distribution, and the generality of the networks trained with them is insufficient. In order to further improve the classification ability, the following aspects should be carried out: (1) Dataset: Based on existing datasets, a more representative dataset with a larger number of examples, preferably from different devices and different regions, is established, making the training network more versatile. (2) The network needs to be further studied and optimized in order to facing special resolution. For example, RESNET increased network depth by adding residual blocks [7]. DENSENET connected all layers (with matching feature-map sizes) directly with each other to ensure maximum information flow between layers in the network. They can farther alleviate the vanishing-gradient problem, strengthen feature propagation, encourage feature reuse, and substantially reduce the number of parameters [108]. Dual Path Network [113] combined residual channels with densely connected paths to increase training speed significantly, reduce memory footprint, and maintain higher accuracy. Similar to the literature [111], the Residual Attention Network [114] introduced an attention mechanism to extract the significant features from the images by stacking multiple attention modules. These mentioned above methods have greatly improved the classification accuracy.

Table 5 Comparison of classification methods for thoracic diseases. The classification methods, measurements, and best results in the review are shown in each column, respectively Table 6 Comparison of multiple label classification methods for thoracic diseases. The classification methods, measurements, and best results in the review are shown in each column, respectively -

2.

The big data is used in current deep learning methods to extract the features of the corresponding disease through the convolution algorithm, that is, to extract different features from shallow level to deep level through convolution operations. The training process of the neural network makes the entire network to automatically adjust the parameters of the convolution kernel, resulting in the suitable classification features being consistent with the appearance of the chest radiograph image. Although these methods have made great progress in this area, it is very time-consuming to build big data. Therefore, it should be considered whether future studies can use other domain datasets to emulate chest radiographs. Or similar to Two-Pathway Generative Adversarial Network (TP-GAN) proposed by literature [115] (combining a realistic frontal face view by simultaneously sensing the global structure and local details), it may be possible to use small datasets to generate valuable big data that can help with lung diseases detection. To avoid the need for large datasets, a method similar to Alphago Zero [116], which uses no datasets and instead relies on radiologist-interpreting-film-rules studied in the review, could be used. This should be a direction of study in the future.

-

3.

According to the first point mentioned above, CNN and other networks have reached a relatively mature stage and have higher classification accuracy than other artificial intelligence methods. However, it should be believed that in the future research, in addition to the above-mentioned improvements on deep learning network, some traditional machine learning methods still have potential for development and will continue to play a vital role in many aspects. It is valuable to study these methods for improvement of chest X-ray disease detection.

-

4.

The appearance of a disease in the chest is usually accompanied by other diseases and is related to each other, such as pulmonary tuberculosis usually accompanied by pneumonia, and there are other abnormalities that can be caused by the exacerbation of a disease. According to the multiple diseases detection section, multiple disease detection through artificial intelligence methods in the chest radiographs is clinically required, and is currently an important study direction. We can also consider the further detection of the deterioration of the disease, such as whether ordinary pneumonia can be transformed into interstitial pneumonia. The current methods do not reach this point, because the features of diseases that could further deteriorate have not been discovered yet. It could be solved by using CAD techniques in molecular biology, which plays an important role in the diagnosis and treatment of diseases.

Conclusions

Usually there are four steps in a CAD system: algorithm preprocessing, extracting ROI regions, extracting ROI features, and classifying disease according to the features. In the algorithm preprocessing and extraction of ROI, the techniques of enhancement and segmentation are very important. Usually, there are many ways to highlight lesions and suppress noise. In the segmentation, the deformable model and the deep learning method are the best, while the rule-based methods have poor performance, and they often used together with other methods to improve the segmentation performance. The techniques of bone suppression are used less frequently in the literature, but removing the rib and clavicle that block lung abnormalities can improve the system performance; In terms of feature extraction, the features extracted by traditional machine learning algorithms include geometric features, texture features, and shape features, which are usually processed to reduce the dimensionality due to feature redundancy. However, hand-crafted features could have errors that affect the classification performance and are gradually replaced by deep learning methods. In terms of classifier selection, the performance of support vector machine and random forest in traditional algorithms may be better, but with the excellent performance of deep learning in image classification, the deep learning methods have gradually become the mainstream.

References

X-ray (radiography)—chest. https://www.radiologyinfo.org/en/info.cfm?pg=chestrad. Accessed 10 June 2018.

Lodwick GS, Keats TE, Dorst JP. The coding of roentgen images for computer analysis as applied to lung cancer. Radiology. 1963;81:185–200.

Zakirov AN, Kuleev RF, Timoshenko AS, Vladimirov AV. Advanced approaches to computer-aided detection of thoracic diseases on chest X-rays. Appl Math Sci. 2015;9(88):4361–9. https://doi.org/10.12988/ams.2015.54348.

van Ginneken B, Hogeweg L, Prokop M. Computer-aided diagnosis in chest radiography: beyond nodules. Eur J Radiol. 2009;72(2):226–30.

Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Commun ACM. 2017;60(6):84–90. https://doi.org/10.1145/3065386.

Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:14091556. 2014. http://arxiv.org/abs/1409.1556v6.

He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR). Las Vegas, NV, USA: IEEE. 2016. p. 770–8. http://dx.doi.org/10.1109/CVPR.2016.90.

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, et al. Going deeper with convolutions. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR). Boston, MA, USA: IEEE. 2015. p. 1–9. http://dx.doi.org/10.1109/CVPR.2015.7298594.

Long J, Shelhamer E, Darrell T. Fully convolutional networks for semantic segmentation. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR). Boston, MA, USA: IEEE. 2015. p. 3431–40. http://dx.doi.org/10.1109/CVPR.2015.7298965.

Mostajabi M, Yadollahpour P, Shakhnarovich G. Feedforward semantic segmentation with zoom-out features. In: 2015 IEEE conference on computer vision and pattern recognition (CVPR). Boston, MA, USA: IEEE. 2015. p. 3376–85. http://dx.doi.org/10.1109/cvpr.2015.7298959.

Noh H, Hong S, Han B. Learning deconvolution network for semantic segmentation. In: IEEE I conf comp vis. Santiago, Chile: IEEE. 2015. p. 1520–8. http://dx.doi.org/10.1109/iccv.2015.178.

Chen L, Papandreou G, Kokkinos I, Murphy K, Yuille AL. Semantic image segmentation with deep convolutional nets and fully connected CRFs. IEEE Trans Pattern Anal Mach Intell. 2018;40(4):834–48. https://doi.org/10.1109/TPAMI.2017.2699184.

Rajpurkar P, Irvin J, Zhu K, Yang B, Mehta H, Duan T, et al. CheXNet: radiologist-level pneumonia detection on chest x-rays with deep learning. arXiv preprint arXiv:171105225. 2017.

Gulshan V, Peng L, Coram M, Stumpe MC, Wu D, Narayanaswamy A, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402–10.

Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–8.

Rajpurkar P, Hannun AY, Haghpanahi M, Bourn C, Ng AY. Cardiologist-level arrhythmia detection with convolutional neural networks. arXiv preprint arXiv:170701836. 2017.

Grewal M, Srivastava MM, Kumar P, Varadarajan S. RADNET: radiologist level accuracy using deep learning for HEMORRHAGE detection in CT scans. In: 2018 IEEE 15th international symposium on biomedical imaging (ISBI 2018). Washington, DC, USA: IEEE. 2018. p. 281–4. http://dx.doi.org/10.1109/ISBI.2018.8363574.

Demner-Fushman D, Kohli MD, Rosenman MB, Shooshan SE, Rodriguez L, Antani S, et al. Preparing a collection of radiology examinations for distribution and retrieval. JAMIA. 2016;23(2):304–10.

Ryoo S, Kim HJ. Activities of the Korean institute of tuberculosis. Osong Public Health Res Perspect. 2014;5(Suppl):S43–9.

Jaeger S, Candemir S, Antani S, Wang YX, Lu PX, Thoma G. Two public chest X-ray datasets for computer-aided screening of pulmonary diseases. Quant Imaging Med Surg. 2014;4(6):475–7.

Shiraishi J, Katsuragawa S, Ikezoe J, Matsumoto T, Kobayashi T, Komatsu K, et al. Development of a digital image database for chest radiographs with and without a lung nodule: receiver operating characteristic analysis of radiologists’ detection of pulmonary nodules. AJR Am J Roentgenol. 2000;174(1):71–4.

van Ginneken B, Stegmann MB, Loog M. Segmentation of anatomical structures in chest radiographs using supervised methods: a comparative study on a public database. Med Image Anal. 2006;10(1):19–40.

Wang XS, Peng YF, Lu L, Lu ZY, Bagheri M, Summers RM. ChestX-ray8: hospital-scale chest x-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR). Honolulu, HI, USA: IEEE. 2017. p. 3462–71. http://dx.doi.org/10.1109/CVPR.2017.369.

Sherrier RH, Johnson GA. Regionally adaptive histogram equalization of the chest. IEEE Trans Med Imaging. 1987;6(1):1–7.

Kwan B, Kwan HK. Improved lung nodule visualization on chest radiographs using digital filtering and contrast enhancement. World Acad Sci Technol. 2011; 110:590–3. http://waset.org/publications/6635.

Xu X, Wang Y, Yang G, Hu Y. Image enhancement method based on fractional wavelet transform. In: 2016 IEEE international conference on signal and image processing (ICSIP). Beijing, China: IEEE. 2016. p. 194–7. http://dx.doi.org/10.1109/SIPROCESS.2016.7888251.

Savitha SK, Naveen NC. Algorithm for pre-processing chest-x-ray using multi-level enhancement operation. In: 2016 international conference on wireless communications, signal processing and networking (WiSPNET). Chennai, India: IEEE. 2016. p. 2182–6.

Soleymanpour E, Pourreza HR, Ansaripour E, Yazdi MS. Fully automatic lung segmentation and rib suppression methods to improve nodule detection in chest radiographs. J Med Signals Sens. 2011;1(3):191–9.

Candemir S, Jaeger S, Palaniappan K, Musco JP, Singh RK, Xue Z, et al. Lung segmentation in chest radiographs using anatomical atlases with nonrigid registration. IEEE Trans Med Imaging. 2014;33(2):577–90.

Candemir S, Jaeger S, Lin W, Xue Z, Antani S, Thoma G. Automatic heart localization and radiographic index computation in chest x-rays. In: Medical imaging 2016: computer-aided diagnosis. Vol. 9785. San Diego: SPIE; 2016. https://doi.org/10.1117/12.2217209.

Zhanjun Y, Goshtasby A, Ackerman LV. Automatic detection of rib borders in chest radiographs. IEEE Trans Med Imaging. 1995;14(3):525–36.

Nakamori N, Doi K, Sabeti V, MacMahon H. Image feature analysis and computer-aided diagnosis in digital radiography: automated analysis of sizes of heart and lung in chest images. Med Phys. 1990;17(3):342–50.

Brown MS, Wilson LS, Doust BD, Gill RW, Sun C. Knowledge-based method for segmentation and analysis of lung boundaries in chest X-ray images. Comput Med Imaging Graph. 1998;22(6):463–77.

Cheng D, Goldberg M. An algorithm for segmenting chest radiographs. In: Proc SPIE. 1988. p. 261–8. http://dx.doi.org/10.1117/12.968961.

Armato SG 3rd, Giger ML, MacMahon H. Automated lung segmentation in digitized posteroanterior chest radiographs. Acad Radiol. 1998;5(4):245–55.

Li L, Zheng Y, Kallergi M, Clark RA. Improved method for automatic identification of lung regions on chest radiographs. Acad Radiol. 2001;8(7):629–38.

Iakovidis DK, Papamichalis G. Automatic segmentation of the lung fields in portable chest radiographs based on Bézier interpolation of salient control points. In: Proc IEEE Int Conf Img SysTech. Crete, Greece: IEEE. 2008. p. 82–7. http://dx.doi.org/10.1109/IST.2008.4659946.

Wan Ahmad WS, Zaki WM, Ahmad Fauzi MF. Lung segmentation on standard and mobile chest radiographs using oriented Gaussian derivatives filter. Biomed Eng Online. 2015;14:20.

Cootes TF, Taylor CJ, Cooper DH, Graham J. Active shape models—their training and application. Comput Vis Image Underst. 1995;61(1):38–59. https://doi.org/10.1006/cviu.1995.1004.

Cootes TF, Edwards GJ, Taylor CJ. Active appearance models. IEEE Trans Pattern Anal. 2001;23(6):681–5.

Li XC, Luo SH, Hu QM, Li JM, Wang DD, Chiong FB. Automatic lung field segmentation in x-ray radiographs using statistical shape and appearance models. J Med Imaging Health Inf. 2016;6(2):338–48. https://doi.org/10.1166/jmihi.2016.1714.

van Ginneken B, Frangi AF, Staal JJ, Romeny BMT, Viergever MA. Active shape model segmentation with optimal features. IEEE Trans Med Imaging. 2002;21(8):924–33.

Iakovidis DK, Savelonas M. Active shape model aided by selective thresholding for lung field segmentation in chest radiographs. In: 2009 9th international conference on information technology and applications in biomedicine. Larnaca, Cyprus: IEEE. 2009. p. 1–4. http://dx.doi.org/10.1109/itab.2009.5394326.

Wu G, Zhang XD, Luo S, Hu QM. Lung segmentation based on customized active shape model from digital radiography chest images. J Med Imaging Health Inf. 2015;5(2):184–91. https://doi.org/10.1166/jmihi.2015.1382.

Mcnittgray MF. Pattern classification approach to segmentation of chest radiographs. In: Medical imaging 1993; Newport Beach, CA, United States. SPIE. 1993. p. 160–70. http://dx.doi.org/10.1117/12.154500.

Vittitoe NF, Vargas-Voracek R, Floyd CE. Identification of lung regions in chest radiographs using Markov random field modeling. Med Phys. 1998;25(6):976–85.

Shi Z, Zhou P, He L, Nakamura T, Yao Q, Itoh H. Lung segmentation in chest radiographs by means of Gaussian Kernel-based FCM with spatial constraints. In: 2009 sixth international conference on fuzzy systems and knowledge discovery; Tianjin, China. IEEE. 2009. p. 428–32. http://dx.doi.org/10.1109/FSKD.2009.811.

Shelhamer E, Long J, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Trans Pattern Anal Mach Intell. 2017;39(4):640–51.

Badrinarayanan V, Handa A, Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for robust semantic pixel-wise labelling. arXiv preprint arXiv:150507293. 2015.

Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. In: Medical image computing and computer-assisted intervention: MICCAI international conference on medical image computing and computer-assisted intervention. 2015. p. 234–41.

Novikov AA, Lenis D, Major D, Hladůvka J, Wimmer M, Bühler K. Fully convolutional architectures for multi-class segmentation in chest radiographs. IEEE Trans Med Imaging. 2017. https://doi.org/10.1109/TMI.2018.2806086.

Dai W, Doyle J, Liang X, Zhang H, Dong N, Li Y, et al. SCAN: Structure Correcting Adversarial Network for organ segmentation in chest x-rays. arXiv preprint arXiv:170308770. 2017.

Suzuki K, Abe H, MacMahon H, Doi K. Image-processing technique for suppressing ribs in chest radiographs by means of massive training artificial neural network (MTANN). IEEE Trans Med Imaging. 2006;25(4):406–16.

Loog M, Ginneken BV. Bony structure suppression in chest radiographs. In: computer vision approaches to medical image analysis. Vol. 4241. Graz, Austria: Springer. 2006. p. 166–77. https://doi.org/10.1007/11889762_15.

Freedman MT, Lo SCB, Seibel JC, Bromley CM. Lung nodules: improved detection with software that suppresses the rib and clavicle on chest radiographs. Radiology. 2011;260(1):265–73.

Oda S, Awai K, Suzuki K, Yanaga Y, Funama Y, MacMahon H, et al. Performance of radiologists in detection of small pulmonary nodules on chest radiographs: effect of rib suppression with a massive-training artificial neural network. AJR Am J Roentgenol. 2009;193(5):W397–402.

Li F, Engelmann R, Pesce LL, Doi K, Metz CE, MacMahon H. Small lung cancers: improved detection by use of bone suppression imaging-comparison with dual-energy subtraction chest radiography. Radiology. 2011;261(3):937–49.

Li F, Engelmann R, Pesce L, Armato SG 3rd, Macmahon H. Improved detection of focal pneumonia by chest radiography with bone suppression imaging. Eur Radiol. 2012;22(12):2729–35.

Vock P, Szucs-Farkas Z. Dual energy subtraction: principles and clinical applications. Eur J Radiol. 2009;72(2):231–7.

Nguyen HX, Dang TT. Ribs suppression in chest x-ray images by using ICA method. In: Van Toi V, Tran PHL, editors. Ifmbe Proc; Cham: Springer. 2015. p. 194–7. https://doi.org/10.1007/978-3-319-11776-8_47.

Yang W, Chen Y, Liu Y, Zhong L, Qin G, Lu Z, et al. Cascade of multi-scale convolutional neural networks for bone suppression of chest radiographs in gradient domain. Med Image Anal. 2017;35:421–33.

Gordienko Y, Peng G, Jiang H, Wei Z, Kochura Y, Alienin O, et al. Deep learning with lung segmentation and bone shadow exclusion techniques for chest x-ray analysis of lung cancer. arXiv preprint arXiv:171207632. 2017.

Jaeger S, Karargyris A, Candemir S, Siegelman J, Folio L, Antani S, et al. Automatic screening for tuberculosis in chest radiographs: a survey. Quant Imaging Med Surg. 2013;3(2):89–99.

Stewart BW, Wild CP. World cancer report 2014. World. http://www.who.int/cancer/publications/WRC_2014/en/. Accessed 10 June 2018.

Chen S, Suzuki K, MacMahon H. Development and evaluation of a computer-aided diagnostic scheme for lung nodule detection in chest radiographs by means of two-stage nodule enhancement with support vector classification. Med Phys. 2011;38(4):1844–58.

Kobayashi T, Xu XW, MacMahon H, Metz CE, Doi K. Effect of a computer-aided diagnosis scheme on radiologists’ performance in detection of lung nodules on radiographs. Radiology. 1996;199(3):843–8.

Schilham AM, van Ginneken B, Loog M. A computer-aided diagnosis system for detection of lung nodules in chest radiographs with an evaluation on a public database. Med Image Anal. 2006;10(2):247–58.

Hassen DB, Taleb H. Automatic detection of lesions in lung regions that are segmented using spatial relations. Clin Imaging. 2013;37(3):498–503.

Al-Absi HRH, Samir BB, Sulaiman S. A computer aided diagnosis system for lung cancer based on statistical and machine learning techniques. J Comput. 2014;9(2):425–31.

Wei J, Hagihara Y, Shimizu A, Kobatake H. Optimal image feature set for detecting lung nodules on chest X-ray images. In: CARS 2002 computer assisted radiology and surgery. Berlin: Springer; 2002. p. 706–11. https://doi.org/10.1007/978-3-642-56168-9_118.

Shiraishi J, Li Q, Suzuki K, Engelmann R, Doi K. Computer-aided diagnostic scheme for the detection of lung nodules on chest radiographs: localized search method based on anatomical classification. Med Phys. 2006;33(7):2642–53.

Hardie RC, Rogers SK, Wilson T, Rogers A. Performance analysis of a new computer aided detection system for identifying lung nodules on chest radiographs. Med Image Anal. 2008;12(3):240–58.

Oğul BB, Koşucu P, Özçam A, Kanik SD. Lung nodule detection in x-ray images: a new feature set. In: European conference of the international federation for medical and biological engineering; Cham: Springer International Publishing; 2015. p. 150–5.

Pan SJ, Yang Q. A survey on transfer learning. IEEE Trans Knowl Data Eng. 2010;22(10):1345–59.

Bar Y, Diamant I, Wolf L, Greenspan H. Deep learning with non-medical training used for chest pathology identification. In: SPIE medical imaging; Orlando, Florida, United States. SPIE; 2015. p. 7. https://doi.org/10.1117/12.2083124.

Bush I. Lung nodule detection and classification 2016. http://cs231n.stanford.edu/reports/2016/pdfs/313_Report.pdf. Accessed 20 Apr 2018.

Wang CM, Elazab A, Wu JH, Hu QM. Lung nodule classification using deep feature fusion in chest radiography. Comput Med Imaging Graph. 2017;57:10–8.

WHO. Global tuberculosis report 2017. 2017. http://www.who.int/tb/publications/global_report/en/. Accessed 11 June 2018.

Roy M, Ellis S. Radiological diagnosis and follow-up of pulmonary tuberculosis. Postgrad Med J. 1021;2010(86):663–74.

Rohmah RN, Susanto A, Soesanti I. Lung tuberculosis identification based on statistical feature of thoracic X-ray. In: 2013 international conference on QiR; Yogyakarta, Indonesia. IEEE. 2013. p. 19–26. http://dx.doi.org/10.1109/QiR.2013.6632528.

Tan JH, Acharya UR, Tan C, Abraham KT, Lim CM. Computer-assisted diagnosis of tuberculosis: a first order statistical approach to chest radiograph. J Med Syst. 2012;36(5):2751–9.

Noor NM, Rijal OM, Yunus A, Mahayiddin AA, Peng GC, Abu-Bakar SAR. A statistical interpretation of the chest radiograph for the detection of pulmonary tuberculosis. In: 2010 IEEE EMBS conference on biomedical engineering and sciences (IECBES); Kuala Lumpur, Malaysia. IEEE. 2010. p. 47–51. http://dx.doi.org/10.1109/iecbes.2010.5742197.

Leibstein JM, Nel AL. Detecting tuberculosis in chest radiographs using image processing techniques. University of Johannesburg. 2006. http://www.satnac.org.za/proceedings/2011/papers/Posters/235.pdf

Maduskar P, Hogeweg L, Philipsen R, Schalekamp S, van Ginneken B. Improved texture analysis for automatic detection of tuberculosis (TB) on chest radiographs with bone suppression images. In: Medical imaging 2013: Computer-aided diagnosis. Vol. 8670. Lake Buena Vista (Orlando Area), Florida, United States: SPIE. 2013. Vol. 1. p. 148–9. http://dx.doi.org/10.1117/12.2008083.

Hogeweg L, Mol C, de Jong PA, Dawson R, Ayles H, van Ginneken B. Fusion of local and global detection systems to detect tuberculosis in chest radiographs. Med Image Comput Comput Assist Interv. 2010;13(Pt 3):650–7.

Hogeweg L, Sanchez CI, Maduskar P, Philipsen R, Story A, Dawson R, et al. Automatic detection of tuberculosis in chest radiographs using a combination of textural, focal, and shape abnormality analysis. IEEE Trans Med Imaging. 2015;34(12):2429–42.

Song YL, Yang Y. Localization algorithm and implementation for focal of pulmonary tuberculosis chest image. In: 2010 international conference on machine vision and human–machine interface. Kaifeng, China: IEEE. 2010. p. 361–4. http://dx.doi.org/10.1109/mvhi.2010.180.

Shen R, Cheng I, Basu A. A hybrid knowledge-guided detection technique for screening of infectious pulmonary tuberculosis from chest radiographs. IEEE Trans Biomed Eng. 2010;57(11):2646–56.

Xu T, Cheng I, Long R, Mandal M. Novel coarse-to-fine dual scale technique for tuberculosis cavity detection in chest radiographs. EURASIP J Image Video Process. 2013;2013(1):3.

Hwang S, Kim HE, Jeong J. A novel approach for tuberculosis screening based on deep convolutional neural networks. In: Medical imaging 2016: computer-aided diagnosis. Vol. 9785. San Diego: SPIE; 2016. https://doi.org/10.1117/12.2216198.

Lakhani P, Sundaram B. Deep learning at chest radiography: automated classification of pulmonary tuberculosis by using convolutional neural networks. Radiology. 2017;284(2):574–82.

Arzhaeva Y, Tax D, van Ginneken B. Improving computer-aided diagnosis of interstitial disease in chest radiographs by combining one-class and two-class classifiers. In: Medical imaging 2006: image processing. Vol. 6144. San Diego: SPIE; 2006. http://dx.doi.org/10.1117/12.652208.

Miller WT Jr. Radiographic evaluation of diffuse interstitial lung disease: review of a dying art. Semin Ultrasound CT MRI. 2002;23(4):324–38. https://doi.org/10.1016/S0887-2171(02)90020-X.

Plankis T, Juozapavicius A, Stasiene E, Usonis V. Computer-aided detection of interstitial lung diseases: a texture approach. Nonlin Anal Model. 2017;22(3):404–11. https://doi.org/10.15388/NA.2017.3.8.

Antoniou KM, Margaritopoulos GA, Tomassetti S, Bonella F, Costabel U, Poletti V. Interstitial lung disease. Eur Respir Rev. 2014;23(131):40–54.

Katsuragawa S, Doi K, MacMahon H. Image feature analysis and computer-aided diagnosis in digital radiography: classification of normal and abnormal lungs with interstitial disease in chest images. Med Phys. 1989;16(1):38–44.

Kido S, Ikezoe J, Naito H, Tamura S, Machi S. Fractal analysis of interstitial lung abnormalities in chest radiography. Radiographics. 1995;15(6):1457–64.

Ishida T, Katsuragawa S, Kobayashi T, MacMahon H, Doi K. Computerized analysis of interstitial disease in chest radiographs: improvement of geometric-pattern feature analysis. Med Phys. 1997;24(6):915–24.

Loog M, van Ginneken B, Nielsen M. Detection of interstitial lung disease in PA chest radiographs. In: Medical imaging 2004: physics of medical imaging. Vol. 5368. San Diego: SPIE; 2004. https://doi.org/10.1117/12.535307.

Abe H, Macmahon H, Shiraishi J, Li Q, Engelmann R, Doi K. Computer-aided diagnosis in chest radiology. Semin Ultrasound CT MRI. 2004;25(5):432–7.

Islam MT, Aowal MA, Minhaz AT, Ashraf K. Abnormality detection and localization in chest x-rays using deep convolutional neural networks. arXiv preprint arXiv:170509850. 2017.

Parveen NR, Sathik MM. Detection of pneumonia in chest X-ray images. J X-ray Sci Technol. 2011;19(4):423–8.

Kumar A, Yen-Yu W, Kai-Che L, Tsai IC, Ching-Chun H, Nguyen H. Distinguishing normal and pulmonary edema chest x-ray using Gabor filter and SVM. In: 2014 IEEE international symposium on bioelectronics and bioinformatics (IEEE ISBB 2014). Chung Li, Taiwan: IEEE. 2014. p. 1–4. http://dx.doi.org/10.1109/isbb.2014.6820918.

Avni U, Greenspan H, Konen E, Sharon M, Goldberger J. X-ray categorization and retrieval on the organ and pathology level, using patch-based visual words. IEEE Trans Med Imaging. 2011;30(3):733–46.

Noor NM, Rijal OM, Yunus A, Mahayiddin AA, Gan CP, Ong EL, et al. Texture-based statistical detection and discrimination of some respiratory diseases using chest radiograph. In: Lai KW, Hum YC, Mohamad Salim MI, Ong S-B, Utama NP, Myint YM, Mohd Noor N, Supriyanto E, editors. Advances in medical diagnostic technology. Singapore: Springer Singapore; 2014. p. 75–97. https://doi.org/10.1007/978-981-4585-72-9_4.

Cicero M, Bilbily A, Colak E, Dowdell T, Gray B, Perampaladas K, et al. Training and validating a deep convolutional neural network for computer-aided detection and classification of abnormalities on frontal chest radiographs. Invest Radiol. 2017;52(5):281–7.

Yao L, Poblenz E, Dagunts D, Covington B, Bernard D, Lyman K. Learning to diagnose from scratch by exploiting dependencies among labels. arXiv preprint arXiv:171010501. 2017.

Huang G, Liu Z, Maaten Lvd, Weinberger KQ. Densely Connected Convolutional Networks. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR). Honolulu: IEEE; 2017. p. 4700–8. http://dx.doi.org/10.1109/cvpr.2017.243.

Ioffe S, Szegedy C. Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning. 2015. p. 448–56.

Kumar P, Grewal M, Srivastava MM. Boosted cascaded convnets for multilabel classification of thoracic diseases in chest radiographs. arXiv preprint arXiv:171108760. 2017.

Guan Q, Huang Y, Zhong Z, Zheng Z, Zheng L, Yang Y. Diagnose like a radiologist: attention guided convolutional neural network for thorax disease classification. arXiv preprint arXiv:180109927. 2018.

What’s the difference between artificial intelligence, machine learning, and deep learning? https://blogs.nvidia.com/blog/2016/07/29/whats-difference-artificial-intelligence-machine-learning-deep-learning-ai/. Accessed 11 June 2018.

Chen Y, Li J, Xiao H, Jin X, Yan S, Feng J. Dual path networks. In: Neural information processing systems (NIPS). 2017.

Wang F, Jiang M, Qian C, Yang S, Li C, Zhang H, et al. Residual attention network for image classification. In: 2017 IEEE conference on computer vision and pattern recognition (CVPR). Honolulu, HI, USA: IEEE. 2017. p. 6450–8.

Huang R, Zhang S, Li T, He R. Beyond Face Rotation: Global and Local Perception GAN for Photorealistic and Identity Preserving Frontal View Synthesis. In: 2017 IEEE international conference on computer vision (ICCV). Venice, Italy; 2018. p. 2458–67. https://doi.ieeecomputersociety.org/10.1109/iccv.2017.267.

Silver D, Schrittwieser J, Simonyan K, Antonoglou I, Huang A, Guez A, et al. Mastering the game of Go without human knowledge. Nature. 2017;550(7676):354–9.

Authors’ contributions

CQ conceived and wrote the manuscript. DY read and check the manuscript strictly, YS thoroughly revised the manuscript, and ZJ critically revised the manuscript for intellectual contents. All authors read and approved the final manuscript.

Acknowledgements

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Availability of data and materials

Not applicable.

Consent for publication

Not applicable.

Ethics approval and consent to participate

Not applicable.

Funding

This research was supported by grants from National Natural Science Foundation of China, namely, Grants 60972102, 81471758, and 81271670. This research was also supported by grants from The National Key Research and Development Program of China (2016YFC0106102 and 2017YFC0110700).

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Author information

Authors and Affiliations

Corresponding authors

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Qin, C., Yao, D., Shi, Y. et al. Computer-aided detection in chest radiography based on artificial intelligence: a survey. BioMed Eng OnLine 17, 113 (2018). https://doi.org/10.1186/s12938-018-0544-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12938-018-0544-y