Abstract

Background

The CONSORT Statement is an evidence-informed guideline for reporting randomised controlled trials. A number of extensions have been developed that specify additional information to report for more complex trials. The aim of this study was to evaluate the impact of using a simple web-based tool (WebCONSORT, which incorporates a number of different CONSORT extensions) on the completeness of reporting of randomised trials published in biomedical publications.

Methods

We conducted a parallel group randomised trial. Journals which endorsed the CONSORT Statement (i.e. referred to it in the Instruction to Authors) but do not actively implement it (i.e. require authors to submit a completed CONSORT checklist) were invited to participate. Authors of randomised trials were requested by the editor to use the web-based tool at the manuscript revision stage. Authors registering to use the tool were randomised (centralised computer generated) to WebCONSORT or control. In the WebCONSORT group, they had access to a tool allowing them to combine the different CONSORT extensions relevant to their trial and generate a customised checklist and flow diagram that they must submit to the editor. In the control group, authors had only access to a CONSORT flow diagram generator. Authors, journal editors, and outcome assessors were blinded to the allocation. The primary outcome was the proportion of CONSORT items (main and extensions) reported in each article post revision.

Results

A total of 46 journals actively recruited authors into the trial (25 March 2013 to 22 September 2015); 324 author manuscripts were randomised (WebCONSORT n = 166; control n = 158), of which 197 were reports of randomised trials (n = 94; n = 103). Over a third (39%; n = 127) of registered manuscripts were excluded from the analysis, mainly because the reported study was not a randomised trial. Of those included in the analysis, the most common CONSORT extensions selected were non-pharmacologic (n = 43; n = 50), pragmatic (n = 20; n = 16) and cluster (n = 10; n = 9). In a quarter of manuscripts, authors either wrongly selected an extension or failed to select the right extension when registering their manuscript on the WebCONSORT study site. Overall, there was no important difference in the overall mean score between WebCONSORT (mean score 0.51) and control (0.47) in the proportion of CONSORT and CONSORT extension items reported pertaining to a given study (mean difference, 0.04; 95% CI −0.02 to 0.10).

Conclusions

This study failed to show a beneficial effect of a customised web-based CONSORT checklist to help authors prepare more complete trial reports. However, the exclusion of a large number of inappropriately registered manuscripts meant we had less precision than anticipated to detect a difference. Better education is needed, earlier in the publication process, for both authors and journal editorial staff on when and how to implement CONSORT and, in particular, CONSORT-related extensions.

Trial registration

ClinicalTrials.gov: NCT01891448 [registered 24 May 2013].

Similar content being viewed by others

Background

Published articles reporting on the methodology and results of clinical trials are most often, for all readers, the only way to know how a study was conducted and what the results were. These articles must present accurate, unbiased, and transparent information concerning the methodology and conduct of the trial for the reader to assess the validity, generalizability, and applicability of the trial results.

Many studies have evaluated the quality of reporting in randomised trials in almost every clinical specialty and subspecialty [1–8]. In nearly every study, the results indicate that many crucial methodological elements are not reported in published reports of randomised trials. For example, in a sample of 616 randomised trials indexed in PubMed in December 2006, the primary endpoint was not defined in 47% of trials, the method used to generate the sequence of randomisation was not reported in 66%, the method used to conceal allocation was not reported in 75%, and the sample size calculation was not reported in 56% [8]. When the tested interventions or studied populations are insufficiently described, reproducing these interventions is impossible [9], as is assessing the population to which the results may apply. Lack of transparency is a major limiting factor for clinicians wanting to translate best evidence into best practice. It is also a major problem for scientists who perform systematic reviews and meta-analyses as some published trials may have to be excluded because of missing information [10, 11].

Lack of transparency [12–14] is mainly the responsibility of the authors of articles, but peer reviewers and journal editors should ensure that the results are based on an appropriate methodology. The CONSORT (CONsolidated Standards of Reporting Trials) Statement, published in 1996 and updated in 2001 and 2010 [15, 16], was designed to improve the transparency and quality of the reporting in clinical trials. It comprises a checklist of 25 items and a flow chart that allows visualisation of the flow of patients through the study, from recruitment to the analysis of the results. This recommendation, endorsed by a considerable number of medical journals, informs not only authors, but also reviewers and editors, about which information should be included in articles to facilitate critical judgment and interpretation of results. A recent systematic review showed that endorsement of the CONSORT Statement by a journal was associated with an improvement in the quality of reporting of randomised trials [17].

Although the CONSORT Statement applies to all randomised trials, it is primarily appropriate for superiority trials with two parallel treatment arms and individual randomisation. Several extensions of the CONSORT Statement have been developed to specify the additional information needed in reports of trials with different designs (e.g. non-inferiority [18], cluster randomised [19], and pragmatic trials [20]) or for specific interventions (e.g. non-pharmacological treatments [21], acupuncture, [22] and herbal therapy [23]). Each of these extensions includes a list of items modified from the original CONSORT Statement or new items that need to be addressed when reporting these trials. However, proliferation of these extensions makes their application difficult for a specific trial as it involves combining items from the main CONSORT checklist with those from one or more extensions. This could be cumbersome and difficult to apply in practice and so CONSORT may have limited impact on the reporting quality of these trials.

The objective of this study was to evaluate the impact of a simple web-based tool (called WebCONSORT, which incorporates the main CONSORT checklist and different CONSORT extensions) on the completeness of reporting of randomised trials published in biomedical journals. WebCONSORT allows authors to obtain a customised CONSORT checklist and flow diagram specific to their trial design and type of intervention. Our hypothesis was that this tool would allow optimal use of the CONSORT Statement and its extensions, thus leading to an improvement in the transparency of articles related to randomised trials.

Methods

Design

We conducted a multi-journal, two-arm parallel group, randomised trial to assess the impact of the WebCONSORT tool compared to a control intervention on the completeness of reporting of randomised trials submitted to biomedical journals. The study obtained ethics approval from the University of Oxford Central Research Ethics Committee, Oxford, UK (MSD-IDREC-C1-2012-89) and is registered on ClinicalTrials.gov (NCT01891448).

Journal participants

To be eligible for inclusion, journals were required to (1) endorse the CONSORT Statement (assessed via journal Instruction to Authors and as listed on the CONSORT website: www.consort-statement.org); (2) not actively implement the CONSORT Statement (defined as requiring authors to submit a completed CONSORT checklist alongside their manuscript at the time of article submission); and (3) publish reports of randomised trials (criteria assessed February 2013). All journals that met the above inclusion criteria were sent an email (February 2013) from the WebCONSORT study scientific committee inviting them to participate in the study. The description of requirements for participation were included in the email and study information sheet (Appendix 1) and editors were asked to verify that they complied with these criteria and that, while they endorsed the CONSORT Statement, they do not actively implement it.

If a journal agreed to participate, and confirmed they met the eligibility criteria, then the journal editor was asked (Appendix 2) to include an electronic web address to the WebCONSORT study website in their request for revision letter to authors for any manuscript identified by the journal as reporting the results of a randomised trial. We did this by asking the journal to include this standard sentence in their revision letter to authors:

“As part of the process of revising your manuscript we would like you to use the WebCONSORT tool which is designed to help you improve the reporting of your randomised trial. You can access the tool by clicking on the following link: [link to WebCONSORT study site]. Please be aware that by submitting your manuscript to our journal it may be part of a research study, any participation will not impact on any future acceptance or rejection of your manuscript”.

Participating journals were also informed that we would require access to the revised manuscript to assess reporting quality irrespective of whether it was published or not.

Random assignment

Authors registering on the WebCONSORT study website were asked to provide some basic information about their randomised trial. This included the name of the journal where the manuscript was submitted, the manuscript number and title, name of submitting author, trial design (e.g. parallel, cluster, non-inferiority, pragmatic), type of intervention (e.g. non-pharmacologic, herbal, acupuncture), and number of study groups (arms). Registered manuscripts were then randomised into two groups (i.e. WebCONSORT tool or control). The sequence of randomisation was computer generated and stratified by whether or not a CONSORT extension was relevant. The assignment was centralised using a web-based system. Authors and journal editors were blinded to allocation of the intervention.

Interventions

Construction of the WebCONSORT tool

To construct the WebCONSORT tool (Fig. 1) we first combined the different CONSORT extensions to allow grouping of items of similar nature and adaptation of some items to the 2010 version of the CONSORT Statement. Secondly, we designed and built a computerised tool to allow authors to produce a list of items that must be included in the report of their results and a flowchart specific to their trial. The tool combines the main CONSORT checklist and extension checklists for different trial designs (e.g. non-inferiority [18], cluster randomised [19], and pragmatic trials [20]) and for specific types of interventions (e.g. non-pharmacological treatments [21], acupuncture [22], and herbal therapy [23]). The checklist extensions for Abstracts [24] and Harms [25] were not included because they are applicable to all trials. The tool automatically generated a unique list of items customised to a specific trial combining the list of items from the main CONSORT and the items from all relevant extensions (e.g. for a pragmatic trial evaluating a non-pharmacological treatment with cluster randomisation, the main CONSORT checklist was combined with three extensions: pragmatic trial, cluster trial, and non-pharmacological extensions). This list was generated based on the description of the trial made by the author (i.e. type of design and interventions).

A website (Appendix 3: Figure 6) was created where authors were able to log on and register. Using a drop-down menu, they could select their precise type of trial, taking into account the methodological characteristics. Authors were unaware that they were randomised by the software to the WebCONSORT or control intervention.

Experimental intervention

Authors randomised to the WebCONSORT arm were directed to a list of CONSORT items specific to their trial which they could print out. They could also obtain an automatic flowchart adapted to the design of their trial. Authors were told that the items generated by the WebCONSORT tool should be reported in the revised manuscript and that the completed checklist and flow diagram should be submitted to the editor. The content of the WebCONSORT tool was validated by members of the study team; this was done by performing a number of “dummy” randomisations to ensure the correctly formatted customised checklist was generated based on different numbers and types of CONSORT extensions being selected. The WebCONSORT tool website was also tested by the scientific committee of the study and by external experts with experience in designing and conducting clinical trials to ensure the website was clear and well understood.

Control intervention

Authors randomised to the control group were directed to a dummy version of the WebCONSORT tool website which included the customised flow diagram generator part of the tool but not the main checklist generator or elements relating to CONSORT extensions.

Outcomes

Our primary outcome was the proportion of the most important and poorly reported CONSORT Statement checklist items (main CONSORT and extensions), pertaining to a given study, reported in the revised manuscript. For the main CONSORT Statement, a group of experts, from within the CONSORT Group, identified the 10 most important and poorly reported CONSORT checklist items to be assessed for each manuscript, based on their expert opinion and supported by empirical evidence where this was available. In addition, the lead authors of each extension were asked to define the five most important and poorly reported modified items specific to their extension (Appendix 4: Table 3). As the number of items differed across trials because the number of relevant extensions varied, we calculated the percentage of possible items that were reported for each article.

The secondary outcomes were the mean proportion of adequately reported items from the main CONSORT Statement (based on the 10 items for the primary outcome above), and the mean proportion of adequately reported items for each of the relevant CONSORT extensions (based on the five items for the primary outcome above). We also collected data on the rejection rate of studies. We had planned to assess the compliance rate of authors submitting a CONSORT checklist to the journal and to obtain feedback from authors and journal editors on the review process; however, these proved difficult to implement in practice and hence were not assessed.

The evaluation of revised manuscripts was conducted by a team of 10 reviewers (based at the Centre for Statistics in Medicine, University of Oxford), with statistical expertise in the design and reporting of clinical trials, working in pairs who were blinded to the nature of the study and allocation of the interventions. Each pair independently extracted data from the manuscripts; any differences between reviewers were resolved by discussion, with the involvement of an arbitrator if necessary. To ensure consistency between reviewers, we first piloted the data extraction form. We discussed any disparities in the interpretation and modified the data extraction form accordingly.

Sample size

The expected average proportion of adequately reported items in the control arm was 0.60, and our hypothesis was that the proportion of adequately reported items would increase by 25% relatively (15% in absolute value), thus attaining 0.75 in the experimental arm. Assuming a common standard deviation of 0.40, 151 articles per arm were required to demonstrate a significant difference with a power of 90% (two-sided type 1 error is set at 5%), for a total of 302 articles. This sample size calculation was based on the assumption that the mean absolute difference is similar in each stratum (whether or not a CONSORT extension is relevant). We also hypothesized that clustering by journal would have a limited impact because we anticipated the number of journals would be high. Consequently, we did not take into account the clustering by journal in the sample size calculation. We did not anticipate that journals would enroll manuscripts that were not in fact reports of randomised trials.

Statistical analysis

The main population for analysis were all manuscripts resubmitted to journals after the intervention occurred, which was during the revision process of the manuscript. Statistical analysis was undertaken using STATA IC (version 13). All outcomes were quantitative and described using proportions, mean, standard deviation, and minimum and maximum values. Quantitative variables with asymmetric distributions were presented as medians and interquartile ranges. For the primary and secondary outcomes, we estimated the difference between means in the two groups with 95% confidence intervals. The analysis was also stratified according to those articles which required the inclusion of one or more CONSORT extensions and those which did not. Due to the much larger than anticipated incorrectly specified extensions, we also performed a post-hoc sensitivity analysis for both primary and secondary outcomes to exclude an extension from the analysis of a manuscript if it was wrongly selected by the authors.

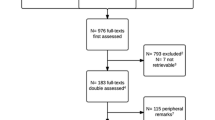

Results

Between 25 March 2013 and 22 September 2015, 357 manuscripts were registered on the WebCONSORT study site from 46 general medical and specialty journals with an impact factor ranging from 11.34 to 0.65 as of 2014 (Appendix 5: Table 4). Two journals (n = 33 manuscripts) subsequently withdrew and were therefore excluded as we were not able to obtain the revised manuscripts. Of the remaining 324 registered manuscripts, 166 were randomised to the WebCONSORT tool and 158 to the control intervention; of these, 197 were reports of randomised trials (and we were able to obtained the revised manuscript) and were included in the analysis (WebCONSORT n = 94; Control n = 103). Over a third (39%; n = 127) of registered manuscripts were excluded from the analysis. Reasons for exclusion were similar across study arms, the most common reason being that the study was not in fact a report of a randomised trial (Fig. 2). The percentage of eligible manuscripts varied considerably across journals (median 73%; IQR 27% to 100%).

Characteristics of manuscripts of randomised trials

Of those included in the analysis (n = 197), the most common CONSORT extensions selected were non-pharmacologic (WebCONSORT n = 43; control n = 50), pragmatic (n = 20; n = 16), cluster (n = 10; n = 9), and then non-inferiority (n = 9; n = 8), herbal (n = 2; n = 13), and acupuncture (n = 2; n = 0). Over two-thirds (64%; 72%) of manuscripts were registered as requiring one or more CONSORT extension. However, for almost a quarter (23%; 21%) of the manuscripts authors either wrongly selected an extension or failed to select the right extension when registering their manuscript on the WebCONSORT study site (Table 1).

Most of the 197 trials were two-arm (WebCONSORT 86%; control 82.5%), about half were multicentre (45%; 46.5%), half non-industry funded (50%; 53%), and the median sample size was 98 (IQR, 51 to 180). Around one-third of interventions were drugs (42.5%; 32%), a third were rehabilitation, psychological or educational interventions (30%; 36%), and just under a quarter were surgical or device interventions (23%; 19%). A CONSORT flow diagram was included in 85% and 86% of WebCONSORT and control manuscripts, respectively. Most manuscripts were subsequently published (81%; 84%) in the journal requesting the revision (Table 2).

Impact of the WebCONSORT tool on reporting of the revised manuscript

There was no important difference in the overall mean score (primary outcome) between the WebCONSORT (mean score 0.51; SD 0.2) and control (mean score 0.47; SD 0.2) interventions in the proportion of CONSORT and CONSORT extension items reported pertaining to a given study (mean difference (MD) 0.04; 95% CI −0.02 to 0.10) (Fig. 3). There was no difference between groups when the analysis was stratified according to those articles which were registered as requiring the inclusion of one or more CONSORT extensions (MD 0.03; 95% CI −0.03 to 0.09) and those which did not (MD 0.03; 95% CI −0.07 to 0.13) (Fig. 4), excluding manuscripts for which an extension wrongly selected by the author had little impact on the results (MD 0.05; 95% CI −0.01 to 0.11) (Fig. 5). For the secondary outcomes, there was again minimal difference between groups in the mean proportion of adequately reported CONSORT items (based on the 10 items for the primary outcome) (MD 0.03; 95% CI −0.03 to 0.09) or individual CONSORT extension items (based on the five items for the primary outcome) when analysed separately (Fig. 3). The percentage of adequately reported individual CONSORT and CONSORT extension items (i.e. cluster, non-inferiority, pragmatic, non-pharmacologic, acupuncture, herbal) are given in Appendix 4: Table 3.

Discussion

Principal findings and implications

Our study is the first to evaluate the impact of a simple web-based intervention (WebCONSORT) that incorporates the original CONSORT checklist and different CONSORT extensions, on the completeness of reporting of randomised trials published in biomedical journals. Over 40 journals took part in this study, all of which endorsed the CONSORT Statement in their journal ‘Instruction to Authors’. Our study found no overall difference between WebCONSORT and the control intervention in the completeness of reporting of revised manuscripts. This finding suggests that creating a customised CONSORT checklist specific to an individual trial, for use at the revision stage of manuscript submission, may not optimise the use of CONSORT and its extensions.

There are several potential reasons why we did not see an effect of the WebCONSORT intervention. Firstly, all journals included in our sample already endorsed CONSORT, thus authors may have felt they complied with CONSORT guidelines as part of their original submission; although the low level of reporting seen in our study suggest this may not be the case. Secondly, it is possible that the combined customised WebCONSORT checklist had too many items and was overwhelming for authors to comply with. There were also no instructions on how to implement each item in the checklist, suggesting that the checklist alone may not provide sufficient information for most authors. It might be more effective to focus on a core set of CONSORT items with more detail about how to implement each item. Thirdly, it is possible that implementation of a WebCONSORT tool to improve reporting at the revision stage of a manuscript once submitted to a journal may be too late. We may need to intervene earlier in the publication process and provide more explicit succinct guidance along with examples of adequate reporting, tailored to the checklist and context of the trial. COBWEB (Consort-based WEB tool) is an online writing aid tool for authors to use when writing up the results of a randomised trial. The tool consists of a series of text boxes that address CONSORT items, and upon completion the tool provides a formatted Word document. A randomised trial evaluating the impact of COBWEB found that the writing aid tool improved the completeness of manuscripts reporting the results of randomised trials and therefore may be more effective than the creation of a customised checklist [26]; the effectiveness of the writing tool now needs to be tested in a real world setting [27].

The process of conducting our study produced some other interesting findings with important implications. More than one third (39%) of registered manuscripts were excluded from the analysis as they were not reports of randomised trials. This was despite clear instructions provided to journal editorial staff, and included in the revision letter to authors, that only manuscripts reporting the results of randomised trials were eligible for inclusion. Clearly, the journal editorial staff at some journals were unable to correctly identify a randomised trial based on what was reported in the submitted manuscript. Another important finding is that in a quarter (23%) of manuscripts authors either selected an inappropriate CONSORT extension or failed to select the right extension applicable to their trial when registering their manuscript on the WebCONSORT study site. A tool to help authors and journal editors correctly identify the most appropriate checklist to use when reporting the results of a study is currently being piloted by the EQUATOR Network (www.equator-network.org) and may offer a potential solution.

Comparison with other studies

To our knowledge, our study is the largest randomised trial of its kind, conducted across multiple journals, evaluating the impact of an intervention to improve reporting of published research. Other than the COBWEB study mentioned above [26], very few randomised trials have been conducted evaluating interventions to improve the quality of reporting. One randomised trial evaluated the use of the CONSORT checklist as part of the peer review process and found this could improve the quality of submitted manuscripts [28]; however, this study was only conducted at a single journal. Previous studies have tended to explore the impact of the publication of the CONSORT guidelines and CONSORT extensions by studying reporting before and after journal endorsement of CONSORT [17], over time (using a time series analysis) [8, 29] or by monitoring journal endorsement of CONSORT in their ‘Instruction to Authors’ [30].

Limitations

Our study has several limitations. Firstly, we had to exclude a number of inappropriately registered studies from the analysis and, as such, we had less precision than anticipated to detect a difference between the WebCONSORT intervention and control. Secondly, we do not have information on the number of manuscripts, at each journal, which were eligible for inclusion in the study but where the author chose not to register their manuscript on the WebCONSORT study website (and therefore be randomised). Finally, we do not understand the reasons why authors who registered their manuscript on the WebCONSORT study website and were randomised to the WebCONSORT intervention arm did not then address the recommended checklist items pertaining to their study in their revised manuscript. Future qualitative research to understand the potential barriers and facilitators to better implementation of reporting guidelines would be beneficial.

Conclusion

Twenty years since its first publication, poor adherence to CONSORT recommendations remains common in published reports of randomised trials. Our randomised trial failed to show a beneficial effect of a customised web-based CONSORT checklist to help authors prepare more complete trial reports. However, it is important to note that the study had less precision than we anticipated to detect a difference due to the exclusion of a large number of inappropriately registered manuscripts. These findings have a number of important implications for future implementation of CONSORT and reporting guidelines more generally. There is a clear need for better education much earlier in the publication process for authors and journal editorial staff on when and how to implement CONSORT and, in particular, CONSORT-related extensions. It may be more effective to focus on a core set of CONSORT items with more detailed information on how to implement each item within the context of a specific trial.

References

Agha R, Cooper D, Muir G. The reporting quality of randomised controlled trials in surgery: a systematic review. Int J Surg. 2007;5(6):413–22.

Chan AW, Altman DG. Epidemiology and reporting of randomised trials published in PubMed journals. Lancet. 2005;365(9465):1159–62.

Gibson CA, Kirk EP, LeCheminant JD, Bailey Jr BW, Huang G, Donnelly JE. Reporting quality of randomized trials in the diet and exercise literature for weight loss. BMC Med Res Methodol. 2005;5:9.

Jacquier I, Boutron I, Moher D, Roy C, Ravaud P. The reporting of randomized clinical trials using a surgical intervention is in need of immediate improvement: a systematic review. Ann Surg. 2006;244(5):677–83.

Kober T, Trelle S, Engert A. Reporting of randomized controlled trials in Hodgkin lymphoma in biomedical journals. J Natl Cancer Inst. 2006;98(9):620–5.

Mills EJ, Wu P, Gagnier J, Devereaux PJ. The quality of randomized trial reporting in leading medical journals since the revised CONSORT statement. Contemp Clin Trials. 2005;26(4):480–7.

Moberg-Mogren E, Nelson DL. Evaluating the quality of reporting occupational therapy randomized controlled trials by expanding the CONSORT criteria. Am J Occup Ther. 2006;60(2):226–35.

Hopewell S, Dutton S, Yu LM, Chan AW, Altman DG. The quality of reports of randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed. BMJ. 2010;340:c723.

Glasziou P, Meats E, Heneghan C, Shepperd S. What is missing from descriptions of treatment in trials and reviews? BMJ. 2008;336(7659):1472–4.

Chan AW, Hrobjartsson A, Haahr MT, Gotzsche PC, Altman DG. Empirical evidence for selective reporting of outcomes in randomized trials: comparison of protocols to published articles. JAMA. 2004;291(20):2457–65.

Chan AW, Krleza-Jeric K, Schmid I, Altman DG. Outcome reporting bias in randomized trials funded by the Canadian Institutes of Health Research. CMAJ. 2004;171(7):735–40.

Moher D, Pham B, Jones A, Cook DJ, Jadad AR, Moher M, et al. Does quality of reports of randomised trials affect estimates of intervention efficacy reported in meta-analyses? Lancet. 1998;352(9128):609–13.

Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias. Dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273(5):408–12.

Wood L, Egger M, Gluud LL, Schulz KF, Juni P, Altman DG, et al. Empirical evidence of bias in treatment effect estimates in controlled trials with different interventions and outcomes: meta-epidemiological study. BMJ. 2008;336(7644):601–5.

Moher D, Hopewell S, Schulz KF, Montori V, Gotzsche PC, Devereaux PJ, et al. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c869.

Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. BMJ. 2010;340:c332.

Turner L, Shamseer L, Altman DG, Schulz KF, Moher D. Does use of the CONSORT Statement impact the completeness of reporting of randomised controlled trials published in medical journals? A Cochrane review. Syst Rev. 2012;1:60.

Piaggio G, Elbourne DR, Pocock SJ, Evans SJ, Altman DG. Reporting of noninferiority and equivalence randomized trials: extension of the CONSORT 2010 statement. JAMA. 2012;308(24):2594–604.

Campbell MK, Piaggio G, Elbourne DR, Altman DG. Consort 2010 statement: extension to cluster randomised trials. BMJ. 2012;345, e5661.

Zwarenstein M, Treweek S, Gagnier JJ, Altman DG, Tunis S, Haynes B, et al. Improving the reporting of pragmatic trials: an extension of the CONSORT statement. BMJ. 2008;337:a2390.

Boutron I, Moher D, Altman DG, Schulz KF, Ravaud P. Extending the CONSORT statement to randomized trials of nonpharmacologic treatment: explanation and elaboration. Ann Intern Med. 2008;148(4):295–309.

MacPherson H, Altman DG, Hammerschlag R, Youping L, Taixiang W, White A, et al. Revised STandards for Reporting Interventions in Clinical Trials of Acupuncture (STRICTA): extending the CONSORT statement. PLoS Med. 2010;7(6), e1000261.

Gagnier JJ, Boon H, Rochon P, Moher D, Barnes J, Bombardier C. Reporting randomized, controlled trials of herbal interventions: an elaborated CONSORT statement. Ann Intern Med. 2006;144(5):364–7.

Hopewell S, Clarke M, Moher D, Wager E, Middleton P, Altman DG, et al. CONSORT for reporting randomized controlled trials in journal and conference abstracts: explanation and elaboration. PLoS Med. 2008;5(1), e20.

Ioannidis JP, Evans SJ, Gotzsche PC, O’Neill RT, Altman DG, Schulz K, et al. Better reporting of harms in randomized trials: an extension of the CONSORT statement. Ann Intern Med. 2004;141(10):781–8.

Barnes C, Boutron I, Giraudeau B, Porcher R, Altman DG, Ravaud P. Impact of an online writing aid tool for writing a randomized trial report: the COBWEB (Consort-based WEB tool) randomized controlled trial. BMC Med. 2015;13:221.

Marusic A. A tool to make reporting checklists work. BMC Med. 2015;13:243.

Cobo E, Cortes J, Ribera JM, Cardellach F, Selva-O’Callaghan A, Kostov B, et al. Effect of using reporting guidelines during peer review on quality of final manuscripts submitted to a biomedical journal: masked randomised trial. BMJ. 2011;343:d6783.

Hopewell S, Ravaud P, Baron G, Boutron I. Effect of editors’ implementation of CONSORT guidelines on the reporting of abstracts in high impact medical journals: interrupted time series analysis. BMJ. 2012;344:e4178.

Shamseer L, Hopewell S, Altman DG, Moher D, Schulz KF. Update on the endorsement of CONSORT by high impact factor journals: a survey of journal “Instructions to Authors” in 2014. Trials. 2016;17:301.

Acknowledgements

We are very grateful to the following journals and their authors for participating in the WebCONSORT study: American Journal of Kidney Diseases; Annals of Surgery; Arquivos Brasileiros; BMC Anesthesiology; BMC Cancer; BMC Endocrine Disorders; BMC Family Practice; BMC Gastroenterology; BMC Health Services Research; BMC Infectious Diseases; BMC Medicine; BMC Nursing; BMC Oral Health; BMC Public Health; BMC Surgery; British Journal of Geriatrics; British Journal of Obstetrics and Gynaecology; British Journal of Surgery; Canadian Medical Association Journal; Child and Adolescent Psychiatry and Mental Health; Chinese Medicine; Conflict and Health; Critical Care; Indian Journal of Dermatology; International Journal of Nursing Studies; International Journal of Paediatric Dentistry; Journal of Advanced Nursing; Journal of Cardiothoracic Surgery; Journal of Genetic Counseling; Journal of Gynecologic Oncology; Journal of Hand Surgery; Journal of Hepatology; Journal of the American Podiatric Medical Association; NIHR HTA monograph; Neurourology and Urodynamics; Nordic Journal of Music Therapy; Orphanet Journal of Rare Diseases; Pediatric Pulmonology; Peritoneal Dialysis International; Physiotherapy; Public Health Nutrition; and Thrombosis and Haemostasis.

Funding

This study received funding from the French Ministry for Health. The funder had no role in the design, conduct, analysis or reporting of this study.

Authors’ contributions

SH, IB, DA, PR, GB, DM, DS, and VM were involved in the design, implementation, and analysis of the study, and in writing the final manuscript. JC, SG, OO, PD, CR, EF, LC, VC, and IR were involved in the implementation of the study and in commenting on drafts of the final manuscript. SH is responsible for the overall content as guarantor. All authors read and approved the final manuscript.

Competing interests

All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declare no support from any organisation for the submitted work, no financial relationships with any organisations that might have an interest in the submitted work in the previous three years, and no other relationships or activities that could appear to have influenced the submitted work.

Ethics approval

The study obtained ethics approval from the University of Oxford Central Research Ethics Committee, Oxford, UK (MSD-IDREC-C1-2012-89).

Transparency

The lead author (the manuscript’s guarantor) affirms that the manuscript is an honest, accurate, and transparent account of the study being reported, that no important aspects of the study have been omitted, and that any discrepancies from the study as planned (and, if relevant, registered) have been explained.

Data sharing

Anonymous data are available on request.

Author information

Authors and Affiliations

Corresponding author

Appendix 1

WebCONSORT invitation letter to participating journals

Dear Editor in Chief,

We would like to invite your journal to participate in an exciting new study which aims to help improve the reporting of randomised trials in medical journal articles. We all know that the reporting of clinical trials is not always optimal, despite the impact of reporting guidelines such as the CONSORT Statement, and we recognise that it can be a difficult task for editors to try to improve it.

Our study aims to evaluate whether using a simple web-based tool (WebCONSORT) improves the reporting of randomised trials. The tool allows authors of manuscripts to obtain a customised CONSORT checklist and flow diagram specific to their trial design (e.g. non-inferiority trial, pragmatic trial, cluster trial) and/or type of intervention (pharmacological or non-pharmacological).

We are seeking journals willing to collaborate in this project. The study has been designed so that it will require only a minimal amount of work on your part and yet provide a real opportunity to actively contribute to improving the future reporting of randomised trials, ultimately having a direct impact on patients and patient care. We plan to make the WebCONSORT tool freely available on completion of the study and will of course acknowledge all participating journals in future publications.

We have attached a short summary providing you with more details about the study. If you are interested in participating or have any questions please register your interest by responding to this email. We look forward to hearing from you.

With best wishes

Prof Philippe Ravaud and Dr Sally Hopewell

(Paris Descartes University, France and University of Oxford, UK)

On behalf of the WebCONSORT Steering Committee: Prof Doug Altman (University of Oxford, UK), Dr Ginny Barbour (PLoS Medicine), Prof Isabelle Boutron (Paris Descartes University, France), Dr Agnes Deschartes (Paris Descartes University, France), Dr David Moher (University of Ottawa, Canada), Prof Victor Montori (Mayo Clinc, USA), Dr David Schriger (Annals of Emergency Medicine, USA).

This study is supported by the CONSORT Group and the EQUATOR Network and has been approved by the University of Oxford Central Research Ethics Committee MSD-IDREC-C1-2012-89.

Appendix 2

WebCONSORT confirmation letter to participating journals

Dear [insert editor name]

Thank you very much for agreeing to participate in this exciting new study which aims to help improve the reporting of randomised trials in medical journal articles through the use of a simple web-based tool.

To participate in this study we need you include a link to the WebCONSORT study website in your revision letter to authors and you should also notify authors that their manuscript might be part of a research study. The easiest way to do this is to include a standard sentence in your revision letter to authors, for example:

“As part of the process of revising your manuscript we would like to use the WebCONSORT tool which is designed to help you improve the reporting of your randomised trial. You can access the tool by clicking on the following link: [link to WebCONSORT study site].”

“Please be aware that by submitting your manuscript to our journal it may be part of research study, any participation will not impact on any future acceptance or rejection of your manuscript.”

“As part of the process of revising your manuscript we would like to use the WebCONSORT tool which is designed to help you improve the reporting of your randomised trial. You can access the tool by clicking on the following link: [link to WebCONSORT study site].”

“Please be aware that by submitting your manuscript to our journal it may be part of research study, any participation will not impact on any future acceptance or rejection of your manuscript.”

By agreeing to participate in this study, you are thereby agreeing to allow us access to the revised manuscript to assess specific aspects of reporting quality (irrespective of whether the revised manuscript is published). We will contact you periodically through the study with details on any manuscripts registered from your journal. All details of the manuscript content and the identity of its authors will be treated in the strictest confidence.

On completion of the study we plan to make the WebCONSORT tool freely available and will, of course, acknowledge your journal’s contributions in future publications. Thank you once again for your participation in this study, if you have any questions please do not hesitate to contact us.

With best wishes

Prof Philippe Ravaud and Dr Sally Hopewell

(Paris Descartes University, France and University of Oxford, UK)

On behalf of the WebCONSORT Steering Committee: Prof Doug Altman (University of Oxford, UK), Dr Ginny Barbour (PLoS Medicine), Prof Isabelle Boutron (Paris Descartes University, France), Dr Agnes Deschartes (Paris Descartes University, France), Dr David Moher (University of Ottawa, Canada), Prof Victor Montori (Mayo Clinc, USA), and Dr David Schriger (Annals of Emergency Medicine, USA).

This study is supported by the CONSORT Group and the EQUATOR Network and has been approved by the University of Oxford Central Research Ethics Committee MSD-IDREC-C1-2012-89.

Appendix 3

Fig. 6

Screen shot of WebCONSORT study website

Appendix 4

Appendix 5

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Hopewell, S., Boutron, I., Altman, D.G. et al. Impact of a web-based tool (WebCONSORT) to improve the reporting of randomised trials: results of a randomised controlled trial. BMC Med 14, 199 (2016). https://doi.org/10.1186/s12916-016-0736-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12916-016-0736-x