Abstract

Background

High symptom burden is common in long-term care residents with dementia and results in distress and behavioral challenges if undetected. Physicians may have limited time to regularly examine all residents, particularly those unable to self-report, and may rely on reports from caregivers who are frequently in a good position to detect symptoms quickly. We aimed to identify proxy-completed assessment measures of symptoms experienced by people with dementia, and critically appraise the psychometric properties and applicability for use in long-term care settings by caregivers.

Methods

We searched Medline, EMBASE, PsycINFO, CINAHL and ASSIA from inception to 23 June 2015, supplemented by citation and reference searches. The search strategy used a combination of terms: dementia OR long-term care AND assessment AND symptoms (e.g. pain). Studies were included if they evaluated psychometric properties of proxy-completed symptom assessment measures for people with dementia in any setting or those of mixed cognitive abilities residing in long-term care settings. Measures were included if they did not require clinical training, and used proxy-observed behaviors to support assessment in verbally compromised people with dementia. Data were extracted on study setting and sample, measurement properties and psychometric properties. Measures were independently evaluated by two investigators using quality criteria for measurement properties, and evaluated for clinical applicability in long-term care settings.

Results

Of the 19,942 studies identified, 40 studies evaluating 32 measures assessing pain (n = 12), oral health (n = 2), multiple neuropsychiatric symptoms (n = 2), depression (n = 8), anxiety (n = 2), psychological wellbeing (n = 4), and discomfort (n = 2) were included. The majority of studies (31/40) were conducted in long-term care settings, although none of the neuropsychiatric or anxiety measures were validated in this setting. The pain assessments PAINAD and PACSLAC had the strongest psychometric evidence. The oral health, discomfort, and three psychological wellbeing measures were validated in this setting but require further psychometric evaluation. Depression measures were poor at detecting depression in this population. All measures require further investigation into agreement, responsiveness and interpretability.

Conclusions

Measures for pain are best developed for this population and setting. All other measures require further validation. A multi-symptom measure to support comprehensive assessment and monitoring in this population is required.

Background

People with dementia in long-term care settings commonly have high levels of comorbidity and symptom burden [1]. Multiple symptoms at all stages of the disease with varying prevalence are reported [1–9], notably pain (12–76 %) [2], dyspnea (8–80 %) [2], depression (9–32 %) [5, 7], anxiety (3–22 %) [5, 7], hallucinations (2–11 %) [5, 7], and delusions (18 %) [5]. Assessment is challenging because verbal communication and cognition decline and biological markers are absent, leaving a reliance on clinical examination. Untreated symptoms lead to distress and behavioral complications and compromise quality of life, resulting in challenges to clinical management [10] and staff burden [11].

Caregivers providing personal care are well placed to detect and monitor symptoms through daily contact and knowledge of residents [12], and refer to physicians for clinical examination and treatment. Routine use of measures in care supports systematic assessment and monitoring of symptoms, with increased access to treatment and improved outcomes [13]. However, there is limited evidence on their use in long-term care settings [13]. Requirements for such measures are that they are valid and reliable to ensure accurate assessment, responsive to change, clinically interpretable, brief and simple to use [14], and require minimal training [15]. Additionally, measures used by caregivers should not require a clinical qualification or expertise, and should support assessment through proxy-observed behaviors and signs for those residents unable to reliably self-report.

Caregiver assessment in long-term care settings is not well-established for all common symptoms, e.g. psychotic symptoms [16]. However, measures based on caregiver knowledge of the person with dementia without the requirement of clinical expertise, and validated in other settings, may have clinical applicability in long-term care settings and be transferable. With further validation, psychometrically robust and established assessment measures could support caregiver assessment of residents in long-term care.

This systematic review aimed to identify proxy-completed assessment measures of common symptoms experienced by people with dementia, and critically appraise the psychometric properties and applicability for use in long-term care settings by caregivers.

Methods

This systematic review followed Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) (Additional file 1: PRISMA checklist) [17].

Search strategy

We searched Medline, EMBASE, PsycINFO, CINAHL and ASSIA from inception to 9 April 2014 and updated the search on 23 June 2015. A search strategy was developed, informed by search strategies used in previous reviews and by a scoping review of common symptoms in people with dementia performed by the authors. A combination of MeSH and keyword terms was used: dementia [18] OR long-term care AND assessment AND symptoms [19] (e.g. pain, dyspnea, depression, dental pain; Additional file 2). The search was supplemented by reference and citation searches of included articles using Scopus.

Eligibility criteria

The population comprised people with dementia, or a dementia subgroup analyzed separately, in any care setting, e.g. long-term care, inpatient hospital. All settings were included to identify validated measures with potential applicability to long-term care settings. To include measures with high applicability in long-term care, studies with mixed cognitively intact and cognitively impaired participants in these settings were included. Measures were included if they assessed symptoms using proxy-observed behaviors or signs in people whose verbal communication was compromised due to dementia, were validated in English, and were for use in routine care without the requirement of formal clinical training. Caregiver self-administered measures were included as they do not rely on clinicians or trained personnel to administer them, which increases their applicability for use in care. We excluded studies of:

- Measures that required verbal responses from people with dementia
- Measures that were face-to-face or interview administered to proxies due to limited clinical applicability in routine care
- Behavioral measures that did not identify underlying causes of behavioral change, for example, measures of aggression and sleep disturbance
- Measures not primarily assessing symptoms, including those of frailty, cognition, functioning, disease progression, quality of life, and risk, and process measures, e.g. quality of communication
- Measures that required extensive training that may not be easily accessible or available to caregivers

Studies were identified for inclusion if they were in the English language and evaluated at least two psychometric properties (including one aspect of reliability and validity) of the full measure. Qualitative studies, review studies, theses, and conference abstracts were excluded.

Study selection

One investigator (CES) reviewed titles and abstracts and excluded all those clearly irrelevant. Full text review was then conducted by one investigator (CES) to exclude those studies not meeting the inclusion criteria. Studies not clearly excluded were reviewed by a second reviewer (AEB) and the final inclusion of studies was agreed by discussion and consensus. Where further information was required to determine eligibility criteria, the authors were contacted. When authors were not contactable, a decision was made based on available information.

Data extraction and assessment of quality criteria of measures

One reviewer (CES) extracted all data from each study into a standardized data extraction Excel template, and assessed the psychometric properties using quality criteria for measurement properties of health status questionnaires. Data extraction included (1) study setting, sample, and who the measure was administered by; (2) measurement properties, including method of administration, number of items, rating period, time to administer, and training required; and (3) psychometric properties, including content validity, internal consistency, criterion validity, construct validity, reproducibility (agreement and reliability), responsiveness, floor and ceiling effects, and interpretability [20]. Where applicable, psychometric properties were extracted on dementia subsamples. Evaluation of each psychometric property was based on detailed and well-established quality criteria [20], with four ratings: positive (strong psychometric properties using adequate design and method), intermediate (some but not all aspects of the property are positive, or there is doubt about the design and method used) [21], negative (psychometric property does not meet criteria despite adequate design and method), or no information. The methodological and quality criteria are described in full in Terwee et al. [20] but include, for example, a requirement for formulated hypotheses with 75 % of hypotheses supported by findings for construct validity, intraclass correlation coefficient (ICC) or Cohen’s kappa ≥0.70 for reliability, ≤15 % obtaining highest or lowest possible scores for floor and ceiling effects, and sufficient sample size ≥50 for all (sub)groups. As quality ratings of sensitivity and specificity are not included in the Terwee et al. [20] quality criteria, we calculated the sum of percentages misclassified, i.e. false positives and false negatives, as follows: [(1 – sensitivity) + (1 – specificity)] [22] and gave a positive rating for criterion validity when misclassification was less than 50 %, i.e. better than chance [23]. A second reviewer (LAH, MD, or PMK) checked the data extraction and independently assessed the quality. The first and second reviewer resolved any disagreements by consensus. Where authors did not state which aspect of validity or reliability was being evaluated, the investigators made a judgement based on the methods used.
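The misclassification calculation described above can be illustrated with a minimal sketch. The function names are hypothetical and chosen only for this illustration; the formula and the 50 % threshold are those stated in the text, and the example values are sensitivity/specificity pairs reported in the Results.

```python
def misclassified_percent(sensitivity: float, specificity: float) -> int:
    """Sum of percentages misclassified: (1 - sensitivity) + (1 - specificity).

    Inputs are proportions between 0 and 1; the result is rounded to a
    whole percentage.
    """
    fraction = (1 - sensitivity) + (1 - specificity)
    return round(fraction * 100)


def criterion_validity_positive(sensitivity: float, specificity: float) -> bool:
    """Positive rating when misclassification is below 50 % (threshold from the text)."""
    return (1 - sensitivity) + (1 - specificity) < 0.5


# Sensitivity/specificity pairs reported for the Minimum Data Set
# Depression Rating Scale in two included studies.
print(misclassified_percent(0.91, 0.69))        # 40
print(misclassified_percent(0.23, 0.97))        # 80
print(criterion_validity_positive(0.91, 0.69))  # True
print(criterion_validity_positive(0.23, 0.97))  # False
```

Under this rule, the first pair would receive a positive rating for criterion validity and the second would not.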

Results

Study selection

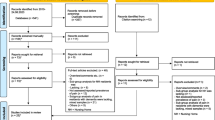

A total of 28,386 studies were identified through database searches. After deduplication, 19,942 titles and abstracts were screened, of which 1,302 were retained for full-text review. Following an independent review of 154 studies, 36 were retained for inclusion. Reasons for exclusion were required verbal responses from person with dementia (n = 125), face-to-face or interview administered to proxy (n = 38), no symptom assessment (n = 289), not dementia population (n = 64), not validated in English (n = 169), not or insufficient psychometric evaluation (n = 374), dissertation/conference abstract/study not published in English/other (n = 195), and administration required extensive training (n = 12). Following citation and reference searches, an additional four studies were identified for inclusion, resulting in a total of 40 studies (Fig. 1).
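As a quick arithmetic check of the study flow, the counts reported above can be reconciled in a short sketch. The variable names are illustrative assumptions; every number is taken directly from the paragraph above.

```python
# Counts reported in the study selection paragraph.
full_text_reviewed = 1302
exclusions = {
    "required verbal responses": 125,
    "face-to-face or interview administered to proxy": 38,
    "no symptom assessment": 289,
    "not dementia population": 64,
    "not validated in English": 169,
    "no or insufficient psychometric evaluation": 374,
    "dissertation/abstract/non-English/other": 195,
    "extensive training required": 12,
}
from_citation_and_reference_searches = 4

total_excluded = sum(exclusions.values())
retained = full_text_reviewed - total_excluded
total_included = retained + from_citation_and_reference_searches

print(total_excluded)   # 1266
print(retained)         # 36
print(total_included)   # 40
```

The exclusion reasons account for all full-text articles that were not retained, and the total matches the 40 included studies.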

In total, 32 measures were identified, assessing pain (n = 12), oral health signs and symptoms (n = 2), multiple neuropsychiatric symptoms (n = 2), depression (n = 8), anxiety (n = 2), psychological wellbeing (n = 4), and discomfort (n = 2). The majority of studies were conducted in long-term care settings (n = 31), with seven studies recruiting from outpatient clinics [24–30]. One study was conducted in an orthopedic ward [31] and one in psychogeriatric wards [32]. Only 11 out of the 40 studies included measures administered by non-clinically trained caregivers: six pain measures [33–39], two oral health signs and symptoms measures [40, 41], two depression measures [42, 43], and one psychological wellbeing measure [44]. The neuropsychiatric symptoms [26, 30] and anxiety [24] measures were all validated with unpaid caregiver proxies in community settings. Additional file 3 provides setting and population details of each study.

Quality assessment agreement between reviewers was 86 %. Disagreements were resolved by consensus, e.g. data checking, and discussion regarding adequacy of hypotheses and whether findings supported hypotheses.

The strength of the psychometric properties of measures validated in long-term care settings

Of those measures validated in long-term care settings, the measures with strongest psychometric properties for pain were Pain Assessment in Advanced Dementia (PAINAD) [31, 45–48], and Pain Assessment Checklist for Seniors with Limited Ability to Communicate (PACSLAC/PACSLAC-II) [35, 36, 45, 47, 49, 50]. The Oral Health Assessment Tool (OHAT) [40] and Discomfort Scale-Dementia Alzheimer’s Type (DS-DAT) [47, 51] had the strongest psychometric properties for oral health and discomfort, respectively (Table 1). The depression measures demonstrated weak abilities to accurately detect depression in this population, and all the psychological wellbeing measures require further validation. No measures achieved positive ratings for all psychometric properties with information lacking on agreement, floor and ceiling effects, responsiveness, and interpretability (Additional file 4).

Measures were administered through observation during provision of routine care, observations during specified activities or time periods, examinations, knowledge of the resident, all information available to the caregiver, or video recordings of residents. Rating periods ranged from one minute to one month, with time taken to complete ranging from 30 seconds to 10 minutes. Measurement training ranged from none to 4 hours (where details were provided), and up to 2 days for validation purposes. Table 2 summarizes the elements of the measure (scoring, rating period), and the feasibility (measure length, time to complete, training requirements) and applicability (method of administration used in the included studies, type of training) of measures; Additional file 5 provides details of all measures.

Pain

The measures identified to assess pain were the Abbey Pain Scale (APS) [33, 47, 52], Checklist of Nonverbal Pain Indicators (CNPI) [45, 46, 52, 53], CNA Pain Assessment Tool [34], Doloplus-2 [52], Mahoney Pain Scale [38], Non-communicative Patient’s Pain Assessment Instrument (NOPPAIN) [45, 54], PAINAD [31, 45–48], PACSLAC [36, 45, 47, 49, 50] and PACSLAC-II [35], Pain Assessment in Communicatively Impaired [37, 50, 55], Pain Assessment for Dementing Elderly (PADE) [39, 45], and Pain Behaviors for Osteoarthritis Instrument for Cognitively Impaired Elders [29].

Of these, the PAINAD and PACSLAC have been the most extensively evaluated and have the strongest psychometric properties. PAINAD has good internal consistency (Cronbach’s alpha of 0.70 and greater) [46, 47]. Inter-rater reliability is strong (kappa = 0.87 [45], ICC ≥0.87 [47]) in two studies, although one study reported an ICC of 0.24 when administered in rest situations and 0.80 during movement situations [46]. PAINAD has demonstrated good construct validity against APS, PACSLAC, CNPI, NOPPAIN, and PADE at rest and during exercise (r ≥0.62) [45, 47]. The PACSLAC demonstrated good construct validity against the NOPPAIN, CNPI, PADE, APS, and PAINAD at rest and during exercise (r ≥0.56) [45, 47]. Inter-rater reliability at rest and movement situations is consistently high (ICC ≥0.76) [45, 47, 50]. Both measures require further validation when used by caregivers as these have predominantly been evaluated when administered by trained research assistants or clinicians. PACSLAC-II is a modified and shortened version of the PACSLAC based on theoretical and evidence developments in pain assessment, and has good content validity [35]. Only one study evaluating the psychometric properties of PACSLAC-II [35] was identified; it was conducted in long-term care settings and the measure was administered by trained research assistants and caregivers. Evidence for construct validity was supported with expected strong correlations with PACSLAC, CNPI, PADE, and PAINAD in pain and non-pain conditions (r ≥0.56), and expected weak correlations with the Cornell Scale for Depression in Dementia (CSDD) (non-pain condition: r = –0.05, vaccination: r = 0.10, movement: r = –0.06). PACSLAC-II demonstrated ability to discriminate between non-pain and painful conditions (P <0.01). Internal consistency was strong (Cronbach’s alpha ≥0.74) and inter-rater reliability kappa was 0.63.

The NOPPAIN [45, 54] and CNA Pain Assessment Tool [34] are the only measures of pain developed for administration by non-clinically trained caregivers. The NOPPAIN is completed by observations carried out during routine care tasks, and is designed for easy administration with limited training [54]. NOPPAIN has high correlation (r ≥0.70) against CNPI, PACSLAC, PADE, and PAINAD with an inter-rater reliability kappa of 0.73 when administered by trained research assistants [45].

Oral health signs and symptoms

Two measures, the Brief Oral Health Status Examination (BOHSE) [41] and the OHAT [40], were identified. Both assess oral health in long-term care residents and are administered by caregivers through oral examination of the resident. OHAT was derived from BOHSE and is simpler. OHAT comprises eight items and involves 3 hours of training for caregivers with calibration. When compared against comprehensive examination by a dentist, the Pearson correlation coefficients for each item ranged from –0.1 to 1.0 (n = 21). Test-retest reliability item-level unweighted kappa ranged from 0.51 to 0.71, with a total score ICC of 0.78. Inter-rater reliability item-level unweighted kappa ranged from 0.47 to 0.66 with a total score ICC of 0.74 (n = 485). Further testing in a larger sample is required to test validity.

Multiple neuropsychiatric symptoms

The Neuropsychiatric Inventory Questionnaire (NPI-Q) [26] is an unpaid (usually family) caregiver self-administered version of the well-validated and extensively used NPI [56]. The NPI is sometimes reported as a behavioral measure, but was included in this review as it assesses symptom experience, including depression, anxiety, hallucinations and delusions, and provides observational signs to support assessment of these symptoms. The NPI-Q subscales demonstrated high correlations with the original clinician-administered NPI subscales (r >0.70, n = 60). The California Dementia Behavior Questionnaire [30] was designed to assess behavior, but was included in this review as the majority of items assess the symptoms of depression and psychosis and it is completed based on unpaid caregiver observations. Neither of these measures has been validated in the long-term care setting.

Depression

Of the eight depression measures identified, two were developed for the purpose of assessment of verbally compromised people with dementia: the Minimum Data Set Depression Rating Scale [57–60], and Hayse and Lohse Non-Verbal Depression Scale [61]. The former is a seven-item scale derived from Minimum Data Set 2.0 items and developed to screen for depression by caregiver staff drawing upon observations during routine care. Designed for long-term care settings, it has high clinical applicability and is the most extensively psychometrically evaluated. However, evidence for detecting depression against gold-standard diagnosis of depression at a score cut-off point ≥3 is mixed, with sensitivities and specificities of 0.91/0.69 (40 % misclassified, n = 82) [58] and 0.23/0.97 (80 % misclassified, n = 145) [57].

The CSDD is designed to be administered through interview with the person with dementia and a proxy but was modified for proxy-completion in two studies [32, 43]. Watson et al. [43] modified the CSDD for use by long-term caregiver staff most involved in the resident’s care using all available information to make the assessment (CSDD-M-LTCS). Modifications involved cognitive testing to remove technical language and changing response options from severity to frequency. Sensitivity and specificity against geriatric psychiatrist diagnosis was 0.33/0.86 (81 % misclassified, n = 112). Test-retest reliability was strong (≥0.70) but limited by small sample size (ICC = 0.83, n = 25) and inter-rater reliability ICC was 0.20 (n=111).

The Depression Signs Scale and Depression in Dementia Mood Scale [32] were originally designed to be administered based on clinical interview with the person with dementia and information from proxies, but were modified to be completed based on all available information to psychogeriatric ward staff.

The Geriatric Depression Scale (GDS) [27, 28, 42], Beck Depression Inventory [27], and Center for Epidemiologic Studies Depression Scale [27] were not originally developed for dementia but have been used in this population, either through clinical interview or self-report. In the included studies, they have been modified for proxy-completion by unpaid caregivers. The evidence for validity and applicability for use in long-term care by caregivers is therefore limited. One study examined caregiver-completed Collateral Source-GDS (CS-GDS) 30 and 15 versions in long-term care compared to gold standard diagnosis of depression [42]. In the dementia subsample (n = 35), sensitivities and specificities for the CS-GDS-30 and CS-GDS-15 were 0.70/0.56 (74 % misclassified) and 0.71/0.64 (66 % misclassified), respectively. Pearson correlation coefficient between CS-GDS and GDS ranged from 0.50 to 0.61 [42].

Anxiety

Two anxiety measures, the Collateral-completed Geriatric Anxiety Inventory and the Penn-State Worry Questionnaire-Abbreviated, were identified in the same study [24]. Both were modified in this study to be proxy-completed by unpaid caregivers. Sensitivity and specificity for the two measures, against the gold-standard clinician-administered Mini-International Neuropsychiatric Interview [62], were 0.62/0.93 (45 % misclassified) and 0.81/0.73 (46 % misclassified), respectively (n = 41). This study was not conducted in long-term care settings and proxies were therefore not caregiver staff. The measures’ validity and applicability in this setting were therefore not established.

Psychological wellbeing

We identified four measures focused on psychological wellbeing. These were Psychological Wellbeing in Cognitively Impaired Persons [25], the Philadelphia Geriatric Center Affect Rating Scale (PGCARS) [63], Apparent Affect Rating Scale (AARS) [44], and the Apparent Emotion Rating Instrument (AER) [64]. The AARS and AER are both derived from PGCARS, originally developed by Lawton et al. [65]. However, this study was not included due to extensive training over 1 month provided to research assistant administrators [65].

The PGCARS, AARS, and AER were all validated in nursing home settings, and AARS and AER were administered by caregivers in the validation study. All three measure positive and negative affect, including items of pleasure, interest, anger, anxiety, and depression/sadness. All these measures require further psychometric evaluation.

Discomfort

The term discomfort is operationalized as the presence of a negative emotional/physical state that can be observed [51]. The Discomfort Behavior Scale (DBS) was developed to assess discomfort/pain [66]; it was derived from items on the Minimum Data Set 2.0 and it has therefore been developed for use in long-term care. It is administered based on review of all available information, including direct observation and communication with residents, discussions with family, and review of records [66]. Internal consistency of DBS is positive (Cronbach alpha = 0.77, n = 9,672) [66]. However, only one psychometric evaluation of the DBS was identified and further evaluation is warranted.

The DS-DAT [47, 51] does not require clinical training. Neither of the studies identified reported the requirement for extensive training and DS-DAT was therefore included in this review. DS-DAT has, however, been critiqued as complex to use and requiring significant training [67]. As such, it may not be useful as a symptom assessment tool in routine care provision. It has demonstrated expected high correlations with pain assessment measures PAINAD, APS, and PACSLAC (≥0.63) and a strong inter-rater reliability ICC at rest (0.83) and exercise (0.85, n = 62) [47].

Discussion

Key findings

To our knowledge, this is the first systematic review to identify and appraise assessment measures of symptoms commonly experienced by people with dementia for use in long-term care settings. Our review identified 32 proxy-completed measures of common symptoms experienced by people with dementia. Of these measures, those that assess pain possess the strongest evidence of psychometric properties. Progress on all the other measures is promising, although oral health, psychological wellbeing, and discomfort measures require further psychometric evaluation, and there have been challenges in developing a measure that accurately detects depression. Neither of the two neuropsychiatric or two anxiety measures were validated in the long-term care setting. Furthermore, we found only 11 studies where measures were validated when administered by non-clinically trained caregivers even though these caregivers are frequently in the best position to detect changes quickly due to enhanced resident knowledge and contact [12].

Despite the extent of symptoms experienced by this population, we were unable to find any multi-symptom assessment measures validated for use in routine care as an assessment measure. Instead, we found measures that assess single symptoms or symptom groups, specifically pain, oral health signs and symptoms, multiple neuropsychiatric symptoms, depression, anxiety and psychological wellbeing, and discomfort. Assessing discomfort may alert caregivers to physical or emotional discomfort that can then be further investigated to determine the underlying cause [68]. However, content analyses of pain and discomfort measures in dementia found significant overlap resulting in poor sensitivity in assessing these constructs [69], a finding supported by our results with pain and discomfort frequently being used interchangeably. An alternative to assessing discomfort is to provide caregivers with measures to assess all common symptoms. This would facilitate a comprehensive symptom assessment, and alert caregivers to consider all common symptoms and sources of distress.

Caregivers’ use of a battery of single assessments (e.g. pain, neuropsychiatric symptoms, oral health) could facilitate detection and monitoring of common symptoms, but is unlikely to be feasible for regular and frequent use due to the time taken to complete multiple measures. Palliative or end-of-life measures, such as the Symptom Management at the End of Life in Dementia [70] or the Palliative care Outcome Scale [71] could provide a brief yet comprehensive assessment of common physical, psychological, and other distressing (such as agitation) [70] symptoms to support detection and management of symptoms in care. The former was developed to measure outcomes and evaluate end-of-life care in dementia and has been extensively evaluated [70, 72–74], although predominantly after the death of the resident. It incorporates nine symptoms in people dying with dementia and therefore has the potential for use as a clinical assessment measure for people in the dying phase. The Palliative care Outcome Scale was developed for a non-dementia population but has sound psychometric properties and is used across settings to support clinical care [75]. It has been used to assess symptoms and the quality of palliative care to nursing home patients with and without dementia [76] and found to have the potential to identify areas of care that require addressing. Nonetheless, there was a high level of missing scores for some items (≤59.8 %) in the dementia subgroup, suggesting adaptation is required for this population [76]. Results of a qualitative study suggest that such multi-symptom measures used in routine care may require provision of proxy-observed behaviors or signs to assess verbally-compromised residents with dementia [77]. Use of a single multi-symptom measure may not provide a detailed assessment of each symptom. However, multi-symptom measures may support comprehensive assessment of symptoms with minimal time burden and, if required, inform requirement for further assessment or prompt referral to health professionals.

The second major finding from our study is the lack of assessment measures to assess common symptoms. The clinical challenges and importance of accurately assessing pain in this population are apparent from the substantial development in pain measures, evidenced by a recent meta-review [78]. As a consequence, we found pain measures have the strongest psychometric evidence. Nonetheless, despite the prevalence of other common symptoms in residents with dementia, such as nausea, constipation, and dyspnea, we were unable to identify any measures to assess these. With further evaluation, the Respiratory Distress Observation Scale-Family (RDOS-Family) [79] has potential to be an important measure for detecting dyspnea in long-term care residents with dementia. The original RDOS was designed for cognitively impaired adults unable to self-report but required clinical expertise to administer [80]. RDOS-Family is self-administered by family caregivers based on observations, with 20 minutes of training provided. It has good inter-rater reliability (ICC = 0.71) between family and trained research assistants when used with patients hospitalized for conditions with dyspnea.

The stringent methodological requirements of the quality criteria and the challenges of conducting research in verbally compromised people with dementia resulted in no measures achieving positive ratings for all psychometric properties in the review. In particular, this review shows that detecting depression in people unable to self-report in this setting is challenging and that caregivers’ use of observational signs may be insufficient to assess depression. Self-report, or a clinician-administered observer-rated scale for those with moderate to severe dementia, has been recommended for assessment of depression in nursing home residents [81]. The MDS 3.0 takes this approach with the embedded Patient Health Questionnaire-9 Observational Version designed for residents unable to self-report based on observations [82]. It is completed by trained nurse assessors through interview with a caregiver who knows the resident, thus combining clinician expertise with caregiver knowledge of the resident. The Patient Health Questionnaire-9 Observational Version demonstrated strong correlation (r = 0.84, n = 48) with trained research nurse-administered CSDD [83].

We included studies conducted in all settings and some measures therefore require further validation in long-term care settings. Where measures do not exist for symptom assessment in long-term care, this review informs selection of measures for further validation by reporting strength of psychometric properties and potential applicability in long-term care settings.

This systematic review identifies and critically appraises measures of common symptoms in the dementia population in long-term care; however, there are a number of limitations. Screening measures are used to detect diagnoses such as depression and anxiety. Studies evaluating screening measures may not have been detected or met the inclusion criteria for this study. Furthermore, the quality criteria used in this review were not developed to evaluate screening tools. However, using the same quality criteria provided consistency of appraisal across the included measures. We limited the study to English language-validated measures only and to publications in English only. This means measures not developed in English, such as the Dutch Rotterdam Elderly Pain Observation Scale [84], or translated measures, such as the German [85, 86] and Chinese [87] versions of the PAINAD, and Dutch [88, 89] and Italian [90] versions of the DS-DAT, are excluded. We recognize that the conclusions are therefore limited to English language measures and therefore limited to English-speaking populations and cultures, with the majority of studies conducted in English-speaking countries, predominantly the United States. This means that the most established measures with the strongest international psychometric evidence that have been validated in multiple languages, countries, or cultures are not identified as such. Finally, decisions regarding whether measures met the inclusion criteria required judgement at times. To improve objectivity, those full-texts that did not clearly meet the exclusion criteria were second reviewed and a decision reached by consensus.

Conclusion

Assessment measures of pain are the best developed and have the strongest evidence of psychometric properties for use by caregivers in people with dementia. All other assessment measures require further evaluation when administered by caregivers in long-term care settings. A caregiver-completed multi-symptom measure to assess the full extent of symptoms in people with dementia is urgently required so that symptoms are detected and residents are referred when medical intervention is needed.

Availability of data and materials

The datasets supporting the conclusions of this article are included within the article and its additional files.

Abbreviations

- AARS: Apparent Affect Rating Scale
- APS: Abbey Pain Scale
- AER: Apparent Emotion Rating
- BOHSE: Brief Oral Health Status Examination
- CNPI: Checklist of Nonverbal Pain Behaviors
- CSDD: Cornell Scale for Depression in Dementia
- CS-GDS: Collateral Source Geriatric Depression Scale
- DBS: Discomfort Behavior Scale
- DS-DAT: Discomfort Scale for patients with Dementia of Alzheimer’s Type
- ICC: Intraclass correlation coefficient
- NOPPAIN: Non-communicative Patient’s Pain Assessment Instrument
- NPI-Q: Neuropsychiatric Inventory-Questionnaire
- OHAT: Oral Health Assessment Tool
- PACSLAC: Pain Assessment Checklist for Seniors with Limited Ability to Communicate
- PADE: Pain Assessment for the Dementing Elderly
- PAINAD: Pain Assessment in Advanced Dementia
- PBOICIE: Pain Behaviors for Osteoarthritis Instrument for Cognitively Impaired Elders
- PGCARS: Philadelphia Geriatric Center Affect Rating Scale
- RDOS: Respiratory Distress Observation Scale

References

Mitchell SL, Kiely DK, Hamel MB, Park PS, Morris JN, Fries BE. Estimating prognosis for nursing home residents with advanced dementia. JAMA. 2004;291:2734–40.

van der Steen JT. Dying with dementia: what we know after more than a decade of research. J Alzheimers Dis. 2010;22:37–55.

McCarthy M, Addington-Hall J, Altmann D. The experience of dying with dementia: a retrospective study. Int J Geriatr Psychiatry. 1997;12:404–9.

Di Giulio P, Toscani F, Villani D, Brunelli C, Gentile S, Spadin P. Dying with advanced dementia in long-term care geriatric institutions: a retrospective study. J Palliat Med. 2008;11:1023–8.

Lyketsos CG, Lopez O, Jones B, Fitzpatrick AL, Breitner J, DeKosky S. Prevalence of neuropsychiatric symptoms in dementia and mild cognitive impairment: results from the cardiovascular health study. JAMA. 2002;288:1475–83.

Hanson LC, Eckert JK, Dobbs D, Williams CS, Caprio AJ, Sloane PD, et al. Symptom experience of dying long-term care residents. J Am Geriatr Soc. 2008;56:91–8.

Mitchell SL, Kiely DK, Hamel MB. Dying with advanced dementia in the nursing home. Arch Intern Med. 2004;164:321–6.

Brandt HE, Deliens L, Ooms ME, van der Steen JT, van der Wal G, Ribbe MW. Symptoms, signs, problems, and diseases of terminally ill nursing home patients: A nationwide observational study in the Netherlands. Arch Intern Med. 2005;165:314–20.

Mitchell SL, Teno JM, Kiely DK, Shaffer ML, Jones RN, Prigerson HG, et al. The clinical course of advanced dementia. N Engl J Med. 2009;361:1529–38.

Husebo BS, Ballard C, Sandvik R, Nilsen OB, Aarsland D. Efficacy of treating pain to reduce behavioural disturbances in residents of nursing homes with dementia: cluster randomised clinical trial. Br Med J. 2011;343:d4065.

Sourial R, McCusker J, Cole M, Abrahamowicz M. Agitation in demented patients in an acute care hospital: Prevalence, disruptiveness, and staff burden. Int Psychogeriatr. 2001;13:183–96.

Hendrix CC, Sakauye KM, Karabatsos G, Daigle D. The use of the Minimum Data Set to identify depression in the elderly. J Am Med Dir Assoc. 2003;4:308–12.

Etkind SN, Daveson BA, Kwok W, Witt J, Bausewein C, Higginson IJ, et al. Capture, transfer, and feedback of patient-centered outcomes data in palliative care populations: does it make a difference? A systematic review. J Pain Symptom Manage. 2015;49:611–24.

Higginson IJ, Carr AJ. Using quality of life measures in the clinical setting. Br Med J. 2001;322:1297–300.

Slade M, Thornicroft G, Glover G. The feasibility of routine outcome measures in mental health. Soc Psychiatry Psychiatr Epidemiol. 1999;34:243–9.

Gitlin LN, Marx KA, Stanley IH, Hansen BR, Van Haitsma KS. Assessing neuropsychiatric symptoms in people with dementia: a systematic review of measures. Int Psychogeriatr. 2014;26:1805–48.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Br Med J. 2009;339:b2535.

Woods B, Aguirre E, Spector AE, Orrell M. Cognitive stimulation to improve cognitive functioning in people with dementia. Cochrane Database Syst Rev. 2012;2:CD005562. doi:10.1002/14651858.CD005562.pub2.

Hall S, Kolliakou A, Petkova H, Froggatt K, Higginson IJ. Interventions for improving palliative care for older people living in nursing care homes (review). Cochrane Database Syst Rev. 2011;3:CD007132. doi:10.1002/14651858.CD007132.pub2.

Terwee CB, Bot SD, de Boer MR, van der Windt DA, Knol DL, Dekker J, et al. Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol. 2007;60:34–42.

Bollen JC, Dean SG, Siegert RJ, Howe TE, Goodwin VA. A systematic review of measures of self-reported adherence to unsupervised home-based rehabilitation exercise programmes, and their psychometric properties. BMJ Open. 2014;4:e005044.

de Vet HC, Ostelo RW, Terwee CB, van der Roer N, Knol DL, Beckerman H, et al. Minimally important change determined by a visual method integrating an anchor-based and a distribution-based approach. Qual Life Res. 2007;16:131–42.

Streiner DL, Cairney J. What’s under the ROC? An introduction to receiver operating characteristics curves. Can J Psychiatry. 2007;52:121–8.

Bradford A, Brenes GA, Robinson RA, Wilson N, Snow AL, Kunik ME, et al. Concordance of self- and proxy-rated worry and anxiety symptoms in older adults with dementia. J Anxiety Disord. 2013;27:125–30.

Burgener SC, Twigg P, Popovich A. Measuring psychological well-being in cognitively impaired persons. Dementia. 2005;4:463–85.

Kaufer DI, Cummings JL, Ketchel P, Smith V, MacMillan A, Shelley T, et al. Validation of the NPI-Q, a brief clinical form of the Neuropsychiatric Inventory. J Neuropsychiatry Clin Neurosci. 2000;12:233–9.

Logsdon RG, Teri L. Depression in Alzheimer’s disease patients: caregivers as surrogate reporters. J Am Geriatr Soc. 1995;43:150–5.

Nitcher RL, Burke WJ, Roccaforte WH, Wengel SP. A collateral source version of the Geriatric Depression Rating Scale. Am J Geriatr Psychiatry. 1993;1:143–52.

Tsai PF, Beck C, Richards KC, Phillips L, Roberson PK, Evans J. The Pain Behaviors for Osteoarthritis Instrument for Cognitively Impaired Elders (PBOICIE). Res Gerontol Nurs. 2008;1:116–22.

Victoroff J, Nielson K, Mungas D. Caregiver and clinician assessment of behavioral disturbances: the California Dementia Behavior Questionnaire. Int Psychogeriatr. 1997;9:155–74.

DeWaters T, Faut-Callahan M, McCann JJ, Paice JA, Fogg L, Hollinger-Smith L, et al. Comparison of self-reported pain and the PAINAD scale in hospitalized cognitively impaired and intact older adults after hip fracture surgery. Orthop Nurs. 2008;27:21–8.

Elanchenny N, Shah A. Evaluation of three nurse-administered depression rating scales on acute admission and continuing care geriatric psychiatry wards. Int J Methods Psychiatr Res. 2001;10:43–51.

Abbey J, Piller N, De Bellis A, Esterman A, Parker D, Giles L, et al. The Abbey pain scale: a 1-minute numerical indicator for people with end-stage dementia. Int J Palliat Nurs. 2004;10:6–13.

Cervo FA, Bruckenthal P, Chen JJ, Bright-Long LE, Fields S, Zhang G, et al. Pain assessment in nursing home residents with dementia: psychometric properties and clinical utility of the CNA Pain Assessment Tool (CPAT). J Am Med Dir Assoc. 2009;10:505–10.

Chan S, Hadjistavropoulos T, Williams J, Lints-Martindale A. Evidence-based development and initial validation of the pain assessment checklist for seniors with limited ability to communicate-II (PACSLAC-II). Clin J Pain. 2014;30:816–24.

Cheung G, Choi P. The use of the Pain Assessment Checklist for Seniors with Limited Ability to Communicate (PACSLAC) by caregivers in dementia care facilities. N Z Med J. 2008;121:21–9.

Kaasalainen S, Stewart N, Middleton J, Knezacek S, Hartley T, Ife C, et al. Development and evaluation of the Pain Assessment in the Communicatively Impaired (PACI) tool: part II. Int J Palliat Nurs. 2011;17:431–8.

Mahoney AEJ, Peters L. The Mahoney pain scale: Examining pain and agitation in advanced dementia. Am J Alzheimers Dis Other Demen. 2008;23:250–61.

Villanueva MR, Smith TL, Erickson JS, Lee AC, Singer CM. Pain Assessment for the Dementing Elderly (PADE): reliability and validity of a new measure. J Am Med Dir Assoc. 2003;4:1–8.

Chalmers JM, King PL, Spencer AJ, Wright FAC, Carter KD. The oral health assessment tool--validity and reliability. Aust Dent J. 2005;50:191–9.

Kayser-Jones J, Bird WF, Paul SM, Long L, Schell ES. An instrument to assess the oral health status of nursing home residents. Gerontologist. 1995;35:814–24.

Li Z, Jeon YH, Low LF, Chenoweth L, O’Connor DW, Beattie E, et al. Validity of the geriatric depression scale and the collateral source version of the geriatric depression scale in nursing homes. Int Psychogeriatr. 2015;27:1495–504.

Watson LC, Zimmerman S, Cohen LW, Dominik R. Practical depression screening in residential care/assisted living: five methods compared with gold standard diagnoses. Am J Geriatr Psychiatry. 2009;17:556–64.

Lawton MP, Van Haitsma K, Perkinson M, Ruckdeschel K. Observed affect and quality of life in dementia: further affirmations and problems. J Ment Health Aging. 1999;5:69–81.

Lints-Martindale AC, Hadjistavropoulos T, Lix LM, Thorpe L. A comparative investigation of observational pain assessment tools for older adults with dementia. Clin J Pain. 2012;28:226–37.

Ersek M, Herr K, Neradilek MB, Buck HG, Black B. Comparing the psychometric properties of the checklist of nonverbal pain behaviors (CNPI) and the pain assessment in advanced dementia (PAIN-AD) instruments. Pain Med. 2010;11:395–404.

Liu JYW, Briggs M, Closs SJ. The psychometric qualities of four observational pain tools (OPTs) for the assessment of pain in elderly people with osteoarthritic pain. J Pain Symptom Manage. 2010;40:582–98.

Warden V, Hurley AC, Volicer L. Development and psychometric evaluation of the Pain Assessment in Advanced Dementia (PAINAD) scale. J Am Med Dir Assoc. 2003;4:9–15.

Fuchs-Lacelle S, Hadjistavropoulos T. Development and preliminary validation of the Pain Assessment Checklist for Seniors With Limited Ability to Communicate (PACSLAC). Pain Manag Nurs. 2004;5:37–49.

Kaasalainen S, Akhtar-Danesh N, Hadjistavropoulos T, Zwakhalen S, Verreault R. A comparison between behavioral and verbal report pain assessment tools for use with residents in long term care. Pain Manag Nurs. 2013;14:e106–14.

Hurley AC, Volicer BJ, Hanrahan PA, Houde S, Volicer L. Assessment of discomfort in advanced Alzheimer patients. Res Nurs Health. 1992;15:369–77.

Neville C, Ostini R. A psychometric evaluation of three pain rating scales for people with moderate to severe dementia. Pain Manag Nurs. 2014;15:798–806.

Feldt KS. The Checklist of Nonverbal Pain Indicators (CNPI). Pain Manag Nurs. 2000;1:13–21.

Horgas AL, Nichols AL, Schapson CA, Vietes K. Assessing pain in persons with dementia: relationships among the non-communicative patient's pain assessment instrument, self-report, and behavioral observations. Pain Manag Nurs. 2007;8:77–85.

Kaasalainen S, Crook J. A comparison of pain-assessment tools for use with elderly long-term-care residents. Can J Nurs Res. 2003;35:58–71.

Cummings JL. The Neuropsychiatric Inventory: assessing psychopathology in dementia patients. Neurology. 1997;48 Suppl 6:S10–6.

Anderson RL, Buckwalter KC, Buchanan RJ, Maas ML, Imhof SL. Validity and reliability of the Minimum Data Set Depression Rating Scale (MDSDRS) for older adults in nursing homes. Age Ageing. 2003;32:435–8.

Burrows AB, Morris JN, Simon SE, Hirdes JP, Phillips C. Development of a Minimum Data Set-based depression rating scale for use in nursing homes. Age Ageing. 2000;29:165–72.

Koehler M, Rabinowitz T, Hirdes J, Stones M, Carpenter GI, Fries BE, et al. Measuring depression in nursing home residents with the MDS and GDS: an observational psychometric study. BMC Geriatr. 2005;5:1471–2318.

Martin L, Poss JW, Hirdes JP, Jones RN, Stones MJ, Fries BE. Predictors of a new depression diagnosis among older adults admitted to complex continuing care: Implications for the depression rating scale (DRS). Age Ageing. 2008;37:51–6.

Hayes PM, Lohse D, Bernstein I. The development and testing of the Hayes and Lohse Non-Verbal Depression Scale. Clin Gerontol. 1991;10:3–13.

Sheehan DV, Lecrubier Y, Sheehan KH, Amorim P, Janavs J, Weiller E, et al. The Mini-International Neuropsychiatric Interview (MINI): the development and validation of a structured diagnostic psychiatric interview for DSM-IV and ICD-10. J Clin Psychiatry. 1998;59:22–33.

Kolanowski A, Hoffman L, Hofer SM. Concordance of self-report and informant assessment of emotional well-being in nursing home residents with dementia. J Gerontol. 2007;62:20–7.

Snyder M, Ryden MB, Shaver P, Wang J, Savik K, Gross CR, et al. The Apparent Emotion Rating Instrument: assessing affect in cognitively impaired elders. Clin Gerontol. 1998;18:17–29.

Lawton MP, Van Haitsma K, Klapper J. Observed affect in nursing home residents with Alzheimer’s disease. J Gerontol. 1996;51B:3–14.

Stevenson KM, Brown RL, Dahl JL, Ward SE, Brown MS. The discomfort behavior scale: a measure of discomfort in the cognitively impaired based on the minimum data set 2.0. Res Nurs Health. 2006;29:576–87.

Miller J, Neelon V, Dalton J, Ng’andu N, Bailey Jr D, Layman E, et al. The assessment of discomfort in elderly confused patients: a preliminary study. J Neurosci Nurs. 1996;28:175–82.

Kovach CR, Noonan PE, Griffie J, Muchka S, Weissman DE. Use of the assessment of discomfort in dementia protocol. Appl Nurs Res. 2001;14:193–200.

van der Steen JT, Sampson EL, Van den Block L, Lord K, Vankova H, Pautex S, et al. Tools to assess pain or lack of comfort in dementia: a content analysis. J Pain Symptom Manage. 2015;50:659–75.

Volicer L, Hurley AC, Blasi ZV. Scales for evaluation of end-of-life care in dementia. Alzheimer Dis Assoc Disord. 2001;15:194–200.

Hearn J, Higginson I. Development and validation of a core outcome measure for palliative care: the palliative care outcome scale. Palliative Care Core Audit Project Advisory Group. Qual Health Care. 1999;8:219–27.

Kiely DK, Shaffer ML, Mitchell SL. Scales for the evaluation of end-of-life care in advanced dementia: sensitivity to change. Alzheimer Dis Assoc Disord. 2012;26:358–63.

Kiely DK, Volicer L, Teno J, Jones RN, Prigerson HG, Mitchell SL. The validity and reliability of scales for the evaluation of end-of-life care in advanced dementia. Alzheimer Dis Assoc Disord. 2006;20:176–81.

van Soest-Poortvliet MC, van der Steen JT, Zimmerman S, Cohen LW, Klapwijk MS, Bezemer M, et al. Psychometric properties of instruments to measure the quality of end-of-life care and dying for long-term care residents with dementia. Qual Life Res. 2012;21:671–84.

Collins ES, Witt J, Bausewein C, Daveson BA, Higginson IJ, Murtagh FEM. A systematic review of the use of the palliative care outcome scale and the support team assessment schedule in palliative care. J Pain Symptom Manage. 2015;50:842–53.

Brandt HE, Deliens L, van der Steen JT, Ooms ME, Ribbe MW, van der Wal G. The last days of life of nursing home patients with and without dementia assessed with the Palliative Care Outcome Scale. Palliat Med. 2005;19:334–42.

Krumm N, Larkin P, Connolly M, Rode P, Elsner F. Improving dementia care in nursing homes: experiences with a palliative care symptom-assessment tool (MIDOS). Int J Palliat Nurs. 2014;20:187–92.

Lichtner V, Dowding D, Esterhuizen P, Closs S, Long A, Corbett A, et al. Pain assessment for people with dementia: a systematic review of systematic reviews of pain assessment tools. BMC Geriatr. 2014;14:138.

Campbell ML, Templin TN. RDOS-Family: a guided learning tool for layperson assessment of respiratory distress. J Palliat Med. 2014;17:982–3.

Campbell ML, Templin T, Walch J. A respiratory distress observation scale for patients unable to self-report dyspnea. J Palliat Med. 2010;13:285–90.

American Geriatrics Society and American Association for Geriatric Psychiatry. Consensus statement on improving the quality of mental health care in U.S. nursing homes: management of depression and behavioral symptoms associated with dementia. J Am Geriatr Soc. 2003;51:1287–98.

Saliba D, DiFilippo S, Edelen MO, Kroenke K, Buchanan J, Streim J. Testing the PHQ-9 Interview and Observational Versions (PHQ-9 OV) for MDS 3.0. J Am Med Dir Assoc. 2012;13:618–25.

Alexopoulos GS, Abrams RC, Young RC, Shamoian CA. Cornell Scale for Depression in Dementia. Biol Psychiatry. 1988;23:271–84.

Van Herk R, Van Dijk M, Tibboel D, Baar FPM, De Wit R, Duivenvoorden HJ. The Rotterdam Elderly Pain Observation Scale (REPOS): a new behavioral pain scale for non-communicative adults and cognitively impaired elderly persons. J Pain Manag. 2009;1:367–78.

Basler HD, Huger D, Kunz R, Luckmann J, Lukas A, Nikolaus T, et al. Assessment of pain in advanced dementia. Construct validity of the German PAINAD. Schmerz. 2006;20:519–26.

Schuler MS, Becker S, Kaspar R, Nikolaus T, Kruse A, Basler HD. Psychometric properties of the German ‘Pain Assessment in Advanced Dementia Scale’ (PAINAD-G) in nursing home residents. J Am Med Dir Assoc. 2007;8:388–95.

Lin PC, Lin LC, Shyu YIL, Hua MS. Chinese version of the Pain Assessment in Advanced Dementia Scale: initial psychometric evaluation. J Adv Nurs. 2010;66:2360–8.

van der Steen JT, Ader HJ, van Assendelft JH, Kooistra M, Passier PE, Ooms ME. [Retrospective assessment of the Dutch version of the Discomfort Scale--Dementia of Alzheimer Type (DS-DAT): is estimation sufficiently valid and reliable?]. Tijdschr Gerontol Geriatr. 2003;34:254–9 [In Dutch].

van der Steen JT, Ooms ME, van der Wal G, Ribbe MW. [Measuring discomfort in patients with dementia. Validity of a Dutch version of the Discomfort Scale--dementia of Alzheimer type (DS-DAT)]. Tijdschr Gerontol Geriatr. 2002;33:257–63 [In Dutch].

Dello Russo C, Di Giulio P, Brunelli C, Dimonte V, Villani D, Renga G, et al. Validation of the Italian version of the Discomfort Scale - Dementia of Alzheimer Type. J Adv Nurs. 2008;64:298–303.

Acknowledgements

BuildCARE is supported by Cicely Saunders International (CSI) and The Atlantic Philanthropies, led by King’s College London, Cicely Saunders Institute, Department of Palliative Care, Policy & Rehabilitation, UK. CI: Higginson. Grant leads: Higginson, McCrone, Normand, Lawlor, Meier, Morrison. Project Co-ordinator/PI: Daveson. Study arm PIs: Pantilat, Selman, Normand, Ryan, McQuillan, Morrison, Daveson. We thank all collaborators & advisors including service-users. BuildCARE members: Emma Bennett, Francesca Cooper, Barbara A Daveson, Susanne de Wolf-Linder, Mendwas Dzingina, Clare Ellis-Smith, Catherine J Evans, Lesley Henson, Irene J Higginson, Bridget Johnston, Paramjote Kaler, Pauline Kane, Lara Klass, Peter Lawlor, Paul McCrone, Regina McQuillan, Diane Meier, Susan Molony, Sean Morrison, Fliss E Murtagh, Charles Normand, Caty Pannell, Steve Pantilat, Karen Ryan, Lucy Selman, Melinda Smith, Katy Tobin, Gao Wei.

Funding

This study was part funded by Cicely Saunders International and The Atlantic Philanthropies, and part funded by the National Institute for Health Research Collaboration for Leadership in Applied Health Research and Care Funding scheme. The Collaboration for Leadership in Applied Health Research and Care (CLAHRC) South London is part of the National Institute for Health Research (NIHR) and is a partnership between King’s Health Partners, St George’s, University of London, and St George’s Healthcare NHS Trust. The views and opinions expressed are those of the authors and not necessarily those of the NHS, the NIHR, MRC, CCF, NETSCC, NIHR Programme Grants for Applied Research or Department of Health. The funding organizations had no role in the design of the study, in the collection, analysis, and interpretation of the data, or in the writing of the manuscript.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

CES, CJE, IJH and BD conceived and designed the study. CES conducted the literature search. CES, CJE, AEB, LAH, MD, PMK, and BD were involved in the analysis and interpretation of data. CES, CJE and BD drafted the manuscript. The study was supervised by CJE, IJH and BD. All authors read and approved the final manuscript.

Additional files

Additional file 1:

PRISMA checklist. (DOC 63 kb)

Additional file 2:

Full search strategy – search strategy used. (DOCX 19 kb)

Additional file 3:

Study details for included studies – population, setting, and who the measure was administered by. (DOCX 24 kb)

Additional file 4:

Psychometric evaluation of measures – psychometric evaluation of all measures. (DOCX 24 kb)

Additional file 5:

Summary of measure details, methods of administration, feasibility, and applicability in care – measure details, length of measure, time taken to complete, method of administration, any training required. (DOCX 43 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Ellis-Smith, C., Evans, C.J., Bone, A.E. et al. Measures to assess commonly experienced symptoms for people with dementia in long-term care settings: a systematic review. BMC Med 14, 38 (2016). https://doi.org/10.1186/s12916-016-0582-x

DOI: https://doi.org/10.1186/s12916-016-0582-x