Abstract

Background

eHealth literacy is a key concept in the implementation of eHealth resources. However, most eHealth literacy definitions and frameworks are designed from the perspective of the individual receiving eHealth care and do not address health care providers’ eHealth literacy or their acceptance of delivering eHealth resources.

Aims

To identify existing research on eHealth literacy domains and measurements, and to identify eHealth literacy scores and associated factors, among hospital health care providers.

Methods

This systematic review was reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 checklist. A systematic literature search was conducted in MEDLINE, Cinahl, Embase, Scopus, PEDro, AMED and Web of Science. Original quantitative studies assessing eHealth literacy among hospital health care providers were included. Three eHealth literacy domains based on the eHealth literacy framework were defined a priori: (1) individual eHealth literacy, (2) interaction with the eHealth system, and (3) access to the system. Pairs of authors independently assessed eligibility, appraised methodological quality and extracted data.

Results

Fourteen publications were included, twelve of which were conducted in non-Western countries. In total, 3,666 health care providers within eleven different professions were included, with nurses being the largest group. Nine of the included publications used the eHealth literacy scale (eHEALS) to measure eHealth literacy, representing the domain of individual eHealth literacy. A minority of the publications covered the domains of interaction with the eHealth system and access to the system. The mean eHEALS scores ranged from 27.8 to 31.7 (possible range 8–40), indicating relatively high eHealth literacy. One study reported desirable eHealth literacy based on the Digital Health Literacy Instrument. Another study reported a relatively high score on the Staff eHealth literacy questionnaire. eHealth literacy was associated with socio-demographic factors, experience with technology, health behaviour and work-related factors.

Conclusions

Health care providers have good individual eHealth literacy. However, more research is needed on the eHealth literacy domains dependent on interaction with the eHealth system and access to the system. Furthermore, most studies were conducted in non-Western countries, and more research is therefore needed in a Western context.

Trial registration

PROSPERO International Prospective Register of Systematic Reviews (CRD42022363039).


Background

Health literacy is described as the individual’s knowledge, confidence and comfort to access, understand, appraise, remember and use information about health and health care [1], and is crucial for enabling health care providers to integrate evidence-based knowledge into their daily practice [2, 3]. Moreover, health literacy is essential for health care providers’ own health and well-being, as well as for that of those around them (e.g. patients admitted to the hospital) [1].

Over the last decade, digital solutions for service provision and new working methods have changed health care providers’ competence requirements [4]. With the growing use of digital technology, the concept of digital health competency has received considerable attention [4,5,6], whereas the concept of electronic health (eHealth) literacy remains largely unexplored among health care providers.

The concept of eHealth literacy was introduced by Norman and Skinner in 2006 and defined as the individual’s ability to seek, find, understand, and appraise health information from electronic sources and apply the knowledge gained to address or solve a health problem. The concept was built on the Lily model and is measured with the eHealth literacy scale (eHEALS) [7]. The Lily model consists of six core literacies that form the basic skills required to optimise individuals’ experiences of eHealth sources [8]. However, as the Lily model was developed for the first generation of eHealth (web 1.0), the skills and confidence needed for digital interaction and social media (web 2.0) are not part of the model [9]. Therefore, as advances in technology offer health care providers and patients new ways to interact with and manage health information and services, other researchers have continued to develop the concept [10,11,12,13,14]. This has led to two definitions that include the possibility of communication through eHealth sources in all contexts of health care [11, 12]. Furthermore, a new comprehensive framework has been developed. The eHealth literacy framework (eHLF) contains seven scales that provide a new way to understand the interaction and relationship between individuals and the system, in addition to individual abilities and resources [14]. These seven scales are divided into three domains: (1) a domain dependent on basic individual eHealth literacy; (2) a domain dependent on how the individual interacts with the eHealth system; and (3) a domain dependent on the system itself (e.g. access to hardware or an internet connection when needed). The interaction between the individual and the system is where the unique aspects of the revised concept of eHealth literacy begin to unfold [14].
Unfortunately, most of these definitions and frameworks for eHealth literacy have been designed from the perspective of the individual receiving eHealth care, and not from that of the health care providers who deliver care using eHealth resources [12].

In its broadest sense, eHealth is concerned with improving the flow of information, through electronic means, to support the delivery of health services and the management of health systems [15]. Despite substantial advances to date, and although health care providers have relatively high digital literacy, challenges in health care providers’ use of eHealth persist [16, 17]. Among these challenges, poor eHealth literacy has been highlighted as a common barrier to the implementation of eHealth resources [18, 19]. That said, the scientific literature underpinning these barriers to technology integration among health care providers is weak [20], and the concept of eHealth literacy among health care providers is almost absent. To our knowledge, two reviews, one systematic review [5] and one scoping review [6], have been published that summarise digital health competencies among health care providers. The scoping review identified three studies assessing eHealth literacy among primary health care providers [6], but did not address eHealth literacy in its discussion section. The more recent systematic review found that the subcategory Self-rated competencies, which contains the concept of eHealth literacy, was assessed in five of 26 studies, all of which used the eHEALS, representing the domain dependent on basic individual eHealth literacy [5]. However, these reviews assessed general digital health competence as an umbrella term, rather than eHealth literacy as a separate, established and defined concept. Furthermore, these reviews did not describe the existing state of eHealth literacy among hospital health care providers, nor its associated factors. Given these gaps in the literature, the aims of this systematic review were:

1. To identify existing research on different eHealth literacy measurements and domains among hospital health care providers.
2. To identify eHealth literacy scores among hospital health care providers.
3. To identify factors associated with eHealth literacy among hospital health care providers.

Methods

Design

A systematic review with a narrative synthesis was used. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines were followed to minimise potential sources of bias [21]. The protocol for this systematic review was registered in the International Prospective Register of Systematic Reviews (PROSPERO) (CRD42022363039) [22].

Eligibility criteria

The specific eligibility criteria defined a priori are presented in Table 1. Studies in which only a subset of the participants was relevant were included if they reported results for that subgroup separately; otherwise, they were excluded.

Search strategy

The systematic search strategy was designed to locate eligible studies published in English and Scandinavian languages. A team of clinical researchers (GB, AW, MHL) and a librarian (LBH) agreed on a search strategy for MEDLINE, which was adapted for use in Cinahl, Embase, Scopus, PEDro, AMED and Web of Science. The databases were searched from inception to November 18th, 2022; we set no limit on the year of publication, as we wanted to describe the entire range of research relevant to our research questions. The search strategy was made available through DataverseNO [23]. Articles identified through the reference lists of the included studies and through hand searches were also considered for inclusion.

Data management

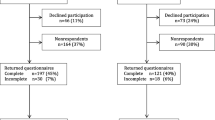

The search results from the different electronic databases were combined in a single EndNote library by the librarian (LBH), who identified and removed duplicates. All search results were subsequently uploaded to Rayyan (Rayyan Systems Inc) for storage and to facilitate blinding during the screening process. All titles and abstracts were screened independently against the predefined inclusion and exclusion criteria by two researchers (MHL and AKW). The full-text versions of potentially relevant articles were obtained and assessed independently for eligibility by the same two researchers (MHL and AKW). Any disagreements were resolved through discussion with a third researcher (GB). Three researchers (GB, AKW and MHL) verified the final list of included studies. The reasons for excluding full-text publications were recorded using the PRISMA 2020 flow diagram [21]. An overview of the selection procedure of reviewed articles is presented in Fig. 1.

Data extraction

One researcher (GB) extracted the data to a standardized data collection form that included the following data: year of publication, country of origin, the aims, study design, time period, sample size, health care profession, context, definitions of health literacy, eHealth literacy domains defined a priori, eHealth literacy measures, and findings related to the research questions of the review. A second researcher (AKW) assessed the data extraction for accuracy. Disagreements were resolved through discussion with a third researcher (MHL).

Quality appraisal

Methodological quality was appraised systematically using the Joanna Briggs Institute (JBI) critical appraisal checklist for analytical cross-sectional studies [24]. The checklist contains eight questions; however, question 3 (Was the exposure measured in a valid and reliable way?) and question 4 (Were objective, standard criteria used for measurement of the condition?) were rated as not applicable to the selected publications, as these studies did not examine any exposures or set out to measure a condition. Hence, we assessed the remaining six questions. Three researchers (GB, MHL and AW) conducted the quality appraisal independently in pairs, and any disagreements were resolved through discussion. Each item was rated “Y” (yes) when it was satisfied, “N” (no) when it was not satisfied, and “U” (unclear) when the study did not provide sufficient information. To capture all relevant studies, methodological quality was not used as an exclusion criterion.
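The rating rule above (questions 3 and 4 excluded, the remaining six items rated Y, N or U) can be expressed as a short per-study tally. This is a minimal sketch under stated assumptions: each study’s ratings are stored as a mapping from JBI question number to a rating, “NA” marks the two excluded questions, and the function name `count_yes` and the example ratings are hypothetical, not data from any included study.

```python
from __future__ import annotations

# Sketch of the per-study JBI tally described above (hypothetical helper).
# Questions 3 and 4 were judged not applicable, leaving six rated questions.
NOT_APPLICABLE = {3, 4}
APPLICABLE = {1, 2, 5, 6, 7, 8}
VALID_RATINGS = {"Y", "N", "U"}  # yes, no, unclear


def count_yes(ratings: dict[int, str]) -> tuple[int, int]:
    """Return (number of 'Y' ratings, number of applicable questions)."""
    applicable = {q: r for q, r in ratings.items() if q not in NOT_APPLICABLE}
    if set(applicable) != APPLICABLE:
        raise ValueError("expected ratings for questions 1, 2 and 5-8")
    for question, rating in applicable.items():
        if rating not in VALID_RATINGS:
            raise ValueError(f"question {question}: expected Y, N or U, got {rating!r}")
    yes = sum(1 for rating in applicable.values() if rating == "Y")
    return yes, len(applicable)


# Hypothetical ratings for a single study (illustration only, not real data).
example = {1: "Y", 2: "Y", 3: "NA", 4: "NA", 5: "N", 6: "U", 7: "Y", 8: "Y"}
print(count_yes(example))  # (4, 6)
```

Treating “U” the same as “N” when counting adequate items mirrors the convention that only a clear “Yes” counts as satisfied; a different convention would change the tallies.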

Data synthesis and analysis

A descriptive summary of the included publications was performed. To answer the first aim, the eHealth literacy scales used in the included studies were operationalised into three major domains defined a priori: (1) domains dependent on basic individual eHealth literacy; (2) domains dependent on how the individual interacts with the eHealth system; and (3) domains dependent on the system (e.g. access to hardware or an internet connection when needed). These three major eHealth literacy domains were based on the eHLF [14]. To answer the second aim, eHealth literacy was described according to the measure used to assess it and compared between health care professions. To answer the third aim, factors associated with eHealth literacy were categorised into groups according to the phenomena being investigated.

Results

Overview

A total of 1,212 publications were identified from the systematic literature search after removal of duplicates. After the first screening, 26 publications were assessed in full text, of which 14 met the inclusion criteria. One study identified from citation searching was assessed as eligible. During the quality appraisal and the data extraction process, one more study was excluded as it did not meet the inclusion criteria for the population. Figure 1 shows the study selection process in the PRISMA 2020 flow diagram [21].
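The selection counts above can be checked with simple arithmetic. The sketch below only restates numbers given in this paragraph; the variable names are our own, and the number of exclusions at each stage is derived by subtraction rather than read from the PRISMA flow diagram.

```python
# Counts reported in the study selection paragraph above.
identified_after_deduplication = 1212
assessed_in_full_text = 26
eligible_from_database_search = 14
eligible_from_citation_search = 1
excluded_during_appraisal_or_extraction = 1

# Exclusions per stage, derived by subtraction (not taken from Fig. 1).
excluded_at_title_abstract = identified_after_deduplication - assessed_in_full_text
excluded_at_full_text = assessed_in_full_text - eligible_from_database_search

# Final number of included publications.
included = (
    eligible_from_database_search
    + eligible_from_citation_search
    - excluded_during_appraisal_or_extraction
)

print(excluded_at_title_abstract)  # 1186
print(excluded_at_full_text)       # 12
print(included)                    # 14
```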

Fig. 1 The PRISMA 2020 flow diagram [21] describing the selection procedure of reviewed articles

Characteristics of included studies

In total, 14 publications from 10 different studies were included. The characteristics of the included studies are presented in Table 2. Thirteen of the publications used a cross-sectional design [25,26,27,28,29,30,31,32,33,34,35,36,37], and one used a longitudinal design with two cross-sectional samples [38]. The latter study assessed eHealth literacy before and after the implementation of an integrated electronic health record (EHR) system. The other included studies did not assess eHealth literacy in relation to a particular type of eHealth source or resource. The publications were conducted in Ethiopia [27, 29, 33,34,35], Turkey [25, 36, 37], South Korea [30, 31], Denmark [38], Iran [26, 28] and Greece [32] over a period from 2015 to 2021 (Fig. 2). In total, 3666 health care providers within 11 different professions were included. Eight publications included health care providers from three or more professions (e.g. nurses, physicians, midwives, laboratory staff) [25, 27,28,29, 33,34,35, 38]. Two publications included health care providers from two different professions, namely nurses and nursing assistants [32], and nurses and physicians [37]. Four publications included nurses as the only health care profession [26, 30, 31, 36]. Overall, approximately two-thirds of the participants were nurses.

eHealth literacy domains

Two publications did not define the concept of eHealth literacy [29, 35], four publications described eHealth literacy in general terms without using an established definition [25, 27, 32, 37], and eight used the established definition of eHealth literacy by Norman and Skinner from 2006 [7, 8]. Nine of the included publications used the eHealth literacy scale (eHEALS) as a measure of eHealth literacy, which represents the domain dependent on basic individual eHealth literacy [25, 26, 30,31,32,33,34, 36, 37]. One publication used the Digital Health Literacy Instrument (DHLI), which represents the domains dependent on basic individual eHealth literacy and on how the individual interacts with the eHealth system [28]. Another study used the Staff eHealth literacy questionnaire (Staff eHLQ), which represents all three domains: basic individual eHealth literacy, how the individual interacts with the eHealth system, and the system itself (e.g. access to hardware or an internet connection when needed) [38]. Two publications used a study-specific questionnaire rather than an established instrument; according to the content of the items, these publications represent the domain dependent on basic individual eHealth literacy [27, 29]. One publication did not specify the tool used to measure eHealth literacy [35].
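The classification reported above can be made explicit as a small lookup table. This is a minimal sketch: the instrument-to-domain assignments and the publication reference numbers are taken from this section, publication [35] is left unclassified because it did not specify its tool, and the variable and function names are our own illustrative choices.

```python
from collections import Counter

# The three eHLF-based domains defined a priori in this review.
DOMAINS = {
    1: "basic individual eHealth literacy",
    2: "interaction with the eHealth system",
    3: "the system itself",
}

# Instrument -> domains it represents, as classified in this section.
INSTRUMENT_DOMAINS = {
    "eHEALS": {1},
    "DHLI": {1, 2},
    "Staff eHLQ": {1, 2, 3},
    "study-specific COVID-19 questionnaire": {1},
}

# Publication reference number -> instrument; None = tool not specified.
PUBLICATIONS = {
    25: "eHEALS", 26: "eHEALS", 30: "eHEALS", 31: "eHEALS", 32: "eHEALS",
    33: "eHEALS", 34: "eHEALS", 36: "eHEALS", 37: "eHEALS",
    28: "DHLI",
    38: "Staff eHLQ",
    27: "study-specific COVID-19 questionnaire",
    29: "study-specific COVID-19 questionnaire",
    35: None,
}


def domain_coverage(publications):
    """Count how many publications cover each domain."""
    counts = Counter()
    for instrument in publications.values():
        if instrument is None:
            continue  # cannot be classified without a known instrument
        for domain in INSTRUMENT_DOMAINS[instrument]:
            counts[domain] += 1
    return counts


coverage = domain_coverage(PUBLICATIONS)
for domain, label in DOMAINS.items():
    print(f"Domain {domain} ({label}): {coverage[domain]} publications")
```

Counted this way, 13 publications cover the individual domain, 2 cover interaction with the eHealth system and 1 covers the system domain, which is the imbalance the Discussion returns to.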

eHealth literacy among hospital health care providers

Overall, the mean eHEALS scores in the studies ranged from 27.8 to 31.7 (possible range 8–40). The studies assessing eHealth literacy among nurses in South Korea [30], Turkey [36] and Iran [26] reported mean eHEALS scores of 28.2, 29.9 and 31.7, respectively. A study from Greece, which included nurses and nursing assistants, reported a mean eHEALS score of 30.7 [32]. A study from Turkey, which included nurses and physicians, reported a mean eHEALS score of 28.7, with a statistically significant difference between physicians (mean score 30.7) and nurses (mean score 27.6) [37]. The studies from Ethiopia, which included health care providers from three or more professions, reported mean eHEALS scores of 27.8 [33] and 29.2 [34]. These two studies also categorised eHealth literacy as high or low, and reported that 59% [34] and 70% [33] of the health care providers had high eHealth literacy.
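For readers unfamiliar with the eHEALS, the possible range of 8 to 40 follows from its structure: eight items, each rated on a five-point Likert scale (1 = strongly disagree to 5 = strongly agree), summed to a total score. The sketch below assumes that scoring rule. It also re-expresses the reported study means as a percentage of the possible range (a “percent of maximum possible” rescaling that the included studies did not themselves report), which shows how far above the scale midpoint of 24 the means lie. Function names are illustrative.

```python
from __future__ import annotations

# eHEALS structure assumed here: 8 items, each scored 1-5, summed.
EHEALS_ITEMS = 8
LIKERT_MIN, LIKERT_MAX = 1, 5
SCORE_MIN = EHEALS_ITEMS * LIKERT_MIN  # 8
SCORE_MAX = EHEALS_ITEMS * LIKERT_MAX  # 40


def eheals_total(responses: list[int]) -> int:
    """Sum eight Likert responses (1-5) into an eHEALS total score (8-40)."""
    if len(responses) != EHEALS_ITEMS:
        raise ValueError(f"expected {EHEALS_ITEMS} responses, got {len(responses)}")
    if any(not LIKERT_MIN <= r <= LIKERT_MAX for r in responses):
        raise ValueError("each response must be between 1 and 5")
    return sum(responses)


def percent_of_maximum(score: float) -> float:
    """Rescale a total (or mean) eHEALS score to 0-100 % of the possible range."""
    return 100 * (score - SCORE_MIN) / (SCORE_MAX - SCORE_MIN)


# Mean eHEALS scores reported by the included publications (from the text).
reported_means = {
    "South Korea, nurses [30]": 28.2,
    "Turkey, nurses [36]": 29.9,
    "Iran, nurses [26]": 31.7,
    "Greece, nurses and nursing assistants [32]": 30.7,
    "Turkey, nurses and physicians [37]": 28.7,
    "Ethiopia [33]": 27.8,
    "Ethiopia [34]": 29.2,
}

for label, mean in reported_means.items():
    print(f"{label}: {mean} ({percent_of_maximum(mean):.1f} % of range)")
print("range:", min(reported_means.values()), "to", max(reported_means.values()))
```

On this rescaling the study means fall between roughly 62% and 74% of the possible range, which is one way to read “relatively high” self-perceived eHealth literacy.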

The study that used the Digital Health Literacy Instrument (DHLI) reported that the health care providers had very desirable literacy on the scales Protecting privacy, Operational skills, Navigation skills and Information searching, and a desirable level on Adding content, Determining data relevancy and Evaluating data reliability [28].

The study that used the Staff eHealth literacy questionnaire (Staff eHLQ) reported relatively high scores on Staff eHLQ scales 1 to 3 (Using technology to process health information, Understanding of health concepts and language, and Ability to actively engage with digital services), and lower scores on scales 4 to 7 (Feel safe and in control, Motivated to engage with digital services, Access to digital services that work, and Digital services that suit individual needs). Compared with medical secretaries, nursing assistants and nurses, physicians scored higher on scale 2 (Understanding of health concepts and language) but lower on scales 6 and 7 (Access to digital services that work, and Digital services that suit individual needs) [38].

Two studies assessed eHealth literacy in relation to COVID-19 using a questionnaire tailored for this purpose (e.g. “I know how to use the internet to answer my questions about the COVID-19 pandemic”). They reported that 40 to 50% of health care providers had high eHealth literacy [27, 29].

Factors associated with eHealth literacy

A higher eHEALS score was associated with demographic characteristics such as age, being married and having a higher level of education [26, 37]. Moreover, a higher eHEALS score was associated with better health-promoting behaviour [30, 31, 36] and with work-related factors, such as better collegial nurse-physician relationships and nurse participation in hospital affairs [32]. Those with a higher eHEALS score had better computer access and knowledge, and perceived digital tools as useful and easy to use [34, 35]. Finally, perceived cyberchondria explained 12% of the total variance in eHealth literacy [25].

Mean eHealth literacy measured using the DHLI differed significantly by health care providers’ level of education, hospital affiliation and job category [28]. The study that used the Staff eHLQ reported that males scored higher than females on Staff eHLQ scale 5 (Motivated to engage with digital services). Moreover, Staff eHLQ scales 1–4 (Using technology to process health information, Understanding of health concepts and language, Ability to actively engage with digital services, and Feel safe and in control) were negatively correlated with age. Finally, Staff eHLQ scores were positively correlated with experiences of quick and easy access to information, data sharing that reduces double registration, and stable IT systems [38].

The studies assessing eHealth literacy in relation to COVID-19 reported that higher eHealth literacy was associated with a higher educational level, higher income, access to a smartphone, high computer literacy and perception of digital tools as useful and easy to use. These participants also had a favourable attitude to eHealth, good knowledge of eHealth and a higher frequency of internet use [27, 29].

Quality appraisal

To enhance trustworthiness, all studies were appraised using the JBI critical appraisal checklist for analytical cross-sectional studies. On average, the studies were rated adequate (“Yes”) on four of the six applicable questions, although with some variation. One study was rated adequate on all six questions [30], and one on only two of the six questions [35]. Some questions revealed quality problems: the criteria for inclusion (question 1) were clearly defined in only half of the included articles, confounding factors (question 5) were identified in four articles, and strategies to deal with confounding factors (question 6) were stated in five of the 14 articles. On a positive note, the study subjects were described in 12 of the 14 included articles (question 2), the outcomes were measured in a valid and reliable way in 11 (question 7), and appropriate statistical analysis was used in all articles (question 8) (Table 3).
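The average of about four “Yes” ratings per study can be checked against the per-question counts reported above. In this sketch, “only half of the included articles” for question 1 is taken to mean 7 of 14, which is an assumption; the other counts are as stated in the text, and the variable names are our own.

```python
N_ARTICLES = 14

# Articles rated "Yes" on each applicable JBI question (3 and 4 were not applicable).
yes_per_question = {
    1: 7,   # "only half of the included articles" (assumed to be 7 of 14)
    2: 12,
    5: 4,
    6: 5,
    7: 11,
    8: 14,
}

total_yes = sum(yes_per_question.values())
mean_yes_per_article = total_yes / N_ARTICLES

print(total_yes)                       # 53
print(round(mean_yes_per_article, 1))  # 3.8
```

A mean of about 3.8 “Yes” ratings out of six per article is consistent with the summary of adequate scores on four of the six applicable questions.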

Discussion

Only just over half of the publications (8 of 14) presented an established definition of eHealth literacy, which is important for how the concept is operationalised and measured (e.g. construct validity). The findings showed that health care providers have good individual eHealth literacy, primarily measured with the first-generation patient-reported outcome measure for eHealth literacy (the eHEALS), which represents the domain dependent on basic individual eHealth literacy. Assessments of the domain dependent on how the individual interacts with the eHealth system and of the domain dependent on the system itself (e.g. access to hardware that works) were almost absent. Moreover, the studies were conducted in six different countries, primarily non-Western. This, together with the low number of studies published on this topic in the era of eHealth development, indicates that eHealth literacy among hospital health care providers is underexplored globally, particularly in Western countries. This matters because eHealth literacy is key to seeking health information online for appropriate decision-making [39]. Therefore, eHealth literacy resources must be available to support hospital health care providers in accessing, remembering, understanding and using up-to-date evidence-based knowledge, and in helping patients make health-related decisions through eHealth sources in their daily practice. Increased knowledge about factors that increase eHealth literacy can guide better health care practice and improve patient safety in the era of eHealth. Therefore, to achieve equality in health care services in the era of eHealth, national governments need to place eHealth literacy among hospital health care providers on the agenda.

As the eHEALS is based on the Lily model, which was developed for the first generation of eHealth back in 2006, these publications may not provide the knowledge needed to implement eHealth resources that enable digital interactions with patients [12, 14]. Approximately seven eHealth literacy instruments were available in 2021 [40]. Among these, the DHLI and the eHLQ (used in two publications included in this systematic review [28, 38]) were described as second-generation measures with a broader scope, suitable for individuals living in the web 2.0 era of eHealth [40]. However, these measures were available in only a few languages, whereas the eHEALS has been translated and assessed for psychometric properties in at least 17 languages [40]. Thus, one reason for the large number of publications using the eHEALS may be that second-generation eHealth literacy measures were not available in the languages in which the studies were carried out.

Furthermore, a discrepancy was identified between the definitions and measures used in many of the publications. A previous systematic review has also reported this lack of established eHealth literacy definitions [40], and a discrepancy between definitions and measures has also been reported for the concept of health literacy [41]. Defining the concept to be measured is the most basic and important starting point, as it determines the scope of the instrument being developed and affects its measurement properties [40]. Therefore, the content validity, construct validity and responsiveness of the measures used to assess eHealth literacy among hospital health care providers can be questioned. These findings highlight the importance of developing new definitions that conceptualise eHealth literacy among health care providers and of operationalising the concept into new and broader measures. This would support more reliable and valid assessments of eHealth literacy among health care providers and could advance the research field. Of note, the Staff eHLQ is a modified version of the eHLQ. By shifting the respondent’s perspective from themselves to their interaction with patients, this measure should be better adapted to health care providers who deliver eHealth resources, and it is the first questionnaire with this approach. However, further evidence is needed on its psychometric properties [38].

The hospital health care providers reported mean eHEALS scores of between 27.8 and 31.7 (possible range 8–40), which represents relatively high self-perceived eHealth literacy, comparable to or higher than the mean eHEALS scores reported by younger adults in South Korea who are active online users (28.06) [42] and by a general Dutch population (27.6) [43]. Moreover, the findings of this systematic review showed that eHealth literacy measured using the eHEALS is associated with health-promoting behaviour in the studies conducted in Turkey and South Korea [30, 31, 36]. This association has also been shown in a previous systematic review of eHealth literacy across different age groups (from teenagers to older adults) and populations, regardless of disease status [44]. This is interesting, as health care providers play an important role in encouraging adherence to public health guidelines [45]. Additionally, non-adherence to lifestyle-related health guidelines among nurses in Scotland and England is reported to be high, which raises concerns about the effectiveness of health promotion during patient interactions [45]. The level of eHealth literacy is one of the main factors in coping with information overload, including false or misleading information in eHealth sources (e.g. the infodemic during the COVID-19 pandemic). Increasing eHealth literacy among health care providers could help to avoid the negative consequences of false or misleading information in daily decision-making [39]. However, further investigation is needed to better understand this association, as eHealth interventions will increasingly be used in the future to change negative health behaviour and to promote a healthy lifestyle [46].

Interestingly, eHealth literacy was higher among physicians than among other health care providers. This could be related to physicians’ prior digital training and higher level of education, which may be associated with higher eHealth literacy [38]. These results support previous research reporting that health care providers with a higher level of education are more willing to improve their digital health competence [4, 47]. This indicates that hospital health care providers’ eHealth literacy strengths and limitations should be considered when developing eHealth resources, and that measures responding to health care providers’ eHealth literacy should be implemented to improve health care services in the era of eHealth.

It is a paradox that the countries that have measured eHealth literacy among hospital health care providers are those assumed to have lower awareness and utilisation of eHealth technology, such as Ethiopia, rather than the countries that have implemented eHealth on a larger scale. Importantly, hospital health care providers in Ethiopia with a higher eHealth literacy score also had better computer access and knowledge, and perceived digital tools as useful and easy to use [27, 29, 34, 35]. Furthermore, as the World Health Organization has stated that eHealth has been implemented without a careful examination of the evidence base [48, 49], inadequate knowledge of health care providers’ eHealth literacy may be one of the reasons why Western countries have faced barriers in implementation processes. Thus, knowledge of health care providers’ eHealth literacy seems highly relevant for countries with lower economic performance, such as Iran and Ethiopia, when developing eHealth programmes to improve the delivery of health care services [50]. However, this knowledge cannot be generalised to Western countries, where eHealth infrastructure exists to a very different extent than in non-Western countries. Moreover, patterns of eHealth access, use and engagement are reported to vary across populations in Europe: they tend to be more widespread in urban areas, and less so among people from ethnic minorities and those facing language barriers [51]. This means that the eHealth literacy of health care providers may vary across continents, countries and regions, and may therefore need to be addressed differently.

Strengths and limitations

The strength of our systematic review lies in its methodological rigour. We used a systematic approach to data collection, including a broad search strategy in seven databases, developed in close collaboration with an experienced research librarian. The PRISMA guidelines were followed to minimise potential sources of bias. In addition, study selection and quality appraisal were conducted independently by two researchers, and the data extracted by one researcher were checked for accuracy by a second. One limitation is that the review only includes quantitative studies; qualitative studies might have added valuable knowledge to the field.

Furthermore, the measures used are based on a Western conceptualisation and operationalisation of eHealth literacy, which may not harmonise with the worldviews of all participants in the studies using these instruments [52]. Therefore, any generalisation about eHealth literacy must be made with caution, as context is crucial and factors such as those described above can influence the results and lead to bias. Our review was also restricted to studies published in English and Scandinavian languages, so relevant studies published in other languages may have been missed. A further limitation is that no meta-analysis was performed to explore pooled associations across the studies. However, a meta-analysis was considered to be of limited utility, as the included studies were heterogeneous in execution and choice of outcome measures. Reporting results from studies regardless of their quality appraisal may also be seen as a limitation.

Conclusions

The results of this systematic review show that health care providers have good individual eHealth literacy. However, more research using more comprehensive measures is needed on the eHealth literacy domains dependent on how the individual interacts with the eHealth system and on the system itself. Studies are also needed to measure the eHealth literacy of multidisciplinary professional groups in the hospital context. Furthermore, most of the studies were conducted in non-Western countries, and more research of higher methodological quality is needed in a Western context.

Data Availability

The search strategy is available through DataverseNO.

Abbreviations

- DHLI: Digital Health Literacy Instrument
- eHealth: Electronic health
- eHEALS: eHealth literacy scale
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- PROSPERO: International Prospective Register of Systematic Reviews
- Staff eHLQ: Staff eHealth literacy questionnaire

References

World Health Organization. Health literacy development for the prevention and control of noncommunicable diseases: volume 2. A globally relevant perspective. Geneva: World Health Organization; 2022.

Yusefi A, Ebrahim Z, Bastani P, Najibi M, Radinmanesh M, Mehrtak M. Health literacy status and its relationship with quality of life among nurses in teaching hospitals of Shiraz University of Medical Sciences. Iran J Nurs Midwifery Res. 2019;24(1):73–9. https://doi.org/10.4103/ijnmr.IJNMR_205_17.

Mor-Anavy S, Lev-Ari S, Levin-Zamir D. Health literacy, primary care health care providers, and communication. Health Lit Res Pract. 2021;5(3):e194–e200. https://doi.org/10.3928/24748307-20210529-01.

Jarva E, Oikarinen A, Andersson J, Tomietto M, Kääriäinen M, Mikkonen K. Healthcare professionals’ digital health competence and its core factors; development and psychometric testing of two instruments. Int J Med Inform. 2023;171:104995. https://doi.org/10.1016/j.ijmedinf.2023.104995.

Longhini J, Rossettini G, Palese A. Digital Health Competencies among Health Care Professionals: systematic review. J Med Internet Res. 2022;24(8):e36414. https://doi.org/10.2196/36414.

Jimenez G, Spinazze P, Matchar D, Koh Choon Huat G, van der Kleij RMJJ, Chavannes NH, et al. Digital health competencies for primary healthcare professionals: a scoping review. Int J Med Inform. 2020;143:104260. https://doi.org/10.1016/j.ijmedinf.2020.104260.

Norman CD, Skinner HA. eHEALS: the eHealth literacy scale. J Med Internet Res. 2006;8(4):e27. https://doi.org/10.2196/jmir.8.4.e27.

Norman CD, Skinner HA. eHealth literacy: essential skills for consumer health in a networked world. J Med Internet Res. 2006;8(2):1–10. https://doi.org/10.2196/jmir.8.2.e9.

Norman C. eHealth literacy 2.0: problems and opportunities with an evolving concept. J Med Internet Res. 2011;13(4):1–4.

Chan CV, Kaufman DR. A framework for characterizing eHealth literacy demands and barriers. J Med Internet Res. 2011;13(4):e94. https://doi.org/10.2196/jmir.1750.

Bautista JR. From solving a Health Problem to Achieving Quality of Life: redefining eHealth literacy. J Lit Technol. 2015;16(2).

Griebel L, Enwald H, Gilstad H, Pohl AL, Moreland J, Sedlmayr M. eHealth literacy research-quo vadis? Inf Health Soc Care. 2018;43(4):427–42. https://doi.org/10.1080/17538157.2017.1364247.

van der Vaart R, Drossaert C. Development of the digital health literacy instrument: measuring a broad spectrum of Health 1.0 and Health 2.0 skills. J Med Internet Res. 2017;19(1):e27. https://doi.org/10.2196/jmir.6709.

Norgaard O, Furstrand D, Klokker L, et al. The e-health literacy framework: a conceptual framework for characterizing e-health users and their interaction with e-health systems. Knowl Manag E-Learn. 2015;7(4):522–40.

World Health Organization. National eHealth Strategy Toolkit. 2012. http://apps.who.int/iris/bitstream/handle/10665/75211/9789241548465_eng.pdf?sequence=1.

Booth RG, Strudwick G, McBride S, O’Connor S, Solano López AL. How the nursing profession should adapt for a digital future. BMJ. 2021;373:n1190. https://doi.org/10.1136/bmj.n1190.

Kuek A, Hakkennes S. Healthcare staff digital literacy levels and their attitudes towards information systems. Health Inf J. 2020;26(1):592–612. https://doi.org/10.1177/1460458219839613.

Brørs G, Norman CD, Norekvål TM. Accelerated importance of eHealth literacy in the COVID-19 outbreak and beyond. Eur J Cardiovasc Nurs. 2020;19(6):458–61. https://doi.org/10.1177/1474515120941307.

Schreiweis B, Pobiruchin M, Strotbaum V, Suleder J, Wiesner M, Bergh B. Barriers and facilitators to the implementation of eHealth Services: systematic literature analysis. J Med Internet Res. 2019;21(11):e14197. https://doi.org/10.2196/14197.

Brown J, Pope N, Bosco AM, Mason J, Morgan A. Issues affecting nurses’ capability to use digital technology at work: an integrative review. J Clin Nurs. 2020;29(15–16):2801–19. https://doi.org/10.1111/jocn.15321.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71. https://doi.org/10.1136/bmj.n71.

National Institute for Health Research. International prospective register of systematic reviews (PROSPERO). https://www.crd.york.ac.uk/prospero/display_record.php?ID=CRD42022363039. Accessed 20 May 2018.

Brørs G, Larsen HM, Hølvold Benjaminsen L, Wahl KA. Replication Data for: eHealth literacy among hospital health care providers: a systematic review. DataverseNO. 2023. https://doi.org/10.18710/VTTH2P.

Moola S, Munn Z, Tufanaru C, Aromataris E, Sears K, Sfetcu R, Currie M, et al. Chapter 7: Systematic reviews of etiology and risk. In: Aromataris E, Munn Z, editors. JBI Manual for Evidence Synthesis. JBI. 2020. https://doi.org/10.46658/JBIMES-20-08.

Özer Ö, Özmen S, Özkan O. Investigation of the effect of cyberchondria behavior on e-health literacy in healthcare workers. Hosp Top. 2021:1–9. https://doi.org/10.1080/00185868.2021.1969873.

Isazadeh M, Asadi ZS, Badiani E, Taghizadeh MR. Electronic health literacy level in nurses working at selected military hospitals in Tehran in 2019. Ann Mil Health Sci Res. 2020;17(4):e99377. https://doi.org/10.5812/amh.99377.

Ahmed MH, Guadie HA, Ngusie HS, Teferi GH, Gullslett MK, Hailegebreal S, et al. Digital Health literacy during the COVID-19 pandemic among Health Care Providers in Resource-Limited Settings: cross-sectional study. JMIR Nurs. 2022;5(1):e39866. https://doi.org/10.2196/39866.

Alipour J, Payandeh A. Assessing the level of digital health literacy among healthcare workers of teaching hospitals in the southeast of Iran. Inf Med Unlocked. 2022;29:100868. https://doi.org/10.1016/j.imu.2022.100868.

Chereka AA, Demsash AW, Ngusie HS, Kassie SY. Digital health literacy to share COVID-19 related information and associated factors among healthcare providers worked at COVID-19 treatment centers in Amhara region, Ethiopia: a cross-sectional survey. Inf Med Unlocked. 2022;30:100934. https://doi.org/10.1016/j.imu.2022.100934.

Cho H, Han K, Park BK. Associations of eHealth literacy with health-promoting behaviours among hospital nurses: a descriptive cross-sectional study. J Adv Nurs. 2018;74(7):1618–27. https://doi.org/10.1111/jan.13575.

Gartrell K, Han K, Trinkoff A, Cho H. Three-factor structure of the eHealth literacy scale and its relationship with nurses’ health-promoting behaviours and performance quality. J Adv Nurs. 2020;76(10):2522–30. https://doi.org/10.1111/jan.14490.

Kritsotakis G, Andreadaki E, Linardakis M, Manomenidis G, Bellali T, Kostagiolas P. Nurses’ ehealth literacy and associations with the nursing practice environment. Int Nurs Rev. 2021;68(3):365–71. https://doi.org/10.1111/inr.12650.

Shiferaw KB, Mehari EA. Internet use and eHealth literacy among health-care professionals in a resource limited setting: a cross-sectional survey. Adv Med Educ Pract. 2019;10:563–70. https://doi.org/10.2147/amep.S205414.

Tesfa GA, Yehualashet DE, Ewune HA, Zemeskel AG, Kalayou MH, Seboka BT. eHealth literacy and its Associated factors among Health Professionals during the COVID-19 pandemic in Resource-Limited Settings: cross-sectional study. JMIR Form Res. 2022;6(7):e36206. https://doi.org/10.2196/36206.

Tesfa GA, Kalayou MH, Zemene W. Electronic Health-Information Resource utilization and its Associated factors among Health Professionals in Amhara Regional State Teaching Hospitals, Ethiopia. Adv Med Educ Pract. 2021;12:195–202. https://doi.org/10.2147/amep.S289212.

Yoğurtcu H, Ozturk Haney M. The relationship between e-health literacy and health-promoting behaviors of turkish hospital nurses. Glob Health Promot. 2022;17579759221093389. https://doi.org/10.1177/17579759221093389.

Şayık D, Uçan A. Determination of anxiety and e-Health literacy levels and related factors in physicians and nurses involved in the treatment and care of COVID-19 patients. ESTUDAM Public Health Journal. 2022;7(2):340–50. https://doi.org/10.35232/estudamhsd.1065427.

Kayser L, Karnoe A, Duminski E, Jakobsen S, Terp R, Dansholm S, et al. Health Professionals’ eHealth literacy and system experience before and 3 months after the implementation of an Electronic Health Record System: longitudinal study. JMIR Hum Factors. 2022;9(2):e29780. https://doi.org/10.2196/29780.

Assaye BT, Kassa M, Belachew M, Birhanu S, Worku A. Association of digital health literacy and information-seeking behaviors among physicians during COVID-19 in Ethiopia: a cross-sectional study. Digit Health. 2023;9:20552076231180436. https://doi.org/10.1177/20552076231180436.

Lee J, Lee E-H, Chae D. eHealth literacy Instruments: systematic review of Measurement Properties. J Med Internet Res. 2021;23(11):e30644. https://doi.org/10.2196/30644.

Urstad KH, Andersen MH, Larsen MH, Borge CR, Helseth S, Wahl AK. Definitions and measurement of health literacy in health and medicine research: a systematic review. BMJ open. 2022;12(2):e056294. https://doi.org/10.1136/bmjopen-2021-056294.

Chung S, Park BK, Nahm ES. The korean eHealth literacy scale (K-eHEALS): reliability and validity testing in younger adults recruited online. J Med Internet Res. 2018;20(4):e138. https://doi.org/10.2196/jmir.8759.

van der Vaart R, van Deursen AJ, Drossaert CH, Taal E, van Dijk JA. Does the eHealth literacy scale (eHEALS) measure what it intends to measure? Validation of a dutch version of the eHEALS in two adult populations. J Med Internet Res. 2011;13(4):e86. https://doi.org/10.2196/jmir.1840.

Kim K, Shin S, Kim S, Lee E. The relation between eHealth literacy and health-related behaviors: systematic review and Meta-analysis. J Med Internet Res. 2023;25:e40778. https://doi.org/10.2196/40778.

Schneider A, Bak M, Mahoney C, Hoyle L, Kelly M, Atherton IM, et al. Health-related behaviours of nurses and other healthcare professionals: a cross-sectional study using the Scottish Health Survey. J Adv Nurs. 2019;75(6):1239–51. https://doi.org/10.1111/jan.13926.

Chatterjee A, Prinz A, Gerdes M, Martinez S. Digital Interventions on healthy Lifestyle Management: systematic review. J Med Internet Res. 2021;23(11):e26931. https://doi.org/10.2196/26931.

Jarva E, Oikarinen A, Andersson J, Tuomikoski AM, Kääriäinen M, Meriläinen M, et al. Healthcare professionals’ perceptions of digital health competence: a qualitative descriptive study. Nurs Open. 2022;9(2):1379–93. https://doi.org/10.1002/nop2.1184.

Tromp J, Jindal D, Redfern J, Bhatt A, Séverin T, Banerjee A, et al. World Heart Federation Roadmap for Digital Health in Cardiology. Glob Heart. 2022;17(1):61. https://doi.org/10.5334/gh.1141.

World Health Organization. WHO guideline: recommendations on digital interventions for health system strengthening. Geneva: World Health Organization; 2019. https://www.who.int/publications/i/item/9789241550505.

Wubante SM, Tegegne MD, Melaku MS, Kalayou MH, Tarekegn YA, Tsega SS, et al. eHealth literacy and its associated factors in Ethiopia: systematic review and meta-analysis. PLoS ONE. 2023;18(3):e0282195. https://doi.org/10.1371/journal.pone.0282195.

World Health Organization. Equity within digital health technology within the WHO European Region: a scoping review. 2022.

Osborne RH, Cheng CC, Nolte S, Elmer S, Besancon S, Budhathoki SS, et al. Health literacy measurement: embracing diversity in a strengths-based approach to promote health and equity, and avoid epistemic injustice. BMJ Global Health. 2022;7(9):e009623. https://doi.org/10.1136/bmjgh-2022-009623.

Acknowledgements

The authors are grateful for the assistance provided by Lise Marie Blomseth for the development of Fig. 2.

Funding

Not applicable.

Open access funding provided by Norwegian University of Science and Technology.

Author information

Contributions

GB was responsible for study conception. GB developed the systematic review protocol and registered it in PROSPERO. MHL, LBH and AKW contributed to the development of the protocol. LBH conducted the literature search. MHL and AKW independently screened titles and abstracts and assessed full-text versions. GB, MHL and AKW independently conducted the quality appraisal in pairs. GB extracted the data into a standardized data collection form, wrote the first draft of the manuscript and prepared the figures. MHL, LBH and AKW revised the manuscript critically. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Brørs, G., Larsen, M.H., Hølvold, L.B. et al. eHealth literacy among hospital health care providers: a systematic review. BMC Health Serv Res 23, 1144 (2023). https://doi.org/10.1186/s12913-023-10103-8

DOI: https://doi.org/10.1186/s12913-023-10103-8