Abstract

Background

An increasing number of countries are using or planning to use quality indicators (QIs) in residential long-term care. Knowledge regarding the current state of evidence on usage and methodological soundness of publicly reported clinical indicators of quality in nursing homes is needed. The study aimed to answer the questions: 1) Which health-related QIs for residents in long-term care are currently publicly reported internationally? and 2) What is the methodological quality of these indicators?

Methods

A systematic search was conducted in the electronic databases PubMed, CINAHL and Embase in October 2019 and last updated on August 31st, 2022. Grey literature was also searched. We used the Appraisal of Indicators through Research and Evaluation (AIRE) instrument for the methodological quality assessment of the identified QIs.

Results

Of 23,344 identified records, 22 articles and one report describing 21 studies met the inclusion criteria. Additionally, we found 17 websites publishing information on QIs. We identified eight countries publicly reporting a total of 99 health-related QIs covering 31 themes. Each country used between six and 31 QIs. The most frequently reported indicators concerned pressure ulcers, falls, physical restraints, and weight loss. For most QI sets, we found basic information regarding, e.g., purpose, definition of the indicators, risk-adjustment, and stakeholders’ involvement in QI selection. Little up-to-date information was found regarding the validity, reliability and discriminative power of the QIs. Only the Australian indicator set reached high methodological quality, defined as scores of 50% or higher in all four AIRE instrument domains.

Conclusions

Little information is available to the public and researchers for the evaluation of a large number of publicly reported QIs in the residential long-term care sector. Better reporting is needed on the methodological quality of QIs in this setting, whether they are meant for internal quality improvement or provider comparison.


Background

Due to demographic changes, residential long-term care (RLTC) institutions are increasingly challenged by growing numbers of older residents with complex care needs and a dwindling supply of trained staff [1]. The share of people aged over 80 years is expected to double by 2050 [2]. Simultaneously, the number of RLTC workers per 100 people aged 65 and over has stagnated or decreased in many countries, raising concerns about the capacity to meet care needs in the coming years [3]. These challenges make monitoring and assessing quality in RLTC crucial. We understand RLTC as any type of setting where older adults reside and receive 24-hour formal long-term care services, i.e., from paid care staff, with the expectation of a long stay [4].

Considerable public and private spending on health care results in an increased demand for transparency and accountability for the quality of care provided [2, 5]. Accordingly, quality indicators (QIs) are increasingly used internationally to measure, report and track quality of care over time. In the U.S., for example, the basis for nationwide measurement was laid by the 1987 Omnibus Budget Reconciliation Act (OBRA), passed in response to reports of quality problems in RLTC, which mandated a comprehensive assessment using a Minimum Data Set (MDS). Based on the MDS, an array of QIs was developed, tested and implemented to initiate quality improvement in the context of the Nursing Home Case Mix and Quality Demonstration Project of the Centers for Medicare & Medicaid Services (CMS), which started in 1996 and linked funding schemes to QI measurement [6, 7]. QIs can be used to identify potential quality problems or to guide quality improvement initiatives [8, 9]. Publicly reporting them allows benchmarking between health care institutions or against established thresholds. QIs can therefore be a valuable source of information for health care providers, residents, insurers, governments and researchers. Although QIs are not absolute measures of quality, they can reflect aspects of it by describing desired or undesired structures, processes and outcomes [9]. To be usable and useful, QIs need to meet minimum methodological standards: they must be carefully developed to ensure that they accurately and consistently measure what they are supposed to measure. To be accepted as valid measures [10], QIs should cover relevant subjects and be feasible for use in practice. If they are intended for provider comparison, it is important that they show sufficient discriminative power and that risk-adjustment is applied to account for between-provider differences, e.g., in residents’ case-mix [11].
Finally, QIs need to be comprehensible to the public so that the information can be correctly interpreted. Given its importance, information on the methodological quality of QIs should be publicly available to allow correct interpretation of the results and international comparisons. This includes information about their development, definitions, measurement, risk-adjustment and measurement properties (e.g., validity, reliability and discriminative power).

Two published reviews have considered the methodological quality of QIs used in RLTC. In 2009, Nakrem et al. [12] investigated the development descriptions and validity testing of national QIs obtained from peer-reviewed and grey literature in a convenience sample of seven countries. This review included indicators considered nursing-sensitive, but not necessarily publicly reported. Hutchinson et al. (2010) [13] systematically examined the evidence for the validity and reliability of QIs based on the Resident Assessment Instrument - Minimum Data Set (RAI-MDS) 2.0. Although several countries derive their QIs from RAI-MDS data, both the publicly reported RAI-MDS indicators and the instrument itself have evolved over the last 10 years.

With the launch of national QIs in 2019, Switzerland is one of the countries that has recently started to measure quality in RLTC, and its results have now been published for the first time. The initial QI set comprised six QIs and is expected to be expanded [11]. Since an increasing number of countries are using or planning to use QIs to measure quality in RLTC, knowledge regarding the current state of evidence on the usage and quality of publicly reported health-related QIs is needed. By health-related QIs, we mean indicators concerning the process of health care (e.g., medication review) or clinical outcomes of the residents, such as pain or falls. To inform choices regarding possible themes of QI measurement and to provide an update on the current state of evidence, this systematic literature review aimed to answer the following questions: 1) Which health-related QIs for RLTC are currently publicly reported internationally? and 2) What is the methodological quality of these indicators?

Methods

Search strategy

A systematic search was conducted in the electronic databases PubMed, CINAHL and Embase in October 2019 and updated twice, most recently on August 31st, 2022. We constructed a search string including Medical Subject Headings (MeSH) and keywords related to the concepts of RLTC and QIs or public reporting, connected with appropriate Boolean operators (see Additional file 1). We also tracked the references of included articles to identify further relevant studies. Additionally, we searched for grey literature on organizational websites mentioned in identified studies, as well as on websites recommended by experts or identified when screening published reports. Grey literature was searched in English, German, French, and Spanish, and electronic translation was used to screen websites in other languages. If the published literature on publicly reported QIs was scarce or unclear, we contacted the reports’ authors or website owners via e-mail for further information. Additional file 2 provides a list of consulted websites and contacted institutions.

Inclusion and exclusion criteria

Based on preliminary inclusion and exclusion criteria, the first author screened the titles and abstracts of around 40 papers to refine the criteria. In discussion with the last author, the final set of criteria was developed, with refinements in the definition of RLTC (e.g., no short-stay residents) and target population (e.g., only QIs including all residents and not subgroups such as those receiving palliative or rehabilitative care). In the end, we included published primary studies and grey literature (e.g., government reports) that described the development, testing or measurement of publicly reported health-related QIs in RLTC. QIs were included if we found information that they were publicly reported at the time of the review at any level (e.g., facility, regional, national), on a mandatory or voluntary basis. The QIs had to be related to the process of health care or to health-related resident outcomes. We excluded QIs related to quality of life, such as satisfaction with care services; structural QIs, such as staffing levels or financing; and QIs designed specifically for short-term or specialized care, such as rehabilitative or palliative care, since the latter cannot be applied to the general population of residents. We also excluded editorials, comments and letters to the editor.

Screening and data extraction

Identified studies were entered and managed in EndNote, and duplicates were removed. One author (MO) screened titles and abstracts for relevance according to the inclusion criteria, and non-eligible studies were removed. Potentially eligible articles underwent full-text screening (MO) for inclusion. Doubts about inclusion were clarified with the third author (FZ). Reference lists of included studies were also screened (MO) for further relevant literature. Eligible grey literature (e.g., reports and manuals) found on websites in languages other than English, German, French, or Spanish was translated into English with DeepL Pro or Google Translate before being screened (MO, FZ). Final inclusion was agreed on by two authors (MO and FZ).

Methodological assessment

We used the Appraisal of Indicators through Research and Evaluation (AIRE) instrument for the methodological assessment of the identified QIs [14]. AIRE is a validated instrument for the critical appraisal of QIs and has previously been used in various settings [15,16,17,18]. AIRE includes 20 items in four domains: 1) purpose, relevance and organizational context, 2) stakeholder involvement, 3) scientific evidence, and 4) additional evidence, formulation and usage. A detailed description of the items can be found in Additional file 3. All three authors appraised the QIs independently using information from included studies, websites and e-mails from consulted organizations. Each AIRE item is rated on a 4-point Likert scale, ranging from 1 ‘strongly disagree or no information available’ to 4 ‘strongly agree’. Differences of more than 1 point between appraisers were resolved in discussion. We calculated standardized scores per domain, which can range from 0 to 100%, with a higher score indicating higher methodological quality.
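The domain scoring described above can be sketched in a few lines. This is a hypothetical illustration, not the authors' procedure: it assumes that AIRE standardizes each domain in the same way as the AGREE instrument, i.e., (obtained score − minimum possible score) / (maximum possible score − minimum possible score) × 100, summed over all items and appraisers. The function names and ratings are invented for illustration; the 1-point disagreement rule and the 50% threshold are taken from this review.

```python
# Hypothetical sketch of AIRE-style domain scoring (AGREE-like standardization assumed).
LIKERT_MIN, LIKERT_MAX = 1, 4  # 1 = strongly disagree / no information, 4 = strongly agree


def standardized_domain_score(ratings: list[list[int]]) -> float:
    """Standardized score (0-100%) for one domain.

    ratings[a][i] is the score appraiser a gave to item i of the domain.
    """
    n_appraisers = len(ratings)
    n_items = len(ratings[0])
    obtained = sum(sum(row) for row in ratings)
    min_possible = LIKERT_MIN * n_items * n_appraisers
    max_possible = LIKERT_MAX * n_items * n_appraisers
    return 100 * (obtained - min_possible) / (max_possible - min_possible)


def items_needing_discussion(ratings: list[list[int]], max_diff: int = 1) -> list[int]:
    """Indices of items on which appraisers differ by more than max_diff points."""
    flagged = []
    for i in range(len(ratings[0])):
        scores = [row[i] for row in ratings]
        if max(scores) - min(scores) > max_diff:
            flagged.append(i)
    return flagged


def is_high_quality(domain_scores: list[float], threshold: float = 50.0) -> bool:
    """True if every domain reaches the threshold (>= 50% in all four domains)."""
    return all(score >= threshold for score in domain_scores)


# Hypothetical ratings: three appraisers x three items of one domain.
ratings = [
    [4, 3, 4],
    [4, 4, 3],
    [3, 4, 2],
]
print(round(standardized_domain_score(ratings), 1))  # 81.5
print(items_needing_discussion(ratings))  # [2]
```

Here item 2 received ratings of 4, 3 and 2. Because the spread exceeds 1 point, the sketch flags it for discussion, which mirrors the consensus step described above.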

Results

Search results

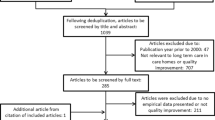

The systematic review, including records found in other sources, identified 23,344 potential records. After removing duplicates, 16,973 were screened by title and abstract, 144 publications underwent full-text screening and 23 met the inclusion criteria. Of 39 consulted websites, 17 contained information on QIs relevant to the review. We contacted 10 organizations for further information, of which six replied. Figure 1 presents the flow diagram of the QI selection process, and Additional file 4 gives an overview of the publications excluded at full-text screening.

Description of included studies and grey literature

Twenty-two articles [6, 8, 19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38] and one study report [39] were included. The articles were published between 1995 and 2021. Nineteen studies were conducted in the U.S., one in Canada [23] and one in the Netherlands [21]. Eleven studies described the development or evaluation of QI sets, and the others evaluated single QIs or QIs pertaining to one theme. Apart from the study from the Netherlands [21], all studies concerned QIs derived from the MDS. The included studies are described in Table 1.

All countries published information on their QIs on websites [40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56]. On these websites, we found manuals or guides on QI definitions, measurement, collection, reporting and interpretation for the U.S. [57,58,59], Canada [60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75], Australia [76, 77], New Zealand [78], the Netherlands [79], Belgium [80], Norway [81,82,83,84,85,86,87] and Sweden [88,89,90,91]. Development and validation reports were available for the QI sets from the U.S. [39], Canada [92] and Australia [93]. Some information on QI selection and development was also included in documents from the Netherlands [79], Belgium [80] and Sweden [94]. QI results from all countries were published on publicly accessible websites [42, 45, 47,48,49,50, 52, 53, 55, 56, 95].

Purpose, data collection and reporting characteristics of the QI sets

We identified eight countries publicly reporting health-related QIs. Their QIs are selected, collected and reported in different ways based on feasibility, practicality and stated purposes. In the U.S., Canada, New Zealand, Norway and Sweden, all or most QIs are built from already available data (i.e., routine assessments or national registries), whereas Australia, Belgium and the Netherlands use separate collection methods (e.g., surveys). Most countries provide online manuals with guidance on how data should be collected. In Australia, for example, QI data are collected quarterly via full-body assessment (pressure ulcers) or chart audits (e.g., falls), with one sheet filled out per resident. The data are then entered into an online platform and aggregated at facility level [77]. However, in the manuals we consulted, we did not find information about, e.g., minimum standards for training in data collection. Most countries state several purposes for their QI sets, but some differences in focus can be observed. The U.S. and Canada focus most on comparing individual service providers. Australia, Belgium and Sweden concentrate instead on supporting RLTC institutions in monitoring and improving care [45, 52, 76, 80]. The main purpose of the QIs in the Netherlands is to stimulate learning and improvement in care teams [79]. QIs in Norway aim to meet the information needs of the healthcare sector and the government (e-mail communication).

The purpose of the QIs influences the level (i.e., national, regional, facility) and manner of reporting. For instance, the U.S. and Canada developed rating systems and dedicated websites intended to make comparison easier for consumers, and they use almost exclusively resident outcome indicators (e.g., prevalence of falls) [50, 95]. Countries that focus on supporting RLTC institutions in improving care rather than on provider comparison use more process indicators (e.g., medication review) and do not risk-adjust their QIs. Table 2 presents an overview of data collection methods, measurement frequency and reporting characteristics. The types (i.e., prevalence or incidence) and measurement levels (i.e., process or outcome) of the included QIs are described in Additional file 3.

Publicly reported health-related QIs

In the eight identified countries, a total of 99 QIs were publicly reported, covering 31 themes. Each country reported between six and 31 health-related QIs. The most frequently reported indicators concerned pressure ulcers, falls, physical restraints and weight loss. Table 3 presents the identified QI themes. In some cases, more than one indicator is used for the same theme. A full list of all included indicators per country can be found in Additional file 3.

Methodological quality of the QIs

The amount of published information on the QIs’ methodological quality differs by country. For all QI sets, the QI purpose, definitions with numerator and denominator, exclusion criteria and risk-adjustment information were publicly available. We found published information on the selection or development of QIs for six countries (U.S., Canada, Australia, Belgium, the Netherlands and, partly, Sweden). For three countries (Norway, New Zealand and Australia), we received further information on QI development via e-mail. All countries involved stakeholders in the development of QIs; however, detailed information was not always available. For Norway, we only know that stakeholders were consulted (e-mail communication); for the Netherlands [79], New Zealand (e-mail communication) and Sweden’s medication-related QIs [94], we also know which expert groups were included. For the QIs from Belgium [80] and most QIs from the U.S. [51], a description of some of the criteria used to evaluate the QIs, such as relevance or influenceability by RLTC institutions, is available. We found published results of an expert assessment only for the Australian QI set [93] and for six of the Canadian QIs [92].
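The following hypothetical sketch shows what a QI definition with numerator, denominator and exclusion criteria specifies in practice. It computes a crude point prevalence per facility. The record fields, the choice of short-stay status as the exclusion criterion and the data are all assumptions made for illustration. None of the reviewed QI sets is reproduced here, and their actual definitions are more detailed.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ResidentRecord:
    facility: str
    short_stay: bool  # hypothetical exclusion criterion
    has_pressure_ulcer: bool  # hypothetical numerator event


def prevalence_by_facility(records: list[ResidentRecord]) -> dict[str, float]:
    """Crude point prevalence (%) per facility: numerator / denominator * 100."""
    numerators: dict[str, int] = defaultdict(int)
    denominators: dict[str, int] = defaultdict(int)
    for record in records:
        if record.short_stay:  # excluded residents enter neither numerator nor denominator
            continue
        denominators[record.facility] += 1
        if record.has_pressure_ulcer:
            numerators[record.facility] += 1
    # Only facilities with at least one eligible resident appear, so no division by zero.
    return {f: 100 * numerators[f] / d for f, d in denominators.items()}


records = [
    ResidentRecord("A", False, True),
    ResidentRecord("A", False, False),
    ResidentRecord("A", False, False),
    ResidentRecord("A", False, False),
    ResidentRecord("B", False, True),
    ResidentRecord("B", False, False),
    ResidentRecord("B", True, True),  # short-stay resident: excluded
]
print(prevalence_by_facility(records))  # {'A': 25.0, 'B': 50.0}
```

The sketch illustrates why exclusion criteria must be published together with numerators and denominators. If the short-stay resident in facility B were counted, B's result would change from 50% to about 67%.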

We found little up-to-date information on the validity and reliability of the QIs. Two studies assessed the validity of MDS 2.0 QIs using the same data (209 U.S. RLTC institutions) and the same validity assessment method, but their results differ depending on the applied inclusion criteria and risk-adjustment [25, 39]. Only the QIs currently used in Canada apply the same or a very similar measurement as the QIs reported in the study by Jones et al. (2010), which showed mostly moderate and in some cases insufficient validity [25]. The U.S. and New Zealand apply no or a different risk-adjustment and use different data collection instruments (MDS 3.0 and interRAI LTCF, respectively). Other studies investigated the sensitivity of multiple [23, 28] or single MDS 2.0 QIs [19, 22, 24, 30,31,32,33,34], or their ability to reflect differences in care between facilities with higher and lower QI scores.

Studies assessing reliability used Kappa statistics, which measure agreement beyond that expected by chance. Kappa takes values of at most 1, and values near 0 indicate agreement no better than chance. The higher the Kappa, the higher the agreement between two independent raters (interrater reliability) or the consistency of the same rater’s assessments at different time points (intrarater reliability). We found interrater reliability results for 17 MDS 2.0-based QIs showing moderate to good Kappa values (0.52–0.89) [39] and for a pressure ulcer QI from the Netherlands (Kappa 0.97) [21]. The reported information on validity and reliability per country and per indicator can be found in Additional file 5.
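To make the Kappa values above easier to interpret, here is a minimal sketch of unweighted Cohen's kappa for two sets of categorical ratings of the same items. The sketch is an assumption for illustration: the cited studies may have used variants such as weighted Kappa, and the ratings below are invented. The same function applies to intrarater reliability if the two lists hold one rater's assessments at two time points.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two ratings of the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    # Observed agreement: share of items rated identically.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: products of each category's marginal proportions, summed.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in categories) / n**2
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical example: two raters record a fall (1) or no fall (0) for ten residents.
rater_1 = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
rater_2 = [1, 1, 0, 0, 0, 0, 0, 0, 0, 1]
print(round(cohens_kappa(rater_1, rater_2), 2))  # 0.52
```

Here the raters agree on 80% of residents, but 58% agreement would be expected by chance alone given how often each rater recorded a fall. Kappa therefore comes out at about 0.52, well below the raw agreement rate.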

Methodological assessment of the QI sets with AIRE tool

We assessed the methodological quality of all 99 QIs from eight countries using the AIRE instrument. As the information relevant to domains 1 and 2 was mostly applicable to all QIs in a set, we evaluated domains 1 and 2 per country, whereas in domains 3 and 4 each QI was evaluated separately. Assessment results for each domain per country are reported in Table 4, and the results for each QI can be found in Additional file 3.

As presented in Table 4, most QI sets scored well in domain 1 on purpose, relevance and organizational context. For New Zealand and the Netherlands, we found little information on the criteria for selecting indicator topics, which led to a lower rating. Ratings in domain 2 were good for stakeholder involvement, except for the Norwegian and Swedish QI sets, for which detailed information was unavailable or scant. The poor performance in domain 3 on scientific evidence reflects the fact that most countries’ reports stated that a literature search was carried out but gave no further details, such as search strategies or a list of the studies on which decisions were based; where the studies used were referenced, their quality had not been assessed. In domain 4, the definitions of the QIs and their target groups were consistently well described and, in most countries, there was also good guidance on the display and interpretation of the results. The most frequently missing information concerned validity, discriminative power, testing in practice and the effort required for data collection. Only the Australian indicator set reached high methodological quality, defined as scores of 50% or higher in all four AIRE instrument domains [14].

Discussion

This review identified 99 publicly reported health-related QIs covering 31 themes in eight countries. The most relevant and up-to-date information was found in grey literature. Peer-reviewed studies with scientific investigations were found almost exclusively for MDS-based QIs. The themes most covered by the QIs were pressure ulcers, falls, physical restraints and weight loss, and often several indicators were used to assess one theme. An Australian review report from 2020, which included QIs related to social well-being and quality of life in addition to health-related QIs, identified a total of 305 internationally reported QIs for residential aged care (both long-term and short-term) in 11 countries [97]. These results indicate high interest in quality and safety assessment in RLTC in developed countries and a large variety of quality measures. Additionally, a recent article compared four different approaches to measuring pressure ulcers in more than 25 countries. The authors found methodological differences in point-prevalence measurement that hinder comparison; their study shows both the international interest in comparing QI data and the importance of methodological soundness in QI development and measurement [98].

We found limited publicly available, up-to-date scientific evidence on the RLTC QIs currently in use, which is consistent with the findings of previous reviews [12, 13]. The amount of published information on QIs differs between countries. This could be related to the different stages of development and implementation of RLTC QIs and their public reporting. The U.S. has over 20 years of experience in publicly reporting QIs in RLTC [99], whereas Australia published its first mandatory national measurement results in 2019. Most studies assessing QIs were carried out in the U.S. in the first years following the introduction of MDS-based indicators. Since then, the measurement of several U.S. QIs has changed, which is why some of the evidence is no longer applicable or applies only to a limited extent. Australia, the most recent of the reviewed countries to introduce a nationally publicly reported set of QIs, scored best on the AIRE assessment. This may be because national and international interest in transparency has increased over the years. On the other hand, in some countries testing may still be planned or in progress, with no results available to report yet.

All countries published information on their RLTC QIs on websites. While websites may be a good solution for publishing guides on QI measurement or results, which change with each reporting period, peer-reviewed journal publications seem better suited for reporting on the development and validation of QIs. Such publications could improve the accessibility of the research to the international public. Moreover, they would strengthen the reporting of scientific evidence (e.g., by providing details on the search methods used and a critical appraisal of the results). Finally, they would prevent loss of access to reports due to content transfer or removal, e.g., when a website is no longer maintained, as was the case with information published by Health Quality Ontario cited in this review [92]. The lack of scientific publications might stem from the QI development process, which is often government-initiated and results in government reports with no further funding for scientific reporting. Here, a rethink at government level would be needed to strengthen the scientific approach through peer-reviewed publications.

Publicly reported QIs serve different purposes, which partly determine how the data are collected and reported. We found little evidence regarding the discriminative power of QIs intended for provider comparison [11]. This is an important aspect both for quality improvement and for comparing service providers (e.g., through benchmarking) [11, 100,101,102]. Benchmarking based on QIs that cannot identify differences in quality of care beyond chance between facilities or regions can lead to wrong or misleading quality assessments, rankings or comparisons. This can in turn result in inappropriate policies or decisions, unfair treatment of care providers or misguided quality improvement projects [11, 100,101,102]. Furthermore, while risk-adjustment might not be needed when RLTC institutions aim to track their own results over time, it is recommended when QIs are used to compare facilities with one another or to benchmark them against a set threshold [103].
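To illustrate why risk-adjustment matters for provider comparison, the following hypothetical sketch uses indirect standardization, one common approach. In this approach, each facility's observed number of events is divided by the number expected from its residents' predicted risks. This is an assumed example only. The risk-adjustment methods used by the reviewed countries are not described here and may differ. The predicted risks, which would normally come from a risk model, and the reference rate are invented.

```python
def observed_expected(events: list[bool], expected_probs: list[float]) -> float:
    """Observed-to-expected (O/E) ratio for one facility (indirect standardization)."""
    observed = sum(events)
    expected = sum(expected_probs)  # sum of each resident's predicted risk
    if expected <= 0:
        raise ValueError("expected number of events must be positive")
    return observed / expected


def risk_adjusted_rate(events: list[bool], expected_probs: list[float], reference_rate: float) -> float:
    """Indirectly standardized rate: O/E ratio multiplied by a reference (e.g., national) rate."""
    return observed_expected(events, expected_probs) * reference_rate


# Two hypothetical facilities with the same crude rate (3 of 8 residents = 37.5%)
# but different case-mix, expressed as predicted risks from an assumed risk model.
events = [True, True, True, False, False, False, False, False]
low_risk_mix = [0.25] * 8  # expected events = 2.0
high_risk_mix = [0.5] * 8  # expected events = 4.0
reference_rate = 0.30  # assumed population-wide rate

print(observed_expected(events, low_risk_mix))  # 1.5
print(observed_expected(events, high_risk_mix))  # 0.75
```

The two facilities have identical crude rates, yet only the one caring for lower-risk residents has more events than its case-mix predicts. Note that an O/E ratio alone does not show whether such a difference exceeds chance, which is the question of discriminative power raised above.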

Some countries, like the U.S. and Canada, use QIs for comparisons between providers, while others mainly aim for quality monitoring and improvement. According to Berwick et al. [104], both purposes can conceptually lead to better care. On the one hand, comparing providers allows consumers to select better care providers and might allow regulatory bodies to identify problematic facilities, but it might not fundamentally change the overall performance of the sector. On the other hand, using QIs to directly improve facilities’ own care processes, and ultimately outcomes, might actually change the quality of care provided [104]. Ideally, the two dynamics would reinforce each other and stimulate continuous quality improvement in the sector [104]. In practice, using QIs to support quality improvement is difficult, and barriers have been identified, such as a lack of organizational and professional skills and capacity in the healthcare sector to use the data for change, or issues with QI measurement [104]. The development, measurement and reporting of QIs depend on their primary purpose. Therefore, countries using or planning to use QIs in RLTC need to construct QIs coherently to match their main purpose [10] and invest in the RLTC sector to address capacity deficits linked to QI usage for quality improvement.

Implications for practice, policy and research

Countries should be encouraged to share information on the selection, development, evaluation and reporting of QIs more broadly and transparently. The AIRE criteria can provide guidance on the aspects to consider in this process. QIs with satisfactory measurement properties make it more likely that countries, regions or facilities use QIs in a useful and informative way and that policy-makers make informed decisions. More published and accessible information on QI sets would also make international comparisons easier for researchers.

Limitations

This review has some limitations. First, we may not have identified all publicly reported QI sets because of language barriers. Second, only one team member was primarily responsible for title/abstract and full-text screening; relevant information might therefore have been missed. Third, the AIRE assessment depends heavily on the available sources of information and the completeness of the documentation for the assessed QIs. Our rating is therefore limited by the lack of available information (e.g., the information may exist but only in documents intended for internal use) or the difficulty of finding published documents (e.g., due to language barriers). For this reason, the AIRE assessment can only reflect the quality of the QIs on the basis of publicly available data. In this sense, a low rating in some domains does not necessarily mean that the indicators are of low quality. Lastly, the number, types and measurement of internationally reported QIs can change with each reporting period. For instance, in Australia the nationally reported QI set was extended in July 2021 [41]. However, this did not change our overall quality assessment findings, and we did not integrate new or changed QIs into our results. A new QI set was also developed and tested in Germany [105,106,107], but public reporting of the collected data was postponed due to the COVID-19 pandemic [108].

Conclusion

A growing number of countries are using and reporting QI results on a wide range of themes with various purposes and in different ways. Publicly available information on the RLTC QIs was limited for a number of methodological aspects. There is a need for better reporting on the selection and development of QIs, so that these can be used in a useful way to ultimately improve the quality of care provided to the residents.

Availability of data and materials

Data sharing is not applicable to this article as no datasets were generated or analysed during the study.

Abbreviations

- ADL: Activities of Daily Living
- AIRE: Appraisal of Indicators through Research and Evaluation
- CMS: Centers for Medicare & Medicaid Services
- LTCF: Long-term care facility
- MDS: Minimum Data Set
- MeSH: Medical Subject Headings
- QI: Quality indicator
- RAI: Resident Assessment Instrument
- RLTC: Residential long-term care
- U.S.: United States
- UTI: Urinary tract infection

References

World Health Organization. World report on ageing and health. Geneva: WHO; 2015.

OECD. Health at a glance 2021: OECD indicators. Paris; 2021. https://www.oecd-ilibrary.org/content/publication/4dd50c09-en

OECD. Who cares? Attracting and retaining Care Workers for the Elderly. Paris; 2020. https://www.oecd-ilibrary.org/content/publication/92c0ef68-en

Siegel EO, Backman A, Cai Y, Goodman C, Ocho ON, Wei S, et al. Understanding contextual differences in residential LTC provision for cross-National Research: identifying internationally relevant CDEs. Gerontol Geriatr Med. 2019;5:2333721419840591.

European Commission, Economic Policy Committee. Joint Report on Health Care and Long-Term Care Systems & Fiscal Sustainability. Volume 1. Luxembourg; 2016. https://ec.europa.eu/info/sites/default/files/file_import/ip037_vol1_en_2.pdf. Accessed 30 March 2022

Zimmerman DR, Karon SL, Arling G, Clark BR, Collins T, Ross R, et al. Development and testing of nursing home quality indicators. Health Care Financ Rev. 1995;16(4):107–27.

Mor V. Defining and measuring quality outcomes in long-term care. J Am Med Dir Assoc. 2006;7(8):532–8; discussion 538–40.

Zimmerman DR. Improving nursing home quality of care through outcomes data: the MDS quality indicators. Int J Geriatr Psychiatry. 2003;18(3):250–7.

Mainz J. Defining and classifying clinical indicators for quality improvement. Int J Qual Health Care. 2003;15(6):523–30.

Schang L, Blotenberg I, Boywitt D. What makes a good quality indicator set? A systematic review of criteria. Int J Qual Health Care. 2021;33(3):1–10.

Favez L, Zúñiga F, Sharma N, Blatter C, Simon M. Assessing nursing homes quality Indicators’ between-provider variability and reliability: a cross-sectional study using ICCs and Rankability. Int J Environ Res Public Health. 2020;17(24).

Nakrem S, Vinsnes AG, Harkless GE, Paulsen B, Seim A. Nursing sensitive quality indicators for nursing home care: international review of literature, policy and practice. Int J Nurs Stud. 2009;46(6):848–57.

Hutchinson AM, Milke DL, Maisey S, Johnson C, Squires JE, Teare G, et al. The resident assessment instrument-minimum data set 2.0 quality indicators: a systematic review. BMC Health Serv Res. 2010;10:166.

De Koning J, Smulders A, Klazinga N. Appraisal of indicators through research and evaluation (AIRE) Versie 2.0. Amsterdam: Academic Medical Center; 2007.

Dequanter S, Buyl R, Fobelets M. Quality indicators for community dementia care: a systematic review. Eur J Pub Health. 2020;30(5):879–85.

O'Riordan F, Shiely F, Byrne S, Fleming A. Quality indicators for hospital antimicrobial stewardship programmes: a systematic review. J Antimicrob Chemother. 2021;76(6):1406–19.

Righolt AJ, Sidorenkov G, Faggion CM Jr, Listl S, Duijster D. Quality measures for dental care: a systematic review. Community Dent Oral Epidemiol. 2019;47(1):12–23.

Wagner A, Schaffert R, Möckli N, Zúñiga F, Dratva J. Home care quality indicators based on the resident assessment instrument-home care (RAI-HC): a systematic review. BMC Health Serv Res. 2020;20(1):366.

Bates-Jensen BM, Cadogan M, Osterweil D, Levy-Storms L, Jorge J, Al-Samarrai N, et al. The minimum data set pressure ulcer indicator: does it reflect differences in care processes related to pressure ulcer prevention and treatment in nursing homes? J Am Geriatr Soc. 2003;51(9):1203–12.

Berg K, Mor V, Morris J, Murphy KM, Moore T, Harris Y. Identification and evaluation of existing nursing homes quality indicators. Health Care Financ Rev. 2002;23(4):19–36.

Bours GJ, Halfens RJ, Lubbers M, Haalboom JR. The development of a national registration form to measure the prevalence of pressure ulcers in the Netherlands. Ostomy Wound Manage. 1999;45(11):28–33, 36–8, 40.

Cadogan MP, Schnelle JF, Yamamoto-Mitani N, Cabrera G, Simmons SF. A minimum data set prevalence of pain quality indicator: is it accurate and does it reflect differences in care processes? J Gerontol A Biol Sci Med Sci. 2004;59(3):281–5.

Estabrooks CA, Knopp-Sihota JA, Norton PG. Practice sensitive quality indicators in RAI-MDS 2.0 nursing home data. BMC Res Notes. 2013;6:460.

Hill-Westmoreland EE, Gruber-Baldini AL. Falls documentation in nursing homes: agreement between the minimum data set and chart abstractions of medical and nursing documentation. J Am Geriatr Soc. 2005;53(2):268–73.

Jones RN, Hirdes JP, Poss JW, Kelly M, Berg K, Fries BE, et al. Adjustment of nursing home quality indicators. BMC Health Serv Res. 2010;10:96.

Karon SL, Sainfort F, Zimmerman DR. Stability of nursing home quality indicators over time. Med Care. 1999;37(6):570–9.

Mor V, Angelelli J, Jones R, Roy J, Moore T, Morris J. Inter-rater reliability of nursing home quality indicators in the U.S. BMC Health Serv Res. 2003;3(1):20.

Rantz MJ, Hicks L, Petroski GF, Madsen RW, Mehr DR, Conn V, et al. Stability and sensitivity of nursing home quality indicators. J Gerontol A Biol Sci Med Sci. 2004;59(1):79–82.

Rantz MJ, Popejoy L, Mehr DR, Zwygart-Stauffacher M, Hicks LL, Grando V, et al. Verifying nursing home care quality using minimum data set quality indicators and other quality measures. J Nurs Care Qual. 1997;12(2):54–62.

Schnelle JF, Bates-Jensen BM, Levy-Storms L, Grbic V, Yoshii J, Cadogan M, et al. The minimum data set prevalence of restraint quality indicator: does it reflect differences in care? Gerontologist. 2004;44(2):245–55.

Schnelle JF, Cadogan MP, Yoshii J, Al-Samarrai NR, Osterweil D, Bates-Jensen BM, et al. The minimum data set urinary incontinence quality indicators: do they reflect differences in care processes related to incontinence? Med Care. 2003;41(8):909–22.

Stevenson KB, Moore JW, Sleeper B. Validity of the minimum data set in identifying urinary tract infections in residents of long-term care facilities. J Am Geriatr Soc. 2004;52(5):707–11.

Wu N, Miller SC, Lapane K, Roy J, Mor V. The quality of the quality indicator of pain derived from the minimum data set. Health Serv Res. 2005;40(4):1197–216.

Simmons SF, Garcia EG, Cadogan MP, Al-Samarrai NR, Levy-Storms LF, Osterweil D, et al. The minimum data set weight-loss quality Indicator: does it reflect differences in care processes related to weight loss? J Am Geriatr Soc. 2003;51(10):1410–8.

Mintz J, Lee A, Gold M, Hecker EJ, Colón-Emeric C, Berry SD. Validation of the minimum data set items on falls and injury in two long-stay facilities. J Am Geriatr Soc. 2021;69(4):1099–100.

Phillips CD, Shen R, Chen M, Sherman M. Evaluating nursing home performance indicators: an illustration exploring the impact of facilities on ADL change. Gerontologist. 2007;47(5):683–9.

Sanghavi P, Pan S, Caudry D. Assessment of nursing home reporting of major injury falls for quality measurement on nursing home compare. Health Serv Res. 2020;55(2):201–10.

Wu N, Mor V, Roy J. Resident, nursing home, and state factors affecting the reliability of minimum data set quality measures. Am J Med Qual. 2009;24(3):229–40.

Morris JN, Moore T, Jones R, Mor V, Angelelli J, Berg K, et al. Validation of long-term and post-acute care quality indicators. Cambridge: Abt Associates Inc, Brown University; 2003. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/NursingHomeQualityInits/Downloads/NHQIFinalReport.pdf. Accessed 20 Mar 2020.

Alberta Health Services. https://www.albertahealthservices.ca/about/Page12954.aspx. Accessed 20 March 2020.

Australian Government Department of Health. National Aged Care Mandatory Quality Indicator Program (QI Program). https://www.health.gov.au/initiatives-and-programs/national-aged-care-mandatory-quality-indicator-program. Accessed 21 June 2021.

Australian Institute of Health and Welfare. GEN aged care data. https://www.gen-agedcaredata.gov.au/Topics/Quality-in-aged-care/Residential-Aged-Care-Quality-Indicators—Previous-. Accessed 20 March 2020.

Canadian Institute for Health Information. Indicator library. https://indicatorlibrary.cihi.ca/display/HSPIL/Indicator+Library. Accessed 20 March 2020.

Centers for Medicare & Medicaid Services. Quality Measures. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/NursingHomeQualityInits/NHQIQualityMeasures. Accessed 26 April 2021.

Folkhälsomyndigheten. Svenska HALT. https://www.folkhalsomyndigheten.se/halt/. Accessed 29 March 2022.

Health Quality Ontario. http://indicatorlibrary.hqontario.ca/IndicatorByCategory/EN. Accessed 20 March 2020.

Helsedirektoratet. Nasjonale kvalitetsindikatorer (NKI). https://www.helsedirektoratet.no/statistikk/statistikk/kvalitetsindikatorer. Accessed 20 March 2020.

interRAI New Zealand. Quality indicators in aged residential care. https://www.interrai.co.nz/data-and-reporting/quality-indicators/. Accessed 20 March 2020.

Kolada. Jämföraren. https://www.kolada.se/verktyg/jamforaren/?_p=jamforelse&focus=16680&tab_id=84176. Accessed 29 March 2022.

Medicare.gov. https://www.medicare.gov/care-compare/?providerType=NursingHome&redirect=true. Accessed 20 March 2020.

National Quality Forum (NQF). Measure Endorsement. https://www.qualityforum.org/Measuring_Performance/Consensus_Development_Process/CSAC_Decision.aspx. Accessed 26 April 2021.

Senior alert. https://www.senioralert.se. Accessed 29 March 2022.

Socialstyrelsen. Öppna jämförelser av äldreomsorg. https://www.socialstyrelsen.se/statistik-och-data/oppna-jamforelser/socialtjanst/aldreomsorg/. Accessed 29 March 2022.

State of Victoria, Department of Health & Human Services. https://www.health.vic.gov.au/residential-aged-care/quality-indicators-in-public-sector-residential-aged-care-services. Accessed 20 March 2020.

Vlaams Instituut voor Kwaliteit van Zorg. Resultaten van de kwaliteitsmetingen in woonzorgcentra. https://www.zorgkwaliteit.be/woonzorgcentra. Accessed 5 March 2020.

Zorginstituut Nederland. Open data Verpleeghuiszorg. https://www.zorginzicht.nl/openbare-data/open-data-verpleeghuiszorg#verslagjaar-2020. Accessed 26 April 2021.

RTI International. MDS 3.0 Quality Measures User’s Manual (v.14.0). 2020. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/NursingHomeQualityInits/NHQIQualityMeasures. Accessed 15 Sep 2020.

Abt Associates. Nursing home compare claims-based quality measure technical specifications. 2019. https://www.cms.gov/Medicare/Provider-Enrollment-and-Certification/CertificationandComplianc/Downloads/APPENDIX-New-Claims-based-Measures-Technical-Specifications-April-2019.pdf. Accessed 15 Sep 2020.

RTI International. Skilled Nursing Facility Quality Reporting Program Measure Calculations and Reporting User’s Manual Version 3.0. 2019. https://www.cms.gov/Medicare/Quality-Initiatives-Patient-Assessment-Instruments/NursingHomeQualityInits/NHQIQualityMeasures. Accessed 15 Sep 2020.

Alberta Health. RAI-MDS 2.0 Quality Indicator Interpretation Guide. 2015. https://open.alberta.ca/dataset/aa056894-c85f-4526-a3da-25cd64795082/resource/05bfbf33-070c-49d7-a4ce-1fc7857741cd/download/cc-cihi-rai-guide-2015.pdf. Accessed 20 March 2020.

Canadian Institute for Health Information (CIHI). Worsened Physical Functioning in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Worsened+Physical+Functioning+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Improved Physical Functioning in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Improved+Physical+Functioning+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Percentage of Residents Whose Bladder Continence Worsened. http://indicatorlibrary.cihi.ca/display/HSPIL/Percentage+of+Residents+Whose+Bladder+Continence+Worsened. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Falls in the Last 30 Days in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Falls+in+the+Last+30+Days+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Experiencing Worsened Pain in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Experiencing+Worsened+Pain+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Worsened Pressure Ulcer in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Worsened+Pressure+Ulcer+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Worsened Depressive Mood in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Worsened+Depressive+Mood+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Percentage of Residents Who Had a Newly Occurring Stage 2 to 4 Pressure Ulcer. http://indicatorlibrary.cihi.ca/display/HSPIL/Percentage+of+Residents+Who+Had+a+Newly+Occurring+Stage+2+to+4+Pressure+Ulcer. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Percentage of Residents Whose Behavioural Symptoms Worsened. http://indicatorlibrary.cihi.ca/display/HSPIL/Percentage+of+Residents+Whose+Behavioural+Symptoms+Worsened. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Percentage of Residents Whose Behavioural Symptoms Improved. http://indicatorlibrary.cihi.ca/display/HSPIL/Percentage+of+Residents+Whose+Behavioural+Symptoms+Improved. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Potentially Inappropriate Use of Antipsychotics in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Potentially+Inappropriate+Use+of+Antipsychotics+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Experiencing Pain in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Experiencing+Pain+in+Long-Term+Care. Accessed 20 April 2020.

Canadian Institute for Health Information (CIHI). Restraint Use in Long-Term Care. http://indicatorlibrary.cihi.ca/display/HSPIL/Restraint+Use+in+Long-Term+Care. Accessed 20 April 2020.

Health Quality Ontario. Potentially avoidable emergency department visits for long-term care residents. http://indicatorlibrary.hqontario.ca/Indicator/Detailed/Potentially-avoidable-emergency-department-visits-LTC/EN. Accessed 30 March 2020.

Health Quality Ontario. Percentage of long-term care home residents who developed a stage 2 to 4 pressure ulcer or had a pressure ulcer that worsened to a stage 2, 3 or 4. http://indicatorlibrary.hqontario.ca/Indicator/Detailed/Pressure-Ulcers-Among-Residents/EN. Accessed 20 April 2020.

My Aged Care. National Aged Care Mandatory Quality Indicator Program Manual 1.0. 2019. https://agedcare.health.gov.au/quality/quality-indicators/national-aged-care-mandatory-quality-indicator-program-manual-10. Accessed 20 March 2020.

State of Victoria. Department of Health & Human Services. Quality indicators in public sector residential aged care services. Resource materials. Department of Health & Human Services, State Government of Victoria, Australia; 2015. https://content.health.vic.gov.au/sites/default/files/migrated/files/collections/policies-and-guidelines/q/quality-indicators-psracs-201-pdf.pdf. Accessed 20 March 2020.

interRAI New Zealand. Quality indicators in aged residential care. https://www.interrai.co.nz/data-and-reporting/quality-indicators/. Accessed 5 March 2020.

ActiZ, Verenso, Verpleegkundigen & Verzorgenden Nederland, Zorgthuisnl. Handboek voor zorgaanbieders van verpleeghuiszorg: Toelichting op de kwaliteitsindicatoren en meetinstructie verslagjaar 2020. 2020. https://www.zorginzicht.nl/binaries/content/assets/zorginzicht/kwaliteitsinstrumenten/handboek-indicatoren-basisveiligheid-verslagjaar-2020.pdf. Accessed 20 March 2020.

Vlaams indicatorenproject woonzorgcentra: handleiding 1.8. 2018. https://www.zorg-en-gezondheid.be/sites/default/files/atoms/files/HandleidingKwaliteitsindicatoren_versie1%208_0.pdf. Accessed 20 March 2020.

Norwegian Ministry of Health (Helsedirektoratet). Nasjonalt kvalitetsindikatorsystem: Kvalitetsindikatorbeskrivelse. Antibiotikabruk i sykehjem. https://www.helsedirektoratet.no/statistikk/kvalitetsindikatorer/legemidler/antibiotikabruk-i-sykehjem/Antibiotikabruk%20i%20sykehjem-1.0apr2019.pdf/_/attachment/inline/1d0fec4e-9418-465e-b76b-eb6cddbbba44:d2cc183be30d057c412fa4a90a8f3094bd1c2df0/Antibiotikabruk%20i%20sykehjem-1.0apr2019.pdf. Accessed 16 Dec 2019.

Norwegian Ministry of Health (Helsedirektoratet). Nasjonalt kvalitetsindikatorsystem: Kvalitetsindikatordefinisjon. Legemiddelgjennomgang hos beboere på sykehjem. https://www.helsedirektoratet.no/statistikk/kvalitetsindikatorer/kommunale-helse-og-omsorgstjenester/legemiddelgjennomgang-hos-beboere-p%C3%A5-sykehjem/Legemiddelgjennomgang%20hos%20beboere%20p%C3%A5%20sykehjem_juni2019..pdf/_/attachment/inline/c28addab-a80b-4c9f-9955-2837c122783e:3810df806fbb8eb30665fbd49d573305e6bab345/Legemiddelgjennomgang%20hos%20beboere%20p%C3%A5%20sykehjem_juni2019..pdf. Accessed 16 Dec 2019.

Norwegian Ministry of Health (Helsedirektoratet). Nasjonalt kvalitetsindikatorsystem: Kvalitetsindikatordefinisjon. Beboere på sykehjem vurdert av lege siste 12 måneder. https://www.helsedirektoratet.no/statistikk/kvalitetsindikatorer/kommunale-helse-og-omsorgstjenester/Sykehjemsbeboere%20vurdert%20av%20lege%20siste%2012%20m%C3%A5neder. Accessed 16 Dec 2019.

Norwegian Ministry of Health (Helsedirektoratet). Nasjonalt kvalitetsindikatorsystem: Kvalitetsindikatordefinisjon. Beboere på sykehjem vurdert av tannhelsepersonell siste 12 måneder. https://www.helsedirektoratet.no/statistikk/kvalitetsindikatorer/kommunale-helse-og-omsorgstjenester/beboere-p%C3%A5-sykehjem-vurdert-av-tannhelsepersonell-siste-12-m%C3%A5neder/Beboere%20p%C3%A5%20sykehjem%20vurdert%20av%20tannhelsepersonell%20siste%2012%20mn_juni2019..pdf/_/attachment/inline/817ff884-14dc-4c61-9b2f-664a5f64c7dc:959757b065f44f1253a3e5fbb512aa8885834ece/Beboere%20p%C3%A5%20sykehjem%20vurdert%20av%20tannhelsepersonell%20siste%2012%20mn_juni2019..pdf. Accessed 16 Dec 2019.

Norwegian Ministry of Health (Helsedirektoratet). Nasjonalt kvalitetsindikatorsystem: Kvalitetsindikatorbeskrivelse. Legetimer per beboer i sykehjem. https://www.helsedirektoratet.no/statistikk/kvalitetsindikatorer/kommunale-helse-og-omsorgstjenester/legetimer-per-beboer-i-sykehjem/Legetimer%20for%20beboer%20i%20sykehjem%20-2.0%20mars%202017.pdf/_/attachment/inline/822548e1-81fb-4c78-a9f0-2d83c0241143:39913945fe5f22cf1c8814c9b216f7a576f79849/Legetimer%20for%20beboer%20i%20sykehjem%20-2.0%20mars%202017.pdf. Accessed 16 Dec 2019.

Norwegian Ministry of Health (Helsedirektoratet). Nasjonalt kvalitetsindikatorsystem: Kvalitetsindikatordefinisjon. Oppfølging av ernæring hos beboere på sykehjem. https://www.helsedirektoratet.no/statistikk/kvalitetsindikatorer/kommunale-helse-og-omsorgstjenester/oppf%C3%B8lging-av-ern%C3%A6ring-hos-beboere-p%C3%A5-sykehjem/Oppf%C3%B8lging%20av%20ern%C3%A6ring%20hos%20beboere%20p%C3%A5%20sykehjem_juni2019..pdf/_/attachment/inline/727e3e4f-b19b-4a2c-ac80-861999c7fce2:0d36bcc931d89bac43d8f95e78aeb9a05764e28c/Oppf%C3%B8lging%20av%20ern%C3%A6ring%20hos%20beboere%20p%C3%A5%20sykehjem_juni2019..pdf. Accessed 16 Dec 2019.

Norwegian Ministry of Health (Helsedirektoratet). Nasjonalt kvalitetsindikatorsystem: Kvalitetsindikatorbeskrivelse. Forekomst av helsetjenesteassosierte infeksjoner i norske sykehjem. https://www.helsedirektoratet.no/statistikk/kvalitetsindikatorer/infeksjoner/forekomst-av-helsetjenesteassosierte-infeksjoner-i-norske-sykehjem/Forekomst%20av%20helsetjenesteassosierte%20infeksjoner%20i%20norske%20sykehjem%201,0.pdf/_/attachment/inline/43dfbe84-d39a-40e7-9564-8aa305cf6898:1f4be42890eedf637b474230597f1e7a4265924f/Forekomst%20av%20helsetjenesteassosierte%20infeksjoner%20i%20norske%20sykehjem%201,0.pdf. Accessed 16 Dec 2019.

Folkhälsomyndigheten. Svenska HALT, Metodbeskrivning för HALT-mätning på särskilt boende. Punktprevalensmätning av vårdrelaterade infektioner och antibiotikaanvändning inom särskilt boende. 2020. https://www.folkhalsomyndigheten.se/publicerat-material/publikationsarkiv/s/svenska-halt-metodbeskrivning/?pub=null. Accessed 29 March 2022.

Senior alert. Följa sina resultat, Version 6. https://www.senioralert.se/media/1x2h05wj/rapportbeskrivning-version-6.pdf. Accessed 29 March 2022.

Senior alert. Har ni koll på era resultat? 2021.

Socialstyrelsen. Bilaga – Tabeller – Öppna jämförelser 2021 – Vård och omsorg för äldre. https://www.socialstyrelsen.se/globalassets/sharepoint-dokument/artikelkatalog/oppna-jamforelser/2022-2-7760-tabeller.xlsx. Accessed 29 March 2022.

Health Quality Ontario. LTC Indicator Review Report: The review and selection of indicators for long-term care public reporting. 2015. https://www.hqontario.ca/Portals/0/documents/system-performance/ltc-indicator-review-report-november-2015.pdf. Accessed 20 March 2020.

Department of Health & Human Services, State Government of Victoria, Australia. Public Sector Residential Aged Care Quality of Care Performance Indicator Project Report. 2004. https://www2.health.vic.gov.au/about/publications/policiesandguidelines/public-sector-residential-aged-care-quality-of-care-performance-indicator-project-report. Accessed 20 March 2020.

Socialstyrelsen. Indikatorer för god läkemedelsterapi hos äldre. 2017. https://www.socialstyrelsen.se/globalassets/sharepoint-dokument/artikelkatalog/ovrigt/2017-6-7.pdf. Accessed 29 March 2022.

Canadian Institute for Health Information. Your Health System. https://yourhealthsystem.cihi.ca/hsp/indepth?lang=en&_ga=2.259284042.1390645241.1584620109-180361114.1584620109#/. Accessed 26 April 2021.

Kieft R, Stalpers D, Jansen APM, Francke AL, Delnoij DMJ. The methodological quality of nurse-sensitive indicators in Dutch hospitals: a descriptive exploratory research study. Health Policy. 2018;122(7):755–64.

Caughey GE, Lang CE, Bray SC, Moldovan M, Jorissen RN, Wesselingh S, Inacio MC. International and National Quality and safety indicators for aged care. Report for the Royal Commission into aged care quality and safety. Adelaide: South Australian Health and Medical Research Institute; 2020.

Poldrugovac M, Padget M, Schoonhoven L, Thompson ND, Klazinga NS, Kringos DS. International comparison of pressure ulcer measures in long-term care facilities: assessing the methodological robustness of 4 approaches to point prevalence measurement. J Tissue Viability. 2021;30(4):517–26.

Konetzka RT, Yan K, Werner RM. Two decades of nursing home compare: what have we learned? Med Care Res Rev. 2021;78(4):295–310.

Fung V, Schmittdiel JA, Fireman B, Meer A, Thomas S, Smider N, et al. Meaningful variation in performance: a systematic literature review. Med Care. 2010;48(2):140–8.

Sales AE, Bostrom AM, Bucknall T, Draper K, Fraser K, Schalm C, et al. The use of data for process and quality improvement in long term care and home care: a systematic review of the literature. J Am Med Dir Assoc. 2012;13(2):103–13.

Phillips CD, Zimmerman D, Bernabei R, Jonsson PV. Using the Resident Assessment Instrument for quality enhancement in nursing homes. Age Ageing. 1997;26(Suppl 2):77–81.

Iezzoni LI. Risk adjustment for performance measurement. In: Smith P, Mossialos E, Papanicolas I, Leatherman S, editors. Performance measurement for health system improvement: experiences, challenges and prospects. New York: Cambridge University Press; 2009. p. 252–85.

Berwick DM, James B, Coye MJ. Connections between quality measurement and improvement. Med Care. 2003;41(1 Suppl):I30–8.

UBC. Modellhafte Pilotierung von Indikatoren in der stationären Pflege (MoPIP). (SV14–9015). Abschlussbericht zum Forschungsprojekt. Bremen: UBC-Zentrum für Alterns- und Pflegeforschung und UBC-Zentrum für Sozialpolitik; 2017. https://www.bagfw.de/fileadmin/user_upload/Qualitaet/Materialien_indikatorenbezogenes_Prüfverfahren/Erga__nzt_Abschlussbericht_MoPIP_Universita__t_Bremen_20.03.2017.pdf. Accessed 20 March 2020.

Wingenfeld K, Engels D, Kleina T, Franz S, Mehlan S, Engel H. Entwicklung und Erprobung von Instrumenten zur Beurteilung der Ergebnisqualität in der stationären Altenhilfe. Abschlussbericht. 2011. https://www.bagfw.de/fileadmin/user_upload/Abschlussbericht_Ergebnisqualitaet_.pdf. Accessed 20 March 2020.

Wingenfeld K, Stegbauer C, Willms G, Voigt C, Woitzik R. Entwicklung der Instrumente und Verfahren für Qualitätsprüfungen nach §§114 ff. SGB XI und die Qualitätsdarstellung nach §115 Abs. 1a SGB XI in der stationären Pflege: Darstellung der Konzeptionen für das neue Prüfverfahren und die Qualitätsdarstellung. Abschlussbericht. Im Auftrag des Qualitätsausschusses Pflege. Bielefeld/Göttingen; 2018. https://www.gs-qsa-pflege.de/wp-content/uploads/2018/10/20180903_Entwicklungsauftrag_stationär_Abschlussbericht.pdf. Accessed 20 March 2020.

Bundesarbeitsgemeinschaft der Freien Wohlfahrtspflege. Qualitätsindikatoren in der stationären Pflege. https://www.bagfw.de/themen/qualitaetsindikatoren-in-der-stationaeren-pflege. Accessed 21 June 2021.

Acknowledgments

Not applicable.

Funding

MO and FZ were financed by the Swiss Federal Office of Public Health for the realization of the original version of this review.

Author information

Contributions

Magdalena Osińska: Methodology, Formal analysis, Investigation, Writing - Original Draft, Writing - Review & Editing. Lauriane Favez: Formal analysis, Writing - Original Draft, Writing - Review & Editing. Franziska Zúñiga: Conceptualization, Methodology, Formal analysis, Writing - Review & Editing, Supervision, Funding acquisition. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Search strings used in the databases.

Additional file 2.

List of consulted websites and organizations.

Additional file 3.

List of included QIs with type of QI, measurement level and AIRE rating.

Additional file 4.

List of articles excluded after full-text screening.

Additional file 5.

Reported measurement properties on validity and reliability per QI.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Osińska, M., Favez, L. & Zúñiga, F. Evidence for publicly reported quality indicators in residential long-term care: a systematic review. BMC Health Serv Res 22, 1408 (2022). https://doi.org/10.1186/s12913-022-08804-7

DOI: https://doi.org/10.1186/s12913-022-08804-7