Abstract

Background

Internationally, it is expected that health services will involve the public in health service design. Evaluation of public involvement has typically focused on the process and experiences for participants. Less is known about outcomes for health services. The aim of this systematic review was to a) identify and synthesise what is known about health service outcomes of public involvement and b) document how outcomes were evaluated.

Methods

Searches were undertaken in MEDLINE, EMBASE, The Cochrane Library, PsycINFO, Web of Science, and CINAHL for studies that reported health service outcomes from public involvement in health service design. The review was limited to high-income countries and studies in English. Study quality was assessed using the Mixed Methods Appraisal Tool and critical appraisal guidelines for assessing the quality and impact of user involvement in health research. Content analysis was used to determine the outcomes of public involvement in health service design and how outcomes were evaluated.

Results

A total of 93 articles were included. The majority were published in the last 5 years, were qualitative, and were located in the United Kingdom. A range of health service outcomes (discrete products, improvements to health services and system/policy level changes) were reported at various levels (service level, across services, and across organisations). However, evaluations of outcomes were reported in less than half of studies. In studies where outcomes were evaluated, a range of methods were used; most frequent were mixed methods. The quality of study design and reporting was inconsistent.

Conclusion

When reporting public involvement in health service design, authors outline a range of outcomes for health services, but it is challenging to determine the extent of outcomes due to inadequate descriptions of study design and poor reporting. There is an urgent need for evaluations, including longitudinal study designs and cost-benefit analyses, to fully understand outcomes from public involvement in health service design.

Background

Internationally, there is an expectation that health services will involve the public in health service design. The assumption is that public involvement results in services that improve health and quality of life [1,2,3,4,5,6]. While potential outcomes of public involvement are widely discussed, these are primarily focused on outcomes for participants. Less is known about the outcomes of public involvement for health services or how these have been evaluated [4, 7,8,9,10]. This review addresses this gap.

The term ‘outcome’ is used in literature but rarely defined. For this review, any proposed or eventuating positive or negative change to the health service, or product produced, was considered an outcome. Public involvement in health services is promoted as an ethical and democratic right [11, 12], and valuable regardless of outcomes [13]. However, in an era of finite health service resources, robust evidence of the outcomes of public involvement is critical for health service planning [14]. Public involvement requires investment of time and energy for the service and public participants. Financial and other costs can be significant [12]. Participants want their contributions to make a difference, and services want good value for the resources required [15, 16]. Without robust evaluation of outcomes, there is a risk that public involvement is merely a tokenistic attempt to comply with government policy and accreditation standards [17, 18].

Systematic reviews to this point have explored patient participation in shared decision making [19], public involvement in health services in the United Kingdom [20], and the acute healthcare setting [21]; while another is somewhat dated [7]. In a recent review, Boivin et al. explored evaluation tools for patient and public engagement in research and health system decision making [9]. While they found 27 patient and public engagement evaluation tools, most lacked in scientific rigour and conceptual underpinnings, and most focused on evaluations of the engagement process and context rather than outcomes [9]. Comprehensive, purposeful evaluation is needed to understand what difference public involvement makes to health services and the broader community. Given international interest in public participation, a review of outcomes and evaluation approaches is overdue.

The aim of this systematic review was to a) identify and synthesise what is known about health service outcomes of public involvement and b) document how outcomes were evaluated. The review questions were: What are the outcomes for health services of public involvement in health service design? Have these outcomes been evaluated, and if so, how?

Methods

The review protocol was registered on the International Prospective Register of Systematic Reviews (PROSPERO) (https://www.crd.york.ac.uk/prospero/, registration number CRD4201809237). The review is reported according to the PRISMA statement.

Definitions used in this review

There is a lack of consistency in defining and conceptualising public involvement, and many overlapping terms exist [22]. Research on public involvement is documented from a wide variety of disciplines and settings and has emerged from disparate policies, contexts and social movements [23]. In this review, public involvement is defined as any interaction between members of the public and employees of a health service, government, or other organisation, with the agreed purpose of designing or re-designing health services. Public involvement was chosen as an umbrella term in the absence of a gold standard. For the purpose of this review, the terms user involvement, patient and public involvement, consumer engagement, public participation, community participation, co-design, and co-production, or a combination of these terms, were accepted.

The definition of a health service was guided by the Australian Privacy Act of 1998 [24], which in summary includes any activity assessing, maintaining, improving, managing, or recording an individual’s health; diagnosing or treating illness, disability, or injury; or dispensing prescribed drugs or medicinal preparations by a pharmacist. Health service design (or re-design) was deemed any activity which proposed or implemented a change to improve service quality [25]. This included developing new models, priority setting, quality improvement projects, and physical environment planning, if they occurred in the context of health service design.

Search strategy

Breadth in study type was deemed necessary to ensure a comprehensive exploration and synthesis of all relevant studies. Searches were completed in March 2018 in MEDLINE, EMBASE, the Cochrane Library, PsycINFO, Web of Science, and CINAHL, and updated in December 2019. There were no date restrictions. A preliminary search of two databases was used to identify additional keywords, index terms or subject headings. The final search strategy was developed in consultation with a university librarian and included combining terms from each of the following groups: health service, involvement, public and service design (see Additional file 1 for example of MEDLINE search strategy).

The search was augmented by hand-searching the reference lists of included articles, and by forward-searching for relevant articles where there was reference to plans for further implementation or evaluation studies.

Eligibility criteria

Articles were included if they were original research published in academic peer-reviewed journals with evidence of public involvement in health service design or re-design, with reported health service outcomes. Outcomes for participating individuals or evaluations of how public involvement was conducted were not the focus of this review. Due to differences in health service design and delivery between low, middle, and high-income countries, the review was limited to high-income countries, defined as members of the Organisation for Economic Co-operation and Development (OECD) at the time the search was conducted [26]. There were no date restrictions. Articles were excluded if they were unavailable in the English language due to time and cost limitations.

Article selection

The systematic review was managed with Covidence™ [27]. Titles and abstracts were reviewed for eligibility independently by the first author and a second member of the research team. Any disagreements were resolved by discussion. The same screening and decision process was used for full-text article eligibility.

Data extraction

The first author extracted data from each article using a standardized Excel spreadsheet. The form was piloted on 10% of randomly selected articles, and modifications were made following discussion among all three researchers. Data extraction included the following: first author; year of publication; study design; level of involvement using the International Association for Public Participation (IAP2) framework [28]; study aim/s and objective/s; details of health service; country; definition/conceptualisation of public involvement; public recruitment procedures; characteristics of public participants; data collection and analysis methods; reported outcomes; study conclusions; strengths and limitations; and details of evaluation including any frameworks or tools. Data were extracted by the first author and reviewed by a second author. Discrepancies were compared against the original article, and disagreements were resolved by discussion.

The IAP2 [28] is a spectrum of five levels of public participation, ranging from the lowest level ‘Inform’, to the highest level ‘Empower’. The level of public involvement in each study was rated on this spectrum. Where projects had multiple components involving the public at different levels or several publications relating to the same study, the highest overall level of public involvement was recorded.
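The rating rule described above (record the highest level reached by any component or publication of a study) can be illustrated with a minimal sketch. This is not the authors' tooling; the `IAP2Level` enum, the `highest_level` helper, and the example study are hypothetical names introduced only for illustration.

```python
from enum import IntEnum


class IAP2Level(IntEnum):
    """The five IAP2 spectrum levels, ordered from lowest to highest."""
    INFORM = 1
    CONSULT = 2
    INVOLVE = 3
    COLLABORATE = 4
    EMPOWER = 5


def highest_level(component_levels):
    """Return the highest IAP2 level across a study's components or publications."""
    if not component_levels:
        raise ValueError("at least one rated component is required")
    return max(component_levels)


# Hypothetical study whose components involved the public at different levels.
study_components = [IAP2Level.CONSULT, IAP2Level.COLLABORATE, IAP2Level.INVOLVE]
print(highest_level(study_components).name)  # COLLABORATE
```

Using an ordered enumeration keeps the comparison explicit, so a study with a single 'Collaborate' component is recorded as 'Collaborate' regardless of how many lower-level components it also had.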

Quality assessment

Study quality was appraised using the Mixed Methods Appraisal Tool (MMAT, 2018 Version) [29]. The MMAT was designed for appraising studies of various designs, has been pilot tested for efficiency, content validity, and reliability, and has been used in over 100 systematic reviews [30, 31]. Each criterion is assessed as either met (‘Yes’), not met (‘No’) or insufficient information for judgement (‘Can’t tell’). The publicly available MMAT Excel spreadsheet was used to record criterion assessment and reviewer comments [32]. The critical appraisal guidelines for assessing the quality and impact of user involvement in health research were also used [33]. These guidelines consider the quality of research relating to public involvement. Each article was assessed for compliance against the nine criteria. A scoring system of one point per criterion met was used to assess the overall quality of included articles. Common themes or areas of concern regarding quality were identified to support recommendations for improving reporting or rigour in future studies [34]. Quality assessment was completed by the first author and confirmed by a second author.
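The one-point-per-criterion scoring applied to the nine user involvement criteria can be sketched as follows. The rating labels (`"met"`, `"not met"`, `"unclear"`) and the function name `appraisal_score` are assumptions made for this example; the review does not state how individual ratings were recorded.

```python
N_CRITERIA = 9  # the user involvement appraisal guidelines comprise nine criteria


def appraisal_score(ratings):
    """Return one point for each criterion rated 'met'.

    `ratings` holds one label per criterion. The label strings are
    hypothetical; only 'met' earns a point, so 'not met' and
    'unclear' (insufficient detail) both score zero.
    """
    if len(ratings) != N_CRITERIA:
        raise ValueError(f"expected {N_CRITERIA} ratings, got {len(ratings)}")
    return sum(1 for rating in ratings if rating == "met")


# Hypothetical article: five criteria met, two not met, two unclear.
example = ["met"] * 5 + ["not met"] * 2 + ["unclear"] * 2
print(appraisal_score(example))  # 5
```

Treating insufficient detail the same as an unmet criterion is one possible convention; a reviewer could equally report the count of unclear criteria alongside the score.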

Data synthesis

Content analysis, as described by Hsieh and Shannon [35], was used for data synthesis. This inductive approach involved familiarisation with included articles, then exploration and defining of the emerging categories and themes relevant to the research questions. Tabulation and frequencies of the categories derived from the data enabled exploration of various factors, such as the type of outcomes described and the level they occurred at [7, 36] (Additional file 2).
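The tabulation step can be illustrated with a short sketch that counts coded categories and reports each as a count and a percentage. The `tabulate` helper and the example codes are hypothetical and do not reproduce the review's data.

```python
from collections import Counter


def tabulate(codes):
    """Map each category to (count, percentage of all coded studies)."""
    counts = Counter(codes)
    total = sum(counts.values())
    return {
        category: (n, round(100 * n / total, 1))
        for category, n in counts.most_common()
    }


# Hypothetical coding of six studies by highest-level outcome type.
coded_outcomes = [
    "discrete product",
    "service improvement",
    "service improvement",
    "system or policy change",
    "service improvement",
    "discrete product",
]
for category, (n, pct) in tabulate(coded_outcomes).items():
    print(f"{category}: n = {n}, {pct}%")
```

Deriving percentages from the same counts that appear in the table helps keep the denominator consistent across every reported frequency.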

Results

Included studies

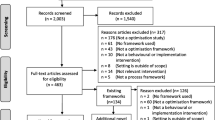

The study selection process is outlined in Fig. 1. A total of 93 articles were included in this review. There were 90 original public involvement studies and the outcomes for these are reported together in Additional file 2.

Study characteristics

Publication dates of included articles ranged from 1994 to 2019, with 61.4% of articles published in the last 5 years. Of the 93 included articles, 55 were qualitative (59.1%); 34 were mixed methods (36.6%); and 4 were quantitative (4.3%). The majority of articles originated in the United Kingdom (n = 49, 52.7%), followed by the United States of America (n = 12, 12.9%), Australia (n = 11, 11.8%), and Canada (n = 10, 10.8%). The remainder were from Norway (n = 4, 4.3%), Spain (n = 2, 2.1%), Sweden (n = 2, 2.1%), Denmark (n = 1, 1.1%), New Zealand (n = 1, 1.1%), and South Korea (n = 1, 1.1%). The most common service type was mental health (n = 23, 25.6%), followed by multiple/all (n = 11, 12.2%), chronic/complex needs (n = 7, 7.8%), oncology (n = 6, 6.7%) and women’s health (n = 5, 5.6%). Authors commonly reported that studies were conducted across multiple settings in a health service (n = 24, 26.7%), followed by outpatients (n = 18, 20.0%), community (n = 17, 18.9%), primary care (n = 13, 14.4%) and acute inpatients (n = 12, 13.3%).

The articles varied in the detail that was provided regarding definition, rationale, or context for the concept of public involvement. For example, formal definitions of key concepts were given in some articles (e.g., of co-production [37]), while others provided an overview of the history and usage of the involvement activity (e.g. experience-based co-design [38]), or presented arguments for how and why the public should be involved (e.g. [39]). There was no definition or conceptualisation of public involvement in almost half of articles (n = 43, 46.2%) [40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82]. Where reported, public participant numbers ranged from 4 [83] to an estimated 1200 [84], though no numbers were given in 13 articles (14.0%) [45, 47, 49, 58, 69, 72,73,74, 77, 85,86,87,88], and incomplete or estimated numbers were provided in a further 25 (26.9%) [40, 43, 44, 50, 51, 56, 57, 61,62,63, 66, 76, 82, 84, 89,90,91,92,93,94,95,96,97,98,99]. Some authors provided demographic data of public participants, such as age, gender, medical condition and/or race; however, there was no detail provided in more than half of articles (n = 52, 55.9%) [37, 38, 40, 41, 43,44,45,46,47,48,49,50,51, 55, 58, 59, 61, 63, 66, 68, 69, 71,72,73,74, 77, 79, 81,82,83,84,85,86,87,88, 93, 95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110]. There were no studies where IAP2 public involvement level was rated as ‘Inform’ or ‘Empower’. Of the 90 included studies, 37 (41.1%) were rated as ‘Consult’, 29 (32.2%) as ‘Involve’, and 24 (26.7%) as ‘Collaborate’ (Additional file 2).

Review question 1: what are the health service outcomes of public involvement in health service design?

The definition of an outcome for this review was intentionally broad, being any proposed or eventuating positive or negative change to the health service or product produced. Table 1 documents the range of health service outcomes that were reported. Outcomes have been grouped into categories of discrete products [130], improvements to health services, and system or policy level change/s. In some articles, multiple types of outcomes were described, and these were categorised as the highest-level outcome reported. Outcomes were produced at the service level (n = 46, 51.1%), across services (n = 16, 17.8%), and across organisations (n = 28, 31.1%). Full detail of the types and range of health service outcomes are outlined in Additional file 2.

Review question 2: have these outcomes been evaluated, and if so, how?

Evaluation of the reported outcome was outlined in fewer than half of the studies (n = 39, 43.3%) [38, 41,42,43,44,45, 47,48,49,50, 53, 54, 56,57,58,59, 61, 65, 66, 68,69,70,71, 80, 81, 83,84,85, 87, 92, 93, 99, 102, 105, 107, 109, 110, 123, 131] (Additional file 2). Some evaluations were reported additionally [59, 70, 107] or exclusively [50, 53, 61, 66, 71, 76, 81, 83, 85, 102, 123, 131] in separate publications. We found examples of mixed methods (n = 21, 53.8% of projects which completed evaluation), quantitative (n = 10, 25.6%), and qualitative outcome evaluations (n = 8, 20.5%) (Additional file 2).

Evaluation method

We observed some trends regarding type of outcome and evaluation method chosen. Studies that resulted in discrete products (e.g., information leaflets, decision tools) were typically evaluated using pre-post measures of knowledge and/or health literacy [48, 50, 61]. For outcomes that included apps and e-health interventions, usability [42, 80, 132,133,134], acceptance [45], feasibility [57, 135,136,137], and perceived confidence in self-management [102] were measured. Studies evaluated over longer periods explored health outcomes using, for example, laboratory tests, neonatal outcomes, and body mass index, either compared with values before the new service or intervention was introduced or in trials that compared an intervention to a control group.

Qualitative evaluation

Qualitative evaluation via participant feedback was common. Data were collected through interviews [38, 42, 44, 45, 58, 59, 62, 65, 72, 80, 81, 84, 87, 93, 100, 105, 109, 123, 138], focus groups [44, 45, 62, 70, 136], questionnaires or surveys [42, 45, 48, 59, 62, 66, 84, 100], evaluation forms [92], online collection of comments [70], and a yarning circle, “…a culturally-appropriate form of group discussion…” ([93], p.5). In one study, it was noted that “…researchers spoke with consumers and other advocates…to elicit their opinions about how the public mental health system has changed” ([49], p.46). Stakeholder views were rarely measured using standardized tools, with the exception of the study by Cook et al. [85], which used the Client Services Questionnaire in a subsequent randomized controlled trial [139].

Quantitative evaluation

Data on indicators such as service usage, treatment uptake, client/patient attendance, and morbidity were frequently reported [47,48,49, 54, 65, 68, 69, 85, 92, 93, 99, 102]. Evaluations of feasibility, usability, and user acceptance were common in studies designing e-health products [42, 45, 57, 135,136,137]. Pre-existing validated questionnaires or instruments to evaluate outcomes were used in eight studies [44, 50, 65, 72, 139,140,141]. These evaluated health literacy [44], disease severity [65], disease-related stress [140, 142], quality of life [59], life satisfaction [59], self-efficacy [72, 140], perceptions of care and support [139, 140, 143], quality of mobile health apps [137], goals of care [142], and various other cognitive, emotional, behavioural, social or attitudinal elements of service providers and/or users [59, 72, 139, 140]. These were usually self-report instruments used as pre- and post-intervention comparisons with statistical analysis. A number of other authors developed their own questionnaires for pre-post comparisons or post-implementation evaluations.

Timing of evaluation

There was a paucity of longitudinal designs to determine what outcomes are achieved and sustained over the longer term [5]. Only a small number of authors evaluated outcomes over a longer period, for example, at 1 year [65], 2 years [41, 144], 3 years [69], and up to 6 years later [145]. It was sometimes difficult to determine at what point evaluation occurred, with authors typically reporting the length of the project overall but not necessarily when evaluations occurred.

Impact of public involvement on outcomes

Only one study was found which evaluated or measured the influence of public involvement on outcomes, that is, how the new product or service designs were different due to public involvement. Valaitis et al. [110] compared the ideas generated by public participants versus researchers and found nearly half (44.8%) of the ideas generated were novel, feasible, and could be integrated in some way. Some authors commented on the value of public involvement, but these claims were not supported by objective evaluation. For example, Ruland et al. ([123], p.628-9) developed a software program to help children with cancer to communicate their symptoms, and noted the children involved in the design “…had many excellent and creative suggestions that the design team would not have thought of alone”. Similar observations were reported in three other studies [100, 107, 115].

Economic evaluations

Cost-benefit analyses were not reported in any studies, and economic evaluations were scarce. Locock et al. [107] evaluated the full costs of conducting an accelerated version of an experience-based co-design project compared to the standard method. In four studies, authors reported cost savings as a result of introducing a new model of care [41, 59, 85]. Airoldi [41] reported a 15% reduction in service costs. Forchuk et al. [59] reported an annual cost saving of $500,000 CAD for a new model of care. Cook et al. [139] determined a new model was budget neutral compared to a previous model but resulted in better outcomes and satisfaction for clients.

Quality assessment

The MMAT quality assessment is shown in Additional file 3: Table S1. Only seven articles satisfied the first screening question: “Are there clear research questions?” ([146], p.2). Six were qualitative [84, 90, 100, 107, 111, 125] and generally demonstrated appropriateness of qualitative approach, data collection, analysis and interpretation. One was a non-randomized study [47], and demonstrated appropriate representation of the target population, measurements, and outcome data, accounted for confounders, and administered the intervention as intended.

The application of the critical appraisal guidelines for assessing the quality and impact of user involvement in health research [33] is shown in Additional file 3: Table S2. In many cases, there was insufficient detail reported to determine whether the criteria were met. The authors of the articles generally provided adequate detail about the rationale for, and level of, user involvement, and descriptions indicated ‘added-value’ of user involvement. However, these descriptions were usually reflective in nature or based on anecdotal stakeholder feedback rather than pre-planned evaluation or measurement. The articles were of lower quality for other criteria, indicating a lack of detail about recruitment strategies, user training, and methodological and ethical considerations. There was also limited detail regarding attempts to evaluate the user involvement component or involve users in the dissemination of findings.

Discussion

This is the first systematic review to identify and synthesise what is known about health service outcomes of public involvement and how these outcomes were evaluated. The review addresses a major gap in current knowledge and reinforces the critical need to build a broader evidence base on what public involvement achieves and how best to enact it [10]. Interest in public involvement in health service design is rapidly increasing. In this review, over 60% of included studies were published in the last 5 years. Public involvement in health services is seen by some as a democratic and ethical right, with evaluation of outcomes not a priority [147]. However, public involvement in health service design is resource intensive [12], and participants expect outcomes from their contributions [14, 16].

Robust evaluation is critical to build an evidence base that informs whether and how public involvement practices are linked to health service outcomes. Without robust evaluation, there is a risk that public involvement is simply a box-ticking exercise to comply with policy and accreditation standards [17, 18]. Evaluation informs what involvement methods are most effective [148] and encourages organisations to focus on the goals for public involvement and whether these have been achieved [149].

Despite the need for robust evaluation, a major finding of this review is that evaluating health service outcomes of public involvement is challenging [10, 150]. Authors commonly reported outputs (what results immediately from the activity) rather than outcomes (the result of the change) [151]. The majority of authors reported ‘added-value’ of public involvement (as per criterion 8 of the critical appraisal guidelines by Wright et al. [33]) and wide-ranging outcomes were clustered into categories of discrete products, improvement to health services, and system or policy level change/s. However, outcomes were evaluated in less than half of all studies.

There is a need for researchers to ensure that well-designed and robust evaluations are included in study designs a priori [152]. However, it is acknowledged that this can be challenging when impacts and outcomes cannot always be predetermined to guide appropriate evaluation methods. We encourage researchers to design broad evaluations and to be flexible enough to incorporate different methods and approaches in their design as their study develops. Russell, Fudge and Greenhalgh [153] reinforce the need for broad evaluation methods, as narrow evaluation often fails to capture potential negative impacts and other more complex and longer-term outcomes, such as the impact on health service culture.

Evaluation should be linked to the study design and rationale for public involvement. For example, it is argued that public involvement can improve patient experience or satisfaction with services, and that potentially even “small-scale” changes can make a significant difference for patients [100]. Pre- and post- measures of these variables could be more frequently utilised in future evaluation studies to determine whether this is the case.

Given a rationale for public involvement in health service design is often accountability of resources [3, 14], or more efficient services [154, 155], it was surprising that few authors evaluated cost savings, and none conducted cost-benefit analyses.

In this review, there was a paucity of longitudinal studies that reported the sustainability of outcomes over time. Our findings aligned closely with what Russell, Fudge and Greenhalgh [153] identified as a major risk: a propensity to focus on reporting outcomes that are more easily measured, short term and positive.

Staley [13] argues that public involvement is context-dependent and that evaluating outcomes of specific projects provides limited insight into broader questions about when and how involvement makes a difference or is worth doing. In this review, the majority of studies were conducted in single study sites, and there was a lack of consistency in how outcomes were evaluated. Although there does not yet appear to be a gold standard tool to measure health service outcomes from public involvement [20], tools do exist and have increased in number in the last decade [9]. It is not clear why these tools are not being widely utilised. Further work is needed to understand why this is the case, and to develop, test, and improve evaluation tools and measures. Awareness of these tools may be aided by such resources as the online toolkit produced by the Canadian organisation, Centre of Excellence on Partnership with Patients and the Public (CEPPP) [156].

We note that there are studies currently underway that appear to be well designed. The study protocol published by Kjellström et al. [157] may provide more evidence of health service outcomes and evaluation. The aim of their longitudinal, mixed-methods research is “…to explore, enhance and measure the value of co-production for improving the health and well-being of citizens” ([157], p.3), and one objective is to develop and test measures of co-production processes and outcomes. There is potential for national and transnational evaluations across services using consistent evaluation methods, and these could include randomised controlled trials. Large, cross-country collaborations could result in the refinement or development of international tools for high quality transnational studies.

The characteristics of studies included in this review suggest a need for published evidence across a broader range of settings. Over half of the studies in this review were from the United Kingdom (UK). This may reflect UK history as one of the first countries to establish a welfare state following the Second World War [158], and later, the influence of large government funding into public involvement programmes (e.g. INVOLVE) as part of neoliberal agendas. Major UK health research funding bodies require public involvement, with a resultant flow-on to academic publications [159]. More Australian studies might have been expected, given public involvement in the design of healthcare is mandated for health services via the National Safety and Quality Health Services Standards [155]. However, while the standards require evidence of consumer involvement for health service accreditation, there is no requirement for services to publish their activity. Additionally, the emphasis on public involvement in nationally competitive funding calls is only relatively recent.

Mental health service types made up over a quarter of the studies. This is not unexpected due to the long history of mental health service users driving health service reform, beginning with the psychiatric survivors’ movement in the 1970s [91]. Consumer involvement in all aspects of mental health service design and delivery has been enshrined in Australia’s National Mental Health Plans since 1992 [160]. There is clearly a need to broaden study context and focus.

There must be a greater focus on quality and reporting in studies of public involvement. Few authors in this review provided adequate detail for rigorous quality appraisal. Authors should report greater detail about the definitions and conceptual underpinnings of public involvement and their study methods. Terminology has both conceptual and pragmatic implications and is necessary for the reader to understand the background and purpose of the study [161]. Without clarity of who is being involved, why, and how, it is hard to see the link between the public involvement element of the activity and any outcome (expected or unexpected) that is achieved [162]. Reporting guidelines for public involvement do exist and should be utilised. For example, Staniszewska et al. [163] developed a reporting checklist, the Guidance for Reporting Involvement of Patients and the Public (GRIPP, later updated as GRIPP2).

This review highlights that health service outcomes are sometimes published separately to the public involvement activity. Donetto, Tsianakas and Robert [12] found the majority of experience-based co-design projects included outcome evaluations, but these were often shared within the health organisation or at conferences rather than in academic peer-reviewed publications. In order to build a solid evidence base in the field, the findings of future studies must be disseminated in a way that is readily available, such as publishing in open access journals, publishing outcome evaluations with public involvement activity descriptions, and via regular updates of systematic reviews.

Limitations

This was the first review of its type to explore health service outcomes from public involvement and how these outcomes have been evaluated. It was important to capture all study types across a large body of literature on public involvement from multiple disciplines spanning decades. We consulted with a university librarian for advice on the search strategy and completed test searches of the primary databases in order to maximise the number of appropriate studies located. However, the different terminology and methodology in public involvement research has resulted in a large number of titles to screen and studies to review. Thorough cross-checking at all steps by a second reviewer was used to mitigate the potential for human error that is possible with such a large review. Future reviews could consider tighter refinement of terms as the quality of literature allows.

There is a level of subjectivity in applying quality appraisal tools and in assigning a level of public participation according to the IAP2 spectrum. Bias was reduced by using a second reviewer and by resolving disagreements by consensus.

Finally, the continuous cycle of research and publication will inevitably have affected the results of this review. This review has shown a marked increase in studies in the last 5 years, and this growth is expected to continue. Because this is an evolving field of research, systematic reviews of the evidence should be updated regularly to capture new and relevant findings.

Conclusion

Public involvement in health service design requires considerable investment of resources, including time and energy from the public. It is essential that empirical evidence demonstrates how public involvement improves health services and represents a worthwhile use of resources. While this systematic review demonstrates a range of health service outcomes arising from public involvement in health service design, these are predominantly known through descriptive reporting of immediate or shorter-term outcomes. The studies reviewed lacked consistency and varied considerably in quality. Formal evaluation of longer-term outcomes with objective measures is lacking, and there is an absence of large, high-quality transnational studies. Further research is required to understand the issues and challenges researchers face in evaluating impacts and how evaluation could be improved. There is a critical need to improve the design and quality of studies. Longitudinal study designs that incorporate economic analyses are required. There must be ongoing development and use of valid and appropriate evaluation tools and reporting guidelines.

Availability of data and materials

Articles included in the analysis are cited in the reference list.

Abbreviations

- CAD: Canadian dollar
- CEPPP: Centre of Excellence on Partnership with Patients and the Public
- GRIPP/GRIPP2: Guidance for Reporting Involvement of Patients and the Public
- IAP2: International Association for Public Participation
- MMAT: Mixed Methods Appraisal Tool
- NHS: National Health Service (UK)
- NICU: Neonatal Intensive Care Unit
- OECD: Organisation for Economic Co-operation and Development
- UK: United Kingdom

References

Preston R, Waugh H, Larkins S, Taylor J. Community participation in rural primary health care: intervention or approach? Aust J Prim Health. 2010;16(1):4–16. https://doi.org/10.1071/PY09053.

Nilsen ES, Myrhaug HT, Johansen M, Oliver S, Oxman AD. Methods of consumer involvement in developing healthcare policy and research, clinical practice guidelines and patient information material. Cochrane Database Syst Rev. 2006;3(3):1–36.

Fredriksson M, Tritter JQ. Disentangling patient and public involvement in healthcare decisions: why the difference matters. Sociol Health Illn. 2017;39(1):95–111. https://doi.org/10.1111/1467-9566.12483.

Burton P. Conceptual, theoretical and practical issues in measuring the benefits of public participation. Evaluation. 2009;15(3):263–84. https://doi.org/10.1177/1356389009105881.

Kenny A, Farmer J, Dickson-Swift V, Hyett N. Community participation for rural health: a review of challenges. Health Expect. 2015;18(6):1906–17. https://doi.org/10.1111/hex.12314.

Florin D, Dixon J. Public involvement in health care. BMJ. 2004;328:159–61.

Crawford MJ, Rutter D, Manley C, Weaver T, Bhui K, Fulop N, et al. Systematic review of involving patients in the planning and development of health care. Br Med J. 2002;325(7375):1–5.

Madden M, Speed E. Beware Zombies and Unicorns: toward critical patient and public involvement in health research in a neoliberal context. Front Sociol. 2017;2:1–6.

Boivin A, L'Espérance A, Gauvin F-P, Dumez V, Macaulay AC, Lehoux P, et al. Patient and public engagement in research and health system decision making: a systematic review of evaluation tools. Health Expect. 2018;21(6):1075–84. https://doi.org/10.1111/hex.12804.

Staniszewska S, Adebajo A, Barber R, Beresford P, Brady L, Brett J, et al. Developing the evidence base of patient and public involvement in health and social care research: the case for measuring impact. Int J Consum Stud. 2011;35(6):628–32. https://doi.org/10.1111/j.1470-6431.2011.01020.x.

Gregory J. Engaging consumers in discussion about Australian health policy: key themes emerging from the AIHPS study. Melbourne: Australian Institute of Health Policy Studies; 2008.

Donetto S, Tsianakas V, Robert G. Using experience-based co-design (EBCD) to improve the quality of healthcare: mapping where we are now and establishing future directions. London: King’s College London; 2014.

Staley K. ‘Is it worth doing?’ Measuring the impact of patient and public involvement in research. Res Involv Engagem. 2015;1(1):6.

Abelson J, Gauvin F-P. Assessing the impacts of public participation: concepts, evidence and policy implications. Centre for Health Economics and Policy Analysis Working Paper Series 2008-01. Hamilton, Canada: Centre for Health Economics and Policy Analysis (CHEPA), McMaster University; 2008.

Bowen S, McSeveny K, Lockley E, Wolstenholme D, Cobb M, Dearden A. How was it for you? Experiences of participatory design in the UK health service. CoDesign. 2013;9(4):230–46. https://doi.org/10.1080/15710882.2013.846384.

Crocker JC, Boylan AM, Bostock J, Locock L. Is it worth it? Patient and public views on the impact of their involvement in health research and its assessment: a UK-based qualitative interview study. Health Expect. 2017;20(3):519–28. https://doi.org/10.1111/hex.12479.

Hyett N, Kenny A, Dickson-Swift V, Farmer J, Boxall A. How can rural health be improved through community participation. Deeble Institute Issues Brief. 2014;2:1–20.

Farmer J, Taylor J, Stewart E, Kenny A. Citizen participation in health services co-production: a roadmap for navigating participation types and outcomes. Aust J Prim Health. 2017;23(6):509–15. https://doi.org/10.1071/PY16133.

Phillips NM, Street M, Haesler E. A systematic review of reliable and valid tools for the measurement of patient participation in healthcare. BMJ Quality Safety. 2015;25(2):110–7. https://doi.org/10.1136/bmjqs-2015-004357.

Mockford C, Staniszewska S, Griffiths F, Herron-Marx S. The impact of patient and public involvement on UK NHS health care: a systematic review. Int J Qual Health Care. 2011;24(1):28–38. https://doi.org/10.1093/intqhc/mzr066.

Clarke D, Jones F, Harris R, Robert G. What outcomes are associated with developing and implementing co-produced interventions in acute healthcare settings? A rapid evidence synthesis. BMJ Open. 2017;7:e014650.

Gallivan J, Kovacs Burns K, Bellows M, Eigenseher C. The many faces of patient engagement. J Particip Med. 2012;4:e32.

Ocloo J, Garfield S, Dawson S, Dean Franklin B. Exploring the theory, barriers and enablers for patient and public involvement across health, social care and patient safety: a protocol for a systematic review of reviews. BMJ Open. 2017;7:e018426.

Privacy Act. (Cth) [Internet]. Canberra: The Office of Parliamentary Counsel. 1988. [cited 2019 June 25]. Available from: https://www.legislation.gov.au/Details/C2020C00025.

Fry KR. Why Hospitals Need Service Design. In: Pfannstiel MA, Rasche C, editors. Service Design and Service Thinking in Healthcare and Hospital Management: Theory, Concepts, Practice. Cham: Springer International Publishing; 2019. p. 377–99.

Members and Partners [http://www.oecd.org/about/membersandpartners/]. Accessed 7 Feb 2018.

Covidence systematic review software. Melbourne, Australia: Veritas Health Innovation; 2018.

IAP2 Public participation spectrum [https://www.iap2.org.au/Resources/IAP2-Published-Resources]. Accessed 3 Feb 2018.

Hong QN, Pluye P, Fabregues S, Bartlett G, Boardman F, Cargo M, Dagenais P, Gagnon M-P, Griffiths F, Nicolau B, et al. Mixed methods appraisal tool (MMAT), version 2018. Canadian Intellectual Property Office, Industry Canada; 2018.

Souto RQ, Khanassov V, Hong QN, Bush PL, Vedel I, Pluye P. Systematic mixed studies reviews: updating results on the reliability and efficiency of the mixed methods appraisal tool. Int J Nurs Stud. 2015;52(1):500–1. https://doi.org/10.1016/j.ijnurstu.2014.08.010.

Pace R, Pluye P, Bartlett G, Macaulay AC, Salsberg J, Jagosh J, et al. Testing the reliability and efficiency of the pilot mixed methods appraisal tool (MMAT) for systematic mixed studies review. Int J Nurs Stud. 2012;49(1):47–53. https://doi.org/10.1016/j.ijnurstu.2011.07.002.

Mixed Methods Appraisal Tool public wiki [http://mixedmethodsappraisaltoolpublic.pbworks.com/w/page/24607821/FrontPage]. Accessed 4 Oct 2018.

Wright D, Foster C, Amir Z, Elliott J, Wilson R. Critical appraisal guidelines for assessing the quality and impact of user involvement in research. Health Expect. 2010;13(4):359–68. https://doi.org/10.1111/j.1369-7625.2010.00607.x.

Rieger KL, West CH, Kenny A, Chooniedass R, Demczuk L, Mitchell KM, et al. Digital storytelling as a method in health research: a systematic review protocol. Syst Reviews. 2018;7(1):41–7. https://doi.org/10.1186/s13643-018-0704-y.

Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–88. https://doi.org/10.1177/1049732305276687.

Popay J, Roberts H, Sowden A, Petticrew M, Arai L, Rodgers M, Britten N, Roen K, Duffy S. Guidance on the conduct of narrative synthesis in systematic reviews: A product from the ESRC methods programme. Lancaster: Institute for Health Research, Lancaster University; 2006. p. 1–92.

Kidd S, Kenny A, McKinstry C. Exploring the meaning of recovery-oriented care: an action-research study. Int J Ment Health Nurs. 2015;24(1):38–48. https://doi.org/10.1111/inm.12095.

Piper D, Iedema R, Gray J, Verma R, Holmes L, Manning N. Utilizing experience-based co-design to improve the experience of patients accessing emergency departments in New South Wales public hospitals: an evaluation study. Health Serv Manag Res. 2012;25(4):162–72. https://doi.org/10.1177/0951484812474247.

Irving A, Turner J, Marsh M, Broadway-Parkinson A, Fall D, Coster J, et al. A coproduced patient and public event: an approach to developing and prioritizing ambulance performance measures. Health Expect. 2018;21(1):230–8. https://doi.org/10.1111/hex.12606.

Adamou M, Graham K, MacKeith J, Burns S, Emerson L-M. Advancing services for adult ADHD: the development of the ADHD Star as a framework for multidisciplinary interventions. BMC Health Serv Res. 2016;16.

Airoldi M. Disinvestments in practice: overcoming resistance to change through a sociotechnical approach with local stakeholders. J Health Polit Policy Law. 2013;38(6):1149–71. https://doi.org/10.1215/03616878-2373175.

Baggott C, Baird J, Hinds P, Ruland CM, Miaskowski C. Evaluation of Sisom: a computer-based animated tool to elicit symptoms and psychosocial concerns from children with cancer. Eur J Oncol Nurs. 2015;19(4):359–69. https://doi.org/10.1016/j.ejon.2015.01.006.

Bauer AM, Hodsdon S, Bechtel JM, Fortney JC. Applying the principles for digital development: case study of a smartphone app to support collaborative Care for Rural Patients with Posttraumatic Stress Disorder or bipolar disorder. J Med Internet Res. 2018;20(6):e10048. https://doi.org/10.2196/10048.

Beauchamp A, Batterham RW, Dodson S, Astbury B, Elsworth GR, McPhee C, et al. Systematic development and implementation of interventions to OPtimise Health Literacy and Access (Ophelia). BMC Public Health. 2017;17:1–18.

Blanco T, Casas R, Marco A, Martinez I. Micro ad-hoc Health Social Networks (uHSN). Design and evaluation of a social-based solution for patient support. J Biomed Inform. 2019;89:68–80. https://doi.org/10.1016/j.jbi.2018.11.009.

Burbach FR, Amani SK. Appreciative enquiry peer review improving quality of services. Int J Health Care Qual Assur. 2019;32(5):857–66. https://doi.org/10.1108/IJHCQA-01-2018-0015.

Chapman H, Farndon L, Matthews R, Stephenson J. Okay to stay? A new plan to help people with long-term conditions remain in their own homes. Prim Health Care Res Dev. 2018;20:1–6.

Cheng D, Patel P. Optimizing Women’s health in a title X family planning program, Baltimore County, Maryland, 2001-2004. Prev Chronic Dis. 2011;8(6):A126.

Cook JA, Ruggiero K, Shore S, Daggett P, Butler SB. Public-academic collaboration in the application of evidence-based practice in Texas mental health system redesign. Int J Ment Health. 2007;36(2):36–49.

Coylewright M, Shepel K, LeBlanc A, Pencille L, Hess E, Shah N, et al. Shared decision making in patients with stable coronary artery disease: PCI choice. PLoS One. 2012;7(11):e49827. https://doi.org/10.1371/journal.pone.0049827.

Cramp G. Development of an integrated and sustainable rural service for people with diabetes in the Scottish highlands. Rural Remote Health. 2006;6(1):422.

Dinniss S, Roberts G, Hubbard C, Hounsell J, Webb R. User-led assessment of a recovery service using DREEM. Psychiatr Bull. 2007;31(4):124–7. https://doi.org/10.1192/pb.bp.106.010025.

Doherty K, Barry M, Marcano-Belisario J, Arnaud B, Morrison C, Car J, et al. A Mobile app for the self-report of psychological well-being during pregnancy (BrightSelf): qualitative design study. JMIR Ment Health. 2018;5(4):e10007. https://doi.org/10.2196/10007.

Dorrington MS, Herceg A, Douglas K, Tongs J, Bookallil M. Increasing pap smear rates at an urban Aboriginal community controlled health service through translational research and continuous quality improvement. Aust J Prim Health. 2015;21(4):417–22. https://doi.org/10.1071/PY14088.

Douglas CH, Douglas MR. Patient-centred improvements in health-care built environments: perspectives and design indicators. Health Expect. 2005;8(3):264–76. https://doi.org/10.1111/j.1369-7625.2005.00336.x.

Doyle J, Atkinson-Briggs S, Atkinson P, Firebrace B, Calleja J, Reilly R, et al. A prospective evaluation of first people’s health promotion program design in the goulburn-murray rivers region. BMC Health Serv Res. 2016;16:645.

Ennis L, Robotham D, Denis M, Pandit N, Newton D, Rose D, et al. Collaborative development of an electronic personal health record for people with severe and enduring mental health problems. BMC Psychiatry. 2014;14(1):305. https://doi.org/10.1186/s12888-014-0305-9.

Farr M, Pithara C, Sullivan S, Edwards H, Hall W, Gadd C, et al. Pilot implementation of co-designed software for co-production in mental health care planning: a qualitative evaluation of staff perspectives. J Ment Health. 2019;28(5):495–504. https://doi.org/10.1080/09638237.2019.1608925.

Forchuk C, Schofield R, Martin M-L, Sircelj M, Woodcox V, Jewell J, et al. Bridging the discharge process: staff and client experiences over time. J Am Psychiatr Nurses Assoc. 1998;4(4):128–33. https://doi.org/10.1177/107839039800400404.

Gardener A, Ewing G, Farquhar M. Enabling patients with advanced chronic obstructive pulmonary disease to identify and express their support needs to health care professionals: a qualitative study to develop a tool. Palliat Med. 2019;33(6):663–75. https://doi.org/10.1177/0269216319833559.

Hahn-Goldberg S, Okrainec K, Huynh T, Zahr N, Abrams H. Co-creating patient-oriented discharge instructions with patients, caregivers, and healthcare providers. J Hosp Med. 2015;10(12):804–7.

Hahn-Goldberg S, Damba C, Solomon F, Okrainec K, Abrams H, Huynh T. Using co-design methods to create a patient-oriented discharge summary. J Clin Outcomes Manag. 2016;23(7):321–8.

Han N, Han SH, Chu H, Kim J, Rhew KY, Yoon JH, et al. Service design oriented multidisciplinary collaborative team care service model development for resolving drug related problems. PLoS One. 2018;13(9).

Hickman IJ, Coran D, Wallen MP, Kelly J, Barnett A, Gallegos D, et al. 'Back to Life'-using knowledge exchange processes to enhance lifestyle interventions for liver transplant recipients: a qualitative study. Nutr Diet. 2019;76(4):399–406. https://doi.org/10.1111/1747-0080.12548.

Holloway M. Traversing the network: a user-led care pathway approach to the management of Parkinson's disease in the community. Health Soc Care Community. 2006;14(1):63–73. https://doi.org/10.1111/j.1365-2524.2005.00600.x.

Isenberg SR, Crossnohere NL, Patel MI, Conca-Cheng A, Bridges JFP, Swoboda SM, et al. An advance care plan decision support video before major surgery: a patient- and family-centred approach. BMJ Support Palliat Care. 2018;8(2):229–36. https://doi.org/10.1136/bmjspcare-2017-001449.

Kennedy A, Rogers A, Blickem C, Daker-White G, Bowen R. Developing cartoons for long-term condition self-management information. BMC Health Serv Res. 2014;14(1):60. https://doi.org/10.1186/1472-6963-14-60.

Kilander H, Brynhildsen J, Alehagen SW, Fagerkrantz A, Thor J. Collaboratively seeking to improve contraceptive counselling at the time of an abortion: a case study of quality improvement efforts in Sweden. BMJ Sex Reprod Health. 2019;45(3):190–9. https://doi.org/10.1136/bmjsrh-2018-200299.

Kildea S, Hickey S, Nelson C, Currie J, Carson A, Reynolds M, et al. Birthing on country (in our community): a case study of engaging stakeholders and developing a best-practice indigenous maternity service in an urban setting. Aust Health Rev. 2018;42(2):230–8. https://doi.org/10.1071/AH16218.

Krist AH, Peele E, Woolf SH, Rothemich SF, Loomis JF, Longo DR, et al. Designing a patient-centered personal health record to promote preventive care. BMC Med Inform Decis Mak. 2011;11:1–11.

Lo C, Zimbudzi E, Teede H, Cass A, Fulcher G, Gallagher M, et al. Models of care for co-morbid diabetes and chronic kidney disease. Nephrology. 2018;23(8):711–7. https://doi.org/10.1111/nep.13232.

Manning JC, Carter T, Latif A, Horsley A, Cooper J, Armstrong M, et al. ‘Our Care through Our Eyes’. Impact of a co-produced digital educational programme on nurses’ knowledge, confidence and attitudes in providing care for children and young people who have self-harmed: a mixed-methods study in the UK. BMJ Open. 2017;7:e014750.

Marshall M, Noble J, Davies H, Waterman H, Walshe K, Sheaff R, et al. Development of an information source for patients and the public about general practice services: an action research study. Health Expect. 2006;9(3):265–74. https://doi.org/10.1111/j.1369-7625.2006.00394.x.

McWilliams A, Reeves K, Shade L, Burton E, Tapp H, Courtlandt C, et al. Patient and family engagement in the Design of a Mobile Health Solution for pediatric asthma: development and feasibility study. JMIR Mhealth Uhealth. 2018;6(3):e68. https://doi.org/10.2196/mhealth.8849.

Olding M, Hayashi K, Pearce L, Bingham B, Buchholz M, Gregg D, et al. Developing a patient-reported experience questionnaire with and for people who use drugs: a community engagement process in Vancouver's downtown eastside. Int J Drug Policy. 2018;59:16–23. https://doi.org/10.1016/j.drugpo.2018.06.003.

Probst MA, Hess EP, Breslin M, Frosch DL, Sun BC, Langan M-N, et al. Development of a patient decision aid for Syncope in the emergency department: the SynDA tool. Acad Emerg Med. 2018;25(4):425–33. https://doi.org/10.1111/acem.13373.

Reaume-Zimmer P, Chandrasena R, Malla A, Joober R, Boksa P, Shah JL, et al. Transforming youth mental health care in a semi-urban and rural region of Canada: a service description of ACCESS open minds Chatham-Kent. Early Interv Psychiatry. 2019;13(Suppl 1):48–55. https://doi.org/10.1111/eip.12818.

Robinson LJ, Stephens NM, Wilson S, Graham L, Hackett KL. Conceptualizing the key components of rehabilitation following major musculoskeletal trauma: a mixed methods service evaluation. J Eval Clin Pract. 2020;26(5):1–12.

Taylor J, Coates E, Wessels B, Mountain G, Hawley MS. Implementing solutions to improve and expand telehealth adoption: participatory action research in four community healthcare settings. BMC Health Serv Res. 2015;15:1–11.

Tsimicalis A, Rennick J, Stinson J, May SL, Louli J, Choquette A, et al. Usability testing of an interactive communication tool to help children express their Cancer symptoms. J Pediatr Oncol Nurs. 2018;35(5):320–31. https://doi.org/10.1177/1043454218777728.

Woods L, Cummings E, Duff J, Walker K. Conceptual design and iterative development of a mHealth app by clinicians, patients and their families. Stud Health Technol Inform. 2018;252:170–5.

Yu CH, Ke C, Jovicic A, Hall S, Straus SE. Beyond pros and cons - developing a patient decision aid to cultivate dialog to build relationships: insights from a qualitative study and decision aid development. BMC Med Inform Decis Mak. 2019;19(1):186. https://doi.org/10.1186/s12911-019-0898-5.

Latif A, Carter T, Rychwalska-Brown L, Wharrad H, Manning J. Co-producing a digital educational programme for registered children’s nurses to improve care of children and young people admitted with self-harm. J Child Health Care. 2017;21(2):191–200.

Crowley P, Green J, Freake D, Drinkwater C. Primary care trusts involving the community: is community development the way forward? J Manag Med. 2002;16(4):311–22. https://doi.org/10.1108/02689230210445121.

Cook JA, Shore SE, Burke-Miller JK, Jonikas JA, Ferrara M, Colegrove S, et al. Participatory action research to establish self-directed care for mental health recovery in Texas. Psychiatr Rehabil J. 2010;34(2):137–44. https://doi.org/10.2975/34.2.2010.137.144.

Cotterell P, Sitzia J, Richardson A. Evaluating partnerships with cancer patients. Pract Nurs. 2004;15(9):430–5. https://doi.org/10.12968/pnur.2004.15.9.15950.

Diamond B, Parkin G, Morris K, Bettinis J, Bettesworth C. User involvement: substance or spin? J Ment Health. 2003;12(6):613–26. https://doi.org/10.1080/09638230310001627964.

Knight JA. Change management in cancer care: a one-stop gynaecology clinic. Br J Nurs. 2007;16(18):1122–6. https://doi.org/10.12968/bjon.2007.16.18.27505.

Calvillo-Arbizu J, Roa-Romero LM, Estudillo-Valderrama MA, Salgueira-Lazo M, Areste-Fosalba N, del-Castillo-Rodriguez NL, et al. User-centred design for developing e-health system for renal patients at home (AppNephro). Int J Med Inform. 2019;125:47–54. https://doi.org/10.1016/j.ijmedinf.2019.02.007.

Cooke M, Campbell M. Comparing patient and professional views of expected treatment outcomes for chronic obstructive pulmonary disease: a Delphi study identifies possibilities for change in service delivery in England, UK. J Clin Nurs. 2014;23(13–14):1990–2002. https://doi.org/10.1111/jocn.12459.

Cooper K, Gillmore C, Hogg L. Experience-based co-design in an adult psychological therapies service. J Ment Health. 2016;25(1):36–40. https://doi.org/10.3109/09638237.2015.1101423.

de Souza S, Galloway J, Simpson C, Chura R, Dobson J, Gullick NJ, et al. Patient involvement in rheumatology outpatient service design and delivery: a case study. Health Expect. 2017;20(3):508–18. https://doi.org/10.1111/hex.12478.

Durey A, McEvoy S, Swift-Otero V, Taylor K, Katzenellenbogen J, Bessarab D. Improving healthcare for Aboriginal Australians through effective engagement between community and health services. BMC Health Serv Res. 2016;16:1–13.

Hobson EV, Baird WO, Partridge R, Cooper CL, Mawson S, Quinn A, et al. The TiM system: developing a novel telehealth service to improve access to specialist care in motor neurone disease using user-centered design. Amyotrophic Lateral Sclerosis Frontotemporal Degeneration. 2018;19(5-6):1–11.

Kohler G, Sampalli T, Ryer A, Porter J, Wood L, Bedford L, et al. Bringing value-based perspectives to care: including patient and family members in decision-making processes. Int J Health Policy Manag. 2017;6(11):661–8. https://doi.org/10.15171/ijhpm.2017.27.

Larkin M, Boden ZV, Newton E. On the brink of genuinely collaborative care experience-based co-design in mental health. Qual Health Res. 2015;25(11):1463–76. https://doi.org/10.1177/1049732315576494.

Melnick ER, Hess EP, Guo G, Breslin M, Lopez K, Pavlo AJ, et al. Patient-centered decision support: formative usability evaluation of integrated clinical decision support with a patient decision aid for minor head injury in the emergency department. J Med Internet Res. 2017;19(5):1–12.

Pilgrim D, Waldron L. User involvement in mental health service development: how far can it go? J Ment Health. 1998;7(1):95–104.

Romm KL, Gardsjord ES, Gjermundsen K, Ulloa MA, Berentzen LC, Melle I. Designing easy access to care for first-episode psychosis in complex organizations. Early Interv Psychiatry. 2019;13(5):1276–82.

Boaz A, Robert G, Locock L, Sturmey G, Gager M, Vougioukalou S, et al. What patients do and their impact on implementation: an ethnographic study of participatory quality improvement projects in English acute hospitals. J Health Organ Manag. 2016;30(2):258–78. https://doi.org/10.1108/JHOM-02-2015-0027.

Boyd H, McKernon S, Mullin B, Old A. Improving healthcare through the use of co-design. N Z Med J. 2012;125(1357):76–87.

Castensøe-Seidenfaden P, Husted GR, Teilmann G, Hommel E, Olsen BS, Kensing F. Designing a Self-Management App for Young People With Type 1 Diabetes: Methodological Challenges, Experiences, and Recommendations. JMIR Mhealth Uhealth. 2017;5:e124.

Chappel D, Bailey J, Stacy R, Rodgers H, Thomson R. Implementation and evaluation of local-level priority setting for stroke. Public Health. 2001;115(1):21–9. https://doi.org/10.1016/S0033-3506(01)00409-7.

Collins R, Notley C, Clarke T, Wilson J, Fowler D. Participation in developing youth mental health services: “Cinderella service” to service re-design. J Public Ment Health. 2017;16(4):159–68. https://doi.org/10.1108/JPMH-04-2017-0016.

Dewar B, Mackay R, Smith S, Pullin S, Tocher R. Use of emotional touchpoints as a method of tapping into the experience of receiving compassionate care in a hospital setting. J Res Nurs. 2010;15(1):29–41. https://doi.org/10.1177/1744987109352932.

Fitzgerald MM, Kirk GD, Bristow CA. Description and evaluation of a serious game intervention to engage low secure service users with serious mental illness in the design and refurbishment of their environment. J Psychiatr Ment Health Nurs. 2011;18(4):316–22. https://doi.org/10.1111/j.1365-2850.2010.01668.x.

Locock L, Robert G, Boaz A, Vougioukalou S, Shuldham C, Fielden J, et al. Using a national archive of patient experience narratives to promote local patient-centered quality improvement: an ethnographic process evaluation of ‘accelerated’ experience-based co-design. J Health Serv Res Policy. 2014;19(4):200–7. https://doi.org/10.1177/1355819614531565.

McClelland GT, Fitzgerald M. A participatory mobile application (app) development project with mental health service users and clinicians. Health Educ J. 2018;77(7):815–27. https://doi.org/10.1177/0017896918773790.

Tsianakas V, Robert G, Maben J, Richardson A, Dale C, Wiseman T. Implementing patient-centred cancer care: using experience-based co-design to improve patient experience in breast and lung cancer services. Support Care Cancer. 2012;20(11):2639–47. https://doi.org/10.1007/s00520-012-1470-3.

Valaitis R, Longaphy J, Ploeg J, Agarwal G, Oliver D, Nair K, et al. Health TAPESTRY: co-designing interprofessional primary care programs for older adults using the persona-scenario method. BMC Fam Pract. 2019;20(1):122. https://doi.org/10.1186/s12875-019-1013-9.

Coad J, Flay J, Aspinall M, Bilverstone B, Coxhead E, Hones B. Evaluating the impact of involving young people in developing children’s services in an acute hospital trust. J Clin Nurs. 2008;17(23):3115–22. https://doi.org/10.1111/j.1365-2702.2008.02634.x.

Jackson AM. ‘Follow the fish’: involving young people in primary care in Midlothian. Health Expect. 2003;6(4):342–51. https://doi.org/10.1046/j.1369-7625.2003.00233.x.

Jones SP, Auton MF, Burton CR, Watkins CL. Engaging service users in the development of stroke services: an action research study. J Clin Nurs. 2008;17(10):1270–9. https://doi.org/10.1111/j.1365-2702.2007.02259.x.

Kenyon SL, Johns N, Duggal S, Hewston R, Gale N. Improving the care pathway for women who request Caesarean section: an experience-based co-design study. BMC Pregnancy Childbirth. 2016;16:1–13.

Outlaw P, Tripathi S, Baldwin J. Using patient experiences to develop services for chronic pain. Br J Pain. 2018;12(2):122–31. https://doi.org/10.1177/2049463718759782.

Powell J, Lovelock R, Bray J, Philp I. Involving consumers in assessing service quality: benefits of using a qualitative approach. Qual Health Care. 1994;3(4):199–202. https://doi.org/10.1136/qshc.3.4.199.

Thomson A, Rivas C, Giovannoni G. Multiple sclerosis outpatient future groups: improving the quality of participant interaction and ideation tools within service improvement activities. BMC Health Serv Res. 2015;15:1–11.

Csipke E, Papoulias C, Vitoratou S, Williams P, Rose D, Wykes T. Design in mind: eliciting service user and frontline staff perspectives on psychiatric ward design through participatory methods. J Ment Health. 2016;25(2):114–21. https://doi.org/10.3109/09638237.2016.1139061.

Edwards M, Lawson C, Rahman S, Conley K, Phillips H, Uings R. What does quality healthcare look like to adolescents and young adults? Ask the experts! Clin Med (Lond). 2016;16(2):146–51.

Borosund E, Mirkovic J, Clark MM, Ehlers SL, Andrykowski MA, Bergland A, et al. A stress management app intervention for Cancer survivors: design, development, and usability testing. JMIR Form Res. 2018;2(2):e19. https://doi.org/10.2196/formative.9954.

Das A, Svanæs D. Human-centred methods in the design of an e-health solution for patients undergoing weight loss treatment. Int J Med Inform. 2013;82(11):1075–91. https://doi.org/10.1016/j.ijmedinf.2013.06.008.

Lyles CR, Altschuler A, Chawla N, Kowalski C, McQuillan D, Bayliss E, et al. User-centered design of a tablet waiting room tool for complex patients to prioritize discussion topics for primary care visits. JMIR Mhealth Uhealth. 2016;4:e108.

Ruland CM, Starren J, Vatne TM. Participatory design with children in the development of a support system for patient-centered care in pediatric oncology. J Biomed Inform. 2008;41(4):624–35. https://doi.org/10.1016/j.jbi.2007.10.004.

Wärnestål P, Svedberg P, Lindberg S, Nygren JM. Effects of using child personas in the development of a digital peer support service for childhood cancer survivors. J Med Internet Res. 2017;19(5):e161. https://doi.org/10.2196/jmir.7175.

Jessup RL, Osborne RH, Buchbinder R, Beauchamp A. Using co-design to develop interventions to address health literacy needs in a hospitalised population. BMC Health Serv Res. 2018;18:1–13.

Cushen N, South J, Kruppa S. Patients as teachers: the patient's role in improving cancer services. Prof Nurse. 2004;19(7):395–9.

Meldrum J, Pringle A. Sex, lives and videotape. J R Soc Promot Heal. 2006;126(4):172–7. https://doi.org/10.1177/1466424006066284.

Castillo H. Social inclusion and personality disorder. Ment Health Soc Incl. 2013;17(3):147–55. https://doi.org/10.1108/MHSI-05-2013-0019.

Lopatina E, Miller JL, Teare SR, Marlett NJ, Patel J, Barber CEH, et al. The voice of patients in system redesign: a case study of redesigning a centralized system for intake of referrals from primary care to rheumatologists for patients with suspected rheumatoid arthritis. Health Expect. 2019;22(3):348–63. https://doi.org/10.1111/hex.12855.

Bombard Y, Baker GR, Orlando E, Fancott C, Bhatia P, Casalino S, et al. Engaging patients to improve quality of care: a systematic review. Implement Sci. 2018;13(1):1–22.

Owens C, Farrand P, Darvill R, Emmens T, Hewis E, Aitken P. Involving service users in intervention design: a participatory approach to developing a text-messaging intervention to reduce repetition of self-harm. Health Expect. 2011;14(3):285–95. https://doi.org/10.1111/j.1369-7625.2010.00623.x.

Tsimicalis A, Stone PW, Bakken S, Yoon S, Sands S, Porter R, et al. Usability testing of a computerized communication tool in a diverse urban pediatric population. Cancer Nurs. 2014;37(6):E25–34. https://doi.org/10.1097/NCC.0000000000000115.

Tsimicalis A, Le May S, Stinson J, Rennick J, Vachon M-F, Louli J, et al. Linguistic validation of an interactive communication tool to help French-speaking children express their cancer symptoms. J Pediatr Oncol Nurs. 2017;34(2):98–105. https://doi.org/10.1177/1043454216646532.

Arvidsson S, Gilljam B-M, Nygren J, Ruland CM, Nordby-Bøe T, Svedberg P. Redesign and validation of Sisom, an interactive assessment and communication tool for children with cancer. JMIR Mhealth Uhealth. 2016;4:e76.

Doherty K, Marcano-Belisario J, Cohn M, Mastellos N, Morrison C, Car J, et al. Engagement with mental health screening on mobile devices: results from an antenatal feasibility study. In: Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems; 2019. p. 1–15.

Owens C, Charles N. Implementation of a text-messaging intervention for adolescents who self-harm (TeenTEXT): a feasibility study using normalisation process theory. Child Adolesc Psychiatry Ment Health. 2016;10.

Woods LS, Duff J, Roehrer E, Walker K, Cummings E. Patients’ experiences of using a consumer mHealth app for self-management of heart failure: mixed-methods study. JMIR Hum Factors. 2019;6(2).

Husted GR, Weis J, Teilmann G, Castensøe-Seidenfaden P. Exploring the influence of a smartphone app (Young with Diabetes) on young people’s self-management: qualitative study. JMIR Mhealth Uhealth. 2018;6:e43.

Cook JA, Shore S, Burke-Miller JK, Jonikas JA, Hamilton M, Ruckdeschel B, et al. Mental health self-directed care financing: efficacy in improving outcomes and controlling costs for adults with serious mental illness. Psychiatr Serv. 2019;70(3):191–201. https://doi.org/10.1176/appi.ps.201800337.

Castensøe-Seidenfaden P, Husted GR, Jensen AK, Hommel E, Olsen B, Pedersen-Bjergaard U, et al. Testing a smartphone app (Young with Diabetes) to improve self-management of diabetes over 12 months: randomized controlled trial. JMIR Mhealth Uhealth. 2018;6:e141.

Forchuk C, Chan L, Schofield R, Martin M-L, Sircelj M, Woodcox V, et al. Bridging the discharge process. Can Nurse. 1998;94(3):22–6.

Aslakson RA, Isenberg SR, Crossnohere NL, Conca-Cheng AM, Moore M, Bhamidipati A, et al. Integrating advance care planning videos into surgical oncologic care: a randomized clinical trial. J Palliat Med. 2019;22(7):764–72.

Coylewright WM, Dick DS, Zmolek PB, Askelin MJ, Hawkins HE, Branda HM, et al. PCI choice decision aid for stable coronary artery disease: a randomized trial. Circ Cardiovasc Qual Outcomes. 2016;9(6):767–76.

D’Souza W, Te Karu H, Fox C, Harper M, Gemmell T, Ngatuere M, et al. Long-term reduction in asthma morbidity following an asthma self-management programme. Eur Respir J. 1998;11(3):611–6.

D'Souza W, Slater T, Fox C, Fox B, Te Karu H, Gemmell T, et al. Asthma morbidity 6 yrs after an effective asthma self-management programme in a Maori community. Eur Respir J. 2000;15(3):464–9. https://doi.org/10.1034/j.1399-3003.2000.15.07.x.

Hong QN, Pluye P. A conceptual framework for critical appraisal in systematic mixed studies reviews. J Mixed Methods Res. 2019;13(4):446–60.

Edelman N, Barron D. Evaluation of public involvement in research: time for a major re-think? J Health Serv Res Policy. 2016;21(3):209–11. https://doi.org/10.1177/1355819615612510.

Collins M, Long R, Page A, Popay J, Lobban F. Using the public involvement impact assessment framework to assess the impact of public involvement in a mental health research context: a reflective case study. Health Expect. 2018;21(6):950–63. https://doi.org/10.1111/hex.12688.

Abelson J, Humphrey A, Syrowatka A, Bidonde J, Judd M. Evaluating patient, family and public engagement in health services improvement and system redesign. Healthcare Quarterly. 2018;21(Special Issue):31–7. https://doi.org/10.12927/hcq.2018.25636.

Staley K, Buckland SA, Hayes H, Tarpey M. ‘The missing links’: understanding how context and mechanism influence the impact of public involvement in research. Health Expect. 2014;17(6):755–64. https://doi.org/10.1111/hex.12017.

Stretton A. Notes on project-related outputs, outcomes, and benefits realization in an organisational strategic management context. PM World J. 2020;IX(VIII):1–20.

Esmail L, Moore E, Rein A. Evaluating patient and stakeholder engagement in research: moving from theory to practice. J Comp Eff Res. 2015;4(2):133.

Russell J, Fudge N, Greenhalgh T. The impact of public involvement in health research: what are we measuring? Why are we measuring it? Should we stop measuring it? Res Involv Engagem. 2020;6(1):63. https://doi.org/10.1186/s40900-020-00239-w.

Janamian T, Crossland L, Wells L. On the road to value co-creation in health care: the role of consumers in defining the destination, planning the journey and sharing the drive. Med J Aust. 2016;204(7):S12–4.

Australian Commission on Safety and Quality in Health Care. National Safety and Quality Health Service Standards. 2nd ed. Sydney: ACSQHC; 2017. p. 1–82.

Patient and public engagement evaluation toolkit [https://ceppp.ca/en/our-projects/evaluation-toolkit/]. Accessed 8 Apr 2019.

Kjellström S, Areskoug-Josefsson K, Gäre BA, Andersson A-C, Ockander M, Käll J, et al. Exploring, measuring and enhancing the coproduction of health and well-being at the national, regional and local levels through comparative case studies in Sweden and England: the ‘Samskapa’ research programme protocol. BMJ Open. 2019;9:e029723.

Beresford P. Public participation in health and social care: exploring the co-production of knowledge. Front Sociol. 2019;3(41):1.

Rose D. Patient and public involvement in health research: ethical imperative and/or radical challenge? J Health Psychol. 2014;19(1):149–58. https://doi.org/10.1177/1359105313500249.

Kidd S. Recovery conversations: the centrality of consumer participation in developing and sustaining recovery-oriented public mental health services for people experiencing low prevalence disorders and enduring mental illness in the Loddon Mallee catchment in Victoria. Bundoora: La Trobe University; 2015.

Taylor J, Wilkinson D, Cheers B. Is it consumer or community participation? Examining the links between 'community' and 'participation'. Health Sociol Rev. 2006;15(1):38–47. https://doi.org/10.5172/hesr.2006.15.1.38.

Rifkin SB. Examining the links between community participation and health outcomes: a review of the literature. Health Policy Plan. 2014;29(Suppl 2):98–106.

Staniszewska S, Brett J, Simera I, Seers K, Mockford C, Goodlad S, et al. GRIPP2 reporting checklists: tools to improve reporting of patient and public involvement in research. Res Involv Engagem. 2017;3:1–11.

Acknowledgements

The authors acknowledge the assistance of Angela Johns-Hayden, Librarian, in refining the search strategy.

Funding

The review was unfunded.

Author information

Contributions

All listed authors significantly contributed to this project. Study protocol was developed by NL. NH and AK provided the study design expertise. All authors completed study selection. NL completed the data extraction, quality appraisal, and the initial manuscript draft. All authors contributed to, read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Search strategy example: MEDLINE database.

Additional file 2.

Characteristics of included studies, the outcomes and impacts reported, and how they were evaluated.

Additional file 3.

Quality appraisals of included studies.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Lloyd, N., Kenny, A. & Hyett, N. Evaluating health service outcomes of public involvement in health service design in high-income countries: a systematic review. BMC Health Serv Res 21, 364 (2021). https://doi.org/10.1186/s12913-021-06319-1

DOI: https://doi.org/10.1186/s12913-021-06319-1