Abstract

Background

Assessment of clinical variation has attracted increasing interest in health systems internationally due to growing awareness about better value and appropriate health care as a mechanism for enhancing efficient, effective and timely care. Feedback using administrative databases to provide benchmarking data has been utilised in several countries to explore clinical care variation and to enhance guideline adherent care. Whilst methods for detecting variation are well-established, methods for determining variation that is unwarranted and addressing this are strongly debated. This study aimed to synthesize published evidence of the use of feedback approaches to address unwarranted clinical variation (UCV).

Methods

A rapid review and narrative evidence synthesis was undertaken as a policy-focused review to understand how feedback approaches have been applied to address UCV specifically. Key words, synonyms and subject headings were used to search the major electronic databases Medline and PubMed between 2000 and 2018. Titles and abstracts of publications were screened by two reviewers and independently checked by a third reviewer. Full text articles were screened against the eligibility criteria. Key findings were extracted and integrated in a narrative synthesis.

Results

Feedback approaches that occurred over a duration of 1 month to 9 years to address clinical variation emerged from 27 publications with quantitative (20), theoretical/conceptual/descriptive work (4) and mixed or multi-method studies (3). Approaches ranged from presenting evidence to individuals, teams and organisations, to providing facilitated tailored feedback supported by a process of ongoing dialogue to enable change. Feedback approaches identified primarily focused on changing clinician decision-making and behaviour. Providing feedback to clinicians was identified, in a range of settings, as associated with changes in variation such as reducing overuse of tests and treatments, reducing variations in optimal patient clinical outcomes and increasing guideline or protocol adherence.

Conclusions

The review findings suggest value in the use of feedback approaches to respond to clinical variation and understand when action is warranted. Evaluation of the effectiveness of particular feedback approaches is now required to determine if there is an optimal approach to create change where needed.

Background

Assessment of clinical variation has attracted increasing interest in health systems internationally due to growing awareness about better value and appropriate health care as a mechanism for enhancing efficient, effective and timely care [1,2,3]. Countries including the United States of America (USA), Canada, Spain, United Kingdom (UK), Germany, the Netherlands, Norway, New Zealand and Australia have produced atlases of variation in health care to guide system and service improvements [4,5,6]. Through these atlases, substantial variations in the healthcare provided to patients have been identified across each country, with implications for patient outcomes [7]. Variations have been reported in a range of care areas including surgery for hysterectomy, cataract surgery, planned Caesarian section, arthroscopic surgery and potentially preventable hospitalisations for selected conditions [8, 9].

It is widely acknowledged that not all variation is unwarranted and that some variation may in fact be a marker of effective, patient-centred care [10]. Unwarranted clinical variation (UCV) describes “patient care that differs in ways that are not a direct and proportionate response to available evidence; or to the healthcare needs and informed choices of patients.” [7] Understanding variation and what is unwarranted has been identified as important in guiding value-based healthcare [8, 11]. Value-based healthcare has been conceptualised in the US context in terms of the ‘health outcome achieved per dollar spent,’ but more recently in the UK in terms of optimising the value of resources through their utilisation for each patient sub-group, which is determined by clinicians [12, 13]. In healthcare systems such as the US, healthcare providers are also transitioning from volume-based to value-based payments for care. In the context of these shifts, understanding the variations that exist and care that is considered ‘low value’ are critical [11, 12, 14, 15].

Application of well-established statistical frameworks to the processes and treatments undertaken across health systems internationally has produced a substantial body of literature documenting the nature of variations [16,17,18]. Whilst methods for detecting variation, such as exploring statistically significant deviation from acceptable parameters, are widely acknowledged, methods for determining variation that warrants action or is considered problematic are strongly debated [18]. Furthermore, the optimal approach for reducing UCV is also unclear. In 2017, a review of approaches to address UCV highlighted that determining which clinical variation is unwarranted is a challenge for care decisions that may vary based on patient preferences or for which there is mixed evidence of effectiveness [19].

Feedback using administrative databases to provide benchmarking data has been utilised in several countries to explore clinical care variation and to enhance guideline adherent care [18, 19]. The Australian Commission on Safety and Quality in Health Care has developed the Framework for Australian Clinical Quality Registries as a mechanism for governments and health services to capture the appropriateness and effectiveness of care within their jurisdiction [20, 21]. In the UK, clinical registries have been adopted and also linked with financial incentives encouraging appropriate care. Mechanisms for providing immediate feedback to individual clinicians about their practice are also identified in the context of responding to clinical variation, with training and checklists to accompany feedback data [22, 23]. Furthermore, the provision of feedback using these clinical registry data has been identified as an approach that can contribute to improved patient outcomes [24].

An extensive literature has explored the impact of audit and feedback approaches as methods for changing health professional practice, addressing variations and the quality of care, with publications focused on quantitative synthesis [25,26,27]. The value of feedback approaches to address unwarranted clinical variation across health systems and services, explored through a range of study designs, has not been subject to evidence synthesis. Synthesis is required to explore the range of approaches taken by healthcare teams, services or at a network or system level in using feedback approaches to address unwarranted clinical variation and the data regarding their effectiveness. This review sought to address this knowledge gap by answering the questions below.

Review questions

What are the feedback approaches currently used to address unwarranted clinical variation and what is the evidence of their effectiveness?

Method

This literature review utilised a rapid evidence assessment (REA) methodology, which employs the same methods and principles as a systematic review but makes concessions to the breadth or depth of the process to suit a shorter timeframe and address key issues in relation to the topic under investigation [28]. For example, in this case we were establishing evidence relevant for a contemporary policy issue requiring a time-sensitive, evidence-informed response. The review protocol was therefore also not registered. The purpose of a REA is to provide a balanced assessment of what is already known about a specific problem or issue. REAs utilise strategies to assist in facilitating rapid synthesis of information. In this instance, the strategy used was to limit the number of data sources searched to the major databases in the field of healthcare quality improvement [29]. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement was used to guide reporting of this rapid review [30].

Eligibility criteria

Publications were included if they were available in English, reported original primary empirical or theoretical work, were published between January 2000 and August 2018, and involved public or private hospitals, day procedure centres, general practice or other primary/community care facilities. Conceptual, theoretical, quantitative or qualitative studies of any research design were included. Studies had to report the use of any mode of feedback to respond to clinical variation, with a focus on addressing unwarranted variations. The definition for facilitated feedback applied in this work was the reporting of outcomes directly to key stakeholders with dialogue geared toward change or any other activities to support change that addressed unwarranted variation. Studies reporting feedback processes provided by health system agencies or directly to health services providers, health districts, or clinicians were eligible. Studies were eligible if they reported feedback in the context of continuous quality improvement, defined as the use of quality “indicators” to initiate and drive practice changes in an ongoing cycle of continuous improvement. Reported outcomes had to include perceived or actual change in clinical practice variation.

Study identification

A range of text words, synonyms and subject headings were developed for the major concepts of clinical variation, quality improvement and feedback. These text words, synonyms and subject headings were used to undertake a systematic search of two electronic databases that index journals of particular relevance to the review topic (Medline and PubMed) from January 2000 to August 2018 in order to focus the search for contemporary policy development (See Additional file 1 for electronic search strategy). Hand searching of reference lists of published papers ensured that relevant published material was captured. Results were merged using reference-management software (Endnote, version X8) and duplicates removed.

Study selection and data extraction

Three reviewers (EM, DH, RH) independently screened the titles and abstracts. Copies of the full articles were obtained for those that were potentially relevant. Inclusion criteria were then independently applied to the full text articles by each of the members of the reviewer team (all authors). Disagreements were resolved through final discussion between two members of the review team (RH, EM). The following data were extracted from eligible literature: author(s), publication year, sample, setting, objective, feedback approach and main findings.

Narrative data synthesis

Findings were analysed using a narrative empirical synthesis in stages, based on the study objectives [28, 31]. A narrative approach was necessary in order to synthesise the qualitative and quantitative findings. A quantitative analytic approach was inappropriate due to the heterogeneity of study designs, contexts, and types of literature included. Initial descriptions of eligible studies and results were tabulated (Appendix). Patterns in the data were explored to identify consistent findings in relation to the study objectives. Interrogation of the findings explored relationships between study characteristics and their findings; the findings of different studies; and the influence of the use of different outcome measures, methods and settings on the resulting data. The literature was then subjected to a quality appraisal process before a narrative synthesis of the findings was produced.

Assessment of the quality of the studies

An assessment of study quality was undertaken using the Quality Assessment Tool for Studies with Diverse Designs (QATSDD) for assessing heterogeneous groups of studies [32]. This tool is suitable for assessing the quality and transparency of reporting of research studies in reviews that synthesise qualitative, quantitative and mixed-methods research. Publications identified in the database search were scored against each criterion on a four-point scale (0–3) to indicate the quality of each publication and the overall body of evidence. The criteria are shown in Table 1.
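To illustrate how criterion-level scores on a 0–3 scale might be rolled up into a single summary per publication, a minimal sketch follows. The criterion names, the example scores, and the choice to express the total as a percentage of the maximum possible score are assumptions made for illustration; they are not details reported in this review.

```python
from typing import Dict

MAX_SCORE_PER_CRITERION = 3  # four-point scale: 0, 1, 2, 3


def quality_percentage(scores: Dict[str, int]) -> float:
    """Return the summed criterion scores as a percentage of the maximum possible."""
    if not scores:
        raise ValueError("at least one criterion score is required")
    for criterion, score in scores.items():
        if score not in range(MAX_SCORE_PER_CRITERION + 1):
            raise ValueError(f"{criterion}: score {score} is outside 0-3")
    total = sum(scores.values())
    maximum = MAX_SCORE_PER_CRITERION * len(scores)
    return 100.0 * total / maximum


# Hypothetical scores for one publication (criterion names are illustrative only).
example_scores = {
    "explicit_theoretical_framework": 0,
    "clear_aims": 3,
    "appropriate_design": 3,
    "sample_size_considered": 1,
}
example_percentage = quality_percentage(example_scores)
```

Expressing the result as a percentage lets publications assessed against different numbers of applicable criteria be compared on one scale, which matters when qualitative, quantitative and mixed-methods studies sit in the same review.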

Results

Results of the search

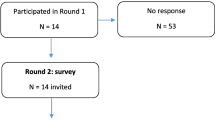

After removing duplicates, 342 records were identified. Title and abstract screening resulted in 53 publications that fulfilled the inclusion criteria (Fig. 1). Following full-text review, 27 studies were included in the review. Feedback approaches that occurred over a duration of 1 month to 9 years to address clinical variation emerged from these 27 publications, comprising quantitative (20), theoretical/conceptual/descriptive (4) and mixed or multi-method studies (3). A summary table of included studies and feedback approaches used is shown in Table 2.

Excluded studies

Studies were excluded at the full-text review stage because they were not primary empirical or theoretical work (n = 17) or did not include a feedback approach (n = 9).
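The study flow reported above can be checked with simple arithmetic. The sketch below uses only the counts stated in this section; the variable names are ours, and the number excluded at title and abstract screening is derived rather than reported.

```python
# Counts as reported in the Results section.
records_after_deduplication = 342
full_text_assessed = 53
excluded_not_primary_work = 17
excluded_no_feedback_approach = 9
design_counts = {"quantitative": 20, "theoretical_conceptual_descriptive": 4, "mixed_or_multi_method": 3}

# Derived values (not stated explicitly in the text).
excluded_at_title_abstract = records_after_deduplication - full_text_assessed
excluded_at_full_text = excluded_not_primary_work + excluded_no_feedback_approach
included_studies = full_text_assessed - excluded_at_full_text

# The included total should match the breakdown by study design.
flow_is_consistent = included_studies == sum(design_counts.values())
```

Under these figures the full-text exclusions account exactly for the difference between the 53 publications assessed and the 27 included.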

Study quality

The data appraisal identified that the studies reported in included papers were generally of good quality with particular strengths in the use of evidence-based quality improvement strategies, selection of appropriate study designs, and application of rigorous analytic techniques. A key limitation across the body of evidence was the use of a small sample, often in a single site study, limiting the generalisability of results.

Review findings

The included studies were reported from eight countries: US (14), UK (4), Australia (3), The Netherlands (1), Canada (2), Sweden (1), Egypt (1), and New Zealand (1).

National reporting and feedback

Four studies outlined approaches for benchmarking care nationally or for contributing to publicly-reported datasets as strategies to identify variation that may be problematic, and to incite change [33,34,35,36]. These studies incorporated steps to address variation by providing feedback to service providers about the variations arising in their care compared to benchmarks. Eagar et al. (2010) reported the Palliative Care Outcomes Collaboration (PCOC), established to measure the outcomes and quality of palliative care services and to benchmark across Australia. A PCOC quality improvement facilitator met with the services in the collaboration to embed the collection of standardised clinical assessment into practice to improve care quality, in addition to convening national benchmarking meetings. The success of the approach at reducing variation or addressing unwanted variation was not reported [35].

The role of national quality registries in quality improvement was explored in one study [33]. The authors explored the use of quality registry data amongst heads of clinics and clinicians in quality improvement activities as a strategy to address variation. The findings indicate that national quality registries can provide data that, when used in feedback to staff, can provide the basis for identifying and discussing variations and appropriate responses. Use of national quality registries varies widely and these are not routinely incorporated into efforts to address variation [33]. Similarly, Grey et al. (2014) explored how the Atlas of Healthcare Variation in New Zealand is presented, interpreted and applied as a tool to understand and target variation within a quality improvement paradigm. Stakeholders reported using funnel plots to enable clinicians to benchmark against peers and identify areas of variation for scrutiny. This benchmarking provides the basis for quality improvement activities to address variation [36]. The study by Abdul-Baki et al. (2014) reported that public reporting as an intervention was associated with an increase in adenoma detection rates in a private endoscopy practice. The investigators of this study suggested that simply providing feedback data may improve care quality and reduce variations [34]. However, the mechanism by which this feedback approach may work is not established and the pre- and post-study design used was not sufficiently sensitive or controlled to determine causation. On a smaller scale, in a secondary analysis of 228 senior gastroenterologists, Das et al. (2008) reported that data on the quality and management of Barrett’s esophagus (BE) through surveillance also led to reduced variation in adherence to the recommended four-quadrant biopsy protocol for histological sampling of those with macroscopically suspected BE [37].
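The funnel plots mentioned above compare each unit's rate against control limits that narrow as the unit's caseload grows. The following is a minimal sketch of that logic, assuming a normal approximation to the binomial and 95% limits around the pooled rate; the clinic names and counts are invented for illustration and do not come from any included study.

```python
import math
from typing import List, Tuple


def funnel_limits(overall_rate: float, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate control limits for a proportion observed on n cases."""
    standard_error = math.sqrt(overall_rate * (1.0 - overall_rate) / n)
    lower = max(0.0, overall_rate - z * standard_error)
    upper = min(1.0, overall_rate + z * standard_error)
    return lower, upper


def flag_units(units: List[Tuple[str, int, int]], z: float = 1.96) -> List[str]:
    """Return names of units whose rate falls outside the limits for their caseload.

    Each unit is (name, events, cases). The benchmark is the pooled rate.
    """
    total_events = sum(events for _, events, _ in units)
    total_cases = sum(cases for _, _, cases in units)
    overall_rate = total_events / total_cases
    flagged = []
    for name, events, cases in units:
        lower, upper = funnel_limits(overall_rate, cases, z)
        rate = events / cases
        if rate < lower or rate > upper:
            flagged.append(name)
    return flagged


# Hypothetical data: (clinic, procedures performed, eligible patients).
example_units = [
    ("clinic_A", 20, 100),
    ("clinic_B", 22, 100),
    ("clinic_C", 45, 100),
    ("clinic_D", 18, 100),
]
flagged_units = flag_units(example_units)
```

A unit falling outside the limits is a prompt for scrutiny rather than evidence that the variation is unwarranted; deciding that still depends on clinical review of patient need and preference.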

Local reporting and feedback

Data were captured about the practice of individuals or teams and reported back at local level within a network, an organisation, an organisational unit or to individuals in six studies [38,39,40,41,42,43]. Individual provider reports were explored in two studies [38, 39]. In a study by Stafford (2002), primary care providers were given data over a nine-month period comparing their use of the electrocardiogram (ECG) with that of peers, to reduce non-essential ECG ordering based on a range of national guidelines and recommendations. Variation in the ordering of ECG and its use reduced after the nine-month period [39]. In a project exploring variation in two pathology indicators, one for prostate and one for colorectal cancer, urologists, surgeons and pathologists of four hospitals were provided data supported by evidence-based guidelines [38]. The aim was to encourage behaviour change and improve quality through reduced unwarranted variation. Individual feedback increased appropriate treatment, demonstrated in a reduced prostate margin positivity rate from 57.1 to 27.5% on one indicator, but did not impact the colorectal cancer indicator [38]. A key finding was that the group of urological surgeons who did not show improvement on one of the indicators also had the poorest attendance at the engagement sessions held before and during the project [38].

Chart review was used in a study by Kelly et al. (2016) to establish adherence to the local treatment pathway for the management of atrial fibrillation with rapid ventricular response (AFRVR). Local teams made emergency departments aware of their adherence levels and best practice guidelines, leading to a substantial increase in adherence to the pathway from 8 to 68% over the nine-month period [40]. Qualitative findings revealed success factors to be a strong local clinical lead with multi-disciplinary team support, access to evidence-based resource materials, and regular feedback about performance throughout the process [40].

Local monitoring and feedback were also used by Smith et al. (2012) to review and understand variation in cardiac surgical procedures. Data from the regular monitoring of quality between 2003 and 2012 were reported back to the cardiac surgery unit’s bi-monthly morbidity and mortality meetings in order to explore variations and determine action to be taken. The authors reported that this approach was valuable in distinguishing individual and systemic variation issues and those requiring action [41].

In primary care, Gaumer, Hassan and Murphy (2008) developed an information system, ‘Feedback and Analytic Comparison Tool’ to enable clinicians to monitor their own performance data and act accordingly. This system provided feedback to allow clinicians to identify practice variations but did not utilise health information technology (HIT) to identify feedback warranting action [42].

One study explored provision of data across a network [43]. A cancer primary care network in the UK identified a clinical audit and the provision of risk assessment tools as two of four quality improvement approaches for reducing variation. The impact of clinical audit feedback was not established in isolation from the other quality improvement activities, but a significant increase of 29% in referral rates was reported across the participating general practices [43]. In the context of cancer networks, clinicians felt better supported to sustain improvement efforts to address UCV when there was effective leadership marked by organisational stability and consistent messaging [43].

Facilitated feedback

Fifteen studies employed facilitated feedback methods to explore variations and address areas in which changes were required. The largest group of facilitated feedback approaches was identified in local-level, small-scale quality improvement projects within health services (3), or those that operated across an organisation (2) or network (6). One paper was a review of multiple quality improvement projects [44]. HIT was identified in several studies as part of the approach to identifying variation, but a sub-set of three studies focused on HIT methods for providing facilitated feedback on variation warranting action.

Quality improvement projects

Twelve quality improvement (QI) projects were retrieved from the search, most of which identified process variation and then utilised educational approaches to change clinician behaviour [23, 24, 45,46,47,48,49,50,51,52,53]. Table 3 provides a summary of the projects identified. Approaches taken to inform the facilitated feedback approaches included the use of the Theoretical Domains Framework for behaviour change, clinical algorithms as a basis for understanding variation and HIT for implementation [45, 47,48,49]. In their narrative review, Tomson and Sabine (2013) detail a range of local and national projects in the UK that utilise evidence-based guidelines to support QI initiatives to address unwarranted variation. They reported that local level QI projects that engaged a package of clinical actions to achieve the improvement aim were those that saw reductions in problematic variation and enhanced quality. The authors also highlight the inefficiency of a multitude of local level projects and the potential value but also challenges of national or collaborative approaches. A central difficulty identified by the review authors was the completion of such QI initiatives as an additional activity to routine clinical work [44]. This finding was reflected in several of the included studies.

At the simplest level, Lee et al. (2016) reported a process in which a random selection of medical records was audited against 15 quality measures for inflammatory bowel disease. They then re-audited after an educational session in which performance against the quality measures was reviewed. Lee et al. identified a positive correlation between the intervention and compliance with the quality measures, with compliance increasing by 16% [53]. Two studies progressed this approach by developing algorithms for a range of evidence-based practices as the basis for determining compliance [45, 47]. Key performance indicators were used by Griffiths and Gillibrand (2017) to identify variations in individual practice and report this back alongside a quality improvement project [24]. The project included implementing four checklists based on evidence-based guidelines along with a weekly training event to try to reduce variations in pathology practices. The project isolated the effect of the intervention from the training component and established that utilising a checklist alone was associated with conforming to the evidence-based approach rather than the addition of the training component [24].

At a network level, a measurement and education project was reported by Deyo et al. (2000) with the Institute for Healthcare Improvement to address variations in lower back pain care across 22 participating organisations including health plans and medical centres. “Outlier” rates of imaging or referral (identified as statistical outliers from the normal range of imaging or referral in each organisation) were used to identify clinics or clinicians for targeted intervention [49]. The intervention program included three learning sessions, focusing on areas of practice variation identified by the participating organisations from their own data, in addition to a final national congress. Participants worked within their own teams to problem-solve and then across teams from other organisations. A key component of the process was to present their clinical variation data and perform continuous repeated measurements to track change in variations. Findings suggest that the approach was effective in reducing unwarranted variations, although outcome measures used to assess variation were different across the participating sites based on their clinical goals and data sources. Reduced variations were identified in outcomes such as levels of x-rays ordered and prescribed bed-rest, and the use of patient education materials, which may also work to address unwarranted variations, increased by 100% [49].

A further network education model was reported by Nguyen et al. (2007) as a strategy to reduce unwarranted variation in dialysis using arteriovenous fistula (AVF) [51]. Forty-six facilities contributed to four targeted regional workshops that explored the root causes of low AVF rates by interviews with vascular surgeons, nephrologists, dialysis staff, and interventional radiologists. The analysis identified three key barriers to a higher AVF rate: 1) failure of nephrologists to act as vascular access team leaders; 2) lack of AVF training for vascular access surgeons, including vessel assessment skills, vein mapping, and complex surgical techniques; and 3) late referral of chronic kidney failure (CKF) patients to nephrology. A literature review was then conducted to identify best demonstrated practice regionally and the strategies successfully used by this team were included in the quality improvement project. Four intervention workshop meetings were held and intervention site participants took away follow-up materials to address the content locally. Of the 35 attending physicians, 91% reported that they had changed their practice to address variations based on the intervention in consistent areas relating to AVF use over the five-year period in which outcome data were collected [51]. Similarly, Nordstrom et al. (2016) report the impacts of a learning collaborative between 28 primary care practices that collected and reported on their quality improvement data through four sessions, in addition to didactic lectures, case presentations and discussion of practice-improvement strategies to reduce variation in the provision of buprenorphine [52]. Findings indicated that there was a substantial reduction of up to 50% in variations across all seven quality measures [52].

Health information technology (HIT)

Progressing a thread within many quality improvement projects that were reported, three studies outlined HIT clinical decision support tools explicitly as tailored feedback approaches to reduce unwarranted variation. Two studies reported clinical decision support tools to optimise the appropriate use of imaging for lower back pain [55, 56]. Ip et al. (2014) report a clinical decision support intervention on magnetic resonance imaging (MRI) for low back pain, which incorporated two accountability tools. One component of the intervention was a mandatory peer-to-peer consultation when test utility was uncertain. The second intervention component was quarterly practice variation reports to providers. The multi-faceted intervention demonstrated a 32–33% decrease in the use of MRI for any body part, indicating that this approach could address unwarranted variation relating to overutilisation [55]. Min et al. (2017) embedded a point of care checklist in the computerised entry form for image ordering in addition to a patient education program in which a summary document explaining when medical imaging is necessary was included in the lower back pain pamphlet [56]. Post-intervention, the median proportion of lower back pain patients who received an imaging order reduced by 5% and the median decrease in image ordering amongst the 43 emergency department physicians in the study was 13% [56].

Cook et al. (2014) utilised HIT to develop a mechanism for determining pre-operatively those patients for whom a standardised care pathway would be appropriate in their cardiac surgical care [54]. Post-operatively, the patients on the standardised pathway are confirmed as continuing this pathway in Intensive Care Unit (ICU) and then to the Progressive Care Unit. For those remaining on the pathway, an electronic protocol triggers the removal of the bladder catheter; therefore, practice variation in the time to remove a catheter for those on the pathway should be minimal. The electronic decision tool was complemented by quality improvement methods including educational reinforcement and procedural training around catheter removal, and performance reports provided back to staff at 1, 3 and 6-month intervals. Findings indicated that an improvement from 91% at baseline to 97% post-intervention compliance with guidelines was achieved in relation to removal of catheter, suggesting that the decision support tool contributed to reduced unwarranted variation [54].

Discussion

Responses to clinical variation range from simply presenting evidence to individuals, teams and organisations, to facilitated tailored feedback that may be integrated in broader quality improvement projects. Whilst providing feedback on clinical variation data alone can encourage reflection and improvement, data tailored to particular health professionals, services or systems, and disseminating information to these audiences via facilitated feedback processes, may have greater capacity to drive large-scale change. Current evidence demonstrates variability in approaches to providing feedback around variation. No single optimal model for structuring facilitated feedback was identified as widely adopted. Insufficient evidence was available to determine that one feedback approach is more or less effective than another.

Extensive theory-based research in the psycho-social literature has provided evidence of the critical elements of feedback that influence behaviour change, including aspects of the content and delivery of feedback [57]. Yet, as evident in the quality appraisal process, the included studies rarely referred to any theoretical basis for the interventional approach in the context of addressing UCV. It is apparent that many non-experimental approaches used to provide clinician feedback at the clinical front-line, system and service level are not grounded in theory, which creates challenges for understanding how and why an approach or its elements did or did not work to address UCV. Although theory-based approaches were not explicitly identified, it is apparent that features of the interventions identified in this review reflect common behaviour change techniques, such as the use of goal-setting, self-monitoring and prompting. Further integration of theory into practice would be valuable in the context of addressing UCV to understand the mechanisms by which feedback approaches may or may not work and how these may be utilised across teams, services and systems [58].

Most approaches identified for responding to variation and reducing unwanted variation focus solely or predominantly on variations in clinicians’ practice over a period of several months to several years [19]. This type of variation is important to tackle, but the limited scope of work does not give sufficient consideration to variation due to patient preferences or factors [59]. Mercuri and Gafni (2017) highlight a range of evidence that indicates only around 5–10% of variations relate to clinician choice [59]. There is a need to more fully understand the roles of patient preference and factors in variation, which is information that can be captured and integrated in facilitated feedback approaches. Studies that examined the impact of decisions based on deviations from guidelines (e.g. limiting MRI ordering rights for GPs) in terms of cost and care improvements were lacking. This information is important when considering UCV as a system-wide concept.

Implications

HIT was the principal method for capturing and, in some cases, reporting variation data back to facilitate change [54,55,56]. HIT was central to continuous quality improvement projects that occurred in teams or organisations, for example through generation of clinical treatment algorithms and automated generation of quality indicators to drive or contribute to the feedback sessions [46]. Outcomes that were assessed in facilitated feedback and enabled continuous quality improvement approaches included reduced overuse of technologies or treatments, changes in patient clinical outcomes and adherence to practice protocols [54,55,56]. The increasing availability of HIT and real-time analytics in health services internationally makes it likely that the relationship between HIT, clinical variation data and subsequent behaviour change will continue to strengthen over time. There are opportunities for exploring the use of HIT in recording patient preferences as a feedback approach to contribute to understanding and reducing unwarranted variations.
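As an illustration of how an automated quality indicator of this kind might be generated for a feedback session, the following minimal sketch computes clinician-level guideline adherence rates and compares each with binomial control limits around the pooled rate, in the manner of a proportions control chart. It is not drawn from any of the included studies: the function name `adherence_feedback`, the input layout, the three-standard-error limits and the example counts are all assumptions made for illustration.

```python
from math import sqrt


def adherence_feedback(counts, z=3.0):
    """Summarise guideline adherence per clinician against pooled control limits.

    counts: dict mapping a clinician label to (adherent_cases, total_cases).
    z: width of the control limits in standard errors (3.0 is a common
       convention for control charts; assumed here, not taken from the review).
    Returns (pooled_rate, report), where report maps each clinician to a dict
    with their rate, their control limits and a status label.
    """
    total_adherent = sum(adherent for adherent, _ in counts.values())
    total_cases = sum(total for _, total in counts.values())
    if total_cases == 0:
        raise ValueError("no cases supplied")

    pooled_rate = total_adherent / total_cases
    report = {}
    for clinician, (adherent, total) in counts.items():
        if total == 0:
            continue  # no cases, so no rate to feed back
        rate = adherent / total
        # Binomial standard error depends on each clinician's caseload,
        # so limits are wider for clinicians with fewer cases.
        half_width = z * sqrt(pooled_rate * (1 - pooled_rate) / total)
        lower = max(0.0, pooled_rate - half_width)
        upper = min(1.0, pooled_rate + half_width)
        if rate < lower:
            status = "below"
        elif rate > upper:
            status = "above"
        else:
            status = "within"
        report[clinician] = {
            "rate": rate,
            "lower": lower,
            "upper": upper,
            "status": status,
        }
    return pooled_rate, report


if __name__ == "__main__":
    # Hypothetical counts for four clinicians, for illustration only.
    example = {"A": (90, 100), "B": (45, 100), "C": (85, 100), "D": (80, 100)}
    pooled, summary = adherence_feedback(example)
    for clinician, row in summary.items():
        print(clinician, round(row["rate"], 2), row["status"])
```

A rate outside the limits signals variation that merits discussion, not variation that is necessarily unwarranted; patient preferences and case mix would still need to be considered in the feedback dialogue.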

In the context of uncertainty regarding how to define and tackle unwarranted clinical variation, feedback and clinical review are important as avenues to ensure a nuanced approach. Methods for providing feedback specifically for the purpose of reducing UCV vary between teams, units and organisations. Understanding the features of feedback approaches that are effective in the identification and reduction of UCV is required to support system-wide efforts. This knowledge is crucial for developing an evidence-based methodology to address UCV.

Limitations

Limiting the electronic search to works published between 2000 and 2018 and indexed in only two databases may have shaped the review findings. A full-scale systematic review was beyond the scope of this work, which used REA methodology to undertake a focused review addressing a contemporary, high-priority policy question in Australia and internationally. The breadth of areas covered by the concept of clinical variation would also limit the suitability of a full-scale systematic review in this area.

Conclusion

Providing feedback to clinicians is identified in a range of settings as being associated with changes in variation such as reducing overuse of tests and treatments, reducing variations in optimal patient clinical outcomes, and increasing guideline or protocol adherence. Feedback approaches that relate to performance indicators may address variations arising due to clinicians’ behaviours but may not necessarily address variations that relate to patient preferences. Evaluation of the effectiveness of approaches utilising facilitated feedback is needed to provide evidence firstly regarding whether facilitated feedback offers advantages over feedback without facilitation in the context of addressing variation, and secondly, to determine if there is an optimal approach to and/or method of facilitation that is more likely to create change where needed.

Availability of data and materials

The datasets used and/or analysed during the current study are available in the published works included in the manuscript or from the corresponding author on reasonable request.

Abbreviations

- AFRVR: Atrial fibrillation with rapid ventricular response
- AVF: Arteriovenous fistula
- ECG: Electrocardiogram
- GP: General Practitioner
- HIT: Health information technology
- ICU: Intensive Care Unit
- PCOC: Palliative Care Outcomes Collaboration
- PRISMA: Preferred reporting items for systematic reviews and meta-analyses
- QATSDD: Quality Assessment Tool for Studies of diverse design
- REA: Rapid evidence appraisal
- UCV: Unwarranted clinical variation
- UK: United Kingdom
- USA: United States of America

References

Corrigan J. Crossing the quality chasm. In: Proctor R, Compton D, Grossman J, et al., editors. Building a better delivery system: a new engineering/health care partnership. Washington DC: The National Academies; 2005.

Gray JM, Abbasi K. How to get better value healthcare. J R Soc Med. 2007;100(10):480.

Madden RC, Wilson A, Hoyle P. Appropriateness of care: why so much variation. Med J Aust. 2016;205(10):452–3. https://doi.org/10.5694/mja16.01041 [published Online First: 2016/11/18].

Da Silva P, Gray JA. English lessons: can publishing an atlas of variation stimulate the discussion on appropriateness of care? Med J Aust. 2016;205(10):S5–S7. https://doi.org/10.5694/mja15.00896 [published Online First: 2016/11/18].

World Bank. Health atlas as a policy tool: how to investigate geographic variation and utilize the information for decision-making. 2015. Available from: http://documents.worldbank.org/curated/en/539331530275649169/pdf/Colombia-Health-Atlas-Methodology.pdf.

Health Quality & Safety Commission New Zealand. The atlas of healthcare variation. 2019. Available from: https://www.hqsc.govt.nz/our-programmes/health-quality-evaluation/projects/atlas-of-healthcare-variation/. Accessed 9 Dec 2019.

Sutherland K, Levesque JF. Unwarranted clinical variation in health care: Definitions and proposal of an analytic framework. J Eval Clin Pract. 2019. https://doi.org/10.1111/jep.13181.

Australian Commission on Safety and Quality in Health Care and Australian Institute of Health and Welfare. The third Australian atlas of healthcare variation. Sydney: ACSQHC; 2018.

Betran AP, Temmerman M, Kingdon C, et al. Interventions to reduce unnecessary caesarean sections in healthy women and babies. Lancet. 2018;392(10155):1358–68. https://doi.org/10.1016/s0140-6736(18)31927-5 [published Online First: 2018/10/17].

Buchan H. Gaps between best evidence and practice: causes for concern. Med J Aust. 2004;180(S6):S48–9 [published Online First: 2004/03/12].

Woolcock K. Value based health care: setting the scene for Australia. Value in Health: Deeble Institute for Health Policy Research. 2019. p. 323–25.

Gray M. Value based healthcare: British Medical Journal Publishing Group; 2017.

Porter ME. Value-based health care delivery. Ann Surg. 2008;248(4):503–9. https://doi.org/10.1097/SLA.0b013e31818a43af [published Online First: 2008/10/22].

Hillis DJ, Watters DA, Malisano L, et al. Variation in the costs of surgery: seeking value. Med J Aust. 2017;206(4):153–4. https://doi.org/10.5694/mja16.01161 [published Online First: 2017/03/03].

Baker DW, Qaseem A, Reynolds PP, et al. Design and use of performance measures to decrease low-value services and achieve cost-conscious care. Ann Intern Med. 2013;158(1):55–9. https://doi.org/10.7326/0003-4819-158-1-201301010-00560 [published Online First: 2012/10/31].

Wennberg J, Gittelsohn A. Variations in medical care among small areas. Sci Am. 1982;246(4):120–34. https://doi.org/10.1038/scientificamerican0482-120 [published Online First: 1982/04/01].

Wennberg JE. Unwarranted variations in healthcare delivery: implications for academic medical centres. BMJ. 2002;325(7370):961–4. https://doi.org/10.1136/bmj.325.7370.961 [published Online First: 2002/10/26].

Mercuri M, Gafni A. Medical practice variations: what the literature tells us (or does not) about what are warranted and unwarranted variations. J Eval Clin Pract. 2011;17(4):671–7 https://doi.org/10.1111/j.1365-2753.2011.01689.x.

Harrison R, Manias E, Mears S, et al. Addressing unwarranted clinical variation: a rapid review of current evidence. J Eval Clin Pract. 2019;25(1):53–65.

Fredriksson M, Halford C, Eldh AC, et al. Are data from national quality registries used in quality improvement at Swedish hospital clinics? Int J Qual Health Care. 2017;29(7):909–15 https://doi.org/10.1093/intqhc/mzx132.

Australian Commission on Safety and Quality in Health Care. Framework for Australian clinical quality registries. Sydney: ACSQHC. 2014.

Department of Health Payment by Results team. A simple guide to Payment by Results: Department of Health. 2013.

Dykes PC, Acevedo K, Boldrighini J, et al. Clinical practice guideline adherence before and after implementation of the HEARTFELT (HEART Failure Effectiveness & Leadership Team) intervention. J Cardiovasc Nurs. 2005;20(5):306–14. https://doi.org/10.1097/00005082-200509000-00004 [published Online First: 2005/09/06].

Griffiths M, Gillibrand R. Use of key performance indicators in histological dissection. J Clin Pathol. 2017;70(12):1019–23 https://doi.org/10.1136/jclinpath-2017-204639.

Colquhoun HL, Squires JE, Kolehmainen N, et al. Methods for designing interventions to change healthcare professionals’ behaviour: a systematic review. Implement Sci. 2017;12(1):30. https://doi.org/10.1186/s13012-017-0560-5 [published Online First: 2017/03/06].

Ivers N, Jamtvedt G, Flottorp S, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;(6):CD000259 https://doi.org/10.1002/14651858.CD000259.pub3.

Ivers NM, Sales A, Colquhoun H, et al. No more ‘business as usual’ with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci. 2014;9:14 https://doi.org/10.1186/1748-5908-9-14.

Grant MJ, Booth A. A typology of reviews: an analysis of 14 review types and associated methodologies. Health Inf Libr J. 2009;26(2):91–108.

Varker T, Forbes D, Dell L, et al. Rapid evidence assessment: increasing the transparency of an emerging methodology. J Eval Clin Pract. 2015;21(6):1199–204.

Moher D, Liberati A, Tetzlaff J, et al. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151(4):264–9.

Popay J, Roberts H, Sowden A, et al. Guidance on the conduct of narrative synthesis in systematic reviews. A product from the ESRC methods programme Version, vol. 1; 2006. p. b92.

Sirriyeh R, Lawton R, Gardner P, et al. Reviewing studies with diverse designs: the development and evaluation of a new tool. J Eval Clin Pract. 2012;18(4):746–52.

Wilcox N, McNeil JJ. Clinical quality registries have the potential to drive improvements in the appropriateness of care. Med J Aust. 2016;205(10):S27–S29 [published Online First: 2016/11/18].

Abdul-Baki H, Schoen RE, Dean K, et al. Public reporting of colonoscopy quality is associated with an increase in endoscopist adenoma detection rate. Gastrointest Endosc. 2015;82(4):676–82 https://doi.org/10.1016/j.gie.2014.12.058.

Eagar K, Watters P, Currow DC, et al. The Australian Palliative Care Outcomes Collaboration (PCOC)--measuring the quality and outcomes of palliative care on a routine basis. Aust Health Rev. 2010;34(2):186–92 https://doi.org/10.1071/AH08718.

Grey C, Wells S, Exeter DJ, et al. Stakeholder engagement for the New Zealand Atlas of Healthcare Variation: cardiovascular disease secondary prevention: VIEW-3. N Z Med J. 2014;127(1400):81–91.

Das D, Ishaq S, Harrison R, et al. Management of Barrett's esophagus in the UK: overtreated and underbiopsied but improved by the introduction of a national randomized trial. Am J Gastroenterol. 2008;103(5):1079–89 https://doi.org/10.1111/j.1572-0241.2008.01790.x.

McFadyen C, Lankshear S, Divaris D, et al. Physician level reporting of surgical and pathology performance indicators: a regional study to assess feasibility and impact on quality. Can J Surg. 2015;58(1):31–40 https://doi.org/10.1503/cjs.004314.

Stafford RS. Feedback intervention to reduce routine electrocardiogram use in primary care. Am Heart J. 2003;145(6):979–85.

Kelly AM, Pannifex J, Emergency Care Clinical Network IHHSI. A clinical network project improves care of patients with atrial fibrillation with rapid ventricular response in victorian emergency departments. Heart Lung Circ. 2016;25(3):e33–6 https://doi.org/10.1016/j.hlc.2015.07.009.

Smith IR, Gardner MA, Garlick B, et al. Performance monitoring in cardiac surgery: application of statistical process control to a single-site database. Heart Lung Circ. 2013;22(8):634–41 https://doi.org/10.1016/j.hlc.2013.01.011.

Gaumer G, Hassan N, Murphy M. A simple primary care information system featuring feedback to clinicians. Int J Health Plann Manag. 2008;23(3):185–202.

Rubin G, Gildea C, Wild S, et al. Assessing the impact of an English national initiative for early cancer diagnosis in primary care. Br J Cancer. 2015;112(Suppl 1):S57–64 https://doi.org/10.1038/bjc.2015.43.

Tomson CR, van der Veer SN. Learning from practice variation to improve the quality of care. Clin Med. 2013;13(1):19–23.

MA AL, Sparto PJ, Marchetti GF, et al. A quality improvement project in balance and vestibular rehabilitation and its effect on clinical outcomes. J Neurol Phys Ther. 2016;40(2):90–9 https://doi.org/10.1097/NPT.0000000000000125.

Baker RA, Newland RF. Continous quality improvement of perfusion practice: the role of electronic data collection and statistical control charts. Perfusion. 2008;23(1):7–16.

Caterson SA, Singh M, Orgill D, et al. Development of Standardized Clinical Assessment and Management Plans (SCAMPs) in plastic and reconstructive surgery. Plast Reconstr Surg Glob Open. 2015;3(9):e510. https://doi.org/10.1097/gox.0000000000000504 [published Online First: 2015/10/27].

Tavender EJ, Bosch M, Gruen RL, et al. Developing a targeted, theory-informed implementation intervention using two theoretical frameworks to address health professional and organisational factors: a case study to improve the management of mild traumatic brain injury in the emergency department. Implement Sci. 2015;10:74 https://doi.org/10.1186/s13012-015-0264-7.

Deyo RA, Schall M, Berwick DM, et al. Continuous quality improvement for patients with back pain. J Gen Intern Med. 2000;15(9):647–55. https://doi.org/10.1046/j.1525-1497.2000.90717.x [published Online First: 2000/10/13].

Miller DC, Murtagh DS, Suh RS, et al. Regional collaboration to improve radiographic staging practices among men with early stage prostate cancer. J Urol. 2011;186(3):844–9 https://doi.org/10.1016/j.juro.2011.04.078.

Nguyen VD, Lawson L, Ledeen M, et al. Successful multidisciplinary interventions for arterio-venous fistula creation by the Pacific Northwest Renal Network 16 vascular access quality improvement program. J Vasc Access. 2007;8(1):3–11.

Nordstrom BR, Saunders EC, McLeman B, et al. Using a learning collaborative strategy with office-based practices to increase access and improve quality of care for patients with opioid use disorders. J Addict Med. 2016;10(2):117–23 https://doi.org/10.1097/ADM.0000000000000200.

Lee AJ, Kraemer DF, Smotherman C, et al. Providing our fellows in training with education on inflammatory bowel disease health maintenance to improve the quality of care in our health care system. Inflamm Bowel Dis. 2016;22(1):187–93 https://doi.org/10.1097/MIB.0000000000000573.

Cook DJ, Thompson JE, Suri R, et al. Surgical process improvement: impact of a standardized care model with electronic decision support to improve compliance with SCIP Inf-9. Am J Med Qual. 2014;29(4):323–8 https://doi.org/10.1177/1062860613499401.

Ip IK, Gershanik EF, Schneider LI, et al. Impact of IT-enabled intervention on MRI use for back pain. Am J Med. 2014;127(6):512–8.e1 https://doi.org/10.1016/j.amjmed.2014.01.024.

Min A, Chan VWY, Aristizabal R, et al. Clinical decision support decreases volume of imaging for low back pain in an urban emergency department. J Am Coll Radiol. 2017;14(7):889–99. https://doi.org/10.1016/j.jacr.2017.03.005 [published Online First: 2017/05/10].

Michie S, West R, Sheals K, et al. Evaluating the effectiveness of behavior change techniques in health-related behavior: a scoping review of methods used. Transl Behav Med. 2018;8(2):212–24. https://doi.org/10.1093/tbm/ibx019 [published Online First: 2018/01/31].

Carey RN, Connell LE, Johnston M, et al. Behavior change techniques and their mechanisms of action: a synthesis of links described in published intervention literature. Ann Behav Med. 2019;53(8):693–707. https://doi.org/10.1093/abm/kay078 [published Online First: 2018/10/12].

Mercuri M, Gafni A. Examining the role of the physician as a source of variation: are physician-related variations necessarily unwarranted? J Eval Clin Pract. 2018;24(1):145–51 https://doi.org/10.1111/jep.12770.

Acknowledgements

The authors thank the Sax Institute and their staff for supporting the conduct of this work.

Funding

This review was funded by the Cancer Institute New South Wales in an agreement brokered by the Sax Institute. The funding body was responsible for the conceptualisation of the research but did not participate in the design of the study or in the collection, analysis or interpretation of data. RK and VW, as authors of the manuscript, contributed to interpretation of data relating to the manuscript content and to drafting the manuscript.

Author information

Contributions

RK and VW conceptualised the research. SM developed and executed the search strategy, informed by feedback from all authors. RH led and managed the conduct of the overall project and initial manuscript draft. EM, RAH and DH extracted the data. All authors contributed to the evidence synthesis and reviewed draft manuscripts in addition to the final manuscript. All authors have read and approved the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

Reema Harrison and Reece Hinchcliff are members of the editorial board of BMC Health Services Research. The other authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Harrison, R., Hinchcliff, R.A., Manias, E. et al. Can feedback approaches reduce unwarranted clinical variation? A systematic rapid evidence synthesis. BMC Health Serv Res 20, 40 (2020). https://doi.org/10.1186/s12913-019-4860-0

DOI: https://doi.org/10.1186/s12913-019-4860-0