Abstract

Background

Given that patient safety measures are increasingly used for public reporting and pay-for-performance, it is important for stakeholders to understand how to use these measures for improvement. The Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicators (PSIs) are one particularly visible set of measures that are now used primarily for public reporting and pay-for-performance among both private sector and Veterans Health Administration (VA) hospitals. This trend creates a strong need for stakeholders to understand how to interpret and use the PSIs for quality improvement (QI). The goal of this study was to develop an educational program on the PSIs and tailor it to stakeholders' needs. In this paper, we share what we learned from this program development process.

Methods

Our study population included key VA stakeholders involved in reviewing performance reports and prioritizing and initiating quality/safety initiatives. A pre-program formative evaluation through telephone interviews and web-based surveys assessed stakeholders’ educational needs/interests. Findings from the formative evaluation led to development and implementation of a cyberseminar-based program, which we tailored to stakeholders’ needs/interests. A post-program survey evaluated program participants’ perceptions about the PSI educational program.

Results

Interview data confirmed that the concepts we had developed for the interviews could also be used for the survey. Survey results identified the program delivery mode and content topics of greatest interest. Six cyberseminars were developed, three of which focused on the two content areas of greatest interest: learning how to use PSIs for monitoring trends and understanding how to interpret PSIs. We also used snapshots of VA PSI reports so that participants could directly apply what they learned. Although initial interest in the program was high, actual attendance was low. However, post-program survey results indicated that participants' perceptions of the program were positive.

Conclusions

Conducting a formative evaluation was a critical step in program development. The information we collected through the interviews and surveys allowed us to tailor the program to stakeholders' needs and interests. Our experiences, particularly with the formative evaluation process, yielded valuable lessons that can guide others when developing and implementing similar educational programs.


Background

Patient safety measures are increasingly used for public reporting and pay-for-performance [1,2,3]. Implicit in this use of the measures is the assumption that it will drive improvement, which continues to be critical [4]. Thus, it is important for the key stakeholders within organizations whose performance is being measured to understand and appropriately use some of these measures to identify, prioritize, and act on safety improvement opportunities [5,6,7,8,9]. One particularly visible set of measures in this domain is the Agency for Healthcare Research and Quality (AHRQ) Patient Safety Indicators (PSIs) [10, 11]. Originally developed for case-finding activities and quality improvement (QI) [11, 12], the PSIs are now used primarily for public reporting and pay-for-performance among both private sector and Veterans Health Administration (VA) hospitals [1, 3, 13]. However, like other measures based on administrative data, the PSIs can present particular challenges when interpreted for QI purposes [5]. Stakeholders with an inadequate understanding of the PSIs may also be reluctant to use them for QI [5,6,7,8,9].

Among the strategies that have been used to educate users and other stakeholders about the PSIs are the AHRQ Quality Indicators Toolkit [14], a modified version of the Institute for Healthcare Improvement Virtual Breakthrough Series (IHI VBTS) [15], and AHRQ's podcasts/cyberseminars [16]. Although the AHRQ Quality Indicators Toolkit and IHI VBTS have provided hospitals with an opportunity to learn about and implement PSI-related QI initiatives, these approaches can be very resource-intensive. On the other hand, similar to tele- and web-based training programs, cyberseminars can be less resource-intensive and allow for widespread participation and dissemination of information to stakeholders [17, 18]. Evidence also suggests that cyberseminars can be a viable strategy for educating individuals about clinical and research issues and findings [16, 18,19,20]. However, there is little empirical evidence on the relative usefulness of these strategies in engaging stakeholders and educating them about performance measures. To actively engage stakeholders in an educational program, it is important to tailor the program to their needs and interests [21,22,23].

The present study was prompted by information within the VA that public reporting of VA PSI rates was imminent. Based on interviews with VA patient safety managers in a prior study [24], we knew that knowledge about the PSIs within the VA was both sparse and inconsistent. To address this gap, we obtained funding to develop an educational program that was tailored to stakeholders' needs and could help them learn how to use the PSIs for QI. Our study's specific aims were to: 1) obtain VA stakeholders' input on their educational needs related to the PSIs; 2) develop and implement a PSI educational program tailored to stakeholders' needs; and 3) explore stakeholders' perceptions about the program. The purpose of this paper is to share what we learned about developing a quality indicators education program (specifically, the AHRQ PSIs) and tailoring it to stakeholders' needs and interests.

Methods

Overview

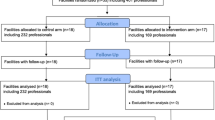

We conducted an implementation and evaluation study from 2011 to 2013. We began with a formative evaluation [25] involving telephone interviews and web-based surveys with stakeholders, which allowed us to learn more about their PSI educational needs and how the program should be tailored. Next, we developed and implemented a program based on the formative evaluation. Finally, we conducted a post-program evaluation to explore stakeholders’ perceptions about the program. We received approval from the Institutional Review Board (IRB) at the VA Boston Healthcare System (IRB #2563). Study participation was voluntary; we obtained informed consent from participants for each study aim.

Study population

Our overall study population consisted of key VA stakeholders at 132 VA acute-care hospitals nationwide who were involved in quality/safety initiatives: middle managers (Quality/Performance Improvement Managers/Officers, and Patient Safety Managers/Officers) and senior managers (Medical Center Directors, Chiefs of Staff, Nurse Executives/Associate Directors of Patient Care Services, Associate Directors).

Within the overall population, we anticipated higher levels of participation from the middle manager group than the senior managers, based on our prior work [24]. The middle managers are most likely to be the initial recipients of performance reports, have a strong understanding of hospital safety and quality improvement programs and priorities, initiate improvement priorities based on performance reports, and be the first in line to provide training to other managers (senior, middle, and unit) within the organization about performance measures.

However, we did include senior managers because they are also key stakeholders: they are heavily involved in setting their organizations' improvement priorities and reviewing performance reports with the patient safety, quality, and performance improvement managers for their hospital. Thus, when developing the PSI educational program, it was also important to understand their needs for learning about the PSIs.

Formative evaluation: Telephone interviews

As a first step in the formative evaluation, we sought interviews with informants from eight of the 132 VA hospitals. We selected these sites based on their geographic diversity, variation in PSI rates, hospital size (number of Veterans served), and our knowledge of these facilities' QI activities within the VA. Across these sites, we recruited 16 potential informants from our middle manager stakeholder group (all either Patient Safety Managers or Quality/Performance Improvement Managers) because, for the reasons described above, they could serve as hospital-level content experts on PSI-related learning needs at their facilities.

We developed an interview guide based on our experience from a prior PSI study and a literature review [24, 26,27,28]. This guide covered four a priori concepts: education about performance measures (“performance measure education”), education about the PSIs (“PSI education”), knowledge about the PSIs (“PSI knowledge”), and prioritization of improvement efforts within the organization (“improvement prioritization”). Additional file 1 provides the interview guide that we developed and used for the telephone interviews. We sought to obtain a general understanding of potential PSI educational needs and to assess whether similar a priori concepts should inform the survey questionnaire. Using the detailed notes taken during the 30-min telephone interviews, we summarized informants’ answers and conducted a rapid content analysis to identify evidence related to the a priori concepts and to identify emergent themes that would then guide development of the survey [29, 30].

Formative evaluation: Pre-program web-based survey

Using the findings from the interviews, we developed a pre-program web-based survey that we then administered to all key VA stakeholders (described in the study population section above). The survey consisted of 56 closed-ended questions covering the a priori concepts plus additional concepts that emerged from analysis of the interview data and from a further literature review on organizational improvement [31]. Concepts included: awareness of performance reporting ("performance reporting"), stakeholders' current/planned use of the PSIs ("PSI use"), and facilitators/barriers to PSI use and QI activities ("facilitators/barriers"). Response options included a five-point Likert scale (1 = strongly disagree to 5 = strongly agree) and yes/no. In addition, there were five open-ended questions where respondents could elaborate upon some of their answers. Additional file 2 provides the pre-program web-based survey that we administered to all key VA stakeholders.

The web-based survey was programmed and administered using Verint® Enterprise Feedback Management, a VA-approved software package that is widely used for survey development and administration. We used descriptive statistics to characterize our study sample and their responses to the survey questions, and qualitatively analyzed the open-ended responses to identify prevalent themes across respondents.
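To illustrate the kind of descriptive summary applied to the closed-ended items, a minimal Python sketch follows. It is not the study's analysis code: the item names and responses are hypothetical placeholders, and it assumes, consistent with how results are reported below ("agreed/strongly agreed"), that agreement means a Likert score of 4 or 5 and that skipped items are excluded from the denominator.

```python
from collections import Counter

# Hypothetical closed-ended responses (NOT study data).
# Likert items: 1 = strongly disagree ... 5 = strongly agree; None = item skipped.
likert_items = {
    "understand_interpretation": [2, 3, 4, 5, 2, None, 4],
    "understand_calculation": [1, 2, 2, 3, 4, 2, None],
}
# Yes/no items: True = yes, False = no, None = item skipped.
yes_no_items = {
    "aware_of_psis": [True, True, False, True, None],
}


def percent_agree(scores):
    """Percent of answered Likert items scored 4 (agree) or 5 (strongly agree)."""
    answered = [s for s in scores if s is not None]
    if not answered:
        return float("nan")
    return 100.0 * sum(1 for s in answered if s >= 4) / len(answered)


def percent_yes(answers):
    """Percent of answered yes/no items that were 'yes'."""
    answered = [a for a in answers if a is not None]
    if not answered:
        return float("nan")
    return 100.0 * sum(answered) / len(answered)


def distribution(scores):
    """Frequency of each Likert level among answered items."""
    return Counter(s for s in scores if s is not None)


for name, scores in likert_items.items():
    print(f"{name}: {percent_agree(scores):.0f}% agree/strongly agree, "
          f"distribution={dict(sorted(distribution(scores).items()))}")
for name, answers in yes_no_items.items():
    print(f"{name}: {percent_yes(answers):.0f}% yes")
```

Collapsing the five Likert levels into a single "percent agree" figure mirrors how the survey findings are summarized in the Results; keeping the full distribution alongside it preserves information about neutral and negative responses.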

Development and implementation of the PSI educational program

Program development was informed by the formative evaluation survey results. It was also informed by a review of existing patient safety/quality educational materials from organizations including VA Health Services Research and Development’s Center for Information Dissemination and Education Resources (CIDER), AHRQ, and IHI [14, 16, 20, 32, 33]. Once our PSI educational program was developed, we emailed all the VA stakeholders who had responded to the survey and invited them to participate in the educational program. We implemented the program over a four-month period (December 2012–March 2013).

Post-program evaluation: Web-based survey

To learn about stakeholders’ perceptions of the program, a post-program evaluation survey was administered approximately 1 month after the PSI education program ended. This survey, consisting of 90 closed-ended questions and 12 open-ended questions, was administered to those VA stakeholders who had both (1) responded to the pre-program survey and (2) registered for the educational program. Response options were either a five-point Likert scale that ranged from 1 = strongly disagree to 5 = strongly agree, or yes/no. Additional file 3 provides the post-program web-based survey that we administered to stakeholders.

We used similar survey administration processes and data analyses for both the pre-program and post-program surveys. In addition, we matched the pre- and post-program responses of individuals who participated in both surveys. Our analyses included a comparison of pre- and post-program responses to the seven questions that appeared on both surveys.
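The matching step described above can be sketched in Python as follows. This is an illustrative sketch only: the respondent identifiers, item names, and scores are hypothetical, the paper does not state how responses were linked, and mean post-minus-pre change is just one possible summary of a matched comparison.

```python
from statistics import mean

# Hypothetical responses keyed by a respondent identifier (the linking key is an assumption).
pre = {
    "r01": {"interpret": 2, "calculate": 2},
    "r02": {"interpret": 3, "calculate": 2},  # no post-program response: excluded
    "r03": {"interpret": 4, "calculate": 3},
}
post = {
    "r01": {"interpret": 4, "calculate": 3},
    "r03": {"interpret": 4, "calculate": 4},
    "r04": {"interpret": 5, "calculate": 5},  # no pre-program response: excluded
}


def matched_changes(pre, post, item):
    """Post-minus-pre differences for respondents who answered the item on both surveys."""
    ids = sorted(set(pre) & set(post))  # only individuals present in both surveys
    return [post[i][item] - pre[i][item]
            for i in ids if item in pre[i] and item in post[i]]


for item in ("interpret", "calculate"):
    diffs = matched_changes(pre, post, item)
    if diffs:
        print(f"{item}: n={len(diffs)}, mean change={mean(diffs):+.2f}")
```

Restricting the comparison to individuals present in both surveys avoids attributing differences between two different groups of respondents to the program itself.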

Results

Obtain VA stakeholders’ input on their educational needs related to the PSIs.

Formative evaluation: Telephone interviews

We interviewed nine of the 16 potential informants: four Patient Safety Managers and five Quality/Performance Improvement Managers. This included at least one informant from each of the eight selected sites. Several themes that emerged from the interviews (below) helped guide our development of the formative evaluation pre-program survey and were also taken into consideration when developing the educational program:

- Some informants had heard about the PSIs at meetings held by national VA quality and safety offices, whereas other informants had not heard about the PSIs and were not aware that PSI rates were in reports that they received.
- Some were unclear about how to interpret the PSIs.
- While some had received education on other performance measures from national VA offices, most reported that they had not received any formal education on the PSIs.
- Informants suggested ways they would like the PSI education to be delivered, such as web-based training and information posted on a VA SharePoint site.
- Informants suggested making the educational program more relevant to the audience we were trying to educate and more interactive.
- Informants believed that improvement priorities can be heavily driven by a hospital's performance on quality/safety measures.
- Informants felt that improvement priorities are largely set by senior management according to internal hospital priorities; however, they can also be set according to mandates from the VA National and Regional Offices.

The interviews informed us about stakeholders' potential PSI educational needs as well as organizational contextual factors that may drive hospital-level improvement priorities. Review of the interview data confirmed that the concepts we had developed for the interviews could also be used for the survey. Because our a priori concepts and interview questions yielded helpful information, we used similar concepts and several of the specific interview questions in the survey questionnaire. For example, in the interview, we asked informants "If you were to receive education on the PSIs, how would you like it to be delivered?" Options that emerged from informants' answers to this question included: having access to materials on a website, presentations of case studies, presentations via LiveMeeting (a widely used VA web-based meeting platform), and specific information about the PSIs in a handout or PowerPoint. We then included several of those options as response items when developing the survey question:

I would prefer to learn about the PSIs through:

- Web conferencing (e.g., LiveMeeting)
- Video conferencing (e.g., v-tel)
- Reports or journal articles
- Written case studies
- Video/audio materials (e.g., links to pre-recorded materials)
- Face-to-face conference
- Q&A sessions (e.g., via teleconference)

Table 1 provides additional examples of the concepts, interview questions, and survey questions that were developed based on the interviews.

Formative evaluation: Web-based survey

We administered the pre-program survey to 782 VA stakeholders at 94 of the 132 VA hospitals (71%); 181 (23%) responded. Table 2 shows the range of positions of these survey respondents. A majority of the respondents were Quality/Performance Improvement Managers/Officers and Patient Safety Managers/Officers. The survey results showed whether stakeholders understood the PSIs in the reports they received and what their PSI-related educational needs were.

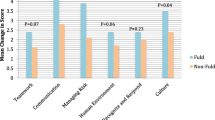

The majority of respondents indicated that they were aware of the PSIs and had received reports containing PSI rates (91% and 73%, respectively), yet far fewer understood how the rates were calculated and how to interpret them (21% and 35%, respectively). Almost 50% of respondents reported having received education about the PSIs from VA Central Office. They were most interested in receiving the education via web conferencing (e.g., LiveMeeting) (84%); education about the PSIs through written materials was of least interest. Respondents' top two PSI-related educational interests were monitoring trends in patient safety/quality (93%) and interpreting PSI rates (92%), although other content areas were also of considerable interest (> 80% of respondents answered yes to learning more about how to use the PSIs for case finding and QI, and about research related to the PSIs). Figures 1, 2, and 3 provide additional details about survey responses for "PSI Knowledge," "PSI Education" (delivery mode), and "PSI Education" (content areas), respectively.

In addition, we learned more about whether survey respondents perceived the PSIs as an organizational priority and what factors might be barriers to using the PSIs for QI. As shown in Fig. 4 (survey responses about "Improvement Prioritization" and "PSI Use"), while respondents agreed that the PSIs fit within the quality/patient safety goals of the VA (86%), that they were a valuable quality/safety measure (71%), and that time would be devoted to improving rates (70%), there was less agreement on whether the PSIs were a current priority (57%) and would be an improvement priority at their hospital in the future (64%). In the open-ended responses, respondents most frequently noted the following barriers to adopting the PSIs for patient safety/quality improvement initiatives: lack of training on the PSIs, lack of understanding and knowledge about the PSI data, lack of timeliness of VA performance reports (e.g., not real-time), and having too many measures and too much data to manage.

Develop and implement a PSI educational program based on stakeholders’ input.

We invited all 181 VA stakeholders who responded to the pre-program survey to participate in the educational program; 82 (45%) registered to participate. Guided by stakeholders’ input from the findings of the pre-program survey and the interviews, we developed and implemented a PSI educational program that was tailored to stakeholders’ needs in the following ways:

Program delivery mode

- We offered six cyberseminar sessions via LiveMeeting (a web-conferencing platform used throughout the VA) because survey respondents preferred this mode. Each session was 45 min and was offered once. On average, nine participants attended each cyberseminar session. A majority of cyberseminar participants were from our middle manager stakeholder group.
- In addition, by offering the program as cyberseminars, we were able to easily record and archive the sessions on the program's SharePoint site so that stakeholders who were unable to attend the live cyberseminars, or who wanted to revisit the sessions, could readily access them. (Recordings can be made available upon request from the corresponding author.) This also reflected interview informants' suggestion that PSI information be placed on a VA SharePoint site.
- To make the sessions more interactive, the cyberseminars were conducted in an interview format, similar to the style that AHRQ uses in its podcasts about the Quality Indicators and Toolkit, with a moderator interviewing a speaker. Cyberseminar speakers were study team members with extensive knowledge about the topic presented, or representatives from our VA national partners' offices [VA National Center for Patient Safety (NCPS) and VA Inpatient Evaluation Center (IPEC)]. LiveMeeting also supported interaction by allowing participants to ask questions at any time during the presentation and receive answers directly from the speakers.
- Because survey respondents indicated that they were interested in learning how to use the PSIs, and interview informants suggested making the education more relevant to participants, we integrated snapshots of the VA's PSI report into our cyberseminars so that participants could directly apply what they learned.

Program content

- Cyberseminar session topics and content corresponded to survey respondents' expressed needs and interests. For example, because respondents indicated that they did not understand how to interpret the PSI rates in reports, we developed two sessions substantially devoted to this topic (Session 2: The PSIs and Your Facility's Report; Session 3: How to Interpret PSI Rates). These sessions also focused on two of the three content areas that were of most interest to survey respondents (how to interpret PSI rates and specific definitions of selected PSIs). Table 3 provides additional information about content areas of interest as indicated by survey respondents, each cyberseminar session, topic, and outline of content covered.
- We also developed educational materials to complement the cyberseminars: 1) an informational sheet, "Interpreting the AHRQ PSIs: A Basic Overview," intended to be used alongside the cyberseminars as a tool for interpreting and understanding the PSIs (Additional file 4); and 2) a matrix that summarized each session and listed the materials referenced in it for ease of access (Additional file 5).

Explore stakeholders’ perceptions about the program.

Post-program evaluation: Web-based survey

Thirteen of the 82 stakeholders who had registered to attend the cyberseminars responded to both the pre- and post-program surveys. In general, changes between pre- and post-program responses were positive for "PSI knowledge," although they were somewhat negative for "PSI use." Table 4 provides the findings for the seven items that were asked in both the pre- and post-program surveys. For example, on the two "PSI Knowledge" questions (I understand how to interpret the PSI rates contained in the reports; and I understand how PSI rates are calculated in the reports), the positive changes between pre- and post-program surveys indicate that respondents perceived that they better understood how PSI rates are calculated and how to interpret them after our program.
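The participation counts reported in this section can be traced as a simple funnel. The short Python sketch below recomputes each stage's percentage from the counts given in the text, rounded to whole numbers as in the paper; the stage labels are our own shorthand, and the final stage's percentage is derived arithmetic rather than a figure the text reports.

```python
# Participation counts as reported in this paper: (stage, numerator, denominator).
stages = [
    ("Hospitals reached by the pre-program survey", 94, 132),
    ("Pre-program survey respondents", 181, 782),
    ("Respondents who registered for the program", 82, 181),
    ("Registrants who answered both surveys", 13, 82),
]


def rate(numerator, denominator):
    """Whole-number percentage, matching the rounding used in the text."""
    return round(100 * numerator / denominator)


for label, n, d in stages:
    print(f"{label}: {n}/{d} = {rate(n, d)}%")
```

Read as a funnel, the counts make the attrition visible: about one in six registrants contributed matched pre- and post-program responses, which bounds how much weight the pre/post comparison can carry.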

Overall, survey respondents had positive perceptions of the PSI educational program. A majority of respondents agreed/strongly agreed that the sessions were useful (73%), were satisfied with what they learned (64%), and would recommend this program to their staff/colleagues (82%). Sessions 2 (“The PSIs and Your Facility’s Reports”) and 3 (“How to Interpret PSI Rates”) contributed most to respondents’ learning about the PSIs.

In the open-ended questions, respondents noted several factors that contributed most to their learning about the PSIs, including the program's structure (e.g., LiveMeeting, archived/recorded sessions on the study SharePoint site that they could access on their own time, availability of archived handouts) and content (e.g., clear and concise content followed by applied examples, how the VA obtains the data to calculate the PSI rates, what the PSIs measure and their limitations). Respondents also noted that concerns about PSI data validity were a barrier to using the PSIs for QI at their hospitals (e.g., coding issues and clinical staff will not "believe the PSI rates").

Discussion

We conducted this implementation and evaluation study with the goal of developing an educational program that was tailored to stakeholders’ needs. Ultimately, we hoped that our educational program would help VA stakeholders learn more about how to interpret and use the PSIs for QI. Our experiences in developing, implementing, and evaluating a PSI educational program yielded several valuable lessons.

First, consistent with the literature [21,22,23, 25, 34,35,36], we found that stakeholder input was highly useful for program development, emphasizing the importance of conducting a formative evaluation with the key stakeholders involved in the specific activities to which the program is most relevant. Through our formative evaluation, we engaged stakeholders in the development process, and built and tailored a program based on their needs and interests. Interviews with a small sample of hospital-level content experts were adequate to provide us with a general awareness of the potential needs and preferences of the larger stakeholder population. This helped us set the scope of the stakeholder survey in a way that yielded very informative results.

Second, the formative evaluation enabled us to tailor the program to stakeholders’ needs and preferences. In particular, the formative evaluation influenced two key components of our educational program: the content areas for the cyberseminars and the mechanism by which the content should be delivered. Although the impact of the program was limited by a low participation rate, our post-program survey results showed that those respondents who did participate expressed positive perceptions of what they had learned from the program.

Third, we learned that the timing of program implementation can be critical for success. When we began the study, we had anticipated that public reporting of VA PSI rates was imminent. However, as we moved forward with implementation, public reporting of VA PSI rates was postponed. Prior studies show that hospitals' responses to public reporting of performance measures may differ depending on hospitals' perceptions of the need to improve on a given measure [7,8,9], which is consistent with what we observed. Public reporting of a performance measure may provide the urgency and impetus for a hospital to act on reports of poor performance [7,8,9]. In our study, postponement of public reporting of the PSIs apparently reduced this sense of urgency, as well as the impetus for hospitals to seek active guidance on ways to improve PSI rates. This shift in timing may partly explain why attendance at our cyberseminars and response rates to our post-program survey were relatively low despite the initial high interest in our program. It may also explain the negative change in respondents' perceptions about PSI use. Given that, as this is written, we are seeing increased interest in the PSIs due to reporting on the Centers for Medicare and Medicaid Services (CMS) Hospital Compare and the VA Strategic Analytics for Improvement and Learning (SAIL), we expect that VA stakeholders would now be much more receptive to education on how to interpret and use the PSIs. Our study emphasizes the importance of aligning program implementation with organizational priorities to ensure proper timing, active engagement by stakeholders, and stronger buy-in from key leadership.

Fourth, this study adds to the limited empirical evidence in the literature about the most useful strategies for educating stakeholders about the PSIs. Respondents preferred to obtain PSI education through web-based meetings (e.g., cyberseminars). Consistent with the literature, we also learned that cyberseminars were relatively easy to implement, could be used to widely disseminate information, and appeared to be less resource-intensive than other options [19]. Our findings suggest that cyberseminars can be a suitable strategy to educate stakeholders about the PSIs as well as other performance measures.

Limitations and strengths

Our study has some limitations. As mentioned in the discussion above, although the program was developed through stakeholder input and initial interest in our PSI education program was high, actual attendance at our cyberseminar sessions was low. The response rate for our post-program survey was also low, potentially due to the timing of PSI reporting. In addition, although we intended the pre- and post-program surveys to take less than 20 min to complete, some respondents may have found the surveys burdensome, which may have lowered the response rate. Finally, while the U.S. is seeing growth in large integrated healthcare delivery systems that resemble the VA in some respects, there are organizational differences between the VA and those private sector systems that may limit the generalizability of our findings.

However, our study had strengths worth highlighting. A major strength is that we engaged stakeholders within a national healthcare system in developing the cyberseminars through interviews and surveys. While there are challenges in developing and implementing programs within a national healthcare system, the lessons learned gave us a better understanding of how different strategies can be useful for disseminating information about quality and safety measures and helping stakeholders to use these measures effectively. Despite the small final sample size, the PSI educational program was developed through wider stakeholder input and attracted initial interest from numerous stakeholders. In addition, although our study findings are within the context of the VA setting and are focused on the PSIs (one set of performance measures), we have attempted to present the lessons learned in ways that facilitate their application to other health care settings, other program (tool, strategy, intervention) development and implementation efforts, and other performance measures.

Conclusions

Although this study focused on the AHRQ PSIs, our experiences yielded valuable lessons that others should consider when developing and implementing programs. Timing of implementation and alignment with organizational priorities are important elements for successful engagement of stakeholders in program development and implementation. However, we learned that one of the most critical elements of program development is the formative evaluation process, which we conducted through interviews and surveys. While the interviews provided us with an opportunity to engage with and get feedback from a smaller group of VA stakeholders (which helped to guide our development of the survey and gave us initial insight into educational needs), the pre-program survey allowed us to gather information more broadly across a range of VA stakeholders. Ultimately, through the formative evaluation process, we developed and implemented a PSI educational program that was tailored to stakeholders’ needs and interests by triangulating the information gathered from both methods.

Abbreviations

- AHRQ: Agency for Healthcare Research and Quality
- CIDER: Center for Information Dissemination and Education Resources
- CMS: Centers for Medicare and Medicaid Services
- IHI VBTS: Institute for Healthcare Improvement Virtual Breakthrough Series
- IRB: Institutional Review Board
- PSIs: Patient Safety Indicators
- QI: Quality improvement
- SAIL: Strategic Analytics for Improvement and Learning
- VA: Veterans Health Administration

References

Centers for Medicare and Medicaid Services. Hospital Compare. https://www.medicare.gov/hospitalcompare. Accessed Jan 2018.

Centers for Medicare and Medicaid Services. Hospital-acquired condition reduction program (HACRP). https://www.cms.gov/Medicare/Medicare-Fee-for-Service-Payment/AcuteInpatientPPS/HAC-Reduction-Program.html. Accessed Jan 2018.

Centers for Medicare and Medicaid Services. Overview of hospital value-based purchasing (VBP). http://www.qualityreportingcenter.com/wp-content/uploads/2016/03/VBP_20160223_QATranscript_vFINAL508.pdf. Accessed Jan 2018.

Gandhi TK, Berwick DM, Shojania KG. Patient safety at the crossroads. JAMA. 2016;315(17):1829–30.

Hussey PS, Burns RM, Weinick RM, Mayer L, Cerese J, Farley DO. Using a hospital quality improvement toolkit to improve performance on the AHRQ quality indicators. Jt Comm J Qual Patient Saf. 2013;39(4):177–84.

de Vos ML, van der Veer SN, Graafmans WC, de Keizer NF, Jager KJ, Westert GP, et al. Implementing quality indicators in intensive care units: exploring barriers to and facilitators of behaviour change. Implement Sci. 2010;5(1):1.

Lindenauer PK, Lagu T, Ross JS, Pekow PS, Shatz A, Hannon N, et al. Attitudes of hospital leaders toward publicly reported measures of health care quality. JAMA Intern Med. 2014;174(12):1904–11.

Hafner JM, Williams SC, Koss RG, Tschurtz BA, Schmaltz SP, Loeb JM. The perceived impact of public reporting hospital performance data: interviews with hospital staff. Int J Qual Health Care. 2011;23(6):697–704.

Rothberg MB, Benjamin EM, Lindenauer PK. Public reporting of hospital quality: recommendations to benefit patients and hospitals. J Hosp Med. 2009;4(9):541–5.

Elixhauser A, Pancholi M, Clancy CM. Using the AHRQ quality indicators to improve health care quality. Jt Comm J Qual Patient Saf. 2005;31(9):533–8.

Agency for Healthcare Research and Quality. Patient Safety Indicators overview. http://qualityindicators.ahrq.gov/Modules/psi_overview.aspx. Accessed Jan 2018.

Rosen AK, Itani KM, Cevasco M, Kaafarani HM, Hanchate A, Shin M, et al. Validating the patient safety indicators in the veterans health administration: do they accurately identify true safety events? Med Care. 2012;50(1):74–85.

Department of Veterans Affairs, Veterans Health Administration. SAIL: Strategic Analytics for Improvement and Learning Value Model. http://www.va.gov/QUALITYOFCARE/measure-up/Strategic_Analytics_for_Improvement_and_Learning_SAIL.asp. Accessed Jan 2018.

Agency for Healthcare Research and Quality. Quality Indicators Toolkit. http://www.ahrq.gov/professionals/systems/hospital/qitoolkit/index.html. Accessed Jan 2018.

Zubkoff L, Neily J, Mills PD, Borzecki A, Shin M, Lynn M, et al. Using a virtual breakthrough series collaborative to reduce postoperative respiratory failure in 16 veterans health administration hospitals. Jt Comm J Qual Patient Saf. 2014;40(1):11–20.

Agency for Healthcare Research and Quality. Webinar on AHRQ Quality Indicators Toolkit for hospitals. https://www.ahrq.gov/professionals/systems/hospital/qitoolkit/webinar080116/index.html. Accessed Jan 2018.

Ruzek JI, Rosen RC, Marceau L, Larson MJ, Garvert DW, Smith L, et al. Online self-administered training for post-traumatic stress disorder treatment providers: design and methods for a randomized, prospective intervention study. Implement Sci. 2012;7(1):1.

Chipps J, Brysiewicz P, Mars M. A systematic review of the effectiveness of videoconference-based Tele-education for medical and nursing education. Worldviews Evid-Based Nurs. 2012;9(2):78–87.

Gilkey MB, Moss JL, Roberts AJ, Dayton AM, Grimshaw AH, Brewer NT. Comparing in-person and webinar delivery of an immunization quality improvement program: a process evaluation of the adolescent AFIX trial. Implement Sci. 2014;9(1):1.

Department of Veterans Affairs, Veterans Health Administration. Center for Information Dissemination and Education Resources: Cyberseminars. http://www.cider.research.va.gov/cyberseminars.cfm. Accessed Jan 2018.

Chen Q, Shin MH, Chan JA, Sullivan JL, Borzecki AM, Shwartz M, et al. Partnering with VA stakeholders to develop a comprehensive patient safety data display: lessons learned from the field. Am J Med Qual. 2016;31(2):178–86.

Hamilton AB, Brunner J, Cain C, Chuang E, Luger TM, Canelo I, et al. Engaging multilevel stakeholders in an implementation trial of evidence-based quality improvement in VA women's health primary care. Transl Behav Med. 2017. https://doi.org/10.1007/s13142-017-0501-5.

Cottrell E, Whitlock E, Kato E, Uhl S, Belinson S, Chang C, et al. AHRQ methods for effective health care. Defining the benefits of stakeholder engagement in systematic reviews. Rockville (MD): Agency for Healthcare Research and Quality (US); 2014.

Rosen A (Principal Investigator). VA HSR&D QUERI Service Directed Research #07–002, “Validating the Patient Safety Indicators in the VA: a multifaceted approach.”

Stetler CB, Legro MW, Wallace CM, Bowman C, Guihan M, Hagedorn H, et al. The role of formative evaluation in implementation research and the QUERI experience. J Gen Intern Med. 2006;21(Suppl 2):s1–8.

Joshi MS, Hines SC. Getting the board on board: engaging hospital boards in quality and patient safety. Jt Comm J Qual Patient Saf. 2006;32(4):179–87.

de Vos M, Graafmans W, Kooistra M, Meijboom B, Van Der Voort P, Westert G. Using quality indicators to improve hospital care: a review of the literature. Int J Qual Health Care. 2009;21(2):119–29.

Hibbard JH, Stockard J, Tusler M. Does publicizing hospital performance stimulate quality improvement efforts? Health Aff (Millwood). 2003;22(2):84–94.

Hamilton A. Qualitative methods in rapid turn-around health services research. In: VA HSR&D National Cyberseminar Series: spotlight on Women’s health; 2013.

Miles M, Huberman M. An expanded sourcebook of qualitative data analysis. 2nd ed. Thousand Oaks, CA: Sage Publications; 1994.

Lukas CV, Holmes SK, Cohen AB, Restuccia J, Cramer IE, Shwartz M, et al. Transformational change in health care systems: an organizational model. Health Care Manag Rev. 2007;32(4):309–20.

Institute for Healthcare Improvement. http://www.ihi.org. Accessed Jan 2018.

Agency for Healthcare Research and Quality. http://www.ahrq.gov. Accessed Jan 2018.

Guise J-M, O’Haire C, McPheeters M, Most C, LaBrant L, Lee K, et al. A practice-based tool for engaging stakeholders in future research: a synthesis of current practices. J Clin Epidemiol. 2013;66(6):666–74.

Wong G, Greenhalgh T, Pawson R. Internet-based medical education: a realist review of what works, for whom and in what circumstances. BMC Med Educ. 2010;10(1):1.

O'Haire C, McPheeters M, Nakamoto E, LaBrant L, Most C, Lee K, et al. AHRQ methods for effective health care. Engaging stakeholders to identify and prioritize future research needs. Rockville (MD): Agency for Healthcare Research and Quality (US); 2011.

Acknowledgements

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the United States government. We are grateful for the strong participation, guidance, and support in this study and would like to thank the following individuals and groups: participants in this study, national partners and speakers from VA National Center for Patient Safety and VA Inpatient Evaluation Center, Whitney Rudin and Melissa Afable (research coordinators), VA Center for Information Dissemination and Education Resources (CIDER), colleagues at CHOIR for manuscript review (Qi Chen, Judy George, Bo Kim, Barbara Lerner, Nathalie McIntosh, Christopher Miller, Hillary Mull, Jennifer Sullivan), and Siena Easley (administrative assistance).

Funding

The work reported in this article was funded by the Department of Veterans Affairs, Veterans Health Administration, Health Services Research and Development, Quality Enhancement Research Initiative as a Rapid Response Project #11–022 (Principal Investigator: Marlena H. Shin, JD, MPH).

Availability of data and materials

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

Author information

Contributions

MHS, PR, MS, AB, LZ and AK made substantial contributions to the conception and design of the study. MHS, PR, MS, EY, KS and AK analyzed and interpreted the data. MHS, PR, MS, AB and AK made substantial contributions to the writing of the manuscript. All authors have read and approved the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

We received approval from the Institutional Review Board (IRB) at the VA Boston Healthcare System (IRB #2563). Study participation was voluntary, and we obtained informed consent from participants for each phase of the study. The VA Boston IRB approved a waiver of documented informed consent for participation in the telephone interviews, web-based surveys, and educational program. Participants therefore gave verbal consent for the telephone interviews and consented to the web-based surveys and to the educational program through an opt-in link embedded in an email.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Formative Evaluation: Interview Guide. This file provides the interview guide we used for the telephone interviews to obtain a general understanding of potential PSI educational needs and assess whether similar a priori concepts should inform the survey. (PDF 168 kb)

Additional file 2:

Formative Evaluation: Pre-program Survey. This file provides the survey that we administered to obtain stakeholders’ input on their educational needs related to the PSIs. (PDF 169 kb)

Additional file 3:

Post-program Evaluation Survey. This file provides the post-program survey that we administered to learn about stakeholders’ perceptions of the PSI Educational Program. (PDF 259 kb)

Additional file 4:

Informational Sheet, Interpreting the AHRQ PSIs: A Basic Overview. This file provides the informational sheet which PSI Educational Program participants could use to help them interpret and understand the PSIs. (PDF 87 kb)

Additional file 5:

PSI Educational Program Matrix. This file highlights information covered in each session of the PSI Educational Program and provides a list of materials referenced in each session. (PDF 213 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Shin, M.H., Rivard, P.E., Shwartz, M. et al. Tailoring an educational program on the AHRQ Patient Safety Indicators to meet stakeholder needs: lessons learned in the VA. BMC Health Serv Res 18, 114 (2018). https://doi.org/10.1186/s12913-018-2904-5

DOI: https://doi.org/10.1186/s12913-018-2904-5