Abstract

Background

We developed a novel intervention that uses behavioral economics incentives and mobile health (mHealth) text messages to increase HIV knowledge and testing frequency among Latinx sexual minority men and Latinx transgender women. Here we provide a theoretically grounded assessment of the intervention’s acceptability and feasibility.

Methods

We conducted 30-min exit interviews with a stratified sample of participants (n = 26 Latinx sexual minority men and 15 Latinx transgender women), supplemented with insights from study staff (n = 6). All interviews were recorded, transcribed, and translated when necessary, and their content was analyzed using Dedoose. Cohen’s kappa was 0.894 across coded excerpts. We evaluated acceptability based on participants’ cognitive and emotional reactions to the intervention and whether they considered it appropriate. We measured feasibility based on resource, scientific, and process assessments (e.g., functionality of the text messaging service, feedback on study recruitment procedures and surveys).

Results

Regarding acceptability, most participants clearly understood the intervention as a program to receive information about HIV prevention methods through text messages. Some participants who did not complete the intervention said they had not fully understood what it entailed at enrollment and thought it was a one-time engagement rather than an ongoing program. Though some participants with a higher level of education felt the information was simplistic, most appreciated that it moved beyond a narrow focus on HIV to include general information on sexually transmitted infections; drug use and impaired sexual decision-making; and differential risks associated with sexual positions and practices. Latinx transgender women in particular appreciated receiving information about Pre-Exposure Prophylaxis. While participants did not fully understand their exact chances of winning a prize in the quiz component, most enjoyed the quizzes and the chance to win. Participants appreciated that the intervention required minimal time and said it was generally culturally appropriate. Regarding feasibility, most participants reported that the text message platform worked well, though inactive participants consistently said technical difficulties led to their disengagement. Staff reported that clients had varying reactions to being approached while being tested for HIV, with some unwilling to enroll and others very open and curious about the program. Both staff and participants raised concerns about the length of the recruitment process and study surveys.

Conclusions

Our theoretically grounded assessment shows the intervention is both acceptable and feasible.

Trial registration

The trial was registered on May 5, 2017 with the ClinicalTrials.gov registry [NCT03144336].


Background

HIV disproportionately affects Latinx sexual minority men (LSMM) and Latinx transgender women (LTGW), yet they are often unaware of their HIV status. The prevalence of HIV in the general U.S. population is less than 1%, [1] but HIV rates are markedly higher among LSMM and LTGW. In Los Angeles County, home to one of the country’s largest HIV epidemics [2] and the site of this study, the estimated HIV prevalence is 15% among LSMM [3] and 17% among LTGW [4]. Latinx adults are also less likely to have their infections diagnosed, [5] a critical public health problem because the undiagnosed population accounts for nearly 40% of new HIV infections [6]. A report from the U.S. Centers for Disease Control and Prevention (CDC) highlights the urgent need to increase HIV testing among individuals at higher risk of acquiring HIV, such as LSMM and LTGW [7].

LSMM and LTGW face difficulties engaging with the formal health care system, often due to fear of perceived authorities such as providers, and mobile health (mHealth) approaches can help them access up-to-date HIV prevention information [8, 9]. mHealth technologies are a promising way to engage and stay in contact with communities facing a range of barriers to accessing health services. As the current COVID-19 pandemic shows, mHealth technologies can provide a crucial link for relaying quickly evolving information. Recent studies [10,11,12,13,14,15], including our own [8, 16, 17], have shown how increasingly restrictive U.S. immigration policies [18] have heightened the unwillingness of many LSMM and LTGW to engage with formal systems, [19] further elevating the need to use mHealth platforms to maintain a critical line of communication. mHealth shows potential to improve HIV testing frequency among these groups, [20,21,22,23,24,25] but evidence of technology disengagement over time [26] makes it unclear how best to achieve this outcome [9, 27,28,29,30].

Our completed R34 study, MOTIVES (MObile Technology and IncentiVES), used simple mHealth text messages combined with behavioral economics incentives and produced very promising results [8, 31,32,33,34]. We partnered with Bienestar, Los Angeles County’s largest Latinx-serving HIV service organization. We randomized 166 participants into either the ‘Information Only’ group (receiving weekly text messages with HIV prevention information) or the ‘Information Plus’ group, whose members could additionally win small incentives conditional on responding to quizzes about the weekly text messages. Correctly answering quiz questions improved the chance of winning a prize at the next testing visit from 1:10 with no correct answers to 1:5 with all answers correct. Our data show large effect sizes: 49% of participants in the ‘Information Only’ group and 59% in the ‘Information Plus’ group tested at Bienestar at least once in a year, compared with 35% in a matched control group (relative increases of 40% and 66%, respectively). Participants were also substantially more likely than controls to test at least twice per year (78% increase in ‘Information Only’ and 115% increase in ‘Information Plus’) [34]. MOTIVES did not explicitly target Pre-Exposure Prophylaxis (PrEP), a daily pill that, if taken consistently and correctly, reduces the risk of acquiring HIV from sex by about 99% [35], yet PrEP uptake more than doubled in the intervention groups (p < 0.01).
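
The quiz incentive can be made concrete with a short calculation. The sketch below is illustrative only: the text gives just the two endpoints (1:10 with no correct answers, 1:5 with all answers correct), so it reads them as 1-in-10 and 1-in-5 probabilities and assumes, hypothetically, that the chance rises linearly with the share of correct answers. The function name `win_probability` and the four-question quiz length are likewise assumptions, not details of the MOTIVES protocol.

```python
from fractions import Fraction

# Endpoints from the study description, read here as "1 in 10" and "1 in 5".
P_NONE_CORRECT = Fraction(1, 10)
P_ALL_CORRECT = Fraction(1, 5)


def win_probability(correct: int, total: int) -> Fraction:
    """Return a hypothetical chance of winning a prize at the next test visit.

    ASSUMPTION: the chance increases linearly with the share of correct quiz
    answers between the two reported endpoints; the actual rule for
    intermediate scores is not described in the text.
    """
    if total <= 0 or not 0 <= correct <= total:
        raise ValueError("expected total > 0 and 0 <= correct <= total")
    share = Fraction(correct, total)
    return P_NONE_CORRECT + (P_ALL_CORRECT - P_NONE_CORRECT) * share


if __name__ == "__main__":
    # Hypothetical four-question quiz.
    for correct in range(5):
        p = win_probability(correct, 4)
        print(f"{correct}/4 correct: {p} ({float(p):.1%})")
```

Whatever the intermediate rule, the reported endpoints mean that a participant answering every question correctly has twice the chance of winning of one answering none; using exact fractions keeps those comparisons free of floating-point rounding.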

Taking the intervention to scale requires a careful assessment of its acceptability and feasibility. Our goal is therefore to provide a theoretically grounded examination of intervention acceptability and feasibility to inform future scale-up and to offer lessons for other mHealth interventions focused on sexual and gender minority populations such as LSMM and LTGW.

Methods

Extensive details on the study design and quantitative data have been published elsewhere [34]; here we summarize key aspects and focus on the qualitative data, which have not previously been described.

Study aim, design, and setting

The goal of the study is to assess the acceptability and feasibility of the MOTIVES intervention. We designed the exit interviews to evaluate different domains of acceptability and feasibility based on theoretically grounded frameworks (described in detail below).

The study was conducted at Bienestar Human Services, Inc., a community-based organization with multiple locations across Los Angeles County offering programs and services to primarily Latinx communities. Programs and services are available for HIV-positive people, individuals at risk for HIV, gay and bisexual men, lesbian women, transgender women, youth and people who use substances.

Participant characteristics

The study sample included 41 participants (n = 26 LSMM and 15 LTGW) and study staff (n = 6). Eligibility criteria for LSMM and LTGW participants were: being HIV-negative; self-identifying as a sexual minority man or transgender woman as well as Latinx; being 18 years of age or older; owning or having regular access to a mobile phone; and fluency in English or Spanish. Staff participants included all providers who enrolled LSMM and LTGW in the study.

Procedures

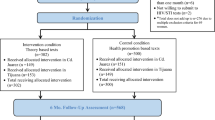

The Institutional Review Board approved all study protocols and materials. Qualitative data were collected between April and June 2019. Participants provided written consent as part of the overall study; for the phone interviews, the informed consent document was read to all participants, who then provided verbal consent, as approved by our Institutional Review Board.

For the stratified sampling approach, we wanted to speak with both active participants and those who signed up for MOTIVES but never engaged in the program (inactive participants), and with participants assigned to both the ‘Information Only’ and ‘Information Plus’ components of the intervention. This yielded six groups to sample from: active LSMM in the ‘Information Only’ component; active LSMM in the ‘Information Plus’ component; active LTGW in the ‘Information Only’ component; active LTGW in the ‘Information Plus’ component; inactive LSMM; and inactive LTGW. We divided the overall study sample into these six groups, randomly selected participants within each group to recruit for interviews, and created a stratified sample proportional to the number of participants in each group. A breakdown of the sampling frame is shown in Fig. 1, and descriptive statistics are included in Table 1.
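
The proportional stratified sampling step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study's actual procedure: the stratum sizes used below are hypothetical placeholders rather than the study's counts, and the largest-remainder rule for rounding fractional quotas is an assumed choice, since the text does not say how quotas were rounded. The target of 41 interviews matches the number of participants interviewed.

```python
import random


def proportional_allocation(sizes: dict[str, int], n: int) -> dict[str, int]:
    """Split n interviews across strata in proportion to stratum size.

    Uses the largest-remainder method (an assumed rounding rule) so that the
    per-stratum counts sum exactly to n.
    """
    total = sum(sizes.values())
    if not 0 <= n <= total:
        raise ValueError("n must be between 0 and the total number of participants")
    # Integer part and remainder of each stratum's exact quota n * size / total.
    base = {s: (n * size) // total for s, size in sizes.items()}
    remainder = {s: (n * size) % total for s, size in sizes.items()}
    leftover = n - sum(base.values())
    # Give the leftover slots to the strata with the largest remainders;
    # ties keep the original stratum order because sorting is stable.
    for s in sorted(sizes, key=lambda name: remainder[name], reverse=True)[:leftover]:
        base[s] += 1
    return base


def stratified_sample(roster: dict[str, list[str]], n: int, seed: int = 0) -> dict[str, list[str]]:
    """Randomly select participants within each stratum, proportionally."""
    rng = random.Random(seed)
    alloc = proportional_allocation({s: len(ids) for s, ids in roster.items()}, n)
    return {s: rng.sample(ids, alloc[s]) for s, ids in roster.items()}


if __name__ == "__main__":
    # Hypothetical stratum sizes (NOT the study's counts).
    sizes = {
        "active LSMM, Information Only": 40,
        "active LSMM, Information Plus": 40,
        "active LTGW, Information Only": 20,
        "active LTGW, Information Plus": 20,
        "inactive LSMM": 20,
        "inactive LTGW": 10,
    }
    roster = {s: [f"{s} #{i}" for i in range(k)] for s, k in sizes.items()}
    for stratum, chosen in stratified_sample(roster, n=41).items():
        print(f"{stratum}: {len(chosen)} selected")
```

With these made-up sizes, the six strata receive 11, 11, 6, 5, 5, and 3 interviews. Sampling without replacement within each stratum (`random.sample`) mirrors the description of randomly selecting participants inside each of the six groups.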

All qualitative interviews were conducted by phone by a cisgender female, Latinx Assistant Policy Researcher from the RAND Corporation (AMG) who is fluent in English and Spanish and trained in qualitative data collection and analysis. The interview scripts were pilot tested several times, with feedback from various members of the research team. All interviews were recorded, translated when necessary, and transcribed; transcriptions and translations were carried out by an external transcription company. The interviewer reviewed the translations for quality assurance and returned them for revision when necessary, until high-quality translations were obtained. The interviews lasted approximately 30 min, and participants were sent a $15 electronic gift card for participating in the qualitative interviews.

Interview scripts to assess acceptability and feasibility

We drew on theoretically grounded frameworks in the peer-reviewed literature to identify the core components of acceptability [36] and feasibility [37]. We describe acceptability using the framework of Sekhon and colleagues, which assesses the acceptability of an intervention based on cognitive and emotional responses to it. Tickle-Degnen suggests determining feasibility based on four types of assessments: management, resource, scientific, and process. Adequate management of the study and adequate resources to conduct it are prerequisites for NIH funding, so here we focus on the scientific and process assessments (e.g., reliability of our measurement tools, adherence to study recruitment procedures) that determine the feasibility of large-scale implementation. Interview scripts for participants and study staff are included as Additional file 1.

Qualitative data analysis

Data analysis followed a directed content analysis approach that allowed themes to emerge throughout an iterative coding process [38]. Two study investigators (SM and AMG) independently reviewed each transcript and then together developed a preliminary codebook, which was revised during joint coding of two subsequent interviews. The codebook included descriptions, inclusion/exclusion criteria, and example quotes. The final codebook included 10 higher-level main themes and 16 sub-themes. To establish inter-rater reliability on the main themes, the coders jointly coded 10 transcripts and then each independently coded a randomly selected set of 31 new transcript excerpts. Cohen’s kappa was 0.894 across coded excerpts, indicating “good agreement” between reviewers [39]. After reliability was attained, one coder coded the remaining client interviews. Because of the small number of staff interviews, illustrative staff quotes are not included, to maintain confidentiality. The two coders met weekly to review each other’s work, identify emergent topics, and discuss outliers. Finally, both coders reviewed all coded excerpts and jointly wrote a summary of results. Dedoose software (Version 8.3.21) was used to manage the qualitative data.
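
The reliability statistic above is Cohen's kappa, κ = (p_o − p_e)/(1 − p_e), which corrects the raw proportion of agreement p_o for the agreement p_e expected by chance from each coder's code frequencies. Kappa is a coefficient with a maximum of 1, so the reported value corresponds to κ = 0.894. The sketch below is a minimal illustration, not the study's own computation: it assumes each coder assigns exactly one code per excerpt, and the function name `cohens_kappa` and the example codes are hypothetical. An established implementation is available as `cohen_kappa_score` in scikit-learn's `sklearn.metrics` module.

```python
from collections import Counter


def cohens_kappa(coder_a: list[str], coder_b: list[str]) -> float:
    """Cohen's kappa for two coders who each assigned one code per excerpt.

    kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of
    agreement and p_e is the agreement expected by chance from each coder's
    marginal code frequencies.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of excerpts")
    n = len(coder_a)
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n**2
    if p_e == 1:
        # Both coders used one identical code throughout, so kappa is undefined.
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical presence/absence coding of one theme across ten excerpts.
    a = ["yes"] * 5 + ["no"] * 5
    b = ["yes"] * 4 + ["no"] * 5 + ["yes"]
    print(round(cohens_kappa(a, b), 3))
```

In this made-up example the coders agree on 8 of 10 excerpts (p_o = 0.8), but because each used "yes" and "no" equally often, half of that agreement is expected by chance (p_e = 0.5), giving κ = 0.6. This illustrates why kappa is typically lower than raw percent agreement.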

Results

Below we summarize results regarding the intervention’s acceptability and feasibility; illustrative quotes are included in Tables 2 and 3, respectively.

Intervention acceptability

Intervention coherence – did participants understand MOTIVES?

Participants described the intervention in a range of ways, but most said MOTIVES was a program through which they received information about HIV prevention methods by text message. However, when explaining why they did not continue with MOTIVES, some inactive participants said they had not fully understood MOTIVES at enrollment and thought it was a one-time engagement rather than an ongoing program.

Affective attitude – how did participants feel about MOTIVES?

Most participants said they had a good experience with MOTIVES. With regard to the structure of the intervention, participants found it useful to receive texts reminding them to get tested, and they liked getting texts on the same day each week. Though some participants with a higher level of education felt the information was simplistic, most appreciated the content of MOTIVES because of the specificity and practicality of the information and the variety of topics covered. Key topical areas that participants appreciated were: moving beyond a narrow focus on HIV to include general information on sexually transmitted infections; drug use and impaired sexual decision-making; and differential risks associated with sexual positions and practices. Additionally, many participants, especially LTGW, appreciated receiving information about PrEP. Participants suggested integrating other topics into the messages, including individual-level concerns such as general mental health and more information on drug use, as well as HIV-specific issues such as medical advancements and the side effects of PrEP. Participants also wanted more information on structural-level issues such as domestic violence and their rights with respect to accessing publicly available services. One LTGW-specific concern raised was parenting for those who are transitioning.

Self-efficacy – were participants able to perform the MOTIVES activities?

Most participants seemed to enjoy the intervention and saw it as fun. Most reported having used the links in the weekly text messages only a few times, primarily when they were less familiar with the topic covered. Participants generally took part in the quizzes, though they noted some critiques; for example, several said the quiz questions were poorly worded and that they did not clearly understand their chances of winning.

Perceived effectiveness – did participants think MOTIVES was effective?

Participants shared positive changes in all three outcomes of interest for the intervention (i.e., knowledge about HIV, getting tested for HIV, and changing sexual behavior), as well as increased knowledge about PrEP. Most participants mentioned they learned new things about HIV, and even those who felt they already had adequate knowledge said they appreciated receiving updated information. In terms of getting tested for HIV, most participants shared intentions to continue getting tested every 3 months, though a few participants who were not sexually active shared they would likely only get tested every 6 months. With regard to changes in sexual behavior, participants mentioned feeling more confident about how to practice safer sex and feeling more comfortable asking their partner’s HIV status before having sex.

Although the study did not specifically target PrEP, we found a statistically significant increase in its uptake among MOTIVES participants [34]. Most participants said they had previously heard about PrEP but learned much more about it through MOTIVES. Participants who were not currently on PrEP described individual-level barriers (e.g., not having many sex partners, concern that it does not protect against other sexually transmitted infections, and concerns about side effects and, for LTGW, interactions with body-affirming hormones) as well as structural-level barriers (e.g., not having health insurance).

Burden – what was the perceived effort of participating in MOTIVES?

Participants liked that MOTIVES required a minimal investment of their time and said it was easy to participate in and did not take much time out of their day. Some said it took effort, either because they spent more time thinking through the questions to improve their chances of answering correctly or because they were busy when the texts arrived. Most were satisfied with the frequency and timing of the text messages, though some LTGW wanted to receive messages more frequently and a few LSMM wanted to receive them less frequently.

Ethicality – was the intervention a good fit with participants’ value systems?

Both LSMM and LTGW shared that MOTIVES was generally culturally appropriate. Many also said that the information received would likely be appropriate for a broader audience and should not be limited to just people in Latinx LGBT communities. Additionally, participants generally shared feeling comfortable with receiving information about HIV on their cell phones, with only one participant sharing feelings of discomfort.

Opportunity costs – were sufficient benefits given to engage participants in MOTIVES?

Most participants appreciated receiving gift cards for their participation in MOTIVES. Some felt the gift cards motivated them to stay engaged with the intervention, but many others said they would have likely participated in MOTIVES even without the gift cards. Many participants shared that the gift cards helped them buy essential items, like shampoo, eggs, milk, and condoms. Some reported difficulties with receiving their gift cards or with using their gift cards to pay for things. Suggestions for future incentives included having additional options for different kinds of gift cards and increasing the value of each gift card.

Intervention feasibility

Management assessment

Management components (e.g., the investigators’ administrative capacity to manage the randomized controlled trial, and the capacities, expertise, and availability of the research investigators and staff for the planned research) had to be demonstrated to receive the grant. Other aspects (e.g., formats and structures of the trial) are described in detail elsewhere [34].

Resource assessment – technological capacity & software

Overall, most participants said the text messages worked well. Notably, the main reason given for not continuing with the intervention was technical difficulties: almost all of these participants said they believed they had signed up for the intervention but then never received subsequent text messages. Other technical problems included participants being unsure whether their quiz responses had been logged, receiving messages split up and out of order, and having issues receiving their mobile gift cards. Several participants also noted that they had not been signed up in their preferred language. Recommendations for addressing technical issues included having a staff member send a message on the spot and confirm that the participant received it, and having someone follow up to check whether participants are receiving the messages as planned.

Resource assessment: institutional support – what is [the organization’s] willingness, motivation, and capacity to carry through with project-related tasks and support investigator time and effort?

Bienestar staff said they felt it was important to work with LSMM and LTGW and that MOTIVES gave them a better understanding of the study populations, uncovered some of the barriers these populations face in trying to remain HIV-negative, and provided critical information. Staff had varied levels of prior research experience: some had participated in research projects before, and others never had. However, staff generally expressed interest in participating in future research activities, often because they wanted to make a difference and expand their skill set. Some said they would be more motivated if they received additional compensation, more recognition, and more involvement in other aspects of the research (e.g., analysis, developing results), and if they had more flexibility in their workload to accommodate research activities. Most felt it would not be difficult to gain supervisor approval to participate in future research activities, and they estimated they could spend between half an hour and 2 h per day on research projects.

Process assessment – what is the recruitment process?

Staff reported that clients had varying reactions to being approached while being tested for HIV, with some unwilling to enroll and others very open and curious about MOTIVES. Staff members found a number of tactics helpful for recruitment, including preparatory activities such as role-playing scenarios and being able to ask questions among team members. However, staff also faced several challenges during recruitment. For instance, some found it difficult that they could enroll only walk-ins (in an effort to engage new rather than existing clients of Bienestar). Others had difficulty enrolling people who were skeptical of the personal information being collected about them or of having information about HIV sent to their phones. Timing was also a challenge for both staff and prospective participants: staff felt they had to juggle more priorities, and prospective participants felt the recruitment process took too long (30 to 40 min) when they had come in expecting a quick HIV test.

Scientific assessments – what is the level of safety and burdensomeness of the frequency, intensity, and duration of MOTIVES?

With respect to the surveys, most participants felt they were an appropriate length; only a few felt the surveys were too long, a sentiment echoed by some of the study staff. Participants generally found the survey questions easy to understand; however, some felt the translations were poor, making some questions difficult to interpret. For the midline and endline surveys, staff faced participant non-responsiveness and missed appointments, as well as outdated participant contact information (e.g., outdated emails or phone numbers, or contact information for a family member or friend given at the start of the study). Staff found the following useful for getting participants to complete the surveys: explaining the purpose and importance of the research, stressing the gift card incentive, and being able to complete some of the surveys over the phone rather than all in person (for participant convenience).

Discussion

Our exit interviews provide a comprehensive assessment of the intervention’s acceptability and feasibility. Here we compare and contrast key issues raised with existing literature.

With respect to acceptability, most participants showed high intervention coherence and said they understood MOTIVES, but some noted confusion about what the study entailed and how long it would last. Although we created a range of tools to ensure participants understood key aspects of the consent process (e.g., highlighting summary points after reading the full consent document) and of the study (e.g., visuals conveying the chances of winning), these findings underscore the need for engaging and helpful ways to ensure study participants understand the core components of the research they agree to participate in. The HIV Prevention Trials Network, a worldwide collaborative clinical trials network responsible for large randomized controlled trials, has called for improvements to these processes to ensure studies adequately address participants’ informational needs, [40] yet more concrete examples of the tips and tools researchers use to achieve this understanding are needed.

The affective attitude (i.e., how participants felt about MOTIVES), self-efficacy (i.e., whether participants were able to do the tasks), and perceived effectiveness reported by participants were promising. Overall, participants appreciated the intervention and the fact that it required minimal time given their busy professional and personal lives. They found the intervention enjoyable (despite not fully understanding their exact chances of winning) and felt it helped them improve key prevention behaviors. Our findings highlight the value of and need for such interventions alongside others that address ongoing structural challenges [41].

Participants responded well to the tailoring of the intervention to the unique needs of LTGW; however, participants reported confusion when asked whether the intervention met their needs as Latinx participants. A first-generation immigrant on our research team suggested this confusion may arise because, without significant interaction with other cultures (e.g., through higher education and other employment opportunities), it can be hard to identify what is unique to one’s own culture without points of comparison. There is extensive literature on the importance of cultural tailoring [42] and on how to operationalize it [43, 44]. Beyond ensuring that materials are accurately translated, written at the right literacy level, and accompanied by appropriate visual representations, our experience suggests that more nuanced domains of cultural competence remain hard to achieve. Perhaps most important is meaningful engagement of the study population in all aspects of the study, from design through implementation. Further, beyond integrating their feedback, ensuring that the study population is represented among staff (both on the research team and among study staff) is critical to ensuring that diverse perspectives are not only considered but put into practice.

While the perceived opportunity costs of participation reported here were minimal, participants’ gift card preferences differed somewhat between our formative and exit interviews. Specifically, in our formative interviews participants requested gift cards for fun activities such as going to the movies and, especially among LTGW, buying makeup at upscale stores [33]. However, our exit interviews suggest that participants wanted more practical options to purchase food and other household items. One consistent point was that people wanted cash, but our community partner, like many others, has strict rules about the safety of storing cash at its offices for research purposes. Despite extensive investigation, our study team could not identify a credit card-like gift card without fees attached; all existing options had prohibitively high fees that would have undermined the intervention’s overall low-cost approach and further limited the ability of future community organizations to include the incentive component. Going forward, providing a range of options that can be used to buy both essential and non-essential items may be ideal.

With respect to intervention feasibility, technology was described as both a strength and, at times, a weakness. While technology streamlined many aspects of the intervention, it also introduced challenges (e.g., changed phone numbers, inconsistent text message formats) and underscored the need for study staff to check in and confirm that the technological components are working throughout the intervention, which in some ways undermines the light-touch approach at the heart of mHealth. Other studies have also found mobile technology to be feasible for implementing health interventions that increase knowledge and improve health behaviors, [45,46,47,48] though some have also encountered difficulties during implementation, [49] suggesting the need to further explore how these interventions can be improved.

Another overarching theme across the institutional and scientific assessments is the constant balance between programmatic and research goals. We designed the study with a focus on implementation science [50,51,52,53,54] to improve the integration of research procedures into the existing workflow. Despite these efforts, study staff consistently raised concerns about the time constraints the research placed on them. Collectively, our team has over 20 years of HIV prevention research experience, and this study included some of the most streamlined research instruments we have used to date. Still, participants and study staff raised concerns about the time taken to complete research activities such as consent and surveys [40]. This emphasizes the need for ongoing discussion so that study staff better understand why the range of data is collected and how more robust information allows a more detailed understanding of the factors driving the intervention’s overall impact.

This study has both strengths and limitations. Its strengths include theoretically grounded assessments of acceptability and feasibility, two concepts that are often discussed in research but rarely rigorously assessed. Further, the study includes both participant and staff perspectives, helping to address issues from multiple vantage points. Finally, the stratified sampling approach provided more balanced insights into the study’s strengths and weaknesses than interviewing only those who completed the intervention, whose views were likely biased in a positive direction. These strengths must be considered, however, in the context of several limitations. First, exit interviews were all conducted at the end of the pilot study; conducting them soon after each participant dropped out or completed the study would have captured more recent recollections. Relatedly, the time lapse made it particularly difficult to gather helpful information from participants who dropped out shortly after the study started (versus those recruited towards the end of the enrollment period, whose exit interviews took place closer to their study completion). Second, because our community partner is small, interviews with management-level staff could not be appropriately de-identified; therefore, our discussion of institutional support is limited to study staff perspectives. Finally, exit interviews did not generate robust information on whether study procedures were followed beyond the recruitment process.

Conclusions

Our theoretically grounded assessment shows our intervention is both acceptable and feasible. While there are areas for improvement, the concerns raised are addressable. Further, our in-depth analysis provides a template for comprehensively assessing the diverse domains of acceptability and feasibility. Taken together, our research highlights the potential of this intervention and transparently discusses how its acceptability and feasibility can be improved going forward.

Availability of data and materials

The data underlying this study cannot be shared publicly or on request because of ethical restrictions imposed by the authors' ethics committee, the Certificate of Confidentiality received from the NIH, and the consent form signed by participants. Questions about data availability can be directed to Sarah MacCarthy through the following channels:

Phone: 310-393-0411, ext. 6743.

Fax: 310-393-4818.

E-mail: sarahm@rand.org

Abbreviations

- LTGW: Latinx transgender women
- LSMM: Latinx sexual minority men
- PrEP: Pre-Exposure Prophylaxis
- MOTIVES: MObile Technology and IncentiVES
- mHealth: Mobile health

References

CDC. CDC - Statistics Overview - Statistics Center - HIV/AIDS. 2013; Available from: http://www.cdc.gov/hiv/statistics/basics/.

Fauci AS, et al. Ending the HIV epidemic: a plan for the United States. JAMA. 2019;321(9):844–5.

Division of HIV and STD Programs, et al. Los Angeles County Five-Year Comprehensive HIV Plan (2013–2017). 2013.

Division of HIV and STD Programs and Los Angeles County Department of Public Health. Transgender Population Estimates (2-12-13). 2013; Available from: http://publichealth.lacounty.gov/wwwfiles/ph/hae/hiv/Transgender%20Population%20Estimates%202-12-13%20pub.pdf.

CDC. HIV Continuum of Care, US, 2014, Overall by Age, Race/Ethnicity, Transmission Route and Sex. 2017; Available from: https://www.cdc.gov/nchhstp/newsroom/2017/HIV-Continuum-of-Care.html.

Li Z, et al. Vital signs: HIV transmission along the continuum of care—United States, 2016. MMWR Morb Mortal Wkly Rep. 2019;68(11):267.

CDC. Recommendations for HIV Screening of Gay, Bisexual, and Other Men who have Sex with Men - United States, 2017. 2017; Available from: https://www.cdc.gov/mmwr/volumes/66/wr/mm6631a3.htm.

Barreras J, Linnemayr S, MacCarthy S. “We have a stronger survival mode”: exploring knowledge gaps and culturally sensitive messaging of PrEP among Latino men who have sex with men and Latina transgender women in Los Angeles, CA. AIDS Care. 2019;31(10):1221–7.

Brown JL, Sales JM, DiClemente RJ. Combination HIV prevention interventions: the potential of integrated behavioral and biomedical approaches. Curr HIV/AIDS Rep. 2014;11(4):363–75.

Galindo GR, et al. Community member perspectives from transgender women and men who have sex with men on pre-exposure prophylaxis as an HIV prevention strategy: implications for implementation. Implement Sci. 2012;7(1):116.

Mantell JE, et al. Knowledge and attitudes about preexposure prophylaxis (PrEP) among sexually active men who have sex with men and who participate in New York City Gay Pride events. LGBT Health. 2014;1(2):93–7.

Krakower DS, et al. Limited awareness and low immediate uptake of pre-exposure prophylaxis among men who have sex with men using an internet social networking site. PLoS One. 2012;7(3):e33119.

Bauermeister JA, et al. PrEP awareness and perceived barriers among single young men who have sex with men in the United States. Curr HIV Res. 2013;11(7):520.

Jaiswal J, et al. Structural barriers to pre-exposure prophylaxis use among young sexual minority men: The P18 Cohort Study. Curr HIV Res. 2018;16(3):237–49.

Lelutiu-Weinberger C, Golub SA. Enhancing PrEP access for Black and Latino men who have sex with men. J Acquir Immune Defic Syndr. 2016;73(5):547.

Brooks R, et al. Experiences of anticipated and enacted Pre-Exposure Prophylaxis (PrEP) stigma among Latino MSM in Los Angeles. AIDS Behav. 2019;23(7):1964–73.

Brooks RA, et al. Persistent stigmatizing and negative perceptions of pre-exposure prophylaxis (PrEP) users: implications for PrEP adoption among Latino men who have sex with men. AIDS Care. 2019;31(4):427–35.

Pew Research Center. ICE arrests went up in 2017, with biggest increases in Florida, northern Texas, Oklahoma. 2018 [Accessed July 2018]; Available from: http://www.pewresearch.org/fact-tank/2018/02/08/ice-arrests-went-up-in-2017-with-biggest-increases-in-florida-northern-texas-oklahoma/.

Ortega AN, et al. Health care access, use of services, and experiences among undocumented Mexicans and other Latinos. Arch Intern Med. 2007;167(21):2354–60.

Lim MSC, et al. SMS STI: a review of the uses of mobile phone text messaging in sexual health. Int J STD AIDS. 2008;19:287.

Sun CJ, et al. Acceptability and feasibility of using established geosocial and sexual networking mobile applications to promote HIV and STD testing among men who have sex with men. AIDS Behav. 2015;19(3):543–52.

Newell A. A mobile phone text message and Trichomonas vaginalis. Sex Transm Infect. 2001;77:225.

Gabarron E, et al. Mobile phone applications for the care and prevention of HIV and other sexually transmitted diseases: a review. J Med Internet Res. 2013;15:e1.

Sullivan PS, Grey JA, Rosser BRS. Emerging technologies for HIV prevention for MSM: What we’ve learned, and ways forward. J Acquir Immune Defic Syndr. 2013;63(Suppl 1):S102–7.

Dhar J, Leggat C, Bonas S. Texting – a revolution in sexual health communication. Int J STD AIDS. 2006;17:375.

Druce KL, Dixon WG, McBeth J. Maximizing engagement in mobile health studies: lessons learned and future directions. Rheum Dis Clin North Am. 2019;45(2):159–72.

CDC. Prevalence and awareness of HIV infection among men who have sex with men--21 cities, United States, 2008. MMWR Morb Mortal Wkly Rep. 2010;59(37):1201–7.

Pop-Eleches C, et al. Mobile phone technologies improve adherence to antiretroviral treatment in a resource-limited setting: a randomized controlled trial of text message reminders. AIDS. 2011;25(6):825–34.

Free C, et al. Txt2stop: a pilot randomised controlled trial of mobile phone-based smoking cessation support. Tob Control. 2009;18(2):88–91.

Bramley D, et al. Smoking cessation using mobile phone text messaging is as effective in Maori as non-Maori. N Z Med J. 2005;118(1216):U1494.

Linnemayr S, et al. Behavioral economics-based incentives supported by mobile technology on HIV knowledge and testing frequency among Latino/a men who have sex with men and transgender women: Protocol for a randomized pilot study to test intervention feasibility and acceptability. Trials. 2018;19(1):540.

Linnemayr S, et al. Using behavioral economics to promote HIV prevention for key populations. J AIDS Clin Res. 2018;9(11):780.

MacCarthy S, et al. Strategies for improving mobile-technology-based HIV prevention interventions with Latino men who have sex with men and Latina transgender women. AIDS Educ Prev. 2019;31(5):407–20.

MacCarthy S, et al. Using behavioral economics to increase HIV knowledge and testing among Latinx sexual minority men and transgender women: A quasi-experimental pilot study. J Acquir Immune Defic Syndr. 2020;85:189.

Centers for Disease Control and Prevention. Pre-Exposure Prophylaxis (PrEP). 2020 May 13 [cited 2020 Oct 9]; Available from: https://www.cdc.gov/hiv/risk/prep/index.html.

Sekhon M, Cartwright M, Francis JJ. Acceptability of healthcare interventions: an overview of reviews and development of a theoretical framework. BMC Health Serv Res. 2017;17(1):88.

Tickle-Degnen L. Nuts and bolts of conducting feasibility studies. Am J Occup Ther. 2013;67(2):171–6.

Hsieh H-F, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–88.

Cohen J. A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960;20(1):37–46.

Brown BJ, Sugarman J. Why ethics guidance needs to be updated for contemporary HIV prevention research. J Int AIDS Soc. 2020;23(5):e25500.

Blankenship KM, et al. Structural interventions: concepts, challenges and opportunities for research. J Urban Health. 2006;83(1):59–72.

Truong M, Paradies Y, Priest N. Interventions to improve cultural competency in healthcare: a systematic review of reviews. BMC Health Serv Res. 2014;14(1):99.

Butler M, et al. Improving cultural competence to reduce health disparities. 2016.

Agency for Healthcare Research and Quality. Evidence-based Practice Center Systematic Review Protocol: Improving Cultural Competence to Reduce Health Disparities for Priority Populations. AHRQ; 2014. Available from: https://effectivehealthcare.ahrq.gov/products/cultural-competence/research-protocol.

Hashemian TS, Kritz-Silverstein D, Baker R. Text2Floss: the feasibility and acceptability of a text messaging intervention to improve oral health behavior and knowledge. J Public Health Dent. 2015;75(1):34–41.

MacCarthy S, et al. A randomized controlled trial study of the acceptability, feasibility, and preliminary impact of SITA (SMS as an Incentive To Adhere): a mobile technology-based intervention informed by behavioral economics to improve ART adherence among youth in Uganda. BMC Infect Dis. 2020;20(1):1–10.

Le D, et al. Feasibility and acceptability of SMS text messaging in a prostate cancer educational intervention for African American men. Health Informatics J. 2016;22(4):932–47.

Reback CJ, et al. Text messaging reduces HIV risk behaviors among methamphetamine-using men who have sex with men. AIDS Behav. 2012;16(7):1993–2002.

Senn TE, et al. Development and preliminary pilot testing of a peer support text messaging intervention for HIV-infected black men who have sex with men. J Acquir Immune Defic Syndr. 2017;74(Suppl 2):S121.

Ober AJ, et al. An organizational readiness intervention and randomized controlled trial to test strategies for implementing substance use disorder treatment into primary care: SUMMIT study protocol. Implement Sci. 2015;10(1):66.

Ober AJ, et al. Assessing and improving organizational readiness to implement substance use disorder treatment in primary care: findings from the SUMMIT study. BMC Fam Pract. 2017;18(1):107.

Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: The Evidence-Based Practice Attitude Scale (EBPAS). Ment Health Serv Res. 2004;6(2):61–74.

Weiner BJ, Amick H, Lee S-YD. Conceptualization and measurement of organizational readiness for change: a review of the literature in health services research and other fields. Med Care Res Rev. 2008;65(4):379–436.

Fernandez ME, et al. Developing measures to assess constructs from the inner setting domain of the consolidated framework for implementation research. Implement Sci. 2018;13(1):52.

Acknowledgments

We would like to thank Mary Vaiana for her careful review of the manuscript.

Funding

Funding for this study was provided by the NIH, grant number 1R34MH109373-01A1 (PI: Linnemayr). The sponsor (NIH) had no involvement in (1) the study design; (2) the collection, analysis, and interpretation of data; (3) the writing of the report; or (4) the decision to submit the manuscript for publication. SM wrote the first draft of the manuscript, and no honorarium, grant, or other form of payment was given to anyone to produce the manuscript.

Author information


Contributions

SM and SL designed and supervised all aspects of the study; RG and ACD provided input during the study conceptualization; SM, AMG, AK, and JB participated in the data collection; SM and AMG led the data analysis; and ZW provided analysis of other study data to serve as background for the paper. All authors reviewed and provided insight on the manuscript, and all authors read and approved the final manuscript.


Ethics declarations

Ethics approval and consent to participate

Ethics approval was received from all participating institutions (RAND: 2016-0234-AM01; Bienestar Human Services, Inc.: 2016-09-657; New York State Psychiatric Institute: 7401), and the use of verbal consent was approved. Participants provided written consent at the start of the study, and additional verbal consent was obtained to conduct study interviews with participants over the phone.

Consent for publication

Not applicable; the manuscript contains no identifiable information about participants.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

MacCarthy, S., Mendoza-Graf, A., Wagner, Z. et al. The acceptability and feasibility of a pilot study examining the impact of a mobile technology-based intervention informed by behavioral economics to improve HIV knowledge and testing frequency among Latinx sexual minority men and transgender women. BMC Public Health 21, 341 (2021). https://doi.org/10.1186/s12889-021-10335-5


DOI: https://doi.org/10.1186/s12889-021-10335-5