Abstract

Cancer-related cognitive impairment (CRCI) is one of the most concerning conditions experienced by patients living with cancer and has a major impact on their quality of life. Available comprehensive cognitive assessment tools are too time-consuming for routine clinical use. Importantly, although shorter, screening tools such as the Montreal Cognitive Assessment or the Mini-Mental State Examination have demonstrated a ceiling effect in persons with cancer and thus fail to detect the subtle cognitive changes expected in patients with CRCI. This study addresses this lack of cognitive screening tools by developing a novel tool, the Fast Cognitive Evaluation (FaCE).

A population of 245 patients with 11 types of cancer at different illness and treatment time-points was enrolled for the analysis. FaCE was developed using Rasch Measurement Theory, a model that establishes the conditions for a measurement tool to be considered a rating scale.

FaCE shows excellent psychometric properties. The population size was large enough to test the set of items (item reliability index = 0.96). Person reliability (0.65) and person separation (1.37) indices indicate excellent internal consistency. FaCE’s scale is accurate (reliable), with high discriminant ability between cognitive levels. Within an average testing time of five minutes, FaCE assesses the main cognitive domains affected in CRCI.

FaCE is a rapid, reliable, and sensitive tool for detecting even minimal cognitive changes over time. This can contribute to early and appropriate interventions for better quality of life in patients with CRCI. In addition, FaCE could be used as a measurement tool in research exploring cognitive disorders in cancer survivors.


Introduction

In 2020, the Canadian Cancer Society estimated that 225,800 Canadians would be diagnosed with cancer that year [1]. The 5- and 10-year average survival rates for persons with cancer, all cancers combined, are reported to be 63% and 57%, respectively [1]. Although survival rates have risen, cancer is often accompanied by secondary symptoms, caused by the malignancy itself or by its treatments, that can persist months or years into remission. Cancer-related cognitive impairment (CRCI) is amongst the most troublesome of these, as it not only affects patients’ quality of life but also significantly limits their daily and social functioning, including their return to active professional life [2,3,4,5]. CRCI was first recognized by cancer survivors themselves [6] and is estimated to affect 75% of patients during treatment [7] and approximately 25-35% of patients years after treatment [3, 8]. Unfortunately, CRCI remains underdiagnosed and challenging to treat [5, 9]. CRCI is described as a syndrome combining difficulties with working memory, decreased concentration, attention deficits, reduced verbal fluency, and impaired executive function [9].

The first step in treating any condition, including CRCI, is being able to detect it. Presently, there is a lack of cognitive screening tools for CRCI that are adapted to the clinical setting: none is specific to this population, and none can detect the subtle cognitive changes seen in CRCI early and track them over time (before, during, and after the course of treatment). Currently available cognitive assessment tests such as the Repeatable Battery for the Assessment of Neuropsychological Status [10] and the High Sensitivity Cognitive Screen [11] are too time-intensive to be used in everyday clinical practice [12]. The Mini-Mental State Examination (MMSE) [13] and the Montreal Cognitive Assessment (MoCA) [14] have been widely used as cognitive screening tools for suspected dementia in the geriatric population and, more recently, in other populations. Although these tools are more time-efficient, our group has previously demonstrated that the MoCA, when administered to persons with cancer, presents a noticeable ceiling effect, meaning that it cannot detect subtle cognitive deficits [4, 15]. These results confirmed the conclusions of other authors [16, 17]. This limitation is of great importance because patients with cancer are expected to show better cognitive ability than patients with dementia, as they do not present with widespread organic brain degeneration [18, 19].

The present study addresses the current gap in cognitive assessment tools specific to cancer survivors, as defined by the American Cancer Society [20], by presenting a novel tool developed specifically with persons with cancer. This tool is rapid, valid, reliable, specific, and sensitive in detecting even minimal cognitive changes.

Methodology

First, a French cognitive assessment tool, the Fast Cognitive Screen (FCS) (Appendix 2), was developed based on our previous research findings with the MoCA [15] and on the recommendations and systematic reviews published by various expert groups [9, 21,22,23]. Second, Rasch Measurement Theory (RMT) was applied to the FCS to develop a novel tool with excellent psychometric properties, which we called the Fast Cognitive Evaluation (FaCE).

The study was approved by the Research Ethics Board of the CHU de Québec (2014-1209; A13-05-1209; and 2017-3312).

Population

Patients diagnosed with cancer at any stage, either before chemotherapy or currently or previously treated, were recruited from the outpatient clinics of the CHU de Québec-Université Laval, Québec City, QC, Canada, between August 2013 and August 2018. Patients were contacted with the permission of their treating team; in addition, a portion of the women with breast cancer were recruited before chemotherapy through a study on chemotherapy-induced peripheral neuropathy [24]. Patients with brain tumors or brain metastases, a history of cerebrovascular disease, psychiatric illness, or dementia were excluded. Informed consent was obtained from all participants.

Tool development, data collection and testing

The FCS included questions assessing cognitive domains commonly affected by CRCI: orientation in time and space; visuospatial abilities (copying a cube, drawing a clock); executive functioning (a Trail Making Test (A-H; 1-8)); attention (5 sequential subtractions, repetition of 4 numbers forward and 3-7 numbers backward); working memory [25] (repeating 7 words twice rather than only 5 words as in the MoCA, a difficulty increase intended to address the ceiling effects previously highlighted [15]); verbal fluency (naming as many fruits and vegetables as possible in one minute); and delayed recall (recalling the 7 previously stated words).

Socio-demographic and medical information were collected through a structured questionnaire and medical chart review. The FCS was then administered to all participants by trained evaluators. All four evaluators received standardized training including a minimum of two shadowing sessions with skilled evaluators and an instruction booklet.

Rasch measurement theory analysis

Rasch Measurement Theory [26] was then applied to the FCS to develop the FaCE tool. RMT is based on the premise that the probability of each response depends on the difference between the difficulty level of an item and the ability level of the examinee: people with higher abilities are more likely than people with lower abilities to answer difficult items correctly. It specifies the conditions a measurement tool must satisfy to be considered a rating scale, that is, one with proportional levels of difficulty [27]. Rasch analyses were conducted using Winsteps version 4.1.5 statistical software [28].
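To make this premise concrete, the sketch below writes out the standard dichotomous Rasch model, in which the probability of success depends only on the difference between person ability (theta) and item difficulty (b), both in logits, together with Andrich's rating scale extension for items scored in ordered categories. The source does not state which polytomous Rasch variant was used for FaCE, so the rating scale function, its threshold values, and all function names are illustrative assumptions rather than the authors' analysis.

```python
import math


def rasch_probability(theta: float, b: float) -> float:
    """Dichotomous Rasch model: P(correct) for ability theta and difficulty b (logits)."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def rating_scale_probabilities(theta: float, b: float, thresholds):
    """Category probabilities (0..m) under Andrich's rating scale model.

    thresholds: step difficulties tau_1..tau_m in logits (illustrative values).
    Category k has numerator exp(sum_{j<=k} (theta - b - tau_j)); category 0 has exp(0).
    """
    exponents = [0.0]
    for tau in thresholds:
        exponents.append(exponents[-1] + (theta - b - tau))
    largest = max(exponents)  # subtract the maximum for numerical stability
    weights = [math.exp(e - largest) for e in exponents]
    total = sum(weights)
    return [w / total for w in weights]


print(rasch_probability(0.0, 0.0))  # ability equals difficulty
print(rating_scale_probabilities(0.5, 0.0, [-1.0, 0.0, 1.0]))
```

A person whose ability equals an item's difficulty has a 0.5 probability of success, and each additional logit of ability multiplies the odds of success by e (about 2.72). With a single threshold at 0, the rating scale function reduces to the dichotomous model.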

Test-of-fit statistics (infit and outfit mean-square residuals) between 0.5 and 1.5 were considered to indicate suitable fit [29]. Differential item functioning (DIF) analyses for age, sex, education, cancer stage, chemotherapy and radiotherapy status, comorbidities, and interviewers were also conducted to identify items whose response patterns across these groups did not fall within the RMT predictions [30]. A principal components analysis (PCA) of residuals was performed to determine whether the FCS is unidimensional, meaning that it evaluates a single latent trait (i.e., cognition) [31]. Reckase’s criterion proposes that unidimensionality is achieved when the variance explained by a measure is at least 20% [32], and, as proposed by Linacre, an eigenvalue of at least 3 in any contrast was used as a cut-point to determine the need for further investigation of the items included in the identified cluster [31]. To support the scale’s reliability (internal consistency), separation statistics above 2 and reliability above 0.8 were used as criteria [33].
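The infit and outfit statistics referred to above are mean squares of model residuals. The sketch below computes them for a single dichotomously scored item from person measures and an item difficulty that are assumed to be already estimated. Winsteps computes these quantities internally (and extends them to polytomous items), so this only illustrates the definitions; the function names and the inclusive 0.5-1.5 check are assumptions.

```python
import math


def rasch_probability(theta: float, b: float) -> float:
    """Dichotomous Rasch probability of a correct response."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_fit_mean_squares(responses, thetas, b):
    """Return (infit, outfit) mean squares for one dichotomous item.

    responses: observed 0/1 scores of each person on this item
    thetas:    person ability estimates in logits (same order)
    b:         item difficulty estimate in logits
    """
    squared_residuals = []      # (x - E[x])^2
    variances = []              # Var(x) = p * (1 - p)
    squared_standardized = []   # z^2 = (x - E[x])^2 / Var(x)
    for x, theta in zip(responses, thetas):
        p = rasch_probability(theta, b)
        var = p * (1.0 - p)
        residual = x - p
        squared_residuals.append(residual ** 2)
        variances.append(var)
        squared_standardized.append(residual ** 2 / var)
    # Outfit: unweighted mean of squared standardized residuals,
    # sensitive to unexpected responses from persons far from the item.
    outfit = sum(squared_standardized) / len(squared_standardized)
    # Infit: information-weighted mean square, dominated by persons
    # whose ability is close to the item's difficulty.
    infit = sum(squared_residuals) / sum(variances)
    return infit, outfit


def fits_model(mean_square, lower=0.5, upper=1.5):
    """Check a mean square against the 0.5-1.5 range used in the text."""
    return lower <= mean_square <= upper
```

Values near 1 mean the responses are about as noisy as the model predicts. For instance, a single failure by a person 3 logits above an item's difficulty yields an outfit mean square of about e^3, roughly 20, far outside the 0.5-1.5 range.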

Development of the Fast Cognitive Evaluation tool based on the Fast Cognitive Screen

The FCS underwent two successive Rasch analyses to improve its measurement performance and select the minimal and most reliable set of items. After each analysis, items were removed if they did not fit the model or prevented the FCS from meeting the a priori requirements for an ideal rating scale. The tool demonstrated excellent psychometric properties after two rounds of analysis. An Analysis of Variance (ANOVA) was performed to test the effects on the score of the time needed to complete the FCS and of the evaluator who conducted the test. The optimized version of the FCS was then renamed the Fast Cognitive Evaluation (FaCE) (Appendix 1).

Development of a second French and two English Versions of the Fast Cognitive Evaluation

A second equivalent French version and two additional English versions of FaCE were developed by a linguistic specialist by carefully matching French-English word-stimuli on a set of psycholinguistic variables, including word frequency [34], word/syllable length [35], imageability [36], age of acquisition [37] and reaction time norms [38]. One pair of French/English versions of FaCE measures semantic verbal fluency through animal naming, while the other uses fruits/vegetables, as these are stable across French and English in terms of the number of items typically named in 60 seconds [39, 40]. Full details are provided in Appendix 3.

Results

Population

During the study period, 246 participants met the eligibility criteria. One was excluded because of major discrepancies in responses. The study population was 67% women, aged 26 to 95 years, and approximately half (48%) held a university degree. Participants had 11 different cancer types; 46% had breast cancer, as expected since one of our largest recruitment sites was a breast cancer clinic. Table 1 summarizes population characteristics. FaCE was administered at different time points: before (44%), during (35%), and after completing chemotherapy (21%).

Results of Rasch analyses of the FCS to develop FaCE

The FCS went from 55 items assessing 6 cognitive dimensions to FaCE, with 31 items assessing 5 dimensions. After the first Rasch analysis, orientation questions, clock drawing, and forward and backward repetition of number series were removed because of weak correlation between observed and expected scores (point-measure correlation between 0 and 0.1). FaCE takes an estimated average of 5 minutes to administer.
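The removal rule above can be sketched as follows: a point-measure correlation is the Pearson correlation between the scores observed on one item and the persons' Rasch measures, and items below a cutoff are flagged. The item names, scores, and measures in the example are hypothetical, and flagging every value below 0.1 (including negative correlations, not only the 0 to 0.1 band reported here) is our assumption.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences (undefined if either is constant)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def flag_weak_items(item_scores, person_measures, cutoff=0.1):
    """Return {item: r} for items whose point-measure correlation is below cutoff.

    item_scores: dict mapping a (hypothetical) item name to the scores each
                 person obtained on that item
    person_measures: person ability estimates in logits, same order
    """
    flagged = {}
    for name, scores in item_scores.items():
        r = pearson(scores, person_measures)
        if r < cutoff:
            flagged[name] = r
    return flagged


# Hypothetical example: four persons, two items.
measures = [-1.0, 0.0, 1.0, 2.0]
items = {"ITEM_A": [0, 0, 1, 1], "ITEM_B": [1, 0, 0, 1]}
print(flag_weak_items(items, measures))  # ITEM_B is flagged (r = 0.0)
```

ITEM_A rises with ability (r of about 0.89) and is kept, while ITEM_B is unrelated to ability and would be a candidate for removal.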

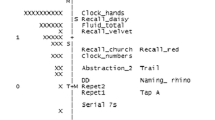

As shown in Table 2, the Rasch analysis conducted on FaCE showed no gaps in the scale and no floor or ceiling effects, since no participant scored 0 or 27, respectively. All items adequately fit the model (0.5 < Outfit MNSQ < 1.5). Figure 1 presents a visual conceptualization of FaCE and its measures. The vertical axis is on a Logit (log-odds) scale, a mathematical transformation of the probability (0 to 1) of an individual obtaining a particular score. To the left of the vertical axis is the distribution of persons, arranged by increasing cognitive capacity from bottom to top. The letter ‘M’ represents the mean cognitive capacity (0.3 Logit). To the right of the vertical axis are the questionnaire items, arranged by increasing difficulty from bottom to top. The letter ‘+M’ represents the mean item difficulty (0 Logit). According to RMT, the results in Fig. 1 show that the most difficult item (WM1.4) lies above the person with the highest cognitive capacity. Similarly, multiple items are located below the person with the lowest capacity.
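The Logit scale of Fig. 1 is the natural logarithm of the odds, ln(p / (1 - p)). The short sketch below converts between probabilities and logits; reading the person and item means reported above through a dichotomous Rasch model is a simplifying assumption, since FaCE items are scored in categories.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability strictly between 0 and 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def inverse_logit(x: float) -> float:
    """Probability corresponding to a value on the logit scale."""
    return 1.0 / (1.0 + math.exp(-x))


# Mean person measure (0.3 Logit) minus mean item difficulty (0 Logit):
# under a dichotomous Rasch model (an assumption), the average participant
# would succeed on an average item with a probability of about 0.57.
print(round(inverse_logit(0.3 - 0.0), 2))  # 0.57
```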

Fig. 1 Person-Item Map: measures for category scores (maximum probability of observing a category). In the Person-Item Map, items are positioned where the probability of reaching a pre-defined category on the rating scale is highest (expected score on the item; for example, VF.7 is positioned at 2 Logit). The vertical axis is on a Logit scale; to its left is the distribution of persons (‘#’ = 2 persons, ‘.’ = 1 person) and to its right is the item hierarchy (each item is abbreviated, e.g., VF: Verbal Fluency). Persons are arranged by increasing cognitive capacity from bottom to top, and items by increasing difficulty. The number following each abbreviation corresponds to the scoring category used for the analysis, which could correspond to more than one possible answer (e.g., VF.6 corresponds to naming 25 to 26 fruits and vegetables in one minute). ‘M’: mean cognitive capacity. ‘+M’: mean item difficulty. ‘S’ and ‘T’: one and two standard deviations from the mean cognitive capacity, respectively. ‘+S’ and ‘+T’: one and two standard deviations from the mean item difficulty, respectively.

Test-of-fit statistics demonstrated outfit residuals between 0.6 and 2.8, meaning that the observed (measured) data fit the predicted model. There are almost no gaps within the scale (differences of less than 0.4 Logit between items), and all items are separated by less than 1.5 Logits [31]. The polarity of the items matched what the model expected, as the items fit the hypothesized mathematical model (predictive validity): as item difficulty increases, a higher ability level is required (point-measure correlations were all positive, ranging from 0.37 to 0.71).
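Gaps in an item hierarchy can be checked by sorting item difficulties and inspecting the distance between neighboring items. The sketch below does this with hypothetical item labels and difficulties (not FaCE estimates); the maximum acceptable gap is left as a parameter because the cutoff is an analytic choice.

```python
def scale_gaps(item_difficulties, max_gap):
    """List adjacent item pairs whose difficulties differ by more than max_gap.

    item_difficulties: dict mapping an item label to its difficulty (logits)
    max_gap: largest acceptable distance (logits) between neighboring items
    """
    ordered = sorted(item_difficulties.items(), key=lambda kv: kv[1])
    gaps = []
    for (lower_name, lower_d), (upper_name, upper_d) in zip(ordered, ordered[1:]):
        distance = upper_d - lower_d
        if distance > max_gap:
            gaps.append((lower_name, upper_name, distance))
    return gaps


# Hypothetical difficulties, not FaCE estimates.
difficulties = {"X1": -1.2, "X2": -0.9, "X3": -0.6, "X4": 1.1}
print(scale_gaps(difficulties, max_gap=1.5))  # flags the X3 -> X4 interval
```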

The DIF analysis showed that age, sex, education, cancer stage, chemotherapy and radiotherapy status, comorbidities, and interviewers did not have significant differential functioning (p > 0.05).
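A common way to test DIF in Rasch analysis is to calibrate an item's difficulty separately in two groups and check whether the difference exceeds what the standard errors allow. The sketch below uses a large-sample normal approximation for the p-value; Winsteps reports a Welch t statistic with its own degrees of freedom, so this is a simplified illustration, and the difficulties and standard errors in the example are hypothetical.

```python
import math


def dif_contrast(d_group1, se_group1, d_group2, se_group2):
    """Test the difference between two group-specific item difficulties.

    d_*:  item difficulty (logits) estimated within each group
    se_*: standard error of each estimate
    Returns (contrast, z, two-sided p) using a normal approximation.
    """
    contrast = d_group1 - d_group2
    joint_se = math.sqrt(se_group1 ** 2 + se_group2 ** 2)
    z = contrast / joint_se
    # Two-sided tail probability of a standard normal: P(|Z| > |z|).
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return contrast, z, p_value


# Hypothetical item calibrated in two groups (e.g., two age bands).
contrast, z, p = dif_contrast(0.40, 0.20, 0.10, 0.25)
print(f"contrast={contrast:.2f} logits, z={z:.2f}, p={p:.3f}")
```

In practice the same comparison is repeated for every item and every grouping variable listed above.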

The PCA indicated that unidimensionality was satisfied. The variance explained by the measures was 52.7% (expected value: 56.2%). No potential sub-dimensions (eigenvalue > 2) were present, since no clusters were identified in any contrast (eigenvalues ranged between 0.7 and 1.8); FaCE is therefore unidimensional. The item characteristic curves show no major deviation from what was expected by the model. Figure 2 shows the test characteristic curves; their sigmoidal shape illustrates good response probability. FaCE showed excellent item reliability (0.96), and the item separation index confirms that our population size was large enough to test the set of items. A person reliability index of 0.65 and a person separation index of 1.37 were observed.
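In Rasch analysis, the separation index G and the reliability R express the same ratio of true to error variance, related by R = G² / (1 + G²). The sketch below converts between the two; it relies only on this standard identity and the values reported in this section.

```python
import math


def reliability_from_separation(g: float) -> float:
    """Rasch reliability implied by a separation index G: G^2 / (1 + G^2)."""
    return g ** 2 / (1.0 + g ** 2)


def separation_from_reliability(r: float) -> float:
    """Separation index implied by a reliability R in [0, 1): sqrt(R / (1 - R))."""
    return math.sqrt(r / (1.0 - r))


print(round(reliability_from_separation(1.37), 2))  # 0.65 (person values above)
print(round(separation_from_reliability(0.96), 1))  # 4.9 (item reliability above)
print(reliability_from_separation(2.0))             # 0.8
```

The identity also shows that the two a priori criteria given in the Methods, separation above 2 and reliability above 0.8, are the same requirement expressed in two ways.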

Results of the ANOVA showed no significant effect of the time needed to complete the test on the score (p = 0.88) nor of the evaluators on the time needed to complete the test (p = 0.85).

Fast Cognitive Evaluation Scoring System and Interpretation

Table 3 describes FaCE and its scoring system. From left to right, the columns present the assessed cognitive dimensions, the corresponding tasks to be performed by the patient, the response categories for each task, and the attributed values for each response category.

Individuals earn points for successfully completing items. The total number of points earned is termed the ‘total items score’ (0 to 27 points), which is converted to a percentage ‘FaCE score’ (0 to 100%) using a conversion scale. The conversion scale was created by expressing the Logit values obtained from RMT modelling of the total items score on a percentage scale. Therefore, the FaCE score is linear, with equidistant scores representing equal differences in cognitive performance, unlike the total items score, which resembles the raw MoCA score [14]. For instance, if two patients both ‘lose’ three (3) points on their total items score but start from different original scores (e.g., 27 to 24 vs. 25 to 22), they will have experienced different degrees of cognitive decline (30% vs. 12%, respectively). Conversely, if two patients lose the same amount on their FaCE score, regardless of their original scores (e.g., 90% to 80% vs. 20% to 10%), they have experienced the same degree of cognitive decline (10 percentage points). In this sense, the FaCE score behaves like a ruler. In the research context, this means that changes in cognitive performance over time are estimated more accurately with the linear FaCE score than with the non-linear total items score [25].
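A raw-score-to-measure table of the kind described above can be sketched for dichotomous items. Under the Rasch model, the maximum-likelihood ability for a raw score r is the value at which the expected score equals r, found here by Newton-Raphson iteration, and the resulting logits are then rescaled linearly to 0-100. FaCE's actual conversion scale comes from the authors' calibration of categorically scored items and is not reproduced here; the item difficulties, the 0.3-point adjustment for the extreme scores 0 and n (which have no finite estimate), and the min-max rescaling are all assumptions for illustration.

```python
import math


def measure_for_raw_score(raw, difficulties, extreme_adj=0.3, tol=1e-10, max_iter=100):
    """Maximum-likelihood ability (logits) for a raw score, dichotomous Rasch model.

    Solves sum_i P_i(theta) = raw by Newton-Raphson. Scores of 0 and n have no
    finite estimate, so they are moved inward by extreme_adj score points
    (an assumed convention).
    """
    n = len(difficulties)
    target = min(max(raw, extreme_adj), n - extreme_adj)
    theta = math.log(target / (n - target))  # starting value
    for _ in range(max_iter):
        probs = [1.0 / (1.0 + math.exp(-(theta - b))) for b in difficulties]
        expected = sum(probs)
        information = sum(p * (1.0 - p) for p in probs)
        step = (target - expected) / information
        step = max(-1.0, min(1.0, step))  # damp large steps for stability
        theta += step
        if abs(step) < tol:
            break
    return theta


def conversion_table(difficulties):
    """Map each raw score 0..n to a 0-100 linear score via the Rasch measures."""
    n = len(difficulties)
    measures = [measure_for_raw_score(r, difficulties) for r in range(n + 1)]
    lowest, highest = measures[0], measures[-1]
    return {r: round(100.0 * (m - lowest) / (highest - lowest), 1)
            for r, m in enumerate(measures)}


# Hypothetical difficulties for a 6-item test (not FaCE estimates).
print(conversion_table([-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]))
```

Because the spacing between consecutive entries depends on the item difficulties, equal raw-score differences need not map to equal differences on the 0-100 scale, which is the property the text describes.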

Fast Cognitive Evaluation linguistic results

Cross-linguistic comparisons showed that the French and English versions of FaCE are comparable (Appendix 3). Benchmarking the stimuli and tasks against databases of psycholinguistic norms for both languages provides good face validity that the cognitive demands of both versions are similar. This methodology is robust and could be used for translation into other languages.

Discussion

FaCE is an easily administered tool for clinical and research use, with excellent psychometric properties, and is suitable for patients living with cancer. It is linear, has no ceiling or floor effects and no gaps along the scale, and has good internal consistency and discriminatory power. It uses only the necessary number of items. It accurately evaluates cognitive abilities with minimal interviewer training requirements; thus, it is accessible to any healthcare professional or research staff. Patients with cancer across the whole illness trajectory, from diagnosis and remission to advanced stage, were involved, supporting the suitability of FaCE for all patients with cancer [3].

As previously mentioned, analyses of the psychometric properties of the MoCA showed significant floor and ceiling effects [15, 16]. Koski et al. [41] tried to overcome the floor effect of the MoCA by combining it with the MMSE. The results showed improved precision in the lower range of patients’ cognitive performance; however, the dimensionality remained questionable, and the ceiling effect could not be overcome. Other authors proposed an extensive cognitive test, the Dépistage Cognitif de Québec, evaluating five domains for the early recognition of atypical dementia in patients over 50 years old [42, 43]. This test requires 20 to 30 minutes to administer and advanced training. Its psychometric properties have not been tested. Additionally, it may not meet criteria for unidimensionality, as the measure includes a behavioral index score reported by caregivers or close relatives. Other domains it covers, such as semantic knowledge and the identification of surface dyslexia or dysgraphia, may also affect dimensionality. Its linearity and floor and ceiling effects have also not been formally tested.

In clinical settings, FaCE could help address the lack of assessment tools, which leads to underestimation of cognitive difficulties during cancer treatment and across the illness trajectory. Subjective complaints of cognitive impairment could be objectively measured using FaCE for better patient-tailored clinical management and improved quality of life [44]. Furthermore, as patients with cancer are often affected by fatigue, sleep disruption, pain, and nutritional deficits, tools that screen for cognitive changes need to be short while remaining thorough. This measurement tool can track subtle cognitive changes across a wide range of cognitive performance in patients at risk of CRCI, even at the onset of symptoms.

To mitigate potential learning (practice) effects, two versions of FaCE were developed per language (French and English) based on neuropsychometric and neurolinguistic parameters. Given the simplicity of the tool, the different versions can reasonably be expected to have similar psychometric properties; this should be confirmed in future research.

Limitations

Although approximately half of our sample were breast cancer survivors, this did not appear to influence the results, as no significant differences were observed between the scores of participants with breast cancer and those of participants with one of the 10 other cancer types enrolled in this study.

Psychological status, fatigue, sleep disruption, pain, infection, nutritional deficits, and hormonal changes can influence cognitive function [5, 45]. Although these factors were not measured, we would expect that, had they influenced the score, clusters or extreme scores would have appeared. As patients with breast cancer were overrepresented, there was concern about the impact of menopausal status on cognitive ability, as described in several studies [6]; however, menopausal changes have been shown to have no effect on CRCI in this population [6].

The design of this study did not allow for the identification of cut-off scores to classify patients according to the degree of cognitive impairment. Further studies testing FaCE in populations without documented illnesses are needed for this purpose. However, this tool is sufficiently developed to screen for cognitive changes over time at the patient and population levels. We recommend using FaCE for clinical and research purposes in persons with cancer at any stage of the disease trajectory, including complete remission.

Future directions

When using FaCE in clinical or research settings, it would be relevant to explore the effect on cognitive performance of patients’ personal and clinical characteristics, such as family background, history of depression, and type and duration of cancer treatments. Given the subjective impact of CRCI on an individual’s functioning [6, 8], it would also be interesting to explore the person’s subjective experience of CRCI by comparing FaCE scores with those of a tool with a subjective component, such as the FACT-Cog [8]. Furthermore, given that FaCE does not have a multitasking item, the addition of such an item could be explored. It is also known that an important component of CRCI is an increase in the time required to perform cognitive tests, so it would be worth testing whether incorporating completion time into the FaCE score adds value. Since CRCI may resemble impairments described by persons with chronic conditions other than cancer, it would be relevant to explore the psychometric properties of FaCE in these populations.

Conclusion

In conclusion, the Fast Cognitive Evaluation (FaCE), developed using Rasch Measurement Theory, is a novel, valid, and reliable measurement tool that can be used to accurately screen for cognitive deficits in persons with cancer and to track changes over time. An important benefit of this screening tool is that it can be administered to nearly any person with cancer by any healthcare provider with minimal training. The detection of cognitive deficits through repeated administration of FaCE should trigger appropriate non-pharmacological and pharmacological interventions to improve the quality of life of cancer survivors. FaCE could also serve as a reliable and simple measurement tool in research exploring cognitive disorders in people living with cancer.

Availability of data and materials

The datasets generated and analyzed during the current study are not publicly available (because participant informed consent for public availability of study data was not obtained) but are available from the corresponding author on reasonable request.

References

Comité consultatif des statistiques canadiennes sur le cancer (2020) Statistiques canadiennes sur le cancer. Société canadienne du cancer

Boykoff N, Moieni M, Subramanian SK (2009) Confronting chemobrain: an in-depth look at survivors’ reports of impact on work, social networks, and health care response. Journal of Cancer Survivorship 3: 223-232 https://doi.org/10.1007/s11764-009-0098-x

Janelsins MC, Heckler CE, Peppone LJ, Ahles TA, Mohile SG, Mustian KM, Palesh O, O’Mara AM, Minasian LM, Williams AM (2018) Longitudinal trajectory and characterization of cancer-related cognitive impairment in a nationwide cohort study. Journal of Clinical Oncology 36: 3231 https://doi.org/10.1200/JCO.2018.78.6624

Olson B, Marks DL (2019) Pretreatment cancer-related cognitive impairment—Mechanisms and outlook. Cancers 11: 687 https://doi.org/10.3390/cancers11050687

Allen DH, Myers JS, Jansen CE, Merriman JD, Von Ah D (2018) Assessment and management of cancer- and cancer treatment-related cognitive impairment. J Nurse Pract 14: 217-224.e5 https://doi.org/10.1016/j.nurpra.2017.11.026

Vega JN, Dumas J, Newhouse PA (2018) Self‐reported chemotherapy‐related cognitive impairment compared with cognitive complaints following menopause. Psychooncology 27: 2198-2205 https://doi.org/10.1002/pon.4796

Janelsins MC, Kesler SR, Ahles TA, Morrow GR (2014) Prevalence, mechanisms, and management of cancer-related cognitive impairment. Int Rev Psychiatry 26: 102-113 https://doi.org/10.3109/09540261.2013.864260

Van Dyk K, Crespi CM, Petersen L, Ganz PA (2020) Identifying cancer-related cognitive impairment using the FACT-Cog Perceived Cognitive Impairment. JNCI Cancer Spectr 4: pkz099 https://doi.org/10.1093/jncics/pkz099

Wefel JS, Vardy J, Ahles T, Schagen SB (2011) International Cognition and Cancer Task Force recommendations to harmonise studies of cognitive function in patients with cancer. Lancet Oncol 12: 703-708 https://doi.org/10.1016/S1470-2045(10)70294-1

Randolph C, Tierney MC, Mohr E, Chase TN (1998) The Repeatable Battery for the Assessment of Neuropsychological Status (RBANS): preliminary clinical validity. Journal of Clinical and Experimental Neuropsychology 20: 310-319 https://doi.org/10.1076/jcen.20.3.310.823

Fogel BS (1991) The high sensitivity cognitive screen. International Psychogeriatrics 3: 273-288 https://doi.org/10.1017/s1041610291000728

Shilling V, Jenkins V, Trapala IS (2006) The (mis)classification of chemo-fog: methodological inconsistencies in the investigation of cognitive impairment after chemotherapy. Breast Cancer Res Treat 95: 125-129 https://doi.org/10.1007/s10549-005-9055-1

Folstein MF, Folstein SE, McHugh PR (1975) "Mini-mental state". A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research 12: 189-198 https://doi.org/10.1016/0022-3956(75)90026-6

Nasreddine ZS, Phillips NA, Bedirian V, Charbonneau S, Whitehead V, Collin I, Cummings JL, Chertkow H (2005) The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. Journal of the American Geriatrics Society 53: 695-699 https://doi.org/10.1111/j.1532-5415.2005.53221.x

Arcuri GG, Palladini L, Dumas G, Lemoignan J, Gagnon B (2015) Exploring the measurement properties of the Montreal Cognitive Assessment in a population of people with cancer. Supportive Care in Cancer 23: 2779-2787 https://doi.org/10.1007/s00520-015-2643-7

Koski L, Xie H, Finch L (2009) Measuring cognition in a geriatric outpatient clinic: Rasch analysis of the Montreal Cognitive Assessment. J Geriatr Psychiatry Neurol 22: 151-160 https://doi.org/10.1177/0891988709332944

Larner AJ (2012) Screening utility of the Montreal Cognitive Assessment (MoCA): in place of--or as well as--the MMSE? Int Psychogeriatr 24: 391-396 https://doi.org/10.1017/s1041610211001839

De Reuck J, Maurage C-A, Deramecourt V, Pasquier F, Cordonnier C, Leys D, Bordet R (2018) Aging and cerebrovascular lesions in pure and in mixed neurodegenerative and vascular dementia brains: a neuropathological study. Folia Neuropathol 56: 81-87 https://doi.org/10.5114/fn.2018.76610

Wefel JS, Kesler SR, Noll KR, Schagen SB (2015) Clinical characteristics, pathophysiology, and management of noncentral nervous system cancer‐related cognitive impairment in adults. CA Cancer J Clin 65: 123-138 https://doi.org/10.3322/caac.21258

American Cancer Society. Survivorship: During and After Treatment. https://www.cancer.org/treatment/survivorship-during-and-after-treatment.html

Block CK, Johnson-Greene D, Pliskin N, Boake C (2017) Discriminating cognitive screening and cognitive testing from neuropsychological assessment: implications for professional practice. Clin Neuropsychol 31: 487-500 https://doi.org/10.1080/13854046.2016.1267803

Roebuck-Spencer TM, Glen T, Puente AE, Denney RL, Ruff RM, Hostetter G, Bianchini KJ (2017) Cognitive screening tests versus comprehensive neuropsychological test batteries: a national academy of neuropsychology education paper. Arch Clin Neuropsychol 32: 491-498 https://doi.org/10.1093/arclin/acx021

Torrente NC, Pastor J-BN, de la Osa Chaparro N (2020) Systematic review of cognitive sequelae of non-central nervous system cancer and cancer therapy. J Cancer Surviv: 1-19 https://doi.org/10.1007/s11764-020-00870-2

Lynn R. Gauthier L-AR, Maud Bouffard, Frédérique Therrien, Sarah Béland, Marianne Bouvrette, Jennifer Gewandter, Lucia Gagliese, Robert H Dworkin, Julie Lemieux, Josée Savard, Philip L. Jackson, Michèle Aubin, Sophie Lauzier, Bruno Gagnon, Anne Dionne, Cindy Shobbrook, Pierre Gagnon (2021) Factors associated with chemotherapy-induced peripheral neuropathy-related reduced taxane dose or premature discontinuation in women with early-stage breast cancer. medRxiv 2021.09.13.21263539 https://doi.org/10.1101/2021.09.13.21263539

Ciesielska N, Sokołowski R, Mazur E, Podhorecka M, Polak-Szabela A, Kędziora-Kornatowska K (2016) Is the Montreal Cognitive Assessment (MoCA) test better suited than the Mini-Mental State Examination (MMSE) in mild cognitive impairment (MCI) detection among people aged over 60? Meta-analysis. Psychiatr Pol 50: 1039-1052 https://doi.org/10.12740/PP/45368

Rasch G (1993) Probabilistic models for some intelligence and attainment tests. MESA Press, Chicago

Yu CH (2020) Objective Measurement: How Rasch Modeling Can Simplify and Enhance Your Assessment. Springer, Singapore, pp 47-73 https://doi.org/10.1007/978-981-15-1800-3_4

Linacre JM (2018) Winsteps® Rasch measurement computer program (Version 4.1.5). Winsteps.com, Portland

Linacre J (2002) What do infit, outfit, mean-square, and standardization mean? Arch Rasch Meas 16: 871-882

Boone WJ, Staver JR, Yale MS (2014) Rasch analysis in the human sciences. Springer, Dordrecht, pp 273-297 https://doi.org/10.1007/978-94-007-6857-4

Linacre JM (1998) Detecting multidimensionality: which residual data-type works best? J Outcome Meas 2: 266-283

Reckase MD (1979) Unifactor latent trait models applied to multifactor tests: results and implications. Journal of Educational Statistics 4: 207-230 https://doi.org/10.2307/1164671

Linacre J (2014) Reliability and separation of measures. A user’s guide to Winsteps Ministep Rasch-model computer programs (version 3.81.0). https://www.winsteps.com/winman/reliability.htm

Brysbaert M, Buchmeier M, Conrad M, Jacobs AM, Bölte J, Böhl AJEp (2011) The word frequency effect https://doi.org/10.1027/1618-3169/a000123

Baddeley AD, Thomson N, Buchanan M (1975) Word length and the structure of short-term memory.J Journal of verbal learning verbal behavior 14: 575-589 https://doi.org/10.1016/S0022-5371(75)80045-4

Rofes A, Zakariás L, Ceder K, Lind M, Johansson MB, De Aguiar V, Bjekić J, Fyndanis V, Gavarró A, Simonsen HG (2018) Imageability ratings across languages.J Behavior research methods 50: 1187-1197 https://doi.org/10.3758/s13428-017-0936-0

Ferrand L, Bonin P, Méot A, Augustinova M, New B, Pallier C, Brysbaert M (2008) Age-of-acquisition and subjective frequency estimates for all generally known monosyllabic French words and their relation with other psycholinguistic variables.J Behavior research methods 40: 1049-1054 https://doi.org/10.3758/BRM.40.4.1049

Levelt WJ, Roelofs A, Meyer AS. Multiple perspectives on word production. Behav Brain Sci. 1999;22:61–9. https://doi.org/10.1017/S0140525X99451775.

Roberts PM, Le Dorze G. Semantic organization, strategy use, and productivity in bilingual semantic verbal fluency. Brain Lang. 1997;59:412–49. https://doi.org/10.1006/brln.1997.1753.

Binetruy M, Mauny F, Lavaux M, Meyer A, Sylvestre G, Puyraveau M, Berger E, Magnin E, Vandel P, Galmiche J. The RAPID-II neuropsychological test battery for subjects aged 20 to 49 years: norms and cognitive profile. Rev Neurol (Paris). 2018;174:44–55. https://doi.org/10.1016/j.neurol.2017.05.010.

Koski L, Xie H, Konsztowicz S. Improving precision in the quantification of cognition using the Montreal Cognitive Assessment and the Mini-Mental State Examination. Int Psychogeriatr. 2011;23:1107–15. https://doi.org/10.1017/s1041610210002450.

Sellami L, Meilleur-Durand S, Chouinard A-M, Bergeron D, Verret L, Poulin S, Jean L, Fortin M-P, Nadeau Y, Molin P. The Dépistage Cognitif de Québec: a new clinician’s tool for early recognition of atypical dementia. Dement Geriatr Cogn Disord. 2018;46:310–21. https://doi.org/10.1159/000494348.

Laforce Jr R, Sellami L, Bergeron D, Paradis A, Verret L, Fortin M-P, Houde M, Roy M, Poulin S, Macoir J. Validation of the Dépistage Cognitif de Québec: a new cognitive screening tool for atypical dementias. Arch Clin Neuropsychol. 2018;33:57–65. https://doi.org/10.1093/arclin/acx048.

Pergolizzi D, Crespo I. Recognition of cognitive complaints for patients with advanced cancer. Support Care Cancer. 2020;28:5055–7. https://doi.org/10.1007/s00520-020-05678-5.

O’Farrell E, MacKenzie J, Collins B. Clearing the air: a review of our current understanding of “chemo fog”. Curr Oncol Rep. 2013;15:260–9. https://doi.org/10.1007/s11912-013-0307-7.

Acknowledgments

We would like to thank the patients who generously offered their time, sometimes during difficult periods of their lives. We would like to extend our gratitude to the oncologists, healthcare professionals, and the entire team of personnel who work at the outpatient oncology clinics of the Centre de Recherche Clinique et Évaluative en Oncologie and the Centre des Maladies du Sein Deschênes-Fabia (Québec City) for the work that they do and for assisting us in identifying prospective participants. Furthermore, we would like to thank the Équipe de Recherche Michel Sarazin en Oncologie Psychosociale (ERMOS) and all students who contributed in any way to this research over the years. This study was supported in part by a student grant from the Centre de Recherche du CHU de Québec-Université Laval. A portion of the data from women with breast cancer was drawn from a study supported by funds awarded to LR Gauthier from the Canadian Pain Society/Pfizer Early Career Investigator Pain Research Grant, the Fonds de recherche du Québec – Santé, and the Fondation J.-Louis Lévesque.

Funding

This study was supported in part by a student grant from the Centre de Recherche du CHU de Québec-Université Laval awarded to Dr. Bruno Gagnon. A portion of the data from women with breast cancer was drawn from a study supported by funds awarded to Dr. Lynn R. Gauthier from the Canadian Pain Society/Pfizer Early Career Investigator Pain Research Grant, the Fonds de recherche du Québec – Santé, and the Fondation J.-Louis Lévesque.

Author information

Contributions

Conceptualization: Amel Baghdadli, Giovanni G. Arcuri, Clarence G. Green, Pierre Gagnon, Bruno Gagnon. Methodology: All authors. Formal analysis and investigation: Amel Baghdadli, Giovanni G. Arcuri, Clarence G. Green, Bruno Gagnon. Writing – original draft preparation: Amel Baghdadli, Bruno Gagnon. Writing – reviewing and editing: All authors. Funding acquisition: Lynn R. Gauthier, Bruno Gagnon. Resources: Bruno Gagnon. Supervision: Bruno Gagnon. Data collection: Amel Baghdadli, Lynn R. Gauthier, Bruno Gagnon. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was performed in line with the principles of the Declaration of Helsinki. Approval was granted by the Research Ethics Board of the CHU de Québec-Université Laval (2014-1209; A13-05-1209; and 2017-3312). Informed consent was obtained from all individual participants included in the study.

Consent for publication

Not applicable.

Competing interests

The authors have no relevant financial or non-financial interests to disclose.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Baghdadli, A., Arcuri, G.G., Green, C.G. et al. The Fast Cognitive Evaluation (FaCE): a screening tool to detect cognitive impairment in patients with cancer. BMC Cancer 23, 35 (2023). https://doi.org/10.1186/s12885-022-10470-1
