Abstract

Background

Interventions to improve fecal testing for colorectal cancer (CRC) exist, but are not yet routine practice. We conducted this systematic review to determine how implementation strategies and contextual factors influenced the uptake of interventions to increase Fecal Immunochemical Tests (FIT) and Fecal Occult Blood Testing (FOBT) for CRC in rural and low-income populations in the United States.

Methods

We searched Medline and the Cochrane Library from January 1998 through July 2016, and Scopus and clinicaltrials.gov through March 2015, for original articles of interventions to increase fecal testing for CRC. Two reviewers independently screened abstracts, reviewed full-text articles, extracted data and performed quality assessments. A qualitative synthesis described the relationship between changes in fecal testing rates for CRC, intervention components, implementation strategies, and contextual factors. A technical expert panel of primary care professionals, health system leaders, and academicians guided this work.

Results

Of 4218 citations initially identified, 27 unique studies reported in 29 publications met inclusion criteria. Studies were conducted in primary care (n = 20, 74.1%), community (n = 5, 18.5%), or both (n = 2, 7.4%) settings. All studies (n = 27, 100.0%) described multicomponent interventions. In clinic-based studies, components that occurred most frequently among the highly effective/effective study arms were provision of kits by direct mail, use of a pre-addressed stamped envelope, client reminders, and provider-ordered in-clinic distribution. Interventions were delivered by clinic staff/community members (n = 10, 37.0%), research staff (n = 6, 22.2%), or both (n = 10, 37.0%); delivery was unclear in one study (3.7%). Over half of the studies lacked information on training or monitoring intervention fidelity (n = 15, 55.6%).

Conclusions

Studies to improve FIT/FOBT in rural and low-income populations utilized multicomponent interventions. The provision of kits through the mail, use of pre-addressed stamped envelopes, client reminders and in-clinic distribution appeared most frequently in the highly effective/effective clinic-based study arms. Few studies described contextual factors or implementation strategies. More robust application of guidelines to support reporting on methods to select, adapt and implement interventions can help end users determine not just which interventions work to improve CRC screening, but which interventions would work best in their setting given specific patient populations, clinical settings, and community characteristics.

Trial registration

In accordance with PRISMA guidelines, our systematic review protocol was registered with PROSPERO, the international prospective register of systematic reviews, on April 16, 2015 (registration number CRD42015019557).

Background

Colorectal cancer (CRC) is the third most common cancer, and the second leading cause of cancer deaths, in the United States [1]. Unequivocal evidence demonstrates that guideline concordant screening decreases CRC incidence and mortality by 30–60% [2]. Numerous modalities are currently recommended for CRC screening in average risk adults aged 50–75 years, including colonoscopy every 10 years or a fecal occult blood test (FOBT) or fecal immunochemical test (FIT) within the past year [3,4,5]. However, current CRC screening rates are 63% across the United States [6], well below targets set by the National CRC Roundtable (80% by 2018) [7] and by Healthy People 2020 (70.5%) [8]. More striking are the consistent disparities in CRC screening in rural areas, among adults with low income, and in racial and ethnic minorities [6, 9,10,11,12]. Although improving, screening rates in these vulnerable populations may be 15%–30% lower than their non-rural, higher income, non-minority counterparts [13].

Multiple systematic reviews identify interventions that effectively increase CRC screening [14,15,16]. In 2016, the Community Preventive Services Task Force recommended the use of multicomponent interventions to increase screening for CRC. Multicomponent interventions combine two or more approaches to increase community demand (e.g., client reminders, small media), community access (e.g., reducing client costs), or provider delivery of screening services (e.g., provider reminders) or two or more approaches to reduce different structural barriers [17]. However, understanding how these interventions can be best implemented and in what populations (e.g., screening naïve versus experienced) and settings (e.g., rural versus urban settings, health system versus independent clinics) remains a neglected area of study [14, 18, 19].

Implementing interventions into routine care in clinic and community-based settings often involves the active engagement of multiple stakeholders and the adaptation of program elements to local contexts. In Oregon and elsewhere, primary care and health plan leaders are eager to identify, adapt, and implement interventions to improve CRC screening in order to achieve state performance benchmarks and to improve patient quality and experience of care. However, our work with primary care and health system partners found that stakeholders are interested not just in which interventions work to improve CRC screening, but which interventions would work best in their setting given specific patient populations, clinical settings, and community characteristics (see www.communityresearchalliance.org).

Therefore, we designed this systematic review to compare the effectiveness of interventions to improve fecal testing for CRC in clinic and community settings serving rural, low-income populations and their associated implementation strategies and contextual factors (see definitions and sources, Fig. 1) [17, 30, 32,33,34,35,36,37]. Determining how implementation strategies and contextual factors influence the uptake of interventions to increase fecal testing for CRC may help stakeholders identify the interventions best suited for use in their local settings.

We focused on fecal testing because this modality plays an important role in early detection of CRC, particularly in population groups at risk for experiencing disparities. Despite the increasing transition to FIT as the preferred modality for fecal testing due to superior adherence, usability, and accuracy [20, 21], we included studies of FOBT as well based on feedback from our technical expert panel (which included primary care professionals, health system leaders, and academicians) that early research on interventions to increase FOBT would likely inform current efforts to increase FIT [22,23,24]. Our key questions were:

1. What is the effectiveness of various interventions to increase CRC screening with FIT/FOBT compared with other interventions or usual care in rural or low-income populations?

2. How do implementation strategies (e.g., clinician champions, external practice facilitation) influence the effectiveness of interventions to increase FIT/FOBT screening for CRC in rural or low-income populations?

3. How do contextual factors (e.g., patient, clinic, community features) influence the effectiveness of interventions to increase FIT/FOBT screening for CRC in rural or low-income populations?

4. What are the adverse effects of interventions to increase FIT/FOBT screening for CRC in rural or low-income populations?

Methods

We followed systematic review methods described in the Cochrane Handbook for Systematic Reviews of Interventions [25] and AHRQ [26]. The review was guided by a technical expert panel of primary care professionals, health system leaders, and academicians. Our protocol was registered with PROSPERO, the international prospective register of systematic reviews (registration number CRD42015019557). The review is reported in accordance with the PRISMA publication standards [27,28,29].

Search strategy

We developed our search strategy with a research librarian with keywords for colorectal cancer, screening, stool, and FIT or FOBT (Additional file 1: Appendix A). We searched MEDLINE®, Cochrane Library, Scopus, and clinicaltrials.gov from January 1, 1998 through March 31, 2015, and updated our search of MEDLINE® and the Cochrane Library on July 19, 2016. Additionally, we reviewed reference lists of included studies and relevant systematic reviews.

Study selection

We screened studies using specific inclusion criteria detailed in Additional file 1: Appendix B. Included studies targeted patients aged 50–75 years, occurred in settings serving rural, Medicaid, or lower socioeconomic status populations in the United States, and reported outcomes for FOBT/FIT screening for CRC. We included randomized controlled trials, non-randomized controlled trials, cohort studies, and pre-post studies. Two research members (MMD, MF) screened titles and abstracts for eligibility and then obtained the full text of potentially eligible citations for further evaluation. Two investigators independently reviewed the full articles to determine final inclusion, with differences resolved through consensus or inclusion of a third investigator.

Data abstraction

Data from included studies were abstracted into a customized Microsoft Excel Spreadsheet by one investigator and reviewed for accuracy and completeness by a second investigator. Our overall analytic framework was informed by the Consolidated Framework for Implementation Research (CFIR) [30] and recent reviews on complex multicomponent interventions [18, 31]. Information was abstracted from each study on study setting, design, intervention attributes (e.g., intervention arms tested, type of FIT/FOBT used, theoretical framework), and study results (CRC screening rates, impact by intervention component, recruitment success). Additionally, for each intervention arm tested in an included study we categorized intervention components into distinct, individual categories starting with the Community Preventive Services Task Force recommendations for CRC screening (see Fig. 1) and refined based on study findings (e.g., 5 individual sub-categories identified under reducing structural barriers) [17].

We attempted to abstract data related to implementation strategies and contextual factors when feasible (see Fig. 1). Specifically, we tried to catalogue implementation strategies according to recent work by Proctor, Powell, and colleagues, which encourages the identification of discrete strategies and documentation of elements such as the actor, action, action targets, dose, and theoretical justification [30, 32,33,34]. We also attempted to classify contextual factors based on work by Stange and colleagues focused on the identification of factors across multiple levels (e.g., patient, practice, organization, and environment), the motivation for the intervention, and change in context over time [35,36,37]. However, a paucity of detail in the manuscripts led us to abstract any information regarding implementation strategies and contextual factors in a figure summary, rather than as discrete components as originally planned. Disagreements were resolved by discussion; one author (DIB) adjudicated decisions as needed.

Risk of bias/quality assessment

Two authors independently assessed the quality of each included study. We used a tool developed by the Cochrane Collaboration for randomized controlled trials and other controlled trial designs [25]. We used a tool developed by the National Institutes of Health for quality assessment in pre-post studies, which included questions about pre-specification of study details (e.g., aims, eligibility criteria, outcome measures) and methods for data collection and analysis (e.g., method for outcome assessment, analysis controlled for clustering) [38, 39]. We did not assess the quality of feasibility studies because no validated criteria are available. Rated studies were given an overall summary assessment of “low”, “high”, or “unclear” risk of bias. Disagreements were resolved through discussion.

Data synthesis

We constructed evidence tables showing the study characteristics and results for included studies by key question. We clustered studies based on the (1) intervention setting: primary care clinic, community-based settings, or both and (2) intervention components used in each study arm (e.g., client reminder or recall, small media, provider incentives). We assessed the effectiveness of the study arms based on the percent of CRC screening completion in the intervention arms compared with the control/usual care arm. We categorized the study arms as being highly effective if screening at the end of follow-up was higher in treatment than the usual care/control condition by more than 25%; effective if the difference was 10–25%; marginally effective if there was an increase of less than 10%; or having no effect. We developed this categorization based on the proportional distribution of the outcome and author assessments of clinical significance. We then assessed which intervention components occurred more frequently among the effective and highly effective study arms, compared with intervention arms that had little or no effect. Finally, we assessed studies to compare their intervention components, implementation strategies, contextual factors, methods, and findings. We compiled a summary of findings for each key question and drew conclusions based on qualitative synthesis of the findings. We did not combine the studies in a quantitative manner via meta-analysis because of the heterogeneity of interventions, methods, and settings.
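The categorization and component tally described above can be illustrated with a minimal sketch. It assumes the thresholds refer to absolute percentage-point differences, that differences of exactly 10 or 25 fall in the "effective" band, and that a zero or negative difference counts as "no effect"; the text does not state these details. The function names and the example arms are hypothetical and are not data from the review.

```python
from collections import Counter


def classify_arm(intervention_pct, control_pct):
    """Label one study arm by its screening-rate difference versus control.

    Assumptions (not stated in the text): the difference is measured in
    absolute percentage points, boundaries of exactly 10 and 25 count as
    "effective", and a zero or negative difference is "no effect".
    """
    diff = intervention_pct - control_pct
    if diff > 25:
        return "highly effective"
    if diff >= 10:
        return "effective"
    if diff > 0:
        return "marginally effective"
    return "no effect"


# Hypothetical arms: (intervention %, control %, intervention components).
example_arms = [
    (62.0, 30.0, ["direct mail", "client reminder", "stamped envelope"]),
    (45.0, 30.0, ["client reminder", "small media"]),
    (33.0, 30.0, ["small media"]),
    (28.0, 30.0, ["one-on-one education"]),
]


def component_counts(arms, labels=("highly effective", "effective")):
    """Count how often each component appears in arms with the given labels."""
    counts = Counter()
    for intervention_pct, control_pct, components in arms:
        if classify_arm(intervention_pct, control_pct) in labels:
            counts.update(components)
    return counts


if __name__ == "__main__":
    for intervention_pct, control_pct, _ in example_arms:
        print(intervention_pct, control_pct, classify_arm(intervention_pct, control_pct))
    print(component_counts(example_arms).most_common())
```

Because a tally of this kind counts co-occurrence within multicomponent arms, it shows which components appear in effective arms, not the independent effect of any single component.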

Rating the body of evidence

We attempted to grade the overall strength of the evidence as high, moderate, low, or insufficient using a method developed by AHRQ [26]. This method considers the consistency, coherence, and applicability of a body of evidence, as well as the internal validity of individual studies. However, because the studies used multicomponent interventions and did not assess the effectiveness of individual components, we were limited from applying AHRQ criteria to the strength of evidence for the individual intervention components.

Results

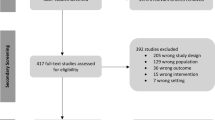

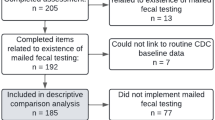

The combined literature searches initially yielded 4218 titles and abstracts, including 4203 from electronic database searches and 15 from reference lists of systematic reviews and other relevant articles. As summarized in Fig. 2, we assessed 278 full-text articles for eligibility, of which 27 studies reported in 29 publications met inclusion criteria. We identified 20 RCTs (published in 21 articles [40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60]), 2 non-randomized controlled trials [61, 62], 3 pre-post studies [63,64,65], 1 cohort study [66], and 1 feasibility study [67] that contained primary data relevant to the key questions. The descriptive characteristics and findings of the 27 included studies are found in Additional file 1: Appendix D, Table D1.

Studies occurred in primary care clinics (n = 20, 74.1%), communities (n = 5, 18.5%), or in both settings (n = 2, 7.4%). Over half of the studies used FOBT (n = 18, 66.7%); FIT was used in 8 studies (29.6%); one study used both FIT and FOBT (3.7%). As summarized in Additional file 1: Appendix D, Table D2, patient eligibility and the informed consent process varied across the studies. Of the 20 clinic-based studies, 5 (25%) did not report on the patient consent process, 8 (40%) received a waiver of informed consent, 4 (20%) utilized an informed consent process, 2 (10%) used opt-out, and 1 was conducted as a quality improvement study (5%). Six of the seven studies (85.7%) conducted in community or both clinic/community settings did not report on the patient consent process; the one remaining study required informed consent. Many of the included studies were rated as high or unclear risk of bias; this was often related to insufficient detail in the methods as well as lack of blinding. Quality assessment details appear in Additional file 1: Appendix C, Tables C1 and C2.

Interventions and effectiveness

Study-level

All studies (n = 27, 100.0%) described multicomponent interventions. As presented in Additional file 1: Appendix D, Table D3, the majority of studies used some form of strategy to increase community demand (n = 25, 92.6%) and/or to increase community access (n = 24, 88.9%) in the most complex intervention arm. Commonly used intervention components to increase community demand included small media (n = 17, 63.0%), client reminder or recall (n = 16, 59.3%), and one-on-one education (n = 14, 51.9%). Strategies to reduce structural barriers included increasing in-clinic distribution of FIT/FOBT by providers (n = 11, 40.7%) or clinical staff/research team members (n = 12, 44.4%), programs that mailed FIT/FOBT materials directly to the patient’s home (“direct mail programs”; n = 10, 37.0%), provision of pre-addressed stamped envelopes to facilitate return of the completed FIT/FOBT (n = 12, 44.4%), or FIT/FOBT distribution by participant request (n = 4, 14.8%). Each of the 10 studies that used a direct mail program also utilized client reminder and recall. Although 12 studies used pre-addressed stamped envelopes, this intervention component was only employed in 70% (7/10) of the studies using a direct mail program (see Additional file 1: Appendix D, Table D3). Many studies also used patient navigators (n = 12, 44.4%) or other miscellaneous intervention components (n = 17, 63.0%), such as providing culturally tailored materials or having participants complete surveys about CRC screening knowledge and behaviors.

Study arm-level

As summarized in Table 1, most studies tested multicomponent interventions in varied combinations across multiple study arms. Among the 20 clinic-based studies [40, 43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58, 60, 61, 64, 66], there were 27 active treatment arms. The most frequently used intervention components in clinic-based treatment arms included client reminder or recall (16/27, 59.3%), small media (16/27, 59.3%), provision of pre-addressed stamped envelopes (15/27, 55.6%), and direct mail programs (13/27, 48.1%). No clinic-based treatment arms used client incentives, mass media, or group education. Over half (60%, 12/20) of the control arms in the clinic-based studies distributed FIT/FOBT kits upon order by clinicians as part of usual care.
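As a quick arithmetic check, the arm-level percentages reported above can be reproduced from the stated counts. The sketch below uses only counts given in the text; the helper name `pct` and the dictionary are illustrative.

```python
def pct(count, total):
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


# Counts reported in the text for the 27 clinic-based active treatment arms.
clinic_arm_components = {
    "client reminder or recall": 16,
    "small media": 16,
    "pre-addressed stamped envelope": 15,
    "direct mail program": 13,
}

if __name__ == "__main__":
    for name, n in clinic_arm_components.items():
        print(f"{name}: {n}/27 = {pct(n, 27)}%")
    # Control arms distributing kits upon clinician order: 12 of 20 studies.
    print(f"usual-care kit distribution: 12/20 = {pct(12, 20)}%")
```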

Among the seven studies conducted either in community settings [41, 42, 59, 63, 65] or that targeted both clinic and community settings [62, 67], there were 11 active treatment arms. Small media (n = 9, 81.8%), group education (n = 7, 63.6%), and one-on-one education (n = 7, 63.6%) were the most frequently used intervention components in these settings. The strategy of leveraging social networks was only used in community-based studies.

All studies that included highly effective treatment arms were clinic-based. In these clinic-based studies, 9 treatment arms were rated as highly effective (greater than 25% higher screening rate compared with the control/usual care group), and 12 treatment arms were rated as effective (10% to 25% higher screening rate compared with control/usual care group). As detailed in Table 2, the components that occurred most frequently among the highly effective and effective study arms were provision of kits by direct mail, provision of a pre-addressed stamped envelope, client reminder or recall, and provider-ordered in-clinic distribution. Additionally, some studies used a layered approach, either adding intervention components across multiple study arms or adding successive interventions within a single intervention arm in an attempt to reach non-responders. Studies with highly effective treatment arms occurred in a variety of ethnic populations; however, most of these studies occurred in urban areas (6 of 7 studies).

Among 10 studies that used direct mailing of the FIT/FOBT as an intervention strategy [40, 43, 46, 47, 49, 50, 52, 54, 55, 58, 61], the mailed kit plus 1–3 phone reminders was more effective than usual care in increasing FIT/FOBT use; the frequency of FIT/FOBT completion in the intervention arms ranged from 29% to 82% compared with 1.1% to 37% in the control arms. Intensive outreach using navigators (e.g., home visits, phone calls) was used as a core component of some study interventions [49, 51,52,53], as a more complex study intervention arm [44, 54, 55, 61], or as a technique for reaching non-responders in layered interventions [40, 47]. FIT/FOBT completion among non-responders generally remained lower after outreach compared with early responders. The overall effectiveness of intensive outreach compared with minimal or automated phone/text outreach was not consistent across studies. Our data suggest that effectiveness may relate to the timing at which navigation was delivered (e.g., early on as a core component of the initial intervention or as a follow-up strategy used with non-responders to another intervention).

Contextual factors

Few studies provided details on contextual factors beyond the study location or the population of interest (see summary in Table 3 and details in Additional file 1: Appendix D, Table D4). Twenty (74.1%) of the 27 studies were conducted in urban settings, five (18.5%) were conducted in rural settings, and one (3.7%) did not designate a geographic setting. Of the five studies conducted in rural settings, three occurred in primary care clinics and two were based in the community. Of the 20 studies conducted in clinics, 14 (70.0%) occurred in clinics affiliated with a larger system (e.g., hospital, FQHC, health department), two occurred in clinics affiliated with practice-based research networks (10.0%), two in independent clinics (10.0%), and two could not be determined (10.0%). Irrespective of study setting, very limited details, if any, were provided about organizational priorities or environmental factors that may have influenced a site’s interest in participating in the study (see Additional file 1: Appendix D, Table D4). A few studies built on existing community-based programs [65] or quality improvement initiatives [40, 47], or emerged based on community or health system identified needs [62, 67].

Thirteen out of the 27 studies (48.1%) targeted patients of a specific race or ethnic group [40,41,42,43, 45,46,47, 59, 60, 62, 63, 65, 66] and six (22.2%) occurred in clinics that largely served racial/ethnic minority or multicultural patients [50, 52, 53, 56, 58, 64]. Five studies focused on Latino populations, four were conducted in Asian patient populations, three focused on African Americans, and one study was conducted among native Hawaiians [41]. Of the six studies conducted in community settings or both clinic/community settings, none reported on the prior screening history of eligible patients (0%). Of the 20 clinic-based studies, 13 (65.0%) targeted patients who were not up to date with CRC screening [44, 45, 47,48,49, 51,52,53,54,55,56, 58, 61, 66]. Six studies (30.0%) did not specify participants’ prior screening history and one (5.0%) targeted patients who had completed a prior FOBT. Six of the 20 clinic-based studies (30.0%) reported on CRC screening rates within a clinic or health system prior to the intervention [40, 44, 47, 52, 54, 55, 61], and two of these studies occurred in the same health system [40, 47]. Baseline screening rates in these settings varied widely, from 1–2% to 54.3%. In one study setting, the health system had initiated work to improve CRC screening prior to the current studies by reducing structural barriers, introducing audit and feedback, implementing provider reminders, and using CRC as a quality metric by which providers received incentive payments. These changes had increased CRC screening from 17% in 2007 to 43% in 2009 prior to any interventions facilitated by the study teams [40, 47].

None of the studies in community or both community/clinic settings reported on use of an electronic health record (EHR) system. In the 20 clinic-based studies, 10 had an EHR (3 specified, 7 unspecified), 7 were unclear, 2 used both paper and an EHR, and 1 used paper charts (see Table 3 and details in Additional file 1: Appendix D, Table D4).

Implementation strategies

As summarized in Table 3 and detailed in Additional file 1: Appendix D, Table D5, studies provided very limited information on the methods used to encourage the adoption of the interventions into practice; it was often hard to determine if any implementation support occurred. Among clinic-based interventions, 8 (40.0%) did not report how the intervention was developed, 4 (20.0%) used focus groups, interviews, or surveys to inform intervention development, 4 (20.0%) were based on a pilot or prior intervention, and 4 (20.0%) were informed by stakeholder input or other methods. In community and community/clinic-based studies, intervention development was informed either by focus groups, interviews, or surveys (n = 3, 42.9%) or by stakeholder input or other methods (n = 4, 57.1%).

Methods to support training on the intervention and monitoring fidelity of the intervention components over time were not reported for over half of the 27 studies (n = 15, 55.6%). Although training was mentioned in the remaining 12 studies, the details provided were often limited and varied widely: 6 (50.0%) did not report on the length of training, 4 (33.3%) had one session lasting 30 min to 2 h, 1 (8.3%) had a 2-day training, and 1 (8.3%) delivered multiple in-service presentations to the medical assistants or clinic staff. Potter et al. provided the most robust description of their training, which included a 1-h training for nursing staff; onsite review of study procedures with individual nurses; frequent site visits to ensure nursing staff were aware of the week’s study protocol; and daily availability of research assistants to answer questions regarding study implementation [56]. Only six studies (22.2%) provided any data on monitoring intervention fidelity, which could include meetings, observation visits, supervision, or random audits with compliance feedback [52, 53, 56, 57, 61, 66].

Interventions were delivered by clinic staff, community members, the research team, or a combination. Delivery was unclear in one clinic-based study; the others were almost equally distributed across the three categories: 7 (35.0%) were delivered by both clinic staff and the research team, 6 (30.0%) by clinic staff, and 6 (30.0%) by the research team. Community or community/clinic studies were delivered by community members (n = 4, 57.1%) or by both community members and the research team (n = 3, 42.9%). While few studies discussed strategies to support implementation over time, Roetzheim et al. [57] described routine feedback sessions with research members and clinical staff 6 and 12 months after the intervention had been implemented to discuss intervention progress, challenges occurring, and what could be done to improve implementation.

Seven studies provided highly heterogeneous information on intervention costs [40, 42, 44, 46, 50, 57, 68] (see Additional file 1: Appendix D, Table D5). Some studies provided data on the cost of individual intervention components, and others calculated the cost per patient or per patient screened. Three studies determined intervention costs per patient screened, which ranged from $43 to $1688 [40, 44, 68].
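To illustrate why cost figures computed with different denominators are hard to compare, the sketch below contrasts cost per patient reached with cost per patient screened. It assumes each metric is simply total program cost divided by the relevant patient count; the included studies' actual costing methods are not described here, and all numbers and function names are hypothetical.

```python
def cost_per_patient(total_cost, patients_reached):
    """Total program cost divided by all patients who received the intervention."""
    if patients_reached <= 0:
        raise ValueError("patients_reached must be positive")
    return total_cost / patients_reached


def cost_per_patient_screened(total_cost, patients_screened):
    """Total program cost divided by patients who completed screening."""
    if patients_screened <= 0:
        raise ValueError("patients_screened must be positive")
    return total_cost / patients_screened


if __name__ == "__main__":
    # Hypothetical program: $8,600 total, 1,000 patients reached, 200 screened.
    total_cost, reached, screened = 8600, 1000, 200
    print(f"per patient reached:  ${cost_per_patient(total_cost, reached):.2f}")
    print(f"per patient screened: ${cost_per_patient_screened(total_cost, screened):.2f}")
```

In this hypothetical case the same program costs $8.60 per patient reached but $43.00 per patient screened, so estimates are only comparable when the denominator is reported.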

Discussion

We found that studies to promote fecal testing in rural and low-income populations used multicomponent interventions that were tested in varied combinations across multiple study arms. Over half of the studies (16/27, 59.3%) used an informed consent process or did not provide details on the consent process, making it difficult to determine whether the participating patients were “different” from the general population served by the clinic or in the community. The most frequently used interventions in clinics included client reminder or recall, small media, provision of pre-addressed stamped envelopes for FIT/FOBT return, and direct mail programs. Many studies also employed outreach using navigators. In clinic-based studies, the components that occurred most frequently among the highly effective and effective study arms were provision of kits by direct mail, use of a pre-addressed stamped envelope, client reminders, and provider-ordered in-clinic distribution.

Few studies included an adequate description of the contextual factors and approaches used to implement the intervention needed for health system leaders, primary care providers, or researchers to replicate the study in their own settings or to determine if the intervention was a good “fit” for the local context. For example, only 25.9% (7/27) reported on baseline screening rates prior to study implementation and only three clinic-based studies (15.0%, 3/20) named the EHR system used. In relation to implementation factors, delivery of the interventions occurred by clinic/community staff, research team members, or both, yet over half of the studies did not report on the type or length of training used. Few studies indicated if new clinical staff were hired or additional resources were provided to support intervention implementation. Seven studies reported on intervention costs, yet methods were heterogeneous, estimates highly variable, and details inadequate for determining how much an intervention might cost in a target setting. Only six studies described monitoring intervention fidelity, which was often limited to an acknowledgement that some form of interaction between the study team and clinical staff occurred (e.g., meetings, supervision).

Our review describes the effectiveness of interventions to improve fecal testing in low-income and rural patients in clinic and community-based settings. As in the recent review on CRC interventions by the Community Preventive Services Task Force, we found that most interventions tested in these settings were multicomponent in nature (i.e., included two or more strategies) [17]. Strategies to increase community demand (e.g., client reminders) as well as to increase community access (e.g., direct mail, use of a pre-addressed stamped envelope, in-clinic distribution) were intervention components commonly found in highly effective/effective study arms tested in clinic settings. However, generating precise estimates of the effect of specific intervention components was difficult, in part because of the limited information on baseline screening levels for participating clinics, different patient targets (e.g., prior FIT/FOBT versus screening naïve), and variation in the consent process (e.g., waived versus informed). Moreover, none of the articles included in our review explicitly compared implementation strategies or the impact of contextual factors on intervention effectiveness.

One key challenge identified by our review was that the articles frequently lacked information on local contextual factors and implementation strategies that could inform end users as to which interventions would work best in their setting given specific patient populations, clinical settings, and community characteristics, and how to implement them. In their recent review to develop a taxonomy for CRC screening promotion, Ritvo and colleagues emphasized the need to describe the engagement sponsor, population targeted, alternative screening tests, delivery methods, and support for test performance (EPADS) in future reporting [69]. Numerous recent articles suggest strategies and approaches that can improve reporting of intervention studies, including TIDieR (Template for Intervention Description and Replication [70]) or PARIHS (Promoting Action on Research Implementation in Health Services [71]). Moreover, recent work by Powell and colleagues identified 73 implementation strategies grouped in 9 categories, thus providing a common terminology that can be used to design and evaluate effectiveness as well as implementation research studies [32, 72]. However, as indicated in our review, these data are either not being gathered by research investigators or not being reported in publications.

We speculate that the lack of reporting on the impact of implementation strategies and contextual factors may reflect funder preferences for novel discoveries, researcher bias toward intervention effectiveness measured by statistical significance rather than a nuanced understanding of how, when, and why a specific intervention works, and journal restrictions on manuscript length. Our experience also suggests that pragmatic studies in settings serving rural and vulnerable populations are often conducted at sites that are better equipped to implement interventions, with greater capacity for data reporting, workflow refinement, and leadership interest/engagement, than the average or “below average” settings to which these interventions would need to be scaled. This presents challenges and opportunities for cancer control researchers and implementation scientists. Namely, it presents opportunities to compare the effectiveness of implementation strategies needed to translate effective multicomponent interventions into diverse clinic, community, and population settings; to use sequential multiple assignment randomized trial (SMART) designs to test stepped-implementation support in response to baseline setting capacity and intervention responsiveness over time; and to implement “layered” interventions, which may first build clinic capacity and visit-based workflows prior to implementing population outreach programs [73].

We are seeing a shift to address these needs in funding opportunities from the Cancer Moonshot, in NCI and CDC’s continued support of collaborative research networks and implementation research, and in the emergence of local initiatives in response to advanced payment models that reward improved CRC screening and disparities reduction [74, 75]. Taking the next step forward in implementing evidence-based approaches to improve cancer screening also argues for investing in academic-community collaborations before research studies begin, through the infrastructure of practice-based research networks [76], researcher-in-residence models [77], or participatory research methods [78, 79]. Such partnered approaches can help actualize the call for rapid and relevant science [80]. Shifting the research paradigm to support collaborative, partnered implementation makes it possible to leverage “teachable moments” and “tipping points” when research evidence can be used to inform local, regional, or system-wide interventions that are under consideration or actively underway.

There are a few important limitations in the present study. First, we limited our review to studies targeting rural and low-income patients in the United States. These populations experience CRC screening disparities, and our stakeholder partners wanted to know what interventions worked best for settings serving these patients. It is also possible that interventions that are effective for these hard-to-reach populations need to be different or more intensive than those implemented in higher resourced populations. Second, we acknowledge that the requirement to have quantitative outcome data on changes in FIT/FOBT may have led to the exclusion of studies targeting any type of CRC screening or studies focused on qualitative methods. Future systematic reviews on this topic would benefit from interviews with the lead or senior author or from a realist review [81,82,83], which could generate a more contextualized understanding of how and why complex interventions achieve particular effects in particular contexts. Finally, we created cut-offs for evaluating the individual intervention components based on a proportional distribution of the outcomes. This approach identified four intervention components that commonly appeared in the highly effective/effective study arms in clinic-based studies (i.e., direct mail, client reminders, pre-addressed stamped envelopes, in-clinic distribution); the use of alternative cut-points may have identified additional strategies. Despite these limitations, our findings document the general deficiency in reporting on contextual factors, implementation strategies, and how these factors interact with the intervention. Our review provides a critical starting point for future reviews and informs research funding and publication in this area.

There is growing interest in the conduct and reporting of pragmatic research that can be used by stakeholders to inform practice in primary care and community settings. Such evidence can guide health systems administrators in selecting an appropriate intervention for their setting, as well as approaches for adapting a given intervention or implementation approach based on contextual factors. Interventions to improve FIT/FOBT for CRC screening should generally be considered complex interventions (e.g., multicomponent, context-sensitive, and highly dependent on the behaviors of participants and providers) [84]. As the field of pragmatic research continues to advance, our findings suggest that the use of standard guidelines for the reporting of implementation strategies and contextual factors within trials may be useful. Building on the policy of the National Institutes of Health (NIH) to require detailed information on rigor and reproducibility in grant submissions [85], journals, editors, and funders could mandate that scientists more freely share protocols, implementation toolkits, or intervention websites. Tools used in our review may inform such guidelines, including more routine application of Proctor, Powell and colleagues’ recommendations for tracking and reporting implementation strategies [32, 34, 72] or Stange and colleagues’ conceptualization of contextual factors [35,36,37]. Rather than reducing the nuances of implementation in diverse, complex adaptive systems to soundbites, we need to apply the principles of precision medicine to research design to determine what works for whom and why, rather than what works after variation has been removed. Funders and journal editors should apply these policies so that investigation of how intervention effects are modified by context and implementation strategies moves from being a “new methodological frontier” to a standard of practice for research [86].

Conclusion

Multicomponent interventions can effectively increase fecal testing for CRC across diverse rural and low-income communities. Few community-based studies were effective, but this may be because they targeted patients irrespective of CRC screening eligibility. For clinic-based studies, the intervention components frequently found in highly effective and effective treatment arms included mailing FIT/FOBT materials directly to patients’ homes (i.e., “direct mail programs”), provision of a pre-addressed stamped envelope to facilitate kit return, client reminder and recall, and provider-ordered in-clinic distribution. According to the Community Guide, these strategies are designed to improve community access by reducing structural barriers and to increase community demand. Few studies described contextual factors or implementation strategies in the detail needed for stakeholders to clearly determine how and which interventions would work in their local contexts. More robust application of guidelines to support reporting of implementation strategies and contextual factors in funding and publication is needed so that stakeholders can determine not just which interventions work to improve CRC screening, but which interventions would work best in their setting given specific patient populations, clinical settings, and community characteristics.

Abbreviations

- CFIR: Consolidated Framework for Implementation Research
- CRC: Colorectal cancer
- EHR: Electronic Health Record
- EPADS: Engagement sponsor, population targeted, alternative screening tests, delivery methods, and support for test performance
- FIT: Fecal Immunochemical Test
- FOBT: Fecal Occult Blood Test
- TIDieR: Template for Intervention Description and Replication

References

Cancer Facts & Figures 2017. [https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/annual-cancer-facts-and-figures/2017/cancer-facts-and-figures-2017.pdf] Accessed Nov 14 2017.

Whitlock EP, Lin JS, Liles E, Beil TL, Fu R. Screening for colorectal cancer: a targeted, updated systematic review for the U.S. preventive services task force. Ann Intern Med. 2008;149(9):638–58.

US Preventive Services Task Force. Final Update Summary: Colorectal Cancer: Screening. U.S. Preventive Services Task Force. June 2016. https://www.uspreventiveservicestaskforce.org/Page/Document/UpdateSummaryFinal/colorectal-cancer-screening2. Accessed Nov 14 2017.

Lieberman D, Ladabaum U, Cruz-Correa M, Ginsburg C, Inadomi JM, Kim LS, Giardiello FM, Wender RC. Screening for colorectal cancer and evolving issues for physicians and patients: a review. JAMA. 2016;316(20):2135–45.

American Cancer Society. Colorectal Cancer Facts & Figures 2014–2016. Vol. 2014. Atlanta: American Cancer Society; 2014.

American Cancer Society. Colorectal Cancer Facts & Figures 2017–2019. Atlanta: American Cancer Society. https://www.cancer.org/content/dam/cancer-org/research/cancer-facts-and-statistics/colorectal-cancer-factsand-figures/colorectal-cancer-facts-and-figures-2017-2019.pdf. Accessed 27 Nov 2017.

National Colorectal Cancer Roundtable. 80% by 2018. http://nccrt.org/what-we-do/80-percent-by-2018/. Accessed 27 Nov 2017.

Healthy People 2020. 2020 Topics & Objectives: Cancer. https://www.healthypeople.gov/2020/topicsobjectives/topic/cancer/objectives#4054. Accessed Nov 27 2017.

Cole AM, Jackson JE, Doescher M. Urban–rural disparities in colorectal cancer screening: cross-sectional analysis of 1998–2005 data from the Centers for Disease Control's Behavioral Risk Factor Surveillance Study. Cancer Med. 2012;1(3):350–6.

Tammana VS, Laiyemo AO. Colorectal cancer disparities: Issues, controversies and solutions. World J Gastroenterol. 2014;20(4):869–76.

Klabunde CN, Joseph DA, King JB, White A, Plescia M. Vital signs: colorectal cancer screening test use - United States, 2012. Morbidity and Mortality Weekly Report (MMWR). 2013;62(44):881–8. https://www.cdc.gov/mmwr/preview/mmwrhtml/mm6244a4.htm.

Davis MM, Renfro S, Pham R, Hassmiller Lich K, Shannon J, Coronado GD, Wheeler SB. Geographic and population-level disparities in colorectal cancer testing: a multilevel analysis of Medicaid and commercial claims data. Prev Med. 2017;101:44–52.

Steele CB, Rim SH, Joseph DA, King JB, Seeff LC, Centers for Disease Control and Prevention. Colorectal cancer incidence and screening - United States, 2008 and 2010. Morb Mortal Wkly Rep Surveill Summ. 2013;62(Suppl 3):53–60.

Holden DJ, Jonas DE, Porterfield DS, Reuland D, Harris R. Systematic review: enhancing the use and quality of colorectal cancer screening. Ann Intern Med. 2010;152(10):668–76.

Brouwers M, De Vito C, Bahirathan L, Carol A, Carroll J, Cotterchio M, Dobbins M, Lent B, Levitt C, Lewis N, et al. What implementation interventions increase cancer screening rates? A systematic review. Implement Sci. 2011;6(1):111.

Sabatino SA, Lawrence B, Elder R, Mercer SL, Wilson KM, DeVinney B, Melillo S, Carvalho M, Taplin S, Bastani R, et al. Effectiveness of interventions to increase screening for breast, cervical, and colorectal cancers: nine updated systematic reviews for the guide to community preventive services. Am J Prev Med. 2012;43(1):97–118.

The Community Guide. Cancer Screening: Multicomponent Interventions - Colorectal Cancer. August 2016. https://www.thecommunityguide.org/findings/cancer-screening-multicomponent-interventions-colorectal-cancer. Accessed 27 Nov 2017.

Rojas Smith L, Ashok M, Dy SM, Wines RC, Teixeira-Poit S. Contextual frameworks for research on the implementation of complex system interventions. Rockville, MD: Agency for Healthcare Research and Quality; 2014.

Yoong SL, Clinton-McHarg T, Wolfenden L. Systematic reviews examining implementation of research into practice and impact on population health are needed. J Clin Epidemiol. 2015;68(7):788–91.

Schreuders EH, Grobbee EJ, Spaander MC, Kuipers EJ. Advances in fecal tests for colorectal cancer screening. Curr Treat Options Gastroenterol. 2016;14(1):152–62.

Robertson DJ, Lee JK, Boland CR, Dominitz JA, Giardiello FM, Johnson DA, Kaltenbach T, Lieberman D, Levin TR, Rex DK. Recommendations on fecal immunochemical testing to screen for colorectal Neoplasia: a consensus statement by the US multi-society task force on colorectal cancer. Gastroenterology. 2017;152(5):1217–37. e1213

Allison JE, Fraser CG, Halloran SP, Young GP. Population screening for colorectal cancer means getting FIT: the past, present, and future of colorectal cancer screening using the fecal immunochemical test for hemoglobin (FIT). Gut Liver. 2014;8(2):117–30.

Tinmouth J, Lansdorp-Vogelaar I, Allison JE. Faecal immunochemical tests versus guaiac faecal occult blood tests: what clinicians and colorectal cancer screening programme organisers need to know. Gut. 2015;64(8):1327–37.

World Endoscopy Organization (WEO) Colorectal Cancer Screening Expert Working Group. FIT for Screening. 2017. http://www.worldendo.org/about-us/committees/colorectal-cancer-screening/ccs-testpage2-level4/fit-for-screening. Accessed 27 Nov 2017.

Higgins JPT, Green S. Cochrane handbook for systematic reviews of interventions version 5.1.0 [updated March 2011]. The Cochrane Collaboration, 2011. http://handbook-5-1.cochrane.org/. Accessed 27 Nov 2017.

Berkman ND, Lohr KN, Ansari M, McDonagh M, Balk E, Whitlock E, Reston J, Bass E, Butler M, Gartlehner G et al: Grading the Strength of a Body of Evidence When Assessing Health Care Interventions for the Effective Health Care Program of the Agency for Healthcare Research and Quality: An Update. Methods Guide for Comparative Effectiveness Reviews (Prepared by the RTI-UNC Evidence-based Practice Center under Contract No. 290–2007-10056-I). AHRQ Publication No. 13(14)-EHC130-EF. Rockville, MD: Agency for Healthcare Research and Quality. November 2013. https://www.ncbi.nlm.nih.gov/books/NBK174881/pdf/Bookshelf_NBK174881.pdf. Accessed 27 Nov 2017.

Liberati A, Altman DG, Tetzlaff J, Mulrow C, Gotzsche PC, Ioannidis JP, Clarke M, Devereaux PJ, Kleijnen J, Moher D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann Intern Med. 2009;151(4):W65–94.

Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, Shekelle P, Stewart LA. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Systematic Rev. 2015;4(1):1.

Shamseer L, Moher D, Clarke M, Ghersi D, Liberati A, Petticrew M, Shekelle P, Stewart LA. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015: elaboration and explanation. Br Med J. 2015;349

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Guise J-M, Chang C, Viswanathan M, Glick S, Treadwell J, Umscheid CA, Whitlock E, Fu R, Berliner E, Paynter R, et al. Agency for Healthcare Research and Quality evidence-based practice center methods for systematically reviewing complex multicomponent health care interventions. J Clin Epidemiol. 2014;67(11):1181–91.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, Kirchner JE. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci. 2015;10:21.

Bunger AC, Powell BJ, Robertson HA, MacDowell H, Birken SA, Shea C. Tracking implementation strategies: a description of a practical approach and early findings. Health Res Policy Syst. 2017;15(1):15.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

Tomoaia-Cotisel A, Scammon DL, Waitzman NJ, Cronholm PF, Halladay JR, Driscoll DL, Solberg LI, Hsu C, Tai-Seale M, Hiratsuka V, et al. Context matters: the experience of 14 research teams in systematically reporting contextual factors important for practice change. Ann Fam Med. 2013;11(Suppl 1):S115–23.

Stange K: An overall approach to assessing important contextual factors. Edited by Davis M; 2017.

Stange KC, Glasgow RE. Considering and reporting important contextual factors in research on the patient-centered medical home. Agency for Healthcare Research and Quality: Rockville; 2013.

National Heart Lung and Blood Institute. Quality Assessment Tool for Before-After (Pre-Post) Studies With No Control Group. US Department of Health & Human Services, National Institutes of Health. March 2014. https://www.nhlbi.nih.gov/health-pro/guidelines/in-develop/cardiovascular-risk-reduction/tools/before-after. Accessed 27 Nov 2017.

Wells GA, Shea B, O'Connell D, Peterson J, Welch V, Losos M, Tugwell P. The Newcastle-Ottawa scale (NOS) for assessing the quality of nonrandomised studies in meta-analyses. 2014. http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp. Accessed 27 Nov 2017.

Baker DW, Brown T, Buchanan DR, Weil J, Balsley K, Ranalli L, Lee JY, Cameron KA, Ferreira MR, Stephens Q, et al. Comparative effectiveness of a multifaceted intervention to improve adherence to annual colorectal cancer screening in community health centers: a randomized clinical trial. JAMA Intern Med. 2014;174(8):1235–41.

Braun KL, Fong M, Kaanoi ME, Kamaka ML, Gotay CC. Testing a culturally appropriate, theory-based intervention to improve colorectal cancer screening among native Hawaiians. Prev Med. 2005;40(6):619–27.

Campbell MK, James A, Hudson MA, Carr C, Jackson E, Oakes V, Demissie S, Farrell D, Tessaro I. Improving multiple behaviors for colorectal cancer prevention among African American church members. Health Psychol. 2004;23(5):492–502.

Coronado GD, Golovaty I, Longton G, Levy L, Jimenez R. Effectiveness of a clinic-based colorectal cancer screening promotion program for underserved Hispanics. Cancer. 2011;117(8):1745–54.

Davis T, Arnold C, Rademaker A, Bennett C, Bailey S, Platt D, Reynolds C, Liu D, Carias E, Bass P 3rd, et al. Improving colon cancer screening in community clinics. Cancer. 2013;119(21):3879–86.

Friedman LC, Everett TE, Peterson L, Ogbonnaya KI, Mendizabal V. Compliance with fecal occult blood test screening among low-income medical outpatients: a randomized controlled trial using a videotaped intervention. J Cancer Educ. 2001;16(2):85–8.

Goldberg D, Schiff GD, McNutt R, Furumoto-Dawson A, Hammerman M, Hoffman A. Mailings timed to patients’ appointments: a controlled trial of fecal occult blood test cards. Am J Prev Med. 2004;26(5):431–5.

Goldman SN, Liss DT, Brown T, Lee JY, Buchanan DR, Balsley K, Cesan A, Weil J, Garrity BH, Baker DW. Comparative effectiveness of multifaceted outreach to initiate colorectal cancer screening in community health centers: a randomized controlled trial. J Gen Intern Med. 2015;30(8):1178–84.

Greiner KA, Daley CM, Epp A, James A, Yeh HW, Geana M, Born W, Engelman KK, Shellhorn J, Hester CM, et al. Implementation intentions and colorectal screening: a randomized trial in safety-net clinics. Am J Prev Med. 2014;47(6):703–14.

Gupta S, Halm EA, Rockey DC, Hammons M, Koch M, Carter E, Valdez L, Tong L, Ahn C, Kashner M, et al. Comparative effectiveness of fecal immunochemical test outreach, colonoscopy outreach, and usual care for boosting colorectal cancer screening among the underserved: a randomized clinical trial. JAMA Intern Med. 2013;173(18):1725–32.

Hendren S, Winters P, Humiston S, Idris A, Li SX, Ford P, Specht R, Marcus S, Mendoza M, Fiscella K. Randomized, controlled trial of a multimodal intervention to improve cancer screening rates in a safety-net primary care practice. J Gen Intern Med. 2014;29(1):41–9.

Jandorf L, Gutierrez Y, Lopez J, Christie J, Itzkowitz SH. Use of a patient navigator to increase colorectal cancer screening in an urban neighborhood health clinic. J Urban Health. 2005;82(2):216–24.

Jean-Jacques M, Kaleba EO, Gatta JL, Gracia G, Ryan ER, Choucair BN. Program to improve colorectal cancer screening in a low-income, racially diverse population: a randomized controlled trial. Ann Fam Med. 2012;10(5):412–7.

Lasser KE, Murillo J, Lisboa S, Casimir AN, Valley-Shah L, Emmons KM, Fletcher RH, Ayanian JZ. Colorectal cancer screening among ethnically diverse, low-income patients: a randomized controlled trial. Arch Intern Med. 2011;171(10):906–12.

Levy BT, Daly JM, Xu Y, Ely JW. Mailed fecal immunochemical tests plus educational materials to improve colon cancer screening rates in Iowa research network (IRENE) practices. J Am Board Fam Med. 2012;25(1):73–82.

Levy BT, Xu Y, Daly JM, Ely JW. A randomized controlled trial to improve colon cancer screening in rural family medicine: an Iowa research network (IRENE) study. J Am Board Fam Med. 2013;26(5):486–97.

Potter MB, Walsh JM, Yu TM, Gildengorin G, Green LW, McPhee SJ. The effectiveness of the FLU-FOBT program in primary care a randomized trial. Am J Prev Med. 2011;41(1):9–16.

Roetzheim RG, Christman LK, Jacobsen PB, Cantor AB, Schroeder J, Abdulla R, Hunter S, Chirikos TN, Krischer JP. A randomized controlled trial to increase cancer screening among attendees of community health centers. Ann Fam Med. 2004;2(4):294–300.

Singal AG, Gupta S, Tiro JA, Skinner CS, McCallister K, Sanders JM, Bishop WP, Agrawal D, Mayorga CA, Ahn C, et al. Outreach invitations for FIT and colonoscopy improve colorectal cancer screening rates: a randomized controlled trial in a safety-net health system. Cancer. 2016;122(3):456.

Thompson B, Coronado G, Chen L, Islas I. Celebremos la salud! A community randomized trial of cancer prevention (United States). Cancer Causes Control. 2006;17(5):733–46.

Tu SP, Taylor V, Yasui Y, Chun A, Yip MP, Acorda E, Li L, Bastani R. Promoting culturally appropriate colorectal cancer screening through a health educator: a randomized controlled trial. Cancer. 2006;107(5):959–66.

Coronado GD, Vollmer WM, Petrik A, Aguirre J, Kapka T, Devoe J, Puro J, Miers T, Lembach J, Turner A, et al. Strategies and opportunities to STOP colon cancer in priority populations: pragmatic pilot study design and outcomes. BMC Cancer. 2014;14:55.

Sarfaty M, Feng S. Uptake of colorectal cancer screening in an uninsured population. Prev Med. 2005;41(3–4):703–6.

Larkey L. Las mujeres saludables: reaching Latinas for breast, cervical and colorectal cancer prevention and screening. J Community Health. 2006;31(1):69–77.

Tu SP, Chun A, Yasui Y, Kuniyuki A, Yip MP, Taylor V, Bastani R. Adaptation of an evidence-based intervention to promote colorectal cancer screening: a quasi-experimental study. Implement Sci. 2014;9:85.

Wu TY, Kao JY, Hsieh HF, Tang YY, Chen J, Lee J, Oakley D. Effective colorectal cancer education for Asian Americans: a Michigan program. J Cancer Educ. 2010;25(2):146–52.

Potter MB, Yu TM, Gildengorin G, Yu AY, Chan K, McPhee SJ, Green LW, Walsh JM. Adaptation of the FLU-FOBT program for a primary care clinic serving a low-income Chinese American community: new evidence of effectiveness. J Health Care Poor Underserved. 2011;22(1):284–95.

Redwood D, Holman L, Zandman-Zeman S, Hunt T, Besh L, Katinszky W. Collaboration to increase colorectal cancer screening among low-income uninsured patients. Prev Chronic Dis. 2011;8(3):A69.

Sarfaty M, Feng S. Choice of screening modality in a colorectal cancer education and screening program for the uninsured. J Cancer Educ. 2006;21(1):43–9.

Ritvo P, Myers RE, Serenity M, Gupta S, Inadomi JM, Green BB, Jerant A, Tinmouth J, Paszat L, Pirbaglou M, et al. Taxonomy for colorectal cancer screening promotion: lessons from recent randomized controlled trials. Prev Med. 2017;101:229–34.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

Harvey G, Kitson A. PARIHS revisited: from heuristic to integrated framework for the successful implementation of knowledge into practice. Implement Sci. 2016;11:33.

Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, Proctor EK, Kirchner JE. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the expert recommendations for implementing change (ERIC) study. Implement Sci. 2015;10:109.

Almirall D, Nahum-Shani I, Sherwood NE, Murphy SA. Introduction to SMART designs for the development of adaptive interventions: with application to weight loss research. Transl Behav Med. 2014;4(3):260–74.

National Cancer Institute. Upcoming Cancer Moonshot℠ Funding Opportunities. Accelerating colorectal cancer screening and follow-up through implementation science (ACCSIS). https://www.cancer.gov/research/key-initiatives/moonshot-cancerinitiative/funding/upcoming#accsis. Accessed 27 Nov 2017.

Centers for Disease Control and Prevention. Colorectal Cancer Control Program (CRCCP). August 2017. https://www.cdc.gov/cancer/crccp/index.htm. Accessed 27 Nov 2017.

Davis MM, Keller S, DeVoe JE, Cohen DJ. Characteristics and lessons learned from practice-based research networks (PBRNs) in the United States. J Healthc Leadersh. 2012;4:107–16.

Marshall M, Pagel C, French C, Utley M, Allwood D, Fulop N, Pope C, Banks V, Goldmann A. Moving improvement research closer to practice: the researcher-in-residence model. BMJ Qual Saf. 2014;23(10):801–5.

Wheeler SB, Davis MM. “Taking the bull by the horns”: four principles to align public health, primary care, and community efforts to improve rural cancer control. J Rural Health. 2017;33(4):345–9.

Pham R, Cross S, Fernandez B, Corson K, Dillon K, Yackley C, Davis MM. “Finding the right FIT”: rural patient preferences for fecal immunochemical test (FIT) characteristics. J Am Board Fam Med. 2017;30(5):632–44.

Glasgow RE, Chambers D. Developing robust, sustainable, implementation systems using rigorous, rapid and relevant science. Clin Transl Sci. 2012;5(1):48–55.

Pawson R, Greenhalgh T, Harvey G, Walshe K. Realist review--a new method of systematic review designed for complex policy interventions. J Health Serv Res Policy. 2005;10(Suppl 1):21–34.

Rycroft-Malone J, McCormack B, Hutchinson AM, DeCorby K, Bucknall TK, Kent B, Schultz A, Snelgrove-Clarke E, Stetler CB, Titler M, et al. Realist synthesis: illustrating the method for implementation research. Implement Sci. 2012;7:33.

Pawson R. Evidence-based policy: a realist perspective. Thousand Oaks, CA: Sage; 2006.

Anderson R. New MRC guidance on evaluating complex interventions. BMJ. 2008;337:a1937.

National Institutes of Health. Research & Training: Rigor and Reproducibility. https://www.nih.gov/researchtraining/rigor-reproducibility. Accessed 27 Nov 2017.

Hawe P, Shiell A, Riley T, Gold L. Methods for exploring implementation variation and local context within a cluster randomised community intervention trial. J Epidemiol Community Health. 2004;58(9):788–93.

Acknowledgements

Andrew Hamilton, a research librarian, assisted with the search strategy. The authors appreciate the input of our Technical Expert Panel Members (who include national experts and regional primary care and health system stakeholders): Jonathan Tobin, PhD – President/CEO of Clinical Directors Network, Inc. (CDN) and Co-Director, Community Engaged Research Core, The Rockefeller University Center for Clinical and Translational Science; David Lieberman, MD – OHSU Division of Gastroenterology; Jack Westfall, MD, MPH – Chief Medical Officer, Colorado Health OP; Bryan Weiner, PhD – Professor of Global Health & Health Services, University of Washington; Kristen Dillon, MD – Director, Columbia Gorge Coordinated Care Organization & Associate Medical Director Medicaid, PacificSource Community Solutions; Daisuke Yamashita, MD – Medical Director, OHSU Family Medicine at South Waterfront; Ron Stock, MD – Clinical Innovation Advisor, Oregon Health Authority (OHA) Transformation Center; Sharon Straus, MD, FRCPC, MSc – Director, Knowledge Translation Program at St. Michaels. Rose Gunn, MA assisted with manuscript formatting.

Funding

This research was supported through a career development award to Dr. Davis (AHRQ 1 K12 HS022981 01). Input from primary care and health system partners through our technical expert panel was made possible through a series of Pipeline to Proposal Awards from the Patient Centered Outcomes Research Institute (ID# 7735932 Tier II and Tier III). The funders played no role in the design of the study; in the collection, analysis, or interpretation of data; or in writing the manuscript. The findings and conclusions in this study are those of the authors and do not necessarily represent the official position of the funders.

Availability of data and materials

The datasets generated and analyzed during the current study are included in this published article and its supplementary information files.

Author information

Contributions

MMD is the guarantor. All authors contributed to the design of the study including the development of selection criteria and risk of bias assessment strategy. MMD, MF, DIB, and JMG developed the search strategy, with guidance from a research librarian. MMD and MF selected studies and extracted data. MMD, MF, and DIB drafted the manuscript. All authors assisted in interpreting data from studies, synthesizing findings, reading and editing manuscript drafts, and approved the final manuscript.

Ethics declarations

Authors’ information

No additional information provided.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1:

Appendix A. Search Strategies. Appendix B. Study Selection: Table of Inclusion/Exclusion Codes, Definitions and Key Questions. Appendix C. Quality Assessment: Quality Assessment for Randomized Controlled Trials (RCT) or Control Trial (CT) Designs, Quality Assessment for Studies using a Pre-Post Design. Appendix D. Data Supplement: D1. Characteristics and findings of included studies, stratified by intervention, D2. Participant recruitment and recruitment success in intervention studies to improve FIT/FOBT screening for CRC, stratified by intervention setting, D3. Intervention Components – Tracked for the most complex intervention arm tested in a study, D4. Contextual Factors in Interventions to Improve FIT/FOBT screening for CRC, stratified by setting, and D5. Implementation strategies used in interventions to improve FIT/FOBT CRC screening, stratified by setting. (DOCX 442 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Davis, M.M., Freeman, M., Shannon, J. et al. A systematic review of clinic and community intervention to increase fecal testing for colorectal cancer in rural and low-income populations in the United States – How, what and when?. BMC Cancer 18, 40 (2018). https://doi.org/10.1186/s12885-017-3813-4

DOI: https://doi.org/10.1186/s12885-017-3813-4