Abstract

Background

Soft tissue sarcoma is a rare and highly heterogeneous tumor in clinical practice. Pathological grading of the soft tissue sarcoma is a key factor in patient prognosis and treatment planning while the clinical data of soft tissue sarcoma are imbalanced. In this paper, we propose an effective solution to find the optimal imbalance machine learning model for predicting the classification of soft tissue sarcoma data.

Methods

In this paper, a large number of features are first obtained based on \(T_1\)WI images using the radiomics methods.Then, we explore the methods of feature selection, sampling and classification, get 17 imbalance machine learning models based on the above features and performed extensive experiments to classify imbalanced soft tissue sarcoma data. Meanwhile, we used another dataset splitting method as well, which could improve the classification performance and verify the validity of the models.

Results

The experimental results show that the combination of extremely randomized trees (ERT) classification algorithm using SMOTETomek and the recursive feature elimination technique (RFE) performs best compared to other methods. The accuracy of RFE+STT+ERT is 81.57% , which is close to the accuracy of biopsy, and the accuracy is 95.69% when using another dataset splitting method.

Conclusion

Preoperative predicting pathological grade of soft tissue sarcoma in an accurate and noninvasive manner is essential. Our proposed machine learning method (RFE+STT+ERT) can make a positive contribution to solving the imbalanced data classification problem, which can favorably support the development of personalized treatment plans for soft tissue sarcoma patients.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Background

Soft tissue sarcoma is a clinically rare and highly heterogeneous tumor, accounting for about 1% of all malignant tumors [1, 2]. Based on features such as histologic type and subtype, tumor necrosis and mitotic activity, French Federation Nationale des Centres de Lutte Contre le Cancer (FNCLCC) divides soft tissue sarcoma into grades I \(\sim\) III [3]. In adults, histologic grading is the most important prognostic factor and the best indicator of the risk of metastasis in soft tissue sarcoma [3,4,5]. It is critical to patient prognosis and the development of treatment plans. Currently, biopsy is a primary method for obtaining pathologic grade preoperatively. But errors in biopsy may lead to inaccurate results due to tumor heterogeneity [3], especially in fatty tumors with large lesions [6]. Therefore, it is necessary to explore an accurate and non-invasive method for preoperative grading of soft tissue sarcoma.

In recent years, radiomics has been widely used for neoplastic lesions in various systems. Because of its objective and descriptive characteristics, it can analyse, refine and quantify medical images, so that the most valuable imaging features can be selected to analyze clinical information, differential diagnosis of tumors, and provide accurate guidance for treatment and prognosis [7, 8]. Previous studies have shown that MRI-based histological features are associated with pathological grade of soft tissue sarcoma [9].

For classification tasks on graded predictions of soft tissue sarcoma, the dataset is often imbalanced. That is, there is a class in the dataset that contains much more data than other classes. With the development of science and technology, in the current era of big data, more and more imbalanced data sets appear, so there is an urgent need for well-performing classifiers to accomplish such grading tasks. Ideally, the classifier can provide a better classification accuracy for both positive and negative examples. However, existing studies have shown that class imbalance will reduce the performance of some standard classifiers, such as decision trees, support vector machine, artificial neural networks, etc [10]. In fact, traditional classifiers usually have high classification accuracy for majority classes, while for minority classes, classification accuracy is very low. Taking the classification problem of soft tissue sarcoma as an illustration, if there are 1000 patients, 10 are positive examples (low grade), and 990 are negative (high grade). In this case, if the classifier maps all inputs as negative examples, the accuracy rate is as high as 99%. Obviously, this classifier is wrong and unusable, and the evaluation indicators are also not practicable. In recent years, researchers tend to pay more attention to the classification performance of the classifier for minority classes, such as medical diagnosis [11,12,13,14], bankruptcy prediction [15], natural disaster prediction [16], credit card fraud detection [17], anomaly detection [18], and so on. Using machine learning methods can overcome the problem of data imbalance, and achieve better results for medical data classification problems.

For the classification problem of imbalanced dataset, the solutions are divided into three categories [19,20,21]: (i) data level approaches, sampling the data to achieve the balance of the number of samples, undersampling and oversampling are generally the most common methods [19, 22]; (ii) algorithmic approaches, optimizing the algorithm to modify the conventional classification method to the situation of data imbalance, so that the improved conventional algorithm can have better results on imbalanced data [21]. (iii) cost-sensitive learning approaches, combining data level and algorithms to give higher costs on the minority classes in the sample that are classified incorrectly to achieve the final good results [19]. In this paper, we follow the first category approach to achieve excellent classify method on imbalance data, that is conventional methods are applied to classify the preprocessed data by oversampling and undersampling techniques.

When it comes to conventional classification method, researchers mainly use decision tree/random forest analyses and neural networks [23]. Some other popular machine learning methods are adapted to solve this kind problem, included support vector machine classifiers [24], latent growth mixture modeling [25], boosting methods [26] and so on.

In addition to the above methods, some specialized classification methods are designed for handling imbalanced data to achieve better result. Khalilia et al. combine repeated random subsampling with RF and predict disease risk from highly imbalanced data [27]. Majid et al. use K-nearest neighbors and support vector machines to predict human breast and colon cancer from imbalanced data [28] . Barot et al. propose an improved decision tree algorithm to diagnose Covid-19 [29] . Xie et al. propose a new data resampling technique called Gaussian Distribution based Oversampling (GDO), which combines SVM to classify imbalanced data [30]. Rustum et al. propose a hybrid resampling approach and combine the extra tree classifier to predict Pulsars [31]. Rupapara et al. propose an ensemble method called regression vector voting classifier (RVVC) for identifying the toxic comments on social media platforms [32]. Fatima et al. present three feature selection algorithms (RONS/ROS/ROA) to minimize the overlapping and perform fraud detection [33]. Rustum et al. adopt a deep neural network approach and propose a model named BIR (bleedy image recognizer) ,which combines the MobileNet with a custom-built convolutional neural network (CNN) model to classify the bleedy images of wireless capsule endoscopy [34]. Reshi et al. propose a deep CNN architecture for diagnosing COVID-19 based on the chest X-ray image classification [35]. Table 1 shows the specific methods. These are all effective ways to deal with imbalanced data, and achieve good results.

Notably, classification problems for medical imbalanced data usually do not work well with an individual machine learning method. In general, it is a common process to learning the data: performing feature selection, sampling it, and then classifying it with the specified classification method. This series of processes needs to be considered as a whole. Existing methods only consider classifiers or only improve classification methods, which are not effective in solving the soft tissue sarcoma grading problem. Such, we take researches accordingly in order to advance the implementation of imbalance learning.

In this paper, a feature dataset based on the MR\(T_1\)WI is first obtained by using radiomics methods, then different sampling and classification methods are adopted, and such that different machine learning models are composed for training the recursive feature elimination. We try to explore these machine learning methods and find an optimal one for predicting the pathological grading of soft tissue sarcoma. The main contributions of this paper are as follows:

-

(1)

This study explore multiple machine learning models with several well-known classification algorithms, such as extremely randomized trees (ERT), balanced random forest (BRF), random forest (RF), and support vector machine (SVM).

-

(2)

252 MRI image data of soft tissue sarcoma are collected and processed in this study. A feature dataset is calculated after analyzing the images by recursive feature elimination (RFE). Resampling the imbalanced dataset with multiple sampling methods like random oversampling examples (ROSE), synthetic minority oversampling technique (SMOTE), SMOTETomek (STT) and adaptive synthetic sampling (ADASYN), are discussed here.

-

(3)

Different methods of feature selection, sampling and classification are combined, and extensive experiments are performed to classify imbalanced soft tissue sarcoma data. We find that the best one is RFE+STT+ERT. A dataset splitting method called SRS is used, which could improve the classification performance and verify the validity of the methods.

Method

In this section, we first show the dataset used in the experiments then introduce the methods and reasons that we choose in feature selection method, sampling technology and classification algorithm in details. After that, we specifically explore effective classification methods for imbalanced soft tissue sarcoma data and present the training process of 17 different machine learning models. Furthermore, A dataset splitting method called SRS is used to verify the validity of the methods. The final, we show the evaluation metrics adopted for the experiments.

The dataset

This paper uses preoperative MRI data of 252 patients with soft tissue sarcoma from January 2007 to the March 2018, 122 cases from the Affiliated Hospital of Qingdao University, 130 cases from Shandong Provincial Hospital Affiliated to Shandong First Medical University and The Third Hospital of Hebei Medical University. We name this dataset MRI-QSH. The dataset has following inclusion and exclusion criteria:

Inclusion criteria:

-

(1)

Histopathologically confirmed soft tissue sarcoma with complete clinical data after surgery;

-

(2)

Soft tissue sarcoma is graded according to the FNCLCC system (grade I \(\sim\) III);

-

(3)

MRI scanning is performed within 2 weeks before treatment, and the cross-sectional \(T_1\)WI images were included.

Exclusion criteria:

-

(1)

Poor MRI image quality, signal-to-noise ratio \(\le\)1.0;

-

(2)

There are some other malignant tumors during treatment.

According to the FNCLCC classification of soft tissue sarcoma data, grade I is low-grade, grade II and grade III are high-grade. The MRI-QSH dataset includes 62 patients with low-grade soft tissue sarcoma and 190 patients with high-grade soft tissue sarcoma. Table 2 shows the details of the number of high-grade and low-grade samples. Some selected soft tissue sarcoma images are shown in Fig. 1.

By using MR scanning method, image segmentation, image standardization, and feature calculation, the features of MR\(T_1\)WI of MRI-QSH before machine learning methods were extracted by the company of Deepwise, such that we get the 2758 dimensional feature space dataset with 252 samples, and name it DW-QSH.

Feature selection method

The data DW-QSH has a large number of feature parameters, 2758 in total, many of which do not contribute to the classification or have low contribution. Therefore, using feature selection will be beneficial to the accuracy of classification and can speed up the classification speed. For the feature selection method, this research chooses to use the recursive feature elimination (RFE) algorithm [36, 37]. RFE belongs to one of the packing method feature selection algorithms, which is a common method. RFE is used with a learner, which is usually a classifier. When we train the classifier, it sorts all the features and removes the ones that contribute the least to the classification. This process is performed recursively and is an example of reverse feature elimination. If removing some unnecessary features using RFE, it is more beneficial for the training of the model.

Data preprocessing

In the dataset DW-QSH, there are 62 samples belonging low-grade and 190 samples belonging high-grade. It is an imbalance data learning problem that the number of high-grade samples is much larger than that of low-grade samples. In order to overcome the problem of data imbalance, we adapt some sampling methods for data preprocessing. There are three common sampling methods: (i) undersampling; (ii) oversampling; (iii) the combination of oversampling and undersampling. Due to the small amount of total data in this study, taking undersampling will cause the sample size to be further reduced and the sampling results will not be representative, which will also lead to less accurate final results. Therefore, we select some oversampling methods (random oversampling examples, synthetic minority oversampling technique and adaptive synthetic sampling) [38,39,40], and a combination method of oversampling and undersampling (SMOTETomek) [41].

Oversampling technique

Oversampling is to generate a minority of samples for imbalanced data to achieve data balance. Two oversampling methods are commonly used, that is the random oversampling examples method (ROSE) and the synthetic minority oversampling technique (SMOTE).

Random oversampling examples (ROSE) [39, 42, 43] randomly replicate samples from the minority class and add them to the training dataset, eventually making the number of minority classes equal to the number of majority classes, resulting in a new balanced dataset. Thus a single instance may be selected multiple times, and ROSE may increase the possibility of overfitting, but this sampling technique is very effective for machine learning algorithms that are subject to skewed distributions.

The synthetic minority oversampling technique (SMOTE) [38] is an improved method based on the random oversampling algorithm, where the minority class is oversampled by generating “synthetic” data rather than directly by replication. The basic idea of SMOTE is to analyze minority samples, artificially synthesize new samples based on minority samples, and add them to the dataset. However, influenced by the parameters, data distribution and other factors, the artificially generated data from the minority class may appear in the majority class, which will affect the final classification results.

Adaptive synthetic sampling (ADASYN) [40] is an improved method based on SOMTE. It assigns different weights to different minority classes of samples according to the data distribution, thus generating different numbers of new samples. ADASYN not only can reduce the learning bias caused by the imbalanced distribution of the original data, but also adaptively shifts the decision boundary to the difficult-to-learn samples. It has the disadvantage of being susceptible to outliers. If the K nearest neighbors of a minority class sample are all majority class samples, its weights become large and may generate noise.

The combination of oversampling and undersampling

In the SMOTE method, it is likely to generate some noise data when the boundary sample and the others are oversampled. It can be eliminated by cleaning the sample after oversampling. Tomek Link is an undersampling technique used to clean up overlapping samples. The synthetic minority oversampling technique+Tomek Link (SMOTETomek) [41] combines TomekLink and SMOTE, which is a combination of oversampling and undersampling.

Classification methods

Random forest (RF) and support vector machine (SVM) are the most common classifiers in tumor image segmentation, tumor image classification and other applications [44]. In soft tissue sarcoma grading prediction problems, previous studies have shown that RF perform better than SVM [1]. In the following content, RF and its derivative methods are introduced in this subsection. For better comparison, SVM is also selected as one of the classification methods. We aim to explore the most effective classifier for the soft tissue sarcoma grade problem.

Random forest

Random forest [45] is a kind of ensemble learning, and its basic cell is decision tree. For each node of the decision tree, it has a put-back for sampling. For a sample set, it randomly selectes features to train and then uses the cart algorithm for calculation. This process is not pruned. For the classification of soft tissue sarcoma, each decision tree is a classifier and they perform classification independently. If there are n decision trees, then n classification results are generated. RF integrates all classification voting results and chooses the category with the most votes as the final output. RF is simple and easy to implement, suitable for handling imbalanced data, but not friendly for small data or low-dimensional datasets.

Balanced random forest

In the case of data imbalance, RF may contains a large number of majority classes and a small number of minority in the selected samples when building decision trees, and may favor the majority classes in the final classification vote. Balanced Random Forest (BRF) [46] combines the ideas of random undersampling and ensembing, where the majority of classes are undersampled and an equal number of minority classes are randomly selected for replacement, as a way to achieve a balanced training set. In the early stage of this study, sampling methods have been used to overcome the problem of data imbalance. Therefore, the performance of BRF in this experiment is not necessarily outperform random forest, but due to inconsistent sampling methods, the results achieved are also different. So we also select BRF as one of the classification methods to get the performance of each model combination.

Extremely randomized trees

Extremely randomized trees (ERT) [47] is an extension of RF. ERT is also an ensemble of decision trees, where each decision tree t \(\in\) {1...T}, T is the number of decision trees. In the process of selecting data samples, ERT differs from RF in that each decision tree is independently trained using the entire data sample. In node partitioning, RF selects the optimal feature value to partition the points after searching in the feature subset, while ERT randomly selects features to partition the decision tree. ERT uses random features and random thresholds for partitioning.

For a given data point x and dataset \(D_{train}\), a feature vector is represented by f(x,\(D_{train}\)). When classifying class c of the data, \(p_t\) represents the conditional probability that the feature vector f(x,\(D_{train}\)) belongs to class c. For data point \(x'\) , the probability that it belongs to class c is calculated by calculating the average of the probabilities on all trees [48] :

Compared with RF, ERT makes the shape and difference of each decision tree larger and more random. In theory, the effect of generalization will also be better. The specific performance of the two classifiers will be obtained in the later experiments.

Support vector machine

Support Vector Machine (SVM) is a generalized linear classifier that performs binary classification of data by supervised learning. The basic model of SVM is to find the best separating hyperplane on the feature space that maximizes the positive and negative sample interval on the training set. SVM is applied in character recognition, facial recognition, pedestrian detection, text classification and other fields.

The state-of-the-art method

One of the latest imbalanced data classification method called GDO-SVM [30] is used as the comparison. Xie et al. proposed an oversampling-based Gaussian distribution (GDO) that weights the minority class points by calculating their density information and distance information, probabilistically selecting anchor instances and generating new minority class instances based on the Gaussian distribution. After that, using SVM for Classification.

However, GDO-SVM is mainly an improvement on the sampling method, GDO-SVM performs well in KEEL and some public datasets of UCI, but from the performance of classifying real medical data listed in the literature, its improvement is not obviously good enough. The methods discussed in this paper are tackling this issue.

Model definition

In the experiment, the feature selection method of RFE is applied. After that, selecting different sampling strategies and classification algorithms, and use the discard-one cross-validation method to obtain 16 different machine learning models and a state-of-the-art method, as shown in Table 3. The original data DW-QSH is divided into a “training set” and a “testing set” at a fixed ratio of 4 : 1. For each machine learning model, the “training set” and “testing set” are first divided on the dataset, perform resampling and model training on the “training set”, and verify the performance of the model on the “testing set”. Fig. 2 shows the specific process.

In order to ensure the validity of the results, for each model, we did 10 experiments with different “random state” in the process of splitting the dataset randomly, calculated the average and standard deviation of each evaluation metric.

A dataset spiltting method

Due to the low-incidence of soft tissue sarcoma, it is very difficult to collect data, resulting in the number of samples is small. Meanwhile, the data is imbalanced, which is a greater challenge to train the model. It may cause that the classifier cannot identify the minority class samples (low-grade) well.

In order to better validate the performance of the models, we use the following dataset splitting method: firstly, 20% of the dataset is randomly divided into “testing set”, then the whole data set is oversampled, 70% of the oversampled data is randomly divided into “training set”. The classifier is trained on the ‘training set” and tested on the “testing set”, we call this method SRS. The detailed process is shown in Fig. 3.

Evaluation criteria and procedure

To better evaluate the performance of the model, we use the following evaluation metrics: area under the curve (AUC) of the receiver operating characteristic (ROC), accuracy, specificity, sensitivity and G-mean of the model on predicting high-level and low-level soft tissue sarcomas in the experiment.

The ROC curve is a curve drawn with “True Positive Rate (TPR)” (reflecting the sensitivity of the classification result) as the ordinate, and “False Positive Rate (FPR)” (reflecting the specificity of the classification result) as the abscissa. “True Positive Rate” and “False Positive Rate” are derived from the “confusion matrix” of the classification results, as shown in Table 4. The rows are the predicted results, and the columns are the actual results. TP (True Positive) is the number of positive examples classified correctly, FN (False Negative) is the number of incorrectly classified negative examples, FP (False Positive) is the number of incorrectly classified positive examples, and TN (True Negative) is the correct number of negative examples.

Accuracy (Acc) is the ratio of the number of correctly classified instances to the total number of instances in the test set, which measures the classification ability of the model. G-mean is a composite metric for evaluating the accuracy of positive and negative instances for imbalanced data sets which consists of two subcomponents: Sensitivity (Sens) and Specificity (Spec). The following Eqs. (2)–(5) are given to describe these metrics.

Area Under Curve (AUC) is the area enclosed by the coordinate axis under the ROC curve and the area is always \(\le\) 1. Meanwhile, since the ROC curve is generally located above the straight line y=x, the value of AUC ranges from 0.5 \(\sim\) 1, which can be used as an indicator to evaluate the performance the model. The closer the AUC is to 1, the better the effectiveness of classifier is. When AUC=0.5, the model has no practical meaning.

Results

Experimental results

In the “testing set” of this study, various machine learning models exhibit different classification abilities. Since 10 different “random state” is selected and tested for each model when dividing the dataset, the performance of each model is eventually evaluated by taking the average of the metrics obtained from the 10 experiments.

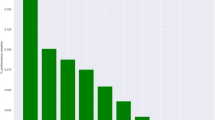

Results on the conventional dataset spitting method

Experiments are performed with the conventional dataset splitting method, and the results are shown in Table 5, \(\sigma\) represents the standard deviation of 10 experiments. The histogram of results of 17 models is shown in Fig. 4, to compare the performance of each model on soft tissue sarcoma data classification prediction. It can be obtained that the ERT classification combined with RFE and STT technology (named Model 3) predicts the classification of soft tissue sarcoma data more effectively than others. The AUC, accuracy, sensitivity, specificity and G-mean of high-grade and low-grade soft tissue sarcomas of Model 3 are 0.6879, 81.57%, 96.03%, 41.55% and 0.6263, respectively. Even though the sensitivity (Sens) , specificity(Spec) and G-mean of Model 3 is not the best one among 17 models, but the AUC and Accuracy(Acc) perform best. Combining the performance of all evaluation metrics, Model 3 is the most effective model for identifying high and low grade of soft tissue sarcoma. The accuracy of RFE+STT+ERT is 81.57% , which is close to 82% by biopsy [49].

Results on the SRS dataset spitting method

Experiments are performed again, using the SRS dataset splitting memthod, and the performance of the 17 models is shown in Table 6. The histogram of classification performance of 17 models using the SRS method is shown in Fig. 5. Obviously, after using SRS method, the performance of all models has been improved, especially the specificity (Spec). Models 2 and 3 performed best, with the same results in ten experiments. The AUC, accuracy, sensitivity, specificity and G-mean of high-grade and low-grade soft tissue sarcomas of Model 3 are 0.9438, 95.69%, 96.66%, 92.10% and 0.9429, respectively. Even the sensitivity (Sens) and specificity (Spec) are not the best, but the sensitivity (Sens) is close to 98.69% and the specificity (Spec) is clost to 93.78%.

In the experiment, SRS dataset splitting method can verify the effectiveness of the models. In general, Model 3 (RFE+STT+ERT) is the most effective method for predicting the grade of soft tissue sarcoma and it is better than the GDO-SVM. Since the whole process for classifying soft tissue sarcoma data is designed as: performing feature selection, sampling it, and then classifying it with the specified classification method, GDO-SVM only improves the sampling method, and for the data in this study, the performance of this method is not as good as RFE+STT+ERT.

Running time

In addition to those above evaluation metrics, the running time is employed to compare the performance of different models on the DW-QSH. In measuring the running time, each model is subjected to 10 experiments individually (each “random state” is a test) , and the value is taken average value to obtain the final running time in seconds. Table 7 and Fig. 6 show the final results, the running times of the two different dataset splits are shown.

It can be obtained that the running time of each model has little difference, the method of dataset splitting has little effect on the running time of the model. The running time is around 66s, the highest is 70s, and the lowest is 64s. Since the running times of the 17 models differs little, the method with the best performance can be chosen, which is RFE+STT+ERT.

Discussion

Impact of this article

According to the study in this paper, the imbalance machine learning model of the combination of extremely randomized trees classification algorithm using SMOTETomk and recursive feature elimination technique, that is RFE+STT+ERT, performs best in classification prediction on the MR\(T_1\)WI soft tissue sarcoma data. In the future, we will further explore the performance of classifying other imbalanced data by this model, discuss more effective model on solving different medical data imbalance problems.

Model performance when using SRS for dataset splitting

In the conventional dataset splitting method, the amount of low-grade data is small, so the models cannot resample valuable samples well during training, resulting in the classifier cannot identify low-grade soft tissue sarcoma well.

After using SRS, the performance of all models is improved. Because resampling is performed on the entire dataset, the classifier learns more characteristics of low-grade soft tissue sarcoma during training. Therefore, the performance of the models improves quickly, especially the specificity. Meanwhile, only 70% of the resampled data are selected to split in the training, and the testing set keep independent to prevent a large amount of data in the training set and the testing set being repeated. The validity of the experiment is guaranteed.

Study limitations

There are some shortcomings in this paper as well. During the experiment, the data adopted is relatively small because soft tissue sarcomas are rare and not easy to obtain in practise. If a larger amount of valid data can be collected, it will better validate the classification efficiency of the machine learning model proposed in this paper. The obtained feature dataset DW-QSH is high-dimension, because we do not use a specified and targeted feature extraction method. Such that, we will explore to find an optimal feature extraction method for the present data to enhance the performance of imbalance machine learning model in the future.

Conclusions

This paper analysis some imbalance machine learning approaches on classifying soft tissue sarcoma data, and aims to find a best research method for the pathological garding problem of soft tissue sarcoma. Firstly, based on the MR\(T_1\)WI radiomics, a large number of features are obtained as a feature dataset DW-QSH. Then, we explore the combinations of different sampling techniques, feature selection methods, and classification algorithms, and get nine imbalance machine learning models based on the DW-QSH. We also used a dataset splitting method called SRS, which can verify the effectiveness of the models. The experimental results show that the combination of RFE+STT+ERT performs best compared to other combination methods, even better than the state-of-the-art GDO-SVM method. The receiver operating characteristic area under the curve, accuracy, sensitivity, specificity and G-mean of this method for predicting high-grade versus low-grade soft tissue sarcoma are 0.6879, 81.57%, 96.03%, 41.55% and 0.6263. The accuracy of RFE+STT+ERT is 81.57% , which is close to 82% by biopsy. Meanwhile the value is 0.9438, 95.69%, 96.66% 92.10% and 0.9429 by using SRS, respectively. The running time of the method is about 66 seconds.

The classification results of this method are similar to those of the pre-surgical biopsy puncture, which means that the explored machine learning method has high research value for the classification of soft tissue sarcomas data. Therefore, it can provide useful support for developing personalized treatment plans for soft tissue sarcoma patients before surgery.

Availability of data and materials

The data used during the current study are available from the Hexiang Wang on reasonable request, email: wanghexiang@qdu.edu.cn. The URL of the code: https://github.com/Ally509/Code-Lxx-ML_FOR_MRT1WI

Abbreviations

- N:

-

Number

- FS:

-

Feature selection

- ST:

-

Sampling technique

- CM:

-

Classification method

- AUC:

-

Area under the curve

- Sens:

-

Sensitivity

- Spec:

-

Specificity

- ROSE:

-

Random oversampling examples

- SMOTE:

-

Synthetic minority oversampling technique

- STT:

-

SMOTETomek

- ADS:

-

Adaptive synthetic sampling

- ADASYN:

-

Adaptive synthetic sampling

- RFE:

-

Recursive feature elimination

- ERT:

-

Extremely randomized trees

- RF:

-

Random forest

- BRF:

-

Balanced random forest

- SVM:

-

Support vector machine

References

Hexiang W, Jihua L, Dapeng H, Shaofeng D, Wenjian X. Mrt1wi based radiomics and machine learning model for predicting the histopathological grades of soft tissue sarcomas. Chin J Radiol. 2020;54(4):6.

Siegel R, Naishadham D, Jemal A. Cancer statistics, 2012. CA Cancer J Clin. 2012;62(1):10–29.

Coindre JM. Grading of soft tissue sarcomas—review and update. Archiv Pathol Lab Med. 2006;130(10):1448–53.

Pasquali S, Gronchi A. Neoadjuvant chemotherapy in soft tissue sarcomas: latest evidence and clinical implications. Therap Adv Med Oncol. 2017;9(6):415.

Gronchi A, Ferrari S, Quagliuolo V, Broto JM, Pousa AL, Grignani G, Basso U, Blay JY, Tendero O, Beveridge RDa Histotype-tailored neoadjuvant chemotherapy versus standard chemotherapy in patients with high-risk soft-tissue sarcomas (isg-sts 1001): an international, open-label, randomised, controlled, phase 3, multicentre trial. The Lancet Oncology 2017

Ikoma N, Torres KE, Somaiah N, Hunt KK, Cormier JN, Tseng W, Lev D, Pollock R, Wang WL, Feig B. Accuracy of preoperative percutaneous biopsy for the diagnosis of retroperitoneal liposarcoma subtypes. Ann Surg Oncol. 2015;22(4):1068–72.

Shan H, Changhong L, Zaiyi L, Biao H, Hui L. The application and progress of texture analysis and radiomics in nonneoplastic lesion. Chin J Radiol. 2019;53(6):4.

Pianpian C, Yunfei C. Research progress of radiomics in musculoskeletal diseases. Chin J Radiol. 2019;53(9):3.

Yu Z, Yzbom A, Xsbom B, Jtmom C, Jcbos D, Yue D, Mzmom E, Swdom A. Soft tissue sarcomas: preoperative predictive histopathological grading based on radiomics of MRI. Acad Radiol. 2019;26(9):1262–8.

Japkowicz N, Stephen S. The class imbalance problem: a systematic study1. Intell Data Anal. 2002;6(5):429–49.

Kwek YS. A data reduction approach for resolving the imbalanced data issue in functional genomics. Neural Comput Appl. 2007;16:295–306.

Chen JX, Cheng TH, Chan ALF, Wang HY An application of classification analysis for skewed class distribution in therapeutic drug monitoring - the case of vancomycin. In: Workshop on Medical Information Systems: the Digital Hospital 2004

Ziba M, Tomczak JM, Lubicz M, Witek J. Boosted SVM for extracting rules from imbalanced data in application to prediction of the post-operative life expectancy in the lung cancer patients. Appl Soft Comput J. 2014;14(1):99–108.

El-Shafeiy E, Abohany A Medical imbalanced data classification based on random forests. In: AICV, pp. 81–91 2020

Zieba M, Tomczak SK, Tomczak JM. Ensemble boosted trees with synthetic features generation in application to bankruptcy prediction. Expert Syst Appl. 2016;58:93–101.

Chawla N.V C4. 5 and imbalanced data sets: investigating the effect of sampling method, probabilistic estimate, and decision tree structure. In: Proceedings of the ICML’03 workshop on class imbalances 2003

Chan PK, Fan W, Prodromidis AL, Stolfo SJ. Distributed data mining in credit card fraud detection. IEEE Intell Syst. 1999;14(6):67–74.

Promper C, Engel D, Green R.C Anomaly detection in smart grids with imbalanced data methods. In: 2017 IEEE symposium series on computational intelligence (SSCI) 2017

He H, Garcia EA. Learning from imbalanced data. IEEE Trans Knowl Data Eng. 2009;21(9):1263–84.

Sun Y, Wong AK, Kamel MS. Classification of imbalanced data: a review. Int J Pattern Recogn Artif Intell. 2009;23(04):687–719.

Galar M, Fernandez A, Barrenechea E, Bustince H, Herrera F. A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans Syst Man Cybern Part C (Appl Rev). 2011;42(4):463–84.

Huang Z, Yang C, Chen X, Huang K, Xie Y. Adaptive over-sampling method for classification with application to imbalanced datasets in aluminum electrolysis. Neural Comput Appl. 2020;32(11):7183–99.

Brnabic A, Hess LM. Systematic literature review of machine learning methods used in the analysis of real-world data for patient-provider decision making. BMC Med Inform Decis Mak. 2021;21(1):1–19.

Oviedo S, Contreras I, Quirós C, Giménez M, Conget I, Vehi J. Risk-based postprandial hypoglycemia forecasting using supervised learning. Int J Med Informatics. 2019;126:1–8.

Hertroijs DF, Elissen AM, Brouwers MC, Schaper NC, Köhler S, Popa MC, Asteriadis S, Hendriks SH, Bilo HJ, Ruwaard D. A risk score including body mass index, glycated haemoglobin and triglycerides predicts future glycaemic control in people with type 2 diabetes. Diabetes Obes Metab. 2018;20(3):681–8.

Alaa AM, Bolton T, Di Angelantonio E, Rudd JH, van der Schaar M. Cardiovascular disease risk prediction using automated machine learning: a prospective study of 423,604 UK biobank participants. PLoS ONE. 2019;14(5):0213653.

Khalilia M, Chakraborty S, Popescu M. Predicting disease risks from highly imbalanced data using random forest. BMC Med Inform Decis Mak. 2011;11(1):1–13.

Majid A, Ali S, Iqbal M, Kausar N. Prediction of human breast and colon cancers from imbalanced data using nearest neighbor and support vector machines. Comput Methods Programs Biomed. 2014;113(3):792–808.

Barot PA, Jethva HB. Mgini-improved decision tree using minority class sensitive splitting criterion for imbalanced data of covid-19. J Inf Sci Eng. 2021;37(5):1097–108.

Xie Y, Qiu M, Zhang H, Peng L, Chen Z. Gaussian distribution based oversampling for imbalanced data classification. IEEE Trans Knowl Data Eng. 2020;34(2):667–79.

Lee E, Rustam F, Aljedaani W, Ishaq A, Rupapara V, Ashraf I. Predicting pulsars from imbalanced dataset with hybrid resampling approach. Adv Astron. 2021;2021:4916494. https://doi.org/10.1155/2021/4916494.

Rupapara V, Rustam F, Shahzad HF, Mehmood A, Ashraf I, Choi GS. Impact of smote on imbalanced text features for toxic comments classification using RVVC model. IEEE Access. 2021;9:78621–34. https://doi.org/10.1109/ACCESS.2021.3083638.

Fatima EB, Omar B, Abdelmajid EM, Rustam F. Choi GS Minimizing the overlapping degree to improve class-imbalanced learning under sparse feature selection: Application to fraud detection. IEEE Access. 2021;9:28101–10.

Rustam F, Siddique MA, Siddiqui H, Ullah S, Choi GS. Wireless capsule endoscopy bleeding images classification using CNN based model. IEEE Access. 2021;9:33675–88.

Reshi AA, Rustam F, Mehmood A, Alhossan A, Alrabiah Z, Ahmad A, Alsuwailem H, Choi, G.S.: An efficient cnn model for covid-19 disease detection based on x-ray image classification. COMPLEXITY,. 2021 MAY 17. Article. 2021. https://doi.org/10.1155/2021/6621607.

Li F, Yang Y. Analysis of recursive feature elimination methods. In: Proceedings of the 28th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 633–634 2005

Park YW, Oh J, You SC, Han K, Ahn SS, Choi YS, Chang JH, Kim SH, Lee S-K. Radiomics and machine learning may accurately predict the grade and histological subtype in meningiomas using conventional and diffusion tensor imaging. Eur Radiol. 2019;29(8):4068–76.

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP. Smote: synthetic minority over-sampling technique. J Artif Intell Res. 2002;16:321–57.

Provost F Machine learning from imbalanced data sets 101 2000

He H, Bai Y, Garcia EA, Li S Adasyn: Adaptive synthetic sampling approach for imbalanced learning. In: 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), pp. 1322–1328 2008. https://doi.org/10.1109/IJCNN.2008.4633969

Wang Z, Wu C, Zheng K, Niu X, Wang X. Smotetomek-based resampling for personality recognition. IEEE Access. 2019;7:129678–89.

Fernández A, García S, Galar M, Prati RC, Krawczyk B, Herrera F Learning from Imbalanced Data Sets, 2018

Lunardon N, Menardi G, Torelli N Rose: Rose: Random over-sampling examples 2014

Pinto A, Pereira S, Rasteiro D, Silva CA. Hierarchical brain tumour segmentation using extremely randomized trees. Pattern Recogn. 2018;82:105–17.

Khalilia M, Chakraborty S, Popescu M. Predicting disease risks from highly imbalanced data using random forest. BMC Medi Inf Decis Making. 2011;11(1):51–51.

Asim Y, Malik AK, Raza B, Shahaid AR, Qamar N. Predicting influential blogger’s by a novel, hybrid and optimized case based reasoning approach with balanced random forest using imbalanced data. IEEE Access. 2020;9:6836–54.

Geurts P, Ernst D, Wehenkel L. Extremely randomized trees. Mach Learn. 2006;63(1):3–42.

Soltaninejad M, Yang G, Lambrou T, Allinson N, Jones TL, Barrick TR, Howe FA, Ye X. Automated brain tumour detection and segmentation using superpixel-based extremely randomized trees in flair MRI. Int J Comput Assist Radiol Surg. 2017;12(2):183–203.

Didolkar MM. MS: Image guided core needle biopsy of musculoskeletal lesions: Are nondiagnostic results clinically useful? Clin Orthop Related Res. 2013;471(11):3601–9.

Acknowledgements

The data of this study came from the data of soft tissue sarcoma collected by 3 Hospital. 122 cases collected by The Affiliated Hospital of Qingdao University, 130 cases collected by Shandong Provincial Hospital Affiliated to Shandong First Medical University and The Third Hospital of Hebei Medical University.

Funding

This work is supported by National Natural Science Foundation of China (NSFC) under Grant No. 62006134, Natural Science Foundation of Shandong Provincial under Grant No. ZR2020QF033.

Author information

Authors and Affiliations

Contributions

In this paper, LG put forward the research ideas. XL selected the methods, carried out experiments, collected the results and drafted the paper. JG wrote the medical section. HW, SY and LD collected and organized the MR scans images of soft tissue sarcoma, performed segmentation, image normalization and feature calculation on all images. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

The study was reviewed by the Medical Ethics Committee of the Affiliated Hospital of Qingdao University, all methods were performed in accordance with the relevant guidelines and regulations, and the informed consent from the patient was abandoned.

Consent for publication

Not applicable for this paper.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Liu, X., Guo, L., Wang, H. et al. Research on imbalance machine learning methods for MR\(T_1\)WI soft tissue sarcoma data. BMC Med Imaging 22, 149 (2022). https://doi.org/10.1186/s12880-022-00876-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12880-022-00876-5