Abstract

Background

With the rapid spread of COVID-19 worldwide, quick screening for possible COVID-19 patients has become the focus of international researchers. Recently, many deep learning-based Computed Tomography (CT) image/X-ray image fast screening models for potential COVID-19 patients have been proposed. However, the existing models still have two main problems. First, most of the existing supervised models are based on pre-trained model parameters. The pre-training model needs to be constructed on a dataset with features similar to those in COVID-19 X-ray images, which limits the construction and use of the model. Second, the number of categories based on the X-ray dataset of COVID-19 and other pneumonia patients is usually imbalanced. In addition, the quality is difficult to distinguish, leading to non-ideal results with the existing model in the multi-class classification COVID-19 recognition task. Moreover, no researchers have proposed a COVID-19 X-ray image learning model based on unsupervised meta-learning.

Methods

This paper first constructed an unsupervised meta-learning model for fast screening of COVID-19 patients (UMLF-COVID). This model does not require a pre-trained model, which solves the limitation problem of model construction, and the proposed unsupervised meta-learning framework solves the problem of sample imbalance and sample quality.

Results

The UMLF-COVID model is tested on two real datasets, each of which builds a three-category and four-category model. And the experimental results show that the accuracy of the UMLF-COVID model is 3–10% higher than that of the existing models.

Conclusion

In summary, we believe that the UMLF-COVID model is a good complement to COVID-19 X-ray fast screening models.

Similar content being viewed by others

Background

Coronavirus disease 2019 (COVID-19) caused by SARS-CoV-2 has become one of the most serious epidemic diseases in the world since the twentieth century [1,2,3]. The main symptoms of COVID-19 include dry cough, sore throat, fever, organ failure, septic shock, severe pneumonia, acute respiratory distress syndrome (ARDS), etc. [3,4,5]. Due to the highly contagious nature of COVID-19, medical systems in many countries are on the verge of collapse [6]. To date, there remains no specific medicine for COVID-19. Therefore, patients can only clear the virus through their own immune systems [7], directly leading to the rapid increase in the death rate of COVID-19. Tens of thousands of have people died because of COVID-19 [2, 8]. In this situation, stopping the spread of the virus has become the focus of international researchers.

Researchers have proposed many methods to combat the COVID-19 pandemic [9,10,11,12]. However, previous studies have shown that the best way to stop the spread of COVID-19 is to screen people infected with COVID-19 as quickly as possible [13,14,15]. Currently, reverse transcription-polymerase chain reaction (RT–PCR) is the most commonly used diagnostic test for COVID-19 [16,17,18]. However, the sensitivity of the RT–PCR test is low, and the test time of RT–PCR is long in the early stage [19,20,21,22]. The cost of RT–PCR testing in hospitals is also high. Therefore, it is difficult for many countries to conduct large-scale nucleic acid testing. In this case, using CT images or X-ray images for rapid preliminary screening of subjects with potential pneumonia symptoms is a feasible solution to this problem [22, 23]. Many machine learning and deep learning methods have brought great help to the fight against COVID-19[24,25,26,27]. However, CT/X-ray images of COVID-19 are very similar to those of traditional pneumonia, which requires experienced experts to diagnose COVID-19 patients based on CT/X-ray images [28, 29]. Many regions cannot implement the program due to a lack of experts. Therefore, some COVID-19 potential patient detection models based on CT images have been proposed and have achieved good results [30,31,32]. Although CT images can provide better details than X-ray scans, CT scans cause more harm to the human body, and the cost of CT scanning is also high. Therefore, many researchers recommend using X-ray imaging instead of CT imaging for preliminary screening [33, 34]. X-ray imaging has the characteristics of fast speed, low cost and minor damage.

At present, researchers have established some X-ray image datasets of COVID-19 patients. For example, Mahmud et al. proposed CovXNet, which is a COVID-19 X-ray image detection model based on transfer learning [34]. Shorfuzzamana et al. proposed MetaCOVID, which is a supervised meta-learning model [35].

However, the existing models still have two main problems. First, most of the existing models are supervised. The initialization of these models is based on pre-training, and the dataset images used by the pre-trained model need to have features similar to those of COVID-19 X-ray images. These issues limit the construction of models. Second, the number of categories based on the X-ray dataset of COVID-19 and other pneumonia patients is usually imbalanced. The quality is also difficult to distinguish, making it difficult for the model to use the supervised meta-learning model directly for training. This issue also increases the difficulty of transfer learning and leads to non-ideal results with the model in the multi-class classification COVID-19 recognition task.

This paper proposes an unsupervised meta-learning recognition model for COVID-19 X image detection (UMLF-COVID). The UMLF-COVID model does not require pre-trained model parameters, which solves the limitation problem of model construction. The proposed unsupervised meta-learning framework only needs to have few pneumonia pictures in each cycle, which solves the problem of sample imbalance and sample quality. An n-way k-shot training form and a gradient-based meta-learning optimization strategy are adopted in this paper. The UMLF-COVID model is unsupervised in the meta-learning step, which randomly samples K images for each N class and uses artificial labels to build a training set. This training set is related to the target but does not require proper category labels. Next, the model uses a validation dataset to update the gradient based on the deep learning model. Another feature of the model is that the validation set is created based on a training data sample using an enhancement function, which solves the limitation on the number of categories in the COVID-19 dataset (Fig. 1). The experiment uses two real datasets to test UMLF-COVID and constructs three-category and four-category models for each dataset individually. In addition, the model contains a 4-layers neural network. In the experimental results, the model can effectively identify COVID-19 patients and others. The UMLF-COVID model achieves a comprehensive recognition accuracy of 0.94 in the three-classification experiment (COVID-19, normal person, and other pneumonia). In the four-classification experiment (COVID-19, normal person, virus pneumonia, bacterial pneumonia), the model reached a recognition accuracy of 0.9.

The main contributions of this paper can be summarized as follows:

First, the UMLF-COVID model is the first approach based on unsupervised meta-learning to identify COVID-19 X-ray images.

Second, the UMLF-COVID model does not need pre-trained parameters, which solves the limitation problem of model construction.

Third, it does not have high requirements for the number of samples of each type of pneumonia, which solves the problem of small sample size and unbalanced COVID-19 X-ray data.

Fourth, according to the experimental results, the UMLF-COVID model is better than the existing supervised model. Because it can learn the experience of multiple pneumonia X-ray classification tasks during the training step, the UMLF-COVID model ultimately achieves better results on the fixed COVID-19 pneumonia classification task.

Fifth, two real datasets are set to verify the performance, and the experimental results show that the UMLF-COVID is better than existing models. At the same time, only a 4-layer neural network is needed to construct an outstanding prediction model.

Methods

Dataset

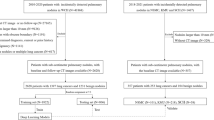

Because the number of patients diagnosed with COVID-19 is small. Therefore, only conducting an experiment on one data set is not enough to prove the performance of the model. In order to verify the performance and stability of the UMLF-COVID model. This paper obtained three available COVID-19 X-ray and other pneumonia datasets to construct two experimental datasets, and the description is as follows.

BIMCV-COVID19 + dataset

The BIMCV-COVID19 + dataset [36] is a large dataset that contains chest X-ray images (CXR) and Computed Tomography (CT) images of some COVID-19 patients. The dataset includes the demographic information of the patient and the label information of the image. The first version of this dataset includes 1,380 CX images, 885 DX images and 163 CT images.

Kaggle dataset

The fourth external validation was performed on an open public Kaggle-pneumonia dataset [37]. This dataset contains three folders (training, testing, validation), and each folder includes subfolders of the image category (viral pneumonia/bacterial pneumonia/normal). There were 5,663 chest X-ray images (front and back) collected from a retrospective study of paediatric patients aged 1–5 years in Guangzhou Maternal and Child Health Center. These images were first screened for quality control by removing low-quality or unreadable scans. Two professional physicians classified the diagnosis of the image and then cleared it to train the AI system. To resolve any grading errors, the dataset was also checked by another expert.

Chest XRay_AI dataset

De-identified and anonymized data were deposited into the China National Center for Bioinformation [38]. The chest X-ray image (CXR) dataset was constructed by the China Chest X-ray Image Investigation Association (CC-CXRI). This dataset can be used globally to help researchers study COVID-19. An AI model is first used to identify common chest diseases, including atelectasis, cardiac hypertrophy, consolidation, oedema, effusion, emphysema, fibrosis, hernia, infiltration, nodules, masses, pleural thickening, pneumonia and pneumothorax. ChestDX and ChestDx-PE are datasets of patients. The CC-CXRI-P dataset contains viral pneumonia (including COVID-19 pneumonia), other types of pneumonia and normal images.

These three public datasets are divided into two experimental datasets based on the random sampling method, namely, BIMCV and Xray_AI. The experimental dataset is shown in Table 1. The total number of X-ray images is 1,027, including 395 of COVID-19 patients and 632 of non-COVID-19 patients. Each experimental dataset contains five classification labels: COVID-19, normal person, bacterial pneumonia, viral pneumonia and other pneumonia. This paper only uses the frontal X-ray images of the chest to experiment (Fig. 2).

Model

This paper resizing all the images in the dataset to the same size as the preliminary pre-processing step, making the subsequent processes faster and easier to fine-tune. The image size that this paper used is 500 × 500.

Meta-learning framework

The idea of meta-learning is to learn a general learning algorithm that can achieve good learning results in multiple tasks [39]. Ideally, the model can learn the common experience in different tasks, and in each new task, it can obtain a better result than the previous task. This is the reason why meta-learning is better than traditional single-task models, and the performance of \(\omega\) can be evaluated on a task distribution \(p\left( {\mathcal{T}} \right)\) for each task involving a dataset and a loss function \(T = \left\{ {{\mathcal{D}},{\mathcal{L}}} \right\}\). Here, \(\omega\) is a parameter indicating ‘how to study’, such as the optimizer that selects model parameter \(\theta\). Therefore, the general model of meta-learning can be represented as Formula (1):

where \({\mathcal{L}}\) is the loss of the task. While divide the training dataset into \({\mathcal{D}} = \left( {{\mathcal{D}}^{train} ,{\mathcal{D}}^{val} } \right)\). Then, the task-specific loss can be defined as Formula (2):

where \(\theta^{*}\) is the parameter trained in the \(\omega\) model and \({\mathcal{D}}^{train}\).

Normally, assume a set of M source tasks are sampled from \(p\left( {\mathcal{T}} \right)\). Denote the M source task set used in the meta-training stage as \({\mathcal{D}}_{source} = \left\{ {\left( {{\mathcal{D}}_{source}^{train} ,{\mathcal{D}}_{source}^{val} } \right)} \right\}_{1}^{M}\), where each task has training and verification data. The source training and validation datasets are called the supporting and query datasets, respectively. The meta-training step of \(\omega\) can be written as Formula (3):

Moreover, the target datasets used in the test step are denoted as \({\mathcal{D}}_{target} = \left\{ {\left( {{\mathcal{D}}_{target}^{train} ,{\mathcal{D}}_{target}^{test} } \right)} \right\}\), where the test dataset still contains training and test data. In the training step, the model uses the learned meta-knowledge \(\omega^{*}\) to train and test the fixed task of the test set, and the optimization goal of the parameter \(\theta\) can be written as Formula (4):

Compared with the traditional supervised training model, the target task of the meta-learning method can benefit from meta-knowledge \(\omega^{*}\), which can be the empirical information of multiple tasks. This makes it possible for the meta-learning method to achieve better training effects than traditional methods on the target task.

Construct training dataset

Assuming there is a dataset \({\mathcal{U}}\) that contains N classes and M samples, it can be denoted as Formula (5):

UMLF-COVID model performs meta-training with n-way k-shot classification. That is, the number of classifications of the classifier during training is n, and each category contains k images. To remove existing labels, the model randomly samples n*k images from the whole dataset and attaches artificial labels. However, it is important to keep the labels distinguished by classes in actual operation: if two images have the same labels, they should have the same artificial labels. Therefore, UMLF-COVID model adopt the following strategy to ensure label distinction.

First, the UMLF-COVID model randomly \(n\left( {n \in N} \right)\) classes from dataset \({\mathcal{U}}\) to build a subset. Each class is regarded as a group and can be denoted by Formula (6):

where h is the number of images from selected classes. Then, the UMLF-COVID model shuffle the order of \({\hat{\mathcal{U}}}\) by group and remove labels to construct an unsupervised dataset, which can be denoted as Formula (7):

The UMLF-COVID model randomly select k images for each group and put them into the training set. The UMLF-COVID model can obtain an unlabelled metadata training set \({\mathcal{T}}\), which can be denoted as Formula (8):

Attaching artificial labels to \({\mathcal{T}}\), the UMLF-COVID model obtain the training set \({\mathcal{D}}_{source}^{train}\) required by the model, which can be denoted as Formula (9):

Formula (7) ensures that the classes of the meta-training set extracted by the model will be disrupted every time, and artificial labels are given in order. Even if the model extrac n of the same classes, it may show different classification forms in the artificial labels. Formula (8) ensures the classification of artificial labels. After the above operations, the UMLF-COVID model can build an unsupervised training set of metadata for the model, given the classification information through artificial labels. The algorithm for constructing the training dataset is denoted as Algorithm 1.

Construct validation datasets

Generally, the meta-learning model based on the gradient update principle requires the data distribution of the training dataset and validation datasets to be completely different, ensuring that the effect of gradient update is good enough. However, due to the limitation of the number of categories for the COVID-19 dataset, it is difficult to find a new class to build a validation dataset. The UMLF-COVID model adopt an enhancement function on the training set to construct a validation dataset to solve this problem. This paper believe that the distribution of the validation dataset generated by the enhancement function is different from that of the training dataset, which guarantees the effect of the gradient update. The final experimental results also verified this ideas.

The UMLF-COVID model use three kinds of enhancement functions: salt and pepper noise, Gaussian noise and random shift. One or more kinds of enhancement functions were used in the training set. The UMLF-COVID model can obtain a validation set \({\mathcal{D}}_{source}^{val}\), which can be denoted as Formula (10):

Update gradient

The goal of the UMLF-COVID model is to find a universal model in the multi-classification pneumonia dataset so that it can be quickly generalized to the three-class and four-class tasks of identifying COVID-19 X-ray images with only a small quantity of data. Therefore, the UMLF-COVID model also use the principle of gradient-based optimization to build a meta-learning model, and the loss function of the model is shown in Formula (11):

where \(\emptyset\) denotes parameter of network. \(\hat{\theta }^{n}\) is the parameter of the n sub-task learned based on \(\emptyset\). \(l^{n}\) is the loss function based on \(\hat{\theta }^{n}\).

Because the task of the UMLF-COVID model is classification, the UMLF-COVID use cross-entropy as the loss function of the task, which can be denoted as Formula (12):

where \({\mathcal{T}}_{i}\) is the i-th task and \(x^{\left( j \right)} ,y^{\left( j \right)}\) are the input and output of \({\mathcal{T}}_{i}\). \(f_{\emptyset }\) denotes the model function, which is determined by the parameter θ.

After establishing the loss function, the UMLF-COVID use the following method to update the gradient and set the initial parameters of the deep learning model in task \({\mathcal{T}}_{i}\) as \(\theta\). The UMLF-COVID use Formula (13) to compute gradient (Fig. 3):

where \(\vartheta_{i}^{\prime} = \theta\). Then, the UMLF-COVID use a validation set \({\mathcal{D}^{\prime}}\) to update gradient \(\theta\) as Formula (14):

Finally, the UMLF-COVID algorithm can be denoted as Algorithm 2.

Parameters of deep learning

Based on the above-unsupervised learning framework, the UMLF-COVID construct a 4-layer neural network model containing 4-layer 2-dimensional convolution layers, and each convolution layer has max-pooling and a batch normalization layer.

The LXCRM model uses a 3*3 convolution kernel. A small convolution kernel means fewer parameters and less time complexity. The first convolution layer uses 16 convolution kernels, the second uses 32 convolution layers, and the third uses 64 convolution layers (Fig. 4).

Comparison models

LeNet5

LeNet-5 comes from the paper Gradient-Based Learning Applied to Document Recognition, which is a classic convolutional neural network [40] and is widely used in handwritten text recognition and other object classification applications. LeNet-5 is a simpler convolutional neural network. The main structure of LeNet-5 is as follows: the two-dimensional input image first passes through the convolutional layer twice to the pooling layer, then passes through the fully connected layer, and finally uses softmax classification as the output layer. The purpose of comparing the LeNet-5 model is to verify whether a simple deep learning model can effectively identify X-ray images of COVID-19 patients.

AlexNet

AlexNet was designed by Hinton and his student Alex Krizhevsky and won the 2012 ImageNet competition [41]. AlexNet is a classic deep learning architecture. The architecture contains 5 convolutional layers and three fully connected layers and uses the dropout layer. The original text uses 2 GPUs for training and limits the network size. Due to the improvement of hardware equipment, this paper only uses a GPU for computing.

VGG19

VGG was proposed by the Visual Geometry Group of Oxford. The network is related to the work on ILSVRC 2014 and shows that increasing the depth of the network can affect the performance to a certain extent. The improvement of VGG compared to AlexNet is using several consecutive 3 × 3 convolution kernels to replace the larger convolution kernel in AlexNet. A smaller convolution kernel reduces the number of model parameters and can better maintain the properties of the image. With the idea of the VGG model, the LXCRM model uses a 3 × 3 convolution kernel. VGG19 contains 19 hidden layers (16 convolutional layers and three fully connected layers). The purpose of comparing the VGG19 model is to verify whether the complex deep learning model can effectively identify X-ray images of COVID-19 patients.

CovXNet

CovXNet was proposed by Shorfuzzaman et al. and uses transfer learning and depthwise convolution with varying dilation rates to efficiently extract diversified features from chest X-rays [34]. In the training phase, the CovXNet model first uses a large database of normal people and non-COVID-19 patients for migration learning. Next, the trained model is migrated to a dataset containing COVID-19 patients to fine-tune the parameters. In the process of data reading, the CovXNet model uses convolution kernels of various sizes to integrate the local and global features of the image.

CNN-LSTM

In 2020, Islam et al. proposed a combination of a convolutional neural network (CNN) and long short-term memory (LSTM) with a deep learning model to automatically diagnose COVID-19 from X-ray images [42]. In this system, the CNN is used for deep feature extraction, and LSTM is used for detection using the extracted features.

CNN-RNN

The CNN-RNN model was used by RAKHAMI et al. in 2021 to quickly identify COVID-19 cases from chest X-rays. Similar to CNN-LSTM, the CNN is also used to extract features [43]. Then, the model uses the recurrent neural network (RNN) method to classify images.

EMCNet

In 2021, Saha et al. proposed the EMCNet model [44]. The model first uses the CNN to extract features from the chest X-ray image and then adds machine learning methods for image classification.

Results

In this experiment, UMLF-COVID is constructed in JupyterLab with 32 GB RAM NVIDIA Tesla V100. The classification neural network is implemented by TensorFlow. The versions of Python and TensorFlow are 3.6.9 and 2.4.1, respectively. The experiment builds three-class and four-class classifiers for five models including comparison methods and adopts three strategies involving 1-shot, 5-shot, and 10-shot for each classifier in UMLF-COVID.

There are two datasets used in the experiment to test performance. This paper uses tenfold cross-validation for the experiment. For each model, the average results are reported in the following sections.

There are seven indicators used to evaluate the models: accuracy, precision, recall, F1-score, AUC using receiver operating characteristic (ROC) and precision-recall (PR). Accuracy is commonly used in deep learning, indicating the ratio of judgements for image classification. Due to the imbalance of the dataset, accuracy cannot express the performance of the model well. Therefore, this paper calculates the precision, recall, and F1-score indicators. Precision indicates the proportion of potential COVID-19 patients predicted to be COVID-19 potential patients. Recall is the proportion of potential COVID-19 patients who are correctly predicted. The PR curve is drawn based on the precision and recall values. The F1-score is a comprehensive manifestation of precision and recall indicators. Moreover, the ROC curve uses two parameters, the true positive rate and the false positive rate, to indicate the performance of classification tasks. The details of the comparison methods can be seen in the Additional file 2 and Additional file 1: Table S1.

Results of BIMCV dataset

Because UMLF-COVID is a few-shot generalized learning model, it will randomly select k images to train in each epoch. This paper first tested the effect of k-shot, involving 1-shot, 5-shot, and 10-shot methods. Figure 5 shows the value changes of these strategies, and the loss of the model gradually decreases and stabilizes. Figures 6, 7 and Tables 2, 3 show the results of the three and four classifiers of UMLF-COVID. It is worth noting that the accuracy of the model increases with increasing k-shots, while the four classifiers have similar results. With an increased number of shots, the model exploits the benefit of more available pairs of images where it has to distinguish a similar image from different images.

The experiment also compares the n-way 10-shot strategy of UMLF-COVID with other CNN classification models. Because COVID-19 is also a kind of viral pneumonia, COVID-19, normal, and virus classes are retained in the three-classifier. This model also randomly selects three classes from the whole dataset for each epoch. Table 4 shows the classification accuracy results. With the 10-shot strategy, UMLF-COVID obtains the best result at approximately 97%. Compared with the CNN-LSTM model, the best of the compared models, UMLF-COVID shows a 4% accuracy improvement, while the LeNet, Alexnet, VGG, CovXNet CNN-RNN, and EMCNet models only reach 92%, 89%, 83%, 89%, 92%, and 91% accuracy, respectively. Moreover, the precision, recall and F1-score indicators of UMLF-COVID reach nearly 97%. The ROC curve and PR curve are also used to show the performance of models. As shown in Fig. 8 and Additional file 2: Figures S1–S7, the UMLF-COVID model is expected to perform well and is better than the other comparison methods. Especially in COVID-19 patients, the recognition rate can reach 100%.

Finally, this paper retains the COVID-19, normal, virus and bacteria classes to test the models in four-classification tasks. Although four-classification tasks are more difficult than three-classification tasks, the UMLF-COVID model can also obtain 90% accuracy, which is much better than that of other models, and all metrics also present good results (Table 5). The precision, recall and F1-score reach nearly 92%, 90%, and 89%, respectively. However, the accuracy of other compared models only reaches 65–88%, and the precision, recall and F1-score of CNN-LSTM and CovXNet reach 88%. As shown in Fig. 8 and Additional file 2: Figures S1–S7, similar to the three-classification task results, the UMLF-COVID model is superior to the other comparison methods. It can still reach 100% for COVID-19 patients.

Results of the XRay_AI dataset

The XRay_AI dataset used different batches of pneumonia diseases: COVID-19, normal, virus, bacteria, and other pneumonia. First, the results of UMLF-COVID with different strategies are showed in Fig. 9. The accuracy of the three-classification tasks is higher than that of the four-classification tasks. With increasing k-shot parameter k, the accuracy is higher; the details of the results are shown in Tables 6, 7 and Figs. 10, 11.

In the three-classification tasks, the accuracy of UMLF-COVID reaches 93%, and the precision, recall and F1-score are 94%, 93%, and 93%, respectively. The best performing model is CNN-LSTM; its accuracy is 90%, and the precision, recall and F1-score only reach 0.9, 0.9 and 0.9, respectively (Table 8). In addition, according to Fig. 12 and Additional file 2: Figures S8–S14, UMLF-COVID still performs well on the XRay_AI dataset, which is better than the comparison model.

The experiment also tests the XRay_AI dataset in four-classification tasks, and the UMLF-COVID model has obvious improvements. The accuracy of UMLF-COVID reaches 90%, and the precision, recall and F1-score are 91%, 90%, and 90%, respectively. CNN-LSTM was the best performing model in the comparison models; its accuracy reached 80%, and the precision, recall and F1-score were 80%, 80%, and 80%, respectively (Table 9). According to Fig. 12 and Additional file 2: Figures S8–S14, the ROC curve and PR curve of UMLF-COVID on the XRay_AI dataset are better than those of the comparison model.

In summary, it can be seen from these two datasets that although UMLF-COVID is an unsupervised model, its performance is better than that of existing classification models. Specifically, as the number of task classes increases, the improvement is more pronounced.

Discussion

X-ray imaging has many advantages in clinical applications, such as low cost and less damage to patients. However, the characteristic of X-ray images is that when encountering a blocked part, the film will not be exposed, and the part will appear white after imaging. This issue creates greater requirements for the accurate judgement of clinicians. Inexperienced clinicians may find it difficult to accurately judge whether a patient has a potential COVID-19 infection based on X-ray images. However, the CT images will pass through the human body in layers, which means that the CT scan can observe the patient's lungs hierarchically, containing more information. This means that it is more difficult to establish a patient identification model for COVID-19 based on X-ray images than for datasets based on CT images. However, considering the convenience of X-ray, it is necessary to establish a patient identification model for COVID-19 based on X-ray images. At present, there are two main limitations to the existing deep learning model, which is based on COVID-19 X-ray images. First, the number of COVID-19 X-ray images is small, which means that the distribution of the training dataset is very unbalanced. Therefore, it is challenging to construct a supervised deep learning model. Second, most deep learning models to process COVID-19 images are based on transfer learning. This method is limited by the reference dataset, and the parameters are difficult to adjust. Therefore, this paper proposes the UMLF-COVID model, which is the first to use an unsupervised meta-learning method to process COVID-19 images. This model does not need pre-training, and it only needs a small number of samples in each cycle, which solves the problem of sample quality and data imbalance in the COVID-19 X-ray dataset. Moreover, we use artificial labels to solve the limitation on the number of categories.

The UMLF-COVID model was discussed with radiologists at the Macau University of Science and Technology Hospital. Radiographers have a positive attitude towards using the UMLF-COVID model to diagnose COVID-19 X-ray data. They believe that the use of big data and intelligent detection (AI) can increase the detection rate of lesions, increase the speed of reporting, and reduce the workload of doctors. However, at the same time, the final detected lesions still require doctors to combine comprehensive clinical analysis to draw accurate conclusions.

Conclusion

In summary, this paper proposes a COVID-19 X-ray image classification model based on unsupervised meta-learning. The experiment shows that the UMLF-COVID model is better than the existing deep learning models. In two datasets, the performance of three-classification tasks is better than comparison models by 4–14%, and the performance of four-classification tasks is better by at most 17%. The results are satisfactory, but the UMLF-COVID model still has some problems. For example, the UMLF-COVID model can determine whether a patient is a COVID-19 patient but cannot distinguish between mild and severe cases. This idea is very important for clinicians and has higher requirements for the model. In the future, the UMLF-COVID model will be further expanded to improve the multi-class accuracy of the model and enable the model to distinguish between mild and severe COVID-19 patients.

Availability of data and materials

All datasets used in this article are publicly available online. A Python package of the UMLF-COVID model is available at https://github.com/mr1528126360/UMLF_COVID. The web links of three datasets is available at BIMCV-COVID19 + . https://osf.io/nh7g8/. Chest X-Ray Images. https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia. Chest XRay_AI. https://miracle.grmh-gdl.cn/chest_xray_ai/.

Abbreviations

- CT:

-

Computed Tomography

- COVID-19:

-

Coronavirus disease 2019

- ARDS:

-

Acute respiratory distress syndrome

- RT–PCR:

-

Reverse transcription-polymerase chain reaction

- UMLF-COVID:

-

Unsupervised meta-learning recognition model for COVID-19 X image detection

- CXR:

-

Chest X-ray images

- CNN:

-

Convolutional neural network

- LSTM:

-

Long short-term memory

- RNN:

-

Recurrent neural network

- ROC:

-

Receiver operating characteristic

- PR:

-

Precision-recall

References

COVID TC, Team R. Severe outcomes among patients with coronavirus disease 2019 (COVID-19)-United States, February 12–March 16, 2020. MMWR Morb Mortal Wkly Rep 2020, 69(12):343–346.

Organization WH. Coronavirus disease 2019 (COVID-19): situation report, 82. 2020.

Velavan TP, Meyer CG. The COVID-19 epidemic. Trop Med Int Health. 2020;25(3):278.

Bedford J, Enria D, Giesecke J, Heymann DL, Ihekweazu C, Kobinger G, Lane HC, Memish Z. Oh M-d, Schuchat A: COVID-19: towards controlling of a pandemic. The Lancet. 2020;395(10229):1015–8.

Mo P, Xing Y, Xiao Y, Deng L, Zhao Q, Wang H, Xiong Y, Cheng Z, Gao S, Liang K. Clinical characteristics of refractory COVID-19 pneumonia in Wuhan, China. Clinical Infectious Diseases 2020.

Remuzzi A, Remuzzi G. COVID-19 and Italy: What next? The Lancet 2020.

Mehta P, McAuley DF, Brown M, Sanchez E, Tattersall RS, Manson JJ, Collaboration HAS. COVID-19: consider cytokine storm syndromes and immunosuppression. Lancet (Lond, Engl). 2020;395(10229):1033.

COVID TC, Stephanie B, Virginia B, Nancy C, Aaron C, Ryan G, Aron H, Michelle H, Tamara P, Matthew R. Geographic differences in COVID-19 cases, deaths, and incidence-United States, February 12–April 7, 2020. MMWR Morbidity and mortality weekly report 2020, 69.

Ullah SMA, Islam MM, Mahmud S, Nooruddin S, Raju STU, Haque MR. Scalable Telehealth services to combat novel coronavirus (COVID-19) pandemic. Sn Comput Sci. 2021;2(1):1–8.

Islam MM, Mahmud S, Muhammad L, Islam MR, Nooruddin S, Ayon SI. Wearable technology to assist the patients infected with novel coronavirus (COVID-19). SN Comput Sci. 2020;1(6):1–9.

Islam MM, Ullah SMA, Mahmud S, Raju STU. Breathing aid devices to support novel coronavirus (COVID-19) infected patients. SN Comput Sci. 2020;1(5):1–8.

Rahman MM, Manik MMH, Islam MM, Mahmud S, Kim J-H. An automated system to limit COVID-19 using facial mask detection in smart city network. In: 2020 IEEE International IOT, electronics and mechatronics conference (IEMTRONICS): 2020, pp. 1–5. IEEE.

Gostic K, Gomez AC, Mummah RO, Kucharski AJ, Lloyd-Smith JO. Estimated effectiveness of symptom and risk screening to prevent the spread of COVID-19. Elife 2020, 9:e55570.

Organization WH. Coronavirus disease 2019 (COVID-19): situation report, 72. 2020.

Parmet WE, Sinha MS. Covid-19—the law and limits of quarantine. New Engl J Med 2020, 382(15):e28.

Lan L, Xu D, Ye G, Xia C, Wang S, Li Y, Xu H. Positive RT-PCR test results in patients recovered from COVID-19. JAMA. 2020;323(15):1502–3.

Tahamtan A, Ardebili A. Real-time RT-PCR in COVID-19 detection: issues affecting the results. Expert Rev Mol Diagn. 2020;20(5):453–4.

van Kasteren PB, van Der Veer B, van den Brink S, Wijsman L, de Jonge J, van den Brandt A, Molenkamp R, Reusken CB, Meijer A. Comparison of seven commercial RT-PCR diagnostic kits for COVID-19. J Clin Virol. 2020;128:104412.

Fang Y, Zhang H, Xie J, Lin M, Ying L, Pang P, Ji W. Sensitivity of chest CT for COVID-19: comparison to RT-PCR. Radiology 2020:200432.

Ai T, Yang Z, Hou H, Zhan C, Chen C, Lv W, Tao Q, Sun Z, Xia L. Correlation of chest CT and RT-PCR testing in coronavirus disease 2019 (COVID-19) in China: a report of 1014 cases. Radiology 2020:200642.

Tahamtan A, Ardebili A: Real-time RT-PCR in COVID-19 detection: issues affecting the results. In.: Taylor & Francis; 2020.

Yang W, Yan F. Patients with RT-PCR-confirmed COVID-19 and normal chest CT. Radiology. 2020;295(2):E3–E3.

Bai HX, Hsieh B, Xiong Z, Halsey K, Choi JW, Tran TML, Pan I, Shi L-B, Wang D-C, Mei J: Performance of radiologists in differentiating COVID-19 from viral pneumonia on chest CT. Radiology 2020.

Islam MM, Karray F, Alhajj R, Zeng J. A review on deep learning techniques for the diagnosis of novel coronavirus (covid-19). IEEE Access. 2021;9:30551–72.

Asraf A, Islam MZ, Haque MR, Islam MM. Deep learning applications to combat novel coronavirus (COVID-19) pandemic. SN Comput Sci. 2020;1(6):1–7.

Rahman MM, Islam MM, Manik MMH, Islam MR, Al-Rakhami MS. Machine learning approaches for tackling novel coronavirus (COVID-19) Pandemic. SN Comput Sci. 2021;2(5):1–10.

Muhammad L, Islam MM, Usman SS, Ayon SI. Predictive data mining models for novel coronavirus (COVID-19) infected patients’ recovery. SN Comput Sci. 2020;1(4):1–7.

Zhao J, Zhang Y, He X, Xie P: COVID-CT-Dataset: a CT scan dataset about COVID-19. arXiv preprint https://arxiv.org/abs/2003.13865 2020.

Hall LO, Paul R, Goldgof DB, Goldgof GM: Finding covid-19 from chest x-rays using deep learning on a small dataset. arXiv preprint https://arxiv.org/abs/2004.02060 2020.

Li L, Qin L, Xu Z, Yin Y, Wang X, Kong B, Bai J, Lu Y, Fang Z, Song Q: Artificial intelligence distinguishes COVID-19 from community acquired pneumonia on chest CT. Radiology 2020.

Gozes O, Frid-Adar M, Greenspan H, Browning PD, Zhang H, Ji W, Bernheim A, Siegel E: Rapid ai development cycle for the coronavirus (covid-19) pandemic: Initial results for automated detection & patient monitoring using deep learning ct image analysis. arXiv preprint https://arxiv.org/abs/2003.05037 2020.

Harmon SA, Sanford TH, Xu S, Turkbey EB, Roth H, Xu Z, Yang D, Myronenko A, Anderson V, Amalou A. Artificial intelligence for the detection of COVID-19 pneumonia on chest CT using multinational datasets. Nat Commun. 2020;11(1):1–7.

Wang L, Wong A: COVID-Net: A Tailored Deep Convolutional Neural Network Design for Detection of COVID-19 Cases from Chest X-Ray Images. arXiv preprint https://arxiv.org/abs/2003.09871 2020.

Mahmud T, Rahman MA, Fattah SA: CovXNet: A multi-dilation convolutional neural network for automatic COVID-19 and other pneumonia detection from chest X-ray images with transferable multi-receptive feature optimization. Comput Biol Med 2020, 122:103869.

Shorfuzzaman M, Hossain MS: MetaCOVID: A Siamese neural network framework with contrastive loss for n-shot diagnosis of COVID-19 patients. Pattern Recogn 2021, 113:107700.

BIMCV-COVID19+. https://osf.io/nh7g8/. Accessed October 17, 2020.

Chest X-Ray Images. https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia. Accessed October 17, 2020.

Chest XRay_AI. https://miracle.grmh-gdl.cn/chest_xray_ai/. Accessed October 17, 2020.

Hospedales T, Antoniou A, Micaelli P, Storkey A: Meta-learning in neural networks: A survey. arXiv preprint https://arxiv.org/abs/2004.05439 2020.

LeCun Y, Bottou L, Bengio Y, Haffner P. Gradient-based learning applied to document recognition. Proc IEEE. 1998;86(11):2278–324.

Krizhevsky A, Sutskever I, Hinton G. AlexNet. Adv Neural Inf Process Syst. 2012;2012:1–9.

Islam MZ, Islam MM, Asraf A: A combined deep CNN-LSTM network for the detection of novel coronavirus (COVID-19) using X-ray images. Inf Med Unlock 2020, 20:100412.

Al-Rakhami MS, Islam MM, Islam MZ, Asraf A, Sodhro AH, Ding W. Diagnosis of COVID-19 from X-rays using combined CNN-RNN architecture with transfer learning. MedRxiv 2021:2020.2008. 2024.20181339.

Saha P, Sadi MS, Islam MM. EMCNet: Automated COVID-19 diagnosis from X-ray images using convolutional neural network and ensemble of machine learning classifiers. Inf Med Unlock. 2021;22:100505.

Acknowledgements

We thank Macau University of Science and Technology for their support.

Funding

This work is supported by the Macau Science and Technology Development Funds Grant 0056/2020/AFJ and 0158/2019/A3 from the Macau Special Administrative Region of the People’s Republic of China. The funding body played no role in the design of the study and collection, analysis, and interpretation of data and in writing the manuscript.

Author information

Authors and Affiliations

Contributions

R.M. and X.D. conducted experiments and completed the first draft of the article. S.L.X., Y.L. and S.L.L. revised and reviewed the final version of the article.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1:

Supplementary Table 1.

Additional file 2:

Supplementary Materials.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Miao, R., Dong, X., Xie, SL. et al. UMLF-COVID: an unsupervised meta-learning model specifically designed to identify X-ray images of COVID-19 patients. BMC Med Imaging 21, 174 (2021). https://doi.org/10.1186/s12880-021-00704-2

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s12880-021-00704-2