Abstract

Background

In recent years, a growing number of published studies have used routinely collected data (RCD), such as electronic medical records and administrative claims, to explore drug treatment effects, including effectiveness and safety. Abstracts of such studies are widely read by busy clinicians and policy-makers and are important for indexing by literature databases. If key details are not clearly presented, abstracts may mislead decisions or indexing. We therefore conducted a cross-sectional survey to systematically examine how the abstracts of such studies were reported.

Methods

We searched PubMed to identify all observational studies published in 2018 that used RCD for assessing drug treatment effects. Teams of methods-trained investigators collected data from eligible studies using pilot-tested, standardized forms that were developed and expanded from “The reporting of studies conducted using observational routinely collected health data statement for pharmacoepidemiology” (RECORD-PE). We used descriptive analyses to examine how authors reported data source, study design, data analysis, and interpretation of findings.

Results

A total of 222 studies were included, of which 118 (53.2%) reported type of database used, 17 (7.7%) clearly reported database linkage, and 140 (63.1%) reported coverage of data source. Only 44 (19.8%) studies stated a predefined hypothesis, 127 (57.2%) reported study design, 140 (63.1%) reported statistical models used, 33 (14.9%) mentioned sensitivity analyses, and 39 (17.6%) made a strong claim about treatment effect. Of the 183 studies that reported relative risk, 142 (77.6%) reported adjusted estimates. Studies published in top 5 general medicine journals were more likely to report the name of data source (94.7% vs. 67.0%) and study design (100% vs. 53.2%) than those in other journals.

Conclusions

The under-reporting of key methodological features in abstracts of RCD studies was common, which would substantially compromise the indexing of this type of literature and prevent the effective use of study findings. Substantial efforts to improve the reporting of abstracts in these studies are highly warranted.

Background

In recent years, routinely collected health data (RCD), such as electronic healthcare records and administrative claims, have been commonly used for exploring drug treatment effects [1,2,3]. However, such studies are often complex, not only in the use of data (e.g., epidemiology design and statistical analysis) but also reporting of methodological details and study findings. To enhance transparent reporting of studies using RCD, the REporting of studies Conducted using Observational Routinely collected health Data (RECORD) statement was issued in 2015 [4]. Subsequently, its extension to pharmacoepidemiology (RECORD-PE) was released [5].

In the reporting of studies using RCD, abstracts are often the first and probably the primary piece of information that clinicians and policy makers read, which could have a profound impact on their subsequent use of evidence [4]. Sufficient reporting would improve the assessment of study validity and results, and facilitate appropriate interpretation of study findings, thus achieving judicious use of the evidence [6,7,8]. One additional issue about such studies is how the information about RCD databases can be effectively indexed by literature databases (e.g., PubMed) to facilitate searching; this issue has been largely ignored, which has compromised the identification of such studies.

Nevertheless, abstracts usually have strict word limits in the vast majority of journals, which often makes it highly challenging to adequately present important details. This is particularly the case for the studies using RCD, since such studies are inherently more complex in the methodological details and resulting findings than the classical well-established randomized controlled trials. Earlier studies found that the reporting of abstracts was often suboptimal [7, 9,10,11]. For instance, a survey of 124 studies using RCD—published in 2012—found that 62.9% of studies did not clearly describe study design in the abstract [11]. Another survey involving 25 studies suggested that only 44.0% of studies clearly reported data source [7]. However, these studies either included a relatively small sample size, were outdated, or did not focus on studies about drug effects. In addition, several important issues, such as how the investigators claimed treatment effect [12, 13], were not investigated before. Therefore, we conducted a cross-sectional survey of published RCD studies exploring drug treatment effects to investigate their reporting of titles or abstracts.

Methods

Eligibility criteria

We included studies that explicitly used RCD and a comparative study design (e.g., cohort study or case-control study) to explore drug treatment effects, including effectiveness and/or safety. RCD were defined as data generated from routine care without a priori research purposes, such as electronic medical records, administrative claims data or insurance data. We excluded a study if we were unable to determine whether RCD were used. We also excluded studies that involved primary data collection for a research purpose.

Search strategy

This study is part of a major research project addressing reporting and methodological issues about studies using RCD. Our major research project was conceptualized in early 2019. We searched PubMed to identify studies using RCD to explore treatment effects, published in 2018 (search date September 18, 2019). We used terms related to routinely collected data, including “administrative claims data”, “routinely collected data” and “datalink”. We also integrated the search strategy for “electronic health records”, which was developed by the National Library of Medicine [14] and was peer-reviewed by an information specialist [11, 15], into our search. The details of the search strategy are presented in Additional file 1. The search was restricted to English language.

Sample size and selection process

The sample size for the major project was calculated based on the number of factors that were potentially associated with study quality, measured as a continuous variable. These factors were selected according to group discussion and previously published studies [16,17,18]. Seven characteristics with eleven categories were taken into consideration as independent variables: whether the journal endorses RECORD (yes vs. no), the type of journal (top 5 general medicine journals vs. other journals), the source of funding (any funding from for-profit organizations vs. funding exclusively from government or nonprofit organizations vs. no funding/not reported), type of data sources (EMR/EHR vs. claims vs. both), sample size (≤1000 vs. 1000–5000 vs. ≥5000), the type of outcome (exclusively safety outcome vs. exclusively effectiveness outcome vs. both safety and effectiveness outcome) and significance of the primary outcome (yes vs. no). Twenty studies per category were planned to provide sufficient observations and to avoid overfitting, resulting in a sample of 220 [19].
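The arithmetic behind the planned sample of 220 can be sketched as follows. The text does not state how the "eleven categories" were counted; this sketch assumes each factor contributes its number of levels minus one reference level, which reproduces the figure of eleven. The dictionary keys and function name are illustrative only.

```python
# Number of levels for each of the seven characteristics listed in the text.
factor_levels = {
    "journal_endorses_record": 2,      # yes vs. no
    "journal_type": 2,                 # top 5 general medicine vs. other
    "funding_source": 3,               # for-profit vs. government/nonprofit vs. none/not reported
    "data_source_type": 3,             # EMR/EHR vs. claims vs. both
    "sample_size_band": 3,             # three size bands
    "outcome_type": 3,                 # safety vs. effectiveness vs. both
    "primary_outcome_significant": 2,  # yes vs. no
}
STUDIES_PER_CATEGORY = 20  # stated in the text


def planned_sample_size(levels, per_category):
    """Return (number of categories, planned sample size).

    Assumption: one reference level is dropped per factor, so a factor
    with k levels contributes k - 1 categories.
    """
    categories = sum(k - 1 for k in levels.values())
    return categories, categories * per_category


categories, total = planned_sample_size(factor_levels, STUDIES_PER_CATEGORY)
print(categories, total)  # 11 220
```

Under this reading, the seven characteristics yield eleven categories, and twenty studies per category gives the planned 220.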

We stratified journals into top 5 general medicine journals and other journals according to the 2018 impact factor from the Institute for Scientific Information (ISI) Web of Knowledge Journal Citation Reports. On this basis, the top 5 general medicine journals were the New England Journal of Medicine (NEJM), The Lancet, Journal of the American Medical Association (JAMA), British Medical Journal (BMJ), and JAMA Internal Medicine. We included all studies published in top 5 general medicine journals. With regard to studies published in other journals, we randomly sampled 1000 of the searched reports at a time and screened their titles, abstracts and full texts for eligibility. We repeated the random sampling process until reaching the planned sample size of 220.
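The batch-wise sampling described above can be sketched as a simple loop. This is an illustration under stated assumptions, not the authors' procedure: it assumes sampling without replacement, keeps every eligible report found in the final batch, and replaces human screening with a hypothetical `is_eligible` callback. The toy eligibility rule and the target of 201 are placeholders.

```python
import random


def sample_until_target(report_ids, is_eligible, target, batch_size=1000, seed=0):
    """Screen random batches of reports until `target` eligible ones are found.

    Assumptions (not stated in the text): batches are drawn without
    replacement, and all eligible reports in the last batch are kept.
    """
    rng = random.Random(seed)
    remaining = list(report_ids)
    rng.shuffle(remaining)  # random order, so consecutive slices are random batches
    included, screened = [], 0
    while remaining and len(included) < target:
        batch, remaining = remaining[:batch_size], remaining[batch_size:]
        screened += len(batch)
        included.extend(r for r in batch if is_eligible(r))
    return included, screened


def toy_rule(report_id):
    # Hypothetical stand-in for title, abstract and full-text screening.
    return report_id % 50 == 0


included, screened = sample_until_target(range(23849), toy_rule, target=201)
print(len(included), screened)
```

Because whole batches are screened before the stopping rule is checked, the final count can exceed the target slightly, which is consistent with the 222 studies eventually included against a planned 220.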

Two teams of paired, method-trained investigators (ML, WW, QH, MW) performed title/abstract screening in duplicate and independently. Subsequently, all potentially eligible full texts were screened by the two teams independently. We designed a Microsoft Access database, in which the screening forms and citation list were compiled, to perform the study screening. Decisions on inclusion or exclusion were entered into this database by investigators. Discrepancies were addressed through discussion or adjudication by a third reviewer (XS).

Information extraction

We evaluated the reporting of titles or abstracts based on the RECORD-PE checklist [5]. The RECORD-PE checklist contains five items for title and abstract reporting: the use of common study design terms; an informative and balanced summary of the research; the data source types and names; the linkage between databases; and the geographical region and timeframe. On the basis of the checklist, we developed a structured, pilot-tested data extraction form to document whether the following items were reported: name of database; explicit statement of data source (i.e., healthcare, administrative, insurance, claims, primary care, secondary care, hospital); data coverage (i.e., single center, multiple or regional centers, national or international); geographic region where the data came from; number of participants; follow-up duration; statistical methods (e.g., Cox proportional hazards model); effect estimates (e.g., absolute risk, relative risk, confidence intervals or P-values, crude or adjusted estimates); mention of sensitivity analysis; and mention of subgroup analysis.

In addition, we added new items deemed important specifically for RCD studies, through reviewing existing guidance documents [20,21,22,23] and brainstorming. We convened a group of five experts in pharmacoepidemiology, routinely collected health data research, and clinical epidemiology to advise on the importance and appropriateness of these items. Three items were finally included after multiple teleconference meetings: 1) whether specific wording was used to indicate the direction of effect, 2) whether a new user design was mainly considered, and 3) the claim about treatment effect (i.e., strong, moderate, weak). Using a pre-specified rule, we judged the strength of claim according to the statement authors made about the primary outcome in the conclusion of an abstract [24].

To ensure the quality of study screening and data abstraction, the data collection forms were pilot-tested and standardized. A sample of 20 studies was extracted and assessed by reviewers (ML, WW, QH, MW) to test the operationalization of the items and improve detailed extraction instructions. For challenging items, such as the claim of effect, 10% of the included full texts were randomly sampled for a calibration exercise to ensure consistency among reviewers. After the calibration exercises, we achieved good inter-reviewer agreement (kappa > 0.8).
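For readers unfamiliar with the agreement statistic, the following is a minimal sketch of an unweighted Cohen's kappa for two reviewers. The text does not specify which kappa variant was used, so this choice is an assumption, and the ratings below are invented purely for illustration.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Unweighted Cohen's kappa for two raters scoring the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Chance agreement: product of the raters' marginal label proportions.
    count_a, count_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical claim-strength ratings for ten abstracts (not study data).
reviewer_1 = ["strong", "moderate", "moderate", "weak", "moderate",
              "strong", "weak", "moderate", "moderate", "weak"]
reviewer_2 = ["strong", "moderate", "moderate", "weak", "weak",
              "strong", "weak", "moderate", "moderate", "weak"]

kappa = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 3))  # 0.844
```

In this toy example the reviewers disagree on one of ten abstracts, giving observed agreement of 0.90, chance agreement of 0.36, and a kappa above the 0.8 threshold mentioned in the text.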

Data analysis

Descriptive analyses were conducted to evaluate the characteristics of the included studies. We used descriptive analysis to explore the reporting characteristics of the data source, study conduct (including design, analysis and results) and interpretation in the titles or abstracts. Categorical variables were presented as numbers and percentages. We also examined whether these characteristics differed by journal type (top 5 general medicine journals versus other journals).

Continuous variables were presented as the mean and standard deviation (SD) when normally distributed or otherwise the median and interquartile range (IQR). We used Stata/SE (version 14.0) for data analysis.
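The "number (percentage)" presentation can be sketched with a small helper that makes the denominator explicit. The helper name is illustrative, and the analysis itself was run in Stata; the counts are taken from the abstract, where most percentages use all 222 included studies but the adjusted-estimate figure uses the 183 studies that reported relative risk.

```python
def n_pct(count, denominator, digits=1):
    """Format a count as 'n (p%)' against an explicit denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return f"{count} ({100 * count / denominator:.{digits}f}%)"


TOTAL_STUDIES = 222           # all included studies
REPORTED_RELATIVE_RISK = 183  # subset used for the adjusted-estimate percentage

print(n_pct(118, TOTAL_STUDIES))           # 118 (53.2%)
print(n_pct(142, REPORTED_RELATIVE_RISK))  # 142 (77.6%)
```

Stating the denominator alongside each percentage avoids ambiguity when a figure refers to a subset rather than the full sample.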

Results

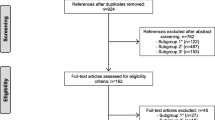

Our search yielded 23,849 reports. After full-text screening, 19 studies published in top 5 general medicine journals and 203 studies in other journals were finally included, for a total of 222 studies (Fig. 1). Of the included studies, 35 (15.8%) were published in journals endorsing the RECORD Statement and 6 (2.7%) reported that they endorsed the RECORD Statement. Among included studies, 40 (18.0%) involved patients with endocrinologic disease, 40 (18.0%) involved patients with cardiovascular disease, 18 (8.1%) involved patients with cancers and 14 (6.3%) involved patients with mental health conditions. The general characteristics are displayed in Supplementary Table 1.

Reporting of data source

Of the included studies, 17 (7.7%) clearly specified that they applied data linkage, and 118 (53.2%) reported type of databases used (Table 1); 154 (69.4%) reported name of database. However, 57 studies (25.7%) did not report any information about type of data source. Database coverage was reported in 140 (63.1%) studies, of which 72 (51.4%) used national data sources and 54 (38.6%) studies used multiple or regional data sources; 152 (68.5%) studies reported the country of origin, of which 46 (30.3%) studies used data sources from Taiwan, China, 34 (22.4%) from the US, and 21 (13.8%) from the UK.

Reporting of study designs, statistical analyses and results

In total, 100 (45.1%) studies clearly reported the comparator in their conclusion; 127 (57.2%) studies reported study design, of which 96 (75.6%) were cohort study designs, 14 (11.0%) case-control designs and 16 (12.6%) nested case-control designs; 57 (25.7%) reported the use of new user design, and 79 (35.6%) reported follow-up duration; 140 (63.1%) reported statistical models used.

A total of 113 (50.9%) studies reported absolute risk, of which 48 (42.5%) reported crude absolute risk and 9 (8.0%) reported adjusted absolute risk. Among 183 (82.4%) studies that reported relative risk, 142 (77.6%) reported adjusted estimates; 33 (14.9%) reported sensitivity analyses, of which 28 (84.9%) reported no significant change of findings; 33 (14.9%) reported subgroup analyses (Table 2).

Interpretation of findings

Of the included studies, only 44 (19.8%) clearly stated a predefined hypothesis, and 61 studies (27.5%) yielded negative findings. Among studies with a predefined hypothesis, 8 (18.6%) reported inconsistent results with their prespecified hypotheses. In the reporting of primary outcome, 39 (17.6%) had a strong treatment effect claim, 143 (64.4%) conveyed a moderate claim and 40 (18.0%) conveyed a weak claim (Table 3).

Comparisons between top 5 general medicine and other journals

Studies published in top 5 general medicine journals were more likely to include more participants (median: 154,162 vs. 15,597), involve methodologists (79.0% vs. 56.2%), and receive nonprofit grants (84.2% vs. 48.3%) than those published in other journals (Supplementary Table 1).

Compared to studies in lower impact journals, those in top 5 general medicine journals were more likely to report name of database (94.7% vs. 67.0%), study design (100% vs. 53.2%) and follow-up time (79.0% vs. 31.5%), and apply a new user design (84.2% vs. 20.2%). Comparable rates were found in the reporting of type of data source (52.6% vs. 53.2%) and statistical models (47.4% vs. 64.5%).
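As a consistency check, the subgroup percentages above can be converted back into implied counts and compared with the overall counts given earlier in the Results. The implied counts are back-calculated here by rounding and are not reported by the authors; only the group sizes (19 and 203), the percentages, and the overall counts come from the text.

```python
N_TOP5, N_OTHER = 19, 203  # group sizes reported in the Results

# item: (% in top 5 journals, % in other journals, overall count in Results)
reported = {
    "name of database": (94.7, 67.0, 154),
    "study design": (100.0, 53.2, 127),
    "follow-up time": (79.0, 31.5, 79),
    "new user design": (84.2, 20.2, 57),
    "type of data source": (52.6, 53.2, 118),
    "statistical models": (47.4, 64.5, 140),
}


def implied_count(pct, n):
    """Back-calculate a whole-number count from a rounded percentage."""
    return round(pct / 100 * n)


checks = {}
for item, (pct_top, pct_other, overall) in reported.items():
    k_top = implied_count(pct_top, N_TOP5)
    k_other = implied_count(pct_other, N_OTHER)
    # The two implied subgroup counts should add up to the overall count.
    checks[item] = (k_top, k_other, k_top + k_other == overall)

for item, (k_top, k_other, consistent) in checks.items():
    print(f"{item}: {k_top}/{N_TOP5} vs {k_other}/{N_OTHER}, consistent={consistent}")
```

Under this rounding assumption, each pair of implied counts sums to the corresponding overall count, for example 9 of 19 and 131 of 203 for statistical models, matching the 140 studies reported overall.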

Discussion

Main findings and interpretations

In recent years, studies that used RCD for exploring drug treatment effects have rapidly increased, thanks to the increasing availability of these data and the rapid development of complex epidemiological and statistical methods. However, their special methodological features, such as the use of existing healthcare databases, complex pharmacoepidemiological designs and statistical methods, often make these studies differ from traditional observational studies. Clear reporting of these methodological details in the abstracts has important implications for these studies to be effectively indexed in literature databases and to be appropriately used by clinicians and policy makers.

Our study found important limitations in the reporting of abstracts among studies that used RCD for exploring treatment effects. In identifying themselves as database studies, nearly half of the studies did not clearly specify type of data sources, and a quarter did not report any detailed information regarding data sources. In the reporting of important methodological features, we also found that only 57.2% of studies reported study designs, 63.1% reported statistical models, and 77.9% reported time frame of the research. Only 19.8% clearly reported predefined hypotheses.

We also found that reporting of abstracts was generally better among top 5 general medicine journals. However, such important items as type of data source and statistical models were not well reported across all journals.

Our study strongly suggests the need to improve the reporting of abstracts among studies using RCD to examine drug treatment effects. In particular, the reporting of database information is critical in identifying such studies as database studies. Both authors and journal editors may consider adding a suffix to the title that identifies the work as a database study. In addition, authors should consider concisely describing the type of database and the data source to enable better capture of key methodological features of the study.

Although abstracts are usually highly condensed, both authors and journal editors should consider including minimal requirements about the reporting of epidemiological designs and statistical methods and should always consider reporting adjusted effect estimates. Although only a relatively small proportion of studies made a strong claim of treatment effects in the abstracts, such claims may sometimes mislead decisions, as an abstract may not be able to convey sufficient information for readers to judge whether the claim is appropriate. At the least, authors should be highly cautious when claiming treatment effects in abstracts. In summary, existing reporting guidelines, such as RECORD and RECORD-PE, are important to improve reporting of these studies [4, 5, 17]. Authors and journal editors should work together to adhere to these requirements.

Comparison with previous studies

Similar to our findings, previously published studies showed substantial reporting deficits in several important items among RCD studies [7, 11], including research questions, types of data sources, geographic regions and time frames, study designs, and statistical models. For instance, a study including 25 RCD studies showed that only 44.0% of studies mentioned type of database in the abstract, and only 56.0% reported geographic region and time frame [7]. Another study also found that over a quarter of the studies did not contain clear wording regarding the type of data and 62.9% did not adequately report study design in the abstract [11]. Although a relatively high proportion of studies (69.4%) reported the name of the database in this study, naming the database cannot replace reporting the type of data source for article indexing [25].

Our findings showed that top journals were associated with better general reporting, consistent with previous studies [11, 26]. For instance, a survey involving 124 RCD studies found a significant association between the journal impact factor and reporting domains such as statistical analyses, outcomes, and the coding and classification of participants [11]. Nevertheless, the reporting in some domains remains suboptimal regardless of impact factors [10, 11]. Only 47.4% of studies published in top 5 general medicine journals clearly reported a statistical model in our study, lower than that in other journals (64.5%).

Strengths and limitations

Our study has several strengths. First, we identified a large number of representative RCD studies exploring drug treatment effects through a systematic search. Second, we used standardized and pilot-tested forms for information extraction, and calibration exercises for reviewers to improve the extraction accuracy.

Our study has some limitations. First, there was a lack of accepted MeSH subject headings for RCD. By using search terms related to RCD, we may have missed studies. Nevertheless, the search strategy was developed together with information specialists, with the aim of ensuring completeness of searching. Second, our sample was drawn from English publications in PubMed-indexed journals in 2018, and only 19 studies published in top 5 general medicine journals were included. Therefore, our findings may not be generalizable to other years and journals outside the sample. They also may not be representative of all papers published in top 5 general medicine journals. Nevertheless, the PubMed database included a wide spectrum of journals. Moreover, the reporting of such studies was unlikely to have changed significantly in a relatively short time [11]. Third, the judgment on some of the items may vary across investigators. To ensure consistency of the judgment, we developed detailed instructions for these items and performed calibration exercises.

Conclusion

Our work revealed that under-reporting of important methodological characteristics was common in abstracts of studies that used RCD for exploring drug treatment effects. Clear reporting of research hypotheses, information about the database, epidemiological designs, statistical methods, and key findings is critical. In addition, authors should be cautious when claiming treatment effects in an abstract, since the findings from studies using RCD may often be susceptible to bias.

Availability of data and materials

The datasets analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- RCD: Routinely Collected Data
- RECORD: REporting of studies Conducted using Observational Routinely collected health Data
- RECORD-PE: RECORD for Pharmacoepidemiology
- EMR: Electronic Medical Record
- EHR: Electronic Health Record
- ISI: Institute for Scientific Information
- NEJM: New England Journal of Medicine
- JAMA: Journal of the American Medical Association
- BMJ: British Medical Journal

References

McMahon AW, Dal Pan G. Assessing drug safety in children - the role of real-world data. N Engl J Med. 2018;378(23):2155–7.

Corrigan-Curay J, Sacks L, Woodcock J. Real-world evidence and real-world data for evaluating drug safety and effectiveness. JAMA. 2018;320(9):867–8.

Berger ML, Sox H, Willke RJ, Brixner DL, Eichler HG, Goettsch W, et al. Good practices for real-world data studies of treatment and/or comparative effectiveness: recommendations from the joint ISPOR-ISPE special task force on real-world evidence in health care decision making. Pharmacoepidemiol Drug Saf. 2017;26(9):1033–9.

Benchimol EI, Smeeth L, Guttmann A, Harron K, Moher D, Petersen I, et al. The REporting of studies Conducted using Observational Routinely-collected health Data (RECORD) statement. PLoS Med. 2015;12(10):e1001885.

Langan SM, Schmidt SA, Wing K, Ehrenstein V, Nicholls SG, Filion KB, et al. The reporting of studies conducted using observational routinely collected health data statement for pharmacoepidemiology (RECORD-PE). BMJ (Clinical research ed). 2018;363:k3532.

Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S, et al. Reducing waste from incomplete or unusable reports of biomedical research. Lancet. 2014;383(9913):267–76.

Nie X, Zhang Y, Wu Z, Jia L, Wang X, Langan SM, et al. Evaluation of reporting quality for observational studies using routinely collected health data in pharmacovigilance. Expert Opin Drug Saf. 2018;17(7):661–8.

Beller EM, Glasziou PP, Altman DG, Hopewell S, Bastian H, Chalmers I, et al. PRISMA for abstracts: reporting systematic reviews in journal and conference abstracts. PLoS Med. 2013;10(4):e1001419.

Penning de Vries BBL, van Smeden M, Rosendaal FR, Groenwold RHH. Title, abstract, and keyword searching resulted in poor recovery of articles in systematic reviews of epidemiologic practice. J Clin Epidemiol. 2020;121:55–61.

Berwanger O, Ribeiro RA, Finkelsztejn A, Watanabe M, Suzumura EA, Duncan BB, et al. The quality of reporting of trial abstracts is suboptimal: survey of major general medical journals. J Clin Epidemiol. 2009;62(4):387–92.

Hemkens LG, Benchimol EI, Langan SM, Briel M, Kasenda B, Januel JM, et al. The reporting of studies using routinely collected health data was often insufficient. J Clin Epidemiol. 2016;79:104–11.

Filion KB, Yu Y-H. Invited commentary: the prevalent new user design in pharmacoepidemiology: challenges and opportunities. Am J Epidemiol. 2021;190(7):1349–52.

Ray WA. Evaluating medication effects outside of clinical trials: new-user designs. Am J Epidemiol. 2003;158(9):915–20.

National Library of Medicine. MEDLINE / PubMed Search Strategy & Electronic Health Record Information Resources. https://www.nlm.nih.gov/services/queries/ehr_details.html. Accessed 10 Apr 2019.

Sampson M, McGowan J, Cogo E, Grimshaw J, Moher D, Lefebvre C. An evidence-based practice guideline for the peer review of electronic search strategies. J Clin Epidemiol. 2009;62(9):944–52.

Jin Y, Sanger N, Shams I, Luo C, Shahid H, Li G, et al. Does the medical literature remain inadequately described despite having reporting guidelines for 21 years? - a systematic review of reviews: an update. J Multidiscip Healthc. 2018;11:495–510.

Samaan Z, Mbuagbaw L, Kosa D, Borg Debono V, Dillenburg R, Zhang S, et al. A systematic scoping review of adherence to reporting guidelines in health care literature. J Multidiscip Healthc. 2013;6:169–88.

Turner L, Shamseer L, Altman DG, Weeks L, Peters J, Kober T, et al. Consolidated standards of reporting trials (CONSORT) and the completeness of reporting of randomised controlled trials (RCTs) published in medical journals. Cochrane Database Syst Rev. 2012;11:MR000030.

Heinze G, Wallisch C, Dunkler D. Variable selection - a review and recommendations for the practicing statistician. Biom J. 2018;60(3):431–49.

Berger ML, Mamdani M, Atkins D, Johnson ML. Good research practices for comparative effectiveness research: defining, reporting and interpreting nonrandomized studies of treatment effects using secondary data sources: the ISPOR Good Research Practices for Retrospective Database Analysis Task Force Report--Part I. Value Health. 2009;12(8):1044–52.

Cox E, Martin BC, Van Staa T, Garbe E, Siebert U, Johnson ML. Good research practices for comparative effectiveness research: approaches to mitigate bias and confounding in the design of nonrandomized studies of treatment effects using secondary data sources: the International Society for Pharmacoeconomics and Outcomes Research Good Research Practices for Retrospective Database Analysis Task Force Report--Part II. Value Health. 2009;12(8):1053–61.

Johnson ML, Crown W, Martin BC, Dormuth CR, Siebert U. Good research practices for comparative effectiveness research: analytic methods to improve causal inference from nonrandomized studies of treatment effects using secondary data sources: the ISPOR Good Research Practices for Retrospective Database Analysis Task Force Report--Part III. Value Health. 2009;12(8):1062–73.

AHRQ. Methods for Effective Health Care. In: Velentgas P, Dreyer NA, Nourjah P, Smith SR, Torchia MM, editors. Developing a Protocol for Observational Comparative Effectiveness Research: A User's Guide. Rockville: Agency for Healthcare Research and Quality (US); 2013.

Sun X, Briel M, Busse JW, Akl EA, You JJ, Mejza F, et al. Subgroup analysis of trials is rarely easy (SATIRE): a study protocol for a systematic review to characterize the analysis, reporting, and claim of subgroup effects in randomized trials. Trials. 2009;10:101.

Benchimol EI, Smeeth L, Guttmann A, Harron K, Moher D, Petersen I, et al. The REporting of studies Conducted using Observational Routinely-collected health Data (RECORD) statement. PLoS Med. 2015;12(10):e1001885.

Péron J, Pond GR, Gan HK, Chen EX, Almufti R, Maillet D, et al. Quality of reporting of modern randomized controlled trials in medical oncology: a systematic review. J Natl Cancer Inst. 2012;104(13):982–9.

Acknowledgements

The authors would like to thank Xiaoxia Peng (Clinical Epidemiology and Evidence-based Medicine, Capital Medical University), Xiaochen Shu (School of Public Health, Medical College of Soochow University), Guowei Li (Centre of Clinical Epidemiology and Methodology, Guangdong Second Provincial General Hospital), Pei Gao (School of Public Health, Peking University) and Zehuai Wen (The Second Clinical College, Guangzhou University of Chinese Medicine) for providing technical consultation.

Funding

This study was funded by the National Key R&D Program of China (Grant No. 2017YFC1700406 and 2017YFC1700400), the Sichuan Youth Science and Technology Innovation Research Team (Grant No. 2020JDTD0015), the 1·3·5 project for disciplines of excellence, West China Hospital, Sichuan University (Grant No. ZYYC08003), and the National Natural Science Foundation of China (Grant No. 72104155). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

XS, WW and ML conceived and planned the survey; ML, WW, QH and MW abstracted the information; ML planned and performed statistical analysis; XS, WW, ML, HL and GL interpreted the results; ML and WW took the lead in writing the manuscript; XS, LL, GL, HL, QH and MW provided critical feedback and helped shape the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1.

Search Strategies.

Additional file 2: Supplementary Table 1.

Basic characteristics of the analyzed electronic healthcare data study sample.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Liu, M., Wang, W., Wang, M. et al. Reporting of abstracts in studies that used routinely collected data for exploring drug treatment effects: a cross-sectional survey. BMC Med Res Methodol 22, 6 (2022). https://doi.org/10.1186/s12874-021-01482-9

DOI: https://doi.org/10.1186/s12874-021-01482-9