Abstract

Background

With the emergence of molecularly targeted agents and immunotherapies, the landscape of phase I trials in oncology has changed. Although these new therapeutic agents are likely to induce multiple low- or moderate-grade toxicities rather than dose-limiting toxicities (DLTs), most existing phase I trial designs account only for binary toxicity outcomes. Motivated by a pediatric phase I trial in solid tumors with a continuous outcome, we propose an adaptive generalized Bayesian optimal interval design with shrinkage boundaries, gBOINS, which accommodates continuous and toxicity-grade endpoints and treats the conventional binary endpoint as a special case.

Result

The proposed gBOINS design enjoys convergence properties: the induced interval shrinks to the toxicity target, and the recommended dose converges to the true maximum tolerated dose as the sample size increases.

Conclusion

The proposed gBOINS design is transparent and simple to implement. We show that it has the desirable finite-sample property of coherence and the large-sample property of consistency. Numerical studies show that the gBOINS design performs well and is comparable with or superior to the competing design.

Introduction

In oncology phase I trials, a main objective is to determine the maximum tolerated dose (MTD) or the recommended phase II dose (RP2D). Numerous methods have been proposed for phase I trials, and they can generally be classified into three classes: algorithm-based designs such as the 3+3 design [1], the accelerated titration design [2], and the biased coin design [3]; model-based designs such as the continual reassessment method (CRM) [4, 5] and its various extensions [6–8]; and the more recently developed model-assisted designs such as the Bayesian optimal interval (BOIN) design [9] and the Keyboard design [10]. All of these methods account only for a binary toxicity outcome (whether or not a patient experienced a dose-limiting toxicity, DLT), and thus may not be suitable for trials with multiple low- or moderate-grade toxicities, such as trials of molecularly targeted agents or immunotherapies [11–14]. To incorporate toxicity grades (see the National Cancer Institute Common Terminology Criteria) into dose-finding trials, one approach is to assign severity weights to each grade and type of toxicity and to combine the weights into a composite score, e.g., the total toxicity burden (TTB) [15], the toxicity burden score (TBS) [16], or the total toxicity profile (TTP) [17]. After appropriate transformations, these scores can be treated as normally distributed variables [14]. The other approach is to translate toxicity grades into numeric scores that represent their relative severity in units of DLT, the ‘equivalent toxicity score’ (ETS) [18], and to treat them as quasi-binary endpoints that take values between 0 and 1 and can be modelled by the quasi-Bernoulli likelihood [19].

To the best of our knowledge, very few phase I methods have been developed to account for different types of toxicity scores (e.g., binary, continuous, count) in a unified framework, the exceptions being the design of [20] and the gBOIN design of [14]. The design of [20] is algorithm-based, whereas gBOIN is a model-assisted design that generalizes the BOIN design of [9] to account for various toxicity grades. This paper extends gBOIN further. The gBOIN design assumes two fixed boundaries ϕ1 and ϕ2, which govern the dose transitions. Although gBOIN with fixed boundaries performed well in finite samples in the previous study [14], there is room to improve its performance by exploring its behavior with non-fixed boundaries. The rationale for studying non-fixed boundaries is straightforward: different boundaries are associated with different risks of making wrong dose allocations. In this article, we propose the gBOINS design, which generalizes gBOIN with two shrinkage boundaries \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\). These two boundaries are obtained from the theory of the uniformly most powerful Bayesian test [21]. The trial is guided by replacing the two fixed boundaries ϕ1 and ϕ2 in gBOIN with \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\), respectively. We show that, in contrast to the gBOIN design, which oscillates among the doses within the equivalence interval, the proposed gBOINS design has the ideal large-sample behavior of converging to one of the dose levels within the equivalence interval, because its decision boundaries shrink toward the target. This distinctive feature of gBOINS provides a theoretical foundation for, and guarantees, convergence to the MTD. Numerical studies also show that, for small sample sizes, gBOINS performs comparably or superior to the original gBOIN, and that, for large sample sizes, it improves substantially on gBOIN.

The remainder of the paper is organized as follows. In the “Method” section, after a brief introduction to the gBOIN design, we introduce the gBOINS design and its theoretical foundation and derive its properties. In the “Simulation” section, we compare the gBOINS design with the gBOIN design for various types of toxicity endpoints. In the “Conclusion” section, we conclude with a discussion.

Method

Introduction of gBOIN design

Assume there are J prespecified doses d1<⋯<dJ under investigation. Let y denote the toxicity outcome, which is binary or quasi-binary (e.g., DLT or ETS) or continuous (e.g., TTB, TBS or TTP). For the motivating trial, after an appropriate transformation, we take the AUC as a continuous endpoint and model it by a normal distribution. [14] adopted the binomial and the normal distributions for binary (or quasi-binary) and continuous endpoints, respectively. Define μ=E(y) and μj=E(y|dj). Given the dose dj, the distribution of y belongs to the exponential family,

\[f(y \mid \theta _{j}) = h(y)\exp \left \{\eta (\theta _{j})T(y) - A(\theta _{j})\right \}, \qquad (1)\]

where,

- θj=μj, η(θj)= log{μj/(1−μj)}, A(θj)=− log(1−μj), T(y)=y, and h(y)=1, if y follows a binomial distribution;

- θj=(μj,σ²), η(θj)=μj/σ², \(A(\theta _{j}) = \mu _{j}^{2}/(2\sigma ^{2})\), T(y)=y, and \(h(y) = \frac {1}{\sqrt {2\pi }\sigma }\exp \left \{-y^{2}/(2\sigma ^{2})\right \}\), if y follows a normal distribution.

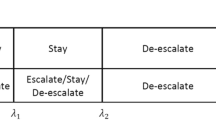

Let ϕ0 denote the target value of μ for dose finding. Specifically, for binary or quasi-binary toxicity endpoints, ϕ0 is the target DLT probability; for continuous endpoints, ϕ0 is the target value of the TTB, TBS or TTP. Assume that nj patients have been treated at dose level dj, and let \({\mathcal {D}}_{j} = (y_{1}, \cdots, y_{n_{j}})\) denote the observed toxicity data. Based on \({\mathcal {D}}_{j}\), the sample mean is \(\hat {\mu }_{j} = \sum _{i=1}^{n_{j}} y_{i} /n_{j}\). In an interval-based design, dose transition decisions are made by comparing \(\hat {\mu }_{j}\) with the decision boundaries λe(dj,nj,ϕ0) and λd(dj,nj,ϕ0). Specifically, if \(\hat {\mu }_{j} < \lambda _{e}(d_{j}, n_{j}, \phi _{0})\), escalate to the next higher dose level j+1; if \(\hat {\mu }_{j} > \lambda _{d}(d_{j}, n_{j}, \phi _{0})\), de-escalate to the next lower dose level j−1; otherwise, retain the current dose level j. The choice of the decision boundaries λe(dj,nj,ϕ0) and λd(dj,nj,ϕ0) is critical because these two parameters essentially determine the operating characteristics of the design. Denote the decisions of retainment, escalation and de-escalation (each relative to the current dose level) by \({\mathcal {R}}\), \({\mathcal {E}}\) and \({\mathcal {D}}\), respectively; let \(\overline {\mathcal {R}}\) denote the decisions complementary to \({\mathcal {R}}\) (i.e., \(\overline {\mathcal {R}}\) includes \({\mathcal {E}}\) and \({\mathcal {D}}\)), and let \(\overline {\mathcal {E}}\) and \(\overline {\mathcal {D}}\) denote the decisions complementary to \({\mathcal {E}}\) and \({\mathcal {D}}\), respectively. Following [9], to obtain decision boundaries that are optimal under a given criterion, the gBOIN design [14] considers three point hypotheses H0:μj=ϕ0, H1:μj=ϕ1 and H2:μj=ϕ2, and minimizes the probability of an incorrect decision α,

\[\alpha = \mathrm {P}(H_{0})\mathrm {P}(\overline {\mathcal {R}} \mid H_{0}) + \mathrm {P}(H_{1})\mathrm {P}(\overline {\mathcal {E}} \mid H_{1}) + \mathrm {P}(H_{2})\mathrm {P}(\overline {\mathcal {D}} \mid H_{2}), \qquad (2)\]

where ϕ1 is a value deemed subtherapeutic, such that dose escalation is warranted, and ϕ2 is a value deemed overly toxic, such that dose de-escalation is required. Note that H0 indicates that the current dose is the MTD and we should retain it for the next cohort of patients; H1 indicates that the current dose is below the MTD and we should escalate the dose; and H2 indicates that the current dose is overly toxic and we should de-escalate the dose. Thus, the correct decisions under H0, H1 and H2 are retainment, escalation and de-escalation, respectively. Correspondingly, the incorrect decisions under H0, H1 and H2 are \(\overline {\mathcal {R}}\), \(\overline {\mathcal {E}}\) and \(\overline {\mathcal {D}}\), respectively. For example, under H0 (i.e., the current dose is the target), the correct decision is to retain the current dose (i.e., \({\mathcal {R}}\)), and the incorrect decisions are dose escalation and de-escalation (i.e., \({\mathcal {E}}\) and \({\mathcal {D}}\)). Taking a noninformative prior, i.e., P(H0)=P(H1)=P(H2)=1/3, and minimizing the incorrect decision probability α in Eq. (2), the decision boundaries are obtained as (details can be found in [14]),

\[\lambda _{e}^{*} = \frac {A(\vartheta _{1}) - A(\vartheta _{0})}{\eta (\vartheta _{1}) - \eta (\vartheta _{0})}, \qquad \lambda _{d}^{*} = \frac {A(\vartheta _{2}) - A(\vartheta _{0})}{\eta (\vartheta _{2}) - \eta (\vartheta _{0})},\]

with 𝜗k denoting the value of θj under Hk, k=0,1,2.

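Specifically, when y follows a Bernoulli or quasi-Bernoulli distribution, we have 𝜗k=ϕk, A(𝜗k)=− log(1−ϕk) and η(𝜗k)= log{ϕk/(1−ϕk)}. Then,

\[\lambda _{e}^{*} = \frac {\log \{(1-\phi _{1})/(1-\phi _{0})\}}{\log [\phi _{0}(1-\phi _{1})/\{\phi _{1}(1-\phi _{0})\}]}, \qquad \lambda _{d}^{*} = \frac {\log \{(1-\phi _{0})/(1-\phi _{2})\}}{\log [\phi _{2}(1-\phi _{0})/\{\phi _{0}(1-\phi _{2})\}]},\]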

which are exactly the boundaries of the original BOIN design [9]. When y follows a normal distribution, we have \(\vartheta _{k}=\left (\phi _{k}, \sigma _{j}^{2}\right)\), \(A\left (\vartheta _{k}\right) = \phi _{k}^{2}/\left (2\sigma _{j}^{2}\right)\) and \(\eta \left (\vartheta _{k}\right) = \phi _{k}/\sigma _{j}^{2}\). Then,

\[\lambda _{e}^{*} = \frac {\phi _{0}+\phi _{1}}{2}, \qquad \lambda _{d}^{*} = \frac {\phi _{0}+\phi _{2}}{2}.\]

Based on the above decision boundaries, the gBOIN design is summarized as follows:

(a) Patients in the first cohort are treated at the lowest dose level or at a prespecified dose level.

(b) At the current dose level j, assign a dose to the next cohort of patients as follows:

- if \({\hat \mu _{j}} \le {\lambda _{e}^{*}}\), escalate the dose level to j+1;

- if \({\hat \mu _{j}} \ge {\lambda _{d}^{*}}\), de-escalate the dose level to j−1; and

- otherwise, i.e., \({\lambda _{e}^{*}} < {\hat \mu _{j}} < {\lambda _{d}^{*}}\), retain the same dose level j.

(c) This process is continued until the maximum sample size is reached or the trial is terminated because of excessive toxicities.

Notably, the optimal decision boundaries \(\left ({\lambda _{e}^{*}}, {\lambda _{d}^{*}}\right)\) are free of dj and nj, which means that the same pair of boundaries is used throughout the trial, regardless of which dose is the current dose or how many patients have been treated at it.
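The fixed-boundary rule can be sketched in a few lines of Python. This is a minimal illustration rather than the reference implementation of gBOIN: the boundary expressions follow from the exponential-family quantities A and η defined above, while the choices ϕ1=0.6ϕ0 and ϕ2=1.4ϕ0 and all function names are assumptions made only for this example.

```python
import math


def gboin_boundaries_binary(phi0, phi1, phi2):
    """Fixed boundaries for a binary or quasi-binary endpoint.

    Obtained as {A(phi_k) - A(phi0)} / {eta(phi_k) - eta(phi0)} with
    A(phi) = -log(1 - phi) and eta(phi) = log{phi / (1 - phi)}.
    """
    lam_e = math.log((1 - phi1) / (1 - phi0)) / math.log(
        phi0 * (1 - phi1) / (phi1 * (1 - phi0)))
    lam_d = math.log((1 - phi0) / (1 - phi2)) / math.log(
        phi2 * (1 - phi0) / (phi0 * (1 - phi2)))
    return lam_e, lam_d


def gboin_boundaries_normal(phi0, phi1, phi2):
    """Fixed boundaries for a normal endpoint: midpoints, free of sigma."""
    return (phi0 + phi1) / 2, (phi0 + phi2) / 2


def decide(mu_hat, lam_e, lam_d):
    """Dose-transition decision at the current dose (step (b) above)."""
    if mu_hat <= lam_e:
        return "escalate"
    if mu_hat >= lam_d:
        return "de-escalate"
    return "retain"


# Assumed alternatives for illustration only: phi1 = 0.6*phi0, phi2 = 1.4*phi0.
PHI0 = 0.3
LAM_E, LAM_D = gboin_boundaries_binary(PHI0, 0.6 * PHI0, 1.4 * PHI0)
```

With these assumed alternatives the interval is roughly (0.236, 0.358) for ϕ0=0.3, so one DLT among three patients leads to retaining the dose. The sketch does not handle the lowest and highest dose levels, where escalation or de-escalation is not possible.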

Adaptive gBOIN design

Extensive simulation studies have shown that gBOIN is transparent and simple to implement, and that it performs comparably or superior to more complicated model-based designs. As described in the “Introduction” section, non-fixed boundaries allow the flexibility to penalize the misallocation of patients to overly toxic doses. To accommodate non-fixed boundaries, we first reformulate the above three hypotheses as follows,

and

In the Bayesian paradigm, the Bayes factor in favor of the alternative hypothesis H1 against a fixed null hypothesis H0 is defined as,

and the null hypothesis H0 is rejected if BF10(Dj) exceeds a prespecified threshold γ1. Similarly, the Bayes factor in favor of the alternative hypothesis H2 against a fixed null hypothesis H0 is defined as,

and the null hypothesis H0 is rejected if BF20(Dj) exceeds a prespecified threshold γ2. Note that, to penalize overly toxic allocations more heavily, the values of γ1 and γ2 should differ, and presumably γ1 should be greater than γ2, since a smaller γ2 makes de-escalation decisions easier to trigger when excessive toxicity occurs. Given the prior odds P(Hk)/P(H0)=1 and the threshold γk (k=1,2), we can determine the alternative hypothesis that maximizes the probability that the Bayes factor exceeds the specified threshold γk. In other words, we choose the value of \(\phi _{k}^{*}\), k=1,2 (notation introduced in the “Introduction” section), to maximize P(BFk0(Dj)>γk).

By Lemma 1 of [21], \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\) can be obtained by,

respectively, where \(g_{\gamma _{k}}(\mu _{j}, \phi _{0}) = \frac {\log (\gamma _{k})+n_{j}\{A(\theta _{j})-A(\theta _{0})\}}{\eta (\theta _{j})-\eta (\theta _{0})}\), k=1,2.

Specifically, for the binomial distribution, \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\) are given by,

Clearly, the values of \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\) depend on the target ϕ0, the sample size nj and the threshold γk, k=1,2. Although closed forms are not available, they can be obtained by numerical optimization. For the normal distribution, \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\) are given by,

Note that, for the normal distribution, the values of \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\) depend on σ. If σ is unknown, we can replace it with its sample estimate \(\hat {\sigma } = \sqrt {\left \{\sum _{i=1}^{n_{j}} \left (y_{i} - \hat {\mu }_{j}\right)^{2}\right \}/n_{j}}\); alternatively, we can place an inverse gamma prior with shape parameter α0 and rate parameter β0 on it and replace σ with its posterior mean \(\left (2\beta _{0} + \sum _{i=1}^{n_{j}} \left (y_{i} - {\mu }_{j}\right)^{2}\right)/ \left (n_{j} + \alpha _{0}\right)\), with μj replaced by \(\hat {\mu }_{j}\).
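The optimization of \(g_{\gamma _{k}}\) can be sketched numerically as follows. This is an illustration under stated assumptions, not the authors' code: it assumes that \(\phi _{1}^{*}\) maximizes \(g_{\gamma _{1}}\) over values below ϕ0 and that \(\phi _{2}^{*}\) minimizes \(g_{\gamma _{2}}\) over values above ϕ0 (one reading of Lemma 1 of [21]), it uses a plain grid search in place of a dedicated optimizer, and the normal-case expression is the stationary point of \(g_{\gamma _{k}}\) obtained from the normal A and η given earlier. The function names and the threshold values in the example are hypothetical.

```python
import math


def g_binomial(phi, phi0, n, log_gamma):
    """g_gamma(phi, phi0) for a Bernoulli endpoint, using
    A(phi) = -log(1 - phi) and eta(phi) = log{phi / (1 - phi)}."""
    num = log_gamma + n * (math.log(1 - phi0) - math.log(1 - phi))
    den = math.log(phi / (1 - phi)) - math.log(phi0 / (1 - phi0))
    return num / den


def phi_star_binomial(phi0, n, log_gamma1, log_gamma2, grid=20000):
    """Grid search for (phi1*, phi2*); the argmax/argmin orientation is assumed."""
    lower = [phi0 * i / grid for i in range(1, grid)]
    upper = [phi0 + (1 - phi0) * i / grid for i in range(1, grid)]
    phi1 = max(lower, key=lambda p: g_binomial(p, phi0, n, log_gamma1))
    phi2 = min(upper, key=lambda p: g_binomial(p, phi0, n, log_gamma2))
    return phi1, phi2


def phi_star_normal(phi0, sigma, n, log_gamma1, log_gamma2):
    """Stationary points of g_gamma for a normal endpoint (known sigma)."""
    return (phi0 - sigma * math.sqrt(2 * log_gamma1 / n),
            phi0 + sigma * math.sqrt(2 * log_gamma2 / n))


# Hypothetical thresholds: log(gamma) = c * sqrt(n) with c = log(1.1) / 3.
C = math.log(1.1) / 3
P1_30, P2_30 = phi_star_binomial(0.3, 30, C * 30 ** 0.5, C * 30 ** 0.5)
P1_300, P2_300 = phi_star_binomial(0.3, 300, C * 300 ** 0.5, C * 300 ** 0.5)
```

In this example the pair \((\phi _{1}^{*}, \phi _{2}^{*})\) brackets ϕ0 and moves closer to it as nj grows from 30 to 300, which is the shrinkage behavior the design relies on.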

Replacing ϕk in \(\lambda _{e}^{*}\) and \(\lambda _{d}^{*}\) with \(\phi _{k}^{*}\), k=1,2, we obtain the adaptive shrinkage decision boundaries \(\lambda _{e}^{*}(n_{j})\) and \(\lambda _{d}^{*}(n_{j})\). Note that, for a standard binary toxicity endpoint, if we take the same value for both γk, k=1,2, the boundaries coincide with those of the UMPBI design [22]. Based on Lemma 2 in [21], we have the following double-shrinkage theorem for the shrinkage boundaries \(\lambda _{e}^{*}(n_{j})\) and \(\lambda _{d}^{*}(n_{j})\).

Theorem 1

As nj→∞, the decision boundaries \(\lambda _{e}^{*}(n_{j})\) and \(\lambda _{d}^{*}(n_{j})\) will converge to the target ϕ0 at the rate of \(O\bigl (\sqrt {\log (\gamma _{1})/n_{j}}\bigr)\) and \(O\bigl (\sqrt {\log (\gamma _{2})/n_{j}}\bigr)\) respectively.

Theorem 1 establishes a double-shrinkage property for the proposed adaptive gBOIN design: the optimal values \(\phi _{k}^{*}\) shrink toward the target toxicity probability ϕ0, and the optimal boundaries \(\lambda _{e}^{*}(n_{j})\) and \(\lambda _{d}^{*}(n_{j})\) based on each combination of \(\phi _{1}^{*}\) and \(\phi _{2}^{*}\) also shrink toward the target value ϕ0.
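As a worked check of the rate in Theorem 1, consider the normal endpoint with known σ. The derivation below is an illustration rather than the proof: it assumes that \(\phi _{k}^{*}\) is the stationary point of \(g_{\gamma _{k}}\) on the relevant side of ϕ0, and it uses \(A(\theta) = \mu ^{2}/(2\sigma ^{2})\) and \(\eta (\theta) = \mu /\sigma ^{2}\) from the normal case above.

```latex
\begin{aligned}
g_{\gamma_k}(\mu_j,\phi_0)
  &= \frac{\log\gamma_k + n_j(\mu_j^2-\phi_0^2)/(2\sigma^2)}{(\mu_j-\phi_0)/\sigma^2}
   = \frac{\sigma^2\log\gamma_k}{\mu_j-\phi_0} + \frac{n_j(\mu_j+\phi_0)}{2}, \\
\frac{\partial g_{\gamma_k}}{\partial\mu_j} = 0
  &\;\Longrightarrow\; (\mu_j-\phi_0)^2 = \frac{2\sigma^2\log\gamma_k}{n_j}, \\
\phi_1^* &= \phi_0 - \sigma\sqrt{\frac{2\log\gamma_1}{n_j}}, \qquad
\phi_2^* = \phi_0 + \sigma\sqrt{\frac{2\log\gamma_2}{n_j}}, \\
\lambda_e^*(n_j) &= \frac{\phi_0+\phi_1^*}{2}
   = \phi_0 - \sigma\sqrt{\frac{\log\gamma_1}{2n_j}}, \qquad
\lambda_d^*(n_j) = \frac{\phi_0+\phi_2^*}{2}
   = \phi_0 + \sigma\sqrt{\frac{\log\gamma_2}{2n_j}}.
\end{aligned}
```

Under these assumptions both boundaries differ from ϕ0 by a term of order \(\sqrt {\log (\gamma _{k})/n_{j}}\), in line with the rates stated in Theorem 1.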

Now we give the procedure of the proposed gBOINS design as follows.

(a) Patients in the first cohort are treated at the lowest dose level or at a prespecified dose level.

(b) At the current dose level j, assign a dose to the next cohort of patients as follows:

- if \({\hat \mu _{j}} \le {\lambda _{e}^{*}(n_{j})}\), escalate the dose level to j+1;

- if \({\hat \mu _{j}} \ge {\lambda _{d}^{*}(n_{j})}\), de-escalate the dose level to j−1; and

- otherwise, i.e., \({\lambda _{e}^{*}(n_{j})} < {\hat \mu _{j}} < {\lambda _{d}^{*}(n_{j})}\), retain the same dose level j.

(c) This process is continued until the maximum sample size is reached or the trial is terminated because of excessive toxicities.

After the trial is completed, we use the pooled adjacent violators algorithm [23] to select a dose level as the MTD. Let \(\{\tilde \mu _{j}\}\) denote the isotonically transformed values of the observed \(\{\hat \mu _{j}\}\). We select as the MTD the dose j∗ whose isotonic estimate \(\tilde \mu _{j^{*}}\) is closest to ϕ0; if there are ties for \(\tilde \mu _{j^{*}}\), we select from the ties the highest dose level when \(\tilde \mu _{j^{*}} < \phi _{0}\) or the lowest dose level when \(\tilde \mu _{j^{*}} >\phi _{0}\).
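The selection step can be sketched as below. This is a minimal illustration with hypothetical function names: it weights each dose by its number of patients, assumes that every dose passed in has been tried (nj>0), and resolves the case not covered above (tied estimates that are not all below ϕ0) by taking the lowest tied dose.

```python
def pava(values, weights):
    """Weighted pooled adjacent violators: nondecreasing fit to `values`."""
    blocks = []  # each block is [weighted mean, total weight, number of doses]
    for v, w in zip(values, weights):
        blocks.append([v, w, 1])
        # Merge backwards while the monotone order is violated.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, k2 = blocks.pop()
            m1, w1, k1 = blocks.pop()
            blocks.append([(m1 * w1 + m2 * w2) / (w1 + w2), w1 + w2, k1 + k2])
    fitted = []
    for mean, _, k in blocks:
        fitted.extend([mean] * k)
    return fitted


def select_mtd(mu_hat, n, phi0, tol=1e-12):
    """Return the 1-based dose whose isotonic estimate is closest to phi0."""
    mu_tilde = pava(mu_hat, n)
    dist = [abs(m - phi0) for m in mu_tilde]
    best = min(dist)
    ties = [j for j, d in enumerate(dist) if d <= best + tol]
    # Ties below the target: highest dose; otherwise: lowest dose.
    if all(mu_tilde[j] < phi0 for j in ties):
        return ties[-1] + 1
    return ties[0] + 1


# Doses 2 and 3 violate monotonicity and are pooled to 0.25 each.
ISOTONIC = pava([0.05, 0.30, 0.20, 0.50], [3, 6, 6, 3])
MTD = select_mtd([0.05, 0.30, 0.20, 0.50], [3, 6, 6, 3], phi0=0.3)
```

In the example, the pooled estimates at doses 2 and 3 are tied and lie below the target, so the higher of the two, dose 3, is selected.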

For patient safety, we impose the following overdose control rule when using the gBOIN design.

If \({\mathrm {P}\left ({{\mu _{j}} > \phi _{0} \left | {\mathcal {D}}_{j} \right.} \right) > 0.95}\) and nj≥3, dose levels j and higher are eliminated from the trial, and the trial is terminated if the first dose level is eliminated.

The posterior probability \({\mathrm {P}\left ({{\mu _{j}} > \phi _{0} \left | {\mathcal {D}}_{j} \right.} \right)}\) can be evaluated on the basis of a beta-binomial model for a binary or quasi-binary endpoint, assuming that μj follows a vague beta prior, e.g., μj∼beta(1,1). For a normal endpoint y with mean μj and variance \(\sigma _{j}^{2}\), assuming the noninformative prior \(p(\mu _{j}, \sigma _{j}^{2}) \propto \sigma _{j}^{-2}\), the posterior distribution of μj is a t distribution with nj−1 degrees of freedom, mean \(\hat \mu _{j}\) and scale \(n_{j}^{-1}\sum _{i=1}^{n_{j}}\left (y_{i} - \hat \mu _{j}\right)^{2}\).
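For a binary endpoint, the overdose control rule can be evaluated without special functions. The sketch below assumes the beta(1,1) prior mentioned above and an integer number of DLTs; it uses the standard identity between the beta distribution function with integer parameters and a binomial tail probability. The function names are hypothetical, and quasi-binary data with non-integer sums would instead require a general incomplete beta function.

```python
from math import comb


def prob_exceeds_target(y, n, phi0):
    """P(mu_j > phi0 | y DLTs among n patients) under a beta(1,1) prior.

    The posterior is beta(1 + y, 1 + n - y). For integer parameters,
    P(mu_j > phi0) equals P(X <= y) with X ~ Binomial(n + 1, phi0).
    """
    return sum(comb(n + 1, k) * phi0 ** k * (1 - phi0) ** (n + 1 - k)
               for k in range(y + 1))


def eliminate_dose(y, n, phi0, cutoff=0.95, min_n=3):
    """Overdose control: eliminate dose j and higher if the rule is met."""
    return n >= min_n and prob_exceeds_target(y, n, phi0) > cutoff


# Three DLTs among three patients with target 0.3.
P_3_OF_3 = prob_exceeds_target(3, 3, 0.3)
```

With a target of 0.3, three DLTs among three patients give a posterior probability of about 0.992 and trigger elimination, whereas two DLTs among three patients (about 0.916) do not.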

Design properties

From a practical viewpoint, a natural requirement for dose-finding trials is that dose escalation should not be allowed if the observed toxicity rate or mean toxicity score at the current dose is higher than the target, and dose de-escalation should not be allowed if the observed toxicity rate or mean toxicity score at the current dose is lower than the target. [9] referred to this finite-sample property as “long-term memory coherence”, an extension of a similar concept originally proposed by [24]. The original definition of coherence prohibits dose escalation (or de-escalation) when the observed toxicity rate in the most recently treated cohort is higher (or lower) than the target toxicity rate. Because that definition is based on the responses of only the most recently treated cohort, without considering the responses of previously enrolled and treated patients, [9] refers to it as “short-term memory coherence”. Clearly, short-term memory coherence is a stronger counterpart than long-term memory coherence.

As shown in the Appendix, the gBOINS design has the following desirable finite-sample property.

Theorem 2

The gBOINS design is long-term memory coherent in the sense that the design will never escalate the dose when \(\hat {\mu }_{j}>\phi _{0}\); and will never de-escalate the dose when \(\hat {\mu }_{j}<\phi _{0}\).

To further enhance the safety of the design, we let the upper boundary \(\phi _{2}^{*}\) shrink slightly faster than the lower boundary \(\phi _{1}^{*}\), since a stricter (smaller) \(\phi _{2}^{*}\) carries less risk of exposing enrolled patients to overly toxic doses. We propose to take \(\gamma _{k} = \exp (c_{k}n_{j}^{\varepsilon _{k}})\), k=1,2, with 1>ε1≥ε2>0, \(0< c_{1} < n_{j}^{1-\varepsilon _{1}}\log (1/(1-\phi _{0}))\) and \(c_{1}< c_{2} < n_{j}^{1-\varepsilon _{2}}\log (1/\phi _{0})\). It can be shown that the proposed adaptive gBOIN design has the following desirable large-sample property.

Theorem 3

As the number of patients goes to infinity, the dose assignment and the selection of the MTD under the gBOINS design converge almost surely to dose level j∗, if \(\mu _{j^{*}} = \phi _{0}\).

By Theorem 1, the condition \(\gamma _{k} = \exp (c_{k}n_{j}^{\varepsilon _{k}})\), ck>0, k=1,2, is imposed to control the convergence rates of \(\lambda _{e}^{*}{(n_{j})}\) and \(\lambda _{d}^{*}{(n_{j})}\), so that \(\mathrm {P}\{\hat {\mu }_{j} \in (\lambda _{e}^{*}{(n_{j})}, \lambda _{d}^{*}{(n_{j})})\} =1 \) asymptotically, because \(\hat {\mu }_{j}\) converges in probability to μj at the \(\sqrt {n}\) rate. The result then follows directly from the proof of Theorem 1 of [25], and the details are omitted here.

Practical implementation

To implement the proposed gBOINS design in practice, we need to specify the values of εk and ck, k=1,2. We recommend εk=0.5, k=1,2. The values of ck, k=1,2, need to be calibrated by extensive simulation studies; no single set of values works uniformly across different types of endpoints, even with the same target. For normal endpoints, the shrinkage boundaries depend on the estimate of σ, which complicates pre-tabulation and reduces the simplicity of gBOINS. For practical applications, we suggest replacing it with 1.1ϕ0. Note that a large value of \(\lambda _{e}^{*}(n_{j})\) (or a small value of \(\lambda _{d}^{*}(n_{j})\)) makes dose escalation (or de-escalation) rapid, which may cause serious safety problems and reduce the efficiency of the design when the sample size is small, since the smaller the sample size, the larger the variance of \(\hat {\mu }_{j}\). To avoid this problem and improve the design’s efficiency, in practice we introduce a lead-in phase in which the trial follows the original gBOIN design for a prespecified number of patients (denoted N0). Once nj>N0, the trial switches to the gBOINS design. For our simulations, N0=6 is recommended. Table 1 shows examples of the values of \((\lambda _{e}^{*}(n_{j}), \lambda _{d}^{*}(n_{j}))\) for targets ϕ0=0.2 and ϕ0=0.3.
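The lead-in switch can be sketched for a normal endpoint as follows. This is an illustration under several assumptions rather than the authors' implementation: the shrinkage boundaries use the closed form \(\phi _{0} \mp \sigma \sqrt {\log (\gamma _{k})/(2n_{j})}\) implied by the stationary point of \(g_{\gamma _{k}}\) in the normal case, σ is replaced by 1.1ϕ0 as suggested above, the lead-in uses fixed alternatives ϕ1=0.6ϕ0 and ϕ2=1.4ϕ0 (an assumed choice), and the target, the ck values and all function names are hypothetical.

```python
import math


def gboins_boundaries_normal(phi0, n_j, c1, c2, eps1=0.5, eps2=0.5,
                             sigma=None, n_lead_in=6):
    """(lambda_e, lambda_d) at a dose with n_j patients, normal endpoint."""
    if sigma is None:
        sigma = 1.1 * phi0  # practical substitute for the estimate of sigma
    if n_j <= n_lead_in:
        # Lead-in: fixed gBOIN midpoints with assumed phi1, phi2.
        return (phi0 + 0.6 * phi0) / 2, (phi0 + 1.4 * phi0) / 2
    log_gamma1 = c1 * n_j ** eps1  # gamma_k = exp(c_k * n_j ** eps_k)
    log_gamma2 = c2 * n_j ** eps2
    lam_e = phi0 - sigma * math.sqrt(log_gamma1 / (2 * n_j))
    lam_d = phi0 + sigma * math.sqrt(log_gamma2 / (2 * n_j))
    return lam_e, lam_d


def decide(mu_hat, n_j, phi0, c1, c2):
    """Escalate, de-escalate or retain, given the sample mean at dose j."""
    lam_e, lam_d = gboins_boundaries_normal(phi0, n_j, c1, c2)
    if mu_hat <= lam_e:
        return "escalate"
    if mu_hat >= lam_d:
        return "de-escalate"
    return "retain"


# Hypothetical settings: target 0.3, c1 = log(1.1)/3, c2 = log(1.1).
C1, C2 = math.log(1.1) / 3, math.log(1.1)
BOUNDS_100 = gboins_boundaries_normal(0.3, 100, C1, C2)
```

Under these settings a sample mean of 0.25 leads to retaining the dose during the lead-in (nj=3) but to escalation at nj=100, where the interval has narrowed to about (0.287, 0.323).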

Simulation

Toxicity as a binary endpoint

We evaluate the performance of the gBOINS design by comparing it with the gBOIN design on four metrics: the percentage of correct selection (PCS) of the MTD; the average number of patients allocated to the MTD; the risk of overdosing, defined as the percentage of simulated trials in which a large percentage (e.g., more than 60% or 80%) of patients are treated at doses above the MTD; and the risk of underdosing, defined as the percentage of simulated trials in which more than 80% of patients are treated at doses below the MTD. We investigated two target toxicity rates, ϕ0=0.2 and ϕ0=0.3, and for each target we examined 16 representative toxicity scenarios (Table 2), which were reproduced from [27]. We set c1 = log(1.05)/3 and c2 = log(1.05)/3 when ϕ0=0.2, and c1 = log(1.1)/3 and c2 = log(1.1)/3 when ϕ0=0.3. The scenarios vary in the location of the MTD and in the gaps around the MTD. For each scenario, 30 patients in 10 cohorts were assumed.
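To make the allocation-based metrics concrete, the following sketch simulates trials with a binary endpoint and a fixed pair of interval boundaries and then computes the average number of patients at the MTD and the two risk measures. It is a simplified illustration: the toxicity scenario is hypothetical (it is not taken from Table 2), the overdose control rule and the final MTD selection are omitted, and the function names are assumptions.

```python
import random


def simulate_trial(true_tox, lam_e, lam_d, rng, n_cohorts=10, cohort_size=3):
    """Run one trial from the lowest dose; return patients treated per dose."""
    n_doses = len(true_tox)
    n = [0] * n_doses
    y = [0] * n_doses
    j = 0
    for _ in range(n_cohorts):
        y[j] += sum(rng.random() < true_tox[j] for _ in range(cohort_size))
        n[j] += cohort_size
        mu_hat = y[j] / n[j]
        if mu_hat <= lam_e and j < n_doses - 1:
            j += 1  # escalate
        elif mu_hat >= lam_d and j > 0:
            j -= 1  # de-escalate
    return n


def operating_characteristics(true_tox, mtd, lam_e, lam_d,
                              n_trials=1000, seed=1):
    """Allocation metrics over repeated trials; `mtd` is a 0-based index."""
    rng = random.Random(seed)
    at_mtd = over60 = under80 = 0
    for _ in range(n_trials):
        n = simulate_trial(true_tox, lam_e, lam_d, rng)
        total = sum(n)
        at_mtd += n[mtd]
        over60 += sum(n[mtd + 1:]) > 0.6 * total  # risk of overdosing (60%)
        under80 += sum(n[:mtd]) > 0.8 * total     # risk of underdosing (80%)
    return {"avg_n_at_mtd": at_mtd / n_trials,
            "risk_overdose_60": over60 / n_trials,
            "risk_underdose_80": under80 / n_trials}


# Hypothetical scenario with target 0.2; the third dose is the MTD.
TRUE_TOX = [0.05, 0.10, 0.20, 0.35, 0.50]
OC = operating_characteristics(TRUE_TOX, mtd=2, lam_e=0.157, lam_d=0.238)
```

The boundaries 0.157 and 0.238 used here are the fixed BOIN-type values for a target of 0.2; replacing them with sample-size-dependent boundaries inside `simulate_trial` would give the corresponding sketch for a shrinkage design.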

Figures 1 and 2 present the results based on 4000 simulated trials. As shown in Fig. 1, when the target is 0.2, the performance of gBOINS and gBOIN is comparable across all 16 scenarios in terms of the percentage of correct selection of the target dose and the average number of patients allocated to the MTD, whereas gBOIN has a higher risk of overdosing in most scenarios, in particular across scenarios 1 to 10. In addition, compared with the gBOIN design, the proposed gBOINS allocated fewer patients to sub-therapeutic doses in most scenarios, which may be explained by the higher risk of underdosing (80%) for gBOIN. Figure 2 shows that, when the target is 0.3, the performance of gBOINS and gBOIN is comparable, and the proposed gBOINS has a lower risk of overdosing.

We also conducted simulation studies to investigate the performance of gBOINS with respect to sample size. We consider the four scenarios in Table 3; the simulation results, based on 4000 replications, are presented in Fig. 3. As shown in the two plots in the left panel of Fig. 3, for scenarios 1 and 3, in which only one dose lies inside the interval (0.16,0.24) or (0.24,0.36), respectively, the performance of gBOINS is comparable to that of the gBOIN design. For scenarios 2 and 4, whose results are shown in the right panel of Fig. 3, two doses lie inside the intervals, and gBOINS outperformed gBOIN when the sample size was greater than 90.

Fig. 3 Relationships between operating characteristics and sample size for gBOIN and gBOINS when the target toxicity rates are 20% and 30%. In the left panel, only one dose lies inside (0.16,0.24) (top) or (0.24,0.36) (bottom); in the right panel, two doses lie inside the corresponding intervals

Toxicity as a quasi-binary endpoint

We evaluated the performance of the gBOINS design when the toxicity endpoint was quasi-binary (e.g., ETS) by comparing it with the gBOIN design on the ten scenarios considered by [14] (see Table 4). Following [18], we adopted the following ETS definition: grades 0 and 1 were of no concern (no DLT); a grade-2 toxicity was equivalent to 0.5 DLT; a grade-3 toxicity counted as one DLT; and a grade-4 toxicity was equivalent to 1.5 DLT. The target ETS was 0.47, derived from the target profile of 49% grade 0 and grade 1, 18% grade 2, 23% grade 3, and 10% grade 4. That is, the target ETS = 0.49×0+0.18×0.5+0.23×1.0+0.10×1.5=0.47. A total sample of 30 patients in 10 cohorts was used in the simulation, with d1 as the starting dose. The ck, k=1,2, are set to c1 = log(1.2)/3 and c2 = log(1.2) throughout this subsection.
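The ETS arithmetic above can be reproduced with a short sketch. The grade weights and the target profile are those stated in the text; the function names are hypothetical, and the final rescaling by the maximum score of 1.5, which maps the score to the unit interval required by a quasi-binary endpoint, is an assumption about how the normalization would be carried out.

```python
# Equivalent toxicity score (in units of DLT) for each toxicity grade.
ETS_WEIGHTS = {0: 0.0, 1: 0.0, 2: 0.5, 3: 1.0, 4: 1.5}


def mean_ets(grades):
    """Average ETS over the observed worst grades of a group of patients."""
    return sum(ETS_WEIGHTS[g] for g in grades) / len(grades)


def target_ets(profile):
    """Expected ETS under a target profile given as (weight, probability) pairs."""
    return sum(weight * prob for weight, prob in profile)


# Target profile: 49% grade 0 or 1, 18% grade 2, 23% grade 3, 10% grade 4.
TARGET = target_ets([(0.0, 0.49), (0.5, 0.18), (1.0, 0.23), (1.5, 0.10)])

# Assumed normalization to [0, 1]: divide by the maximum score.
TARGET_NORMALIZED = TARGET / max(ETS_WEIGHTS.values())
```

For instance, a cohort with worst grades 1, 2 and 3 has a mean ETS of 0.5, slightly above the target of 0.47.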

Table 5 shows the results based on 4,000 simulated trials. In general, for scenarios 1, 2, 3, 4, 5 and 8, the performance of gBOINS is comparable with that of gBOIN in terms of PCS and the number of patients allocated to the MTD, while gBOIN assigns more patients to overly toxic doses above the MTD. For example, in scenario 3, in which dose level 2 was the MTD, the gBOINS design yielded a PCS of 48% and allocated 14.5 patients to the MTD, whereas gBOIN yielded a PCS of 47% and allocated 12.1 patients to the MTD; gBOINS also assigned 2.7 fewer patients than gBOIN to overly toxic doses. In scenario 6, in which the MTD was dose level 1, the PCS of gBOINS was 77%, 8% higher than that of gBOIN. In scenario 7, in which the MTD was dose level 2, gBOIN yielded a PCS of 55%, 4% higher than that of gBOINS, but gBOINS allocated more patients to the MTD and assigned fewer patients to overly toxic doses. In scenario 9, in which dose level 2 was the MTD, gBOINS yielded a PCS of 96%, 5% higher than that of gBOIN; the number of patients allocated to the MTD was similar, while gBOINS assigned 3.4 fewer patients than gBOIN to overly toxic doses. In scenario 10, in which dose level 4 was the MTD, gBOINS yielded a PCS of 76%, 5% higher than that of gBOIN. gBOIN assigned 13.3 patients to the MTD, more than gBOINS, while gBOINS assigned fewer patients to overly toxic doses. In addition, gBOINS selected an overly toxic dose as the MTD in 6% of trials, a substantial improvement over gBOIN, which did so in 26% of trials.

Continuous outcomes

In this section, we consider ten scenarios with continuous outcomes (Table 6), in which all responses follow the normal distribution adopted by [20]. For the first six scenarios, the response y at dose level x∈{1,2,3,4,5,6} follows the normal distribution N(0.05+0.05x, 0.05²x²); a sample size of 15 was used when the target was at x=1, and a sample size of 60 was used when the target was at another dose level. A cohort size of 1 was used for all scenarios. For the remaining scenarios, 7 to 10, the response y at dose x∈{1,2,3,4,5,6} also followed the normal distribution N(0.05+0.05x, 0.05²x²), and a moderately large sample size of 100 was used. The ck, k=1,2, are set to c1 = log(1.1)/3 and c2 = log(1.1) throughout this subsection.

Table 6 shows that, with a sample size of 15 for scenario 1 and 60 for scenarios 2 to 6, the performance of the gBOINS design is comparable with that of the gBOIN design in terms of the correct selection percentage and the number of patients treated at the target dose. For the last four scenarios, in which the sample size is moderately large, gBOINS outperformed gBOIN in the correct selection percentage and was comparable with gBOIN in the number of patients allocated to the target dose. Specifically, in scenario 7, in which dose level 3 was the target dose, gBOINS yielded a PCS of 89% and allocated 61.7 patients to the MTD, whereas gBOIN yielded a PCS of 84% and allocated 64 patients to the MTD. In scenario 8, in which the MTD was dose level 4, the PCS of gBOINS was 75%, 5% higher than that of gBOIN. In scenarios 9 and 10, gBOINS was again superior to gBOIN in PCS and comparable with gBOIN in the number of patients allocated to the MTD.

Conclusion

We proposed a new phase I trial design that incorporates toxicity grades into dose-finding trials. The proposed gBOINS design unifies continuous and quasi-binary toxicity endpoints as well as the standard binary endpoint. Unlike in the gBOIN design, the decision boundaries of the gBOINS design, \(\lambda _{e}^{*}(n_{j})\) and \(\lambda _{d}^{*}(n_{j})\), are adaptive, which gives the statistician a flexible tool for controlling overly toxic allocations. The design also converges to the target as the sample size goes to infinity. This unique convergence property of gBOINS was demonstrated both theoretically and numerically. Compared with the gBOIN design, when more than one dose lay inside the decision boundaries \(\left (\lambda _{e}^{*}, \lambda _{d}^{*}\right)\) determined by the gBOIN design, gBOINS improved the PCS substantially when the sample size was moderately large. Simulations also showed that, when the sample size was small, the performance of gBOINS was comparable with that of gBOIN in terms of the PCS, and that gBOINS can allocate more patients to safe doses.

Although the proposed gBOINS design focuses on phase I trials, it can be directly extended to phase I/II designs, similarly to the BOIN-ET design proposed by [28]. One limitation of the gBOINS design is that it assumes that the toxicity outcome can be observed quickly enough to make the dose-assignment decision for each enrolled cohort. One way to extend the gBOINS design to accommodate late-onset or delayed outcomes would be to use the Bayesian data augmentation approach [29, 30] or the approximated likelihood approach [31]. This is a topic for our future research.

Availability of data and materials

Not applicable.

References

Storer BE. Design and analysis of phase I clinical trials. Biometrics. 1989; 45:925–37.

Simon R, Rubinstein L, Arbuck SG, Christian MC, Freidlin B, Collins J. Accelerated titration designs for phase I clinical trials in oncology. J Natl Cancer Inst. 1997; 89:1138–47.

Durham SD, Flournoy N, Rosenberger WF. A random walk rule for phase I clinical trials. Biometrics. 1997; 53:745–60.

O’Quigley J, Pepe M, Fisher L. Continual reassessment method: a practical design for phase I clinical trials in cancer. Biometrics. 1990; 46:33–48.

Cheung YK. Dose Finding by the Continual Reassessment Method. New York: CRC Press; 2011.

Babb J, Rogatko A, Zacks S. Cancer phase I clinical trials: efficient dose escalation with overdose control. Stat Med. 1998; 17:1103–20.

Tighiouart M, Rogatko A. Dose finding with escalation with overdose control (EWOC) in cancer clinical trials. Stat Sci. 2010; 25:217–26.

Yin G, Yuan Y. Bayesian model averaging continual reassessment method in phase I clinical trials. J Am Stat Assoc. 2009; 104:954–68.

Liu S, Yuan Y. Bayesian optimal interval designs for phase I clinical trials. J R Stat Soc Ser C. 2015; 64:507–23.

Yan F, Mandrekar SJ, Yuan Y. Keyboard: a novel bayesian toxicity probability interval design for phase I clinical trials. Clin Cancer Res. 2017; 23:3994–4003.

Brahmer JR, Drake CG, Wollner I, Powderly JD, Picus J, Sharfman WH, Stankevich E, Pons A, Salay TM, McMiller TL, et al. Phase I study of single-agent anti–programmed death-1 (MDX-1106) in refractory solid tumors: safety, clinical activity, pharmacodynamics, and immunologic correlates. J Clin Oncol. 2010; 28:3167–75.

Le Tourneau C, Diéras V, Tresca P, Cacheux W, Paoletti X. Current challenges for the early clinical development of anticancer drugs in the era of molecularly targeted agents. Target Oncol. 2010; 5:65–72.

Penel N, Adenis A, Clisant S, Bonneterre J. Nature and subjectivity of dose-limiting toxicities in contemporary phase I trials: comparison of cytotoxic versus non-cytotoxic drugs. Investig New Drugs. 2011; 29:1414–9.

Mu R, Yuan Y, Xu J, Mandrekar SJ, Yin J. gBOIN: a unified model-assisted phase I trial design accounting for toxicity grades, and binary or continuous end points. J R Stat Soc Ser C. 2019; 68:289–308.

Bekele BN, Thall PF. Dose-finding based on multiple toxicities in a soft tissue sarcoma trial. J Am Stat Assoc. 2004; 99:26–35.

Lee S, Hershman D, Martin P, Leonard J, Cheung Y. Toxicity burden score: a novel approach to summarize multiple toxic effects. Ann Oncol. 2012; 23:537–41.

Ezzalfani M, Zohar S, Qin R, Mandrekar SJ, Deley M-CL. Dose-finding designs using a novel quasi-continuous endpoint for multiple toxicities. Stat Med. 2013; 32:2728–46.

Yuan Z, Chappell R, Bailey H. The continual reassessment method for multiple toxicity grades: A Bayesian quasi-likelihood approach. Biometrics. 2007; 63:173–9.

Papke LE, Wooldridge JM. Econometric methods for fractional response variables with an application to 401(k) plan participation rates. J Appl Econom. 1996; 11:619–32.

Ivanova A, Kim SH. Dose finding for continuous and ordinal outcomes with a monotone objective function: A unified approach. Biometrics. 2009; 65:307–15.

Johnson VE. Uniformly most powerful Bayesian tests. Ann Stat. 2013; 41:1716–41.

Lin R, Yin G. Uniformly most powerful Bayesian interval design for phase I dose-finding trials. Pharm Stat. 2018; 17:710–24.

Barlow RE, Bartholomew DJ, Bremner J, Brunk HD. Statistical Inference Under Order Restrictions. London: Wiley; 1972.

Cheung YK. Coherence principles in dose-finding studies. Biometrika. 2005; 92:863–73.

Oron AP, Azriel D, Hoff PD. Dose-finding designs: the role of convergence properties. Int J Biostat. 2011; 7:39.

Yuan Y, Hess KR, Hilsenbeck SG, Gilbert MR. Bayesian optimal interval design: a simple and well-performing design for phase I oncology trials. Clin Cancer Res. 2016; 22:4291–301.

Yuan Y, Hess KR, Hilsenbeck SG, Gilbert MR. Bayesian optimal interval design: a simple and well-performing design for phase I oncology trials. Clin Cancer Res. 2016; 22:4291–301.

Takeda K, Taguri M, Morita S. BOIN-ET: Bayesian optimal interval design for dose finding based on both efficacy and toxicity outcomes. Pharm Stat. 2018; 17:383–95.

Liu S, Yin G, Yuan Y. Bayesian data augmentation dose finding with continual reassessment method and delayed toxicity. Ann Appl Stat. 2013; 7:1837–2457.

Jin IH, Liu S, Thall P, Yuan Y. Using data augmentation to facilitate conduct of phase I/II clinical trials with delayed outcomes. J Am Stat Assoc. 2014; 109:525–36.

Lin R, Yuan Y. Time-to-event model-assisted designs for dose-finding trials with delayed toxicity. Biostatistics. 2019; 21:807–24.

Acknowledgments

The research is supported by the National Natural Science Foundation of China (grant 11901519, grant 12001378), China Postdoctoral Science Foundation (2019M661416), Guangdong Basic and Applied Basic Research Foundation (Grant No. 2019A1515110449) and Natural Science Foundation of Guangdong Province (Grant No. 2020A1515010372). Pan’s research is partially supported by the American Lebanese Syrian Associated Charities (ALSAC).

Funding

The research is supported by the National Natural Science Foundation of China (grant 11901519, grant 12001378), China Postdoctoral Science Foundation (2019M661416), Guangdong Basic and Applied Basic Research Foundation (Grant No. 2019A1515110449) and Natural Science Foundation of Guangdong Province (Grant No. 2020A1515010372). Pan’s research is partially supported by the American Lebanese Syrian Associated Charities (ALSAC).

Author information

Contributions

HP and RM contributed to the conception, design, and development of the R code of this work. ZG and GX reviewed and approved the accompanying R code. Both HP and RM wrote, revised, and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Mu, R., Hu, Z., Xu, G. et al. An adaptive gBOIN design with shrinkage boundaries for phase I dose-finding trials. BMC Med Res Methodol 21, 278 (2021). https://doi.org/10.1186/s12874-021-01455-y

DOI: https://doi.org/10.1186/s12874-021-01455-y