Abstract

Background

Cluster randomised trials (CRTs) are increasingly used to evaluate non-pharmacological interventions for improving child health. Although methodological challenges of CRTs are well documented, the characteristics of school-based CRTs with pupil health outcomes have not been systematically described. Our objective was to describe methodological characteristics of these studies in the United Kingdom (UK).

Methods

MEDLINE was systematically searched from inception to 30th June 2020. Included studies used the CRT design in schools and measured primary outcomes on pupils. Study characteristics were described using descriptive statistics.

Results

Of 3138 articles identified, 64 were included. CRTs with pupil health outcomes have been increasingly used in the UK school setting since the earliest included paper was published in 1993; 37 (58%) studies were published after 2010. Of the 44 studies that reported information, 93% included state-funded schools. Thirty six (56%) were exclusively in primary schools and 24 (38%) exclusively in secondary schools. Schools were randomised in 56 studies, classrooms in 6 studies, and year groups in 2 studies. Eighty percent of studies used restricted randomisation to balance cluster-level characteristics between trial arms, but few provided justification for their choice of balancing factors. Interventions covered 11 different health areas; 53 (83%) included components that were necessarily administered to entire clusters. The median (interquartile range) number of clusters and pupils recruited was 31.5 (21 to 50) and 1308 (604 to 3201), respectively. In half the studies, at least one cluster dropped out. Only 26 (41%) studies reported the intra-cluster correlation coefficient (ICC) of the primary outcome from the analysis; this was often markedly different to the assumed ICC in the sample size calculation. The median (range) ICC for school clusters was 0.028 (0.0005 to 0.21).

Conclusions

The increasing pool of school-based CRTs examining pupil health outcomes provides methodological knowledge and highlights design challenges. Data from these studies should be used to identify the best school-level characteristics for balancing the randomisation. Better information on the ICC of pupil health outcomes is required to aid the planning of future CRTs. Improved reporting of the recruitment process will help to identify barriers to obtaining representative samples of schools.

Background

Cluster randomised trials (CRTs) are studies in which groups, or clusters, of individuals are allocated to trial arms rather than the individuals themselves [1]. The clusters may be geographic areas, health organisations or social units. CRTs are used when the intervention is delivered to the entire cluster or there is a chance of contamination between trial arms if individuals are randomised [2].

CRTs can be more complex to design and analyse than individually randomised controlled trials. The most documented methodological consideration for CRTs is that observations on participants from the same cluster are more likely to be similar to each other than those on participants from different clusters [2]. This similarity is quantified by the intra-cluster correlation coefficient (ICC), defined as the proportion of the total variability in the trial outcome that is between clusters as opposed to between individuals within clusters [3]. The statistical dependence between observations within clusters needs to be taken into account when calculating the sample size and analysing data in CRTs [1]. The use of standard methods may result in a sample size that is too small to detect the intervention effect, and in analysis results that exaggerate the evidence for a true intervention effect. Estimates of the ICC or coefficient of variation of clusters for the outcome from previous studies are required to calculate the design effect, the factor by which the number of individuals that would be required in an individually randomised trial needs to be inflated to account for within-cluster correlation in the sample size calculation. In addition, when calculating the sample size in CRTs, a degrees of freedom correction should be incorporated to take account of the uncertainty with which variability in the outcome across clusters is estimated in the analysis [4], and a further inflation of the sample size should be considered to allow for loss of efficiency that results from recruiting unequal numbers of participants from the clusters [5].
When estimating the intervention effect from the resulting trial data the main analytical approaches are to either apply standard statistical methods to summary statistics that represent the cluster response (cluster-level analyses) or use methods at the individual participant level that account for within-cluster correlation in the model or by weighting the analysis. Another important methodological consideration in CRTs is the potential for recruitment bias that might occur in studies where the participating individuals are recruited after the clusters are randomised. Finally, when using meta-analysis to pool findings from studies that use the CRT design, there is the need to consider how best to incorporate estimated effects from studies that did not allow for clustering in the analysis, and consider the extent to which differences in the types of clusters that were randomised are a source of heterogeneity. These considerations are detailed in several textbooks [1, 2, 6,7,8].
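
The quantities described above can be sketched in symbols. The notation is introduced here for illustration and is not taken from the cited sources: \sigma_b^2 and \sigma_w^2 denote the between-cluster and within-cluster variance of the outcome, \rho the ICC, m the (common) number of participants per cluster, \bar{m} the mean cluster size, cv the coefficient of variation of cluster size, and n_{ind} the sample size an individually randomised trial would need. The first line assumes equal cluster sizes; the second is one commonly used approximation for unequal cluster sizes, of the kind discussed in [5].

```latex
\rho = \frac{\sigma_b^2}{\sigma_b^2 + \sigma_w^2}, \qquad
\mathrm{DE} = 1 + (m - 1)\,\rho, \qquad
n_{\mathrm{CRT}} = n_{\mathrm{ind}} \times \mathrm{DE}

\mathrm{DE}_{\mathrm{unequal}} \approx 1 + \left\{ (cv^2 + 1)\,\bar{m} - 1 \right\} \rho
```

For example, with \rho = 0.05 and m = 25 the design effect is 1 + 24 × 0.05 = 2.2, so the trial would need roughly 2.2 times as many participants as an individually randomised trial.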

CRTs are increasingly used to evaluate non-pharmacological interventions for improving child health outcomes [9,10,11]. Although the use of CRTs to evaluate the effectiveness of interventions for improving educational outcomes is long established [12, 13], their use to evaluate health interventions in schools is more recent [10]. Schools provide a natural environment to recruit, deliver public health interventions to and measure outcomes on children, due to the amount of time they spend there [10]. Cluster randomisation is consistent with the natural clustering found within school settings (i.e., classrooms within year groups within schools). School-based CRTs share common challenges with other settings, but specific considerations may be more challenging when schools are randomised, for example, consent procedures [10, 14].

In 2011, a methodological systematic review on the characteristics and quality of reporting of CRTs involving children reported a marked increase in such studies [9]; three quarters of the included studies randomised schools. To date, no systematic review has focussed specifically on the characteristics of school-based CRTs for improving pupil health outcomes. Such a review would help identify common methodological challenges, obtain estimates of parameters (e.g., the ICC) that are of use to researchers planning similar trials and inform the design of simulation studies that use synthetic data to evaluate the properties of statistical methods applied in the context of school-based CRTs with health outcomes.

The aim of this methodological systematic review is to describe the characteristics and practices of school-based CRTs for improving health outcomes in pupils in the United Kingdom (UK).

Methods

This is a systematic review of school-based CRTs with pupil health outcomes that were conducted in the UK. The review was focussed on the UK to align with constraints on available resources and collect richer data on CRT methodology in a single education system.

Data sources and search methods

The systematic review was registered with PROSPERO (CRD42020201792) and the protocol has been published [15]. After extensive scoping of the subject area, a pragmatic decision was made to search MEDLINE (through Ovid) in order to make the review more time-efficient and align with available resources. MEDLINE was exclusively searched from inception to 30th June 2020 for peer-reviewed articles of school-based CRTs. The search strategy (Table 1) was developed in consultation with information specialists, based on a sensitive MEDLINE search strategy for identifying CRTs [16]. Cluster design-related terms ‘cluster*’, ‘group*’ and ‘communit*’ were combined with the terms ‘random’ and ‘trial’, along with the ‘Schools’ Medical Subject Heading (MeSH) term. The search was limited to English language.

Inclusion and exclusion criteria

The systematic review included school-based definitive CRTs of the effectiveness of an intervention versus a comparison group that evaluated health outcomes on pupils. The population of interest was children in full-time education in the UK. Studies that took place outside the UK were excluded. The pragmatic decision was made to limit the population to educational settings within the UK as it made the review more focussed and applicable to a specific setting. Eligible studies included pupils in pre-school, primary school and secondary school. The types of eligible clusters included schools themselves, year groups, classes, teachers or any other relevant school-related unit. All school types were eligible, including special schools. Any health-related intervention(s) and control groups were considered. The primary outcome had to be related to pupils’ health. Studies for which the primary outcome was not health-based (e.g., academic attainment) were excluded. All types of CRT design were eligible including parallel group, factorial, crossover and stepped wedge studies.

If more than one publication of the primary outcome result for an eligible CRT was identified, a key study (index) report was designated and used for data extraction. Papers that did not report the primary outcome were excluded along with pilot/feasibility studies, protocol/design articles, process evaluations, economic evaluations/cost-effectiveness studies, statistical analysis plans, commentaries and mediation/mechanism analyses.

Sifting and validation

Two reviewers (KP and OU) independently screened the titles and abstracts of all references (downloaded into Endnote [17]) for eligibility against the inclusion criteria. Any studies whose eligibility the reviewers were uncertain about were taken to full-text screening. Full-text articles were evaluated by the same reviewers based on the inclusion criteria using a pre-piloted coding method. Any discrepancies which could not be resolved through discussion were sent to a third reviewer (ZMX) for a decision.

Data extraction and analysis

For each eligible study, data were extracted using a pre-piloted form in Microsoft Excel. Data were extracted by two reviewers (KP and OU), and any discrepancies that could not be resolved through discussion were sent to a third reviewer (ZMX) for a final decision. Missing information that was not available in the index papers was sought from corresponding protocol papers and other “sibling” publications.

The items of information extracted are listed as follows:

- Publication details: year of publication and journal name.
- Setting characteristics: country/region, school level and type of school.
- Intervention: health area and intervention type.
- Primary outcome: name, health area, reporter of outcome and method of data collection.
- Study design and analysis methods: unit of randomisation (i.e., type of cluster), justification for using the cluster trial design, method used to sample schools, method used to balance the randomisation, length and number of follow-ups, design of follow-up (cohort versus repeated cross-sectional design) and method used to account for clustering in the analysis.
- Sample size calculation: target sample size (i.e., number of clusters and pupils) and assumptions underlying the sample size calculation (e.g., assumed ICC, percentage loss to follow-up).
- Ethics and consent procedures: activities covered by the consent agreements and use of “opt-out” consent.
- Other study characteristics of methodological interest: number of clusters and pupils that were recruited and lost to follow-up, estimate of the ICC of the primary outcome.

Study characteristics were described using medians, interquartile ranges (IQRs) and ranges for continuous variables, and numbers and percentages for categorical variables, using Stata software [18]. Formal quality assessment was not performed as it was not an objective of this review to estimate intervention effects in the included studies. Some information relevant to the quality of CRTs was, however, extracted and summarised as part of the review.

Results

Search results

After deduplication, 3103 articles were identified through MEDLINE, 159 were full-text screened and 64 were included in the review [19,20,21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82]. Of 95 excluded studies, 88 did not meet the inclusion criteria, and 7 studies met inclusion criteria but were subsequently excluded because they were sibling reports of an index paper. The PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) flow diagram is in Fig. 1.

Study characteristics

The included papers were published in 36 different journals, including: British Medical Journal (n = 9 papers); BMC Public Health (n = 4); International Journal of Behavioural Nutrition and Physical Activity (n = 4); Archives of Disease in Childhood (n = 3); BMJ Open (n = 3); Journal of Epidemiology and Community Health (n = 3); Public Health Nutrition (n = 3); and The Lancet (n = 3). The CRT design has been increasingly used in the UK school setting to evaluate health interventions for pupils since the first paper was published in 1993 (Fig. 2). Twenty three papers were published between 2001 and 2010, compared to 37 between January 2011 and June 2020.

Table 2 summarises the characteristics of included studies.

Setting

Almost three quarters of the studies were conducted exclusively in England (n = 47; 73%); most studies (50 of the 52 studies that provided the data) took place in one or two geographic regions (e.g., West Midlands). Just over half the studies (56%) were based exclusively in primary schools (age 5–11 years), and 38% were exclusively in secondary schools (age 11–16 years). Of the 44 studies that reported information on the types [83] of schools recruited, 93% included state-funded schools.

Intervention type

Eighteen (28%) studies evaluated interventions that targeted nutrition, 15 (23%) physical activity, 15 (23%) socioemotional function and its influences, 7 (11%) dental health, 5 (8%) smoking and 5 (8%) injury, amongst others. Physical health interventions are increasingly prominent (13 published since 2011 in contrast to just 2 prior to then). Of the 15 studies targeting socioemotional function and its influences, 13 were published since 2011, highlighting increasing use of the CRT design in this area. Of the 7 CRTs related to dental health, the most recent one was published in 2011. The vast majority of interventions were in primary prevention (94%).

In 53 (83%) studies, the intervention had at least one component that necessarily had to be administered to entire clusters (“cluster–cluster” interventions [1]). Such components often included educational lessons (e.g., classroom-based lessons [23], physical activity [43] and gardening [25]). Other less common components included breakfast clubs [46, 73], funding/resources [37], change in school policy [50] and advertisements [40]. Eleven (17%) studies had intervention components that directly targeted individual pupils (“individual-cluster” interventions [1]), such as the use of fluoride varnish [72]. Thirty three (52%) studies had “professional-cluster” interventions [1]: in 30 (47%) studies the teacher was either trained in or provided with guidance to deliver components of the intervention, in 3 studies pupils were trained to deliver peer-led intervention components [21, 26, 42], and in 1 study the school nurse was trained [66]. Half the studies (n = 32) had “external-cluster” interventions [1] where people external to the school delivered intervention components (e.g., researchers [23], trained facilitators [53], dental professionals [51], dance instructors [41] and student volunteers [47]).

Two studies [53, 78] had 2 control groups (one “usual care” and one active) and 16 (25%) used a delayed intervention (waitlist) design.

Primary outcome

Health areas assessed by the primary outcomes are summarised in Table 2. In 53% of the studies pupils reported the primary outcome, with researchers reporting primary outcomes in 20%, teachers in 8%, and parents in 8%. In 28% of the studies the primary outcome reporter was blind to allocation status (some authors specifically commented on the challenges of blinding trial arm status [33, 36, 56, 60]), and 22% measured the outcome using an objective method.

Study design and analysis methods

Explicit justification for use of the CRT design was only provided in 17 (27%) studies; the most common reason was to avoid contamination (13 studies altogether). Most studies (n = 56; 88%) randomised school clusters, while classes and year groups were allocated in 6 (9%) and 2 (3%) studies, respectively. Two authors said that in order to maintain power, classes were randomised instead of schools and that this may have led to contamination between the intervention and control arms [22, 28]. Nearly all studies used a parallel group design (n = 61; 95%); the remaining 3 used a factorial design [21, 37, 39]. Of the 46 studies with sufficient information to establish the approach used to sample schools, 33 initially invited all potentially eligible schools to participate, 5 used random sampling, 4 used purposive sampling, 3 used convenience sampling, and 1 used a mixed random/convenience sampling approach.

Eighty percent of studies reported using a restricted allocation method to balance cluster-level characteristics between the trial arms. Most commonly a measure of socio-economic status (SES) was balanced on (48%), with a third of studies (21/64) specifically balancing the allocation on the percentage of pupils eligible for free school meals. Other commonly-used balancing factors are described in Table 3. Few studies gave justification for their choice of balancing factors.
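
To illustrate what a restricted allocation method can look like, the following is a minimal sketch of stratified randomisation on a single cluster-level covariate. It is not the procedure used by any of the included studies; the function name, the school identifiers and the free school meal percentages are all hypothetical, and real trials often use other approaches (e.g., minimisation or constrained randomisation).

```python
import random

def stratified_allocation(schools, n_strata=2, seed=2020):
    """Allocate clusters to two arms within strata of a cluster-level covariate.

    `schools` maps a (hypothetical) school id to its covariate value, here the
    percentage of pupils eligible for free school meals.
    """
    rng = random.Random(seed)                      # fixed seed for reproducibility
    ordered = sorted(schools, key=schools.get)     # rank schools by the covariate
    size = -(-len(ordered) // n_strata)            # ceiling division: schools per stratum
    allocation = {}
    for start in range(0, len(ordered), size):
        stratum = ordered[start:start + size]
        rng.shuffle(stratum)                       # random order within the stratum
        half = len(stratum) // 2                   # odd-sized strata give one extra control
        for i, school in enumerate(stratum):
            allocation[school] = "intervention" if i < half else "control"
    return allocation

# Hypothetical data: eight schools with their free school meal percentages.
fsm = {"A": 8.0, "B": 12.5, "C": 15.0, "D": 21.0,
       "E": 27.5, "F": 33.0, "G": 40.0, "H": 46.5}
arms = stratified_allocation(fsm)
```

Because allocation is randomised separately within the low and high strata, each arm receives two schools from each stratum, so the arms cannot end up badly imbalanced on the covariate by chance.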

One of the challenges of CRTs is to avoid recruitment bias that might occur if participants are recruited after the clusters are randomised [88, 89]. One third (33%) of studies avoided this by recruiting pupils before the clusters were randomised; furthermore, 25% collected baseline data before randomisation. This information, however, was unclear in many studies (41% and 33%, respectively). Generally, insufficient information was provided on whether recruitment bias was avoided in studies where pupils were recruited after randomisation of clusters. A notable exception was one study [57] where recruitment bias was avoided because allocation was not revealed to the schools until after recruitment and baseline assessment.

Nearly all studies used the cohort design as their method of follow-up (n = 62, 97%), where the same pupils provided data at each study wave. One study used a repeated cross-sectional design where different pupils provided data at each wave [46], and one used an a priori mixed design incorporating elements of the cohort and repeated cross-sectional designs, with only a subset of participating pupils providing data at each wave [49].

Seventy two percent of studies analysed their data using individual-level methods that allow for clustering, 16% used cluster-level analysis methods, and 12% did not allow for clustering in their analysis.
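
As a rough illustration of a cluster-level analysis, the sketch below reduces each cluster to its mean and then applies a standard two-sample t statistic to those means. The function name and the data are hypothetical, and the equal-variance, unweighted form shown is only one of several cluster-level approaches.

```python
from math import sqrt
from statistics import mean, variance

def cluster_level_t(arm_a, arm_b):
    """Two-sample t statistic computed on cluster means (equal-variance form).

    Each arm is a list of clusters; each cluster is a list of pupil outcomes.
    Returns the difference in means, the t statistic and its degrees of freedom.
    """
    means_a = [mean(cluster) for cluster in arm_a]   # one summary value per cluster
    means_b = [mean(cluster) for cluster in arm_b]
    k_a, k_b = len(means_a), len(means_b)
    df = k_a + k_b - 2                               # based on clusters, not pupils
    pooled = ((k_a - 1) * variance(means_a) + (k_b - 1) * variance(means_b)) / df
    se = sqrt(pooled * (1 / k_a + 1 / k_b))
    diff = mean(means_a) - mean(means_b)
    return diff, diff / se, df

# Hypothetical outcomes for three intervention and three control schools.
intervention = [[5, 6, 7], [6, 7, 8], [7, 8, 9]]
control = [[4, 5, 6], [5, 6, 7], [6, 7, 8]]
diff, t_stat, df = cluster_level_t(intervention, control)
```

The degrees of freedom depend on the number of clusters rather than the number of pupils, which is why trials with few clusters have limited power regardless of how many pupils are measured.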

Sample size calculation

Seventy eight percent of studies accounted for clustering in their sample size calculation and 72% reported the ICC or coefficient of variation [90] that was assumed for the outcome. None of the studies made a degrees of freedom correction to the sample size calculation. Only two studies [57, 63] allowed for unequal cluster sizes in their sample size calculation, and only one of these [57] specified the anticipated variation in the number of pupils across clusters. The median (range) assumed ICC for school clusters was 0.05 (0.005 to 0.175) based on the 37 studies that provided these data. Of the 3 studies that specified the coefficient of variation of the outcome, 2 assumed it to be 0.2 [42, 60] and 1 assumed it to be 0.25 [19]. The median (range) assumed design effect was 2.21 (1.22 to 8.11). The median targeted sample size was 30 clusters and 964 pupils. Most studies (94%) did not state whether their sample size calculation allowed for loss to follow-up of clusters.
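
The following sketch shows how an assumed ICC feeds into the number of clusters required, using the standard design effect and, optionally, a commonly used approximation for unequal cluster sizes of the kind discussed in [5]. The function names and input values are hypothetical and chosen only for illustration; the sketch omits the degrees of freedom correction and any allowance for loss to follow-up.

```python
from math import ceil

def design_effect(icc, mean_cluster_size, cv_cluster_size=0.0):
    """Design effect; cv_cluster_size=0 gives the equal cluster size formula."""
    return 1 + ((cv_cluster_size ** 2 + 1) * mean_cluster_size - 1) * icc

def clusters_per_arm(n_individual_per_arm, icc, mean_cluster_size, cv_cluster_size=0.0):
    """Clusters needed per arm after inflating an individually randomised sample size."""
    inflated = n_individual_per_arm * design_effect(icc, mean_cluster_size, cv_cluster_size)
    return ceil(inflated / mean_cluster_size)

# Hypothetical inputs: 200 pupils per arm under individual randomisation,
# an assumed ICC of 0.05 and 25 pupils per school on average.
de_equal = design_effect(0.05, 25)                              # 1 + 24 * 0.05
k_equal = clusters_per_arm(200, 0.05, 25)                       # equal cluster sizes
k_unequal = clusters_per_arm(200, 0.05, 25, cv_cluster_size=0.5)  # variable cluster sizes
```

Under these assumed inputs the design effect is about 2.2, giving 18 schools per arm with equal cluster sizes and 21 per arm when the coefficient of variation of cluster size is 0.5.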

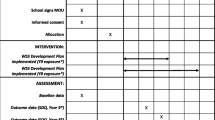

Ethics and consent procedures

Information regarding consent procedures was not well reported and consent for the participation of the cluster was often implied rather than explicitly detailed. In 63% of studies it was stated that both parents/guardians and pupils provided consent or assent for study participation. Forty five percent of studies reported that opt-out consent [14] from either the parent/guardian and/or the pupil was used for participation.

Other study characteristics of methodological interest

A median (IQR) of 31.5 (21 to 50) clusters, 29 (15 to 50) schools and 1308 (604 to 3201) pupils were recruited. The CRT studies that used a cohort design and reported both targeted and achieved recruitment figures at the cluster (n = 45) and pupil (n = 43) levels achieved those recruitment targets in 89% and 77% of studies, respectively. Some authors noted challenges with recruitment at the cluster [45, 47, 50] and pupil [24, 55] levels. Based on the 33 studies that provided data, the median (IQR) percentage of pupils categorised as “White” was 76.8% (51.5% to 86.2%). Thirty out of 62 (48%) studies that provided information reported that at least one cluster was lost to follow-up. Missing data resulting from entire school drop-out was highlighted as a problem in some reports (e.g., [42, 48, 54]). The median follow-up at the pupil level was 79.9%.

Only 26 (41%) studies overall, and 18 of the 37 (49%) studies published after 2010, reported the ICC from the analysis of the primary outcome; the specific ICC values are reported in Table 4. The median (range) ICC for school clusters was 0.028 (0.0005 to 0.21). For many studies that reported both values there was a marked difference between the observed school-level ICC in the study data and the corresponding assumed value of the ICC in the sample size calculation (Fig. 3). The median (range) of the differences between the observed ICC and the assumed ICC was -0.006 (-0.117 to 0.16) indicating that: on average, the observed ICC was slightly smaller than the assumed ICC; at one extreme, the observed ICC in one study was 0.117 smaller than the assumed value [25]; and at the other extreme, the observed ICC in one study was 0.16 larger than the assumed value [68]. The intra-class correlation coefficient of agreement between the observed and assumed ICCs was 0.24.
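
For readers wishing to report an observed ICC, one widely used option for a continuous outcome is the one-way analysis of variance estimator, sketched below. This is an illustration only and not necessarily the method used by the included studies, many of which are likely to have obtained the ICC from a fitted model; the function name and data are hypothetical.

```python
from statistics import mean

def anova_icc(clusters):
    """One-way ANOVA estimator of the ICC.

    `clusters` is a list of clusters, each a list of individual outcomes.
    The estimate can be negative when the between-cluster mean square is
    smaller than the within-cluster mean square.
    """
    k = len(clusters)
    n_total = sum(len(c) for c in clusters)
    grand_mean = sum(sum(c) for c in clusters) / n_total
    ss_between = sum(len(c) * (mean(c) - grand_mean) ** 2 for c in clusters)
    ss_within = sum((y - mean(c)) ** 2 for c in clusters for y in c)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    # Adjusted average cluster size; equals the common size when clusters are equal.
    m0 = (n_total - sum(len(c) ** 2 for c in clusters) / n_total) / (k - 1)
    return (ms_between - ms_within) / (ms_between + (m0 - 1) * ms_within)

# Hypothetical outcomes for three schools with three pupils each.
observed_icc = anova_icc([[1, 2, 3], [4, 5, 6], [7, 8, 9]])  # equals 26/29
```

The toy data are deliberately extreme (most of the variation lies between schools), so the estimate is far higher than the values reported in this review.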

Seven studies [24, 26, 44, 59, 68, 71, 74] that reported ICCs had a binary primary outcome, but none of these stated whether the ICC was calculated on the proportions scale or the logistic scale [3]. It is possible that five of these studies [24, 26, 68, 71, 74] that used mixed effects (“multi-level”) models [91] to analyse the data reported the ICC on the logistic scale, which could potentially account for some of the differences between the observed and assumed ICCs. Further scrutiny of the data, however, revealed marked differences for only two of the aforementioned studies: 0.21 for the observed ICC versus 0.05 for the assumed ICC in Mulvaney and colleagues [68], and 0.028 versus 0.1, respectively, in Obsuth and colleagues [71].
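
The distinction between the two scales can be illustrated as follows. On the logistic scale, a common latent-variable definition divides the random intercept variance from a mixed effects logistic model by itself plus π²/3; on the proportions scale, the between-cluster variance of the cluster-specific proportions is divided by p(1 − p), where p is the overall proportion. The function names and input values below are hypothetical and are not taken from any of the included studies.

```python
from math import pi

def icc_logistic_scale(random_intercept_variance):
    """ICC on the logistic (latent) scale from a random intercept logistic model."""
    return random_intercept_variance / (random_intercept_variance + pi ** 2 / 3)

def icc_proportions_scale(between_cluster_variance, overall_proportion):
    """ICC on the proportions scale for a binary outcome."""
    p = overall_proportion
    return between_cluster_variance / (p * (1 - p))

# Hypothetical inputs chosen only to show that the scales are not interchangeable.
icc_logit = icc_logistic_scale(0.5)            # random intercept variance of 0.5
icc_prop = icc_proportions_scale(0.005, 0.2)   # variance of proportions 0.005, p = 0.2
```

Because the two definitions rest on different variance components, an ICC assumed on one scale at the design stage and an ICC reported on the other after analysis need not agree, even when the underlying clustering is the same.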

Discussion

The number of UK school-based CRTs evaluating the effects of interventions on pupil health outcomes has increased in recent years, reflecting growing recognition of the role that schools can play in improving the health of children [10, 92,93,94,95]. The findings of this systematic review indicate a number of methodological considerations that are worthy of reflection.

Interpretation

Seventy two percent of the studies reported the level of clustering assumed in their sample size calculation, a little more than the 62% observed in a 2015 review of the reporting of sample size calculations in CRTs [96]. Our review found that the observed ICC in the study data often differed markedly from the ICC assumed in the sample size calculation. This will be partly due to sampling variation and adjustment for prognostic factors in the analysis, but it may also reflect the lack of availability of good estimates of the ICC at the time of sample size calculation. Knowledge of the ICC for pupil health outcomes in the school setting is less well established than for patient health outcomes in the primary care setting where general practices are allocated as clusters [1, 97]. It has been reported that general practice-level ICCs for health outcomes are generally less than 0.05 [98]; in our review, only 13 of 23 studies that randomised school clusters and reported observed ICCs had values that were less than 0.05. School-based ICC estimates are widely available for educational outcomes [99], but these are markedly higher than those reported in this review for pupil health outcomes; this is to be expected given that the primary role of the school is to provide education. The importance of reporting ICCs from study data for planning future similar CRTs has long been established [100] and the 2012 CONSORT extension to CRTs includes a specific reporting item for this [101]. Only two-fifths (41%) of studies in this review, however, reported the ICC for the primary outcome; this figure rises to 48% (16/33) for studies published after 2012. Improved reporting of the ICC in the increasing number of CRTs in the school-based setting, and further papers written specifically to report ICCs [102, 103], will provide valuable knowledge. 
This review focussed on CRTs in the UK setting; a useful area to investigate is the extent to which school-based ICC estimates for health outcomes from other countries (e.g., [102, 104]) are similar to those in the UK.

Representativeness of school and pupil characteristics in school-based trials is important for external validity and inclusiveness. For most studies in this review, schools were recruited from only one or two geographic regions/counties. A median 23% of participating pupils were in a minority ethnic group, lower than the national percentages reported by the UK Department for Education (33.5% of primary school pupils and 31.3% of secondary school pupils) [105]. The study reports generally provided little information on specific aspects of the recruitment process, such as why some schools declined to participate and details of their characteristics. Many of the studies evaluated interventions that involved classroom lessons and necessitated teachers being trained to deliver the intervention. Additionally, the teachers reported pupil outcomes in some studies [32, 34, 60, 73, 82]. Insufficient school resources to deliver the intervention and the wider trial may be a barrier to participation and result in lack of representation of certain types of schools.

Eighty percent of the studies used some form of restricted allocation to balance the randomisation on cluster-level characteristics, which is higher than previous methodological reviews of CRTs [106,107,108,109]. The percentage of pupils in the school that are eligible for free school meals was often used as a balancing factor, perhaps partly because this information is readily available from the UK Department for Education [110]. School characteristics that are predictive of the study outcomes, account for within-cluster correlation or influence effectiveness of the intervention are candidates on which to balance the randomisation [1, 111]; previous school-based CRTs could be used to identify such factors.

Strengths

This systematic review used a defined search strategy tailored to identify school-based CRTs. The strategy was developed following an iterative process and allowed us to achieve the right balance of sensitivity and specificity relevant to our available resources. Identifying reports of CRTs is a challenge given that many articles do not use the term ‘cluster’ in their title or abstract. Therefore, a search strategy was used which included terms such as ‘group’ and ‘community’ to improve sensitivity. The ‘School’ MeSH term was also used to identify publications that randomised any type of school-related unit. The piloting of our screening procedure and data extraction were conducted by two independent reviewers, improving accuracy. The review identified school-based CRTs with interventions spanning a variety of different health conditions/areas.

Limitations

A potential limitation of the review is that the search was limited to one database. MEDLINE was used because the focus of the review was on describing the characteristics of trials that evaluate the impact of health interventions on pupils’ health outcomes, but it is possible that we have not identified eligible publications that are not indexed in MEDLINE. Translating our search to the EMBASE, DARE, PsycINFO and ERIC databases for potentially eligible articles published in the last 3 years, however, revealed only one additional eligible school-based CRT.

Given resource constraints, we focussed the review on the UK, making the decision to collect rich data on CRT methodology in a single education system. As a result, the findings are readily applicable to a specific context. Despite being focussed on the UK, the findings of this review will be of global interest. Other high income countries, such as Australia, have a similar school system to the UK, and many of our findings may be applicable in those settings. Furthermore, some of the methodological challenges in the design of CRTs will be similar across different settings.

Future directions

The results provide a summary of the methodological characteristics of school-based CRTs with pupil health outcomes in the UK. To our knowledge, there has been no systematic review of the characteristics of school-based CRTs for evaluating interventions for improving education outcomes, despite the fact that the use of the CRT design is more established in that area. A comparison of methodology between health-based CRTs and education-based CRTs in the school setting would be valuable to both areas. The results in our review indicate that better information on the ICC is needed to design school-based CRTs with health outcomes. Cataloguing of ICCs from previous studies will help researchers choose better values for the assumed ICC when calculating sample size.

Conclusions

CRTs are increasingly used in the school setting for evaluating interventions for improving children’s health and wellbeing. The emerging pool of published trials in the UK provides investigators and methodologists with relevant experiential knowledge for the design of future similar studies. This review of school-based CRTs has highlighted the need for more information on the ICCs to calculate the required sample size. Better reporting of the recruitment process in CRTs will help to identify common barriers to obtaining representative samples of schools and pupils. Finally, previous school-based CRTs may provide a useful source of data to identify the school-level characteristics that are strong predictors of pupil health outcomes and, therefore, potentially good factors on which to balance the randomisation.

Availability of data and materials

The datasets generated and/or analysed during the current study are not publicly available because they are also being used for a wider ongoing programme of research but are available from the corresponding author on reasonable request.

Abbreviations

- CRT: Cluster randomised trial
- DARE: Database of Abstracts of Reviews of Effects
- EMBASE: Excerpta Medica Database
- ERIC: Education Resources Information Center
- ICC: Intra-cluster correlation coefficient
- IQR: Interquartile range
- MeSH: Medical Subject Headings
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses
- PsycINFO: Psychological Information Database
- SES: Socio-economic status
- UK: United Kingdom

Acknowledgements

Kitty Parker and Obioha Ukoumunne were supported by the National Institute for Health Research Applied Research Collaboration South West Peninsula. The views expressed in this publication are those of the author(s) and not necessarily those of the National Institute for Health Research or the Department of Health and Social Care.

Funding

This research was funded by the National Institute for Health Research Applied Research Collaboration South West Peninsula.

Author information

Contributions

KP, MN, ZMX, TF and OU conceived the study. ZMX and TF advised on the design of the study and contributed to the protocol. KP, MN and OU contributed to the design of the study, wrote the protocol and designed the data extraction form. KP and OU undertook data extraction. KP conducted the analyses of the data. All authors had full access to all the data. KP took primary responsibility for writing the manuscript. All authors provided feedback on all versions of the paper. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

No competing interests to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Parker, K., Nunns, M., Xiao, Z. et al. Characteristics and practices of school-based cluster randomised controlled trials for improving health outcomes in pupils in the United Kingdom: a methodological systematic review. BMC Med Res Methodol 21, 152 (2021). https://doi.org/10.1186/s12874-021-01348-0

DOI: https://doi.org/10.1186/s12874-021-01348-0