Abstract

Background

Due to clinical and methodological diversity, clinical studies included in meta-analyses often differ in ways that lead to differences in treatment effects across studies. Meta-regression analysis is generally recommended to explore associations between study-level characteristics and treatment effect; however, three key pitfalls of meta-regression may lead to invalid conclusions. Our aims were to determine the frequency of these three pitfalls in meta-regression analyses, examine characteristics associated with their occurrence, and explore changes between 2002 and 2012.

Methods

We conducted a meta-epidemiological study of reports published in 2002 and 2012 that included aggregate-data meta-regression analyses. We assessed the prevalence of meta-regression analyses with at least one of three pitfalls: ecological fallacy, overfitting, and inappropriate methods to regress treatment effects against the risk of the analysed outcome. We used logistic regression to investigate study characteristics associated with pitfalls and examined differences between 2002 and 2012.

Results

Our search yielded 580 reports of meta-analyses, of which 81 included meta-regression analyses based on aggregate data. Of these 81, 57 (70%) contained at least one pitfall: 53 (65%) were susceptible to ecological fallacy, 14 (17%) had a risk of overfitting, and 5 (6%) inappropriately regressed treatment effects against the risk of the analysed outcome. We found no difference in the prevalence of meta-regression analyses with methodological pitfalls between 2002 and 2012, and no study-level characteristic was clearly associated with the occurrence of any of the pitfalls.

Conclusion

The majority of meta-regression analyses based on aggregate data contain methodological pitfalls that may result in misleading findings.

Background

Due to clinical and methodological diversity, clinical studies included in meta-analyses often differ in ways that lead to differences in treatment effects across studies [1]. Thus, a simple pooled effect size generally reflects not only the treatment effect on the outcome of interest but also the effects of clinical or methodological characteristics that are unequally distributed across studies and are therefore potential effect modifiers. The assessment of covariates potentially associated with treatment effect is generally recommended [2, 3], using meta-regression to explore associations between study-level characteristics and treatment effect [3]. However, three key pitfalls of meta-regression, if overlooked or ignored, may lead to invalid conclusions.

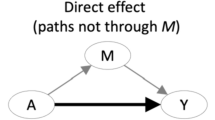

First, in meta-regression on aggregate data, associations between average patient characteristics and the pooled treatment effect do not necessarily reflect true associations between individual patient-level characteristics and treatment effect [4, 5]. The discrepancy between associations at the group level and true associations at the individual patient level has been referred to as ecological fallacy or aggregation bias [4]. It reflects a logical fallacy in the interpretation of observed data: associations found at the group level may either under- or overestimate the real association between patient-level characteristics and treatment effect.

Second, meta-regression models can be overfitted if the number of studies per examined covariate is low. Overfitting can produce spurious associations between covariates and treatment effect that reflect idiosyncrasies of the data [6]. The latest version of the Cochrane Handbook suggests a minimum of 10 studies per examined covariate in meta-regression [3], analogous to the traditional rule of thumb of at least 10 events per covariate used to minimize the risk of overfitting in logistic and Cox regression models [7]. However, a recent study suggested that the number of observations required per covariate in ordinary least-squares linear regression may be considerably lower [6], and it remains unclear whether this also applies to weighted random-effects meta-regression.

Third, meta-regression analyses that regress treatment effects against the risk of the analysed outcome observed in included trials are difficult to interpret, because the observed risk included as a covariate is also part of the treatment effect estimate used as the dependent variable. Regression to the mean therefore induces a correlation between the covariate and the dependent variable. In the extreme case in which the true risk ratio or odds ratio of every trial equals 1, this induced correlation can be as pronounced as -0.71 [8], even though covariate and treatment effect are truly unrelated [4, 9]. Methods to overcome this problem have been published but are rarely used [10,11,12,13].

The primary objective of this meta-epidemiological study was therefore to determine the frequency of these three pitfalls in meta-regression analyses published in the medical literature. Due to limited resources, and based on the publication timing of most methodological literature on meta-regression analysis, we limited our focus to the years 2002 and 2012. The secondary objectives were to examine associations between characteristics of journals, authors and methods and the occurrence of these pitfalls, and to explore changes between 2002 and 2012.
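The correlation of -0.71 cited above can be motivated by a short derivation. The assumptions are ours, not the authors': in trial k, let x_k be the observed control-group log odds and d_k the observed log odds ratio; the true control-group log odds θ_k vary across trials with variance σ_θ²; sampling errors ε_{C,k} and ε_{T,k} in the two arms are independent of each other and of θ_k, each with variance σ²; and the true log odds ratio δ_k is 0 in every trial.

```latex
\begin{aligned}
x_k &= \theta_k + \varepsilon_{C,k}, \qquad
d_k = (\theta_k + \delta_k + \varepsilon_{T,k}) - x_k = \delta_k + \varepsilon_{T,k} - \varepsilon_{C,k},\\
\operatorname{Cov}(x_k, d_k) &= -\sigma^2, \qquad
\operatorname{Var}(x_k) = \sigma_\theta^2 + \sigma^2, \qquad
\operatorname{Var}(d_k) = 2\sigma^2 \qquad (\delta_k = 0),\\
\rho(x_k, d_k) &= \frac{-\sigma^2}{\sqrt{(\sigma_\theta^2 + \sigma^2)\,2\sigma^2}}
= -\frac{1}{\sqrt{2}}\sqrt{\frac{\sigma^2}{\sigma_\theta^2 + \sigma^2}}
\;\ge\; -\frac{1}{\sqrt{2}} \approx -0.71 .
\end{aligned}
```

Under these assumptions the induced correlation is never more negative than -1/√2 and reaches that value when all variation in observed control-group risk is sampling error (σ_θ² = 0). True between-trial variation in risk weakens the artefact but does not remove it.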

Methods

Data source

We searched Medline through PubMed using the search terms meta-analysis OR systematic[sb], as used in previous meta-epidemiological studies [14, 15], to identify systematic reviews and meta-analyses. As the use of meta-regression analysis is not always reported in the title and abstract of publications, we first identified meta-analyses in the published medical literature and then screened their full texts for meta-regression analyses. We limited our search to the PubMed entry years 2002 and 2012. We chose 2002 as the comparator year for 2012 because most methodological articles addressing issues in meta-regression analyses [4, 5, 9, 16] had been published by 2002. The search for 2002 reports was done in June 2012; the search for 2012 reports was done in June 2013 and updated in January 2014. Using a computer-generated sequence of random numbers, we drew random samples of reports from 2002 and 2012.

Study selection

Eligible were all aggregate-level meta-analyses published in 2002 and 2012 that included at least one randomised or quasi-randomised controlled trial and reported a meta-regression analysis [4] examining the association between one or more covariates and the estimated pooled treatment effect on a clinical outcome. Meta-regression analyses were considered irrespective of whether they related to primary or secondary outcome variables. We excluded meta-regression analyses that did not have between-group comparisons (i.e., treatment effect estimates comparing two trial arms on a specific outcome) as the dependent variable, and meta-regression analyses for which results were not reported. During the first phase, one reviewer (MG) identified all aggregate-level meta-analyses based on titles and abstracts, excluding guidelines, network meta-analyses, and meta-analyses of individual patient data. A second reviewer (BdC) screened a randomly selected sample of 200 citations for each year. Percent agreement between the two screeners was 88% (chance-corrected agreement: kappa, 0.68). During a second phase, one reviewer (MG) determined eligibility based on the full texts of all aggregate-level meta-analyses and of all citations for which it was unclear whether they reported an aggregate-level meta-analysis.
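For readers less familiar with the statistic, Cohen's kappa rescales the observed agreement p_o by the agreement p_e expected by chance from the two screeners' marginal decision rates: kappa = (p_o - p_e)/(1 - p_e). The sketch below uses a hypothetical 2 × 2 screening table (not the study's data, which are not reported here); the function names are ours. Rearranging the formula with the reported values gives p_e = (0.88 - 0.68)/(1 - 0.68) ≈ 0.63, that is, roughly 63% agreement would have been expected by chance alone, subject to rounding of the reported figures.

```python
def cohens_kappa(table):
    """Cohen's kappa for two raters.

    table[i][j] counts items that rater A placed in category i and rater B in
    category j (here: 0 = include, 1 = exclude).
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    p_observed = sum(table[i][i] for i in range(k)) / n                   # diagonal = agreement
    rater_a = [sum(table[i]) / n for i in range(k)]                       # row margins
    rater_b = [sum(table[i][j] for i in range(k)) / n for j in range(k)]  # column margins
    p_chance = sum(a * b for a, b in zip(rater_a, rater_b))               # expected by chance
    return (p_observed - p_chance) / (1 - p_chance)


def implied_chance_agreement(p_observed, kappa):
    """Solve kappa = (p_o - p_e) / (1 - p_e) for the chance agreement p_e."""
    return (p_observed - kappa) / (1 - kappa)


# Hypothetical screening decisions for 100 citations (not the study's data)
hypothetical_table = [[10, 5],
                      [5, 80]]
print(round(cohens_kappa(hypothetical_table), 3))
print(round(implied_chance_agreement(0.88, 0.68), 3))
```

In the hypothetical table the screeners agree on 90% of citations, yet kappa is only about 0.61, because most citations are excluded by both and much of that agreement is expected by chance.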

Data extraction

A standardised and piloted data extraction form accompanied by a codebook was used for data extraction. We determined whether meta-regression analyses included at least one of three previously described pitfalls of meta-regression: (1) ecological fallacy [4, 5]; (2) a high risk of overfitting, defined as a meta-regression with fewer than 5 studies per covariate [3, 6, 7]; and (3) inappropriate methods to regress treatment effects (assessed with binary or continuous outcomes) against the risk of the analysed outcome observed in included studies [10,11,12,13]. We classified a meta-regression analysis as susceptible to ecological fallacy if one or more of the covariates included in the analysis was an average of a patient-level characteristic, such as mean age or proportion of females. We also examined whether authors recognized the limitations associated with these pitfalls when their meta-regression analysis was susceptible to them. These data were extracted independently and in duplicate (MG, SA). Disagreements were resolved by discussion and, if needed, by consultation with a third author (BdC).
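The three classification rules can be written down as a small decision function, which may help when applying them consistently to new reviews. This is a sketch of our reading of the rules above, not the authors' extraction form: the record types `Covariate` and `MetaRegression`, their field names, the pitfall labels, and the function `pitfalls` are all hypothetical. The overfitting threshold is a parameter (default 5 studies per covariate, as used here) so that stricter cut-offs, such as the 10 suggested in the Cochrane Handbook, can also be applied.

```python
from dataclasses import dataclass, field


@dataclass
class Covariate:
    name: str
    is_patient_average: bool = False        # e.g. mean age, proportion of females
    is_observed_outcome_risk: bool = False  # e.g. control-group event rate


@dataclass
class MetaRegression:
    n_studies: int
    covariates: list[Covariate] = field(default_factory=list)
    uses_risk_adjusted_method: bool = False  # e.g. an approach from refs [10-13]


def pitfalls(mr: MetaRegression, min_studies_per_covariate: float = 5.0) -> set[str]:
    """Return the (hypothetically labelled) pitfalls flagged for one meta-regression."""
    if not mr.covariates:
        raise ValueError("a meta-regression needs at least one covariate")
    found = set()
    # (1) Ecological fallacy: a covariate is an average of patient-level data
    if any(c.is_patient_average for c in mr.covariates):
        found.add("ecological fallacy")
    # (2) Overfitting: too few studies per examined covariate
    if mr.n_studies / len(mr.covariates) < min_studies_per_covariate:
        found.add("overfitting")
    # (3) Effect regressed on observed outcome risk without a method that
    #     accounts for regression to the mean
    if (any(c.is_observed_outcome_risk for c in mr.covariates)
            and not mr.uses_risk_adjusted_method):
        found.add("inappropriate risk regression")
    return found


example = MetaRegression(
    n_studies=12,  # 12 studies / 3 covariates = 4 studies per covariate
    covariates=[Covariate("mean age", is_patient_average=True),
                Covariate("dose"),
                Covariate("publication year")],
)
print(sorted(pitfalls(example)))
```

Keeping the threshold as an argument makes sensitivity analyses with alternative cut-offs a one-line change.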

Additional data extraction, done by a single author (MG), involved extraction of the following characteristics: the name of the journal and journal characteristics (impact factor, type of journal, and appearance on the list of core clinical journals on PubMed), affiliation of the authors with industry, affiliation of the authors with an institute with statistical expertise (mainly biostatistics or epidemiology department), the number of studies included in the review, design of the studies included in the review and in the meta-regression analysis, whether the outcome was continuous or binary, the medical field of speciality, and the category of therapy (e.g., drugs, surgery, etc.). For all reports, we used the impact factor in the year 2007 (midpoint between 2002 and 2012). Our operationalization of these variables is reported in Additional file 1: Tables A-C.

Analysis

Our sample of 2,404 records per entry year (4,808 total) was based on a pilot study of a random sample of 50 records from 2012, in which we identified 20 (40%) meta-analyses, 3 of which included a meta-regression analysis (6.0%, 95% confidence interval [CI] 2.1 to 16.2%). Assuming that the yield of meta-regression analyses in 2002 would be about one third of the yield in 2012, we estimated that the yield of meta-regression analyses in the total random sample of 4,808 citations would be 192 (95% uncertainty interval, 66 to 519). Our target sample size of 192 would yield 95% confidence intervals ranging from 14 to 26% if the prevalence of a potential pitfall was 20%, and ranging from 43 to 57% if the prevalence of a potential pitfall was 50%.
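The arithmetic behind these numbers can be reproduced in a few lines. The text does not state which confidence-interval methods were used, so this sketch makes assumptions: a Wilson score interval for the pilot proportion, which reproduces the reported 2.1 to 16.2%, and a simple Wald interval for the target sample of 192, which reproduces the reported 14 to 26% and 43 to 57%. We also assume that the uncertainty interval for the yield was obtained by applying the same projection to the bounds of the pilot interval; this gives about 66 to 520, matching the reported 66 to 519 up to rounding. All function and constant names are hypothetical.

```python
import math

Z95 = 1.959964  # two-sided 95% standard normal quantile


def wilson_ci(events: int, n: int, z: float = Z95) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion."""
    p = events / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z / denom * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return centre - half, centre + half


def wald_ci(p: float, n: int, z: float = Z95) -> tuple[float, float]:
    """Simple normal-approximation (Wald) interval for a proportion."""
    half = z * math.sqrt(p * (1 - p) / n)
    return p - half, p + half


RECORDS_PER_YEAR = 2404


def projected_yield(rate_2012: float) -> float:
    """Meta-regressions expected in both samples if the 2002 yield is one third of 2012's."""
    return RECORDS_PER_YEAR * rate_2012 + RECORDS_PER_YEAR * rate_2012 / 3


pilot_lo, pilot_hi = wilson_ci(3, 50)  # pilot: 3 of 50 records had a meta-regression
expected = projected_yield(3 / 50)
yield_lo, yield_hi = projected_yield(pilot_lo), projected_yield(pilot_hi)

print(f"pilot 95% CI: {100 * pilot_lo:.1f} to {100 * pilot_hi:.1f}%")
print(f"expected yield: {expected:.0f} ({yield_lo:.0f} to {yield_hi:.0f})")
for prevalence in (0.20, 0.50):
    lo, hi = wald_ci(prevalence, round(expected))
    print(f"prevalence {prevalence:.0%}: 95% CI {100 * lo:.0f} to {100 * hi:.0f}%")
```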

The primary outcome was the proportion of meta-analyses with at least one meta-regression analysis potentially influenced by one of the three assessed methodological pitfalls in our random samples for the years 2002 and 2012. Secondary outcomes were the proportions of meta-analyses with at least one meta-regression analysis with ecological fallacy; with a high risk of overfitting; with inappropriate methods to regress treatment effects against the risk of the analysed outcome observed in included studies; and in which the authors recognized the limitations when these pitfalls were present. We used Firth's logistic regression to investigate the association between study characteristics and the occurrence of pitfalls, using one predictor at a time. Firth's logistic regression uses penalized maximum likelihood, which produces more accurate inference in small samples than the standard maximum likelihood logistic regression estimator and achieves convergence in the presence of separation, with better coverage probability [17, 18]. Odds ratios (ORs) and 95% CIs were derived from the models, with ORs above 1 indicating that the study characteristic was positively associated with a pitfall, and ORs below 1 indicating a negative association. All analyses were conducted in Stata 13, and 95% CIs were two-sided.
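To show what the penalization does, the sketch below is an illustrative NumPy implementation written for this explanation, not the code the authors ran in Stata 13. It fits the model by Newton-Raphson on Firth's modified score, in which each observation's residual y_i - p_i is augmented by h_i(1/2 - p_i), where h_i is the i-th diagonal element of the hat matrix. The function name `firth_logistic`, the step cap `max_step`, and the Wald-type standard errors are assumptions of this sketch; established implementations such as the R package logistf add step-halving and profile penalized-likelihood confidence intervals.

```python
import numpy as np


def firth_logistic(X, y, max_iter=100, tol=1e-8, max_step=5.0):
    """Firth's penalized-likelihood logistic regression (Newton-Raphson sketch).

    X: (n, k) design matrix including an intercept column; y: 0/1 outcomes.
    Returns coefficient estimates and Wald-type standard errors.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    beta = np.zeros(X.shape[1])

    def fisher_info(b):
        p = 1.0 / (1.0 + np.exp(-(X @ b)))
        w = p * (1.0 - p)
        return p, w, X.T @ (X * w[:, None])  # X' W X

    for _ in range(max_iter):
        p, w, info = fisher_info(beta)
        info_inv = np.linalg.inv(info)
        # Diagonal of the hat matrix W^1/2 X (X'WX)^-1 X' W^1/2
        h = w * np.einsum("ij,jk,ik->i", X, info_inv, X)
        # Firth-modified score: usual residual plus h_i * (1/2 - p_i)
        score = X.T @ (y - p + h * (0.5 - p))
        step = info_inv @ score
        biggest = np.max(np.abs(step))
        if biggest > max_step:  # cap very large steps for numerical stability
            step *= max_step / biggest
        beta = beta + step
        if biggest < tol:
            break

    _, _, info = fisher_info(beta)
    se = np.sqrt(np.diag(np.linalg.inv(info)))
    return beta, se


# Hypothetical toy data: x = 0 in 5 reports (2 with a pitfall), x = 1 in 5 (all 5)
X_toy = np.array([[1.0, 0.0]] * 5 + [[1.0, 1.0]] * 5)  # intercept + binary predictor
y_toy = np.array([1, 1, 0, 0, 0] + [1] * 5)
beta_toy, se_toy = firth_logistic(X_toy, y_toy)
lo, hi = np.exp(beta_toy[1] - 1.96 * se_toy[1]), np.exp(beta_toy[1] + 1.96 * se_toy[1])
print(f"OR = {np.exp(beta_toy[1]):.1f} (Wald 95% CI {lo:.1f} to {hi:.1f})")
```

In the toy data every report with x = 1 has the outcome (quasi-complete separation), so ordinary maximum likelihood has no finite estimate of the slope. For this saturated two-group model, Firth's estimate equals the log odds ratio obtained after adding 0.5 to each cell: log(5.5/0.5) - log(2.5/3.5) = log 15.4.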

Results

Our search of 2002 and 2012 reports resulted in 7,000 citations for 2002 and 20,169 citations for 2012 (Fig. 1). Out of the random samples of 2,404 reports for each year, 599 in 2002 and 735 in 2012 were considered to be potentially eligible based on their title and abstract. Full-text analysis of these yielded 246 and 334 published reports describing aggregate-level meta-analyses that included at least one randomized trial in 2002 and 2012, respectively. 29 and 52 reports published in 2002 and 2012, respectively, were deemed to meet our eligibility criteria (81 meta-analyses with at least one meta-regression analysis in total).

Table 1 presents characteristics of included meta-analyses with meta-regression by year. Overall, the 81 meta-analyses included a median of 23 studies (IQR 13 to 41 studies), with 73 of these (90%) including ≥ 10 studies. The median journal impact factor was 4.2 (IQR 2.3 to 6.1). 15 meta-analyses (19%) were published in a general medical journal, and 19 meta-analyses (23%) in one of PubMed’s core clinical journals. 38 meta-analyses (47%) examined drug interventions, and 48 (59%) used a continuous primary outcome measure. The most common medical fields represented were psychiatry and psychology (16%), cardiology (12%) and oncology (10%), with a wide range of fields making use of meta-regression analyses (Table 1). Five meta-analyses (6%) had authors affiliated with industry, while 35 (43%) had authors affiliated with a biostatistics or epidemiology department.

Main outcome

Table 2 summarizes the prevalence of the three key pitfalls of meta-regression analyses in 2002 and 2012. Overall, we found at least one of the assessed pitfalls in 70% of included reports (57/81; 95% CI 60% to 79%), with similar proportions in 2002 (72%, 95% CI 54% to 85%) and 2012 (69%, 95% CI 56% to 80%). Ecological fallacy was the most common issue, observed in 20 reports in 2002 (69%, 95% CI 51% to 83%) and 33 in 2012 (63%, 95% CI 50% to 75%). A high risk of overfitting was found in 6 reports in 2002 (21%, 95% CI 10% to 38%) and 8 in 2012 (15%, 95% CI 8% to 28%). Inappropriate methods to regress treatment effects against the risk of the analysed outcome were used in 2 reports in 2002 (7%, 95% CI 2% to 22%) and 3 in 2012 (6%, 95% CI 2% to 16%). Only two of the 57 meta-analyses with at least one problematic meta-regression analysis (4%) provided a frank discussion of the limitations of meta-regression; in both, average patient characteristics were regressed against treatment effects, and the limitations of this approach were addressed in the discussion section [19, 20].

Characteristics associated with pitfalls

In logistic regression analyses, our results were most compatible with no important associations between publication year, journal characteristics, affiliation of study authors, the number of studies included in the review, whether the outcome was continuous or binary, category of therapy, and a composite of any of the three pitfalls (Table 3), or each of the three pitfalls separately (Additional file 1: Table C).

Discussion

In this meta-epidemiological study based on a random sample of 4,808 reports published in 2002 and 2012, we found 580 aggregate-level meta-analyses, of which 81 included at least one meta-regression analysis. Of these 81 meta-analyses, 57 (70%) were affected by at least one of the three key pitfalls of meta-regression analysis addressed in our study: ecological fallacy, overfitting, or inappropriate methods to regress treatment effects against the risk of the analysed outcome. As these 57 represent about one in ten of the 580 aggregate-level meta-analyses, about one in ten published meta-analyses may contain a flawed meta-regression analysis and report invalid findings. Authors explicitly acknowledged the limitations of findings derived from meta-regression analysis in only two of the 57 meta-analyses with pitfalls. Evidence for associations between journal, author, or meta-analysis characteristics and the odds of meta-regression pitfalls was inconclusive. The negative association between pitfalls in meta-regression analyses and authors affiliated with a biostatistics or epidemiology department (OR 0.68, 95% CI 0.27 to 1.75), although imprecise, is noteworthy. In addition, authors with such an affiliation were less frequent in analyses published in 2012 (37%) than in 2002 (55%). This is striking and may reflect the increased availability of software that allows non-statisticians to conduct meta-regression analysis. It underlines the importance of collaborating with a statistician experienced in evidence synthesis when conducting meta-regression analyses [21]. Finally, we found no evidence of a difference in the prevalence of pitfalls between 2002 and 2012.

Strengths and limitations

Our meta-epidemiological study has several strengths. First, we used a systematic and broad search strategy with a validated filter for systematic reviews and meta-analyses. We refrained from using filters specific to meta-regression, as many meta-regression analyses were not mentioned in the titles and abstracts of eligible meta-analyses. Second, our use of two random samples of meta-analyses from 2002 and 2012 supports the generalizability of our findings to published meta-analyses with meta-regression indexed in PubMed. Third, we conducted a sample size consideration prior to screening, as recently proposed by Giraudeau et al. [22], who also provide a relevant framework for this purpose. In addition, data extraction was done using a dedicated data extraction form and carried out partly in duplicate, with discrepancies resolved through discussion and consensus, which reduced the risk of data extraction errors.

Our study has limitations. First, we identified only 81 meta-analyses with meta-regression analysis in our random samples, at the lower end of what we had expected based on our sample size consideration, which limited our statistical power to detect associations between pre-specified study characteristics and pitfalls. Second, our findings on overfitting may underestimate the true frequency of this pitfall, as our threshold of fewer than 5 studies per covariate was more conservative than the minimum of 10 currently suggested in the Cochrane Handbook [3]. Third, we only considered meta-regression analyses of clinical studies on treatment effects, but the same principles and pitfalls also apply to meta-analyses of studies with other purposes and designs [4]. Fourth, the most recent studies included in our analyses were published in 2012, and practice may have changed since then. Fifth, we did not investigate whether meta-regression analyses were pre-specified in the protocol. Meta-regression analyses should be pre-specified in as much detail as possible in a review protocol to reduce the risk of false-positive conclusions [4]. With the growing number of published review protocols, this would be a feasible and important question for a future methodological study of meta-regression analyses.

Context

To our knowledge, no prior meta-epidemiological study has quantified the number of meta-regression analyses affected by these pitfalls. The problem of ecological fallacy in meta-regression analysis is well known [4, 5, 23, 24]. For example, Berlin and colleagues [5] showed that meta-regression based on aggregate data failed to detect an interaction between allograft failure after anti-lymphocyte antibody induction therapy and elevated panel reactive antibodies in patients after renal transplantation, whereas an analysis of individual patient data showed evidence for such an interaction. The high prevalence of meta-analyses with meta-regression that are subject to the ecological fallacy in both 2002 and 2012 suggests that published recommendations have had limited impact [2, 4, 25]. Common covariates prone to ecological bias were age, gender or baseline value of the outcome variable. Valid investigations of interactions between treatment effect and patient-level characteristics require the analysis of individual patient data in at least some of the trials included in a meta-analysis [26]. When some trials have individual patient data available, a Bayesian meta-regression approach combining associations derived from individual and aggregate level data can be used [27]. This method first quantifies the association between patient characteristics and treatment effect for each type of data separately. It then tests whether the association estimated based on individual patient data is different from the association based on aggregate level data. If the test indicates no difference above and beyond chance, the associations can then be combined using appropriate weighting.
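The last two steps of that procedure (test for a difference, then combine if none is found) can be sketched in a simplified frequentist form. This is not the Bayesian model of reference [27]. The sketch assumes that a within-trial slope (from individual patient data) and an across-trial slope (from aggregate data) for the same covariate are already available with standard errors, treats them as independent and normally distributed, uses a two-sided z-test at the 5% level, and pools by inverse-variance weighting. The function name `compare_and_combine` and all numbers in the example are hypothetical.

```python
import math


def compare_and_combine(b_ipd, se_ipd, b_agg, se_agg, z_crit=1.959964):
    """Test within- vs across-trial slopes; pool them only if they look compatible.

    Returns (z, None) if the slopes differ beyond chance, otherwise
    (z, (pooled_slope, pooled_se)).
    """
    z = (b_ipd - b_agg) / math.sqrt(se_ipd ** 2 + se_agg ** 2)
    if abs(z) >= z_crit:
        return z, None  # possible ecological bias: do not pool, rely on the IPD slope
    w_ipd, w_agg = 1.0 / se_ipd ** 2, 1.0 / se_agg ** 2  # inverse-variance weights
    pooled = (w_ipd * b_ipd + w_agg * b_agg) / (w_ipd + w_agg)
    return z, (pooled, math.sqrt(1.0 / (w_ipd + w_agg)))


# Hypothetical slopes: change in log odds ratio per 10 years of age
print(compare_and_combine(0.10, 0.05, 0.30, 0.05))  # slopes disagree: not pooled
print(compare_and_combine(0.10, 0.05, 0.12, 0.10))  # compatible: pooled estimate
```

A non-significant difference is weak reassurance when either slope is imprecise, which is typical of aggregate-level meta-regression; the test then has little power to detect ecological bias.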

The minimum number of trials per covariate in meta-regression analyses required to minimize the risk of overfitting is unknown. The Cochrane Handbook suggests a minimum of 10 studies per examined covariate [3], but a recent study suggested that considerably fewer observations per covariate may be required in ordinary, unweighted least-squares linear regression [6]. Whether this also applies to weighted random-effects meta-regression models is unclear. Given this uncertainty, we chose a cut-off of fewer than 5 studies per covariate to identify meta-regression analyses at risk of overfitting. Had a cut-off of fewer than 10 studies per covariate been used, as suggested in the Cochrane Handbook, the prevalence of meta-regression analyses at risk of overfitting would have been 53% (43 of 81 meta-analyses with meta-regression; data not shown).
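The consequence of too few studies per covariate can be illustrated with a small simulation. This is a sketch under simplifying assumptions of ours: study effects and covariates are pure standard-normal noise, and the model is fitted by ordinary (unweighted) least squares rather than weighted random-effects meta-regression. Under these assumptions the expected in-sample R² is p/(k - 1) for p covariates and k studies, so a model with 2 covariates fitted to 8 studies (4 studies per covariate, below the cut-off used here) appears to explain on average about 29% of the variation in effects that are in fact unrelated to the covariates, compared with about 5% when 40 studies are available. The function name `mean_apparent_r2` is hypothetical.

```python
import numpy as np


def mean_apparent_r2(n_studies: int, n_covariates: int,
                     n_sims: int = 2000, seed: int = 1) -> float:
    """Average in-sample R^2 when study effects and covariates are unrelated noise."""
    rng = np.random.default_rng(seed)
    r2 = np.empty(n_sims)
    for s in range(n_sims):
        effects = rng.normal(size=n_studies)                 # no true association
        covs = rng.normal(size=(n_studies, n_covariates))
        X = np.column_stack([np.ones(n_studies), covs])      # intercept + covariates
        coef, *_ = np.linalg.lstsq(X, effects, rcond=None)   # unweighted OLS fit
        resid = effects - X @ coef
        r2[s] = 1.0 - resid @ resid / np.sum((effects - effects.mean()) ** 2)
    return float(r2.mean())


for k in (8, 40):
    print(f"{k} studies, 2 covariates: mean apparent R^2 = {mean_apparent_r2(k, 2):.2f}")
```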

Meta-regression is often used to determine whether treatment effects are associated with the underlying baseline or population risk using the event rate observed in the control group as a surrogate for baseline risk [4, 9]. This approach is problematic as the observed risk included as a covariate is also incorporated in the expression of the treatment effect. Regression to the mean will therefore result in potentially spurious associations [1, 4, 8, 9]. Advanced methods should be used to overcome this problem [10,11,12,13].
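A simulation makes the artefact tangible. The assumptions are ours: every hypothetical trial has the same true event risk (0.3) in both arms, so the true odds ratio is 1 everywhere; arms have 100 patients; and log odds use a 0.5 continuity correction. Even so, the observed log odds ratio is strongly and negatively correlated with the observed control-group log odds, close to -1/√2 ≈ -0.71, the value expected when all variation in control-group risk is sampling error. The function name `null_trial_correlation` is hypothetical.

```python
import numpy as np


def null_trial_correlation(n_trials=2000, n_per_arm=100, risk=0.3, seed=2021):
    """Correlation between observed control log odds and observed log OR when true OR = 1."""
    rng = np.random.default_rng(seed)
    events_c = rng.binomial(n_per_arm, risk, size=n_trials)
    events_t = rng.binomial(n_per_arm, risk, size=n_trials)  # same risk: true OR = 1

    def log_odds(events):
        # 0.5 continuity correction avoids log(0) for zero-event arms
        return np.log((events + 0.5) / (n_per_arm - events + 0.5))

    control_log_odds = log_odds(events_c)
    log_or = log_odds(events_t) - control_log_odds  # control risk appears in both terms
    return float(np.corrcoef(control_log_odds, log_or)[0, 1])


print(f"correlation under the null: {null_trial_correlation():.2f}")
```

Because the control-group estimate enters both the covariate and the dependent variable, its sampling error alone generates the correlation; this is what the methods in references 10 to 13 are designed to handle.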

Much like the decision to conduct a fixed-effect or random-effects meta-analysis, the decision to conduct a meta-regression analysis should not be based on heterogeneity assessments using, for instance, a chi-squared test (Cochran's Q) or I-squared [2]. Instead, a meta-regression analysis should be considered whenever clinically important variation in treatment effects is observed on graphical display or indicated by the estimated between-study variance, tau-squared [28].
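The distinction can be made concrete with a minimal sketch (hypothetical function name `heterogeneity`, plain Python) that computes Cochran's Q, I-squared and the DerSimonian-Laird moment estimate of tau-squared from study effect estimates and their within-study variances. The DerSimonian-Laird estimator is our choice for illustration; other estimators, such as restricted maximum likelihood, are also common. In the hypothetical example, both sets of studies show the same spread of effect estimates, but with more precise studies I-squared rises from 75% to 97.5%, whereas tau-squared changes only from 0.030 to 0.039: I-squared expresses heterogeneity relative to within-study precision, while tau-squared is on the scale of the treatment effect.

```python
def heterogeneity(effects, variances):
    """Cochran's Q, I^2 and the DerSimonian-Laird tau^2 for one meta-analysis."""
    weights = [1.0 / v for v in variances]  # inverse-variance weights
    sum_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum_w  # fixed-effect mean
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))
    df = len(effects) - 1
    c = sum_w - sum(w ** 2 for w in weights) / sum_w
    tau2 = max(0.0, (q - df) / c)                      # truncated at zero
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    return q, i2, tau2


hypothetical_effects = [0.1, 0.3, 0.5]  # e.g. log odds ratios from three studies
for v in (0.01, 0.001):
    q, i2, tau2 = heterogeneity(hypothetical_effects, [v] * 3)
    print(f"within-study variance {v}: Q = {q:.1f}, I^2 = {i2:.1%}, tau^2 = {tau2:.3f}")
```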

Conclusions

Results of the majority of meta-regression analyses based on aggregate data may be misleading. A considerable body of methodological literature and recommendations appear to have had little impact on the use of meta-regression in the medical literature. Authors, editors and peer reviewers need to become more aware of the methodological pitfalls of meta-regression analyses.

Availability of data and materials

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Davey Smith G, Egger M, Phillips AN. Meta-analysis beyond the grand mean? BMJ. 1997;315(7122):1610–4. https://doi.org/10.1136/bmj.315.7122.1610.

da Costa BR, Jüni P. Systematic reviews and meta-analyses of randomized trials: principles and pitfalls. Eur Heart J. 2014;35(47):3336–45. https://doi.org/10.1093/eurheartj/ehu424.

Higgins JPT, Thomas J, Chandler J, et al. Cochrane handbook for systematic reviews of interventions. 2nd edn. Chichester: Wiley; 2019. https://doi.org/10.1002/9781119536604.

Thompson SG, Higgins JPT. How should meta-regression analyses be undertaken and interpreted? Stat Med. 2002;21(11):1559–73. https://doi.org/10.1002/sim.1187.

Berlin JA, Santanna J, Schmid CH, Szczech LA, Feldman HI. Anti-Lymphocyte antibody induction therapy study group. Individual patient- versus group-level data meta-regressions for the investigation of treatment effect modifiers: ecological bias rears its ugly head. Stat Med. 2002;21(3):371–87. https://doi.org/10.1002/sim.1023.

Austin PC, Steyerberg EW. The number of subjects per variable required in linear regression analyses. J Clin Epidemiol. 2015;68(6):627–36. https://doi.org/10.1016/j.jclinepi.2014.12.014.

Vittinghoff E, McCulloch CE. Relaxing the Rule of Ten Events per Variable in Logistic and Cox Regression. Am J Epidemiol. 2007;165(6):710–8. https://doi.org/10.1093/aje/kwk052.

Senn S. Importance of trends in the interpretation of an overall odds ratio in the meta-analysis of clinical trials. Stat Med. 1994;13(3):293–6. https://doi.org/10.1002/sim.4780130310.

Sharp SJ, Thompson SG, Altman DG. The relation between treatment benefit and underlying risk in meta-analysis. BMJ. 1996;313(7059):735–8. https://doi.org/10.1136/bmj.313.7059.735.

McIntosh MW. The population risk as an explanatory variable in research synthesis of clinical trials. Stat Med. 1996;15(16):1713–28. https://doi.org/10.1002/(sici)1097-0258(19960830)15:16%3c1713::aid-sim331%3e3.0.co;2-d.

Thompson SG, Smith TC, Sharp SJ. Investigating underlying risk as a source of heterogeneity in meta-analysis. Stat Med. 1997;16(23):2741–58. https://doi.org/10.1002/(sici)1097-0258(19971215)16:23%3c2741::aid-sim703%3e3.0.co;2-0.

Sharp SJ, Thompson SG. Analysing the relationship between treatment effect and underlying risk in meta-analysis: comparison and development of approaches. Stat Med. 2000;19(23):3251–74. https://doi.org/10.1002/1097-0258(20001215)19:23%3c3251::aid-sim625%3e3.0.co;2-2.

Arends LR, Hoes AW, Lubsen J, Grobbee DE, Stijnen T. Baseline risk as predictor of treatment benefit: three clinical meta-re-analyses. Stat Med. 2000;19(24):3497–518. https://doi.org/10.1002/1097-0258(20001230)19:24%3c3497::aid-sim830%3e3.0.co;2-h.

Song F, Xiong T, Parekh-Bhurke S, et al. Inconsistency between direct and indirect comparisons of competing interventions: meta-epidemiological study. BMJ. 2011;343:d4909. https://doi.org/10.1136/bmj.d4909.

Song F, Loke YK, Walsh T, Glenny AM, Eastwood AJ, Altman DG. Methodological problems in the use of indirect comparisons for evaluating healthcare interventions: survey of published systematic reviews. BMJ. 2009;338:b1147. https://doi.org/10.1136/bmj.b1147.

Schmid CH, Lau J, McIntosh MW, Cappelleri JC. An empirical study of the effect of the control rate as a predictor of treatment efficacy in meta-analysis of clinical trials. Stat Med. 1998;17(17):1923–42. https://doi.org/10.1002/(sici)1097-0258(19980915)17:17%3c1923::aid-sim874%3e3.0.co;2-6.

Heinze G. A comparative investigation of methods for logistic regression with separated or nearly separated data. Stat Med. 2006;25(24):4216–26. https://doi.org/10.1002/sim.2687.

Heinze G, Schemper M. A solution to the problem of separation in logistic regression. Stat Med. 2002;21(16):2409–19. https://doi.org/10.1002/sim.1047.

Cammà C, Schepis F, Orlando A, et al. Transarterial chemoembolization for unresectable hepatocellular carcinoma: meta-analysis of randomized controlled trials. Radiology. 2002;224(1):47–54. https://doi.org/10.1148/radiol.2241011262.

Najaka SS, Gottfredson DC, Wilson DB. A meta-analytic inquiry into the relationship between selected risk factors and problem behavior. Prev Sci. 2001;2(4):257–71. https://doi.org/10.1023/a:1013610115351.

Zapf A, Rauch G, Kieser M. Why do you need a biostatistician? BMC Med Res Methodol. 2020;20:23. https://doi.org/10.1186/s12874-020-0916-4.

Giraudeau B, Higgins JPT, Tavernier E, Trinquart L. Sample size calculation for meta-epidemiological studies. Stat Med. 2016;35(2):239–50. https://doi.org/10.1002/sim.6627.

Lambert PC, Sutton AJ, Abrams KR, Jones DR. A comparison of summary patient-level covariates in meta-regression with individual patient data meta-analysis. J Clin Epidemiol. 2002;55(1):86–94. https://doi.org/10.1016/s0895-4356(01)00414-0.

Schmid CH, Stark PC, Berlin JA, Landais P, Lau J. Meta-regression detected associations between heterogeneous treatment effects and study-level, but not patient-level, factors. J Clin Epidemiol. 2004;57(7):683–97. https://doi.org/10.1016/j.jclinepi.2003.12.001.

Fisher DJ, Carpenter JR, Morris TP, Freeman SC, Tierney JF. Meta-analytical methods to identify who benefits most from treatments: daft, deluded, or deft approach? BMJ. 2017;356:j573. https://doi.org/10.1136/bmj.j573.

Burke DL, Ensor J, Riley RD. Meta-analysis using individual participant data: one-stage and two-stage approaches, and why they may differ. Stat Med. 2017;36(5):855–75. https://doi.org/10.1002/sim.7141.

Sutton AJ, Kendrick D, Coupland CAC. Meta-analysis of individual- and aggregate-level data. Stat Med. 2008;27(5):651–69. https://doi.org/10.1002/sim.2916.

Rücker G, Schwarzer G, Carpenter JR, Schumacher M. Undue reliance on I² in assessing heterogeneity may mislead. BMC Med Res Methodol. 2008;8:79. https://doi.org/10.1186/1471-2288-8-79.

Acknowledgements

Not applicable.

Funding

Peter Jüni is a Tier 1 Canada Research Chair in Clinical Epidemiology of Chronic Diseases. This research was completed, in part, with funding from the Canada Research Chairs Programme. These organisations were not involved in the design of this study, data collection, data analysis, interpretation of the results, or writing of this manuscript.

Author information

Contributions

All authors made a significant contribution to the work reported, whether that is in the conception, study design, execution, acquisition of data, analysis and interpretation, or in all these areas; took part in drafting, revising or critically reviewing the article; gave final approval of the version to be published; have agreed on the journal to which the article has been submitted; and agree to be accountable for all aspects of the work.

Ethics declarations

Ethics approval and consent to participate

This study did not require ethics board approval.

Consent for publication

Not applicable.

Competing interests

We wish to confirm that there are no known conflicts of interest associated with this publication and there has been no significant financial support for this work that could have influenced its outcome.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Geissbühler, M., Hincapié, C.A., Aghlmandi, S. et al. Most published meta-regression analyses based on aggregate data suffer from methodological pitfalls: a meta-epidemiological study. BMC Med Res Methodol 21, 123 (2021). https://doi.org/10.1186/s12874-021-01310-0

DOI: https://doi.org/10.1186/s12874-021-01310-0