Abstract

Background

Changes to physiological parameters precede deterioration of ill patients. Early warning and track and trigger systems (TTS) use routine physiological measurements with pre-specified thresholds to identify deteriorating patients and trigger appropriate and timely escalation of care. Patients presenting to the emergency department (ED) are undiagnosed, undifferentiated and of varying acuity, yet the effectiveness and cost-effectiveness of using early warning systems and TTS in this setting are unclear. We aimed to systematically review the evidence on the use, development/validation, clinical effectiveness and cost-effectiveness of physiologically based early warning systems and TTS for the detection of deterioration in adult patients presenting to EDs.

Methods

We searched scientific databases and grey literature resources for studies of any design up to March 2016. Two reviewers independently screened results and conducted quality assessment. One reviewer extracted data, and a second reviewer independently verified 50% of the extractions. Only information available in English was included. Because of heterogeneity in reporting across studies, results were synthesised narratively and in evidence tables.

Results

We identified 6397 citations, of which 47 studies and 1 clinical trial registration were included. Although early warning systems are increasingly used in EDs, compliance with them varies. One non-randomised controlled trial found that using an early warning system in the ED may lead to a change in patient management but may not reduce adverse events; however, this is uncertain given the very low quality of the evidence. Twenty-eight different early warning systems were developed/validated in 36 studies. There is relatively good evidence on the predictive ability of certain early warning systems for mortality and ICU/hospital admission. No health economic data were identified.

Conclusions

Early warning systems seem to predict adverse outcomes in adult patients of varying acuity presenting to the ED, but there is a lack of high-quality comparative studies examining the effect of using early warning systems on patient outcomes. Such studies should include health economic assessments.


Background

Serious clinical adverse events are related to physiological abnormalities, and changes in physiological parameters such as blood pressure, pulse rate, temperature, respiratory rate and level of consciousness often precede patient deterioration [1,2,3,4]. Early intervention may improve patient outcomes, whereas failure to recognise acute deterioration may lead to increased morbidity and mortality [5, 6]. Early warning systems and track and trigger systems (TTS) use routine physiological measurements to generate a score with pre-specified alert thresholds. Their aim is to identify patients at risk of deterioration early and to trigger appropriate and timely responses, known as escalation of care.
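To make the scoring mechanism concrete, the sketch below computes an aggregate weighted score from four routine observations and compares it with an alert threshold. It is a minimal illustration under stated assumptions: the band limits, point values, the threshold of 5 and all function names are hypothetical and are not taken from NEWS, MEWS or any other system reviewed here, and level of consciousness and oxygen-related parameters are omitted for brevity.

```python
from bisect import bisect_left

# HYPOTHETICAL banding chart, for illustration only (not a validated system).
# For each parameter, `edges` are the inclusive upper limits of successive bands
# and `points` has one more entry than `edges`: the last entry applies to any
# value above the final edge.
CHART: dict[str, tuple[list[float], list[int]]] = {
    "resp_rate": ([8, 11, 20, 24], [3, 1, 0, 2, 3]),             # breaths/min
    "heart_rate": ([40, 50, 90, 110, 130], [3, 1, 0, 1, 2, 3]),  # beats/min
    "systolic_bp": ([90, 100, 110, 219], [3, 2, 1, 0, 3]),       # mmHg
    "temperature": ([35.0, 36.0, 38.0, 39.0], [3, 1, 0, 1, 2]),  # degrees C
}
ALERT_THRESHOLD = 5  # HYPOTHETICAL pre-specified trigger level


def parameter_points(name: str, value: float) -> int:
    """Points for one observation, taken from the band the value falls in."""
    edges, points = CHART[name]
    # bisect_left returns the index of the first edge >= value,
    # i.e. the band whose inclusive upper limit contains the value.
    return points[bisect_left(edges, value)]


def aggregate_score(vitals: dict[str, float]) -> int:
    """Aggregate weighted score: the sum of the per-parameter points."""
    return sum(parameter_points(name, value) for name, value in vitals.items())


def should_escalate(vitals: dict[str, float]) -> bool:
    """Track and trigger: escalate care once the score reaches the threshold."""
    return aggregate_score(vitals) >= ALERT_THRESHOLD


if __name__ == "__main__":
    obs = {"resp_rate": 22, "heart_rate": 115, "systolic_bp": 105, "temperature": 38.4}
    print(aggregate_score(obs), should_escalate(obs))  # 6 True
```

With these illustrative bands, the example observations score 2 + 2 + 1 + 1 = 6 and would trigger escalation. Keeping the bands in a table rather than in nested conditionals puts all thresholds in one place, which matters because real systems differ mainly in their bands and trigger levels.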

Early warning systems are increasingly used in acute care settings, and several countries have developed National Early Warning Scores (NEWS). In Ireland, the National Clinical Guideline on the use of NEWS for adult patients came into effect in 2013 [7]. In the UK, the Royal College of Physicians (RCoP) published a National Early Warning Score in 2012 [8], and the National Institute for Health and Care Excellence (NICE) recommends the use of a TTS to monitor hospital patients [9]. In Australia, the Early Recognition of Deteriorating Patient Program introduced a TTS [10]. Similarly, in the USA, Rapid Response Systems with fixed “Calling Criteria” are recommended to trigger an adequate medical response [11].

Many acutely ill patients first present to the emergency department (ED). The ED is a complex environment, distinctly different from other hospital departments. Visits are unscheduled, and patients attend with undiagnosed, undifferentiated conditions of varying acuity. Because of the unpredictable nature of the ED environment, medical staff must care for several patients simultaneously, deal with constantly shifting priorities and respond to multiple demands [12, 13]. Initial triage determines the priority of patients’ treatment, but after triage, continuous monitoring and prompt recognition of deteriorating patients are crucial to escalate care appropriately. Early warning systems are sometimes used as an adjunct to triage for early identification of deterioration in the ED, particularly during crowding [14]. Common early warning systems such as the Modified Early Warning Score (MEWS) [15] are used frequently and have been validated in specific subgroups of patients (e.g. acute renal failure, myocardial infarction), but they may not be directly transferable to an ED setting [14], where patients present with a variety of unspecified conditions. There was therefore an urgent need to evaluate the use of early warning systems and TTS in the ED.

The review addressed five objectives:

1. To describe the use of physiologically based early warning systems or TTS for the detection of deterioration in adult patients presenting to the ED, including the extent of use, the variety of systems in use, and compliance with the systems used;
2. To evaluate the clinical effectiveness of physiologically based early warning systems or TTS in adult patients presenting to the ED;
3. To describe the development and validation of such systems;
4. To evaluate the cost-effectiveness, cost impact and resources involved in such systems;
5. To describe the education programmes established to train staff in the delivery of such systems, including the evaluation of these programmes.

Methods

Study design & scope

We conducted a systematic review, which we report according to the PRISMA guidelines [16]. The scope is presented in Table 1 using the PICOS (Population, Intervention, Comparison, Outcomes, types of Studies) format.

Search strategy

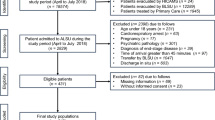

Search strategies using keywords and subject terms were developed for four electronic databases: the Cochrane Library (all databases therein up to 4 March 2016), Ovid Medline (up to 4 March 2016), Embase (up to 22 February 2016) and CINAHL (up to 4 March 2016). Additional resources searched included cost-effectiveness resources (n = 4; up to 11 March 2016), guidance resources (n = 6; up to 13 March 2016), professional bodies’ resources (n = 22; up to 11 March 2016), grey literature resources (n = 3; up to 13 March 2016) and clinical trial registries (n = 4; up to 13 March 2016). The searches were not restricted by language; however, only data available in English were included. Full details of the search strategies are provided in Additional file 1. Details of the search results are presented in Fig. 1 [16].

Fig. 1 Search and selection flow diagram. We searched electronic databases, cost-effectiveness resources, professional bodies’ websites, clinical trial registries and grey literature resources. Experts in the field were also contacted. We conducted double independent study selection based on title/abstract and full text.

Study selection & extraction

Two reviewers (FW, and PM or SD) independently screened the titles/abstracts. For additional resources, the information specialist (AC) screened the search results for potentially eligible studies. Full-text reports from databases and additional resources were assessed for inclusion by two reviewers independently (FW, PM), and discrepancies were resolved by discussion or by involving a third reviewer (DD).

Data extraction forms were designed for each of the six types of studies. Data extraction was completed by two reviewers (FW, PM). Each reviewer extracted data from half of the included reports and 50% of entries were checked by a second reviewer for accuracy. The data elements that were extracted are available in Additional file 2. Two reviewers (FW, and VS or DD) independently assessed the Risk of Bias (ROB)/methodological quality of the included reports, using the instruments listed in Table 2.

Data analysis

Data were summarised in evidence tables and synthesised narratively for use of warning systems, compliance, effects of systems on patient outcomes, development and validation of systems, and cost-effectiveness studies. For the effects of systems on patient outcomes, a meta-analysis was planned but was not performed because only one study was identified. For validation studies, we reported the AUROC (area under the receiver operating characteristic curve) [17], which equals 1 for a perfect test and 0.5 for an uninformative test that discriminates no better than chance. For health economics studies, we planned to examine cost-effectiveness, but no such studies were identified. The GRADE (Grading of Recommendations Assessment, Development and Evaluation) approach was used to assess the certainty of the body of evidence for effects of systems on patient outcomes.
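The AUROC can be read as the probability that a randomly chosen patient who experienced the outcome (for example, in-hospital death) received a higher score than a randomly chosen patient who did not, with ties counted as one half; this equals the Mann-Whitney U statistic divided by the number of patient pairs. The sketch below computes it directly from that definition. The function name and the example scores are hypothetical, and the pairwise loop is chosen for clarity rather than speed.

```python
from itertools import product


def auroc(event_scores: list[float], non_event_scores: list[float]) -> float:
    """Probability that a patient with the outcome scores higher than one without.

    Every (event, non-event) pair is compared; ties earn half credit. This is
    the Mann-Whitney U statistic divided by the number of pairs.
    """
    if not event_scores or not non_event_scores:
        raise ValueError("both groups need at least one patient")
    credit = 0.0
    for with_event, without_event in product(event_scores, non_event_scores):
        if with_event > without_event:
            credit += 1.0
        elif with_event == without_event:
            credit += 0.5
    return credit / (len(event_scores) * len(non_event_scores))


if __name__ == "__main__":
    # HYPOTHETICAL integer scores for patients who died / survived.
    died = [3, 5, 7]
    survived = [1, 3, 4, 6]
    print(round(auroc(died, survived), 3))  # 0.708
    print(auroc([5, 6], [1, 2]))            # 1.0 (perfect separation)
    print(auroc([1, 2, 3], [1, 2, 3]))      # 0.5 (no discrimination)
```

Because early warning scores are small integers, many patients share the same score, so the half-credit for ties has a visible effect on the result.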

Results

A total of 6397 records were identified. After removal of duplicates, 1147 database records were screened by title/abstract. Full texts of 83 records were assessed, of which 43 studies (44 records) were included. The most common reason for exclusion was ‘non-ED setting’ (n = 24). One study was published in Chinese, but its English abstract presented relevant data, which we included [18]. Of the 56 additional resources screened, five studies were included. The results of the search and selection are presented in Fig. 1.

Risk of bias and quality of reports

Three of the four descriptive studies assessing the extent of early warning system use in EDs were judged to be of fair quality [19,20,21] and one of poor quality [22]. The five descriptive studies assessing compliance with early warning systems were judged to be of good [23,24,25] or fair quality [26, 27]. The single effectiveness study was rated as having high ROB [28]. Of the eight studies that developed and validated a system (in the same sample), six were rated as having low ROB [29,30,31,32,33,34] and two as having unclear ROB [35, 36]. The 28 studies that validated an existing system in a new cohort were judged as having an overall low (n = 16) [37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52], unclear (n = 9) [18, 53,54,55,56,57,58,59,60] or high ROB (n = 3) [61,62,63]. The domains of selection bias and factor measurement were most commonly rated as unclear ROB because studies did not specify the methods of sampling (n = 10) [18, 36,37,38, 47, 48, 54, 58,59,60] or did not state the cut-off values used (n = 12) [31, 33,34,35, 42, 46, 49, 57, 58, 60, 61, 63]. One study also did not pre-specify the outcomes clearly [59]. One scoping review of the predictive ability of early warning systems was rated as good quality [64]. Full details of the ROB and quality of reports are provided in Additional file 3.

Extent of use and compliance with early warning systems and track and trigger systems (1)

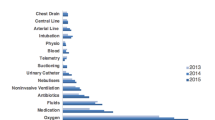

Four studies described the use of early warning systems within the ED and five studies examined compliance. The studies examining the extent of use collected data from medical records [19], a survey [20], a web-survey [21] and participatory action research [22]. Considine et al. [19] described a pilot study of a 4-parameter system in the ED of an Australian hospital. Nurses made 93.1% of activations, the most common reasons being respiratory (25%) and cardiac (22.5%), and the median time between documenting physiological abnormalities and ED early warning system activation was 5 min (range 0–20). A 2012 survey of 145 clinical leads of UK EDs (57% response rate) showed that 71% used an early warning system, most commonly the MEWS (80%) [20]. A survey in seven Australian jurisdictions found that 20 of 220 hospitals had a formal rapid response system in the ED, but the prevalence of early warning systems in EDs was not reported [21]. The conference abstract by Coughlan et al. [22] provided insufficient information. The findings of these four studies suggest that multiple early warning systems are available and that the extent of their use in the ED may vary geographically, but the limited data preclude comparisons between countries.

Three retrospective studies [23,24,25], one prospective study [27] and one audit (before and after early warning system implementation) [26] examined compliance with recording early warning system parameters. Compliance varied widely, from 7% to 66%, and factors such as patients’ triage category, age, gender, number of medications, length of hospital stay and the level of crowding in the ED affected compliance [24]. Christensen et al. [23] reported that only 7% (22/300) of clinical notes contained a calculated score, whereas 16% of records included all five vital signs. Heart rate (HR), shortness of breath (SOB) and loss of consciousness (LOC) were recorded in 90–95% of records. Compliance with escalation of care also varied: all nine patients who met the trauma call activation criteria had triggered a trauma call, but only 24 of the 48 cases meeting emergency call activation criteria had received a response. Austen et al. [25] found higher compliance, with 66% of records containing an aggregate score, although only 72.6% of these scores were accurate. In the audit by Hudson et al. [26], introducing a standardised emergency activation chart increased the rate of abnormal vital sign identification from 30% before implementation to 53.5% after (p = 0.007), although no details of the implementation strategy were described. Wilson et al. [27] compared the TTS scores recorded in charts with scores calculated retrospectively and found that 60.6% of charts contained at least one calculated TTS score, but 20.6% (n = 211) of these scores were incorrect. This was mainly due to incorrect assignment of points to an individual vital sign, which led to underscoring and fewer escalation activations.
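An audit that compares recorded scores with retrospectively recalculated ones, as in the design used by Wilson et al. [27], can be sketched as below. This is a hypothetical illustration: the record format (a recorded score, or None when no score was documented, paired with the recalculated score), the function name and the example data are assumptions, and the sketch counts individual observation sets rather than whole charts.

```python
from collections import Counter
from typing import Iterable, Optional


def audit(records: Iterable[tuple[Optional[int], int]]) -> dict[str, float]:
    """Compare recorded scores with retrospectively recalculated ones.

    Each record is (recorded_score, recalculated_score); recorded_score is None
    when no score was documented. Returns documentation and error rates.
    """
    counts = Counter()
    for recorded, recalculated in records:
        if recorded is None:
            counts["missing"] += 1
        elif recorded < recalculated:
            counts["underscored"] += 1  # may delay or suppress escalation
        elif recorded > recalculated:
            counts["overscored"] += 1
        else:
            counts["correct"] += 1
    total = sum(counts.values())
    documented = total - counts["missing"]
    wrong = counts["underscored"] + counts["overscored"]
    return {
        "documented_rate": documented / total if total else 0.0,
        "incorrect_rate": wrong / documented if documented else 0.0,
        "underscored": counts["underscored"],
        "overscored": counts["overscored"],
    }


if __name__ == "__main__":
    # HYPOTHETICAL audit of five observation sets.
    sample = [(None, 2), (3, 3), (1, 4), (5, 5), (None, 0)]
    print(audit(sample))
```

Reporting underscoring separately from overscoring is the useful design choice here, since underscoring is the error that reduces escalation.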

Effects of early warning systems and track and trigger systems (2)

One non-randomised controlled study compared the effect of the MEWS (n = 269), recorded by emergency nurses every four hours, with clinical judgement (n = 275) in patients waiting for inpatient beds in the ED of a large hospital in Hong Kong [28]. It found that the MEWS might increase the rate of activating a critical pathway (1 in 10 patients with a MEWS >4 versus 1 in 20 patients based on clinical judgement) but might make little or no difference to the detection of deterioration or to adverse events (0.4% in both groups). We assessed the overall body of evidence as very low quality (GRADE) due to serious imprecision and high ROB (Additional file 3).
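The serious imprecision behind this rating can be illustrated with a risk ratio and its confidence interval. The event counts are not reported here, so the sketch assumes, for illustration only, one adverse event in each arm (1/269 ≈ 0.37% and 1/275 ≈ 0.36%, both consistent with the reported 0.4%). It uses the standard Wald interval on the log risk ratio; the function name is hypothetical.

```python
import math


def risk_ratio_ci(events_a: int, n_a: int, events_b: int, n_b: int,
                  z: float = 1.96) -> tuple[float, float, float]:
    """Risk ratio (A vs B) with a Wald confidence interval on the log scale."""
    if events_a == 0 or events_b == 0:
        raise ValueError("log-scale interval undefined with zero events")
    rr = (events_a / n_a) / (events_b / n_b)
    # Standard error of log(RR) for two independent binomial proportions.
    se = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    log_rr = math.log(rr)
    return rr, math.exp(log_rr - z * se), math.exp(log_rr + z * se)


if __name__ == "__main__":
    # ASSUMED counts: one adverse event per arm (MEWS n = 269, judgement n = 275).
    rr, lower, upper = risk_ratio_ci(1, 269, 1, 275)
    print(f"RR {rr:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
```

Under this assumption the point estimate is close to 1, but the 95% interval runs from roughly 0.06 to 16, compatible with anything from a large benefit to a large harm.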

Development & Validation studies of early warning systems and track and trigger systems (3)

A scoping review by Challen et al. [64] identified 119 tools related to outcome prediction in the ED; however, the majority were condition-specific (n = 94). The APACHE II score had the highest reported AUROC (0.984), in patients with peritonitis.

Of the 36 primary development and/or validation studies, 13 were retrospective, 22 were prospective studies and one was a secondary analysis of a Randomised Controlled Trial (RCT) [48]. Eight studies developed and validated (in the same sample) an early warning system, while 28 validated an existing system in a different sample. Three studies included a random sample [30, 39, 43] and participants in the remaining studies were recruited consecutively or the sampling strategy was not stated clearly.

A total of 28 early warning systems were developed and/or validated. Churpek et al. [65] classified early warning systems into single-parameter systems, multiple-parameter systems and aggregate weighted scores. The early warning systems examined in the included studies were primarily aggregate weighted scores (Table 3).
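The three categories differ mainly in their trigger logic. The rough sketch below reflects our reading of that distinction: a single-parameter system calls for help when any one observation is extreme, a multiple-parameter system when several criteria are met together, and an aggregate weighted score when the summed weights reach a threshold. It assumes per-parameter sub-scores (0 = normal, 3 = most abnormal) have already been assigned by some banding chart; the cut-offs of 3, 2 and 5 and all names are hypothetical.

```python
# Per-parameter sub-scores are ASSUMED to come from some banding chart;
# every trigger rule and cut-off below is a HYPOTHETICAL example.
SubScores = dict[str, int]


def single_parameter_trigger(sub: SubScores, extreme: int = 3) -> bool:
    """Single-parameter system: one extreme observation is enough to call for help."""
    return any(points >= extreme for points in sub.values())


def multiple_parameter_trigger(sub: SubScores, min_abnormal: int = 2) -> bool:
    """Multiple-parameter system: respond when several criteria are met at once."""
    return sum(1 for points in sub.values() if points > 0) >= min_abnormal


def aggregate_weighted_trigger(sub: SubScores, threshold: int = 5) -> bool:
    """Aggregate weighted score: add the weights and compare the total to a threshold."""
    return sum(sub.values()) >= threshold


def compare(sub: SubScores) -> dict[str, bool]:
    """Apply all three trigger types to the same set of sub-scores."""
    return {
        "single": single_parameter_trigger(sub),
        "multiple": multiple_parameter_trigger(sub),
        "aggregate": aggregate_weighted_trigger(sub),
    }


if __name__ == "__main__":
    # Several mild abnormalities: only the multiple-parameter rule fires.
    print(compare({"resp_rate": 2, "heart_rate": 1, "temperature": 1, "systolic_bp": 0}))
    # One extreme value: only the single-parameter rule fires.
    print(compare({"resp_rate": 3, "heart_rate": 0, "temperature": 0, "systolic_bp": 0}))
```

The same patient can trigger one type of system and not another, which is one reason results from different system types are not directly comparable.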

The most common outcomes examined were in-hospital mortality (n = 21), admission to ICU (n = 12), mortality without a specified setting or follow-up period, possibly extending beyond hospital discharge (n = 11), hospital admission (n = 7) and length of hospital stay (n = 5). Only one study used the number of patients identified as critically ill as an outcome [50]. Overall, the APACHE II score, PEDS, VIEWS-L and THERM scores appeared relatively better at predicting mortality and ICU admission. The MEWS was the most commonly assessed tool, with a cut-off value of 4 or 5, except in Dundar et al. [41], who found an optimal cut-off of 3 for predicting hospitalisation. To synthesise the findings, studies were categorised into three groups according to the degree of differentiation of the ED patient group: patients in (a) specific triage category(ies), patients with a certain (suspected) condition, or an undifferentiated patient group. Findings are presented in Tables 4, 5 and 6, and full details are provided in Additional file 4.
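How an "optimal" cut-off is chosen is not described here, and we do not know which method Dundar et al. [41] used. One common criterion is Youden's J (sensitivity + specificity - 1), maximised over all candidate cut-offs. The sketch below applies it to hypothetical scores and outcomes; the function name and data are assumptions, and a patient is treated as test-positive when the score is at or above the cut-off.

```python
def youden_cutoff(scores: list[int], outcomes: list[bool]) -> tuple[int, float]:
    """Cut-off maximising Youden's J = sensitivity + specificity - 1.

    A patient is test-positive when score >= cut-off. Ties in J keep the
    lower (more sensitive) cut-off.
    """
    positives = sum(outcomes)
    negatives = len(outcomes) - positives
    if positives == 0 or negatives == 0:
        raise ValueError("both outcome groups must be present")
    # At the lowest cut-off everyone is positive, so J = 1 + 0 - 1 = 0.
    best_cutoff, best_j = min(scores), 0.0
    for cutoff in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, outcomes) if y and s >= cutoff)
        tn = sum(1 for s, y in zip(scores, outcomes) if not y and s < cutoff)
        j = tp / positives + tn / negatives - 1
        if j > best_j:
            best_cutoff, best_j = cutoff, j
    return best_cutoff, best_j


if __name__ == "__main__":
    # HYPOTHETICAL scores and whether each patient was admitted.
    scores = [1, 2, 2, 3, 3, 4, 5, 6]
    admitted = [False, False, False, True, False, True, True, True]
    print(youden_cutoff(scores, admitted))  # (3, 0.75)
```

Because the optimum depends on the outcome and on the case mix of the sample, a cut-off tuned for hospitalisation in one ED population need not match the cut-offs of 4 or 5 used elsewhere.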

Twelve of the 36 validation studies included only participants in (a) specific triage category(ies) (Table 4). Triage systems varied but included categories of patients who were critically ill (e.g. Manchester Triage System I–III, Patient Acuity Category Scale 1 or 2) or who were admitted to the resuscitation room. For predicting mortality, the AUROC ranged from 0.63 to 0.75 for the MEWS [36, 37, 44, 57], from 0.70 to 0.77 for REMS [31, 37] and from 0.77 to 0.87 for NEWS [53], and was 0.90 for PEDS, 0.83 for APACHE II and 0.77 for RTS [31]. For predicting ICU admission, the AUROCs were 0.54 [37] and 0.49 [44] for MEWS and 0.59 for REMS [37], while for predicting hospital admission the AUROC for NEWS was 0.66–0.70 [53]. Two studies by Cattermole et al. [31, 35] used a combined outcome of death and ICU admission and found AUROCs of 0.76 and 0.73 for MEWS, 0.90 and 0.75 for PEDS, 0.73 for APACHE II, 0.75 for RTS, 0.70 and 0.70 for REMS, 0.75 for MEES, 0.71 for NEWS, 0.70 for SCS and 0.84 for THERM. One study assessed the prediction of septic shock by NEWS (AUROC 0.89) [49].

Eleven other studies (12 records; Table 5) included a differentiated patient group with a specific (suspected) condition. Five studies included only patients with (suspected) sepsis [29, 32, 38, 40, 51, 59]. Other study populations were restricted to patients with trauma [46], suspected infection [45, 52] or pneumonia [47], or with signs of shock [48]. For predicting mortality, MEWS had an AUROC of 0.61 [38] and 0.72 [51], CCI of 0.65 [38], mREMS of 0.80 [45], NEWS of 0.70 [47], NEWS-L of 0.73 [47], VIEWS-L of 0.83 [46], SAPS II of 0.72 [48] and 0.90 [52], MPM0 II of 0.69 [48], LODS of 0.60 [48], PIRO of 0.71 [59], APACHE II of 0.71 [59] and 0.90 [52], and SOFA of 0.86 [52].

The remaining 13 studies assessed early warning systems in an undifferentiated ED population (Table 6). The AUROC to predict mortality was 0.71 [42], 0.73 [43], and 0.89 [41] for MEWS, 0.76 for MEWS plus [43], 0.91 [33] and 0.85 [34] for REMS, 0.87 [33] and 0.65 [34] for RAPS and 0.90 for APACHE II [33].

We did not identify studies that examined the cost effectiveness of early warning systems or TTS in EDs, nor did we find any studies evaluating related educational programmes (objectives (4) and (5)).

Discussion

Multiple early warning systems were identified, but the extent to which they are used in the ED seems to vary across the countries for which data were available in the nine included descriptive studies. Moreover, incorrect score calculation was common. Compliance with recording aggregate scores was relatively low, although the vital signs HR and BP were usually recorded. This finding emphasises the importance of effective implementation strategies; however, we did not identify any studies examining educational programmes for early warning systems. Existing guidelines on the use of early warning systems to monitor acute patients in hospital do include educational tools but are not specific to the ED [7, 8]. Using early warning systems in the ED would likely require contextual adaptation to the ED environment, for example broadening the ranges of physiological parameters to reflect acutely unwell patients’ physiology. When implementing an early warning system in the ED, staff training could consist of a joint core package applicable to any service, supplemented by an ED-specific component. The performance of early warning systems in the ED will also depend on the time patients spend in the ED, which varies substantially between countries.

Evidence from 36 development and validation studies indicates that early warning systems used in ED settings seem able to predict adverse outcomes, based on the AUROC, although results vary between studies. All but two early warning systems were aggregate weighted scores, which limited comparison between single-parameter, multiple-parameter and aggregate weighted scores. The APACHE II score, PEDS, VIEWS-L and THERM scores performed best at predicting mortality and ICU admission, showing excellent discriminatory ability (AUROC >0.8) [66]. The MEWS was the most commonly assessed system, but findings suggest it is relatively less able to predict mortality and ICU admission than these four scores: only some studies indicated acceptable discriminatory ability (AUROC >0.7), while others indicated a lack of discriminatory ability (AUROC <0.7) [66], especially for the outcome of ICU admission. The exception was one low ROB study that found excellent discriminatory ability of the MEWS for in-hospital mortality (AUROC 0.89) [41]. This was the only study that examined the MEWS in an undifferentiated sample, which could contribute to the observed difference. However, the ability of early warning systems to predict adverse outcomes does not mean that they are effective at preventing adverse outcomes through early detection of deterioration. Only one study addressed this question, and it found that introducing an early warning system may make little or no difference to the detection of deterioration or to adverse events; however, the evidence was of very low quality, making it impossible to draw firm conclusions. The effectiveness of early warning systems also depends heavily on an appropriate response to their alerts.
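Applying the discrimination bands cited above [66] to the MEWS values reported in the Results shows how the spread of findings arises. The sketch is illustrative: the function name is hypothetical, and because the text gives only strict inequalities (>0.8, >0.7, <0.7), placing values that lie exactly on 0.7 or 0.8 in the lower band is our assumption. The AUROC values and references are those listed in the Results; the two mortality entries for [36, 37, 44, 57] are the ends of a reported range rather than single studies.

```python
def discrimination_label(auroc: float) -> str:
    """Label an AUROC with the bands used in the Discussion [66].

    >0.8 is excellent, >0.7 acceptable, otherwise a lack of discrimination.
    Values exactly on a boundary fall into the lower band (an ASSUMPTION).
    """
    if not 0.0 <= auroc <= 1.0:
        raise ValueError("AUROC must lie between 0 and 1")
    if auroc > 0.8:
        return "excellent"
    if auroc > 0.7:
        return "acceptable"
    return "lack of discrimination"


# MEWS AUROCs reported in the Results: (outcome, reference, value).
MEWS_RESULTS = [
    ("mortality", "[36, 37, 44, 57] range low", 0.63),
    ("mortality", "[36, 37, 44, 57] range high", 0.75),
    ("mortality", "[38]", 0.61),
    ("mortality", "[51]", 0.72),
    ("mortality", "[42]", 0.71),
    ("mortality", "[43]", 0.73),
    ("mortality", "[41]", 0.89),
    ("ICU admission", "[37]", 0.54),
    ("ICU admission", "[44]", 0.49),
]

if __name__ == "__main__":
    for outcome, ref, value in MEWS_RESULTS:
        print(f"{outcome:<14} {ref:<28} {value:.2f}  {discrimination_label(value)}")
```

With these bands, five of the seven listed mortality values are acceptable or better, whereas both ICU admission values fall below 0.7.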
If effective, early warning systems in the ED could primarily assist with patient and resource management in the post-triage phase, when the time for patients to see a treating clinician is prolonged (overcrowding). They could also provide additional information to help decide whom to refer for critical care admission or to guide discharge from the ED, although this is generally not their current purpose where they have been implemented in the ED. Recent studies also suggest that additional laboratory data (e.g. D-dimer, lactate) might enhance the performance of early warning systems in predicting adverse outcomes [67, 68].

The cost-effectiveness of early warning systems remains unclear. Implementing early warning systems clearly requires an investment of healthcare resources, but the degree to which such systems may result in cost savings is unknown, particularly since their effectiveness in the ED is uncertain. The limited evidence base suggests that early warning systems might be effective in, for example, identifying deteriorating patients. This could improve patient outcomes and, should these effects exist, the resulting healthcare cost savings could offset, at least in part, the cost of implementation. Although this reasoning is open to question, it highlights the need for primary research studies that directly evaluate cost-effectiveness. Such studies should monitor resource use, costs and patient outcomes in order to determine whether early warning systems are likely to deliver good value for money.

Limitations

We did not translate non-English reports, although only one such study was identified. We could not pool the findings of the validation studies because of clinical heterogeneity; however, AUROCs were reported to indicate the discriminative accuracy of the models. Strengths of the review lie in its thorough search strategy, its scope, its inclusion of different study designs to best address the objectives, and its rigorous methodology with dual independent screening and quality assessment.

Conclusions

There is a lack of high-quality RCTs examining the effects of using early warning systems in the ED on patient outcomes. The cost-effectiveness of such interventions, compliance, the effectiveness of related educational programmes, and barriers and facilitators to implementation also need to be examined and reported, as there is presently a clear lack of such evidence.

Abbreviations

- AGREE II: Appraisal of Guidelines for Research & Evaluation
- AMSTAR: Assessing the Methodological quality of Systematic Reviews
- APACHE II: Acute Physiology and Chronic Health Evaluation score
- ASSIST: Assessment Score for Sick patient Identification and Step-up in Treatment
- AUROC: Area Under the Receiver Operating Characteristic curve
- BEWS: Bispebjerg Early Warning Score
- BP: Blood Pressure
- CCI: Charlson Comorbidity Index
- CI: Confidence Interval
- CINAHL: Cumulative Index to Nursing and Allied Health Literature
- CURB-65: Confusion, Urea, Respiratory rate, Blood pressure, Age 65 or older
- DIST: A Euclidean Distance-based Scoring System
- ED CIC: ED Critical Instability Criteria
- ED: Emergency Department
- EDWIN: Emergency Department Work INdex
- EPOC: Effective Practice and Organisation of Care
- ESI: Emergency Severity Index
- ESS: Proposed Ensemble-Based Scoring System
- eTTS: Electronically calculated Track & Trigger Score
- EWS: Early Warning Score
- GRADE: Grading of Recommendations Assessment, Development and Evaluation
- HDU: High Dependency Unit
- HR: Heart Rate
- HRV: Heart Rate Variability
- ICER: Incremental Cost-Effectiveness Ratio
- ICU: Intensive Care Unit
- IMEWS*: Irish Maternity Early Warning System
- LOC: Loss of Consciousness
- LODS: Logistic Organ Dysfunction System
- MEDS: Mortality in Emergency Department Sepsis
- MEES: Mainz Emergency Evaluation Score
- MeSH: Medical Subject Headings
- MEWS plus: Modified Early Warning Score plus
- MEWS*: Modified Early Warning Score
- MPM0 II: Mortality Probability Model II at admission
- mREMS: Modified Rapid Emergency Medicine Score
- MTS: Manchester Triage System
- NEDS: Nationwide Emergency Department Sample
- NEWS (Ireland): Irish National Early Warning Score
- NEWS: National Early Warning Score
- NEWS-L: National Early Warning Score + Lactate
- NICE: National Institute for Health and Care Excellence
- OR: Odds Ratio
- PACS: Patient Acuity Category Scale
- PARS: Patient at Risk Score
- PEDS: Prince of Wales ED Score
- PEWS: Paediatric Early Warning System
- PIRO: Predisposition, Insult/Infection, Response, and Organ dysfunction
- PSI: Patient Status Index
- QALYs: Quality Adjusted Life Years
- RAPS: Rapid Acute Physiology Score
- REMS: Rapid Emergency Medicine Score
- ROB: Risk of Bias
- ROC: Receiver Operating Characteristic
- RR: Risk Ratio
- RTS: Revised Trauma Score
- SAPS II: New Simplified Acute Physiology Score
- SCS: Simple Clinical Score
- SD: Standard Deviation
- SIRS: Systemic Inflammatory Response Syndrome
- SOFA: Sequential Organ Failure Assessment
- SOS: Sepsis in Obstetrics Score
- SSSS: Severe Sepsis and Septic Shock score
- TEWS: Triage Early Warning Score
- THERM: The Resuscitation Management score
- TRISS: Trauma – Injury Severity Score
- TTS: Track and Trigger System
- VIEWS: VitalPAC Early Warning Score
- VIEWS-L: VitalPAC Early Warning Score-Lactate

References

Goldhill DR, McNarry AF. Physiological abnormalities in early warning scores are related to mortality in adult inpatients. Br J Anaesth. 2004;92(6):882–4.

Buist M, Bernard S, Nguyen TV, Moore G, Anderson J. Association between clinically abnormal observations and subsequent in-hospital mortality: a prospective study. Resuscitation. 2004;62(2):137–41.

Schein RM, Hazday N, Pena M, Ruben BH, Sprung CL. Clinical antecedents to in-hospital cardiopulmonary arrest. Chest. 1990;98(6):1388–92.

Smith AF, Wood J. Can some in-hospital cardio-respiratory arrests be prevented? A prospective survey. Resuscitation. 1998;37(3):133–7.

Franklin C, Mathew J. Developing strategies to prevent inhospital cardiac arrest: analyzing responses of physicians and nurses in the hours before the event. Crit Care Med. 1994;22(2):244–7.

Rivers EP, Coba V, Whitmill M. Early goal-directed therapy in severe sepsis and septic shock: a contemporary review of the literature. Curr Opin Anaesthesiol. 2008;21(2):128–40.

Department of Health. National Early Warning Score: National Clinical Guideline no.1. Dublin: Department of Health; 2013.

Royal College of Physicians. National Early Warning Score (NEWS): Standardising the assessment of acute-illness severity in the NHS. Report of a working party. London: Royal College of Physicians; 2012.

National Institute for Health and Care Excellence. Acutely ill patients in hospital. Recognition of and response to acute illness in adults in hospital (CG50). London: NICE; 2007.

Mitchell IA, McKay H, Van Leuvan C, Berry R, McCutcheon C, Avard B, et al. A prospective controlled trial of the effect of a multi-faceted intervention on early recognition and intervention in deteriorating hospital patients. Resuscitation. 2010;81(6):658–66.

Agency for Healthcare Research and Quality. Rapid Response Systems USA: AHRQ, U.S. Department of Health and Human Services, USA; 2014. Available from: https://psnet.ahrq.gov/primers/primer/4/rapid-response-systems.

Smith M, Feied C. The Emergency Department as a Complex System; 1999. Available from: http://www.necsi.edu/projects/yaneer/emergencydeptcx.pdf.

Courtney DM, Neumar RW, Venkatesh AK, Kaji AH, Cairns CB, Lavonas E, et al. Unique characteristics of emergency care research: scope, populations, and infrastructure. Acad Emerg Med. 2009;16(10):990–4.

Roland D, Coats TJ. An early warning? Universal risk scoring in emergency medicine. Emerg Med J. 2011;28(4):263.

Subbe CP, Kruger M, Rutherford P, Gemmel L. Validation of a modified early warning score in medical admissions. QJM. 2001;94:521–6.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. BMJ. 2009;339:b2535. doi:10.1136/bmj.b2535.

Macaskill P, Gatsonis C, Deeks JJ, Harbord RM, Takwoingi Y. Chapter 10: Analysing and Presenting Results. Handbook for Systematic Reviews of Diagnostic Test Accuracy Version 1.0. The Cochrane Collaboration; 2010. Available from: http://srdta.cochrane.org/.

Gu M, Fu Y, Li C, Chen M, Zhang X, Xu J, et al. The value of modified early warning score in predicting early mortality of critically ill patients admitted to emergency department. Chinese Crit Care Med. 2015;27(8):687–90.

Considine J, Lucas E, Wunderlich B. The uptake of an early warning system in an Australian emergency department: a pilot study. Crit Care Resusc. 2012;14(2):135–41.

Griffiths JR, Kidney EM. Current use of early warning scores in UK emergency departments. Emerg Med J. 2012;29(1):65–6.

Australian Commission on Safety and Quality in Health Care (the Commission). Recognising and responding to clinical deterioration: survey of recognition and response systems in Australia. 2011.

Coughlan E, Deasy C, McDaid F, Geary U, Ward M, O'Sullivan R, et al. An introduction to the emergency department adult clinical escalation protocol: ED-ACE. Resuscitation. 2015;96:33.

Christensen D, Jensen NM, Maaløe R, Rudolph SS, Belhage B, Perrild H. Low compliance with a validated system for emergency department triage. Dan Med Bull. 2011;58(6):A4294.

Johnson KD, Winkelman C, Burant CJ, Dolansky M, Totten V. The factors that affect the frequency of vital sign monitoring in the emergency department. J Emerg Nurs. 2014;40(1):27–35.

Austen C, Patterson C, Poots A, Green S, Weldring T, Bell D. Using a local early warning scoring system as a model for the introduction of a national system. Acute Med. 2012;11(2):66–73.

Hudson P, Ekholm J, Johnson M, Langdon R. Early identification and management of the unstable adult patient in the emergency department. J Clin Nurs. 2015;24(21–22):3138–46.

Wilson SJ, Wong D, Clifton D, Fleming S, Way R, Pullinger R, et al. Track and trigger in an emergency department: an observational evaluation study. Emerg Med J. 2013;30(3):186–91.

Shuk-Ngor S, Chi-Wai O, Lai-Yee W, Chung JYM, Graham CA. Is the modified early warning score able to enhance clinical observation to detect deteriorating patients earlier in an Accident & Emergency Department? Australas Emerg Nurs J. 2015;18(1):24–32.

Albright CM, Ali TN, Lopes V, Rouse DJ, Anderson BL. The sepsis in obstetrics score: a model to identify risk of morbidity from sepsis in pregnancy. Am J Obstet Gynecol. 2014;211(1):39.e1–8.

Christensen D, Jensen NM, Maaløe R, Rudolph SS, Belhage B, Perrild H. Nurse-administered early warning score system can be used for emergency department triage. Dan Med Bull. 2011;58(6):A4221.

Cattermole GN, Mak SKP, Liow CHE, Ho MF, Hung KYG, Keung KM, et al. Derivation of a prognostic score for identifying critically ill patients in an emergency department resuscitation room. Resuscitation. 2009;80(9):1000–5.

Geier F, Popp S, Greve Y, Achterberg A, Glockner E, Ziegler R, et al. Severity illness scoring systems for early identification and prediction of in-hospital mortality in patients with suspected sepsis presenting to the emergency department. Wien Klin Wochenschr. 2013;125(17–18):508–15.

Olsson T, Lind L. Comparison of the rapid emergency medicine score and APACHE II in nonsurgical emergency department patients. Acad Emerg Med. 2003;10(10):1040–8.

Olsson T, Terent A, Lind L. Rapid emergency medicine score: a new prognostic tool for in-hospital mortality in nonsurgical emergency department patients. J Intern Med. 2004;255(5):579–87.

Cattermole GN, Liow ECH, Graham CA, Rainer TH. THERM: the resuscitation management score. A prognostic tool to identify critically ill patients in the emergency department. Emerg Med J. 2014;31(10):803–7.

Liu FY, Qin J, Wang RX, Fan XL, Wang J, Sun CY, et al. A prospective validation of national early warning score in emergency intensive care unit patients at Beijing. Hong Kong J Emerg Med. 2015;22(3):137–44.

Bulut M, Cebicci H, Sigirli D, Sak A, Durmus O, Top AA, et al. The comparison of modified early warning score with rapid emergency medicine score: a prospective multicentre observational cohort study on medical and surgical patients presenting to emergency department. Emerg Med J. 2014;31(6):476–81. Available from: http://emj.bmj.com/content/31/6/476.full.pdf.

Çildir E, Bulut M, Akalin H, Kocabaş E, Ocakoǧlu G, Aydin ŞA. Evaluation of the modified MEDS, MEWS score and Charlson comorbidity index in patients with community acquired sepsis in the emergency department. Intern Emerg Med. 2013;8(3):255–60.

Considine J, Rawet J, Currey J. The effect of a staged, emergency department specific rapid response system on reporting of clinical deterioration. Australas Emerg Nurs J. 2015;18(4):218–26.

Corfield AR, Lees F, Houston G, Zealley I, Dickie S, Ward K, et al. Early warning scores in sepsis: utility of a single early warning score in the emergency department? Intensive Care Med. 2012;38:S296–7.

Dundar ZD, Ergin M, Karamercan MA, Ayranci K, Colak T, Tuncar A, et al. Modified early warning score and VitalPac early warning score in geriatric patients admitted to emergency department. Eur J Emerg Med. 2015. doi:10.1097/MEJ.0000000000000274. [Epub ahead of print].

Eick C, Rizas KD, Meyer-Zürn CS, Groga-Bada P, Hamm W, Kreth F, et al. Autonomic nervous system activity as risk predictor in the medical emergency department: a prospective cohort study. Crit Care Med. 2015;43(5):1079–86.

Heitz CR, Gaillard JP, Blumstein H, Case D, Messick C, Miller CD. Performance of the maximum modified early warning score to predict the need for higher care utilization among admitted emergency department patients. J Hosp Med. 2010;5(1):E46–52.

Ho LO, Li H, Shahidah N, Koh ZX, Sultana P, Ong MEH. Poor performance of the modified early warning score for predicting mortality in critically ill patients presenting to an emergency department. World J Emerg Med. 2013;4(4):273–7.

Howell MD, Donnino MW, Talmor D, Clardy P, Ngo L, Shapiro NI. Performance of severity of illness scoring systems in emergency department patients with infection. Acad Emerg Med. 2007;14(8):709–14.

Jo S, Lee JB, Jin YH, Jeong TO, Yoon JC, Jun YK, et al. Modified early warning score with rapid lactate level in critically ill medical patients: the ViEWS-L score. Emerg Med J. 2013;30(2):123–9.

Jo S, Jeong T, Lee JB, Jin Y, Yoon J, Park B. Validation of modified early warning score using serum lactate level in community-acquired pneumonia patients. The National Early Warning Score-Lactate score. Am J Emerg Med. 2016;34(3):536–41.

Jones AE, Fitch MT, Kline JA. Operational performance of validated physiologic scoring systems for predicting in-hospital mortality among critically ill emergency department patients. Crit Care Med. 2005;33(5):974–8.

Keep JW, Messmer AS, Sladden R, Burrell N, Pinate R, Tunnicliff M, et al. National early warning score at emergency department triage may allow earlier identification of patients with severe sepsis and septic shock: a retrospective observational study. Emerg Med J. 2016;33(1):37–41.

Subbe CP, Slater A, Menon D, Gemmell L. Validation of physiological scoring systems in the accident and emergency department. Emerg Med J. 2006;23:841–5.

Vorwerk C, Loryman B, Coats TJ, Stephenson JA, Gray LD, Reddy G, et al. Prediction of mortality in adult emergency department patients with sepsis. Emerg Med J. 2009;26(4):254–8.

Williams J, Greenslade J, Chu K, Brown A, Lipman J. Severity scores in emergency department patients with presumed infection: a prospective validation study. Crit Care Med. 2016;44(3):539–47.

Alam N, Vegting IL, Houben E, van Berkel B, Vaughan L, Kramer MH, et al. Exploring the performance of the National Early Warning Score (NEWS) in a European emergency department. Resuscitation. 2015;90:111–5.

Armagan E, Yilmaz Y, Olmez OF, Simsek G, Gul CB. Predictive value of the modified early warning score in a turkish emergency department. Eur J Emerg Med. 2008;15(6):338–40.

Correia N, Rodrigues RP, Sá MC, Dias P, Lopes L, Paiva A. Improving recognition of patients at risk in a Portuguese general hospital: results from a preliminary study on the early warning score. Int J Emerg Med. 2014;7(1):22.

Graham CA, Choi KM, Ki CW, Leung YK, Leung PH, Mak P, et al. Evaluation and validation of the use of modified early warning score (MEWS) in emergency department observation ward. 2007 Society for Academic Emergency Medicine Annual Meeting. Acad Emerg Med. 2007;14:S199–200.

Hock Ong ME, Lee Ng CH, Goh K, Liu N, Koh ZX, Shahidah N, et al. Prediction of cardiac arrest in critically ill patients presenting to the emergency department using a machine learning score incorporating heart rate variability compared with the modified early warning score. Crit Care. 2012;16(3):R108.

Junhasavasdikul D, Theerawit P, Kiatboonsri S. Association between admission delay and adverse outcome of emergency medical patients. Emerg Med J. 2013;30(4):320–3.

Nguyen HB, Van Ginkel C, Batech M, Banta J, Corbett SW. Comparison of predisposition, insult/infection, response, and organ dysfunction, acute physiology and chronic health evaluation II, and mortality in emergency department sepsis in patients meeting criteria for early goal-directed therapy and the. J Crit Care. 2012;27(4):362–9.

Wang AY, Fang CC, Chen SC, Tsai SH, Kao WF. Periarrest modified early warning score (MEWS) predicts the outcome of in-hospital cardiac arrest. J Formos Med Assoc. 2016;115(2):76–82.

Wilson SJ, Wong D, Pullinger RM, Way R, Clifton DA, Tarassenko L. Analysis of a data-fusion system for continuous vital sign monitoring in an emergency department. Eur J Emerg Med. 2016;23(1):28–32.

Naidoo DK, Rangiah S, Naidoo SS. An evaluation of the triage early warning score in an urban accident and emergency department in KwaZulu-Natal. S Afr Fam Pract. 2014;56(1):69–73.

Burch VC, Tarr G, Morroni C. Modified early warning score predicts the need for hospital admission and inhospital mortality. Emerg Med J. 2008;25(10):674–8.

Challen K, Goodacre SW. Predictive scoring in non-trauma emergency patients: a scoping review. Emerg Med J. 2011;28(10):827–37.

Churpek MM, Yuen TC, Edelson DP. Risk stratification of hospitalized patients on the wards. Chest. 2013;143(6):1758–65.

Hosmer D, Lemeshow S. Applied logistic regression. 2nd ed. New York: John Wiley & Sons, Inc.; 2000.

Abbott TE, Torrance HD, Cron N, Vaid N, Emmanuel J. A single-centre cohort study of National Early Warning Score (NEWS) and near patient testing in acute medical admissions. Eur J Intern Med. 2016;35:78–82.

Nickel CH, Kellett J, Cooksley T, Bingisser R, Henriksen DP, Brabrand M. Combined use of the National Early Warning Score and D-dimer levels to predict 30-day and 365-day mortality in medical patients. Resuscitation. 2016;106:49–52.

National Heart, Lung, and Blood Institute. Study Quality Assessment Tools; 2014. Available from: http://www.nhlbi.nih.gov/health-pro/guidelines/in-develop/cardiovascular-risk-reduction/tools.

Higgins J, Green S. Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration; 2011. Available from: www.cochrane-handbook.org.

Effective Practice and Organisation of Care (EPOC). Suggested risk of bias criteria for EPOC reviews. EPOC Resources for review authors. Oslo: Norwegian Knowledge Centre for the Health Services; 2015. Available from: http://epoc.cochrane.org/epoc-specific-resources-review-authors.

Drummond MF, Jefferson TO. Guidelines for authors and peer reviewers of economic submissions to the BMJ. The BMJ economic evaluation working party. BMJ. 1996;313(7052):275–83.

Philips Z, Ginnelly L, Sculpher M, Claxton K, Golder S, Riemsma R, et al. Review of guidelines for good practice in decision-analytic modelling in health technology assessment. Health Technol Assess. 2004;8(36):iii–iv, ix–xi, 1–158.

Kansagara D, Englander H, Salanitro A, et al. Risk prediction models for hospital readmission: a systematic review. JAMA. 2011;306(15):1688–98.

Corfield AR, Lees F, Zealley I, Houston G, Dickie S, Ward K, et al. Utility of a single early warning score in patients with sepsis in the emergency department. Emerg Med J. 2014;31(6):482–7.

Acknowledgements

We would like to thank Dr. Sinead Duane (SD) (National University of Ireland, Galway) for her assistance with title/abstract screening.

Funding

This systematic review was commissioned by the National Clinical Effectiveness Committee (NCEC), Ireland. The NCEC is a Ministerial committee established in 2010 as part of the Patient Safety First Initiative and is supported by the Clinical Effectiveness Unit (CEU), Department of Health (Ireland). Invitations to tender were issued in July 2015, and a public procurement competition was held for the provision of systematic literature reviews and budget impact analysis to support the development of National Clinical Guidelines. A series of reports was subsequently commissioned by the CEU/NCEC, Department of Health; this review was the first under that contract. It supports the development of a National Clinical Guideline on Emergency Department Monitoring and Clinical Escalation Tool for Adults. The funder had no role in the design, conduct, analysis or write-up of this study.

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

Author information

Contributions

FW and DD designed the protocol with input from all authors. AC constructed and conducted the search. All authors reviewed the search. FW and PM conducted study selection and data extraction, with input from DD, FM and/or FH if necessary. FW, VS and DD conducted the risk of bias assessment. FW and NS conducted the GRADE quality assessment. PG and AR assessed and synthesised the economic literature. FW drafted the manuscript. All authors were involved in the data interpretation. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional files

Additional file 1:

Search strategies. This additional file contains a detailed description of the search strategies for the individual databases and other resources searched. (DOCX 49 kb)

Additional file 2:

Data Extraction. The elements that were extracted for each study type included in this review. (DOCX 33 kb)

Additional file 3:

Risk of bias and methodological quality assessment. A detailed description of the risk of bias/quality assessment of the included studies. (DOCX 53 kb)

Additional file 4:

Development and validation studies, additional information. In-depth information on the included studies developing and/or validating early warning systems. (DOCX 69 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Wuytack, F., Meskell, P., Conway, A. et al. The effectiveness of physiologically based early warning or track and trigger systems after triage in adult patients presenting to emergency departments: a systematic review. BMC Emerg Med 17, 38 (2017). https://doi.org/10.1186/s12873-017-0148-z

DOI: https://doi.org/10.1186/s12873-017-0148-z