Abstract

Background

Emotion recognition dysfunction has been reported in both autism spectrum disorders (ASD) and attention-deficit/hyperactivity disorder (ADHD). This suggests that emotion recognition is a cross-disorder trait that may be utilised to understand the heterogeneous psychopathology of ASD and ADHD. We aimed to identify emotion recognition subtypes and to examine their relation with quantitative and diagnostic measures of ASD and ADHD to gain further insight into disorder comorbidity and heterogeneity.

Methods

Factor mixture modelling was used on speed and accuracy measures of auditory and visual emotion recognition tasks. These were administered to children and adolescents with ASD (N = 89), comorbid ASD + ADHD (N = 64), their unaffected siblings (N = 122), ADHD (N = 111), their unaffected siblings (N = 69), and controls (N = 220). Identified classes were compared on diagnostic and quantitative symptom measures.

Results

A four-class solution was revealed, with the following emotion recognition abilities: (1) average visual, impulsive auditory; (2) average-strong visual and auditory; (3) impulsive/imprecise visual, average auditory; (4) weak visual and auditory. The weakest performing class (4) contained the highest percentage of patients (66.07%) and the lowest percentage of controls (10.09%), and scored the highest on ASD/ADHD measures. The best performing class (2) demonstrated the opposite: 48.98% patients, 15.26% controls, with relatively low scores on ASD/ADHD measures.

Conclusions

Subgroups of youths can be identified that differ both in quantitative and qualitative aspects of emotion recognition abilities. Weak emotion recognition abilities across sensory domains are linked to an increased risk for ASD as well as ADHD, although emotion recognition impairments alone are neither necessary nor sufficient parts of these disorders.

Background

Autism spectrum disorder (ASD) and attention-deficit/hyperactivity disorder (ADHD) are highly comorbid, which is likely due to shared aetiological mechanisms [1, 2]. Although many models of the relationship between ASD, ADHD, and their comorbidity have been hypothesised [2, 3], the inherent heterogeneity within both diagnostic categories complicates the study of the relationship between these disorders and their developmental trajectories [4, 5]. Overlap has also been noted in the cognitive deficits found in these disorders, including emotion recognition dysfunction. This important aspect of social cognition has been reported as being impaired in many disorders including ASD, ADHD, and disorders they are comorbid with, such as depression and oppositional defiant disorder (for a review see [6]).

For ASD, there is already a considerable body of literature regarding emotion recognition, for visual emotion recognition abilities in particular, while this area of research is relatively underdeveloped in ADHD. Nonetheless, studies of ASD and also ADHD have demonstrated visual emotion recognition impairments for specific emotions [7,8,9,10,11,12,13,14,15,16,17] and impairments for visually identifying all emotions [18,19,20,21,22,23,24,25,26,27,28,29]. However, other studies did not report impairments in recognising emotions at all [30,31,32,33]. Indeed, meta-analyses speak to the heterogeneity of visual emotion recognition performance in both ASD and ADHD [34,35,36].

For auditory emotion recognition, the literature is less advanced for both ASD and ADHD. Similar to visual emotion recognition, this literature also demonstrates inconsistent results, with auditory emotion recognition in both ASD and ADHD suggested to be comparable to controls [10, 17, 22, 24, 32, 37,38,39], worse than controls [19, 23, 24, 40,41,42], or only worse for specific emotions [12, 43,44,45].

Comparisons of emotion recognition impairments in ASD and ADHD are scarce. Bora and Pantelis [34] indirectly compared visual emotion recognition in ASD and ADHD and suggested that impairments were present in ADHD, although potentially milder than in ASD. Only a handful of studies have directly compared ASD and ADHD, occasionally including comorbid ASD + ADHD [8, 25, 40, 46,47,48]. Similar to the findings for the disorders individually, the results of these comparisons were inconsistent, with ASD and ADHD either not demonstrating significant differences in emotion recognition impairments [46, 48] or showing quantitative differences [40]. Berggren et al. [8] and Van der Meer et al. [47] found overall differences in visual emotion recognition reaction time, but not in accuracy, between ASD (+ ADHD) and ADHD. Therefore, the extent to which emotion recognition impairments are similar and/or unique in ASD and ADHD is still largely unknown due to these heterogeneous results.

Previous research has demonstrated that empirical subtyping is a valuable tool for improving our understanding of ASD and ADHD [4, 5, 49,50,51]. Techniques that explicitly utilise heterogeneity, as observed for emotion recognition abilities in ASD and ADHD, can be used to optimally investigate overlap and differences between disorders. In our previous study [48], we identified a factor structure of emotion recognition that underlies ASD and ADHD, which can serve as a useful basis to identify homogeneous subgroups. Factor mixture modelling (FMM) can be used for this and is a logical extension of factor analysis, as it combines factor analysis with latent class analysis. It therefore allows the data to be viewed from categorical, person-based perspectives and dimensional, variable-based perspectives simultaneously. This modelling technique has several advantages: it can identify homogeneous subgroups without a priori specifications; the use of class probabilities allows classes with subtle differences to be identified; goodness-of-fit statistics can be used to determine the most appropriate model; and the non-independence of related participants is not an issue because family structures can be taken into account. In this way, FMM has advantages over other clustering methods, such as hierarchical clustering and community detection [52,53,54].

Consequently, the current study aimed to investigate the heterogeneity of emotion recognition in individuals with neurodevelopmental disorders ASD and ADHD, and its potential as a transdiagnostic phenotype by identifying emotion recognition subtypes with FMM. The emotion recognition data was reduced to underlying, continuously distributed factors that drive performance on emotion recognition tasks (as identified in Waddington et al. [48]). These factors were then used to create homogeneous subgroups of participants that have very similar emotion recognition profiles. By including patients with ASD, ADHD, and ASD + ADHD, unaffected siblings of ASD and ADHD patients, and healthy controls, we covered the entire spectrum from low risk (controls) to moderate risk (unaffected siblings) and high risk (patients) for emotion recognition dysfunction. The FMM-based emotion recognition subgroups were compared on their diagnostic (ASD, ADHD) and quantitative symptom profiles (autism symptom severity, inattention, hyperactivity/impulsivity, oppositional behaviour, anxiety) to examine how emotion recognition dysfunction relates to (cross-disorder) symptoms. To our knowledge, this is the first study to directly compare pure and comorbid ASD and ADHD on emotion recognition deficits and the role of ASD and ADHD behavioural symptoms, whilst accounting for heterogeneity.

Methods

Participants

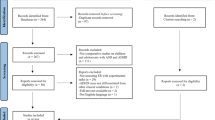

The data used in this study originally came from two cohorts: the NeuroIMAGE study, which is a follow-up (2009–2012) of the Dutch part of the International Multicenter ADHD Genetics (IMAGE) study performed between 2003 and 2006 [55,56,57,58], and the Biological Origins of Autism (BOA) study [59]. In these cohort studies, the recruited patient families were included if (1) they had one child with a clinical diagnosis of ADHD (NeuroIMAGE) or ASD (BOA) and (2) at least one biological sibling (regardless of possible clinical diagnosis) was willing to participate. Healthy control families had at least one child, with no formal or suspected ADHD or ASD in the participant or any first-degree relatives. All participants were of European Caucasian descent. In both cohorts, the exclusion criteria were an IQ < 70, a diagnosis of epilepsy, brain disorders, and known genetic disorders (e.g. Down syndrome or fragile X syndrome), with an additional criterion of a clinical diagnosis of autistic disorder or Asperger disorder in the NeuroIMAGE cohort. For the current study, a subsample of participants was selected from both cohorts, which was matched on mean chronological age (M = 12.6 years, SD = 2.4, age range 7–18 years; see Additional file 1: Figure S1 and Table S4 for details). The number of unaffected siblings of comorbid ASD + ADHD patients was relatively small, and consequently these unaffected siblings were grouped with the ASD-unaffected siblings. In total, 89 participants with ASD (hereafter referred to as ASD-only patients), 64 participants with comorbid ASD + ADHD (hereafter referred to as ASD + ADHD patients), 122 of their unaffected siblings, 111 patients with ADHD (hereafter referred to as ADHD-only patients), 69 of their unaffected siblings, and 220 healthy controls were included. The BOA data used in this study partly overlaps (79%) with a previous study [12].

Diagnostic assessment

All participants were phenotyped for ASD and ADHD using validated and standardised questionnaires and diagnostic interviews. Briefly, children already clinically diagnosed with ASD and/or ADHD, their siblings, and the control children were screened for the presence of ASD and ADHD symptoms using the parent-reported Social Communication Questionnaire (SCQ) [60] and the parent- and teacher-reported Conners Rating Scales—Revised (CPRS and CTRS respectively) [61]. Raw scores of ≥ 10 on the SCQ total score and T scores ≥ 63 on the Conners DSM-IV inattention, hyperactivity-impulsivity, or combined scales were considered as potential clinical cases.

All youth scoring above cutoff on any of the screening questionnaires underwent full clinical ADHD assessment using the Parental Account of Childhood Symptoms ADHD subversion (PACS) for ADHD (BOA cohort) [62] or the Schedule for Affective Disorders and Schizophrenia for School-Age Children—Present and Lifetime Version (K-SADS; in NeuroIMAGE) [63]. Clinical assessment for ASD was performed using the Autism Diagnostic Interview-Revised (ADI—R) structured interview for ASD (BOA cohort) [64]. Control youth were required to obtain non-clinical scores (i.e. a raw score < 10 on the SCQ and T score < 63 on both CPRS and CTRS) to qualify for this study (further details in Additional file 1).

Cognitive measures

Emotion recognition

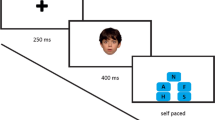

Speed (mean reaction time) and accuracy (percentage of errors) of visual and auditory emotion recognition were measured using the Identification of Facial Emotions (IFE) task and the Affective Prosody (AP) task from the battery of the Amsterdam Neuropsychological Tasks (ANT) [65]. In the IFE task, participants viewed individual photos of facial expressions and indicated if they saw the target emotion (happy, fearful, or angry) in these photos (Additional file 1: Figure S1). In the AP task, participants listened to sentences of neutral content that differed in prosody. The participants had to identify the emotion (happy, fearful, sad, or angry) of the voice they heard. Both tasks are fully described elsewhere [12].

Intelligence

An estimate of the Full-Scale Intelligence Quotient (FSIQ) was derived from two subtests (Vocabulary and Block Design) of the Wechsler Intelligence Scale for Children version III (WISC-III) [66] for participants younger than 16 years or the Wechsler Adult Intelligence Scale version III (WAIS-III) [67] for participants 16 years and older.

Procedure

The tasks described were part of broader assessment batteries used in the BOA and NeuroIMAGE cohorts. Testing was conducted in quiet rooms with minimal distractions. Participants were asked to withhold use of psychoactive drugs for at least 24 h before measurement. During the testing day, participants were motivated with short breaks, and at the end of the day, the children were rewarded. Both studies were approved by the local medical ethics board. Written informed consent was obtained from all participants and their parents (parents signed informed consent for participants under 12 years of age).

Analyses

SPSS version 22 was used for the analysis of the data. Less than 5% of the data was missing. Data was imputed for each cohort separately using SPSS based on the data from the IFE and AP tasks as well as gender, age, IQ, and family and diagnostic status. The measures for both cohorts together were normalised and standardised using Van der Waerden transformation, and the IQ scoring was reversed. Consequently, all of the variables had scores on the same z scale, with lower scores implying better performance (fewer errors, faster reaction times, and a higher IQ). Standardised age-regressed residuals were calculated.
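The normalisation step described above can be illustrated with a minimal Python sketch. It is not the SPSS procedure used in the study: it assumes the common definition of Van der Waerden scores, z = Φ⁻¹(rank / (n + 1)) with tied values sharing their mean rank, and it assumes a simple linear regression on age for the residuals. All function names and the example values are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def average_ranks(values):
    """Return 1-based ranks; tied values receive the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the one at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        tied_rank = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = tied_rank
        i = j + 1
    return ranks


def van_der_waerden(values):
    """Map raw scores to normal scores z = Phi^-1(rank / (n + 1))."""
    n = len(values)
    inv_cdf = NormalDist().inv_cdf
    return [inv_cdf(r / (n + 1)) for r in average_ranks(values)]


def standardised_age_residuals(scores, ages):
    """Residuals of a least-squares line of score on age, scaled to SD = 1.

    Assumption: a single linear age term; the study does not state the model.
    """
    mean_age, mean_score = mean(ages), mean(scores)
    sxx = sum((a - mean_age) ** 2 for a in ages)
    sxy = sum((a - mean_age) * (s - mean_score) for a, s in zip(ages, scores))
    slope = sxy / sxx
    intercept = mean_score - slope * mean_age
    residuals = [s - (intercept + slope * a) for a, s in zip(ages, scores)]
    sd = stdev(residuals)
    return [r / sd for r in residuals]


# Hypothetical example: reaction times (ms), IQ scores, and ages (years).
reaction_times = [820, 640, 910, 700, 655]
iq_scores = [98, 112, 87, 105, 120]
ages = [9, 14, 8, 12, 16]

rt_z = van_der_waerden(reaction_times)
# Reverse IQ so that, as for errors and reaction times, lower means better.
iq_z_reversed = [-z for z in van_der_waerden(iq_scores)]
rt_age_adjusted = standardised_age_residuals(rt_z, ages)
```

Negating the IQ scores after transformation puts every variable on the same direction of scale, which is what allows lower values to be read uniformly as better performance.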

The model-fitting analyses were performed using Mplus version 6 [68]. The stepwise FMM strategy suggested by [52] was used on the combined cohorts, using the models derived from less demanding techniques (i.e. confirmatory factor analysis (CFA) and latent class analysis (LCA)) as input for the more complex FMM estimation process. The following four factors underlying the 14 emotion recognition variables from the previous factor analysis of this sample [48] were used: visual speed, visual accuracy, auditory speed, and auditory accuracy. As the next step, LCA was conducted on the four factors to explore how many classes could be distinguished. Subsequently, we conducted the FMM, using the models derived from the CFA conducted in Waddington et al. [48] and the LCA to guide model fitting. For all stages of the LCA and FMM, the model fit was assessed using the Akaike information criterion (AIC), the Bayesian information criterion (BIC), the Lo-Mendell-Rubin test, and entropy. Details of the criteria for adequate fit and the results of the models can be found in Additional file 1: Tables S1–S3.
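For readers unfamiliar with these fit indices, the sketch below shows how AIC, BIC, and relative entropy are conventionally computed from a fitted mixture model. It uses the standard textbook definitions and is only an illustration: the study's own thresholds are given in Additional file 1, the Lo-Mendell-Rubin test is not reproduced, and the function names and input values are hypothetical.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike information criterion; lower values indicate better fit."""
    return 2 * n_params - 2 * log_likelihood


def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion; penalises parameters more as n grows."""
    return n_params * math.log(n_obs) - 2 * log_likelihood


def relative_entropy(posteriors):
    """Classification certainty in [0, 1]; 1 means perfectly separated classes.

    posteriors: one row per participant, each row holding that participant's
    posterior probability of belonging to each latent class.
    """
    n = len(posteriors)
    k = len(posteriors[0])
    uncertainty = sum(
        -p * math.log(p) for row in posteriors for p in row if p > 0
    )
    return 1 - uncertainty / (n * math.log(k))


# Hypothetical comparison of a 3-class and a 4-class model on 675 participants.
candidates = {
    "3 classes": {"loglik": -5210.0, "params": 26},
    "4 classes": {"loglik": -5150.0, "params": 31},
}
for name, fit in candidates.items():
    print(name, aic(fit["loglik"], fit["params"]),
          round(bic(fit["loglik"], fit["params"], 675), 1))
```

Because BIC penalises extra parameters more heavily than AIC at this sample size, the two indices can disagree on the number of classes, which is one reason several criteria are usually considered together.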

Mixed models were used to assess the differences between the classes on scores for the emotion recognition factors, speed-accuracy trade-offs in the visual and auditory domains, as well as gender, age, IQ, and phenotypic measures. Additionally, chi-square tests were performed to assess differences in diagnostic proportions between the classes. Multiple comparisons were corrected according to the false discovery rate (FDR) controlling procedure, with the q value set at .05. Post hoc analyses comparing patients and controls separately across classes were conducted to assess if different emotion recognition subtypes within diagnostic groups were related to symptoms. A comprehensive overview of the results of the mixed models and T tests can be found in the Additional file 1: Tables S5–S6.
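The FDR correction mentioned above can be sketched as follows. The text does not name the specific procedure, so this example assumes the widely used Benjamini-Hochberg step-up method; the function name and the p values are hypothetical.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return (adjusted p values, rejection flags) in the input order.

    Assumption: Benjamini-Hochberg step-up procedure with FDR level q.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p value to the smallest so that adjusted values
    # never decrease with rank (the step-up monotonicity constraint).
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    rejected = [a <= q for a in adjusted]
    return adjusted, rejected


# Hypothetical raw p values from four class comparisons.
raw_p = [0.01, 0.04, 0.03, 0.5]
p_fdr, significant = benjamini_hochberg(raw_p, q=0.05)
```

Under this assumption, the adjusted values play the role of the pFDR values reported in the Results, and a comparison counts as significant when its adjusted value is at or below the chosen q of .05.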

Results

Utilising the four-factor solution (visual speed, visual accuracy, auditory speed, and auditory accuracy) identified in Waddington et al. [48] for this sample, four classes were suggested in the LCA. A four-factor four-class solution was therefore used as an initial criterion to guide the fitting of the FMM, which had an optimal fit for the data (see Additional file 1 for details). The classes were labelled according to the characteristics of their emotion recognition profiles (Fig. 1, Additional file 1: Tables S4–S6). Class 4 (n = 149) was the worst-performing class, demonstrating weaknesses on both visual and auditory emotion recognition. This class was significantly slower and less accurate than the other classes across visual and auditory emotion recognition, with the exception of class 3 on visual accuracy. Comparing modalities within class 4, participants were significantly faster and more accurate at visual than auditory emotion recognition (visual versus auditory speed: pFDR < .001, d = 0.77; visual versus auditory accuracy: pFDR = .004, d = 0.48). Class 2 (n = 303) was an average-performing class, having average to strong visual and auditory emotion recognition abilities, relative to the other classes. This class was faster and more accurate than class 4 in both visual and auditory emotion recognition. Class 2 also showed signs of a speed-accuracy trade-off, favouring accuracy over speed (visual speed versus visual accuracy: pFDR < .001, d = 0.98; auditory speed versus auditory accuracy: pFDR < .001, d = 2.00). Classes 1 and 3 can be referred to as an impulsive auditory, average visual emotion recognition class and an impulsive and imprecise visual, average auditory emotion recognition class, respectively. 
Class 1 (n = 173) showed significant speed-accuracy trade-offs, trading both visual and auditory accuracy in favour of speed, most strongly for auditory recognition (visual speed versus visual accuracy: pFDR = .001, d = 0.51; auditory speed versus auditory accuracy: pFDR < .001, d = 5.84). Class 3 had a greater speed-accuracy trade-off in visual emotion recognition (pFDR < .001, d = 13.87) than in auditory emotion recognition (pFDR = .003, d = 0.88). The inclusion of age, sex, and IQ covariates did not attenuate these effects.

Fig. 1 Emotion recognition classes identified in the current study across patients with pure and comorbid ASD and ADHD, their unaffected siblings, and controls. Each line represents the emotion recognition profile (mean factor scores for speed and accuracy on the IFE and AP tasks, ± 1 S.E.) for each class. Lower scores represent faster reaction times and fewer errors. An age covariate was applied. Lower panel: pie charts represent the within-class weighted proportions of each diagnostic group. For each class, diagnostic groups were weighted using a weighting coefficient of % within diagnostic group / % within class. Right panel: pie charts represent the proportion of each diagnostic group across each class.

The four identified classes differed in IQ (F(3, 671) = 15.17, pFDR < .001, d = 0.24) and sex (F(3, 671) = 5.33, pFDR = .002, d = − 0.30), but not in age (F(3, 671) = 1.83, pFDR = .14, d = − 0.05). Class 4 had a significantly lower IQ than the other classes (class 4 versus class 1: pFDR < .001, d = 0.43; class 4 versus class 2: pFDR < .001, d = 0.40; class 4 versus class 3: pFDR = .006, d = 0.45). The proportion of males did not differ significantly between the classes (see Additional file 1: Table S7).

Sex and IQ affected the individual factors differentially. Sex and IQ only affected accuracy factors (visual—sex: F(1, 675) = 7.27, pFDR = .028, d = 0.02; IQ: F(1, 675) = 5.14, pFDR = .048, d = 0.02; auditory—sex: F(1, 675) = 9.20, pFDR = .006, d = − 0.21; IQ: F(1, 675) = 28.19, pFDR = .006, d = − 0.36), but not visual or auditory speed.

Behavioural and diagnostic profiles of the classes

As shown in Fig. 2 and Additional file 1: Table S7, when testing dimensional measures of ASD- and ADHD-related behaviour, class 1 had the lowest symptom levels for ASD, ADHD, and related behaviours, whereas class 4 had the highest. These classes differed significantly in all symptom domains (pFDRs < .001–.017, d’s = 0.27–0.50) with the exception of oppositional behaviour (p = .07). Similarly, class 4 also differed significantly from class 2 on almost all measures of ASD, ADHD, and comorbid symptoms (pFDRs < .003–.024, d’s = 0.23–0.30) except for oppositional symptoms (p = .07) and fear of changes (p = .08). Classes 1 and 2 differed in several ASD symptoms. Class 3 did not differ significantly from any of the classes, potentially due to its small sample size.

Fig. 2 Class profiles of IQ and ASD, ADHD, and comorbid symptoms. Each bar represents the mean z score (± 1 S.E.) for ADHD symptoms from Conners’ Parents Rating Scale (CPRS), Conners’ Teachers Ratings Scale (CTRS), and the Children’s Social Behaviour Questionnaire (CSBQ). IQ z scores were reversed (higher IQ z score = lower IQ). One asterisk indicates the significant difference between classes 1 and 4. Two asterisks indicate the significant differences between classes 1 and 4 or classes 2 and 4. Three asterisks indicate the significant differences between class 4 and classes 1, 2, and 3.

The pattern of results did not change when age, sex, and IQ were accounted for, but the significance of the majority of these class differences was attenuated (Additional file 1: Table S7). Class 1–class 4 differences in two CSBQ items remained significant (not tuned in: pFDR = .002, d = 0.34; tendency to withdraw: pFDR = .002, d = 0.35).

Diagnostic profiles of members of the classes are given in Fig. 1, which provides weighted percentages to account for differences in the numbers of participants in the diagnostic groups. Pure and comorbid ASD and ADHD patients, their unaffected siblings, and controls were all present in every class. The average to strong performing classes (classes 1 and 2) consisted of 28.3% and 15.3% controls, respectively, and class 3 had 10.9% controls; even the weakest performing class (class 4) contained 10.1% controls. With regard to patients, class 4 consisted of 25.1% ASD + ADHD patients, 17.2% ASD-only patients, and 23.8% ADHD-only patients. Class 1 contained 11.4% ASD + ADHD patients, 9.0% ASD-only patients, and 15.2% ADHD-only patients. Similarly, class 2 also had substantial proportions of patients, with 16.5% ASD + ADHD patients, 19.3% ASD-only patients, and 13.2% ADHD-only patients. Nonetheless, comparison of within-class proportions showed that only class 1 had significantly more controls than ASD + ADHD patients (χ²(1) = 7.41, pFDR = .045, d = 0.68) and ASD-only patients (χ²(1) = 9.76, pFDR = .03, d = 0.76) after FDR correction (Additional file 1: Table S8).
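The idea behind weighting percentages for unequal group sizes can be illustrated with a small Python sketch. This is one plausible reading of the weighting and not necessarily the exact computation behind Fig. 1: it assumes that, within a class, each diagnostic group is represented by the share of that group falling into the class, and that these shares are then rescaled to sum to 1. The function name and all counts are hypothetical toy values, not study data.

```python
def weighted_class_proportions(class_counts, group_sizes):
    """Within-class proportions corrected for unequal diagnostic group sizes.

    class_counts: participants from each group assigned to one class.
    group_sizes:  total participants in each group across all classes.
    Assumption: weight = within-group rate, renormalised within the class.
    """
    rates = {g: class_counts[g] / group_sizes[g] for g in class_counts}
    total = sum(rates.values())
    return {g: rate / total for g, rate in rates.items()}


# Toy example: a class holding 20 patients and 20 controls looks balanced,
# but patients are a much smaller group overall.
counts_in_class = {"patients": 20, "controls": 20}
sizes = {"patients": 50, "controls": 200}

raw = {g: n / sum(counts_in_class.values()) for g, n in counts_in_class.items()}
weighted = weighted_class_proportions(counts_in_class, sizes)
```

In this toy case the raw within-class split is 50/50, whereas the weighted split reflects that 40% of patients but only 10% of controls fall into the class, so a large control group no longer dominates the picture simply by its size.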

How do patients with and without emotion recognition impairments differ?

To examine how patients with and without emotion recognition impairments diverged in their symptoms, post hoc analyses comparing the patients from class 4 with those from the best performing classes 1 and 2 were performed. Again, in these analyses, the phenotypic profiles of classes were compared using mixed models and corrected for age and familial effects. ASD + ADHD, ASD-only, and ADHD-only patients in classes 4, 1, and 2 did not significantly differ in ASD- or ADHD-related symptom scores (see Additional file 1: Figure S2). These results did not change when age, sex, and IQ were accounted for.

Discussion

In this study, we aimed to investigate emotion recognition as a transdiagnostic phenotype in order to understand the heterogeneity and comorbidity of ADHD and ASD. We did so by identifying emotion recognition subtypes in pure and comorbid ASD and ADHD patients, their unaffected siblings, and healthy controls. This was done using FMM, which is powerful in integrating variable-based data reduction (factor analysis) with a person-based identification of qualitatively different subtypes (latent class analysis). We explored whether the identified subtypes provide insight into the heterogeneity and comorbidity of ASD and ADHD. Analyses revealed four groups of participants: an average to strong performing class with reasonably fast and accurate performance across visual and auditory domains (class 2), a class with impulsive auditory recognition yet reasonably fast and accurate visual emotion recognition (class 1), a poor performing class with slow and inaccurate performance across both domains (class 4), and an intermediate class with a large speed-accuracy trade-off in the visual domain (class 3). None of the identified classes or related emotion recognition impairments in either modality were particularly linked to ASD or ADHD. All classes contained patients from all three diagnostic categories (ASD-only, ADHD-only, and ASD + ADHD) as well as their unaffected siblings and controls. Emotion recognition impairments were similarly frequent in ASD, ADHD, and ASD + ADHD, with 17.2% of ASD patients, 23.8% of ADHD patients, and 25.1% of patients with comorbid ASD and ADHD falling into the weakest performing class. At the level of quantitative measures of disorder-related behaviour, the relationship with class status was comparable for the two ADHD symptom domains (inattention and hyperactivity/impulsivity), indicating that emotion recognition problems were linked similarly to both domains.
There was a clear enrichment of ASD- and ADHD-related symptoms seen in the worst-performing class 4. However, post hoc tests demonstrated that although these symptoms are associated with emotion recognition impairments, there is certainly no one-to-one relationship.

The poorest performing emotion recognition class (class 4) differed from the two good/average performing classes (classes 1 and 2) in quantitative measures, with higher levels of ASD, ADHD, and comorbid symptoms being present in class 4. Previous studies [12, 26] suggested that behavioural symptoms, such as inattention, are important contributors to emotion recognition dysfunction. Our results partially support this notion, given that higher levels of ASD, ADHD, and comorbid symptoms were present in the weakest performing class. Yet, the pure and comorbid ASD and ADHD patients in the strong to average performing classes (classes 1 and 2) did not differ significantly in quantitative measures of ASD and ADHD symptoms compared to the weakest class (class 4), indicating that the relationship between ASD and ADHD behavioural symptoms and emotion recognition dysfunction is not 1:1. Furthermore, our findings speak to the presence of cognitive heterogeneity in both ASD and ADHD, which corroborates previous findings of multiple developmental pathways in ADHD [4, 50] and ASD [5, 69]. Moreover, none of the identified emotion recognition subtypes were specific to ASD or ADHD, neither in symptoms nor in diagnosis. This lack of specificity, combined with observed associations with comorbid symptoms, suggests that emotion recognition dysfunction is a trait that can be utilised to understand the co-occurrence of other disorders that are frequently comorbid with ASD and ADHD (e.g. bipolar disorder, anxiety disorders, conduct disorder) and that are also marked by emotion recognition dysfunction [6].

Despite the commonalities in findings for emotion recognition problems in relation to both quantitative ASD and ADHD symptom measures as well as diagnostic/categorical measures, it is possible that the mechanisms underlying poor emotion recognition in ASD and ADHD differ. For example, individuals with ASD allocated to the weakest emotion recognition class may have a primary emotion recognition deficit, whereas individuals with ADHD allocated to the same class might have more general information processing difficulties, with emotion recognition problems being secondary to that. To address this issue, mechanistic measures are needed, foremost functional brain activation whilst performing emotion recognition tasks. Unfortunately, in the current study, these data were not available. However, even if the underlying mechanisms contributing to poor emotion recognition performance may be partly dissimilar in individuals with ASD and ADHD, the end result is that, on a performance level, a large proportion of individuals with ADHD perform at the lowest level as measured by the tasks administered in this study. This likely translates to social difficulties in daily life, where a poor registration of emotional expressions of other people (whether due to primary emotion recognition difficulties or secondary general information processing difficulties) is likely to interfere with social interaction. In conclusion, although functional brain imaging measures are needed to shed light on the issue of (non-) overlapping mechanisms underlying poor emotion recognition in individuals with ASD and ADHD, on a performance level, many similarities are observed, with poor emotion recognition abilities present in a substantial proportion of individuals with ASD and ADHD alike.

This study demonstrates the benefits of utilising a model-based approach to gain insight into the comorbidity of disorders, in this case, ASD and ADHD. Our model suggests that emotion recognition dysfunction may not be a feature that distinguishes between ASD and ADHD. Previous literature has shown emotion recognition to be a plausible endophenotype [12, 48] and has suggested that the development of social cognition is functionally dependent on the maturation of cognitive skills, and possibly vice versa [47, 70, 71]. Therefore, our findings, combined with current literature, could indicate support for models like the step-endophenotype framework [5]. This model posits that below a certain threshold, an individual’s risk is low; yet, once a threshold has been reached, the risk markedly increases. In this case, it may be hypothesised that, similar to cognitive dysfunction, emotion recognition dysfunction only increases the likelihood of neurodevelopmental symptoms and disorders in combination with other risk factors and/or after a certain threshold has been exceeded. However, much remains to be investigated regarding the relationship between emotion recognition, these neurodevelopmental disorders and their symptoms, and other risk factors. The identified subtypes may differ in neural correlates, genetics, and their developmental trajectories, which may affect their response to treatment. Studies including longitudinal designs are required to further clarify the role of emotion recognition in developmental psychopathology.

This study has several strengths, including the use of both categorical and dimensional measures of psychopathology and analyses, the use of validated emotion recognition paradigms, and the inclusion of a large sample containing patients, unaffected siblings, and controls. This has enabled comparisons of both quantitative and qualitative differences in emotion recognition impairments across ASD and ADHD and the assessment of the heterogeneity of such impairments across the entire breadth of the symptom distribution. However, even with our large sample size, the least prevalent class (class 3) reached only a limited size. For this class, power was limited to investigate links to diagnostic status and quantitative behavioural measures. This study was also limited by the type of emotion recognition tasks used. Although these tasks have been validated [65], they assess visual and auditory emotion recognition separately. Future studies of simultaneous visual and auditory emotion recognition could provide more insight into multimodal emotion processing abilities. Another potential limitation of our study was that all of our participants had an IQ higher than 70, limiting the generalisability of the results to individuals with an IQ below 70. IQ also significantly differed between subtypes, with the average-strong performing classes (classes 1 and 2) having higher IQs. Furthermore, IQ was associated with the accuracy of emotion recognition, corroborating reports that IQ and emotion recognition abilities are intertwined [72, 73]. However, controlling for IQ differences did not alter the findings, indicating that class differences were not driven by overall cognitive performance differences. The male to female ratio was more balanced in the group of individuals with ADHD than it was in the group of individuals with ASD. However, since results were analysed with gender-corrected means, it is unlikely that this has influenced the outcome of the clustering analysis. 
Although we used FMM because of its many advantages, there are other methodologies that can investigate the heterogeneity of these disorders. For example, Lombardo et al. [74] used hierarchical clustering of participants’ patterns of response on the Reading the Mind in the Eyes Test (RMET) to understand the heterogeneity of mentalizing in ASD and typically developing participants. That study found response patterns specific to ASD subgroups and other response patterns specific to typically developing participants. Though this was not the aim of the current study, elucidating diagnosis-specific response patterns is potentially informative about factors that differentiate diagnoses. As such, the advantages and disadvantages of each modelling technique should be considered in relation to the aims of a study.

The findings of this study have clear clinical implications. Emotion recognition dysfunction cannot be used either to confirm or disconfirm the presence of ASD and/or ADHD. However, this does not mean that emotion recognition impairments should not be assessed and treated when necessary in both ASD and ADHD. As demonstrated, emotion recognition impairments are at least as important in ADHD as they are in ASD, particularly as these impairments show links to cognitive functioning and are likely to contribute to emotion dysregulation, both of which can be identified in ASD and ADHD. Therefore, for some individuals with ASD and/or ADHD, the inclusion of emotion recognition skills training could be highly beneficial.

Conclusions

This study identified emotion recognition subtypes in patients with pure and comorbid ASD and ADHD, their unaffected siblings, and healthy controls using FMM. Emotion recognition dysfunction behaves as a risk factor for developing ASD and/or ADHD, although heterogeneity of impairments across clinical groups and controls clearly shows that there is no 1:1 relationship with ASD and/or ADHD symptoms. The observed classes with differential emotion recognition profiles warrant further investigation, as they could differ in neural correlates, genetics, and prognosis. We conclude that emotion recognition dysfunction should be considered when assessing and treating both ASD and ADHD, demonstrating the need to broadly assess an individual’s strengths and weaknesses to provide optimal care.

Abbreviations

- ADHD: Attention-deficit/hyperactivity disorder
- ADI-R: Autism Diagnostic Interview-Revised
- AIC: Akaike information criterion
- ANT: Amsterdam Neuropsychological Tasks
- AP: Affective Prosody task
- ASD + ADHD: Comorbid autism spectrum disorders and attention-deficit/hyperactivity disorder
- ASD: Autism spectrum disorders
- BIC: Bayesian information criterion
- BOA: Biological Origins of Autism study
- CFA: Confirmatory factor analysis
- CPRS: Conners Parents Rating Scale-Revised
- CTRS: Conners Teachers Rating Scale-Revised
- FDR: False discovery rate
- FMM: Factor mixture modelling
- FSIQ: Full Scale Intelligence Quotient
- IFE: Identification of Emotional Expressions task
- IMAGE: International Multicenter ADHD Genetics study
- IQ: Intelligence quotient
- K-SADS: Schedule for Affective Disorders and Schizophrenia for School-Age Children (Present and Lifetime version)
- LCA: Latent class analysis
- PACS: Parental Account of Childhood Symptoms
- pFDR: FDR-corrected p value (q value)
- RMET: Reading the Mind in the Eyes Test
- SCQ: Social Communication Questionnaire
- WAIS-III: Wechsler Adult Intelligence Scale III
- WISC-III: Wechsler Intelligence Scale for Children version III

References

Pinto R, Rijsdijk F, Ronald A, Asherson P, Kuntsi J. The genetic overlap of attention-deficit/hyperactivity disorder and autistic-like traits: an investigation of individual symptom scales and cognitive markers. J Abnorm Child Psychol. 2016;44:335–45.

Rommelse NNJ, Geurts HM, Franke B, Buitelaar JK, Hartman CA. A review on cognitive and brain endophenotypes that may be common in autism spectrum disorder and attention-deficit/hyperactivity disorder and facilitate the search for pleiotropic genes. Neurosci Biobehav Rev. 2011;35:1363–96.

Banaschewski T, Hollis C, Oosterlaan J, Roeyers H, Rubia K, Willcutt E, Taylor E. Towards an understanding of unique and shared pathways in the psychopathophysiology of ADHD. Dev Sci. 2005;8:132–40.

Fair DA, Bathula D, Nikolas MA, Nigg JT. Distinct neuropsychological subgroups in typically developing youth inform heterogeneity in children with ADHD. Proc Natl Acad Sci U S A. 2012;109:6769–74.

Rommelse NN, van der Meer JM, Hartman CA, Buitelaar JK. Cognitive profiling useful for unraveling cross-disorder mechanisms support for a step-function endophenotype model. Clin Psychol Sci. 2016;4:957–70.

Collin L, Bindra J, Raju M, Gillberg C, Minnis H. Facial emotion recognition in child psychiatry: a systematic review. Res Dev Disabil. 2013;34:1505–20.

Aspan N, Bozsik C, Gadoros J, Nagy P, Inantsy-Pap J, Vida P, Halasz J. Emotion recognition pattern in adolescent boys with attention-deficit/hyperactivity disorder. Biomed Res Int. 2014;2014:1–8.

Berggren S, Engström A-C, Bölte S. Facial affect recognition in autism, ADHD and typical development. Cogn Neuropsychiatry. 2016;21:213–27.

Boraston Z, Blakemore SJ, Chilvers R, Skuse D. Impaired sadness recognition is linked to social interaction deficit in autism. Neuropsychologia. 2007;45:1501–10.

Jones CR, Pickles A, Falcaro M, Marsden AJ, Happé F, Scott SK, Sauter D, Tregay J, Phillips RJ, Baird G. A multimodal approach to emotion recognition ability in autism spectrum disorders. J Child Psychol Psychiatry. 2011;52:275–85.

Losh M, Adolphs R, Poe MD, Couture S, Penn D, Baranek GT, Piven J. Neuropsychological profile of autism and the broad autism phenotype. Arch Gen Psychiatry. 2009;66:518–26.

Oerlemans AM, van der Meer JMJ, van Steijn DJ, de Ruiter SW, de Bruijn YGE, de Sonneville LMJ, Buitelaar JK, Rommelse NNJ. Recognition of facial emotion and affective prosody in children with ASD (plus ADHD) and their unaffected siblings. Eur Child Adolesc Psychiatry. 2014;23:257–71.

Rinke L, Candrian G, Loher S, Blunck A, Mueller A, Jancke L. Facial emotion recognition deficits in children with and without attention deficit hyperactivity disorder: a behavioural and neuropsychological approach. Neuroreport. 2017;28:917–21.

Sucksmith E, Allison C, Baron-Cohen S, Chakrabarti B, Hoekstra R. Empathy and emotion recognition in people with autism, first-degree relatives, and controls. Neuropsychologia. 2013;51:98–105.

Sjöwall D, Roth L, Lindqvist S, Thorell LB. Multiple deficits in ADHD: executive dysfunction, delay aversion, reaction time variability, and emotional deficits. J Child Psychol Psychiatry. 2013;54:619–27.

Wallace GL, Case LK, Harms MB, Silvers JA, Kenworthy L, Martin A. Diminished sensitivity to sad facial expressions in high functioning autism spectrum disorders is associated with symptomatology and adaptive functioning. J Autism Dev Disord. 2011;41:1475–86.

Xavier J, Vignaud V, Ruggiero R, Bodeau N, Cohen D, Chaby L. A multidimensional approach to the study of emotion recognition in autism spectrum disorders. Front Psychol. 2015;6:1954.

Brosnan M, Johnson H, Grawmeyer B, Chapman E, Benton L. Emotion recognition in animated compared to human stimuli in adolescents with autism spectrum disorder. J Autism Dev Disord. 2015;45:1785–96.

Corbett B, Glidden H. Processing affective stimuli in children with attention-deficit hyperactivity disorder. Child Neuropsychol. 2000;6:144–55.

Da Fonseca D, Seguier V, Santos A, Poinso F, Deruelle C. Emotion understanding in children with ADHD. Child Psychiatry Hum Dev. 2009;40:111–21.

Dan O, Raz S. Response patterns to emotional faces among adolescents diagnosed with ADHD. J Atten Disord. 2015;2015:1–8.

Doyle-Thomas K, Goldberg J, Szatmari P, Hall G. Neurofunctional underpinnings of audiovisual emotion processing in teens with autism spectrum disorders. Front Psych. 2013;4:48.

Lindner JL, Rosén LA. Decoding of emotion through facial expression, prosody and verbal content in children and adolescents with Asperger’s syndrome. J Autism Dev Disord. 2006;36:769–77.

Mazefsky CA, Oswald DP. Emotion perception in Asperger’s syndrome and high-functioning autism: the importance of diagnostic criteria and cue intensity. J Autism Dev Disord. 2007;37:1086–95.

Sinzig J, Morsch D, Lehmkuhl G. Do hyperactivity, impulsivity and inattention have an impact on the ability of facial affect recognition in children with autism and ADHD? Eur Child Adolesc Psychiatry. 2008;17:63–72.

Rump KM, Giovannelli JL, Minshew NJ, Strauss MS. The development of emotion recognition in individuals with autism. Child Dev. 2009;80:1434–47.

Tehrani-Doost M, Noorazar G, Shahrivar Z, Banaraki AK, Beigi PF, Noorian N. Is emotion recognition related to core symptoms of childhood ADHD? J Can Acad Child Adolesc Psychiatry. 2017;26:31.

Wong N, Beidel DC, Sarver DE, Sims V. Facial emotion recognition in children with high functioning autism and children with social phobia. Child Psychiatry Hum Dev. 2012;43:775–94.

Yuill N, Lyon J. Selective difficulty in recognising facial expressions of emotion in boys with ADHD. Eur Child Adolesc Psychiatry. 2007;16:398–404.

Buitelaar JK, Van der Wees M, Swaab-Barneveld H, Van der Gaag RJ. Theory of mind and emotion-recognition functioning in autistic spectrum disorders and in psychiatric control and normal children. Dev Psychopathol. 1999;11:39–58.

Castelli F. Understanding emotions from standardized facial expressions in autism and normal development. Autism. 2005;9:428–49.

Greenbaum RL, Stevens SA, Nash K, Koren G, Rovet J. Social cognitive and emotion processing abilities of children with fetal alcohol spectrum disorders: a comparison with attention deficit hyperactivity disorder. Alcohol Clin Exp Res. 2009;33:1656–70.

Reinvall O, Voutilainen A, Kujala T, Korkman M. Neurocognitive functioning in adolescents with autism spectrum disorder. J Autism Dev Disord. 2013;43:1367–79.

Bora E, Pantelis C. Meta-analysis of social cognition in attention-deficit/hyperactivity disorder (ADHD): comparison with healthy controls and autistic spectrum disorder. Psychol Med. 2016;46:699–716.

Lozier LM, Vanmeter JW, Marsh AA. Impairments in facial affect recognition associated with autism spectrum disorders: a meta-analysis. Dev Psychopathol. 2014;26:933–45.

Shaw P, Stringaris A, Nigg J, Leibenluft E. Emotion dysregulation in attention deficit hyperactivity disorder. Am J Psychiatry. 2014;171:276–93.

Chevallier C, Noveck I, Happé F, Wilson D. What’s in a voice? Prosody as a test case for the theory of mind account of autism. Neuropsychologia. 2011;49:507–17.

Heikkinen J, Jansson-Verkasalo E, Toivanen J, Suominen K, Väyrynen E, Moilanen I, Seppänen T. Perception of basic emotions from speech prosody in adolescents with Asperger’s syndrome. Logoped Phoniatr Vocol. 2010;35:113–20.

Le Sourn-Bissaoui S, Aguert M, Girard P, Chevreuil C, Laval V. Emotional speech comprehension in children and adolescents with autism spectrum disorders. J Commun Disord. 2013;46:309–20.

Demopoulos C, Hopkins J, Davis A. A comparison of social cognitive profiles in children with autism spectrum disorders and attention-deficit/hyperactivity disorder: a matter of quantitative but not qualitative difference? J Autism Dev Disord. 2013;43:1157–70.

Korpilahti P, Jansson-Verkasalo E, Mattila ML, Kuusikko S, Suominen K, Rytky S, Pauls DL, Moilanen I. Processing of affective speech prosody is impaired in Asperger syndrome. J Autism Dev Disord. 2007;37:1539–49.

Rosenblau G, Kliemann D, Dziobek I, Heekeren HR. Emotional prosody processing in autism spectrum disorder. Soc Cogn Affect Neurosci. 2017;12:224–39.

Chronaki G, Garner M, Hadwin JA, Thompson MJJ, Chin CY, Sonuga-Barke EJS. Emotion-recognition abilities and behavior problem dimensions in preschoolers: evidence for a specific role for childhood hyperactivity. Child Neuropsychol. 2015;21:25–40.

Gebauer L, Skewes J, Horlyck L, Vuust P. Atypical perception of affective prosody in autism spectrum disorder. Neuroimage Clin. 2014;6:370–8.

Köchel A, Schöngaßner F, Feierl-Gsodam S, Schienle A. Processing of affective prosody in boys suffering from attention deficit hyperactivity disorder: a near-infrared spectroscopy study. Soc Neurosci. 2015;10:583–91.

Bühler E, Bachmann C, Goyert H, Heinzel-Gutenbrunner M, Kamp-Becker I. Differential diagnosis of autism spectrum disorder and attention deficit hyperactivity disorder by means of inhibitory control and ‘theory of mind’. J Autism Dev Disord. 2011;41:1718–26.

van der Meer JM, Oerlemans AM, van Steijn DJ, Lappenschaar MG, de Sonneville LM, Buitelaar JK, Rommelse NN. Are autism spectrum disorder and attention-deficit/hyperactivity disorder different manifestations of one overarching disorder? Cognitive and symptom evidence from a clinical and population-based sample. J Am Acad Child Adolesc Psychiatry. 2012;51:1160–72 e3.

Waddington F, Hartman CA, De Bruijn Y, Lappenschaar GAM, Oerlemans A, Buitelaar J, Franke B, Rommelse NNJ. Visual and auditory emotion recognition problems as familial cross-disorder phenomenon in ASD and ADHD. Eur Neuropsychopharmacol. 2018; Epub.

Clarke SD, Kohn MR, Hermens DF, Rabbinge M, Clark CR, Gordon E, Williams LM. Distinguishing symptom profiles in adolescent ADHD using an objective cognitive test battery. Int J Adolesc Med Health. 2007;19(3):355–67.

van Hulst BM, de Zeeuw P, Durston S. Distinct neuropsychological profiles within ADHD: a latent class analysis of cognitive control, reward sensitivity and timing. Psychol Med. 2015;45:735–45.

Veatch OJ, Veenstra-VanderWeele J, Potter M, Pericak-Vance MA, Haines JL. Genetically meaningful phenotypic subgroups in autism spectrum disorders. Genes Brain Behav. 2014;13:276–85.

Clark SL, Muthen B, Kaprio J, D’Onofrio BM, Viken R, Rose RJ. Models and strategies for factor mixture analysis: an example concerning the structure underlying psychological disorders. Struct Equ Model. 2013;20:681–703.

Haver, Meisnere, Doja. Committee on the Development of a Consensus Case Definition for Chronic Multisymptom Illness in 1990–1991 Gulf War Veterans; Board on the Health of Select Populations; Institute of Medicine. Chronic Multisymptom Illness in Gulf War Veterans: Case Definitions Reexamined. Washington (DC): National Academies Press (US); 2014.

Varriale R, Vermunt JK. Multilevel mixture factor models. Multivar Behav Res. 2012;47:247–75.

Müller UC, Asherson P, Banaschewski T, Buitelaar JK, Ebstein RP, Eisenberg J, Gill M, Manor I, Miranda A, Oades RD, Roeyers H, Rothenberger A, Sergeant JA, Sonuga-Barke EJS, Thompson M, Faraone SV, Steinhausen HC. The impact of study design and diagnostic approach in a large multi-centre ADHD study. Part 1: ADHD symptom patterns. BMC Psychiatry. 2011;11:54.

Müller UC, Asherson P, Banaschewski T, Buitelaar JK, Ebstein RP, Eisenberg J, Gill M, Manor I, Miranda A, Oades RD, Roeyers H, Rothenberger A, Sergeant JA, Sonuga-Barke EJS, Thompson M, Faraone SV, Steinhausen HC. The impact of study design and diagnostic approach in a large multi-centre ADHD study: part 2: dimensional measures of psychopathology and intelligence. BMC Psychiatry. 2011;11:55.

Nijmeijer JS, Hoekstra PJ, Minderaa RB, Buitelaar JK, Altink ME, Buschgens CJM, Fliers EA, Rommelse NNJ, Sergeant JA, Hartman CA. PDD symptoms in ADHD, an independent familial trait? J Abnorm Child Psychol. 2009;37:443–53.

Rommelse NN, Buitelaar JK. Executive functioning in attention-deficit–hyperactivity disorder–crucial or trivial? Eur Psychiatric Rev. 2008;2:17–20.

van Steijn DJ, Richards JS, Oerlemans AM, de Ruiter SW, van Aken MAG, Franke B, Buitelaar JK, Rommelse NNJ. The co-occurrence of autism spectrum disorder and attention-deficit/hyperactivity disorder symptoms in parents of children with ASD or ASD with ADHD. J Child Psychol Psychiatry. 2012;53:954–63.

Rutter M, Bailey A, Lord C. The social communication questionnaire: manual; 2003.

Conners CK. Conners’ parent rating scale-revised (L); 1997.

Taylor E, Sandberg S, Thorley G, Giles S. The epidemiology of childhood hyperactivity. New York: Oxford University Press; 1991.

Kaufman J, Birmaher B, Brent D, Rao U, Flynn C, Moreci P, Williamson D, Ryan N. Schedule for affective disorders and schizophrenia for school-age children present and lifetime version (K-SADS-PL): initial reliability and validity data. J Am Acad Child Adolesc Psychiatry. 1997;36:980–8.

Le Couteur A, Lord C, Rutter M. The autism diagnostic interview-revised (ADI-R). Los Angeles: Western Psychological Services; 2003.

De Sonneville L. Amsterdam neuropsychological tasks: a computer-aided assessment program. Comp Psych. 1999;6:187–203.

Wechsler D. WISC-III Handleiding. London: The Psychological Corporation; 2000.

Wechsler D. WAIS-III-NL: Nederlandstalige bewerking: Technische handleiding (herziene uitgave). Amsterdam: Pearson Assessment and Information BV; 2000.

Muthén LK, Muthén BO. 1998–2010 Mplus user’s guide. Los Angeles: Muthén and Muthén; 2010.

Fountain C, Winter AS, Bearman PS. Six developmental trajectories characterize children with autism. Pediatrics. 2012;129:e1112–20.

Baribeau DA, Doyle-Thomas KA, Dupuis A, Iaboni A, Crosbie J, McGinn H, et al. Examining and comparing social perception abilities across childhood-onset neurodevelopmental disorders. J Am Acad Child Adolesc Psychiatry. 2015;54:479–86.

Hartman CA, Geurts HM, Franke B, Buitelaar JK, Rommelse NN. Changing ASD-ADHD symptom co-occurrence across the lifespan with adolescence as crucial time window: illustrating the need to go beyond childhood. Neurosci Biobehav Rev. 2016;71:529–41.

Lawrence K, Bernstein D, Pearson R, Mandy W, Campbell R, Skuse D. Changing abilities in recognition of unfamiliar face photographs through childhood and adolescence: performance on a test of non-verbal immediate memory (Warrington RMF) from 6 to 16 years. J Neuropsychol. 2008;2:27–45.

Lawrence K, Campbell R, Skuse D. Age, gender, and puberty influence the development of facial emotion recognition. Front Psychol. 2015;6:761.

Lombardo MV, Lai MC, Auyeung B, Holt RJ, Allison C, Smith P, et al. Unsupervised data-driven stratification of mentalizing heterogeneity in autism. Sci Rep. 2016;6:35333.

Acknowledgements

The authors would like to thank all the parents, teachers and children, who kindly participated.

Funding

This project was supported by the European Community’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreement #643051 (MiND). The study was also partly funded by grants assigned to Dr. Rommelse by the Netherlands Organisation for Scientific Research (NWO grant #91610024) and to Dr. Buitelaar (NWO Large grant #1750102007010). Dr. Franke is supported by a Vici grant from NWO (grant # 016–130-669) and by the European Community’s Seventh Framework Program (FP7) under grant agreement #278948 (TACTICS), the Innovative Medicines Initiative Joint Undertaking under grant agreement #115300 (EU-AIMS), resources of which are composed of financial contribution from the European Union’s Seventh Framework Program (FP7/2007–2013) and the European Federation of Pharmaceutical Industries and Associations (EFPIA) companies’ in-kind contribution, and the European Community’s Horizon 2020 Program grant #642996 (BRAINVIEW). The funding agencies had no role in study design, data collection, or interpretation, and no influence on the writing.

Availability of data and materials

The data that support the findings of this study are available from the NeuroIMAGE and BOA cohort studies, but restrictions apply to the availability of these data, which were used under licence for the current study, and so are not publicly available. Data are however available from the authors upon reasonable request and with permission of the responsible persons of the NeuroIMAGE and BOA cohorts.

Author information

Contributions

FW, NR, and BF were responsible for the study concept and design. AO and YdB contributed to the acquisition of data in BOA. FW performed the analyses. ML assisted with the data analysis. NR assisted with the interpretation of findings. FW drafted the manuscript. NR, BF, JB, CH, and AO provided the critical revision of the manuscript for important intellectual content. All authors critically reviewed the content and approved the final version for publication.

Ethics declarations

Ethics approval and consent to participate

Both the Biological Origins of Autism (BOA) study and the NeuroIMAGE study were approved by the local medical ethics board. Written informed consent was obtained from all participants and their parents. The parents signed consent forms for children under the age of 12 years.

Consent for publication

Not applicable.

Competing interests

Waddington, Hartman, de Bruijn, Lappenschaar, Oerlemans, and Rommelse have no conflict of interest to disclose. In the past 4 years, Buitelaar has been a consultant to, member of the advisory board of, and/or speaker for Janssen Cilag BV, Eli Lilly, Bristol-Myers Squibb, Organon/Schering Plough, UCB, Shire, Medice and Servier. Franke has received educational speaking fees from Merz and Shire.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1: Emotion Recognition Subtyping Supplement. (DOCX 954 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Waddington, F., Hartman, C., de Bruijn, Y. et al. An emotion recognition subtyping approach to studying the heterogeneity and comorbidity of autism spectrum disorders and attention-deficit/hyperactivity disorder. J Neurodevelop Disord 10, 31 (2018). https://doi.org/10.1186/s11689-018-9249-6

DOI: https://doi.org/10.1186/s11689-018-9249-6