Abstract

Background

Telemetry systems that estimate animal positions with hyperbolic positioning algorithms also provide a technology-specific estimate of position precision (e.g., horizontal position error (HPE) for the VEMCO positioning system). Position precision estimates (e.g., dilution of precision for a global positioning system (GPS)) have been used extensively to identify and remove positions with unacceptable measurement error in studies of terrestrial and surfacing aquatic animals such as turtles and seals. Few underwater acoustic telemetry studies report using position precision estimates to filter data in accordance with explicit data quality objectives, because the relationship between the precision estimate and measurement error is either not understood or not evaluated. A four-step filtering approach that incorporates data-filtering principles developed for GPS tracking of terrestrial animals is demonstrated. HPE was evaluated for its effectiveness in removing uncertain fish positions acquired from a new underwater fine-scale passive acoustic monitoring system.

Results

Four filtering objectives were identified based on the need for three sequential future analyses and four data quality criteria were developed for evaluating the performance of individual filters (step 1). The unfiltered, baseline position confidence from known-position test tags was considered to determine if filtering was necessary (step 2). An HPE filter cutoff of 8 was selected to meet the four criteria (step 3), and it was determined that one analysis may need to be adjusted for use with this dataset. The data quality objectives, criteria, and filter selection rationale were reported (step 4).

Conclusions

The use of position precision estimates that reflect the confidence in the positioning process should be considered prior to the use of biological filters that rely on a priori expectations of the subject’s movement capacities and tendencies. Position confidence goals should be determined by the needs of the research questions and analysis requirements rather than by arbitrarily adopting the filters of previous studies. Data filtering with this approach ensures that data quality is sufficient for the selected analyses and presents the opportunity to adjust or identify a different analysis in the event that the requisite precision was not attained. Ignoring these steps puts a practitioner at risk of reporting errant findings.


Background

Advances in animal-tracking systems have made high-resolution, high-density position data increasingly available, affording the scientific community a substantial opportunity to finely examine the habits of difficult-to-detect individuals and species [1–4]. Any technology that assigns spatiotemporal positions to an animal produces values that contain varying degrees of measurement error. Determining an appropriate method to detect and remove positions with excessive error from datasets presents a real challenge to researchers as over-filtering (i.e., the omission of ‘good positions’) is a significant source of error in animal movement studies [5–7]. Each time location data are filtered, researchers must also be cognizant of the tradeoff between increasing confidence in position accuracy and the possibility of introducing systematic biases [8]. A useful technique must therefore discern between measurement error and true variation in animal movement. Two common approaches involve the use of biological filters, which equate to detecting violations of an animal’s expected maximum movement capacity (e.g., maximum observed velocity) or known habits (e.g., observing a fish on land), or a position precision estimate (PPE) in which each measured position has an associated position-specific estimate of measurement error or confidence. We outline a widely applicable approach to use a PPE to remove positions with unacceptable measurement error, and provide an example of a specific application to a telemetry dataset collected from an underwater acoustic positioning system, the VEMCO Positioning System (hereafter VPS; VEMCO Division of AMIRIX Systems; Halifax, Nova Scotia).

The VPS system

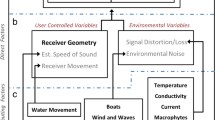

VEMCO provides two-dimensional positions derived from raw detection data using proprietary hyperbolic positioning algorithms that estimate positions from the time-difference-of-arrival (TDOA) of a coded signal at three or more receivers in the VPS array [9]. Each detection record in a receiver consists of a transmission code that is unique to a tag, the time of signal reception, and, depending on the tag configuration, an environmental variable measured by the tag (e.g., pressure, temperature, etc.). As with all TDOA-based processes, time synchronization of the autonomous receivers is critical. The VPS system makes use of synchronization transmitters (synch-tags) placed at stationary locations throughout the array to account for time drift in individual receiver clocks [9, 10] (Figure 1). Like other multi-receiver positioning systems [11], the VPS provides a weighted average location from every combination of three receivers that detected each tag transmission (hereafter referred to as a position ‘fix’), and an associated PPE termed horizontal position error (HPE) [9, 12].
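VEMCO’s positioning algorithm is proprietary, so the following is not the VPS solver; it is a minimal sketch of generic TDOA positioning that makes the geometry concrete. It assumes a constant speed of sound (1,450 m/s, an illustrative value), perfectly synchronized receiver clocks, and a flat two-dimensional plane, and it solves for the tag position and its unknown emission time by Gauss-Newton least squares. The function name `solve_tdoa` and the receiver layout are hypothetical.

```python
import numpy as np

# Sketch of generic TDOA positioning, NOT VEMCO's proprietary VPS algorithm.
# Assumptions: constant sound speed, synchronized receiver clocks, flat 2-D plane.


def solve_tdoa(receivers, arrival_times, sound_speed=1450.0, n_iter=50):
    """Estimate a 2-D tag position from arrival times at three or more receivers.

    Unknowns are (x, y, b), where b = sound_speed * t_emit expresses the
    tag's unknown emission time as a range offset, so only arrival-time
    differences constrain the position.
    """
    r = np.asarray(receivers, dtype=float)             # shape (n, 2)
    ranges = sound_speed * np.asarray(arrival_times, dtype=float)
    p = r.mean(axis=0)                                 # start at receiver centroid
    b = np.mean(ranges - np.linalg.norm(r - p, axis=1))
    for _ in range(n_iter):
        diff = p - r
        dist = np.linalg.norm(diff, axis=1)
        resid = dist + b - ranges                      # modelled minus observed
        jac = np.column_stack([diff / dist[:, None], np.ones(len(r))])
        step, *_ = np.linalg.lstsq(jac, -resid, rcond=None)  # Gauss-Newton step
        p = p + step[:2]
        b = b + step[2]
        if np.linalg.norm(step) < 1e-9:
            break
    return p


# Hypothetical square of four receivers (metres) and one synthetic transmission.
receivers = [(0.0, 0.0), (300.0, 0.0), (0.0, 300.0), (300.0, 300.0)]
true_tag = np.array([120.0, 80.0])
t_emit = 0.5  # unknown to the solver
times = [t_emit + np.linalg.norm(true_tag - np.array(rc)) / 1450.0 for rc in receivers]
est = solve_tdoa(receivers, times)
print(est)  # close to [120. 80.]
```

Because only arrival-time differences carry information, the emission time enters as a nuisance offset. With exactly three receivers the system is exactly determined, and two hyperbolas can intersect at more than one point, which is one reason precision tends to degrade outside the array.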

Acoustic telemetry activities at the Hammond Bay field site. A schematic of the VPS array that was located in Lake Huron around the mouth of the Ocqueoc River (blue line). Triangles represent receiver (VR2W) positions. VPS array testing in 2010 included two stationary tag tests (gray dots, with the median point as a black dot) and three mobile test transects (black dots forming lines). The schematic is oriented with north up, and the black line running from left to right (west to east) represents the coast.

Filtering spatial data with HPE

HPE is assigned by VEMCO to positions for both synch-tags and animal-implanted tags, but practitioners must perform auxiliary testing to be able to discern when and how HPE can be used to filter data. HPE is a unitless estimate of position precision based on the relationship between theoretical position error sensitivities and observed measurement errors (measured horizontal position error in meters, HPEM) for synchronization tags [9]. If the relation between HPE and measurement error developed for synch-tags is representative of the relation for animal-implanted tags, then the dimensionless HPE value can be used as a confidence estimate for each fish position.

Choosing an appropriate HPE cutoff to remove inaccurate fixes is the primary challenge in filtering VPS data, but few studies have provided an exposition of the filtering approach [13]. Studies either used a conservatively high HPE cutoff to remove the major problematic positions but retain most data (e.g., for an HPE number of 20, 83% retention [14]), or employed a lower HPE cutoff and sacrificed large amounts of data (e.g., for an HPE number of 10, 58% retention [15]). In other cases, HPE was used but the level of data reduction was not reported (for an HPE number of 15 [12]), no filtering occurred and the HPE estimated for synch-tags was assumed to characterize the precision of all animal positions in the array [10], or the authors referenced an example of the accuracy attained from a similarly configured array used in an unrelated study [16]. Despite an extensive literature on PPEs [5–8], a consistent framework does not exist for developing and applying an HPE-based filter to positional telemetry data, and many studies using HPE do not clearly report the selection criteria for the cutoff other than by reference to prior studies. Confusion exists among practitioners over which filtering steps are broadly applicable to all studies and which components should be independently derived because of unique research questions.

We propose a generalizable four-step process for filtering VPS spatial datasets via HPE that accommodates the specific data quality objectives of each analysis. The steps include: 1) establishing position confidence goals (maximum tolerable errors) contingent on the scientific question and the chosen data analysis technique; 2) evaluating the unfiltered, baseline position confidence from known-position test tags; 3) selecting a filter cutoff and assessing if data reductions have introduced biases (a-c); and, 4) reporting the data filtering criteria and process in the final manuscript or report. The details that comprise the four steps are not interchangeable for all studies but completing each step will improve the rationale and transparency of data filtering for many telemetry studies. Importantly, the process does not set rigid standards for data inclusion; rather, it requires the assignment of standards that are specific and defensible for each project.

To demonstrate our approach, an extensive dataset was derived from a 41-receiver array placed in the waters of Lake Huron (Michigan, USA) near the outlet of the Ocqueoc River (45° 29ʹ 24.53ʺ N, 84° 4ʹ 23.45ʺ W). The array had an internal area of 0.92 km² and a maximum coverage of 8.22 km² in waters that ranged from 0.2 to 20.8 m deep. The dataset included recorded positions from stationary tags (9 synch-tags and two additional stationary position tags), three mobile tag tests (submerged tags dragged behind a slow-moving boat with continuous GPS recording), and animal location data recorded for 76 free-swimming adult female sea lampreys (Petromyzon marinus) with surgically implanted tags (V9P-2H, 15- to 45-s transmission interval). With this dataset we demonstrate a framework for filtering VPS data with HPE, provide a comparison to current filtering approaches used by other VPS studies, and contrast the advantages of PPE-based filters and biological filters.

Results

We demonstrate our approach by filtering the example dataset according to the data quality objectives of the study.

Step 1: Establishing data quality objectives

We adopted four data quality objectives. Data quality objectives are explicit assignments of acceptable errors in precision that derive from the requirements of the data analysis technique. Any defensible objective could have been adopted to fulfill this step because the details are study-specific and could be simplified to a single criterion.

Our four objectives included the ability to detect changes in trajectories (e.g., ground speed, turn angle, etc.), perform basic behavioral assignments (e.g., is the animal moving or stationary?), assign fish positions to habitat types (point data), and avoid the loss of acceptably accurate data while ensuring the removal of unacceptable positions if filtering is necessary to perform habitat assignment (Table 1). These objectives include both position-specific criteria (e.g., <15 m error) and global criteria (e.g., mean error <1.77 m), though in each case a position-specific filter (i.e., precision target) must be identified. Each criterion value represents the minimum standard that a filtered dataset must meet to achieve the corresponding objective. Among candidate filters, we identified the preferred filter as the one with the largest HPE that best met all criteria. The criteria provided a clear framework with which to select the best filter.

Step 2: Estimating the baseline position confidence in the array

Baseline position confidence refers to the quality of the unfiltered position data and is partly a function of array coverage (i.e., low-quality positions are likely more abundant in regions of the array with poor coverage). Baseline confidence must be identified to determine whether filtering is required. For the stationary and mobile test tags, we described baseline position confidence with position yield (the percentage of expected positions that were estimated during the test period), mean and median accuracy, the number of positions with more than 15 m error, the proportion of positions with less than 6 m error, and the twice the distance root mean square (2DRMS) model equations; array coverage was assessed from the actual fish telemetry data. Together, these measures determined whether the unfiltered data met the first three criteria; the fourth criterion dealt with data reduction.
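A minimal sketch of the Step 2 summaries for one known-position test tag follows. It assumes the measured error of each estimated position has already been computed against the tag’s known (or median) location, and that the expected number of transmissions is known from the test duration and the tag’s transmission interval. The function name and dictionary keys are hypothetical.

```python
from statistics import mean, median


def baseline_confidence(errors_m, n_expected):
    """Summarize unfiltered accuracy for one known-position test tag.

    errors_m   : measured error (m) of every position the array estimated
    n_expected : transmissions expected over the test period (assumed known,
                 e.g., test duration divided by the mean transmission interval)
    """
    n = len(errors_m)
    return {
        "position_yield": n / n_expected,          # estimated vs. expected
        "mean_error_m": mean(errors_m),
        "median_error_m": median(errors_m),
        "prop_below_6m": sum(e < 6.0 for e in errors_m) / n,
        "n_above_15m": sum(e > 15.0 for e in errors_m),
    }


# Hypothetical test tag: 10 transmissions expected, 8 positions estimated.
errs = [1.2, 2.0, 2.5, 3.1, 1.8, 4.0, 5.5, 22.0]
print(baseline_confidence(errs, n_expected=10))
```

In this made-up example a single 22 m fix pulls the mean (about 5.3 m) well above the median (2.8 m), the same pattern that the error ‘tails’ produce in the stationary tests.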

There were three tests of accuracy, each of a different length: 29,355, 16,400, and 138 positions (stationary site one, stationary site two, and mobile test, respectively) (Figure 1). The position yield was 82.3% (location #1) and 89.4% (location #2) for the stationary tags and 94.8% for the mobile test, indicating that 5.2 to 17.7% of the tag transmissions did not result in a position. The estimated positions of the stationary tags were generally clustered around a central position, and each exhibited a ‘tail’ of increasingly erroneous positions offset to the west. Without filtering, the mean error was 11.7 m for stationary test one (median: 2.9 m, range: 2.7 to 29,289.3 m), 4.2 m for stationary test two (median: 2.6 m, range: 2.5 to 1,425.8 m), and 5.81 m for the mobile test (median: 2.95 m, range: 2.50 to 186.40 m). There were 625, 134, and 0 positions with more than 15 m of error (stationary test one, stationary test two, mobile test). The linear models for the 2DRMS regression line relating HPE and measured error for the stationary-location tags were y = 0.81x – 0.89 (r² = 0.92) for location #1 and y = 1.1x – 0.57 (r² = 0.78) for location #2 (Figure 2). Both slopes were near 1 (0.81 and 1.1), indicating that the relationship between HPE and measured error for synchronization tags is similar to that for fish tags, with HPE potentially representing a conservative estimate of position error for fish tags (the slope of the 2DRMS line for location #1 was less than 1). The proportion of test positions within 6 m accuracy exceeded 90% for all three tests (Figure 3). Maximum array coverage was 8.22 km², estimated by forming a polygon around the outermost estimated fish positions.
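The text does not state how the coverage polygon was constructed. One simple option, assumed here, is the convex hull of all estimated fish positions in projected coordinates (e.g., UTM metres), with its area from the shoelace formula. The helper names are hypothetical, and a concave hull would give a smaller area for irregular position clouds.

```python
def convex_hull(points):
    """Andrew's monotone-chain convex hull; returns vertices counter-clockwise."""
    pts = sorted(set(map(tuple, points)))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        # z-component of (a - o) x (b - o); > 0 means a left turn
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def polygon_area_km2(vertices):
    """Shoelace area of a simple polygon whose vertices are in metres."""
    n = len(vertices)
    twice_area = sum(
        vertices[i][0] * vertices[(i + 1) % n][1]
        - vertices[(i + 1) % n][0] * vertices[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2.0 / 1e6


# Hypothetical projected fish positions (m); the interior point does not affect the hull.
fixes = [(0, 0), (2000, 0), (2000, 1000), (0, 1000), (900, 400)]
hull = convex_hull(fixes)
print(polygon_area_km2(hull))  # 2.0
```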

The stationary test schematics and 2DRMS plots of all stationary test positions. Two schematics depict all VPS positions during two stationary tag tests, including (a) 29,355 positions between 6/17/2010 and 7/01/2010, and (b) 16,400 positions between 7/01/2010 and 7/08/2010, allowing us to evaluate array performance at two locations over an extended period of time. The white dot in the center of each cluster is the median location. The HPE versus measured error to the median point is shown for each estimated position during test one (c) and test two (d). The white circles with black outline and red x represent twice the distance root mean square (2DRMS) of the x and y components of error within each HPE bin of width one; 95% of tag detections in each bin have an error less than this point. Note that the minimum HPE in the data is 2.7 (test one) and 2.5 (test two). The line running between these points represents the 2DRMS, and its equation and fit are shown in the top left corner of (c) and (d), respectively. Data points above the 15 m bin, which can be seen in (a) and (b), are not shown in (c) or (d) because they are outside of the zone of interest.
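The 2DRMS-versus-HPE line described in this figure can be reproduced along the following lines. This is a sketch under stated assumptions: error components are measured relative to the known (here, median) tag location, 2DRMS is computed as 2 * sqrt(mean(dx**2 + dy**2)) within each HPE bin, bins are keyed by their lower edge, and the line is an unweighted ordinary least-squares fit across bins. The authors’ R code (Additional file 1) may differ in these details, and the function names are hypothetical.

```python
import math
from collections import defaultdict


def drms2_by_hpe_bin(hpe, dx, dy, bin_width=1.0):
    """2DRMS of positional error within each HPE bin.

    dx, dy: east and north error components (m) of each fix relative to the
    known (here, median) tag location. Assumption: 2DRMS is computed as
    2 * sqrt(mean(dx**2 + dy**2)) over the fixes in a bin, and bins are keyed
    by their lower edge.
    """
    sq = defaultdict(list)
    for h, ex, ey in zip(hpe, dx, dy):
        sq[math.floor(h / bin_width) * bin_width].append(ex * ex + ey * ey)
    return {b: 2.0 * math.sqrt(sum(v) / len(v)) for b, v in sorted(sq.items())}


def fit_line(xs, ys):
    """Unweighted ordinary least squares: slope, intercept, and r**2."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx, sxy * sxy / (sxx * syy)


# Hypothetical fixes: HPE and error components (m) relative to the median point.
hpe = [3.2, 3.7, 4.1, 4.9, 5.5]
dx = [1.0, 1.0, 2.0, 2.0, 3.0]
dy = [0.0, 0.0, 0.0, 0.0, 0.0]
bins = drms2_by_hpe_bin(hpe, dx, dy)
slope, intercept, r2 = fit_line(list(bins), list(bins.values()))
print(bins, slope, intercept, r2)
```

Bins containing only a handful of fixes will give noisy 2DRMS values, so a weighted fit or a minimum bin size may be worth considering with real data.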

Proportion of test positions with measured error from 1 to 10 m. Percent of positions with accuracy equal to or less than each measured value for the mobile tag test (circle), stationary tag at location one (triangle), and the stationary tag at location two (square) depicted an array with most positions having accuracy better than 6 m. The average error of the unfiltered data for the stationary tag at location one (1.98 m), location two (1.11 m), and the mobile test (6.83 m) are marked by representative symbols along the x-axis.

Step 3a: Evaluating HPE filters consistent with the data quality objectives and guides

The range of HPE cutoffs that meets each criterion must be determined before a single filter cutoff is selected. Although the highest acceptable HPE value will retain the most data, the range of HPE values that fulfills each criterion should be evaluated and reported. If no value meets all criteria, either the analysis associated with an objective must be reevaluated, a different analysis should be selected, or the research question will prove difficult to address with the originally planned approach.
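A minimal sketch of evaluating a grid of candidate cutoffs against criteria 1 and 2 follows. It assumes a fix is retained when its HPE is at or below the cutoff, that criterion 1 is a mean error below 1.77 m, and that criterion 2 is that no retained fix exceeds 15 m of error. The function names and data are hypothetical.

```python
from statistics import mean


def sweep_cutoffs(hpe, error_m, cutoffs=range(3, 16)):
    """Mean error, max error, and >15 m count of the fixes each cutoff retains."""
    rows = []
    for c in cutoffs:
        kept = [e for h, e in zip(hpe, error_m) if h <= c]  # assumed: HPE <= cutoff
        if not kept:
            continue
        rows.append({
            "cutoff": c,
            "retained": len(kept),
            "mean_error_m": mean(kept),
            "max_error_m": max(kept),
            "n_above_15m": sum(e > 15.0 for e in kept),
        })
    return rows


def passing_cutoffs(rows, max_mean=1.77, max_n_above_15=0):
    """Cutoffs meeting criterion 1 (mean error) and criterion 2 (no fix > 15 m)."""
    c1 = [r["cutoff"] for r in rows if r["mean_error_m"] < max_mean]
    c2 = [r["cutoff"] for r in rows if r["n_above_15m"] <= max_n_above_15]
    return c1, c2


# Hypothetical test-tag data: many good fixes plus two poor ones.
hpe = [3.0] * 20 + [7.0, 10.0]
err = [1.0] * 20 + [16.0, 10.0]
c1, c2 = passing_cutoffs(sweep_cutoffs(hpe, err))
print("criterion 1:", c1)
print("criterion 2:", c2)
```

In this made-up dataset a single 16 m fix keeps the mean below 1.77 m up to a cutoff of 9 but violates criterion 2 from a cutoff of 7 onward, which is why the range for each criterion is worth reporting separately.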

Criterion 1

Any HPE cutoff from 3 (the minimum observed in the test data) to 15 (the maximum considered because of objective 2) was sufficient to meet criterion 1 for the stationary tests but not for the mobile test, although the mobile test methodology was susceptible to inherently high average error (Table 2).

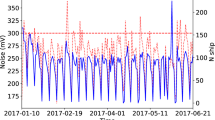

Criterion 2

The number of positions that violated criterion 2 increased greatly between HPE filters of 6 and 15 (max error: location 1: 10.0 to 26.7 m, location 2: 9.2 to 25.0 m; violating positions: location 1: 0 to 21, location 2: 0 to 10) (Figure 4). Only HPE cutoffs less than 7 (stationary location 1) or 8 (stationary location 2) met criterion 2, although no position had more than 15 m error in the mobile test (Table 2).

Resultant data quality for HPE cutoffs of 3 to 15. The mean measured error is below 1.77 m for all HPE thresholds, sufficient to meet criterion 1 for both stationary test one (black) and test two (red). The maximum error exceeds 15 m at an HPE of 7 for test one and 8 for test two (violating criterion 2). The number of violating positions for each test is shown at the top of the figure for HPE cutoffs of 3 to 15.

Criterion 3

The 2DRMS equation for desired 95% confidence in 6 m accuracy (Criterion 3) returned an HPE cutoff of 8.5 for stationary test one and 6.0 for stationary test two (Figure 2). There was not enough mobile test data to calculate this metric.
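Criterion 3 amounts to inverting the 2DRMS regression line: if the 95% error is approximately slope × HPE + intercept, the largest HPE that still gives 95% confidence in 6 m accuracy is (6 − intercept) / slope. Using the slopes reported in Step 2 (0.81 and 1.1) and the intercepts (−0.89 and −0.57), this arithmetic reproduces the cutoffs of 8.5 and 6.0; the function name is hypothetical.

```python
def hpe_cutoff_for_accuracy(target_m, slope, intercept):
    """Solve target_m = slope * HPE + intercept for HPE (the 2DRMS regression)."""
    return (target_m - intercept) / slope


# Regression coefficients for the two stationary-tag locations (Step 2).
loc1 = hpe_cutoff_for_accuracy(6.0, slope=0.81, intercept=-0.89)
loc2 = hpe_cutoff_for_accuracy(6.0, slope=1.1, intercept=-0.57)
print(round(loc1, 1), round(loc2, 1))  # 8.5 6.0
```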

Criterion 4

The incorrectly retained proportion of all positions was less than 1% for HPE cutoffs of 3 to 10 for stationary test one and was 1% for all HPE cutoffs of 3 to 15 for stationary test two (Figure 5). For the mobile test, only an HPE of 3 reduced the incorrectly retained proportion below 1%, though only four positions were incorrectly retained (3% of total; max. error = 7.7 m) when HPE cutoffs ranged from 6 to 10 (Figure 6; Table 2). Incorrectly rejected positions made up less than 5% of all positions for any HPE cutoff greater than 8 for stationary test one, greater than 3 for stationary test two, and greater than 5 for the mobile test, representing a minimal loss of acceptable data. Considering incorrectly retained and incorrectly rejected positions together, acceptable HPE cutoffs were 8 to 10 for stationary test one and 4 to 15 for stationary test two; no HPE cutoff was fully effective for the mobile test, although 5 to 10 came closest.
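The four outcome classes used here can be tabulated with a short function. This sketch assumes a fix is ‘acceptable’ when its measured error is below 6 m and ‘retained’ when its HPE is at or below the cutoff, and it expresses both error rates as percentages of all positions, as in the text; the Figure 5 caption instead divides by retained and by acceptable positions, which would change only the denominators. The <1% and <5% thresholds used for criterion 4 below are our reading of the text, and all names are hypothetical.

```python
def classify_fixes(hpe, error_m, cutoff, accuracy_m=6.0):
    """Cross-tabulate HPE filter decisions against measured accuracy.

    Assumptions: a fix is 'acceptable' if its error is below accuracy_m and
    'retained' if its HPE is at or below the cutoff; percentages use all
    positions as the denominator.
    """
    counts = {"correctly_retained": 0, "incorrectly_retained": 0,
              "correctly_rejected": 0, "incorrectly_rejected": 0}
    for h, e in zip(hpe, error_m):
        retained = h <= cutoff
        acceptable = e < accuracy_m
        if retained and acceptable:
            counts["correctly_retained"] += 1
        elif retained:
            counts["incorrectly_retained"] += 1
        elif acceptable:
            counts["incorrectly_rejected"] += 1
        else:
            counts["correctly_rejected"] += 1
    n = len(error_m)
    counts["pct_incorrectly_retained"] = 100.0 * counts["incorrectly_retained"] / n
    counts["pct_incorrectly_rejected"] = 100.0 * counts["incorrectly_rejected"] / n
    return counts


# Hypothetical test-tag fixes evaluated at an HPE cutoff of 8.
hpe = [4, 5, 7, 9, 12, 6, 10]
err = [2.0, 3.0, 8.0, 4.0, 20.0, 1.0, 5.0]
res = classify_fixes(hpe, err, cutoff=8)
# Assumed criterion 4: <1% incorrectly retained and <5% incorrectly rejected.
meets_c4 = res["pct_incorrectly_retained"] < 1.0 and res["pct_incorrectly_rejected"] < 5.0
print(res, meets_c4)
```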

Data loss versus error retention for HPE cutoffs of 3 to 15. The relationship between the percent of incorrectly rejected positions (of all acceptable positions) and the percent of incorrectly retained positions (of all retained positions) suggested that HPE cutoffs of 8 to 10 for stationary test one and 3 to 15 for stationary test two met criterion 4.

An evaluation of the performance of the VPS array with a mobile tag. (a) A schematic depicting receiver positions (+) and the coast (black line) during the 2010 research season. A mobile tag test was completed on 7/6/2010. The small dots represent the VPS-estimated positions during the mobile test. There were a total of 126 correctly retained positions (black; <6 m error and HPE <8), 5 correctly rejected positions (blue), 3 incorrectly rejected positions (orange), and 5 incorrectly retained positions (red). The incorrectly rejected positions (n = 3) occurred consecutively and were located at the furthest distance from the array in the left transect. (b) A graph scaled to cover positions with an HPE between 0 and 20 depicts correctly rejected, correctly retained, incorrectly rejected, and incorrectly retained positions. All values with an HPE greater than 20 were correctly rejected (n = 3, not shown). Euclidean distance is our best estimate of measured error.

Step 3b: Selecting an HPE cutoff

The highest HPE cutoff that met, or came closest to meeting, all criteria was an HPE of 8, although in this dataset HPE cutoffs between 6 and 8 all fit the criteria similarly. Only two positions with more than 15 m error and an HPE less than 8 remained (criterion 2) among the combined 45,744 transmissions of the two stationary tests. This may be good enough for first passage time analysis if visual inspection of the remaining data allows the problematic values to be identified easily; otherwise, the analysis for this step may need to be adapted. Meeting this criterion perfectly would have required an HPE cutoff of 6, which would have incorrectly rejected 7.7% of data from stationary test one. Although a practitioner could make a case for this level of filtering, we preferred the increased coverage, because data loss is a major issue for trajectory-based analyses (Figure 7): 4,124 more positions would have been lost with an HPE of 6 vs. 8 (28.35% lost outside the array and 11.22% lost inside the array for HPE 6), and total areal coverage would have been reduced from 2.11 km² (HPE 8) to 1.89 km² (HPE 6) (Figure 8).
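The selection rule in Step 3b, the largest cutoff that meets or comes closest to meeting all criteria, can be expressed as a ranking. This sketch assumes ‘closest’ means the greatest number of criteria met, with ties broken toward the larger cutoff because it retains more data; it does not capture the judgment about coverage and data loss described above, and the pass/fail table is hypothetical.

```python
def select_cutoff(results):
    """Pick the cutoff meeting the most criteria; ties go to the larger cutoff.

    results maps each HPE cutoff to a list of booleans, one per criterion.
    """
    return max(results, key=lambda c: (sum(results[c]), c))


# Hypothetical pass/fail table for criteria 1-4 at a few cutoffs.
table = {
    6: [True, True, True, False],
    7: [True, False, True, True],
    8: [True, False, True, True],
    10: [True, False, False, True],
}
print(select_cutoff(table))  # 8
```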

HPE values for all fish positions. The schematic depicts the HPE value for each fish position during active monitoring of study subjects. The coast is represented by the black line, receivers by black triangles, and sea lamprey positions are color coded by their associated HPE. HPE increases greatly when a sea lamprey position lies outside the array, and only a few positions inside the array have similarly high HPE.

Fish positions filtered by an HPE cutoff of 8. A depiction of which fish locations from 2010 would be rejected by an HPE filter of 8.0. Fish locations are represented by small black dots, receiver positions by red triangles, and the coast by a gray line. These are cumulative graphs: positions from all sea lamprey tags are shown in (a), fish positions with an HPE below or equal to 8.0 in (b), and fish positions with an HPE greater than 8.0 in (c).

Step 3c: Evaluating the potential for introduced bias through application of the chosen HPE cutoff

There was evidence for spatial bias in filtering inside versus outside of the array and potentially behavioral or habitat filter bias. Only three mobile test positions with acceptable accuracy (<6 m) were rejected and all occurred at the array edge, suggesting that HPE may over-filter at the periphery of coverage (Figure 6a). In the sea lamprey data set, the HPE cutoff (8) rejected 8.8% of 58,025 total positions inside the array and 23.5% of 53,435 total positions outside of the array, though this comparison does not discriminate between removal of inaccurate and accurate positions (Figure 8). There was no evidence for spatial filter bias inside of the array as no region appeared more prone to poor positioning across individuals (Figure 8). A behavioral or habitat filter bias was evident, as the majority of positions rejected from inside of the array (3,070 positions, 74.6% of all rejections) were associated with seven lampreys during daylight hours that were likely stationary, as sea lamprey are nocturnal. This observation is consistent with previous observations that sea lamprey may settle in locations that interfere with acoustic tag signal transmission, blocking the line of sight between receivers and tags [19].
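The inside versus outside comparison can be sketched with a ray-casting point-in-polygon test. The text does not say how ‘inside the array’ was delineated, so this assumes a polygon through the outer receivers in projected coordinates; like the comparison in the text, it counts rejections without distinguishing accurate from inaccurate positions. Names and coordinates are hypothetical.

```python
def point_in_polygon(x, y, poly):
    """Ray-casting test: True if (x, y) lies inside the polygon `poly`."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):                       # edge straddles the ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rejection_rates(fixes, array_polygon, cutoff=8.0):
    """Percent of fixes rejected (HPE > cutoff) inside vs. outside the array.

    Assumption: 'inside the array' means inside array_polygon.
    """
    tallies = {"inside": [0, 0], "outside": [0, 0]}    # [rejected, total]
    for x, y, hpe in fixes:
        key = "inside" if point_in_polygon(x, y, array_polygon) else "outside"
        tallies[key][1] += 1
        if hpe > cutoff:
            tallies[key][0] += 1
    return {k: (100.0 * rej / tot if tot else float("nan"))
            for k, (rej, tot) in tallies.items()}


# Hypothetical 1 km x 1 km array outline and fixes as (x_m, y_m, HPE).
square = [(0, 0), (1000, 0), (1000, 1000), (0, 1000)]
fixes = [(500, 500, 3), (200, 300, 9), (800, 800, 5), (100, 900, 4),
         (1500, 500, 12), (-200, 100, 6)]
print(rejection_rates(fixes, square))  # {'inside': 25.0, 'outside': 50.0}
```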

Discussion

We developed a straightforward conceptual approach for using a PPE to filter hyperbolically positioned data and demonstrated this approach with a technology (underwater acoustic telemetry) in which users evaluate position accuracy with HPE. The framework included selection of defensible data quality objectives, evaluation of the array’s positioning accuracy with an independent dataset, and determination of the relationship of the selected PPE to measured accuracy and data retention.

Data filtering with a PPE has certain conceptual advantages over a biological filter if the PPE is properly evaluated. PPEs are calculated for each position obtained from the telemetry apparatus, whereas biological filters ignore the positioning process and evaluate resultant positions only against an expectation of what is biologically reasonable for the study species. Biological filters can be useful and are frequently used because they are conceptually straightforward and, at times, the only available option. Some habitat filters are quite reasonable (e.g., fish do not swim 500 m onto land), although such a rule could introduce filter bias because positions closer to the physical habitat edge are more likely to be rejected. Similarly, because HPE represents a 95% confidence value, HPE becomes large when solutions become less precise, as typically occurs outside the array periphery, where intersecting hyperbolas allow for multiple potential solutions [9]. Failure to select a proper filter is more problematic for calculating movement trajectories (e.g., maximum ground speed), and identifying a useful biological filter is especially challenging for aquatic species whose maximum movement capacities are often unknown [22] or poorly estimated. A high biological filter cutoff allows incorrectly retained positions to remain in the dataset, whereas a low cutoff near the average speed removes valuable data. Either case gives the data a perceived improvement in accuracy that is not supportable (i.e., the rejected positions may be no less accurate than many retained positions). Unlike biological filters, PPE filters are position specific and rely principally on the assumptions that animal-integrated tags match the performance of stationary or towed tags and that the array is well constructed to ensure sufficient areal coverage, avoidance of obstructions, etc. [9, 23].
If these assumptions are supported, carefully selected PPEs that are based on the position quality should be used prior to biological filters that are based upon the biological plausibility of resultant positions and not the quality of the positioning process.
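For contrast, a basic biological filter of the kind discussed here can be sketched as a maximum-speed rule. This assumes a time-ordered track in projected coordinates and compares each fix with the last retained fix; the 1.0 m/s limit is an arbitrary placeholder, not a value for sea lamprey, and the function name is hypothetical.

```python
import math


def speed_filter(track, max_speed_m_s):
    """Drop fixes that imply a swim speed above max_speed_m_s.

    track is a time-ordered list of (t_seconds, x_m, y_m). Each fix is
    compared with the last *retained* fix, so one bad fix does not also
    condemn the fix that follows it. The first fix is always kept.
    """
    if not track:
        return []
    kept = [track[0]]
    for t, x, y in track[1:]:
        t0, x0, y0 = kept[-1]
        dt = t - t0
        if dt <= 0:
            continue  # duplicate or out-of-order timestamp
        if math.hypot(x - x0, y - y0) / dt <= max_speed_m_s:
            kept.append((t, x, y))
    return kept


# Hypothetical track with one implausible jump; 1.0 m/s is a placeholder limit.
track = [(0, 0, 0), (30, 15, 0), (60, 400, 0), (90, 30, 0)]
kept = speed_filter(track, max_speed_m_s=1.0)
print(kept)  # [(0, 0, 0), (30, 15, 0), (90, 30, 0)]
```

The output depends entirely on `max_speed_m_s`, and a bad first fix would be kept and could cause later good fixes to be rejected, which illustrates the sensitivity to poorly known movement capacities noted above.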

PPE filter selection ranges from choosing an arbitrary HPE based on subjective operator preferences to developing a complete algorithm that outputs a filter threshold from a set of inputs (i.e., the model determines both performance and value). The approach we suggest falls in the middle (i.e., performance measurement is objective, but value judgment can be subjective), yet it still represents a significant improvement over the use of arbitrarily selected filter values. The selected HPE of 8 fell within the most effective range for the criteria and ensures high confidence in 6 m accuracy (criterion 3). Although we identified three different criteria to complete a complex analysis, a single criterion could be chosen for a single analysis, or, if multiple analyses are contemplated, a different cutoff could be chosen for each, which would make selection and reporting straightforward. In our example, the criterion for objective 2 was not met because it required that no position have more than 15 m of error, which proved to be an ineffective criterion. At very low numbers of violating positions, only extreme positions remain, and removing them with a stricter filter came at a high cost (an increase in positions incorrectly rejected). These extreme outliers may not even represent predictable performance of the system. If the filter is to be useful, the proper response is to adjust the first passage time analysis, which is straightforward and would only have required shifting the moving designation by a few meters (17.7 m was the largest remaining error). However, a better criterion might be a high percentile of error (e.g., 99.5%) that represents an acceptable level of risk or error in the planned analysis.
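A percentile-based version of criterion 2 could be screened as follows. This sketch assumes a 99.5th-percentile error limit of 15 m and linear-interpolation percentiles (the same convention as NumPy’s default); the data and function names are hypothetical.

```python
def percentile(values, q):
    """Linear-interpolation percentile (q in 0-100) of a non-empty list."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def largest_cutoff_by_percentile(hpe, error_m, cutoffs, q=99.5, limit_m=15.0):
    """Largest HPE cutoff whose retained fixes have q-th percentile error <= limit_m."""
    best = None
    for c in sorted(cutoffs):
        kept = [e for h, e in zip(hpe, error_m) if h <= c]
        if kept and percentile(kept, q) <= limit_m:
            best = c
    return best


# Hypothetical data: 200 good fixes, one 18 m outlier, and five very poor fixes.
hpe = [4] * 200 + [7] + [12] * 5
err = [2.0] * 200 + [18.0] + [30.0] * 5
best = largest_cutoff_by_percentile(hpe, err, cutoffs=range(3, 16))
print(best)  # 11
```

Here the single 18 m fix no longer disqualifies cutoffs of 7 to 11, whereas a strict ‘no fix above 15 m’ rule would.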
With the VPS system, little effort has been made to defend filtering cutoffs beyond reference to prior use [13], and ambiguous filter criteria are at risk of inadvertently becoming accepted practice through the accumulation of use. In our case, adopting an ambiguous filter from a previous study (HPE 10 to 20 [14–16]) would have been both less useful than the carefully evaluated cutoff of 8 and indefensible (Table 2). Telemetry technology differs from typical scientific instruments because its design is rarely consistent, it is difficult to standardize, and it does not generate data points with fixed accuracy and precision. For this reason, we suggest that data filtering should be a flexible process that may progress towards more concrete rules if some level of standardization of use occurs for telemetry equipment, as has occurred for the use of positional dilution of precision in certain GPS applications (e.g., [24]).

A VPS system is capable of attaining accurate positions (2DRMS <6 m, <2 m average) with a high position yield (>82%) via autonomous receivers that are capable of covering large areas (>2 km2), although these results were specific to this system and environment. As with all systems, the VPS was susceptible to spatial, temporal, and behavioral or habitat bias in position yield and position precision which could cloak important biological phenomena [6, 24]. For example, when an animal occupies a habitat that blocks the line of sight from tags to receivers, as was suspected for the seven stationary sea lamprey that composed 74.6% of our filtered data inside of the array [19], there is a potential habitat and behavioral bias [9]. Observed HPE values also increased with distance outside of the test array, consistent with observations from other studies [9, 13]. Confirming a temporal or spatial bias is challenging because, depending on placement, stationary tags may not reveal systematic spatial biases in the array [9, 25], and may be sensitive to regular variation in environmental conditions (e.g., louder waves in shallow vs. deep waters) that differentially impact receiver performance. Mobile range testing is recommended for spatial evaluations of filter performance, though results may not be representative of the full range of environmental conditions encountered by tagged animals due to the typically short duration of mobile tests, selection of favorable boating conditions, etc. The mobile test appeared to be biased towards higher error estimates, though the spatial patterns were consistent between fish tracking and mobile test data.

Both mobile and stationary tests presented unforeseen challenges and unexpected findings. Mobile testing suggested an HPE of 8 was overly conservative outside of the internal array area, though we lacked stationary tests in this region that would have confirmed this conclusion (Figure 6). The mobile test presented some challenges to evaluation because the GPS clock was not synchronized with VPS time and only recorded a position every second. To minimize the effects of clock differences on position error estimates, we applied a constant time offset to all mobile test positions that minimized error between the mobile test tag tracks and corresponding GPS tracks (Additional file 1). In addition, we likely over-estimated error by assuming that the GPS track represented the true path of the test tag because we only collected a single post-processed position every second along the track. Collecting positions with sub-meter accuracy usually requires averaging several GPS points at a given location, but point averaging over time is not an option while moving. The tail of positions that were observed in the stationary tests could not be explained with the example dataset but was likely the result of a specific set of receivers with poor geometry that consistently estimated inaccurate positions in one direction. The tails were not troubling to us as these positions were easily filtered with HPE. Many challenges can be avoided in advance by careful project planning. We recommend multiple mobile and stationary tag tests with the animal tag during the same time period that the animals are being monitored, and ideally, at least some fraction of the synch-tags would be the same model as the fish tags. Receivers should be positioned outside the maximum spatial extent of interest, or at a minimum, stationary animal tags should be monitored in any area of interest to ensure the chosen HPE cutoff has met the filter goals.
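The constant clock offset for the mobile test can be estimated with a grid search. This sketch assumes the GPS track is linearly interpolated at each VPS time plus a trial offset and that the offset minimizing the mean VPS-to-GPS distance is chosen; the grid, the objective, and the names are assumptions, since Additional file 1 is not reproduced here.

```python
import math


def interpolate_track(gps, t):
    """Linearly interpolate a time-sorted GPS track [(t, x, y), ...] at time t."""
    if t <= gps[0][0]:
        return gps[0][1], gps[0][2]                    # clamp before the start
    for (t0, x0, y0), (t1, x1, y1) in zip(gps, gps[1:]):  # linear scan for clarity
        if t0 <= t <= t1:
            f = (t - t0) / (t1 - t0)
            return x0 + f * (x1 - x0), y0 + f * (y1 - y0)
    return gps[-1][1], gps[-1][2]                      # clamp past the end


def best_time_offset(vps, gps, offsets):
    """Return the constant offset (s) that minimizes mean VPS-to-GPS distance.

    vps is a list of (vps_time, x, y); each fix is compared with the GPS
    position interpolated at vps_time + offset.
    """
    def mean_error(offset):
        total = 0.0
        for t, x, y in vps:
            gx, gy = interpolate_track(gps, t + offset)
            total += math.hypot(x - gx, y - gy)
        return total / len(vps)

    return min(offsets, key=mean_error)


# Hypothetical boat track moving east at 1 m/s, one GPS fix per second.
gps = [(float(t), float(t), 0.0) for t in range(0, 101)]
# Hypothetical VPS fixes whose clock runs 3 s behind the GPS clock.
vps = [(float(t), t + 3.0, 0.0) for t in range(10, 80, 7)]
offsets = [k * 0.5 for k in range(-20, 21)]  # -10 s to +10 s in 0.5 s steps
best = best_time_offset(vps, gps, offsets)
print(best)  # 3.0
```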

Although we present a process for selecting a single fixed HPE cutoff and provide the R code necessary for performing this approach (Additional file 1), a dynamic filter in which the HPE cutoff varies temporally (e.g., [26]), or both temporally and spatially, might prove useful, although it would also require synchronization tags that match the fish tags. Regardless of how the HPE selection process is fine-tuned, the key is to follow a standard process like the one we have described and to report that process so that others can properly assess the research findings. Even if the accuracy required to perform the preferred analysis cannot be attained with the data collected, PPEs can be used to tailor the choice of analytical tool or the spatial scale at which the behavior is considered. Either adaptation is preferable to reporting errant observations [17, 27].

Conclusions

PPEs are frequently available from animal telemetry systems that rely on hyperbolic positioning and can be used to evaluate data quality prior to analysis. When using a PPE to filter data, practitioners should undertake (a) an a priori determination of data accuracy requirements; (b) an independent assessment of telemetry system performance; (c) a determination of how well the PPE represents measured accuracy; (d) selection of a filter cutoff based on the balance between accuracy improvement and data retention; and (e) explicit consideration of spatial, behavioral, and habitat bias associated with the telemetry system and the animal under observation. A carefully constructed PPE filter is more defensible than biological filters, which can improve data accuracy but (1) require an interpretation of the data rather than an assessment of its precision and (2) are applied only to a subset of the data collected (extreme movements). HPE also offers the intriguing possibility of direct use in the analysis as an error estimate (rather than as a criterion for data retention), akin to the precision estimates of bench apparatus, though there is no evidence that it has been used in this manner with other hyperbolic positioning systems. Because data analysis requirements are likely to be as varied as the movement data to which they are applied, a complete account of the selection process and criteria should be included in the methods section of any subsequent reports or publications. At a minimum, reporting should include a description of the data quality objectives, the criteria, the rationale for cutoff selection, and evidence that the criteria were met; this can take the form of a paragraph, table, or appendix and need not be a substantial component of the report or paper.

Methods

A telemetry system (VPS, VEMCO) composed of 41 receivers (VR2W) in diamond formations, with between-receiver spacing ranging from 125 m to 250 m and covering 2 km², was used to position a stationary tag at two locations, a mobile tag, and 76 tagged sea lamprey. Sea lamprey were implanted with acoustic transmitters (VEMCO model V9P-2H, 69 kHz, 150 dB, 47 mm (length), 3.5 g (dry weight), random interval: 15 to 45 s, burst length: 3.54 s or 3.26 s) between 09 April 2010 and 09 July 2010 (Figure 1). The depth of the site ranged from 0 to 5.8 m within the array, but sea lamprey were positioned at greater depths on the fringes of the array. Nine synchronization transmitters (VEMCO model V16-2H, 69 kHz) were deployed at stationary positions throughout the array. Compared to fish tags, synch-tags emitted a more powerful signal (160 dB) less frequently (every 500 to 700 s). The performance of the VPS array was tested by comparing the position estimates of (i) one stationary V9P-2H transmitter (stationary testing) at two locations (Location 1: 17 June 2010 19:43:20 to 01 July 2010 14:34:36; Location 2: 01 July 2010 14:52:47 to 08 July 2010 17:03:20) and (ii) a slow-moving V9P-2H transmitter (mobile testing) placed inside the body cavity of a recently deceased sea lamprey, held 1 m off the bottom below a floating boat powered by an electric motor, with a GPS (Trimble Geo XH) mounted directly above the tag to record the tag's true position. Because of a GPS malfunction, we did not have GPS-measured positions for the stationary tests and were forced to use position averages for comparison, which is not an ideal solution. The GPS and tag were maintained in alignment with a downrigger, and there was no visible layback of the tag.
The mobile test comprised three transects through the array, each starting near the coast and ending outside of the internal array area, run on 06 July 2010 at an average speed of 0.60 m s⁻¹ (range: 0.25 to 1.15 m s⁻¹) to mimic sea lamprey ground speed: (i) Boat Path 1, 13:12:59 to 13:57:24 (distance 934 m); (ii) Boat Path 2, 14:22:19 to 15:10:08 (distance 754 m); and (iii) Boat Path 3, 15:35:08 to 15:59:42 (distance 309 m) (Figure 1; left to right). The GPS positions were post-processed with three local reference stations that have one-second reference intervals (CHB5, MIAL, MIMC), and the positions had an estimated accuracy of 0.36 m, 0.29 m, and 1.03 m (mean, min., max.). In our analysis, we assume that these GPS positions represent the true mobile tag position. We were not able to synchronize the acoustic receivers to the GPS clock at the time of the tests, so we applied a constant offset to the GPS timestamps, choosing the offset that minimized error between the GPS track and the mobile tag track (derived from VPS). Error was minimized using the optim function in R (Additional file 1) with the Broyden–Fletcher–Goldfarb–Shanno method [28]. The test tag was identical to the tags inserted into female sea lamprey (V9P-2H, 69 kHz, 150 dB, 47 mm (length), 3.5 g (dry weight)), which were monitored as they approached, entered, or bypassed the Ocqueoc River mouth (45.490278°, -84.072931°) in Hammond Bay (Lake Huron), where lake water temperatures ranged from 6.0 to 19.7°C throughout the study (April 9, 2010 to June 7, 2010). The use of sea lampreys was approved by the Michigan State University Institutional Animal Use and Care Committee via animal use permit 04/07-033-00.
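The clock-offset correction described above can be sketched as follows. This is a minimal Python illustration, not the R code in Additional file 1. It assumes that both tracks are lists of (time_s, x_m, y_m) tuples in a projected coordinate system, and it linearly interpolates the GPS track at each VPS timestamp. In place of BFGS, it uses a golden-section search over an assumed ±60 s bracket, which presumes that mean error is unimodal in the offset within that bracket. All function names are hypothetical.

```python
import math
from bisect import bisect_left

def interp_track(times, xs, ys, t):
    """Linearly interpolate a time-sorted track at time t; None outside its span."""
    if t < times[0] or t > times[-1]:
        return None
    i = bisect_left(times, t)
    if times[i] == t:
        return xs[i], ys[i]
    w = (t - times[i - 1]) / (times[i] - times[i - 1])
    return (xs[i - 1] + w * (xs[i] - xs[i - 1]),
            ys[i - 1] + w * (ys[i] - ys[i - 1]))

def mean_error(offset_s, gps, vps):
    """Mean distance (m) between VPS positions and the GPS track shifted by offset_s.

    gps and vps are lists of (time_s, x_m, y_m) tuples sorted by time; offset_s is
    added to the GPS timestamps to express them on the VPS clock.
    """
    times = [t + offset_s for t, _, _ in gps]
    xs = [x for _, x, _ in gps]
    ys = [y for _, _, y in gps]
    errs = []
    for t, x, y in vps:
        ref = interp_track(times, xs, ys, t)
        if ref is not None:               # skip VPS fixes outside the GPS time span
            errs.append(math.hypot(x - ref[0], y - ref[1]))
    return sum(errs) / len(errs) if errs else float("inf")

def best_offset(gps, vps, lo=-60.0, hi=60.0, tol=0.01):
    """Golden-section search for the offset minimising mean_error on [lo, hi]."""
    g = (math.sqrt(5.0) - 1.0) / 2.0      # inverse golden ratio, about 0.618
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    fc, fd = mean_error(c, gps, vps), mean_error(d, gps, vps)
    while b - a > tol:
        if fc < fd:                       # minimum lies in [a, d]
            b, d, fd = d, c, fc
            c = b - g * (b - a)
            fc = mean_error(c, gps, vps)
        else:                             # minimum lies in [c, b]
            a, c, fc = c, d, fd
            d = a + g * (b - a)
            fd = mean_error(d, gps, vps)
    return (a + b) / 2.0

if __name__ == "__main__":
    # Made-up check: straight track at 0.6 m/s with the VPS clock 5 s ahead of GPS.
    gps = [(float(t), 0.6 * t, 0.0) for t in range(1000)]
    vps = [(float(t), 0.6 * (t - 5), 0.0) for t in range(100, 900, 30)]
    print(round(best_offset(gps, vps), 2))
```

A bracketing search was chosen here only because it needs no external libraries. With real tracks, plotting mean_error over the bracket first is a cheap way to check the unimodality assumption.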

Error evaluation based on test data

To evaluate baseline array accuracy, we enumerated three quantities for the stationary and mobile test tags: the position yield (number of positions observed/maximum possible number of positions, based on transmission rate), the proportion of positions with less than 6 m error, and the number of positions with greater than 15 m error. Position yield was calculated as the total number of positions estimated by the VPS divided by the number of acoustic tag transmissions emitted during the test period (maximum transmission number). The number of positions (fixes) can be counted directly. First, the average time for one complete transmission cycle is determined by adding the average programmed interval between the end of one transmission and the start of the next to the length of a transmission (burst length). The V9P-2H tag had a pre-set transmission interval that ranged from 15 to 45 s (average: 30 s) and a burst length that alternated between 3.26 and 3.54 s. Each burst was always composed of a series of eight pings, but its length varied because the tag alternated between two signals: one provided a depth code and a short (two-digit) tag identifier, and the other provided a longer (five-digit) tag identifier that is more robust to misidentification. The resulting average length of one transmission cycle was 33.4 s (30 s + (3.26 s + 3.54 s)/2). The interval between transmissions is readily available; however, the burst length should be obtained before performing this calculation. Second, the total transmission period is divided by the length of one transmission cycle to obtain the maximum transmission number. Average error was calculated, and positions with unacceptably large error (>15 m) were enumerated for all three tests (two stationary and one mobile).
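The baseline yield and error arithmetic can be sketched in Python as below. The function names and the example position count (15000) are hypothetical. The interval and burst lengths are the V9P-2H values given above, and the start and end times are those of stationary Location 2. For a stationary tag, the reference location is taken here as the coordinate-wise median of its positions, which is one assumed reading of a "median" true location.

```python
import math
import statistics
from datetime import datetime

def cycle_length_s(mean_interval_s, burst_lengths_s):
    """Average transmission cycle = mean programmed interval + mean burst length."""
    return mean_interval_s + statistics.mean(burst_lengths_s)

def position_yield(n_positions, start, end, cycle_s):
    """Observed positions divided by the maximum number of transmissions in [start, end]."""
    max_transmissions = (end - start).total_seconds() / cycle_s
    return n_positions / max_transmissions

def median_location(positions):
    """Coordinate-wise median of (x, y) positions, used as the 'true' location (assumption)."""
    xs = [x for x, _ in positions]
    ys = [y for _, y in positions]
    return statistics.median(xs), statistics.median(ys)

def error_summary(positions, ref, ok_m=6.0, bad_m=15.0):
    """Mean error (m), proportion of positions below ok_m, and count above bad_m."""
    errs = [math.hypot(x - ref[0], y - ref[1]) for x, y in positions]
    return {
        "mean_error_m": statistics.mean(errs),
        "prop_below_ok": sum(e < ok_m for e in errs) / len(errs),
        "n_above_bad": sum(e > bad_m for e in errs),
    }

if __name__ == "__main__":
    cycle = cycle_length_s(30.0, [3.26, 3.54])   # about 33.4 s
    start = datetime(2010, 7, 1, 14, 52, 47)     # stationary Location 2
    end = datetime(2010, 7, 8, 17, 3, 20)
    print(round(cycle, 2), position_yield(15000, start, end, cycle))  # count is hypothetical
```

The burst length enters only through its mean, so a tag with more than two alternating signal types would simply pass a longer list to cycle_length_s.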

HPE evaluation with test data

The average error (criterion 1) and the number of positions with large error (>15 m; criterion 2) were estimated for all candidate HPE cutoffs (3 to 15) to evaluate the first two criteria (Table 1). To evaluate whether the HPE value provided for fish tags, which VEMCO calculates from synch-tag detections, was a representative estimate of locational error for fish tags, we calculated 2DRMS for each stationary test tag by first calculating the Euclidean distance between each individual test position and the median, or best estimate, of the 'true' test tag location. The HPE calculated by VEMCO is scaled to an approximately 1:1 relationship between HPE and measured error (m) for synch-tags [9]. 2DRMS can be used to compare this relationship for fish tags and is reported in the baseline evaluation of the dataset. The 2DRMS about the median location is actually a measure of precision [8], but it was also the best available estimate of accuracy. To build the 2DRMS linear model, all data were first binned in one-unit HPE increments, and the average HPE within each bin was calculated to represent the bin. The error in the x direction (Xe) and y direction (Ye) was estimated for each position within a bin, and the 2DRMS (approximately a 95% confidence radius) was then estimated from Xe and Ye within each bin. A linear model fit to the 2DRMS values of the HPE bins of interest (3 to 15), hereafter the 2DRMS line, was used to predict the HPE cutoff that provides 95% confidence about a given target error value. The 2DRMS regression developed for the mobile test was not considered because of the small number of recorded transmissions (138). The 2DRMS model was used to estimate the HPE at which there was 95% confidence that positions had less than 6 m error (criterion 3).
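A minimal sketch of the 2DRMS line follows. It assumes that Xe and Ye (m) have already been computed for each position relative to the reference location, and that each bin's 2DRMS is 2 × √(mean(Xe² + Ye²)), i.e., twice the root mean square horizontal error. An ordinary least-squares line stands in for whatever fitting routine Additional file 1 uses, and the function names are hypothetical.

```python
import math
from collections import defaultdict

def drms2_by_hpe_bin(hpe, xe, ye, lo=3, hi=15):
    """Bin positions into one-unit HPE bins (floor(HPE) in [lo, hi]).

    Returns a list of (mean HPE in bin, 2DRMS in bin), sorted by bin.
    """
    bins = defaultdict(list)
    for h, ex, ey in zip(hpe, xe, ye):
        key = math.floor(h)
        if lo <= key <= hi:
            bins[key].append((h, ex, ey))
    out = []
    for key in sorted(bins):
        rows = bins[key]
        mean_hpe = sum(r[0] for r in rows) / len(rows)
        mean_sq = sum(r[1] ** 2 + r[2] ** 2 for r in rows) / len(rows)
        out.append((mean_hpe, 2.0 * math.sqrt(mean_sq)))
    return out

def fit_line(points):
    """Ordinary least-squares fit of 2DRMS on mean HPE; returns (intercept, slope)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    slope = sxy / sxx
    return my - slope * mx, slope

def hpe_cutoff_for_target(intercept, slope, target_m=6.0):
    """Invert the 2DRMS line: the HPE at which predicted 2DRMS equals target_m."""
    return (target_m - intercept) / slope

if __name__ == "__main__":
    # Made-up data in which 2DRMS equals HPE exactly, so the 6 m cutoff should be about 6.
    hpe = [k + 0.5 for k in range(3, 15) for _ in range(3)]
    xe = [0.3 * h for h in hpe]
    ye = [0.4 * h for h in hpe]
    a, b = fit_line(drms2_by_hpe_bin(hpe, xe, ye))
    print(hpe_cutoff_for_target(a, b, 6.0))
```

Inverting the fitted line assumes a positive slope, i.e., that 2DRMS grows with HPE over the bins of interest; checking the fitted slope before using the cutoff is a sensible guard.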
A range of HPE cutoffs (3 to 15) for removing unacceptably erroneous positions (>6 m error) while avoiding the loss of acceptable positions (<6 m error) was evaluated based on the percentage of incorrectly retained positions (of all retained positions) and incorrectly rejected positions (of all acceptable positions) (Table 2). The goal was for >99% of retained positions to be correctly retained and >95% of acceptable positions to be retained (i.e., <1% incorrectly retained and <5% incorrectly rejected), because the loss of data was considered more acceptable than allowing erroneous positions to remain. These proportions were arbitrary; however, the loss of positions can quickly become problematic when evaluating trajectory data, and retaining unacceptably erroneous positions could result in incorrect habitat assignment, so we selected high target proportions. Variation in temporal gaps was not considered but could be very important to a study.
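The two percentages used to screen candidate cutoffs can be computed as in the following sketch. It rests on several assumptions: a position is retained when its HPE is ≤ the cutoff; it is acceptable when its measured error is < 6 m; and the targets are read as at most 1% incorrectly retained and at most 5% incorrectly rejected. The function names are hypothetical.

```python
def filter_rates(hpe, err, cutoff, max_err_m=6.0):
    """Percent incorrectly retained (of retained) and incorrectly rejected (of acceptable).

    Assumptions: retained means HPE <= cutoff; acceptable means error < max_err_m.
    Returns NaN for a percentage whose denominator is empty.
    """
    pairs = list(zip(hpe, err))
    retained = [e for h, e in pairs if h <= cutoff]
    acceptable = [h for h, e in pairs if e < max_err_m]
    inc_ret = sum(e >= max_err_m for e in retained)
    inc_rej = sum(h > cutoff for h in acceptable)
    pct_ret = 100.0 * inc_ret / len(retained) if retained else float("nan")
    pct_rej = 100.0 * inc_rej / len(acceptable) if acceptable else float("nan")
    return pct_ret, pct_rej

def passing_cutoffs(hpe, err, cutoffs=range(3, 16), max_ret_pct=1.0, max_rej_pct=5.0):
    """Candidate cutoffs meeting both targets (NaN rates never pass)."""
    out = []
    for c in cutoffs:
        pct_ret, pct_rej = filter_rates(hpe, err, c)
        if pct_ret <= max_ret_pct and pct_rej <= max_rej_pct:
            out.append(c)
    return out

if __name__ == "__main__":
    hpe = [2, 4, 6, 8, 10, 12]          # made-up HPE values
    err = [1, 2, 3, 5, 7, 9]            # made-up measured errors (m)
    for c in range(3, 16):
        print(c, filter_rates(hpe, err, c))
    print(passing_cutoffs(hpe, err))
```

Raising the cutoff can only increase the incorrectly retained percentage and decrease the incorrectly rejected one. The passing cutoffs therefore form a contiguous run, and the choice within that run is the accuracy-versus-retention trade-off described above.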

Filtering fish data

We examined a schematic of all fish positions classified by HPE to assess any spatial pattern in HPE and to identify areas of poor array coverage. For each candidate HPE cutoff, we calculated the amount of data rejected inside and outside of the array area, defined as a polygon drawn around the outermost receivers. The post-filtering spatial coverage of the array was also tabulated for all candidate HPE values, with the expectation that positions outside of the array are likely to have higher HPE and lower accuracy with increasing distance from the array periphery [12]. Coverage was calculated by drawing a polygon around the outermost fish positions retained after filtering and calculating its area (km²). Lastly, the evenness of filtered (rejected) positions, that is, how clustered they were within the array, was considered for each individual fish to determine whether specific subjects were more prone to having positions rejected. We visually assessed whether these positions were associated with a particular four-receiver diamond or were distributed throughout the array, occurring in multiple four-receiver diamonds.
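The inside/outside rejection and coverage calculations might be sketched as follows. The sketch makes several assumptions: positions and receiver locations are in a projected coordinate system in metres (e.g., UTM); the "polygon drawn around the outermost" receivers or retained positions is their convex hull; and a position is retained when HPE ≤ the cutoff. All function names are hypothetical. The evenness assessment in the text was visual, so the per-fish rejected share is offered only as a numerical aid.

```python
from collections import defaultdict

def point_in_polygon(x, y, poly):
    """Ray-casting test: True if (x, y) lies inside polygon poly [(x, y), ...]."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        if (y1 > y) != (y2 > y):                     # edge straddles the ray's y
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]

def polygon_area(poly):
    """Shoelace formula; area in squared coordinate units."""
    s = 0.0
    for i in range(len(poly)):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % len(poly)]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2.0

def rejection_by_region(positions, hpe, cutoff, array_poly):
    """Share of positions rejected (HPE > cutoff) inside and outside the array polygon."""
    counts = {"inside": [0, 0], "outside": [0, 0]}   # [rejected, total]
    for (x, y), h in zip(positions, hpe):
        key = "inside" if point_in_polygon(x, y, array_poly) else "outside"
        counts[key][1] += 1
        if h > cutoff:
            counts[key][0] += 1
    return {k: (r / t if t else float("nan")) for k, (r, t) in counts.items()}

def coverage_km2(positions, hpe, cutoff):
    """Convex-hull area (km^2) of positions retained at this cutoff."""
    kept = [p for p, h in zip(positions, hpe) if h <= cutoff]
    hull = convex_hull(kept)
    return polygon_area(hull) / 1e6 if len(hull) >= 3 else 0.0

def rejected_share_by_fish(fish_ids, hpe, cutoff):
    """Per-fish share of positions rejected at this cutoff."""
    total, rejected = defaultdict(int), defaultdict(int)
    for fid, h in zip(fish_ids, hpe):
        total[fid] += 1
        rejected[fid] += h > cutoff
    return {fid: rejected[fid] / total[fid] for fid in total}
```

In practice, array_poly would be convex_hull(receiver_positions), and coverage_km2 would be tabulated over the same candidate cutoffs used for the error criteria.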

Abbreviations

- HPE: Horizontal Positioning Error
- PPE: Position Precision Estimate
- TDOA: Time-difference-of-arrival
- VPS: VEMCO Positioning System
- 2DRMS: Twice the distance root mean square error in two dimensions

References

Cagnacci F, Boitani L, Powell RA, Boyce MS: Animal ecology meets GPS-based radiotelemetry: a perfect storm of opportunities and challenges. Philos Trans R Soc B 2010,365(1550):2157–2162. 10.1098/rstb.2010.0107

Cooke SJ, Hinch SG, Wikelski M, Andrews RD, Kuchel LJ, Wolcott TG, Butler PJ: Biotelemetry: a mechanistic approach to ecology. Trends Ecol Evol 2004,19(6):334–343. 10.1016/j.tree.2004.04.003

Cooke SJ, Midwood JD, Thiem JD, Klimley P, Lucas MC, Thorstad EB, Eiler J, Holbrook C, Ebner BC: Tracking animals in freshwater with electronic tags: past, present and future. Anim Biotelem 2013, 1: 5. 10.1186/2050-3385-1-5

Giuggioli L, Bartumeus F: Animal movement, search strategies and behavioural ecology: a cross-disciplinary way forward. J Anim Ecol 2010,79(4):906–909.

D’Eon RG: Effects of a stationary GPS fix rate bias on habitat selection analyses. J Wildlife Manage 2003, 67: 858–863. 10.2307/3802693

D’Eon RG, Delparte D: Effects of radio-collar position and orientation on GPS radio-collar performance, and the implications of PDOP in data screening. J Appl Ecol 2005,42(2):383–388. 10.1111/j.1365-2664.2005.01010.x

Frair JL, Nielsen SE, Merrill EH, Lele SR, Boyce MS, Munro RHM, Stenhouse GB, Beyer HL: Removing GPS collar bias in habitat selection studies. J Appl Ecol 2004, 41: 201–212. 10.1111/j.0021-8901.2004.00902.x

Withey JC, Bloxton TD, Marzluff JM: Effects of tagging and location error in wildlife telemetry studies. In Radio Tracking and Animal Populations. Edited by: Millspaugh JJ, Marzluff JM. Waltham, MA: Academic Press Inc; 2001:43–75.

Smith F: Understanding HPE in the VPS Telemetry System. VEMCO Tutorials; 2013. http://vemco.com/wp-content/uploads/2013/09/understanding-hpe-vps.pdf

Andrews KS, Tolimieri N, Williams GD, Samhouri JF, Harvey CJ, Levin PS: Comparison of fine-scale acoustic monitoring systems using home range size of a demersal fish. Mar Biol 2011,158(10):2377–2387. 10.1007/s00227-011-1724-5

White GC, Garrott RA: Analysis of Wildlife Radio-Tracking Data. Waltham, MA: Academic Press Inc.; 1990.

Espinoza M, Farrugia TJ, Webber DM, Smith F, Lowe CG: Testing a new acoustic telemetry technique to quantify long-term, fine-scale movements of aquatic animals. Fish Res 2011,108(2):364–371.

Roy R, Beguin J, Argillier C, Tissot L, Smith F, Smedbol S, De-Oliveira E: Testing the VEMCO Positioning System: spatial distribution of the probability of location and the positioning error in a reservoir. Anim Biotelem 2014, 2: 1. 10.1186/2050-3385-2-1

Scheel D, Bisson L: Movement patterns of giant Pacific octopuses, Enteroctopus dofleini (Wülker, 1910). J Exp Mar Biol Ecol 2012, 416–417: 21–31.

Furey NB, Dance MA, Rooker JR: Fine-scale movements and habitat use of juvenile southern flounder Paralichthys lethostigma in an estuarine seascape. J Fish Biol 2013, 82: 1469–1483. 10.1111/jfb.12074

McMahan MD, Brady DC, Cowan DF, Grabowski JH, Sherwood GD: Using acoustic telemetry to observe the effects of a groundfish predator (Atlantic cod, Gadus morhua) on movement of the American lobster (Homarus americanus). Can J Fish Aquat Sci 2013,70(11):1625–1634. 10.1139/cjfas-2013-0065

Bradshaw CJA, Sims DW, Hays GC: Measurement error causes scale-dependent threshold erosion of biological signals in animal movement data. Ecol Appl 2007,17(2):628–638. 10.1890/06-0964

Vrieze LA, Bergstedt RA, Sorensen PW: Olfactory-mediated stream-finding behavior of migratory adult sea lamprey (Petromyzon marinus). Can J Fish Aquat Sci 2011, 68: 523–533. 10.1139/F10-169

Meckley TD, Wagner CM, Gurarie E: Coastal movements of migrating sea lamprey (Petromyzon marinus) in response to a partial pheromone added to river water: implications for management of invasive populations. Can J Fish Aquat Sci 2014,71(4):533–544. 10.1139/cjfas-2013-0487

Fauchald P, Tveraa T: Using first-passage time in the analysis of area-restricted search and habitat selection. Ecology 2003,84(2):282–288. 10.1890/0012-9658(2003)084[0282:UFPTIT]2.0.CO;2

Barraquand F, Benhamou S: Animal movements in heterogeneous landscapes: identifying profitable places and homogenous movement bouts. Ecology 2008, 89: 3336–3348. 10.1890/08-0162.1

Katopodis C, Gervais R: Ecohydraulic analysis of fish fatigue data. River Res Applic 2012, 28: 444–456. 10.1002/rra.1566

Espinoza M, Farrugia TJ, Lowe CG: Habitat use, movements and site fidelity of the gray smooth-hound shark (Mustelus californicus, Gill 1863) in a newly restored southern California estuary. J Exp Mar Biol Ecol 2011,401(1):63–74.

Lewis JS, Rachlow JL, Garton EO, Vierling LA: Effects of habitat on GPS collar performance: using data screening to reduce location error. J Appl Ecol 2007,44(3):663–671. 10.1111/j.1365-2664.2007.01286.x

Biesinger Z, Bolker BM, Marcinek D, Grothues TM, Dobarro JA, Lindberg WJ: Testing an autonomous acoustic telemetry positioning system for fine-scale space use in marine animals. J Exp Mar Biol Ecol 2013, 448: 46–56.

Coates JH, Hovel KA, Butler JL, Klimley AP, Morgan SG: Movement and home range of pink abalone Haliotis corrugata: implications for restoration and population recovery. Mar Ecol Prog Ser 2013, 486: 189–201.

Hays GC, Åkesson S, Godley BJ, Luschi P, Santidrian P: The implications of location accuracy for the interpretation of satellite-tracking data. Anim Behav 2001, 61: 1035–1040. 10.1006/anbe.2001.1685

Nocedal J, Wright SJ: Numerical Optimization. New York, NY: Springer; 1999.

Acknowledgements

The work described herein was funded through a grant from the Great Lakes Fishery Commission to CMW and was accomplished through considerable assistance from the US Fish and Wildlife Service and the USGS Hammond Bay Biological Station. We would like to thank Frank Smith of VEMCO for providing us with an informative document explaining the calculation of HPE prior to its public release and for informative discussions and critique of our use of HPE. We would also like to thank Dr. Robert Goodwin for assistance with developing GPS collection methodology and Eric Willman, Amber Masters, and Brett Diffin for assistance with deploying acoustic equipment. We also thank Dr. Daniel Hayes, Dr. Charles Krueger, and three anonymous reviewers for useful comments on the manuscript. This manuscript is contribution number 1843 of the Great Lakes Science Center. This work was funded by the Great Lakes Fishery Commission by way of Great Lakes Restoration Initiative appropriations (GL-00E23010-3). This paper is contribution 7 of the Great Lakes Acoustic Telemetry Observation System (GLATOS).

Disclaimer

Any use of trade, product, or firm names is for descriptive purposes only and does not imply endorsement by the U.S. Government.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

TDM, CMW, and CMH planned the underlying telemetry study as well as planned for and performed the field evaluation tests. All authors provided substantial intellectual contributions to the overall approach we suggest for filtering PPE associated data, and specifically influenced the way we suggest using HPE to filter VPS data. TDM wrote initial drafts of the manuscript. All authors provided substantial peer review of the final manuscript and read and approved the final manuscript.

Electronic supplementary material

40317_2013_27_MOESM1_ESM.pdf

Additional file 1: R code for filtering VEMCO positioning system data with horizontal positioning error (HPE). (PDF 18 MB)

Rights and permissions

This article is published under an open access license.

About this article

Cite this article

Meckley, T.D., Holbrook, C.M., Wagner, C.M. et al. An approach for filtering hyperbolically positioned underwater acoustic telemetry data with position precision estimates. Anim Biotelemetry 2, 7 (2014). https://doi.org/10.1186/2050-3385-2-7

DOI: https://doi.org/10.1186/2050-3385-2-7