Abstract

Background

Health interview surveys are important data sources for empirical research in public health. However, the diversity of methods applied, such as in the mode of data collection, makes it difficult to compare results across surveys, time, or countries. The aim of this study was to explore whether the prevalence rates of health-related indicators amongst adults differ when self-administered paper mail questionnaires (SAQ-Paper), self-administered web surveys (SAQ-Web), and computer-assisted telephone interviews (CATI) are used for data collection in a health survey.

Methods

Data were obtained from a population-based mixed-mode health interview survey of adults in Germany carried out within the ‘German Health Update’ (GEDA) study. Data were collected either by SAQ-Paper (n = 746), SAQ-Web (n = 414), or CATI (n = 411). Predictive margins from logistic regression models were used to estimate the prevalence rates of chronic conditions, subjective health, mental health, psychosocial factors, and health behaviours, adjusted for the socio-demographic characteristics of each mode group.

Results

Socio-demographic characteristics were found to differ significantly between study participants who responded by SAQ-Paper, SAQ-Web, and CATI. Crude prevalence rates for health-related indicators also showed significant variation across all three survey modes. After adjustment for socio-demographic factors, however, significant differences in prevalence rates between the two self-administered modes (SAQ-Paper and SAQ-Web) were found in only 2 of the 19 health-related indicators studied. By contrast, the differences between CATI and the two self-administered modes remained significant, especially for indicators of mental and psychosocial health and self-reported sporting activity.

Conclusions

The findings of this study indicate that prevalence rates obtained from health interview surveys can vary with the mode of data collection, primarily between interviewer-administered and self-administered modes. Hence, the type of survey mode used should be considered when comparing results from different health surveys. Mixing self-administered modes, such as paper-based questionnaires and web surveys, may be a suitable combination for minimizing mode differences in mixed-mode health interview surveys.

Background

Empirical research in public health is frequently based on data derived from population-based health interview surveys in which members of the general population are questioned about health issues. However, the methods applied, such as the sampling procedures and modes of data collection, are diverse [1]. As a result, investigators face numerous challenges when attempting to compare results across individual surveys, time, or countries.

When designing a survey, researchers aim to optimize data collection procedures and reduce total survey errors within available time and budget parameters [2]. Investigators have to find the most affordable and feasible survey methods, which can, however, differ across countries and settings or between different target populations. In certain cases, the best affordable method is a mixed-mode survey design in which different modes of data collection are offered to respondents [2]. Mixed-mode designs are considered to increase response rates, improve sample composition and data quality, and lower survey costs [3].

Nevertheless, different modes of data collection are known to have differing influences on the response behaviour of study participants. For instance, the amount of effort needed to answer a question can vary across modes and lead to a range of response errors referred to as ‘satisficing effects’ [4]. Another well-known and frequently described mode effect arises from the presence of an interviewer, the so-called ‘interviewer effect’ [2, 5]. In personal interviews, such as face-to-face interviews (F2F), computer-assisted personal interviews (CAPI), or paper and pencil interviews (PAPI), the effects of social desirability can also be observed. This means that respondents tend to answer sensitive questions (for instance on drug consumption or sexual behaviours) in a way that fits societal norms, so as not to upset the interviewer or appear deviant themselves. Another mode effect is the tendency of respondents to agree with interviewer statements and to answer related questions positively (acquiescence), especially when interacting with another person [3]. Additionally, in the presence of an interviewer, respondents tend to round up their answers to scalar or numerical questions (the heaping effect). A possible explanation for this behaviour is that respondents may feel pressed for time because the interviewer is waiting for their answer and, thus, they allocate less time to remembering or calculating exactly [6, 7].

Independently of the presence or absence of an interviewer, data collection methods can differ in two other important dimensions: how the information is presented (visually, orally, or both), and how the respondents convey their answers (spoken, written, or typed) [8, 9]. Previous research argues that in visual modes respondents tend to answer categorical questions with the first categories (the primacy effect), while in oral modes respondents tend to choose the last categories (the recency effect) [10]. In oral modes, respondents also tend to give more positive answers on scale questions than do respondents in visual modes [3, 11]. Moreover, it is assumed that self-administered modes result in fuller use of the entire scale, while interviewer-administered modes favour the end points [12]. On the whole, it is recognised that when modes differ at two levels (e.g. interviewer and oral versus self-administered and visual), data quality varies more than when modes differ at only one level [13].

Mode effects have implications for the comparability of data collected by different survey modes. In recent years, a considerable number of studies have dealt with possible mode effects, their strength, and their impact on survey estimates. Unfortunately, their results are difficult to compare, as most studies examined different populations and topics, applied different sampling procedures, and tested different questions and instruments. This may be one reason why many of the results are inconsistent and partially contradictory. Even within a single study, firm conclusions could sometimes not be drawn, as the mode of data collection may have had a strong effect on some measures, whereas for others there was minimal evidence of mode effects [14].

Studies that compared web and paper-based questionnaires have found either very few mode effects, or none at all. Bäckström and Nilsson [15] found that the most prominent differences are related to the gender effect in web questionnaires. De Bernando and Curtis [16] report, though, that there are no significant mode differences once demographic variables (such as employment and income) are added to the analyses. Other studies have revealed that there is a higher response from the highly educated in web questionnaires, but that this does not affect the response behaviour of the participants [17]. McCabe et al. [18] did not find substantial differences in estimates of alcohol consumption between web and paper-based questionnaires in a non-randomised mixed-mode study.

Mode effects are more often found when comparing computer-assisted telephone interviewing (CATI) and web questionnaires. One consistent result is that people interviewed by telephone tend to give more positive answers to scale questions than people completing web questionnaires [19–21]. Positive answers to questions on the mental dimensions of health-related quality of life were also found to be higher in telephone surveys [22].

Substantial mode differences in the reporting of self-assessed health items are also shown in comparisons between CATI and self-administered paper questionnaires, where extreme response categories are used more frequently among telephone respondents (extreme response style) [23]. The authors therefore suggest caution when comparing prevalence rates across surveys or when studying time trends, as mode effects may be as large as the effects under investigation. A study of cannabis consumption among adults in Germany showed a lower prevalence among people interviewed by telephone than among those who participated through paper-based questionnaires [24]. Another study on mode effects in health surveys revealed that, in comparisons of face-to-face and self-administered modes, there were no significant mode effects for indicators related to the use of health services, but there were significant mode effects for indicators related to self-reported health-related quality of life, health behaviour, social relations, and morbidity [25].

In general, the greatest differences between modes are attributed to interviewer effects, especially on sensitive topics. When a set of modes is compared, it is relatively frequently reported that the largest mode effects can be observed between self-administered and personal interview modes, rather than within modes [21, 26, 27]. Interviewer effects are most often reported on the desirable or undesirable aspects of certain societal behaviours [12, 28, 29]. Although it is also reported that mode effects exist, and a face-to-face survey mode generates more socially desirable responses than a web survey, it also has to be recognized that those effects may not be as pervasive as might be expected [30].

The aim of this study was to explore whether the prevalence rates of certain health-related indicators are affected by the survey mode used for data collection in a health survey of adults. To this end, we compared the prevalence rates of chronic conditions, subjective health, psychosocial factors, mental health, and health behaviours obtained from paper (mailed) questionnaires, web surveys, and telephone interviews, adjusted for socio-demographic differences between mode groups. The results of this study will contribute to a better understanding of the differences in the results of population-based health surveys that use different modes of data collection.

Methods

Material and study design

Data were obtained from a pilot study carried out within the ‘German Health Update’ (GEDA), a national health interview survey among adults in Germany. The GEDA study is part of the nationwide Health Monitoring System administered by the Robert Koch Institute, the national public health institute in Germany. The aim of the regularly conducted cross-sectional GEDA surveys is to provide current data on population health, health determinants, and the use of health services. Data are used for national and European Union health reporting, health policies and public health research [31, 32]. Previous GEDA surveys were designed as single-mode telephone surveys in which data were collected by CATI. Those surveys were based on samples of telephone numbers from the entire German fixed-line network. In view of increasing non-response errors and selection bias, a mixed-mode pilot study (GEDA 2.0) was carried out using a sample of addresses derived from local registry offices instead of a sample of telephone numbers. The aims of this pilot study were (1) to compare two mixed-mode survey designs with a single-mode telephone design in respect of response rates, sample compositions, and data quality, and (2) to explore whether estimates of health indicators differ between different modes of data collection. In the present paper, the focus is on the second aim of the study. Accordingly, data from GEDA 2.0 were used to investigate differences in prevalence rates between the three modes of data collection used in the study. It has to be acknowledged that the GEDA 2.0 study did not have an experimental design specifically tailored to the investigation of pure mode effects; nevertheless the data obtained in GEDA 2.0 allow for comparisons of health indicators between the different modes used. This can be achieved by statistical adjustment for socio-demographic differences between the mode groups due to differential non-response, similar to what was done in previous studies of mode differences [3, 16, 24, 25].

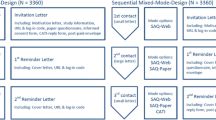

The GEDA 2.0 study was a pilot survey based on a sample of adults registered in the local resident registries of six municipalities covering urban and rural localities as well as the eastern and western regions of Germany. Subjects were selected using a disproportionate stratified random sampling procedure. A gross sample of 10,080 subjects was randomly allocated to three different designs: (a) a sequential mixed-mode survey design; (b) a simultaneous mixed-mode survey design; and (c) a single-mode survey design. All selected subjects were invited by mail to participate. Data were collected by means of a self-administered web-based questionnaire (SAQ-Web), a self-administered mail questionnaire (SAQ-Paper), and a standardised computer-assisted telephone interview (CATI). In the sequential mixed-mode design, these three modes of data collection were offered step by step. First, subjects were invited to participate via web. If they did not respond, they were additionally offered a mail questionnaire. If they still did not respond, they were asked to send their telephone number by mail in order to participate via CATI. In the simultaneous mixed-mode design, all three modes were offered to respondents at once and they could choose the one they preferred. Finally, those subjects allocated to the single-mode design could only participate via CATI.

A total of 1,571 respondents completed the GEDA 2.0 survey between August and November 2012. In the sequential mixed-mode design, 290 participants (51.7%) responded via SAQ-Web, 264 participants (47.1%) used SAQ-Paper, and 7 participants (1.3%) were interviewed via CATI. In the simultaneous mixed-mode design, 124 participants (20.1%) chose the SAQ-Web option, 482 participants (78.1%) responded by SAQ-Paper, and 11 participants (1.8%) chose CATI. In the single-mode design, a total of 393 CATI interviews were carried out. For the present study, data from all three survey designs were pooled to analyse differences in prevalence rates between the three modes of data collection. Because of the very low number of CATI participants and the very unequal numbers of SAQ-Web and SAQ-Paper participants in the mixed-mode designs, mode differences in prevalence rates could not be examined separately by survey design.
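The pooling step can be checked with a few lines of Python. This is only an illustrative sketch: the counts are those reported in the paragraph above, while the dictionary layout and the function name `pool_by_mode` are assumptions made for this example.

```python
# Respondent counts by survey design and mode, as reported in the text.
counts_by_design = {
    "sequential mixed-mode": {"SAQ-Web": 290, "SAQ-Paper": 264, "CATI": 7},
    "simultaneous mixed-mode": {"SAQ-Web": 124, "SAQ-Paper": 482, "CATI": 11},
    "single-mode": {"SAQ-Web": 0, "SAQ-Paper": 0, "CATI": 393},
}


def pool_by_mode(counts):
    """Sum respondents over survey designs to obtain one total per mode."""
    pooled = {}
    for by_mode in counts.values():
        for mode, n in by_mode.items():
            pooled[mode] = pooled.get(mode, 0) + n
    return pooled


pooled = pool_by_mode(counts_by_design)
total = sum(pooled.values())
print(pooled, total)
```

The pooled totals correspond to the mode group sizes used in the analyses (SAQ-Paper n = 746, SAQ-Web n = 414, CATI n = 411).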

According to the internationally used AAPOR Standard Definitions of outcome rates for surveys [33], the ‘Response Rate 1’ for all three survey designs together was 16.3%. This response rate, also known as the AAPOR minimum response rate, is the number of complete interviews divided by the number of interviews plus the number of non-interviews plus all cases of unknown eligibility.
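For reference, the verbal definition above can be written in the notation of the AAPOR Standard Definitions. The symbols are AAPOR disposition categories, not quantities reported in this paper: I denotes complete interviews, P partial interviews, R refusals and break-offs, NC non-contacts, O other non-interviews, and UH and UO the two groups of cases of unknown eligibility.

```latex
\mathrm{RR1} = \frac{I}{(I + P) + (R + NC + O) + (UH + UO)}
```

Because all cases of unknown eligibility are kept in the denominator, RR1 is the most conservative of the AAPOR response rates.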

The questionnaires contained questions on health and diseases, health behaviour, and socio-demographic characteristics. The wording and order of questions and answers did not differ between modes. The study was approved by The Federal Commissioner for Data Protection and Freedom of Information. Informed consent was obtained from all participants in advance.

Health indicators

We compiled a set of health-related indicators for examining mode differences. Respondents were asked whether they had ever been diagnosed by a doctor as having diabetes, hypertension, dyslipidaemia, coronary heart disease, chronic bronchitis, bronchial asthma, and/or osteoarthrosis. Respondents who answered ‘yes’ were asked whether they had been suffering from this disease during the past 12 months. The prevalence of obesity was assessed by calculating body mass index (BMI) using the World Health Organization criteria (BMI ≥ 30 kg/m²) [34]. We derived BMI from respondents’ self-reported height and weight. Subjective health was assessed with the Minimum European Health Module (MEHM) including questions on self-rated health, chronic conditions, and long-term activity limitations [35].
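The obesity indicator described above can be sketched as follows. The function names and the example values are hypothetical; only the formula (weight in kilograms divided by squared height in metres) and the WHO threshold of 30 kg/m² come from the text.

```python
OBESITY_THRESHOLD = 30.0  # WHO criterion in kg/m^2, as cited in the text


def body_mass_index(weight_kg: float, height_m: float) -> float:
    """Return BMI in kg/m^2 from self-reported weight and height."""
    if weight_kg <= 0 or height_m <= 0:
        raise ValueError("weight and height must be positive")
    return weight_kg / height_m ** 2


def is_obese(weight_kg: float, height_m: float) -> bool:
    """Classify a respondent as obese if BMI >= 30 kg/m^2."""
    return body_mass_index(weight_kg, height_m) >= OBESITY_THRESHOLD


# Hypothetical respondents, for illustration only.
print(is_obese(90, 1.80))   # BMI about 27.8
print(is_obese(100, 1.80))  # BMI about 30.9
```

Since both inputs are self-reported, any mode-related misreporting of height or weight carries over directly into the derived indicator.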

Depressive symptoms were measured using the eight-item Patient Health Questionnaire depression scale (PHQ-8) [36]. Respondents were asked about the presence and frequency of depressive symptoms over the last two weeks (response categories: ‘not at all’, ‘several days’, ‘more than half the days’, or ‘nearly every day’). Current depression was assessed using the diagnostic algorithm for PHQ-8 [36]. Additionally, one item of the Budapest Initiative Mark 2 questionnaire (BI-M2) was used to look at self-reports on mental health [37]. Participants were asked how often they felt depressed (‘daily’, ‘weekly’, ‘monthly’, ‘a few times a year’, or ‘never’). We used the five-item WHO Well-Being Index (WHO-5) to measure current mental well-being [38]. This instrument consists of five questions about the frequency of happiness, calm, having energy, feeling fresh, and interest in daily things during the past two weeks. We considered the positive category ‘very good or excellent well-being’ (sum score > 75) and the negative category ‘poor or minimal well-being’ (sum score ≤ 25) in this analysis. Perceived social support was measured using the three-item Oslo Social Support scale (OSS-3) [39]. Respondents were asked about the number of people they could count on, the level of other people’s interest in their lives, and the availability of help from neighbours. We distinguished between ‘poor’, ‘intermediate’, and ‘strong’ social support [40]. We also used information on personal health behaviours, such as current smoking status, alcohol consumption [41, 42], physical activity [43], sporting activity in the last three months, and participation in vaccination programmes. For most of the health indicators described, the percentage of missing values differed significantly between the modes of data collection, usually with the highest rate of missing values in the SAQ-Paper mode [see Additional file 1].
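As an illustration of the WHO-5 categories used here, the following sketch assumes the standard WHO-5 scoring, in which each of the five items is coded from 0 to 5 and the raw sum is multiplied by 4 to give a score from 0 to 100. That the ‘sum score’ thresholds above refer to this 0 to 100 score is an assumption, and the function names and the label for the middle group are hypothetical.

```python
def who5_percentage_score(item_scores):
    """Return the WHO-5 score on a 0-100 scale (raw sum of five items times 4)."""
    if len(item_scores) != 5:
        raise ValueError("WHO-5 has exactly five items")
    if any(not 0 <= score <= 5 for score in item_scores):
        raise ValueError("each item must be coded from 0 to 5")
    return sum(item_scores) * 4


def who5_category(item_scores):
    """Map a respondent's WHO-5 answers to the categories used in the analysis."""
    score = who5_percentage_score(item_scores)
    if score > 75:
        return "very good or excellent well-being"
    if score <= 25:
        return "poor or minimal well-being"
    return "intermediate"  # hypothetical label for the remaining respondents


# Hypothetical answer patterns, for illustration only.
print(who5_category([5, 5, 4, 4, 4]))
print(who5_category([1, 1, 1, 1, 1]))
```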

Socio-demographic characteristics

A range of socio-demographic characteristics was considered in the analyses. Age at time of interview was calculated using information on year and month of birth. Educational level was measured using the ‘Comparative Analysis of Social Mobility in Industrial Nations’ (CASMIN) [44]. Income level was assessed by a question on monthly household net income, and income quintiles were calculated. Furthermore, we included information on current employment status, marital status, and type of household.

Statistical analysis

As a first step, we calculated crude prevalence rates of diagnosed physical conditions, subjective health, depression, mental well-being, social support, and health behaviours by mode of data collection. Pearson’s χ² tests were used to test for statistically significant differences (α = 0.05). Second, we adjusted these prevalence rates for socio-demographic factors to investigate whether mode differences in crude prevalence rates can be accounted for by socio-demographic differences between the mode groups. The socio-demographically adjusted prevalence rates were calculated using predictive margins [45] computed on the basis of logistic regression models containing socio-demographic factors (age, gender, education, income, labour status, marital status, type of household) and the mode of data collection as covariates. These predictive margins (also called ‘predicted marginal proportions’) represent the weighted average of the predicted probabilities of a respective health-related outcome in each mode group. We used z-tests to test for statistically significant differences between the adjusted prevalence rates. Previous studies suggest that socio-demographic measures are largely mode-insensitive [22]. Hence, the socio-demographic measures used were assumed to be suitable for the adjustment of known socio-demographic differences between the three mode groups.
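The idea behind predictive margins can be illustrated with a minimal sketch. It is not the analysis code used in this study: the coefficients and the four-person sample are invented for illustration, only two covariates are included, and all respondents receive equal weight. Under the usual definition, every respondent in the pooled sample is assigned to each mode in turn, the fitted logistic model predicts the outcome probability, and the predictions are averaged.

```python
import math

MODES = ("SAQ-Paper", "SAQ-Web", "CATI")


def inverse_logit(eta):
    """Convert a linear predictor into a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


def predicted_probability(coefs, person, mode):
    """Predicted outcome probability for one person if surveyed in `mode`."""
    eta = (coefs["intercept"]
           + coefs["age"] * person["age"]
           + coefs["female"] * person["female"]
           + coefs["mode"][mode])
    return inverse_logit(eta)


def predictive_margins(coefs, sample):
    """Average the predictions over the whole sample, once per mode."""
    margins = {}
    for mode in MODES:
        probs = [predicted_probability(coefs, person, mode) for person in sample]
        margins[mode] = sum(probs) / len(probs)
    return margins


# Hypothetical fitted coefficients (log-odds scale); SAQ-Paper is the reference.
example_coefs = {
    "intercept": -2.0,
    "age": 0.03,
    "female": 0.2,
    "mode": {"SAQ-Paper": 0.0, "SAQ-Web": -0.1, "CATI": 0.4},
}
# Hypothetical pooled sample of respondents.
example_sample = [
    {"age": 25, "female": 1},
    {"age": 40, "female": 0},
    {"age": 58, "female": 1},
    {"age": 71, "female": 0},
]

margins = predictive_margins(example_coefs, example_sample)
for mode in MODES:
    print(mode, round(margins[mode], 3))
```

Because the same covariate distribution is used for every mode, any remaining difference between the margins reflects the mode coefficients rather than the socio-demographic composition of the mode groups.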

Results and discussion

Sample characteristics

Respondents who participated by SAQ-Paper, SAQ-Web, and CATI differed significantly in their socio-demographic characteristics (Table 1). SAQ-Web participants were younger, had higher education levels, higher incomes, and higher labour market participation rates, and were more likely to be single and to live in a multi-person household than those who responded by SAQ-Paper and CATI modes. The gender ratio was more balanced in SAQ-Web than in SAQ-Paper and CATI. Participants who responded by SAQ-Paper were less educated and had lower incomes than those who responded via CATI or SAQ-Web. More than a third of the people who participated through SAQ-Paper or CATI modes were aged 65 or above.

Physical conditions and subjective general health

Table 2 shows the prevalence of health-related physical conditions by mode. Most of the crude disease prevalence rates were significantly lower for SAQ-Web than for SAQ-Paper and CATI respondents. Adjustment for socio-demographic characteristics of the mode groups appreciably altered the patterns of prevalence rates. With regard to the 12-month prevalence rates of diseases, we did not find any mode differences after adjusting for socio-demographic factors. However, in respect of lifetime prevalence rates, differences in respiratory diseases (chronic bronchitis and bronchial asthma) between the telephone mode and self-administered modes remained significant.

The crude prevalence rates for subjective health indicators showed a higher self-rating for health by SAQ-Web participants compared with that by SAQ-Paper and CATI participants. However, after adjusting for socio-demographic characteristics no statistically significant differences between the two self-administered modes were evident. Participants interviewed by telephone rated their health more frequently as ‘very good’ (positive extreme category) than respondents who participated via SAQ-Paper.

The results indicate that any variations in the prevalence rates of particular physical conditions and self-rated global health across survey modes can largely be explained by socio-demographic differences between the groups of respondents. No differences between the two self-administered modes were found after adjustment for socio-demographic factors. These findings support previous research suggesting that self-reports on global health do not vary between the different kinds of self-administered modes [16]. However, Shim et al. [46] found that web respondents report better self-rated health than SAQ-Paper respondents. Because of this inconsistency, further research on differences in reports on global health between paper-based and web-based questionnaires is required. Other studies have indicated that people rate their health more highly in telephone surveys than they do in self-administered surveys [23, 47–49]. This is supported by our findings, but not every item of subjective health seems to be affected. The differences in self-reports of respiratory diseases between interviewer and self-administered modes indicated by the present findings have not been found in a comparison of face-to-face and self-administered modes [25]. Therefore, future studies should scrutinise potential mode differences in self-reports about chronic conditions.

Mental and psychosocial health

Prevalence rates relating to depression, mental well-being, and social support are presented in Table 3. The crude prevalence of current depression as defined by the PHQ-8 diagnostic algorithm was higher for CATI-based respondents than for those using SAQ-Web, while after adjusting for socio-demographic characteristics no significant mode difference for depression persisted. The subjective indicator ‘feeling depressed’ showed higher prevalence rates of the positive extreme category ‘never’ in the CATI interview mode compared with the self-administered modes when socio-demographic variables were controlled for. With regard to mental well-being, the crude prevalence of poor or minimal well-being differed significantly across modes. After adjustment for socio-demographic characteristics, the lower percentage of poor or minimal well-being in CATI administered surveys compared with those using SAQ-Paper remained significant. With regard to the positive category (very good or excellent well-being) no significant mode differences were observed. Social support was found to be strongest in CATI and poorest in SAQ-Paper. Large differences in social support between all three survey modes also persisted after adjustment for socio-demographic characteristics.

These results indicate that respondents surveyed by an interviewer rate their mental and psychosocial health as better than respondents who participate via self-administered modes. The positive patterns found in telephone surveys are also apparent in questions related to the mental dimensions of people’s health-related quality of life or to depression and stress [22, 23]. The most favoured explanation for these differences is that interview respondents might be subject to social desirability bias and may seek to answer the questions in a manner that will be viewed favourably by the interviewer [25]. Studies comparing other combinations of modes also report differences in mental health syndrome when an interviewer is involved [50], and no differences when different self-administered modes are compared [51].

Similarly, the differences in the social support answers could also to some extent be explained by a social desirability effect. However, the differences in strong social support between SAQ-Paper and SAQ-Web respondents may have other explanations. Additional circumstances that we could not account for may have had an influence. Further research is necessary to identify the reasons for the differences between SAQ-Paper and SAQ-Web answers, as inconsistencies in the results concerning social relations have also been found in other studies [25, 52].

Health behaviours

The prevalence of health behaviours is shown in Table 4. Smoking did not differ by mode, either before or after adjusting for socio-demographic variables. The crude prevalence of people never drinking alcohol was lower for SAQ-Web respondents than for CATI respondents, while the socio-demographically adjusted prevalence rates showed no statistically significant differences in drinking patterns across modes. With respect to the crude prevalence of physical activity, there were no significant differences between SAQ-Paper and SAQ-Web respondents, while there were significant differences between CATI and SAQ-Paper as well as between CATI and SAQ-Web respondents. After adjusting for socio-demographic characteristics, all the significant differences disappeared except for the difference in high physical activity between CATI and SAQ-Web. High sporting activity was reported much more frequently by CATI respondents than by respondents who participated via SAQ-Web and SAQ-Paper (no differences between SAQ-Web and SAQ-Paper were observed). The prevalence of no sporting activity was in turn higher for SAQ-Paper than for SAQ-Web and CATI respondents. These distinct differences remained significant after adjustment for socio-demographic factors. Conversely, differences in participation in influenza vaccination programmes were only observed in the crude prevalence rates, while the adjusted percentages showed no statistically significant variation across modes. Altogether, these results show that for the majority of the health-related behaviours there are no mode differences. Regarding sporting activity, though, researchers should be cautious when interpreting data collected by different modes.

The results described here are to some extent comparable with findings from other studies. It was previously suggested that there are no differences between self-administered and interviewer modes with respect to smoking rates [23, 25] and alcohol consumption [18, 25]. A more detailed investigation, however, showed that while there are no differences in the number of people smoking and in alcohol consumption, there are differences in the level of consumption, that is, in the number of cigarettes smoked per day and the number of units of alcohol consumed [52]. There are also studies that have reported mode effects in alcohol consumption measures, with self-administered modes showing a higher rate of consumption than modes involving an interviewer [53, 54].

Studies that have looked at measures of physical activity also find differences according to survey mode. Modes in which an interviewer is involved show higher levels of physical activity than self-administered modes [25, 52]. This is consistent with our findings on physical activity, and partly with those on sporting activity. However, the differences between SAQ-Paper and SAQ-Web respondents in the category of no sporting activity might have another explanation. It is possible that there are some characteristics of the respondents that we have not accounted for in the adjusted models, such as their occupations. For instance, people whose occupation requires a lot of physical activity are usually less active in their leisure time [55]. People with such occupations may tend to participate in surveys using paper-based rather than web-based questionnaires. This could explain the lower level of sporting activity among SAQ-Paper participants compared with SAQ-Web participants.

Strength and limitations

The strength of this study was that the respondents who participated via SAQ-Paper, SAQ-Web, and CATI were selected from an identical sampling frame. Moreover, all respondents were surveyed in the same time period and in the same regions of Germany. The contents of the questionnaires, the wording of questions and response categories, as well as the order of questions in the questionnaire were also identical in each mode. In spite of these strengths, there are several limitations to our study. The pilot study GEDA 2.0 was predominantly designed to compare two mixed-mode survey designs with one single-mode survey design in respect of response rates and sample composition. Therefore, subjects were randomly allocated to three survey designs but not to the three modes of data collection. The present study on mode differences was, hence, a secondary analysis of the data obtained in the GEDA 2.0 study. Considering the study design, it has to be acknowledged that the identified differences in health indicators across survey modes might not solely be caused by the effects of mode type on response patterns. Because of selection effects arising from mode-specific non-response, there may also be differences between the three mode groups according to characteristics of the respondents that were not measured in the survey. As a consequence, the identified mode differences in health indicators might also be caused by composition effects, which probably could not be completely disentangled from the influence of mode effects by statistical adjustment for known socio-demographic differences. Furthermore, it has to be acknowledged that smaller differences between modes might not have been detected due to a lack of statistical power. Additionally, for the latter reason we did not investigate whether mode differences in prevalence rates vary by age group, gender, or educational level. Therefore, future research should examine whether mode differences are moderated by socio-demographic or other characteristics. In addition, it should be borne in mind that the results of this study are based on a cross-sectional survey. The mode differences found here probably cannot simply be transferred to longitudinal surveys. Another issue to be considered is that we had to combine two response categories of certain health indicators (feeling depressed: ‘daily’/‘weekly’, self-rated health: ‘poor’/‘very poor’) due to low case numbers. This may have masked potential differences between modes.

Due to the relatively low response rate, the results of this study may be affected by selection bias. A possible explanation for the low response rate may be that those subjects allocated to the single-mode survey design (1/3 of the gross sample), in which solely CATI was offered, were asked to send their phone number by post in advance of the telephone interview. This additional effort and transfer of personal data may have substantially lowered the willingness to participate in the study. A possible selection bias due to this should be borne in mind when interpreting the findings.

Conclusions

In summary, the findings of this study indicate that prevalence rates obtained from health interview surveys can vary with the mode of data collection used in the survey. However, objective indicators based on factual issues, such as questions on prevalent diseases, may be less affected than subjective indicators of psychosocial and mental health, or health behaviours. Therefore, the mode of data collection should be considered when comparing results from different health interview surveys, or when the survey mode in periodically conducted surveys is changed over time. Moreover, our findings suggest that mode differences mainly exist between interviewer modes and self-administered modes, rather than between different kinds of self-administered questionnaires. Consequently, mixing self-administered modes, such as SAQ-Paper and SAQ-Web, may be a suitable combination for minimizing mode differences in mixed-mode health interview surveys [8]. However, the mode of data collection is only one among many factors that contribute to the total error of an estimate derived from a sample survey [56, 57]. The decision to use a mixed-mode design may depend on wider issues, such as the target population under study, the available time and budget for the study, or the questions to be asked in the survey [2].

References

Koponen P, Aromaa A: Survey Design and Methodology in National Health Interview and Health Examination Surveys. Review of Literature, European Survey Experiences and Recommendations. 2000, Helsinki: National Public Health Institute (KTL)

De Leeuw ED: To mix or not to mix data collection modes in surveys. J Off Stat. 2005, 21: 233-255.

Dillman D, Smyth JD, Christian LM: Internet, mail, and mixed-mode surveys: the tailored design method. 2009, Hoboken, New Jersey: John Wiley & Sons, Inc.

Krosnick JA: Response strategies for coping with the cognitive demands of attitude measures in surveys. Appl Cogn Psychol. 1991, 5: 213-236. 10.1002/acp.2350050305.

De Leeuw ED: Data Quality in Mail, Telephone, and Face to Face Surveys. 1992, Amsterdam: TT-Publikaties

Kraus F, Steiner V: Modelling heaping effects in unemployment duration models - with an application to retrospective event data in the German socio-economic panel. Zentrum für Europäische Wirtschaftsforschung. 1995, Discussion Paper No. 95–09

Wolff A: Heaping and its Consequences for Duration Analysis. 2000, Institut für Statistik, Ludwig-Maximilians-Universität München: Sonderforschungsbereich 386, Paper 203

De Leeuw E, Hox JJ: Internet surveys as part of a mixed-mode design. Social and Behavioral Research and the Internet. Edited by: Das M, Ester P, Kaczmirek L. 2011, New York, London: Routledge Taylor & Francis Group

De Leeuw E: Choosing the method of data collection. International Handbook of Survey Methodology. Edited by: De Leeuw E, Hox JJ, Dillman D. 2008, New York: Taylor & Francis, Psychology Press, 113-135. EAM series

Krosnick JA, Alwin DF: An evaluation of a cognitive theory of response-order effects in survey measurement. Public Opin Q. 1987, 51: 201-219. 10.1086/269029.

Christian LM: How Mixed-Mode Surveys are Transforming Social Research: The Influence of Survey Mode on Measurement in web and Telephone Surveys. 2007, Washington State University

Macer T: Weaving, not drowning: an update on take-up and best practices in mixed- and multi-mode research. 2005, [http://www.meaning.uk.com/resources/articles_papers/files/spss_directions_2005.pps]

Revilla M: Quality in unimode and mixed-mode designs: a multitrait-multimethod approach. Surv Res Methods. 2010, 4: 151-164.

Link MW, Mokdad A: Advance letters as a means of improving respondent cooperation in random digit dial studies: a multistate experiment. Public Opin Q. 2005, 69: 572-587. 10.1093/poq/nfi055.

Bäckström C, Nilsson C: Mixed mode surveying. A comparison of paper-questionnaires and web-questionnaires. 2002, Department of Information Technology and Media, Mid Sweden University

De Bernardo DH, Curtis A: Using online and paper surveys: the effectiveness of mixed-mode methodology for populations over 50. Res Aging. 2012

Smith AB, King M, Butow P, Olver I: A comparison of data quality and practicality of online versus postal questionnaires in a sample of testicular cancer survivors. Psycho-Oncology. 2013, 22: 233-237. 10.1002/pon.2052.

McCabe SE, Diez A, Boyd CJ, Nelson TF, Weitzman ER: Comparing web and mail responses in a mixed mode survey in college alcohol use research. Addict Behav. 2006, 31: 1619-1627. 10.1016/j.addbeh.2005.12.009.

Christian LM, Dillman D, Smyth JD: The effects of mode and format on answers to scalar questions in telephone and web surveys. Advances in telephone survey methodology. Edited by: Lepkowski JM, Tucker C, Brick JM, De Leeuw E, Japec L, Lavrakas PJ. 2008, Hoboken, NJ: Wiley, 250-275.

Lugtig PJ, Lensvelt-Mulders GJLM, Frerichs R, Greven F: Estimating nonresponse bias and mode effects in a mixed-mode survey. Int J Mark Res. 2011, 53: 669-686. 10.2501/IJMR-53-5-669-686.

Ye C, Fulton J, Tourangeau R: More positive or more extreme? A meta-analysis of mode differences in response choice. Public Opin Q. 2011, 75: 349-365. 10.1093/poq/nfr009.

Ravens-Sieberer U, Erhart M, Wetzel R, Krügel A, Brambosch A: Phone respondents reported less mental health problems whereas mail interviewee gave higher physical health ratings. J Clin Epidemiol. 2008, 61: 1056-1060. 10.1016/j.jclinepi.2007.12.003.

Feveile H, Olsen O, Hogh A: A randomized trial of mailed questionnaires versus telephone interviews: response patterns in a survey. BMC Med Res Methodol. 2007, 7: 27. 10.1186/1471-2288-7-27.

Kraus L, Pabst A: Studiendesign und methodik des epidemiologischen suchtsurveys 2009. [study design and methodology of the 2009 epidemiological survey of substance abuse]. Sucht. 2010, 56: 315-326. 10.1024/0939-5911/a000043.

Christensen AI, Ekholm O, Glümer C, Juel K: Effect of survey mode on response patterns: comparison of face-to-face and self-administered modes in health surveys. Eur J Public Health. 2013

Bowling A: Mode of questionnaire administration can have serious effects on data quality. J Public Health. 2005, 27: 281-291. 10.1093/pubmed/fdi031.

Klausch T, Hox JJ, Schouten B: Assessing the Mode-Dependency of Sample Selectivity Across the Survey Response Process. 2013, Statistics Netherlands: The Hague

Jäckle A, Roberts C, Lynn P: Telephone Versus Face-to-Face Interviewing: Mode Effects on Data Quality and Likely Causes. Report on Phase II of the ESS-Gallup Mixed Mode Methodology Project. 2006, Colchester: University of Essex

Béland Y, St-Pierre M: Mode effects in the Canadian community health survey: a comparison of CATI and CAPI. Advances in Telephone Survey Methodology. Edited by: Lepkowski JM, Tucker C, Brick JM, De Leeuw ED, Japec L, Lavrakas PJ, Link MW, Sangster RL. 2007, Hoboken (New Jersey): John Wiley & Sons, 297-314.

Heerwegh D: Mode differences between face-to-face and web surveys: an experimental investigation of data quality and social desirability effects. Int J Public Opin Res. 2009, 21: 111-121. 10.1093/ijpor/edn054.

Robert Koch-Institut: Daten und Fakten: Ergebnisse der Studie Gesundheit in Deutschland Aktuell 2009. [Data and Facts: Results of the “German Health Update” Study 2009]. Beiträge zur Gesundheitsberichterstattung des Bundes. 2011, Berlin: Robert Koch-Institut

Robert Koch-Institut: Daten und Fakten: Ergebnisse der Studie Gesundheit in Deutschland Aktuell 2010. [Data and Facts: Results of the “German Health Update” Study 2010]. Beiträge zur Gesundheitsberichterstattung des Bundes. 2012, Berlin: Robert Koch-Institut

American Association for Public Opinion Research (AAPOR): Standard Definitions: Final Dispositions of Case Codes and Outcome Rates for Surveys (Revised 2011). 2011, Deerfield: AAPOR

World Health Organization: Obesity - Preventing and Managing the Global Epidemic. 2000, Geneva: World Health Organization, WHO Technical Report Series, No. 894

Cox B, Van Oyen H, Cambois E, Jagger C, Le Roy S, Robine JM, Romieu I: The reliability of the minimum european health module. Int J Public Health. 2009, 54: 55-60. 10.1007/s00038-009-7104-y.

Kroenke K, Strine TW, Spitzer RL, Williams JB, Berry JT, Mokdad AH: The PHQ-8 as a measure of current depression in the general population. J Affect Disord. 2009, 114: 163-173. 10.1016/j.jad.2008.06.026.

Washington Group on Disability Statistics (WG), Budapest Initiative (BI), United Nations Economic & Social Commission for Asia & the Pacific (UNESCAP): Development of disability measures for surveys: the extended set on functioning. http://www.cdc.gov/nchs/data/washington_group/Development_of_Disability_Measures_for_Surveys_The_Extended_Set_on_Functioning.pdf

Bech P, Olsen LR, Kjoller M, Rasmussen NK: Measuring well-being rather than the absence of distress symptoms: a comparison of the SF-36 mental health subscale and the WHO-five well-being scale. Int J Methods Psychiatr Res. 2003, 12: 85-91. 10.1002/mpr.145.

Meltzer H: Development of a common instrument for mental health. EUROHIS: Developing Common Instruments for Health Surveys. Edited by: Nosikov A, Gudex C. 2003, Amsterdam: IOS Press

Kilpeläinen K, Arpo A, ECHIM Core Group: European Health Indicators. Development and Initial Implementation. 2008, Helsinki: National Public Health Institute

Bush K, Kivlahan DR, McDonell MB, Fihn SD, Bradley KA: The AUDIT alcohol consumption questions (AUDIT-C): an effective brief screening test for problem drinking. Arch Intern Med. 1998, 158: 1789-1795. 10.1001/archinte.158.16.1789.

Reinert DF, Allen JP: The alcohol use disorders identification test: an update of research findings. Alcohol Clin Exp Res. 2007, 31: 185-199. 10.1111/j.1530-0277.2006.00295.x.

Mensink GBM, Lampert T, Bergmann E: Übergewicht und Adipositas in Deutschland 1984–2003 [Overweight and obesity in Germany 1984–2003]. Bundesgesundheitsbl. 2005, 48: 1348-1356. 10.1007/s00103-005-1163-x.

Brauns H, Scherer S, Steinmann S: The CASMIN educational classification in international comparative research. Advances in Cross-National Comparison. Edited by: Hoffmeyer-Zlotnik JHP, Wolf C. 2003, New York: Kluwer, 221-244.

Graubard BI, Korn EL: Predictive margins with survey data. Biometrics. 1999, 55: 652-659. 10.1111/j.0006-341X.1999.00652.x.

Shim JM, Shin E, Johnson TP: Self-rated health assessed by web versus mail modes in a mixed mode survey: the digital divide effect and the genuine survey mode effect. Med Care. 2013, 51: 774-781. 10.1097/MLR.0b013e31829a4f92.

Fowler FJ, Roman AM, Xiao Di Z: Mode effects in a survey of medicare prostate surgery patients. Public Opin Q. 1998, 62: 29-46. 10.1086/297829.

Hanmer J, Hays RD, Fryback DG: Mode of administration is important in US national estimates of health-related quality of life. Med Care. 2007, 45: 1171-1179. 10.1097/MLR.0b013e3181354828.

McHorney CA, Kosinski M, Ware JE: Comparisons of the costs and quality of norms for the SF-36 health survey collected by mail versus telephone interview: results from a national survey. Med Care. 1994, 32: 551-567. 10.1097/00005650-199406000-00002.

Epstein JF, Barker PR, Kroutil LA: Mode effects in self-reported mental health data. Public Opin Q. 2001, 65: 529-549. 10.1086/323577.

Gwaltney CJ, Shields AL, Shiffman S: Equivalence of electronic and paper-and-pencil administration of patient-reported outcome measures: a meta-analytic review. Value Health. 2008, 11: 322-333. 10.1111/j.1524-4733.2007.00231.x.

Tipping S, Hope S, Pickering K, Erens B, Roth MA, Mindell JS: The effect of mode and context on survey results: analysis of data from the health survey for England 2006 and the boost survey for London. BMC Med Res Methodol. 2010, 10: 84. 10.1186/1471-2288-10-84.

Link MW, Mokdad AH: Effects of survey mode on self-reports of adult alcohol consumption: a comparison of mail, web and telephone approaches. J Stud Alcohol. 2005, 66: 239-245.

Aquilino WS: Interview mode effects in surveys of drug and alcohol use: a field experiment. Public Opin Q. 1994, 58: 210-240. 10.1086/269419.

Finger J, Tylleskar T, Lampert T, Mensink G: Physical activity patterns and socioeconomic position: the German National Health Interview and Examination Survey 1998 (GNHIES98). BMC Public Health. 2012, 12: 1079. 10.1186/1471-2458-12-1079.

Biemer PP: Total survey error: design, implementation, and evaluation. Public Opin Q. 2010, 74: 817-848. 10.1093/poq/nfq058.

Groves RM, Lyberg L: Total survey error: past, present, and future. Public Opin Q. 2010, 74: 849-879. 10.1093/poq/nfq065.

Acknowledgements

The pilot study GEDA 2.0 was funded by the German Federal Ministry of Health. We would like to thank the study participants who responded to the survey and all of our colleagues from the Robert Koch Institute who helped carry out the survey. Special thanks are due to Stephan Müters for discussion and helpful comments.

Additional information

Competing interests

The authors declare they have no competing interests.

Authors’ contributions

JH made substantial contributions to the conception of the study, performed the statistical analyses, interpreted the data, and drafted the manuscript. EvdL reviewed the state of research and contributed substantially to the conception and design of the study, the interpretation of data, and the drafting of the manuscript. CL and TZ contributed to the conception and design of the study, the interpretation of the findings, and the critical revision of the manuscript. All authors reviewed, edited, and approved the final manuscript.

About this article

Cite this article

Hoebel, J., von der Lippe, E., Lange, C. et al. Mode differences in a mixed-mode health interview survey among adults. Arch Public Health 72, 46 (2014). https://doi.org/10.1186/2049-3258-72-46
