Abstract

Recent studies have suggested that the presence of iron overload prior to stem cell transplantation is associated with decreased survival. Within these studies, the criteria used to define iron overload have varied considerably. Given the lack of consensus regarding the definition of iron overload in the transplant setting, we sought to methodically examine iron status among transplant patients. We studied 78 consecutive patients at risk for transfusion-related iron overload (diagnoses included AML, ALL, MDS, and aplastic anemia) who received either autologous or allogeneic stem cell transplant. Multiple measures of iron status were collected prior to transplantation and examined for their association with survival. Using these data, three potentially prognostic iron measures were identified and incorporated into a rational and unified scoring system. The resulting Transplant Iron Score assigns a point for each of the following variables: (1) greater than 25 red cell units transfused prior to transplantation; (2) serum ferritin > 1000 ng/ml; and (3) a semi-quantitative bone marrow iron stain of 6+. In our cohort, the score (range 0 to 3) was more closely associated with survival than any available single iron parameter. In multivariate analysis, we observed an independent effect of iron overload on transplant survival (p = 0.01) primarily attributable to an increase in early treatment-related deaths (p = 0.02) and lethal infections. In subgroup analysis, the predictive power of the iron score was most pronounced among allogeneic transplant patients, where a high score (≥ 2) was associated with a 50% absolute decrease in survival at one year. In summary, our results lend further credence to the notion that iron overload prior to transplant is detrimental and suggest iron overload may predispose to a higher rate of lethal infections.

Introduction

Long-standing iron overload can lead to heart and liver failure, resulting in premature death [1]. As our ability to treat iron overload improves, it is increasingly important to identify patients at risk for developing complications secondary to iron overload. Stem cell transplant patients are at risk for excess accumulation of iron resulting from repeated blood transfusions both before and during transplantation [2]. For this reason, it is recommended that transplant survivors with good long-term prognoses be assessed for iron overload [3]. Because iron overload has been perceived to be of primarily long-term detriment, the measurement of iron status prior to transplant has not routinely been performed. However, recent evidence suggests that the determination of iron status before transplant has important prognostic implications [4–6].

Iron overload prior to transplantation was initially identified as a marker of poor prognosis in pediatric β-thalassemia patients [7]. Among those allogeneic transplant recipients, the presence of iron-induced portal fibrosis or hepatomegaly was associated with decreased survival. A later study by Altes et al. suggested that iron overload also adversely impacted those with hematologic malignancies [4]. In that study, very high levels of serum ferritin and transferrin saturation greater than 100% were used as surrogates for iron overload. Meanwhile, a larger study by Armand et al. defined iron overload based solely on serum ferritin, using the highest quartile for each disease type [6]. Using that definition of iron overload, a significant association with transplant survival was seen in patients with myelodysplastic syndrome (MDS) and acute myeloid leukemia (AML).

While each of these retrospective studies suggests that iron overload adversely affects transplant outcome, the clinical definition of iron overload varied considerably between studies. We set out to examine multiple measures of pre-transplant iron status with the goal of determining which marker(s) were most closely associated with clinical outcome following transplant. We chose to study patients at risk for transfusion related iron overload (diagnoses included acute leukemia, MDS, and aplastic anemia) undergoing either autologous or allogeneic transplant. Three measures related to transfusional iron overload were closely associated with transplant survival: (1) number of blood unit transfusions, (2) serum ferritin, and (3) bone marrow iron stores. These readily available measures were combined into a clinical scoring system termed the Transplant Iron Score.

The Transplant Iron Score showed a strong independent association with overall survival. Our findings further validate the detrimental impact of iron overload in the setting of stem cell transplantation and identify a potential mechanism of action.

Methods

We evaluated 78 consecutive adult patients admitted to the Wake Forest transplant unit with a diagnosis of AML, MDS, acute lymphoblastic leukemia (ALL), or aplastic anemia. The included patients were all undergoing their first hematopoietic stem cell transplant between September 9, 1999 and March 19, 2004. The patient demographics and characteristics are summarized in Table 1. This study was approved by both the Protocol Review Committee of the Comprehensive Cancer Center of Wake Forest University and the Institutional Review Board of Wake Forest University School of Medicine.

All serum samples were obtained upon admission to our bone marrow transplant unit, prior to the initiation of the preparative chemotherapy. Samples were continuously stored at -20°C, until measurements of iron parameters were performed. Serum ferritin levels were measured using a two-site chemiluminometric sandwich immunoassay (ADVIA Centaur® Ferritin assay, Bayer Diagnostics, Tarrytown, NY). Transferrin saturation was calculated using the method by Huebers and Finch [8]. Serum levels of transferrin receptor (sTfR) were measured using a commercially available sandwich enzyme immunoassay (EIA) (Ramco Laboratories, Inc. Stafford, TX). C-reactive protein was measured using an enzyme-linked immunosorbent assay (high sensitivity) kit from American Laboratory Products Company, Windham, NH. The kit shows no cross-reactivity against albumin, lysozyme, alpha-1 antitrypsin and other acute phase proteins. Values for aspartate transaminase (AST), alanine transaminase (ALT), total bilirubin (TB), and the international normalized ratio (INR) for blood clotting time were obtained by review of the medical record. All recorded values were within 1 month of the admission date to our unit.

Bone marrow samples obtained within two months of transplantation were reviewed by two of the authors for specimen adequacy (I.M. and Z.L.), and representative sections of the patients' samples were stained with the Gomori's iron stain method [9]. Iron content of the bone marrow was graded by one of the authors (Z.L.) who was blinded to patients' laboratory and clinical parameters. Marrow iron stores were graded according to previously published methods using a 0 to 6+ classification scheme described in detail by Gale et al. [10]. Higher grades were associated with increased visible iron, with a score of 6+ having large visible iron clumps that obscure cellular details. The number of packed red blood cell (pRBC) transfusions prior to transplantation was determined from blood bank records. The ejection fraction (EF) for each patient was based on the most recent pre-transplant echocardiogram or MUGA scan. All measures of EF were performed within 3 months of stem cell transplant. The highest quartile of total transaminases, TB, and INR among our study group was used as a surrogate for early liver dysfunction, while the lowest quartile of EFs was used to identify early pre-existing heart dysfunction.

Based on univariate quartile analysis, the three iron parameters with the strongest survival association were identified. Cutoff values for each parameter were determined independently using comparative statistics. Using this method, multiple pre-defined cutoffs were examined and compared for their association with survival. In order to maintain an adequate sample size on both sides of the cutoff, only values within the second and third quartile were considered. The selected cutoff values demonstrated the highest ability to discriminate survival based on a comparative analysis of hazard ratios.
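The restriction of candidate cutoffs to the second and third quartiles can be illustrated with a minimal sketch. It covers only the filtering step, not the comparison of hazard ratios, and the function name `eligible_cutoffs`, the example data, and the candidate values are hypothetical and not taken from the study.

```python
from statistics import quantiles


def eligible_cutoffs(values, candidate_cutoffs):
    """Keep only pre-defined cutoffs lying between the 25th and 75th percentiles.

    This mirrors the stated goal of keeping an adequate sample size on both
    sides of the cutoff. The quartile method is an assumption (the default
    'exclusive' method of statistics.quantiles).
    """
    q1, _median, q3 = quantiles(values, n=4)
    return [c for c in candidate_cutoffs if q1 <= c <= q3]


# Hypothetical transfusion counts and hypothetical candidate cutoffs.
units = list(range(1, 12))
print(eligible_cutoffs(units, [2, 5, 9, 10]))
```

Each surviving candidate would then be compared by its ability to discriminate survival, a step this sketch leaves out.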

Ultimately, these three iron parameters were incorporated into a unified scoring system. For each patient, a score was calculated by assigning a single point for each of the following: (1) serum ferritin ≥ 1,000 ng/mL, (2) greater than 25 transfused units of red cells, and (3) marrow iron stain of 6+. The sum of points, ranging between 0 and 3, was defined as the Transplant Iron Score. For missing data, no points were assigned. For the single patient in whom fewer than two parameters were available for scoring, the iron score was deemed indeterminate and the patient was excluded from additional statistical analysis. The remaining 77 patients (99 percent) were included for analysis in our study. To allow further analysis within our study, a score of two or greater was deemed "high" and those patients were considered to have transfusion-related iron overload. Meanwhile, a score of zero or one was classified as a "low" score. For purposes of comparing the Transplant Iron Score to other individual iron parameters or combinations of parameters, each iron parameter was rescaled to a 0 to 3 scale. Using this approach, the individual iron parameters were divided into one of four quartile groups, similar to the four possible score groups defined by the Transplant Iron Score. Based on these groupings, a univariate relative risk of death was calculated for each iron parameter using hazard regression analysis.
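The scoring rules described above can be expressed as a short sketch. The cutoffs and the handling of missing data follow the text; the function and constant names are illustrative assumptions, and missing values are represented here as `None`.

```python
from typing import Optional

FERRITIN_CUTOFF_NG_ML = 1000.0  # one point if serum ferritin >= this value
TRANSFUSION_CUTOFF_UNITS = 25   # one point if red cell units > this value
MARROW_STAIN_MAX_GRADE = 6      # one point if marrow iron stain is 6+


def transplant_iron_score(
    ferritin_ng_ml: Optional[float],
    rbc_units: Optional[int],
    marrow_stain_grade: Optional[int],
) -> Optional[int]:
    """Return the 0-3 score, or None when fewer than two parameters exist."""
    inputs = (ferritin_ng_ml, rbc_units, marrow_stain_grade)
    if sum(value is not None for value in inputs) < 2:
        return None  # indeterminate score, excluded from analysis

    score = 0  # missing parameters contribute no points
    if ferritin_ng_ml is not None and ferritin_ng_ml >= FERRITIN_CUTOFF_NG_ML:
        score += 1
    if rbc_units is not None and rbc_units > TRANSFUSION_CUTOFF_UNITS:
        score += 1
    if marrow_stain_grade is not None and marrow_stain_grade >= MARROW_STAIN_MAX_GRADE:
        score += 1
    return score


def score_group(score: Optional[int]) -> Optional[str]:
    """Classify a score as 'high' (two or more) or 'low' (zero or one)."""
    if score is None:
        return None
    return "high" if score >= 2 else "low"
```

For example, a hypothetical patient with ferritin of 1,200 ng/mL, 30 transfused units, and no marrow stain available would receive a score of 2 and be classified as "high".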

Survival time was measured from the date of transplant to the date of death or last known follow-up. All data were censored as of July 1, 2007. The following clinical and demographic parameters were collected for statistical analysis: age at the time of transplant, gender, diagnosis, disease status (no remission, first remission, second remission), transplant type (autologous, related or unrelated allogeneic stem cell transplantation), and cytogenetic data for acute myeloid leukemia patients at the time of diagnosis. Cytogenetic information was grouped into poor, average and favorable categories based on the study by Byrd et al. [11]. The specific cause of death for each patient was determined by chart review and categorized into disease-related mortality, treatment-related mortality, or not determined. All deaths following documented disease relapse were categorized as disease-related mortality. Deaths attributable to treatment-related mortality were further subdivided into deaths resulting from infection, graft-versus-host disease (GVHD), or veno-occlusive disease (VOD) of the liver. Documented infectious deaths were defined by the presence of a positive culture. Cases of suspected lethal infection met strict criteria for sepsis including radiologic imaging consistent with infection [12]. Kaplan-Meier curves were used to estimate median survival and overall survival differences. Cox proportional hazards regression was used to perform multivariate analysis. Results were considered significant when p-values were less than 0.05.
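As an illustration of how a Kaplan-Meier estimate and median survival are obtained from follow-up times with censoring, the following is a minimal sketch of the standard product-limit estimator. It is not the software used in the study, and the function names and example data are hypothetical.

```python
def kaplan_meier(times, events):
    """Product-limit survival estimate.

    times:  follow-up time for each patient (any consistent unit)
    events: 1 if the patient died at that time, 0 if censored
    Returns a list of (time, survival probability) at each death time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = 0
        leaving = 0
        # Group all patients who share the same follow-up time.
        while i < len(data) and data[i][0] == t:
            deaths += int(data[i][1])
            leaving += 1
            i += 1
        if deaths:
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        at_risk -= leaving  # deaths and censored patients both leave the risk set
    return curve


def median_survival(curve):
    """First time at which estimated survival falls to 0.5 or below."""
    for t, s in curve:
        if s <= 0.5:
            return t
    return None  # median not reached during follow-up


# Hypothetical follow-up data: deaths at 1, 3, 4 and one censored at 2.
curve = kaplan_meier([1, 2, 3, 4], [1, 0, 1, 1])
print(curve, median_survival(curve))
```

Censored patients reduce the number at risk without lowering the survival estimate, which is why a median can remain undefined when fewer than half of a group have died by the censoring date.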

Results

Multiple measures related to iron homeostasis were collected prior to stem cell transplant and are listed in Table 2. The individual iron parameter most closely associated with overall survival was the transfusion total, defined as the number of red cell units received prior to transplant. For each increase in quartile of transfused blood (e.g. from the second to the third quartile), the risk of death following transplant increased by a factor of 1.4. Serum ferritin was also significantly associated with transplant survival (p = 0.02), while the marrow iron stain showed a strong trend towards statistical significance (p = 0.08). Though not significant by quartile analysis, a bone marrow iron stain score of 6+ was significantly associated with increased mortality (p = 0.04). The numbers of patients meeting the cutoff for transfusion number, ferritin, and iron stain were 30, 41, and 7, respectively. The Transplant Iron Score, when compared with individual iron parameters and combinations of parameters, was most closely associated with survival (p = 0.0006). The risk of death nearly doubled with each point increase of the Transplant Iron Score.

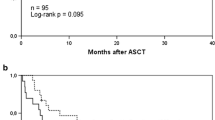

Trend analysis further supported that higher Transplant Iron Scores were associated with decreased overall survival (Figure 1A, p = 0.0003 by log-rank trend). The median survival for patients with no evidence of iron overload (score of 0) was estimated at over 6 years. Patients with higher scores had lower median survival times: 2.4 years for a score of 1; 6.5 months for a score of 2; and 8 days in those patients with a score of 3. The 27 patients (35%) with a high score had a substantially lower median survival of 5.0 months compared to 29.3 months in those with a low score (Figure 1B). The unadjusted hazard ratio associated with a high score was 2.60 (95% CI of 1.47 to 4.61). Our sample size did not allow us to perform rigorous subgroup analyses by disease type; however, we did note that a high iron score was associated with decreased survival among patients with ALL (p = 0.02) and MDS (p = 0.004), with a similar trend among patients with AML (p = 0.06). Survival was also lower among aplastic anemia patients with a high iron score, although this did not reach statistical significance (p = 0.35).

The increase in mortality associated with iron overload resulted primarily from early deaths. In the first six months following transplant, 56% of those with a high iron score had died as compared to 22% among those with a low score (Figure 1B). This equates to a 34% absolute increase in the risk of death associated with transfusion-related iron overload within the first six months. After the six-month time point, mortality rates were nearly identical between groups. The difference in early survival was primarily due to an increased number of treatment-related deaths (p = 0.018) (Figure 2B). Meanwhile, iron overload was not associated with a significant increase in relapse rate (p = 0.84) or disease-related mortality (p = 0.40). The effect of iron overload was most pronounced among the patients undergoing allogeneic transplantation (p < 0.001), with a 50% absolute mortality difference at one year. Additionally, of the 19 allogeneic transplant patients with a high iron score, only two patients survived more than three years after transplant.

We further examined the cause of death among the 20 patients who died as a result of treatment-related complications. The majority of these patients (55%) had either documented (5 patients) or suspected (6 patients) lethal infection. Furthermore, the rate of infection-related mortality was disproportionately high among those with a high iron score (26%) as compared to those with a low score (8%) (p = 0.04 by Fisher's exact test). Clinical information regarding the 7 patients with a high iron score with infection-related mortality is detailed in Table 3. Rates of graft-versus-host disease (GVHD) and veno-occlusive disease (VOD) of the liver were statistically similar between groups (p = 0.9 and p = 0.3 by Fisher's exact test, respectively).
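For readers unfamiliar with Fisher's exact test, the comparison of infection-related mortality between score groups can be sketched as follows. The 2×2 counts are reconstructed from figures reported in the text (7 of 27 high-score patients and, by subtraction from 11 infection deaths among 77 patients, 4 of 50 low-score patients), so they are an assumption rather than data taken from a table. The function name is hypothetical, and the two-sided rule used here (summing all tables no more probable than the observed one) is one common convention that may differ from the software used in the study.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table with the same
    margins that is no more probable than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denominator = comb(row1 + row2, col1)

    def table_prob(x):
        # Probability that the top-left cell equals x given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denominator

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = table_prob(a)
    tolerance = 1 + 1e-9  # guards against floating-point ties
    return sum(
        table_prob(x)
        for x in range(lowest, highest + 1)
        if table_prob(x) <= p_observed * tolerance
    )


# Rows: high score, low score. Columns: infection death, no infection death.
p_value = fisher_exact_two_sided(7, 20, 4, 46)
print(f"two-sided p = {p_value:.3f}")
```

An exact test is a natural choice here because the number of infection deaths in each group is small, which makes large-sample approximations such as the chi-square test less reliable.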

Multivariate analysis was used to establish whether the Transplant Iron Score was independently associated with transplant survival. Covariables included established predictors of transplant outcome, such as age, gender, donor-type, and remission status. In addition to standard transplant risk factors, an inflammatory marker (C-reactive protein) was included along with measures of end-organ damage (transaminase levels, INR, TB, and EF). Because frank organ failure is not likely to be present in eligible transplant patients, quartiles were used in the evaluation of end-organ damage in an attempt to identify early organ damage (i.e. mild transaminitis or a low-normal ejection fraction). The Transplant Iron Score had a significant independent effect on overall survival (p = 0.01) (Table 4). Among the subgroup of patients with AML, cytogenetic data (a marker for aggressive disease) did not influence the significance of this finding when added to the multivariate model. Furthermore, the iron score was independently associated with treatment related mortality (p = 0.04), infection related mortality (p = 0.02), and allogeneic transplant mortality (p = 0.03). When analyzing the subgroup of allogeneic transplant patients, a statistically significant difference in treatment related mortality (p = 0.01) and infection related mortality (p = 0.03) was maintained.

Discussion

Estimating systemic iron stores in stem cell transplant patients is challenging. Serum ferritin has frequently been used as an estimate of systemic iron stores, but is prone to false elevation in the setting of inflammation and malignancy [13]. Other blood markers, such as transferrin saturation and soluble transferrin receptor, have proven to be even less successful in establishing the diagnosis of iron overload [14, 15]. Non-invasive imaging techniques, such as T2* MRI, show promise for determining tissue iron stores but have not been extensively studied in transplant patients [16]. The current "gold standard" for assessing systemic iron overload remains dependent on liver biopsy [17]. However, invasive procedures are often not practical in patients awaiting stem cell transplant, as thrombocytopenia and neutropenia are common. Because of these difficulties, there is no consensus on how to best determine iron status in the transplant setting [18].

We identified three clinical markers of iron overload that were associated with decreased survival: (1) transfusion burden, (2) serum ferritin, and (3) bone marrow iron stores. Intuitively, each of these is a marker of transfusion related iron overload, and all have been used separately to estimate iron overload in transplant and non-transplant studies [5, 6, 19]. Each marker we identified also has the advantage of being readily available in the clinical setting, as exemplified by the high availability within our study.

In comparison to individual iron parameters, the Transplant Iron Score was more closely associated with transplant outcomes. Specifically, the iron score was more closely associated with survival than ferritin quartiles, which have previously been used to estimate pre-transplant iron overload [6]. Additionally, the iron score identified 35 percent of our study patients as having a "high" score, whereas quartiles by definition only identify the highest 25 percent. This suggests the Transplant Iron Score may be simultaneously more accurate and more inclusive than other proposed markers of iron overload in the transplant setting. Further evidence of the potential power of the iron score was seen in multivariate analysis, where the iron score maintained significance while controlling for other risk factors.

Using the Transplant Iron Score, we investigated the mechanism by which iron overload influences transplant survival. Classically, excess iron accumulates over decades resulting in progressive heart and liver dysfunction and eventually leading to premature death [1]. In contrast, our results demonstrate that iron overload at the time of transplant is associated with early mortality, and suggest that this process is not dependent on end-organ damage. Also differing from "classic" iron overload, our data suggest that a relatively low systemic iron burden is sufficient to substantially alter transplant survival. In adults, transfusion with more than 100 units of blood is generally required prior to clinical evidence of iron overload [20, 21]. Meanwhile, even our patients with a high iron score had received only 46 units of pRBCs on average. Taken together, our results suggest that iron overload in the transplant setting influences mortality by an alternate mechanism of action, differing from the classic model of chronic free-radical induced organ damage. Interestingly, the degree of iron overload necessary to impact transplant survival appears to be sub-clinical, underscoring the need for a more sensitive clinical marker of iron such as the Transplant Iron Score.

To further explore the mechanism by which iron overload influences survival, we closely examined the cause of death for each of the transplant recipients. We observed that treatment related mortality occurred more frequently in those patients with a high iron score. The majority of these deaths resulted from infection, thereby suggesting that lethal infection is the dominant mechanism by which iron overload influences transplant survival. While it has been suggested that iron overload predisposes to infection [4, 5, 22], to our knowledge, this is the first report showing an independent association between iron overload and infection related mortality.

In addition to adding insight into the mechanism of action of transplant iron overload, our study also helps to define its clinical applicability. Specifically, our study simultaneously compares the impact of iron overload in both the autologous and allogeneic transplant setting. Using the Transplant Iron Score to define iron overload in both groups, we found allogeneic transplant patients to be at a disproportionately high risk of death associated with iron overload. Our data suggest that iron overload as a prognostic marker may be limited to, or at least more pronounced in, patients undergoing allogeneic stem cell transplant.

We acknowledge the limitations inherent in our small single-institution study and believe that validation of the Transplant Iron Score is necessary prior to its incorporation into clinical practice. Nevertheless, our results strongly support the notion that iron overload prior to transplant is detrimental and provide rationale to study chelation therapy within the transplant setting. Typically, there is only a small window of opportunity between when a patient is identified as needing transplantation and when the transplant is undertaken. If patients with iron overload are detected early in this process, it is conceivable that iron chelation could minimize the negative impact of iron overload on transplant survival. This exciting possibility merits study in a prospective randomized fashion.

References

1. Knovich MA, Storey JA, Coffman LG, Torti SV, Torti FM: Ferritin for the clinician. Blood Rev. 2009, 23 (3): 95-104. 10.1016/j.blre.2008.08.001.

2. Butt NM, Clark RE: Autografting as a risk factor for persisting iron overload in long-term survivors of acute myeloid leukaemia. Bone Marrow Transplant. 2003, 32 (9): 909-13. 10.1038/sj.bmt.1704244.

3. Rizzo JD, Wingard JR, Tichelli A, Lee SJ, Van Lint MT, Burns LJ: Recommended screening and preventive practices for long-term survivors after hematopoietic cell transplantation: joint recommendations of the European Group for Blood and Marrow Transplantation, Center for International Blood and Marrow Transplant Research, and the American Society for Blood and Marrow Transplantation (EBMT/CIBMTR/ASBMT). Bone Marrow Transplant. 2006, 37 (3): 249-61. 10.1038/sj.bmt.1705243.

4. Altes A, Remacha AF, Sureda A, Martino R, Briones J, Canals C: Iron overload might increase transplant-related mortality in haematopoietic stem cell transplantation. Bone Marrow Transplant. 2002, 29 (12): 987-9. 10.1038/sj.bmt.1703570.

5. Miceli MH, Dong L, Grazziutti ML, Fassas A, Thertulien R, Van Rhee F: Iron overload is a major risk factor for severe infection after autologous stem cell transplantation: a study of 367 myeloma patients. Bone Marrow Transplant. 2006, 37 (9): 857-64. 10.1038/sj.bmt.1705340.

6. Armand P, Kim HT, Cutler CS, Ho VT, Koreth J, Alyea EP: Prognostic impact of elevated pretransplantation serum ferritin in patients undergoing myeloablative stem cell transplantation. Blood. 2007, 109 (10): 4586-8. 10.1182/blood-2006-10-054924.

7. Lucarelli G, Galimberti M, Polchi P, Angelucci E, Baronciani D, Giardini C: Bone marrow transplantation in patients with thalassemia. N Engl J Med. 1990, 322 (7): 417-21. 10.1056/NEJM199002153220701.

8. Huebers HA, Finch CA: The physiology of transferrin and transferrin receptors. Physiol Rev. 1987, 67 (2): 520-82.

9. Mathis C: Gomori's Iron Stain. Theory and Practice of Histotechnology: Mosby Co. Edited by: Sheehan D. 1980, 1076-82.

10. Gale E, Torrance J, Bothwell T: The quantitative estimation of total iron stores in human bone marrow. J Clin Invest. 1963, 42: 1076-82. 10.1172/JCI104793.

11. Byrd JC, Mrozek K, Dodge RK, Carroll AJ, Edwards CG, Arthur DC: Pretreatment cytogenetic abnormalities are predictive of induction success, cumulative incidence of relapse, and overall survival in adult patients with de novo acute myeloid leukemia: results from Cancer and Leukemia Group B (CALGB 8461). Blood. 2002, 100 (13): 4325-36. 10.1182/blood-2002-03-0772.

12. Levy MM, Fink MP, Marshall JC, Abraham E, Angus D, Cook D: 2001 SCCM/ESICM/ACCP/ATS/SIS International Sepsis Definitions Conference. Crit Care Med. 2003, 31 (4): 1250-6. 10.1097/01.CCM.0000050454.01978.3B.

13. Pippard MJ: Detection of iron overload. Lancet. 1997, 349 (9045): 73-4. 10.1016/S0140-6736(05)60880-X.

14. Fernandez-Rodriguez AM, Guindeo-Casasus MC, Molero-Labarta T, Dominguez-Cabrera C, Hortal-Cascón L, Perez-Borges P: Diagnosis of iron deficiency in chronic renal failure. Am J Kidney Dis. 1999, 34 (3): 508-13. 10.1016/S0272-6386(99)70079-X.

15. Wish JB: Assessing iron status: beyond serum ferritin and transferrin saturation. Clin J Am Soc Nephrol. 2006, 1 (Suppl 1): S4-8. 10.2215/CJN.01490506.

16. Kornreich L, Horev G, Yaniv I, Stein J, Grunebaum M, Zaizov R: Iron overload following bone marrow transplantation in children: MR findings. Pediatr Radiol. 1997, 27 (11): 869-72. 10.1007/s002470050259.

17. Kowdley KV, Trainer TD, Saltzman JR, Pedrosa M, Krawitt EL, Knox TA: Utility of hepatic iron index in American patients with hereditary hemochromatosis: a multicenter study. Gastroenterology. 1997, 113 (4): 1270-7. 10.1053/gast.1997.v113.pm9322522.

18. Kamble R, Mims M: Iron-overload in long-term survivors of hematopoietic transplantation. Bone Marrow Transplant. 2006, 37 (8): 805-6. 10.1038/sj.bmt.1705335.

19. Davies S, Henthorn JS, Win AA, Brozovic M: Effect of blood transfusion on iron status in sickle cell anaemia. Clin Lab Haematol. 1984, 6 (1): 17-22. 10.1111/j.1365-2257.1984.tb00521.x.

20. Brittenham GM, Griffith PM, Nienhuis AW, McLaren CE, Young NS, Tucker EE: Efficacy of deferoxamine in preventing complications of iron overload in patients with thalassemia major. N Engl J Med. 1994, 331 (9): 567-73. 10.1056/NEJM199409013310902.

21. Marcus RE, Huehns ER: Transfusional iron overload. Clin Lab Haematol. 1985, 7 (3): 195-212.

22. Altes A, Remacha AF, Sarda P, Sancho FJ, Sureda A, Martino R: Frequent severe liver iron overload after stem cell transplantation and its possible association with invasive aspergillosis. Bone Marrow Transplant. 2004, 34 (6): 505-9. 10.1038/sj.bmt.1704628.

Acknowledgements

This research was supported, in part, by the Doug Coley Fund for Leukemia Research (I.M.), the Leukemia Research Fund of Wake Forest University School of Medicine (I.M.), the Bob MacKay Memorial Fund, and a grant from the National Institutes of Health (R37 DK42412).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

JAS co-authored the manuscript, participated in the study design, collected clinical data, and performed the statistical analysis. RFC co-authored the manuscript, collected clinical data, and performed the laboratory testing. ZL performed the iron stain grading and provided pathology expertise. DH provided the blood samples and participated in the design of the study. GP provided data and expertise from our blood bank. YKK participated in the design of the study. MG assisted with data collection. JL participated in the statistical design. SVT participated in design of the study and assisted with proofreading. FMT participated in the design and coordination of the study. IM conceived of the study, and participated in its design and coordination.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Storey, J.A., Connor, R.F., Lewis, Z.T. et al. The transplant iron score as a predictor of stem cell transplant survival. J Hematol Oncol 2, 44 (2009). https://doi.org/10.1186/1756-8722-2-44

DOI: https://doi.org/10.1186/1756-8722-2-44