Abstract

There is a growing appetite for mental health and wellbeing outcome measures that can inform clinical practice at individual and service levels, including use for local and national benchmarking. Despite a varied literature on child mental health and wellbeing outcome measures that focuses on psychometric properties alone, no reviews exist that appraise both the availability of psychometric evidence and suitability for use in routine practice in child and adolescent mental health services (CAMHS), including key implementation issues. This paper presents the findings of the first review that evaluates existing broadband measures of mental health and wellbeing outcomes in terms of these criteria. The following steps were implemented in order to select measures suitable for use in routine practice: literature database searches, consultation with stakeholders, application of inclusion and exclusion criteria, secondary searches and filtering. Subsequently, detailed reviews of the retained measures’ psychometric properties and implementation features were carried out. Eleven measures were identified as having potential for use in routine practice and meeting most of the key criteria: 1) Achenbach System of Empirically Based Assessment, 2) Beck Youth Inventories, 3) Behavior Assessment System for Children, 4) Behavioral and Emotional Rating Scale, 5) Child Health Questionnaire, 6) Child Symptom Inventories, 7) Health of the Nation Outcome Scales for Children and Adolescents, 8) Kidscreen, 9) Pediatric Symptom Checklist, 10) Strengths and Difficulties Questionnaire, 11) Youth Outcome Questionnaire. However, all existing measures identified had limitations as well as strengths. Furthermore, none had sufficient psychometric evidence available to demonstrate that they could reliably measure both severity and change over time in key groups.
The review suggests a way of rigorously evaluating the growing number of broadband self-report mental health outcome measures against standards of feasibility and psychometric credibility in relation to use for practice and policy.

Introduction

There is a growing number of children’s mental health and wellbeing measures that have the potential to be used in child and adolescent mental health services (CAMHS) to inform individual clinical practice e.g. [1], to provide information to feed into service development e.g. [2] and for local or national benchmarking e.g. [3]. Some such measures have a burgeoning corpus of psychometric evidence (e.g., Achenbach System of Empirically Based Assessment, ASEBA [4]; the Strengths and Difficulties Questionnaire, SDQ [5, 6]) and a number of reviews have usefully summarized the validity and reliability of such measures [7, 8]. However, it is also vital to determine which measures can be feasibly and appropriately deployed in a given setting or circumstance [8]. While some attempt has been made to identify measures that might be used in routine clinical practice [9] no reviews have evaluated in depth both the psychometric rigor and the utility of these measures.

National and international policy has focused on the importance of the voice of the child, of shared decision making for children accessing health services, and of self-defined recovery [10–13]. This policy context gives a clear rationale for the use of self-report measures for child mental health outcomes. Further rationale is provided by the costs of administration and burden for other reporters. For example, typical costs for a 30-minute instrument to be completed by a child mental health professional could be as much as £30 (clinical psychologist, £30.00; mental health nurse, £20.00; social worker, £27.00; generic CAMHS worker, £21.00; [14]). However, research has indicated that, due to their difficulties with reading and language and their tendency to respond based on their state of mind at the moment (rather than on more general levels of adjustment), children may be less reliable in their assessments of their own mental health, and there is evidence of under-reporting of behavioral difficulties [15, 16]. Yet there is increasing evidence that even children with significant mental health problems understand and have insight into their difficulties and can provide information that is unique and informative. Provided that efforts are made to ensure measures are age appropriate (in terms of presentation and reading age), young children can be accurate reporters of their own mental health [17–19]. Even in the case of conduct problems, which are commonly identified as problematic for child self-report, evidence suggests that the use of age appropriate measures can yield valid and reliable self-report data [20]. In particular, a number of interactive, online self-report measures have been developed (e.g., Dominic Interactive; see also [17, 21]), which appear to elicit valid and reliable responses from children as young as eight years old.

Assessing mental health outcome measures for use in CAMHS also requires consideration of how outcomes should be compared across services. While more specific measures may provide a more detailed account of specific symptomatology, and may be more sensitive to change, they raise challenges in making comparisons across cases or across services where differences in case mix from one setting to the next are likely. Broad mental health indicators in contrast are designed to capture a constellation of the most commonly presented symptoms or difficulties and, therefore, are of relevance to most of the CAMHS population. They also reduce the need to isolate particular presenting problems at the outset of treatment in order to capture baseline problems to assess subsequent change against – a difficult task in the context of changing problems or situations across therapy sessions [22, 23]. Associated with breadth of the measure is the issue of brevity; even if costs associated with clinician reported measures are avoided, long child self-report measures are likely to either erode clinical time where completed in clinical sessions or present barriers to completion for children and young people when administered outside sessions [22].

The current study is motivated by the argument that challenges to valid and reliable measurement of child mental health outcomes for those accessing services do not simply relate to the selection of a psychometrically sound tool; issues of burden, financial cost and suitability for comparison across services are major barriers to successful implementation. Failure to grapple with such efficacy issues is likely to lead to distortions (based on attrition, representativeness and perverse incentives) in the yielded data. This review places particular importance on: 1) measures that cover broad symptom and age ranges, allowing comparisons between services, regions and years; 2) child self-report measures that offer a more service-user-oriented and feasible perspective on mental health outcomes; 3) measures with a range of available evidence relating to psychometric properties; and 4) the resource implications of measures (in terms of both time and financial cost).

Review

Method

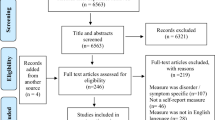

The review process to identify and filter appropriate measures consisted of four stages, summarized in Figure 1.

The review was carried out by a team of four researchers, one review coordinator and an expert advisory group (five experts in child mental health and development, two psychometricians, three educational psychology experts and one economist). The search strategy, and inclusion and exclusion criteria were developed and agreed by the expert advisory group. Searches in respective databases and filtering were carried out by the researchers and review coordinator. Any ambiguous cases were taken to the expert advisory group for discussion.

Stage 1: Setting review parameters, literature searching and consultation

The key purpose of this review was to identify measures that could be used in routine CAMHS in order to inform service development and facilitate regional or national comparison. Because any outcome data collected for these purposes would need to be aggregated to the service level in sufficient numbers to provide reliable information, and would need to allow comparison across services and across years, only measures that cover broad symptom and age ranges were considered. The review focused on measures that included a child self-report version. This was partly because of the cost and burden implications associated with other reporters, especially clinicians, but also because of the recent emphasis on patient reported outcome measures e.g. [11] and evidence that, where measures are developed specifically to be child friendly, children can be accurate reporters of their own mental health e.g. [17, 19]. The review focused on measures that had strong evidence of good psychometric properties and also took account of the resource implications associated with the measures (in terms of both time and financial cost).

Developing the search terms

For the purposes of this review, child mental health outcome measures were included if they sought to provide measurement of mental health in children and young people (up to age 18). To capture this, search terms were developed by splitting ‘child mental health outcomes measure’ into three categories: ‘measurement’, ‘mental health’ and ‘child’. A list of words and phrases reflecting each category was generated (see Table 1).

Search of key databases

Search terms were combined using ‘and’ statements to carry out initial searches focused on four key databases: EMBASE, ERIC, MEDLINE and PsycINFO. Searches resulting in over 200 papers were subjected to basic filtering using the following exclusion criteria: 1) the title made it clear that the paper was not related to children’s mental health outcome measures; or 2) the paper was not in English.
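As a minimal sketch of how terms from the three categories might be combined with ‘and’ statements, the snippet below builds one query per combination of one term from each category. The term lists are hypothetical placeholders (the review's actual terms are those in Table 1), and the query syntax is an assumption rather than the syntax of any particular database.

```python
from itertools import product

# Hypothetical placeholder terms; the review's actual lists are given in Table 1.
SEARCH_TERMS = {
    "measurement": ["measure", "questionnaire"],
    "mental health": ["mental health", "wellbeing"],
    "child": ["child", "adolescent"],
}


def build_queries(terms_by_category):
    """Return one AND-combined query per combination of one term per category."""
    term_lists = [terms_by_category[category] for category in terms_by_category]
    queries = []
    for combination in product(*term_lists):
        # Quote each term so multi-word phrases stay together.
        queries.append(" AND ".join(f'"{term}"' for term in combination))
    return queries


queries = build_queries(SEARCH_TERMS)
```

With two placeholder terms in each of the three categories this produces eight queries; real term lists would yield many more, which is one reason a basic filtering step on large result sets is useful.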

The remaining papers were further sorted based on more specific criteria. Papers were removed if:

- No child mental health outcome measure was mentioned in the abstract;
- The measure indicated was too narrow to provide a broad assessment of mental health;
- They referred to a measure not used with children;
- They were not in English;
- They were a duplicate;
- The measure was used solely as a tool for assessment or diagnosis.

A list of identified measures was collated from the papers that were retained.

Consultation with collaborators and stakeholders

In order to identify other relevant measures, consultation with two key groups about their knowledge of other existing mental health measures was conducted: 1) the experts in child and adolescent psychology, education and psychometrics from the research group, 2) child mental health practitioners accessed via established UK networks (from which 58 practitioners responded). At the completion of Stage 1, 117 measures had been identified.

Stage 2: Filtering of measures according to inclusion and exclusion criteria

In order to determine which of these measures were to be considered for more in-depth review, inclusion and exclusion criteria were established.

Inclusion criteria

A questionnaire or measure was included if it:

- Provided measurement of broad mental health and/or wellbeing in children and young people (up to age 18), including measures of wellbeing and quality of life;
- Was completed by children;
- Had been validated in a child or adolescent context.

Exclusion criteria

A questionnaire or measure was excluded if it:

- Was not available in English;
- Concerned only a narrow set of specific mental disorders or difficulties;
- Could only be completed by a professional;
- Took over 30 minutes to complete;
- Primarily employed open-ended responses;
- Used an age range that was too narrow (e.g. only for preschoolers);
- Had not been used with a variety of populations.

Applying these criteria generated a list of 45 measures (see [24]).
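The Stage 2 inclusion and exclusion criteria can be read as a single pass/fail rule per measure. The following is an illustrative sketch only: the `Measure` record, its field names and the two example measures are hypothetical and do not describe how the review team recorded or coded its data.

```python
from dataclasses import dataclass


@dataclass
class Measure:
    # All fields are hypothetical encodings of the Stage 2 criteria.
    name: str
    broad_mental_health: bool      # broad mental health and/or wellbeing, not one narrow disorder
    child_completed: bool          # has a version completed by children
    validated_with_children: bool  # validated in a child or adolescent context
    available_in_english: bool
    professional_only: bool        # can only be completed by a professional
    minutes_to_complete: float
    mostly_open_ended: bool        # primarily open-ended responses
    narrow_age_range: bool         # e.g. only for preschoolers
    varied_populations: bool       # used with a variety of populations


def passes_stage2(m: Measure) -> bool:
    """True if the measure meets every inclusion criterion and no exclusion criterion."""
    included = (
        m.broad_mental_health
        and m.child_completed
        and m.validated_with_children
    )
    excluded = (
        not m.available_in_english
        or not m.broad_mental_health   # narrow set of specific disorders
        or m.professional_only
        or m.minutes_to_complete > 30
        or m.mostly_open_ended
        or m.narrow_age_range
        or not m.varied_populations
    )
    return included and not excluded


# Two made-up measures, for illustration only.
measure_a = Measure("Measure A", True, True, True, True, False, 10, False, False, True)
measure_b = Measure("Measure B", True, True, True, True, False, 45, False, False, True)
shortlist = [m.name for m in (measure_a, measure_b) if passes_stage2(m)]
```

Here the made-up "Measure B" is dropped solely because its completion time exceeds 30 minutes.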

Stage 3: Secondary searches

The initial searches provided preliminary information on these 45 measures. However, secondary searches on these measures were conducted in order to gather further information about:

- Psychometric properties;
- Symptoms or subscales covered;
- Response format;
- Length;
- Respondent;
- Age range covered;
- Number of associated published papers;
- Settings in which the measure has been used.

Information on specific measures was sought from the following sources (in order of priority): measure manuals, review papers, published papers (prioritizing the most recent), contact with the measure developer(s), other web-based sources. Measures were excluded if no further information about them could be gathered from these sources.

Stage 4: Filtering of measures according to breadth and extent of research evidence

After collecting this information, the measures were filtered based on the quality of the evidence available for the psychometric properties. Measures were also removed at this stage if it transpired they were earlier versions of measures for which more recent versions had been identified. The original inclusion and exclusion criteria were also maintained. In addition, the following criteria were now applied:

1. Heterogeneity of samples – the measure was excluded if the only evidence for it was in one particular population, specifically children with one type of problem or diagnosis (e.g., only those with conduct problems or only those with eating disorders).
2. Extent of evidence – the measure was retained only if it had more than five published empirical studies that reported use with a sample or if psychometric evidence was available from independent researchers other than the original developers.
3. Response scales – the measure was retained only if its response scale was polytomous; simple yes/no checklists or visual analogue scales (VAS) were excluded.
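The three additional Stage 4 criteria can be sketched as a second filter. This is an illustration under stated assumptions: the `Evidence` record, its field names and the example entries are hypothetical, and treating "polytomous" as three or more response options per item is an interpretation made for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Evidence:
    # Hypothetical summary of what the secondary searches found for one measure.
    name: str
    single_problem_only: bool   # evidence only from children with one type of problem
    n_published_studies: int    # published empirical studies reporting use with a sample
    independent_evidence: bool  # psychometric evidence from non-developers
    n_response_options: int     # options per item (2 = yes/no checklist)
    is_vas: bool = False        # visual analogue scale


def passes_stage4(e: Evidence) -> bool:
    """Apply the heterogeneity, extent-of-evidence and response-scale criteria."""
    heterogeneous = not e.single_problem_only
    enough_evidence = e.n_published_studies > 5 or e.independent_evidence
    polytomous = e.n_response_options >= 3 and not e.is_vas
    return heterogeneous and enough_evidence and polytomous


# Made-up entries, for illustration only.
candidates = [
    Evidence("Measure X", False, 12, True, 3),
    Evidence("Measure Y", False, 2, False, 5),  # too little published evidence
    Evidence("Measure Z", False, 9, True, 2),   # yes/no checklist
]
retained = [e.name for e in candidates if passes_stage4(e)]
```

Note that the extent-of-evidence criterion is an "or": a measure with few published studies can still be retained if independent psychometric evidence exists.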

These relatively strict criteria were used to identify a small number of robust measures that are appropriate for gauging levels of wellbeing across populations and for evaluating service level outcomes. After these criteria were applied, the retained measures were subjected to a detailed review of implementation features (including versions, age range, response scales, length and financial costs) and psychometric properties. The range of psychometric properties considered included content validity, discriminant validity, concurrent validity, internal consistency and test-retest reliability. We also considered whether the measure had: undergone analysis using item response theory (IRT) approaches (including whether the measure had been tested for bias or differential performance in different UK populations); evidence of sensitivity to change; or, evidence of being successfully used to drive up performance within services.

Results

The application of the criteria outlined resulted in the retention of 11 measures. The implementation features and psychometric properties of these measures are outlined in Tables 2 and 3.

Discussion

This paper represents the first review that evaluates existing broadband measures of child and parent reported mental health and wellbeing outcomes in children, in terms of both psychometrics and implementation. The eleven measures identified (1. Achenbach System of Empirically Based Assessment (ASEBA), 2. Beck Youth Inventories (BYI), 3. Behavior Assessment System for Children (BASC), 4. Behavioral and Emotional Rating Scale (BERS), 5. Child Health Questionnaire (CHQ), 6. Child Symptom Inventories (CSI), 7. Health of the Nation Outcome Scales for Children and Adolescents (HoNOSCA), 8. Kidscreen, 9. Pediatric Symptom Checklist (PSC), 10. Strengths and Difficulties Questionnaire (SDQ), 11. Youth Outcome Questionnaire (YOQ)) all have potential for use in routine practice. Below we discuss some of the key properties, strengths and limitations of these measures and outline practice implications and suggestions for further research.

In terms of acceptability for routine use (including burden and potential for dissemination), three of the measures identified, though below the stipulated half-hour completion time, exceeded fifty items (ASEBA, BASC, the full BYI), which might limit their use for repeated measurement to track change over time in the way that many services are now looking to track outcomes [3]. These measures are most likely to be useful for detailed assessments and periodic reviews. In addition, the majority of the measures require license fees to use, introducing a potential barrier to use in clinical services. Kidscreen, CHQ, SDQ, HoNOSCA and PSC are all free to use in non-profit organizations (though some only in paper form and some only under particular circumstances).

In terms of scale properties, all the measures identified have met key psychometric standards. Each of the final measures has been well validated in terms of classical psychometric evaluation. In addition, a range of modern psychometric and statistical modelling approaches has been applied to some of these measures (e.g., item response theory (IRT) methods, including categorical data factor analysis and differential item functioning [51]). This is particularly true for the Kidscreen, which is less well known to mental health services than some of the other measures identified; analyses carried out for this measure include both classical and IRT methods [38].

All measures were able to provide normative data and thus the potential for cut-off criteria and for differentiating between clinical and non-clinical groups. However, we found no evidence of any measure being tested for bias or differential performance across different ethnic, regional or socio-economic status (SES) groups in the UK. Evidence of sensitivity to change was found only for the YOQ, ASEBA and SDQ, which were found to have the capacity to be used routinely to assess change over time [52]. The other measures may have such capacity but this was not identified by our searches. However, it is worth noting that many of the measures used a three-point Likert scale (e.g., PSC, SDQ). This may result in limited variability in the data derived, possibly leading to issues of insensitivity to change over time and/or floor or ceiling effects if used as a measure of change. In terms of impact of using these measures, we found no evidence that any measures had been successfully used to drive up performance within services.
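One simple way a service might check for the floor and ceiling effects mentioned above is to compute the share of respondents scoring at the lowest and highest possible totals. The sketch below assumes items scored 0 to 2 (one common coding of a three-point scale) and uses made-up scores purely for illustration; it is not an analysis reported in this review.

```python
def floor_ceiling_share(total_scores, n_items, max_item_score=2):
    """Return (share at lowest possible total, share at highest possible total).

    Assumes every item is scored from 0 to max_item_score.
    """
    if not total_scores:
        raise ValueError("total_scores must not be empty")
    lowest = 0
    highest = n_items * max_item_score
    n = len(total_scores)
    at_floor = sum(1 for score in total_scores if score == lowest) / n
    at_ceiling = sum(1 for score in total_scores if score == highest) / n
    return at_floor, at_ceiling


# Made-up total scores on a hypothetical 5-item subscale (possible range 0 to 10).
example_scores = [0, 0, 1, 3, 10, 0, 2, 0]
floor_share, ceiling_share = floor_ceiling_share(example_scores, n_items=5)
```

In this made-up example half of the respondents sit at the floor, so a subscale like this could not register further improvement for them on a measure where lower scores indicate fewer difficulties.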

In terms of implications for practice it is hoped that identifying these measures and their strengths and limitations may aid practitioners who are under increased pressure to identify and use child- and parent-report outcome measures to evaluate outcomes of treatment [12].

Some limitations should be acknowledged with respect to the current review. It is important to note that some measures were excluded from the current review purely because they did not fit our specific criteria. These measures may nevertheless be entirely appropriate for other purposes. In particular, all measures pertaining to specific psychological disorders or difficulties were excluded because the aim of the review was to identify broad measures of mental health. We recognize that many of these measures are psychometrically sound and practically useful in other settings or with specific groups. Furthermore, as recognized by Humphrey et al. [53] in their review of measures of social and emotional skills, we acknowledge that the publication bias associated with systematic reviews is relevant to the current study and may have affected the inclusion of measures at the final stage of the review, where retention depended on the extent of published evidence. However, we maintain that this criterion is important to ensure the academic rigor of the measure validation.

In terms of future research, what is required is more research into the sensitivity to change of these and related measures [54, 55], their applicability to different cultures, and the impact of their use as performance measurement tools [56]. Research is also needed on the impact of these tools on clinical practice and service improvement [57]. In particular, in the light of clinician and service user anxiety about use of such tools [58–60], it would be helpful to undertake further exploration of their acceptability directly with these groups (Wolpert, Curtis-Tyler, & Edbrooke-Childs: A qualitative exploration of clinician and service user views on Patient Reported Outcome Measures in child mental health and diabetes services in the United Kingdom, submitted).

Conclusions

Using criteria taking account of psychometric properties and practical usability, this review was able to identify 11 child self-report mental health measures with the potential to inform individual clinical practice, feed into service development, and to inform national benchmarking. The review identified some limitations in each measure in terms of either the time and cost associated with administration, or the strength of the psychometric evidence. The different strengths and weaknesses to some extent reflect the heterogeneity in purposes for which mental health measures have been developed (e.g., estimation of prevalence and progression in normative populations, assessment of intervention impact, individual assessment at treatment outset, tracking of treatment progress, and appraisal of service performance). While it is anticipated that as use of such measures diversifies the evidence base will expand, there are some gaps in current knowledge about the full range of psychometric properties of many of the shortlisted measures. However, current indications are that the 11 measures identified here provide a useful starting point for those looking to implement mental health measures in routine practice and suggest options for future research and exploration.

Abbreviations

- ASEBA: Achenbach system of empirically based assessment
- ASI: Adolescent symptom inventory
- BASC: Behavior assessment system for children
- BERS: Behavioral and emotional rating scale
- BYI: Beck youth inventories
- CAMHS: Child and adolescent mental health services
- CASS:S: Conners-Wells adolescent self-report scale: short form
- CBCL: Child behaviour check list
- CDI: Children’s depression inventory
- CGAS: Children's global assessment scale
- CHIP: Child health and illness profile
- CHQ: Child health questionnaire
- CPRS-R: Revised Conners parents rating scale
- CSI: Child symptom inventories
- CTRS-R: Revised Conners teacher rating scale
- ECI: Early childhood inventory
- HoNOSCA: Health of the nation outcome scales for children and adolescents
- HUI: Health utilities index
- PCS: Paddington complexity scale
- PedsQL: Pediatric quality of life inventory
- PHCSCS: Piers-Harris children's self-concept scale
- PRS: Parent report scale
- PSC: Pediatric symptom checklist
- RCMAS: Revised children’s manifest anxiety scale
- SAED: Scale for assessing emotional disturbance
- SDQ: Strengths and difficulties questionnaire
- SES: Socio-economic status
- SR: Self rated
- SRP: Self-report of personality
- SSBD: Systematic screening for behaviour disorders
- SSRS: Social skills rating system
- TRF: Teacher's report form
- TRS: Teacher report scale
- VAS: Visual analogue scale
- WMSSCSA: Walker-McConnell scale of social competence and school-adjustment-adolescent version
- YI: Youth’s inventory
- YOQ: Youth outcome questionnaire
- Y-PSC: Youth pediatric symptom checklist
- YSR: Youth self report
- YQOL-S: Youth quality of life surveillance version instrument.

References

Bickman L, Kelley SD, Breda C, de Andrade AR, Riemer M: Effects of routine feedback to clinicians on mental health outcomes of youths: results of a randomized trial. Psychiatr Serv. 2011, 62: 1423-1429.

Wolpert M, Ford T, Trustam E, Law D, Deighton J, Flannery H, Fugard A: Patient-reported outcomes in child and adolescent mental health services (CAMHS): use of idiographic and standardized measures. J Ment Health. 2012, 21: 165-173. 10.3109/09638237.2012.664304.

Wolpert M, Fugard AJB, Deighton J, Görzig A: Routine outcomes monitoring as part of children and young people's Improving Access to Psychological Therapies (CYP IAPT) – improving care or unhelpful burden?. Child Adolesc Ment Health. 2012, 17: 129-130. 10.1111/j.1475-3588.2012.00676.x.

Achenbach TM, Rescorla L: Manual for the ASEBA school-age forms & profiles: an integrated system of multi-informant assessment. 2001, Burlington, Vt: ASEBA

Goodman R: The Strengths and Difficulties Questionnaire: a research note. J Child Psychol Psychiatry. 1997, 38: 581-586. 10.1111/j.1469-7610.1997.tb01545.x.

Goodman R, Scott S: Comparing the strengths and difficulties questionnaire and the child behavior checklist: is small beautiful?. J Abnorm Child Psychol. 1999, 27: 17-24. 10.1023/A:1022658222914.

Cremeens J, Eiser C, Blades M: Characteristics of health-related self-report measures for children aged three to eight years: a review of the literature. Qual Life Res. 2006, 15: 739-754. 10.1007/s11136-005-4184-x.

Myers K, Winters NC: Ten-year review of rating scales. II: scales for internalizing disorders. J Am Acad Child Adolesc Psychiatry. 2002, 41: 634-659. 10.1097/00004583-200206000-00004.

Hunter J, Higginson I, Garralda E: Systematic literature review: outcome measures for child and adolescent mental health services. J Public Health Med. 1996, 18: 197-206. 10.1093/oxfordjournals.pubmed.a024480.

UK Government: Children Act, Volume 31. 2004, London: The Stationery Office

Black N: Patient reported outcome measures could help transform healthcare. BMJ. 2013, 346: f167-10.1136/bmj.f167.

Department of Health: Liberating the NHS: No decision about me without me. Government response. 2012, London: The Stationery Office

United Nations: The United Nations Convention on the Rights of the Child. 1989, London: UNICEF

Curtis L: Unit Costs of Health and Social Care 2012. 2012, Canterbury: Personal Social Services Research Unit

Marsh H, Debus R, Bornholt L: Validating Young Children's Self-Concept Responses: Methodological Ways and Means to Understand their Responses. Handbook of Research Methods in Developmental Science. 2008, Malden, Oxford and Victoria: Blackwell Publishing Ltd, 23-160.

Edelbrock C, Costello AJ, Dulcan MK, Kalas R, Conover NC: Age differences in the reliability of the psychiatric interview of the child. Child Dev. 1985, 56: 265-275. 10.2307/1130193.

Truman J, Robinson K, Evans AL, Smith D, Cunningham L, Millward R, Minnis H: The strengths and difficulties questionnaire: a pilot study of a new computer version of the self-report scale. Eur Child Adolesc Psychiatry. 2003, 12: 9-14. 10.1007/s00787-003-0303-9.

Schmidt LJ, Garratt AM, Fitzpatrick R: Instruments for Mental Health: A review. 2000, London: Patient-Reported Health Instruments Group

Sharp C, Croudace TJ, Goodyer IM, Amtmann D: The strength and difficulties questionnaire: predictive validity of parent and teacher ratings for help-seeking behaviour over one year. Educ Child Psychol. 2005, 22: 28-44.

Arseneault L, Kim-Cohen J, Taylor A, Caspi A, Moffitt TE: Psychometric evaluation of 5- and 7-year-old children's self-reports of conduct problems. J Abnorm Child Psychol. 2005, 33: 537-550. 10.1007/s10802-005-6736-5.

Deighton J, Tymms P, Vostanis P, Belsky J, Fonagy P, Brown A, Martin A, Patalay P, Wolpert M: The development of a school-based measure of child mental health. J Psychoeduc Assess. 2013, 31: 247-257. 10.1177/0734282912465570.

Chorpita BF, Reise S, Weisz JR, Grubbs K, Becker KD, Krull JL: Evaluation of the brief problem checklist: child and caregiver interviews to measure clinical progress. J Consult Clin Psychol. 2010, 78: 526-536.

Weisz JR, Jensen-Doss A, Hawley KM: Evidence-based youth psychotherapies versus usual clinical care: a meta-analysis of direct comparisons. Am Psychol. 2006, 61: 671-689.

Wolpert M, Aitken J, Syrad H, Munroe M, Saddington C, Trustam E, Bradley J, Nolas SM, Lavis P, Jones A, Fonagy P, Frederickson N, Day C, Rutter M, Humphrey N, Vostanis P, Meadows P, Croudace T, Tymms P, Brown J: Review and recommendations for national policy for England for the use of mental health outcome measures with children and young people. 2008, London: Department for Children, Schools and Families, [http://www.ucl.ac.uk/ebpu/publications/reports]

Beck JS, Beck AT, Jolly JB: Beck Youth Inventories Manual. 2001, San Antonio: Psychological Corporation

Flanagan D, Alfonso V, Primavera L, Povall L, Higgins D: Convergent validity of the BASC and SSRS: implications for social skills assessment. Psychol Sch. 1996, 33: 13-23. 10.1002/(SICI)1520-6807(199601)33:1<13::AID-PITS2>3.0.CO;2-X.

Flanagan R: A Review of the Behavior Assessment System for Children (BASC): Assessment Consistent With the Requirements of the Individuals With Disabilities Education Act (IDEA). J Sch Psychol. 1995, 33: 177-186. 10.1016/0022-4405(95)00003-5.

Sandoval J, Echandia A: Behavior assessment system for children. J Sch Psychol. 1994, 32: 419-425. 10.1016/0022-4405(94)90037-X.

Epstein MH: Behavioral and Emotional Rating Scale. 2004, Austin: PRO-ED

Reid R, Epstein MH, Pastor DA, Ryser GR: Strengths-based assessment differences across students with LD and EBD. Remedial Spec Educ. 2000, 21: 346-355. 10.1177/074193250002100604.

Landgraf JM, Abetz L, Ware JE: The CHQ: A user's manual. 1999, Boston: HealthAct

Raat H, Botterweck AM, Landgraf JM, Hoogeveen WC, Essink-Bot ML: Reliability and validity of the short form of the Child Health Questionnaire for parents (CHQ-PF28) in large random school based and general population samples. J Epidemiol Community Health. 2005, 59: 75-82. 10.1136/jech.2003.012914.

Sung L, Greenberg ML, Doyle JJ, Young NL, Ingber S, Rubenstein J, Wong J, Samanta T, McLimont M, Feldman BM: Construct validation of the health utilities index and the Child Health Questionnaire in children undergoing cancer chemotherapy. Br J Cancer. 2003, 88: 1185-1190. 10.1038/sj.bjc.6600895.

Gadow KD, Sprafkin J: Child Symptom Inventory 4: Screening and Norms Manual. 2002, Stony Brook, NY: Checkmate Plus

Garralda ME, Yates P, Higginson I: Child and adolescent mental health service use. HoNOSCA as an outcome measure. Br J Psychiatry. 2000, 177: 52-58. 10.1192/bjp.177.1.52.

Gowers SG, Harrington RC, Whitton A, Lelliott P, Beevor A, Wing J, Jezzard R: Brief scale for measuring the outcomes of emotional and behavioural disorders in children. Health of the Nation Outcome Scales for children and Adolescents (HoNOSCA). Br J Psychiatry. 1999, 174: 413-416. 10.1192/bjp.174.5.413.

Gowers SG, Levine W, Bailey-Rogers S, Shore A, Burhouse E: Use of a routine, self-report outcome measure (HoNOSCA-SR) in two adolescent mental health services. Health of the nation outcome scale for children and adolescents. Br J Psychiatry. 2002, 180: 266-269. 10.1192/bjp.180.3.266.

Ravens-Sieberer U, Gosch A, Rajmil L, Erhart M, Bruil J, Power M, Duer W, Auquier P, Cloetta B, Czemy L, Mazur J, Czimbalmos A, Tountas Y, Hagquist C, Kilroe J, KIDSCREEN Group: The KIDSCREEN-52 quality of life measure for children and adolescents: psychometric results from a cross-cultural survey in 13 European countries. Value Health. 2008, 11: 645-658. 10.1111/j.1524-4733.2007.00291.x.

Berra S, Ravens-Sieberer U, Erhart M, Tebe C, Bisegger C, Duer W, von Rueden U, Herdman M, Alonso J, Rajmil L: Methods and representativeness of a European survey in children and adolescents: the KIDSCREEN study. BMC Public Health. 2007, 7: 182-10.1186/1471-2458-7-182.

Ravens-Sieberer U, Erhart M, Rajmil L, Herdman M, Auquier P, Bruil J, Power M, Duer W, Abel T, Czemy L, Mazur J, Czimbalmos A, Tountas Y, Hagquist C, Kilroe J, KIDSCREEN Group: Reliability, construct and criterion validity of the KIDSCREEN-10 score: a short measure for children and adolescents’ well-being and health-related quality of life. Qual Life Res. 2010, 19: 1487-1500. 10.1007/s11136-010-9706-5.

Robitail S, Ravens-Sieberer U, Simeoni MC, Rajmil L, Bruil J, Power M, Duer W, Cloetta B, Czemy L, Mazur J, Czimbalmos A, Tountas Y, Hagquist C, Kilroe J, Auquier P, Kidscreen Group: Testing the structural and cross-cultural validity of the KIDSCREEN-27 quality of life questionnaire. Qual Life Res. 2007, 16: 1335-1345. 10.1007/s11136-007-9241-1.

Gall G, Pagano ME, Desmond MS, Perrin JM, Murphy JM: Utility of psychosocial screening at a school-based health center. J Sch Health. 2000, 70: 292-298. 10.1111/j.1746-1561.2000.tb07254.x.

Gardner W, Lucas A, Kolko DJ, Campo JV: Comparison of the PSC-17 and alternative mental health screens in an at-risk primary care sample. J Am Acad Child Adolesc Psychiatry. 2007, 46: 611-618. 10.1097/chi.0b013e318032384b.

Jellinek MS, Murphy JM, Little M, Pagano ME, Comer DM, Kelleher KJ: Use of the Pediatric Symptom Checklist to screen for psychosocial problems in pediatric primary care: a national feasibility study. Arch Pediatr Adolesc Med. 1999, 153: 254-260.

Murphy JM, Jellinek M, Milinsky S: The Pediatric Symptom Checklist: validation in the real world of middle school. J Pediatr Psychol. 1989, 14: 629-639. 10.1093/jpepsy/14.4.629.

Wasserman RC, Kelleher KJ, Bocian A, Baker A, Childs GE, Indacochea F, Stulp C, Gardner WP: Identification of attentional and hyperactivity problems in primary care: a report from pediatric research in office settings and the ambulatory sentinel practice network. Pediatrics. 1999, 103: E38. 10.1542/peds.103.3.e38.

Goodman R: Psychometric properties of the strengths and difficulties questionnaire. J Am Acad Child Adolesc Psychiatry. 2001, 40: 1337-1345. 10.1097/00004583-200111000-00015.

Goodman R, Meltzer H, Bailey V: The Strengths and Difficulties Questionnaire: a pilot study on the validity of the self-report version. Eur Child Adolesc Psychiatry. 1998, 7: 125-130. 10.1007/s007870050057.

Dunn TW, Burlingame GM, Walbridge M, Smith J, Crum MJ: Outcome assessment for children and adolescents: psychometric validation of the Youth Outcome Questionnaire 30.1 (Y-OQ®-30.1). Clin Psychol Psychother. 2005, 12: 388-401. 10.1002/cpp.461.

Edwards TC, Huebner CE, Connell FA, Patrick DL: Adolescent quality of life, Part I: conceptual and measurement model. J Adolesc. 2002, 25: 275-286. 10.1006/jado.2002.0470.

Bares C, Andrade F, Delva J, Grogan-Kaylor A, Kamata A: Differential item functioning due to gender between depression and anxiety items among Chilean adolescents. Int J Soc Psychiatry. 2012, 58: 386-392. 10.1177/0020764011400999.

McClendon DT, Warren JS, Green KM, Burlingame GM, Eggett DL, McClendon RJ: Sensitivity to change of youth treatment outcome measures: a comparison of the CBCL, BASC-2, and Y-OQ. J Clin Psychol. 2011, 67: 111-125. 10.1002/jclp.20746.

Humphrey N, Kalambouka A, Wigelsworth M, Lendrum A, Deighton J, Wolpert M: Measures of social and emotional skills for children and young people: a systematic review. Educ Psychol Meas. 2011, 71: 617-637. 10.1177/0013164410382896.

Fokkema M, Smits N, Kelderman H, Cuijpers P: Response shifts in mental health interventions: an illustration of longitudinal measurement invariance. Psychol Assess. 2013, 25: 520-531.

Oort FJ: Using structural equation modeling to detect response shifts and true change. Qual Life Res. 2005, 14: 587-598. 10.1007/s11136-004-0830-y.

Wolpert M: Uses and Abuses of Patient Reported Outcome Measures (PROMs): potential iatrogenic impact of PROMs implementation and how it can be mitigated. Adm Policy Ment Health. 2014, 41: 141-145. 10.1007/s10488-013-0509-1.

Guide to Using Outcomes and Feedback Tools With Children, Young People and Families. Edited by: Law D, Wolpert M. 2014, London: CAMHS Press, 2

Badham B: Talking about talking therapies: thinking and planning about how to make good and accessible talking therapies available to children and young people. 2011, http://www.iapt.nhs.uk/silo/files/talking-about-talking-therapies.pdf.

Curtis-Tyler K: Facilitating children's contributions in clinic? Findings from an in-depth qualitative study with children with Type 1 diabetes. Diabet Med. 2012, 29: 1303-1310. 10.1111/j.1464-5491.2012.03714.x.

Moran P, Kelesidi K, Guglani S, Davidson S, Ford T: What do parents and carers think about routine outcome measures and their use? A focus group study of CAMHS attenders. Clin Child Psychol Psychiatry. 2012, 17 (1): 65-79. 10.1177/1359104510391859.

Acknowledgements

The authors would like to thank members of the initial research group tasked with the review: Norah Frederickson, Crispin Day, Michael Rutter, Neil Humphrey, Panos Vostanis, Pam Meadows, Peter Tymms, Hayley Syrad, Maria Munroe, Cathy Saddington, Emma Trustam, Jenna Bradley, Sevasti-Melissa Nolas, Paula Lavis, and Alice Jones. The authors would also like to thank members of the Policy Research Unit in the Health of Children, Young People and Families: Terence Stephenson, Catherine Law, Amanda Edwards, Ruth Gilbert, Steve Morris, Helen Roberts, Russell Viner, and Cathy Street.

Competing interests

The initial review reported in this paper was jointly funded by the Department of Health (DH) and the Department for Children, Schools and Families (DCSF, now the Department for Education). Further work extending the review and developing the paper was funded by the DH Policy Research Programme. This is an independent report funded by DH. The views expressed are not necessarily those of the Department. Authors have no other interests (financial or otherwise) relevant to the submitted work.

Authors’ contributions

JD developed the review protocol, led the initial literature review and drafted the article in full. TC and JB independently provided advice on the psychometric evidence for the review process and provided independent appraisal of the final 11 measures selected for detailed review. PF advised on the review process, and revised and commented on the drafting of the paper. PP carried out secondary searches for the detailed review of the final 11 measures, summarized the information derived and populated the tables relating to implementation features and psychometric properties. MW led the initial project commissioned by DH and DCSF, contributed to the drafting of the paper and provided overall sign-off of the final draft. All authors read and approved the final manuscript.

About this article

Cite this article

Deighton, J., Croudace, T., Fonagy, P. et al. Measuring mental health and wellbeing outcomes for children and adolescents to inform practice and policy: a review of child self-report measures. Child Adolesc Psychiatry Ment Health 8, 14 (2014). https://doi.org/10.1186/1753-2000-8-14

DOI: https://doi.org/10.1186/1753-2000-8-14