Abstract

Background

The objective of this research is to generate quality of care indicators from systematic reviews to assess the appropriateness of obstetric care in hospitals.

Methods

A search for systematic reviews of hospital obstetric interventions, conducted in The Cochrane Library, Clinical Evidence and clinical practice guidelines, identified 303 reviews. We selected 48 reviews providing high-quality evidence, which resulted in strong clinical recommendations using the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system. The remaining 255 reviews were excluded, mainly because the studies reviewed did not provide strong evidence.

Results

A total of 18 indicators were formulated from these clinical recommendations, on antepartum care (8), care during delivery and postpartum (9), and incomplete miscarriage (1). Authors of the systematic reviews and specialists in obstetrics were consulted to refine the formulation of indicators.

Conclusions

High-quality systematic reviews whose conclusions clearly favour or oppose an intervention can be a source for generating quality indicators of delivery care. To make indicators coherent, the nuances of clinical practice should be considered. Any attempt to evaluate the extent to which delivery care in hospitals is based on scientific evidence should take the generated indicators into account.

Background

Quality of care has been defined as the degree to which health services increase the likelihood of desired health outcomes for individuals and populations and are consistent with current professional knowledge[1]. Scientific knowledge is not the only component of the quality of care that must be taken into account, as other structural factors such as process or outcome are also important. In addition, local and particular circumstances of each situation and patients’ preferences cannot be ignored when assessing the appropriateness of a decision[2]. Nevertheless, evaluation of clinical practice through the filter of scientific evidence is an essential enterprise, coherent with the goals of a public health system, the ethical principles of health professionals and the basic rights of citizens, given the possibility that patients might receive inappropriate health care[3, 4].

Too little attention has been paid to the correlation between the availability of scientific evidence and clinical practice. A series of studies[5–7] faced the burden of having to analyse de novo the evidence relevant to each case. The establishment of a priori clinical indicators to be used as performance measures might be more efficient for systematically assessing the degree to which scientific evidence is applied in clinical practice.

Development of indicators based on professional consensus has a long history[8, 9], while systematic and explicit methods to incorporate scientific evidence have been developed to a lesser extent. Some recent initiatives, however, followed a systematic approach[10]. Moreover, a successful approach developed by the RAND Corporation combines scientific evidence with professional consensus: the process starts with defining topics of interest, continues with selecting available evidence from different sources, and ends with formulating indicators that are ultimately evaluated by panels of experts through a structured consensus method[11].

In the present study, we restricted the source of indicators of quality of care to systematic reviews (SRs), based on the assumption that they provide the highest degree of reliability[12], are increasingly available, and are increasingly relied on by decision makers to cope with the ever-growing volume of healthcare research. Obstetric care during childbirth is particularly suitable for evaluation through evidence-based indicators because it is a field with a relatively high production of SRs. The Cochrane Pregnancy and Childbirth Group has published hundreds of full SRs, which have been the basis for numerous recommendations and clinical practice guidelines (CPGs)[13]. In addition, some authors have challenged many indicators currently used in obstetrics; a recent study analysed the main indicators currently available (176 in total) and concluded that most did not meet the requirements to measure quality of care[14]. Similar results have been reported in other areas of healthcare[10].

In summary, we generated a set of quality indicators of obstetric care related to childbirth, based on SRs, which could be applicable in different settings and circumstances.

Methods

The first phase of our project consisted of a literature search and generation of a set of recommendations based on sound evidence, either in favour or against interventions in delivery care; the second phase consisted of developing and validating a set of indicators.

First phase

Table 1 summarizes the sequential steps followed in the first phase. This mainly consisted of identifying evidence, appraising literature, and generating and grading clinical recommendations. Only SRs of randomized clinical trials were considered.

Literature search

A literature search was conducted in the Cochrane Database of Systematic Reviews (The Cochrane Library, Issue 3, 2009, and updated in 2011), the Database of Abstracts of Reviews of Effects, and Clinical Evidence to identify SRs assessing obstetric interventions performed in a hospital setting. To retrieve additional relevant SRs, we consulted the available CPGs from the main obstetrical medical societies (the Royal College of Obstetricians and Gynaecologists, the American College of Obstetricians and Gynecologists) and CPGs on obstetric care from the main guideline producers (the National Institute for Clinical Excellence (NICE) and the New Zealand Guidelines Group).

Selection of systematic reviews

Two researchers (MA and DR) independently applied the selection criteria to the identified SRs: pharmacological or non-pharmacological interventions that are under the responsibility of the clinical team and are recorded in the clinical record or any other database. In case of disagreement, the judgement of a third author (XB) prevailed.
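The dual-screening rule described above can be written down compactly. The following is only a minimal sketch of that rule, not the authors' actual workflow; the function name, the review identifiers and the votes are all hypothetical.

```python
from typing import Optional


def resolve_selection(reviewer_a: bool, reviewer_b: bool,
                      arbiter: Optional[bool] = None) -> bool:
    """Return the final include/exclude decision for one review.

    reviewer_a and reviewer_b are the two independent judgements;
    arbiter is the third author's judgement, needed only on disagreement.
    """
    if reviewer_a == reviewer_b:
        return reviewer_a
    if arbiter is None:
        raise ValueError("reviewers disagree; a third judgement is required")
    return arbiter


# Hypothetical screening decisions: (reviewer A, reviewer B, arbiter or None)
decisions = {
    "review-1": (True, True, None),
    "review-2": (True, False, False),
    "review-3": (False, False, None),
    "review-4": (False, True, True),
}

selected = [rid for rid, votes in decisions.items() if resolve_selection(*votes)]
print(selected)
```

Raising an error when the arbiter's judgement is missing keeps an unresolved disagreement from being silently counted as an inclusion or an exclusion.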

Appraisal of selected reviews

Two researchers (MA and DR) independently appraised each SR and restricted inclusion to SRs that met all internal validity items established by the Scottish Intercollegiate Guidelines Network (SIGN)[15], as assessed on the review’s full text. These criteria assess whether a formulated question is clearly addressed, a description of the methodology is included, the search strategy is sufficiently rigorous, the quality of individual studies is analysed and taken into account, and heterogeneity is evaluated and an explanation for it is attempted by the original authors.

Generation and grading of recommendations

For each selected SR, we classified the outcomes by relevance (critical, important and relative). Two authors (MA and DR) independently rated the quality of evidence and assessed the strength of recommendations based on the Grading of Recommendations Assessment, Development and Evaluation (GRADE) system[16]. The applicability of the GRADE system to the generation of quality indicators has been described previously[17, 18]. Quality of evidence for critical outcomes was rated high, moderate, low or very low, based on: limitations in the design of the primary studies; imprecision, inconsistency and indirectness of the estimates of effects; and likelihood of reporting bias and other biases. A set of clinical recommendations was generated by balancing the desirable and undesirable consequences of an intervention and considering the quality of evidence. We used an adaptation of the GRADE system in which patients’ values, preferences and resource use were not considered because they are context-specific. Additional file 1: Table S4 shows the modified GRADE system we applied.
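To make the rating step concrete, the sketch below follows the general GRADE convention that evidence from randomized trials starts as high quality and is lowered by one level for each serious concern (two for a very serious one). The adapted scheme applied in this study is in Additional file 1 and is not reproduced here, so the function name, the numeric scale and the example concerns are assumptions made purely for illustration.

```python
from typing import Dict

# Ordered from worst to best so that a lower index means lower quality.
LEVELS = ["very low", "low", "moderate", "high"]


def rate_quality(downgrades: Dict[str, int]) -> str:
    """Rate evidence from randomized trials, which starts at 'high'.

    downgrades maps each concern to 0 (none), 1 (serious) or
    2 (very serious); the total is subtracted from the starting level.
    """
    start = LEVELS.index("high")
    total = sum(downgrades.values())
    return LEVELS[max(0, start - total)]


# Hypothetical assessment of one critical outcome.
concerns = {
    "study limitations": 0,
    "imprecision": 1,
    "inconsistency": 0,
    "indirectness": 0,
    "reporting bias": 0,
}
print(rate_quality(concerns))
```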

Two authors (MA and DR) independently selected recommendations that were considered strong (either in favour or against the application of a given intervention) and based on high quality evidence, at least for the most critical outcomes. In case of disagreements, a third author (XB) was consulted.
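The selection rule in the preceding paragraph (strong recommendation, in either direction, resting on high-quality evidence for the most critical outcomes) amounts to a simple filter. The sketch below is illustrative only; the class, its field names and the four example recommendations are hypothetical and do not come from the study data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Recommendation:
    label: str
    strength: str          # "strong" or "weak"
    direction: str         # "for" or "against" the intervention
    critical_quality: str  # quality of evidence for the most critical outcomes


def select_for_indicators(recs: List[Recommendation]) -> List[Recommendation]:
    """Keep strong recommendations backed by high-quality evidence.

    Direction is deliberately ignored: a strong recommendation against
    an intervention is as usable for an indicator as one in favour.
    """
    return [r for r in recs
            if r.strength == "strong" and r.critical_quality == "high"]


candidates = [
    Recommendation("intervention A", "strong", "for", "high"),
    Recommendation("intervention B", "strong", "against", "high"),
    Recommendation("intervention C", "weak", "for", "high"),
    Recommendation("intervention D", "strong", "for", "moderate"),
]
kept = [r.label for r in select_for_indicators(candidates)]
print(kept)
```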

Second phase

Development and validation of indicators

From the selected clinical recommendations, we proceeded to construct indicators, following an adaptation of the methods proposed by the American College of Cardiology/American Heart Association (ACC/AHA)[19] and the Agency for Healthcare Research and Quality (AHRQ)[20]. Table 2 shows the general structure of an indicator and the sources of information for each section. Most of the information came either from the SR or from current guidelines that summarize both evidence and clinical expertise. Specific sections, such as the identification of sources of information to compute the indicator, factors that may explain variability in the results, and specific setting characteristics to ensure the viability of the indicator, required input from clinical experts as well as additional supporting literature.
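One way to picture an indicator with this kind of sectioned structure is as a record whose expert-dependent sections can be checked for completeness. The sketch below is an assumption-laden illustration: Table 2 is not reproduced here, so every field name is hypothetical, and only the wording of the example indicator is taken from the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class QualityIndicator:
    """One indicator record; field names are illustrative, not those of Table 2."""
    name: str
    numerator: str
    denominator: str
    exclusions: List[str] = field(default_factory=list)
    supporting_reviews: List[str] = field(default_factory=list)
    # The three sections below are the ones described as needing expert input.
    data_sources: List[str] = field(default_factory=list)
    variability_factors: List[str] = field(default_factory=list)
    setting_requirements: List[str] = field(default_factory=list)

    def missing_expert_sections(self) -> List[str]:
        """List the expert-dependent sections that are still empty."""
        expert_sections = {
            "data_sources": self.data_sources,
            "variability_factors": self.variability_factors,
            "setting_requirements": self.setting_requirements,
        }
        return [name for name, value in expert_sections.items() if not value]


draft = QualityIndicator(
    name="Corticosteroids in threatened preterm labour",
    numerator="women in the denominator who receive corticosteroids",
    denominator="women with singleton pregnancies and threatened preterm labour",
)
print(draft.missing_expert_sections())
```

Keeping the evidence-derived fields separate from the expert-derived ones makes it easy to see which parts of a draft indicator still await consultation.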

We subsequently consulted two specialists in obstetrics (CF and AV) to assess our design and interpretation of the indicators and their relevance in current practice. This was followed by an email consultation with the authors of the SRs on which the indicators were based, asking to what extent they agreed with the formulation of the indicator (content validity, robustness and reliability). The comments received from the review authors or consultants led us to modify or redefine various indicators.

Results

Search and selection of systematic reviews

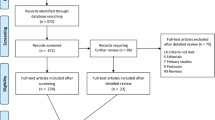

Figure 1 shows the study flowchart. We identified 303 SRs: 301 from the search in The Cochrane Library and 2 more[21, 22] from the CPGs consulted. Of these, 102 were excluded for not targeting acute care interventions, 149 for not providing clear evidence (there was no benefit/harm associated with the intervention), and 4 because interventions were not implemented by clinical teams, or the clinical processes were not sufficiently measurable. The remaining 48 SRs were provisionally selected for further consideration[21–43].

Quality of evidence assessment and generation of clinical recommendations

The selected reviews consisted exclusively of Cochrane reviews. No SR was excluded based on its quality assessment. In the following stage, 28 SRs were excluded for not providing a basis for any strong recommendation (either in favour or against an intervention). Thus, we generated 20 clinical recommendations that were both strong and based on high-quality evidence.

Construction and validation of indicators

Approximately 75% of the authors of the selected SR responded to our request to review the indicator. Overall, they agreed on the indicator proposal, and their comments were used for further improvement of their definition and formulation.

Following the advice of the obstetric consultants, two indicators were removed: the proportion of women with singleton pregnancies at risk of preterm delivery treated with a combination of corticosteroids and thyrotropin-releasing hormone[33], and the proportion of breech deliveries carried out by caesarean section[32]. The first was removed because the intervention is no longer used in clinical practice, and the second because the existing evidence is controversial.

The 18 indicators eventually accepted are shown in Table 3. These indicators are intended to assess the delivery of care during the antepartum period (8 indicators[22–30]), during delivery (8 indicators[21, 31, 34–40]), in the immediate postpartum period (one indicator[41]), and in the management of miscarriage (one indicator[42]).
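The counts reported in this section can be cross-checked with a few lines of arithmetic. The sketch below uses only the figures stated above; the variable names and dictionary keys are illustrative.

```python
# Reviews identified: 301 from The Cochrane Library plus 2 from guidelines.
identified = 301 + 2

# Exclusions at the selection stage, with the reasons reported in the text.
excluded_at_selection = {
    "not acute care interventions": 102,
    "no clear evidence": 149,
    "not implemented by clinical teams or not measurable": 4,
}
provisionally_selected = identified - sum(excluded_at_selection.values())

# Reviews that did not support any strong recommendation.
recommendations = provisionally_selected - 28

# Indicators removed after consulting the obstetric specialists.
indicators = recommendations - 2

# Final indicators by stage of care.
by_stage = {
    "antepartum": 8,
    "delivery": 8,
    "immediate postpartum": 1,
    "miscarriage": 1,
}

print(identified, provisionally_selected, recommendations, indicators)
print(sum(by_stage.values()) == indicators)
```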

Indicators are expressed as proportions and refer to the process of care; none refers to structure or outcome. To illustrate the process, Additional file 2: Table S5 presents the full content of one indicator (proportion of women with singleton pregnancies and threatened preterm labour who receive corticosteroids) and includes an example of its computation.
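Because each indicator is a proportion, its computation reduces to counting eligible episodes and the subset that received the intervention. The sketch below is not the worked example from Additional file 2, which is not reproduced here; the `Episode` fields and the six fictional records are assumptions chosen only to show the mechanics.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Episode:
    in_target_population: bool   # meets the indicator's inclusion criteria
    excluded: bool               # meets a documented exclusion criterion
    received_intervention: bool


def indicator_value(episodes: List[Episode]) -> Optional[float]:
    """Proportion of eligible episodes in which the intervention was given.

    Returns None when no episode is eligible, so that an empty
    denominator is never reported as 0%.
    """
    denominator = [e for e in episodes
                   if e.in_target_population and not e.excluded]
    if not denominator:
        return None
    numerator = sum(e.received_intervention for e in denominator)
    return numerator / len(denominator)


# Fictional records, for illustration only.
episodes = [
    Episode(True, False, True),
    Episode(True, False, True),
    Episode(True, False, False),
    Episode(True, True, False),    # excluded from the denominator
    Episode(False, False, False),  # outside the target population
    Episode(True, False, True),
]
value = indicator_value(episodes)
print(f"{value:.0%}")
```

With these fictional records, four episodes are eligible and three received the intervention, giving 75%.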

In 2011, we consulted The Cochrane Library to verify the update status of the SRs that support the indicators: all of them had been updated between 2009 and 2011. Three SRs changed their conclusions; however, none of those changes invalidates the indicators. The first SR, on the use of antibiotics in women with preterm rupture of membranes, concludes that despite the short-term benefits during pregnancy, users should be aware of the unknown long-term effects on newborns. The second SR, which assesses antibiotic prophylaxis during caesarean section, provides a similar warning about the unknown long-term effects on newborns. The third SR, on active versus expectant management in the third stage of labour, found potential adverse effects with several uterotonics and concludes that information about the benefits and harms should be provided in order to support an informed choice.

Discussion

The degree of justification or appropriateness of an intervention is directly related to the scientific evidence that supports its implementation and use in practice. Consequently, it seems logical to generate quality indicators through an explicit and systematic process and this has been our purpose. Other recent studies that have developed indicators in a variety of fields, including performance measures[19], clinical practice guidelines[44–46], or a mixed process of evidence appraisal and expert opinion[47, 48], have been published. However, to our knowledge, the present study is unique in its focus on SRs.

Several authors warned about potential errors that could be made using quality indicators[49, 50]. The most common criticism warns against a construction of quality indicators that is too mechanical. Such a construction would infringe upon the principle that clinical decisions should be flexible in nature, with a lack of individual assessment of each patient and circumstances before applying a particular intervention. Other concerns highlight potential consequences of inflexibility resulting from dichotomizing quality of care into adequate or inadequate in relation to a particular practice, and frequent methodological errors made in the design and construction of indicators[14].

SRs are one of the main instruments for synthesizing available evidence, although they remain little used to generate explicit healthcare quality indicators. In this study, the strategy for formulating indicators was based on two basic and differentiated approaches. First, the use of good-quality SRs, in this case predominantly Cochrane reviews, as the primary source of evidence to identify interventions for which the potential benefits far outweigh the possible drawbacks; reviews in which that positive balance is not sufficiently significant were excluded. Priority was given to updated secondary sources of literature, so it is unlikely that any subsequent landmark clinical trials for the proposed indicators were missed.

Second, a rigorous and systematic process was conducted to extract relevant data from each review, and the strength of recommendations was assessed by a standardized method (GRADE)[16] to construct each indicator. Only high-quality evidence was considered and this resulted in a strong recommendation (in favour of, or against, the intervention) for the generation of indicators. It implies, according to the GRADE system, that most patients should receive the recommended intervention, or that it can be adopted as a policy in most situations[16]. Moreover, discussions with the obstetric consultants and SR authors resulted in improving additional aspects in the formulation, interpretation and applicability of the indicators. This might be considered a more informal consultation process than other methodologies, such as the aforementioned RAND Corporation approach[11]. Focusing, however, only on highly evidence-based interventions decreases the need to consult experts.

At the end of the process, 18 quality indicators for the delivery of obstetric care in hospitals were identified. As illustrated in Table 2, each proposed indicator has a clear definition, including specific inclusion and exclusion criteria that are consistent with those used in the studies that are the source of evidence, and establishes the population that could benefit from each intervention. All aspects that need to be taken into account for the use of the indicator are described, including clinical situations in which an intervention might not be suitable for a particular patient, meaning that the patient must be excluded from the calculation of the indicator. The possible rejection of the intervention by the patient has also been considered in the formulation of each indicator. This strategy makes it possible to overcome the classical tension between the generic approach usually taken by recommendations contained in a policy document (e.g., a clinical guideline) and the necessity of providing personalized care to individuals who are different. In our opinion, only with such an approach can the quality of care provided be evaluated while taking the existing evidence and the characteristics and values of each patient simultaneously into account.

The 18 generated indicators represent a conservative sample of the available evidence, since the criteria of consistency, meaningfulness and applicability had priority. We do not expect them to be unique; however, we propose that they should be included in any quality assessment or performance measurement that is made relating to the delivery of care. Since they have been formulated while taking criteria of flexibility and feasibility into account, they could be applied in very different hospital obstetric settings[51].

Some potential limitations of the present study should be noted. First, since indicators help to identify quality problems over time, their applicability and usefulness may depend on the evolving needs of their potential users: policy-makers, health professionals, medical societies, etc., and, theoretically, indicators that specifically address all the issues that are relevant for different stakeholders should be available. However, one characteristic of our methodology is that we have generated indicators based on strong evidence, which should be equally important for all involved parties (e.g., assessing that an episiotomy was not performed unless justified). Second, the identified indicators reflect only those aspects of care that are supported by adequate evidence, which do not necessarily cover all the desirable dimensions; however, the reviews represented in the Cochrane Pregnancy and Childbirth Group encompass the most used interventions in the field. Therefore, the results of the present study are very specific (a limited number, if any, of the generated indicators are false positives in relation to their capacity for measuring quality of care) but probably less sensitive (some indicators could be lacking due to the aforementioned limitations) in relation to all possible cases. Third, the rigour applied in our methodology for defining indicators, necessary to guarantee its internal validity, might limit its external validity or applicability in clinical practice. Strictly defining the target population might reduce its applicability, leaving out large groups of people for whom an indicator is not suitable. Finally, calculation of detailed indicators in daily practice might involve the need for accurate information systems and is quite sensitive to the quality of clinical data registration. 

If clinicians know in advance the criteria applied for calculating quality indicators, they will likely be more aware of the actions that must be considered in each clinical scenario and of the need to record them or justify an alternative. Indicators could not only be included in local clinical guidelines, but could also be part of electronic alarms or clinical reminders activated when the hospital information system detects one of the situations labelled as a priority (e.g., a reminder to administer antibiotics when a caesarean section is scheduled). Future research should concentrate on establishing the corresponding standards for the proposed indicators and interpreting the influence of local circumstances and patient preferences on their observed values.
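As a rough illustration of the reminder idea mentioned above, the sketch below checks an episode record against a small rule list. It does not describe any real hospital information system: the record keys, the rule structure and the message wording are all hypothetical.

```python
from typing import Callable, Dict, List, NamedTuple


class Rule(NamedTuple):
    trigger: Callable[[Dict[str, bool]], bool]  # True when the reminder should fire
    message: str


# Hypothetical rule derived from the caesarean section example in the text.
RULES: List[Rule] = [
    Rule(
        trigger=lambda ep: ep.get("caesarean_scheduled", False)
        and not ep.get("antibiotic_prophylaxis_recorded", False),
        message="Caesarean section scheduled: consider antibiotic "
                "prophylaxis or record why it is not given.",
    ),
]


def reminders(episode: Dict[str, bool]) -> List[str]:
    """Return the messages of every rule whose trigger matches the episode."""
    return [rule.message for rule in RULES if rule.trigger(episode)]


print(reminders({"caesarean_scheduled": True}))
print(reminders({"caesarean_scheduled": True,
                 "antibiotic_prophylaxis_recorded": True}))
```

The reminder asks for either the intervention or a recorded justification, which mirrors the point that clinicians should register the action or justify an alternative.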

Conclusions

The present study demonstrated that the generation of healthcare quality indicators from SRs is feasible and efficient. This is not a simple process, and not all reviews are equally useful in generating indicators. We believe that the thoroughness of the proposed methodology makes these indicators essential references to assess the extent to which the delivery of care is based on scientific evidence. We propose that this methodology be applied to other areas of care where there is sufficiently sound evidence.

References

Institute of Medicine (IOM): Medicare: A Strategy for Quality Assurance, Volume I. 1990, Washington D.C

Eddy DM: Variations in physician practice: the role of uncertainty. Health Aff (Millwood). 1984, 3: 74-89. 10.1377/hlthaff.3.2.74.

Buen JM, Peiró S, Caldern SM: Variations in medical practice: importance, causes, and implications. Med Clin (Barc). 1998, 110: 382-390.

Grol R, Grimshaw J: From best evidence to best practice: effective implementation of change in patients' care. Lancet. 2003, 362: 1225-1230. 10.1016/S0140-6736(03)14546-1.

Ellis J, Mulligan I, Rowe J: Inpatient general medicine is evidence based. A-Team, Nuffield Department of Clinical Medicine. Lancet. 1995, 346: 407-410. 10.1016/S0140-6736(95)92781-6.

Bare M, Jordana R, Toribio R: In-patient treatment decisions. Med Clin (Barc). 2004, 122: 130-133. 10.1016/S0025-7753(04)74170-6.

Dubinsky M, Ferguson JH: Analysis of the National Institutes of Health Medicare coverage assessment. Int J Technol Assess Health Care. 1990, 6: 480-488. 10.1017/S0266462300001069.

Campbell SM, Braspenning J, Hutchinson A: Research methods used in developing and applying quality indicators in primary care. Qual Saf Health Care. 2002, 11: 358-364. 10.1136/qhc.11.4.358.

Brook RH, McGlynn EA, Cleary PD: Quality of health care. Part 2: measuring quality of care. N Engl J Med. 1996, 335: 966-970. 10.1056/NEJM199609263351311.

Wilson KC, Schünemann HJ: An Appraisal of the Evidence Underlying Performance Measures for Community-Acquired Pneumonia. Am J Respir Crit Care Med. 2011, 183: 1454-1462. 10.1164/rccm.201009-1451PP.

McGlynn E, Kerr E, Damberg C: RAND Health. Quality of Care for Women. A review of selected clinical conditions and quality indicators. 2000, Santa Monica, CA: RAND Corporation, 431-

Glasziou P, Vandenbroucke JP, Chalmers I: Assessing the quality of research. BMJ. 2004, 328: 39-41. 10.1136/bmj.328.7430.39.

Dodd JM, Crowther CA: Cochrane reviews in pregnancy: the role of perinatal randomized trials and systematic reviews in establishing evidence. Semin Fetal Neonatal Med. 2006, 11: 97-103. 10.1016/j.siny.2005.11.005.

Bailit JL: Measuring the quality of inpatient obstetrical care. Obstet Gynecol Surv. 2007, 62: 207-213. 10.1097/01.ogx.0000256800.21193.ce.

SIGN 50: a guideline developer’s handbook. 2008, Edinburgh: Scottish Intercollegiate Guidelines Network, Available from: http://www.sign.ac.uk/pdf/sign50.pdf [Date accessed: July 2011]

GRADE Working Group: Grading quality of evidence and strength of recommendations. BMJ. 2004, 328: 1490-

Schunemann HJ, Jaeschke R, Cook DJ: An official ATS statement: grading the quality of evidence and strength of recommendations in ATS guidelines and recommendations. Am J Respir Crit Care Med. 2006, 174: 605-614. 10.1164/rccm.200602-197ST.

Kahn JM, Scales DC, Au DH: An official American Thoracic Society policy statement: pay-for-performance in pulmonary, critical care, and sleep medicine. Am J Respir Crit Care Med. 2010, 181: 752-761. 10.1164/rccm.200903-0450ST.

Spertus JA, Eagle KA, Krumholz HM: American College of Cardiology and American Heart Association methodology for the selection and creation of performance measures for quantifying the quality of cardiovascular care. Circulation. 2005, 111: 1703-1712. 10.1161/01.CIR.0000157096.95223.D7.

AHRQ Quality Indicators: Guide to Inpatient Quality Indicators: Quality of Care in Hospitals—Volume, Mortality, and Utilization. 2002, Rockville, MD: Agency for Healthcare Research and Quality, Revision 4 (December 22, 2004). AHRQ Pub. No. .02-RO204. Available from: http://www.qualityindicators.ahrq.gov/Downloads/Software/SAS/V21R4/iqi_guide_rev4.pdf [Date accessed: July 2011]

Lee A, Ngan Kee WD, Gin T: A quantitative, systematic review of randomized controlled trials of ephedrine versus phenylephrine for the management of hypotension during spinal anesthesia for cesarean delivery. Anesth Analg. 2002, 94: 920-926. 10.1097/00000539-200204000-00028.

Duley L, Gulmezoglu AM: Magnesium sulphate versus lytic cocktail for eclampsia. Cochrane Database Syst Rev. 2010, CD002960-10.1002/14651858.CD002960.pub2. 9

Duley L, Henderson-Smart D: Magnesium sulphate versus diazepam for eclampsia. Cochrane Database Syst Rev. 2010, CD000127-10.1002/14651858.CD000127.pub2. 12

Duley L, Henderson-Smart D: Magnesium sulphate versus phenytoin for eclampsia. Cochrane Database Syst Rev. 2010, CD000128-10.1002/14651858.CD000128.pub2. 10

Roberts D, Dalziel S: Antenatal corticosteroids for accelerating fetal lung maturation for women at risk of preterm birth. Cochrane Database Syst Rev. 2006, CD004454-10.1002/14651858.CD004454.pub2. 3

King JF, Flenady VJ, Papatsonis DN: Calcium channel blockers for inhibiting preterm labour. Cochrane Database Syst Rev. 2003, CD002255-10.1002/14651858.CD002255. 1

Crowther CA, Hiller JE, Doyle LW: Magnesium sulphate for preventing preterm birth in threatened preterm labour. Cochrane Database Syst Rev. 2002, CD001060-10.1002/14651858.CD001060. 4

Kenyon S, Boulvain M, Neilson J: Antibiotics for preterm premature rupture of membranes. Cochrane Database Syst Rev. 2010, CD001058-10.1002/14651858.CD001058.pub2. 8

Gülmezoglu AM, Crowther CA, Middleton P, Heatley E: Induction of labour for improving birth outcomes for women at or beyond term. Cochrane Database Syst Rev. 2012, CD004945-10.1002/14651858.CD004945.pub3. CD004945, 6

Duley L, Gülmezoglu AM, Henderson-Smart DJ, Chou D: Magnesium sulphate and other anticonvulsants for women with pre-eclampsia. Cochrane Database Syst Rev. 2010, CD000025-10.1002/14651858.CD000025.pub2. 11

Hofmeyr GJ, Kulier R: External cephalic version for breech presentation at term. Cochrane Database Syst Rev. 2012, CD000083-10.1002/14651858.CD000083.pub2. 10

Hofmeyr GJ, Hannah ME: Planned caesarean section for term breech delivery. Cochrane Database Syst Rev. 2003, CD000166-10.1002/14651858.CD000166. 2

Crowther CA, Alfirevic Z, Haslam RR: Thyrotropin-releasing hormone added to corticosteroids for women at risk of preterm birth for preventing neonatal respiratory disease. Cochrane Database Syst Rev. 2004, CD000019-10.1002/14651858.CD000019.pub2. 2

Carroli G, Belizan J: Episiotomy for vaginal birth. Cochrane Database Syst Rev. 2009, CD000081-10.1002/14651858.CD000081.pub2. 1

Kettle C, Johanson RB: Continuous versus interrupted sutures for perineal repair. Cochrane Database Syst Rev. 2007, CD000947-10.1002/14651858.CD000947.pub2. 4

Reveiz L, Gaitán HG, Cuervo LG: Enemas during labour. Cochrane Database Syst Rev. 2007, CD000330-10.1002/14651858.CD000330.pub2. 4

Basevi V, Lavender T: Routine perineal shaving on admission in labour. Cochrane Database Syst Rev. 2000, CD001236-doi:10.1002/14651858.CD001236, 4

Begley CM, Gyte GML, Murphy DJ: Active versus expectant management for women in the third stage of labour. Cochrane Database Syst Rev. 2010, CD007412-10.1002/14651858.CD007412.pub2. 7

Smaill FM, Gyte GML: Antibiotic prophylaxis versus no prophylaxis for preventing infection after cesarean section. Cochrane Database Syst Rev. 2010, CD007482-10.1002/14651858.CD007482.pub2. 1

Bamigboye AA, Hofmeyr GJ: Closure versus non-closure of the peritoneum at caesarean section. Cochrane Database Syst Rev. 2003, CD000163-10.1002/14651858.CD000163. 4

Crowther C, Middleton P: Anti-D administration after childbirth for preventing Rhesus alloimmunisation. Cochrane Database Syst Rev. CD000021-10.1002/14651858.CD000021. 2

Tunçalp O, Gülmezoglu AM, Souza JP: Surgical procedures for evacuating incomplete miscarriage. Cochrane Database Syst Rev. 2010, CD001993-10.1002/14651858.CD001993.pub2. 9

Tanner J, Parkinson H: Double gloving to reduce surgical cross-infection. Cochrane Database Syst Rev. 2006, 19 (3): CD003087-

van den Boogaard E, Goddijn M, Leschot NJ: Development of guideline-based quality indicators for recurrent miscarriage. Reprod Biomed Online. 2010, 20: 267-273. 10.1016/j.rbmo.2009.11.016.

Radtke MA, Reich K, Blome C: Evaluation of quality of care and guideline-compliant treatment in psoriasis. Development of a new system of quality indicators. Dermatology. 2009, 219: 54-58. 10.1159/000218161.

Yazdany J, Panopalis P, Gillis JZ: A quality indicator set for systemic lupus erythematosus. Arthritis Rheum. 2009, 61: 370-377. 10.1002/art.24356.

Terrell KM, Hustey FM, Hwang U: Quality indicators for geriatric emergency care. Acad Emerg Med. 2009, 16: 441-449. 10.1111/j.1553-2712.2009.00382.x.

Ko DT, Wijeysundera HC, Zhu X: National expert panel. Canadian quality indicators for percutaneous coronary interventions. Can J Cardiol. 2008, 24: 899-903. 10.1016/S0828-282X(08)70696-2.

Peiro S, Garcia-Altes A: Possibilities and limitations of results-based management, pay-for-performance and the redesign of incentives. 2008 SESPAS Report. Gac Sanit. 2008, 22 (Suppl1): 143-155.

Walter LC, Davidowitz NP, Heineken PA: Pitfalls of converting practice guidelines into quality measures: lessons learned from a VA performance measure. JAMA. 2004, 291: 2466-2470. 10.1001/jama.291.20.2466.

Lichiello P, Turnock BJ: pub. 1991, Seattle, WA: Turning Point National Program Office, University of Washington, Available from: http://www.turningpointprogram.org/Pages/pdfs/perform_manage/pmc_guide.pdf [Date accessed: July 2011]

Acknowledgments

We are grateful to Salvador Peiró, Holger J. Schünemann, Pablo Alonso, and Alessandro Liberati (deceased) for their comments on previous versions of this manuscript. We are also very grateful to Mike Clarke, Jini Hetherington and Robin Vernooij for their suggestions to improve the text. Dr. Dimelza Osorio is a PhD candidate at the Pediatrics, Obstetrics and Gynecology and Preventive Medicine Department, Universitat Autònoma de Barcelona, Spain.

Funding

Project FIS PI06/90222, Instituto de Salud Carlos III.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

MA and DR carried out the search selection, data extraction, and evidence assessment. MR and DO helped to methodologically refine the indicators. AV and CF helped to interpret the clinical data and refine the quality indicators. XB conceived the study and participated in its design and coordination. MR, DR and XB drafted the manuscript. All authors read and approved the final manuscript.

Electronic supplementary material

13012_2012_608_MOESM1_ESM.doc

Additional file 1: Table S4: Adapted GRADE system for assessing clinical recommendations. Table presenting the adapted GRADE system used in this project to assess clinical recommendations derived from sound systematic reviews. (DOC 32 KB)

13012_2012_608_MOESM2_ESM.doc

Additional file 2: Table S5: Example of an indicator. Full text of a quality indicator developed in this project and an example of computation based on fictional data. (DOC 42 KB)

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Bonfill, X., Roqué, M., Aller, M.B. et al. Development of quality of care indicators from systematic reviews: the case of hospital delivery. Implementation Sci 8, 42 (2013). https://doi.org/10.1186/1748-5908-8-42

DOI: https://doi.org/10.1186/1748-5908-8-42