Abstract

Background

Underuse and overuse of diagnostic tests have important implications for health outcomes and costs. Decision support technology purports to optimize the use of diagnostic tests in clinical practice. The objective of this review was to assess whether computerized clinical decision support systems (CCDSSs) are effective at improving ordering of tests for diagnosis, monitoring of disease, or monitoring of treatment. The outcome of interest was effect on the diagnostic test-ordering behavior of practitioners.

Methods

We conducted a decision-maker-researcher partnership systematic review. We searched MEDLINE, EMBASE, Ovid's EBM Reviews database, Inspec, and reference lists for eligible articles published up to January 2010. We included randomized controlled trials comparing the use of CCDSSs to usual practice or non-CCDSS controls in clinical care settings. Trials were eligible if at least one component of the CCDSS gave suggestions for ordering or performing a diagnostic procedure. We considered studies 'positive' if they showed a statistically significant improvement in at least 50% of test ordering outcomes.

Results

Thirty-five studies were identified, with significantly higher methodological quality in those published after the year 2000 (p = 0.002). Thirty-three trials reported evaluable data on diagnostic test ordering, and 55% (18/33) of CCDSSs improved testing behavior overall, including 83% (5/6) for diagnosis, 63% (5/8) for treatment monitoring, 35% (6/17) for disease monitoring, and 100% (3/3) for other purposes. Four of the systems explicitly attempted to reduce test ordering rates and all succeeded. Factors of particular interest to decision makers include costs, user satisfaction, and impact on workflow but were rarely investigated or reported.

Conclusions

Some CCDSSs can modify practitioner test-ordering behavior. To better inform development and implementation efforts, studies should describe in more detail potentially important factors such as system design, user interface, local context, implementation strategy, and evaluate impact on user satisfaction and workflow, costs, and unintended consequences.

Similar content being viewed by others

Background

Much of medical care hinges on performing the right test, on the right patient, at the right time. Apart from their financial cost, diagnostic tests have downstream implications on care and, ultimately, patient outcomes. Yet, studies suggest wide variation in diagnostic test ordering behavior for seemingly similar patients [1–4]. This variation may be due to overuse or underuse of tests and may reflect inaccurate interpretation of results, rapid advances in diagnostic technology, and challenges in estimating tests' performance characteristics [5–10]. Thus, developing effective strategies to optimize healthcare practitioners' diagnostic test ordering behavior has become a major concern [11].

A variety of methods have been considered, including educational messages, reminders, and computerized clinical decision support systems (CCDSSs) [2, 12–14]. For example, Thomas et al.[15] programmed a laboratory information system to automatically produce reminder messages that discourage future inappropriate use for each of nine diagnostic tests. A systematic review of strategies to change test-ordering behavior concluded that most interventions assessed were effective [2]. However, this review was limited by the low quality of primary studies. More recently, Shojania et al.[16] quantified the magnitude of improvements in processes of care from computer reminders delivered to physicians for any clinical purpose. Pooling data across randomized trials, they found a modest 3.8% median improvement (interquartile range [IQR], 15.9%) in adherence to test ordering reminders.

CCDSSs match characteristics of individual patients to a computerized knowledge base and provide patient-specific recommendations. The Health Information Research Unit (HIRU) at McMaster University previously conducted a systematic review assessing the effects of CCDSSs on practitioner performance and patient outcomes in 1994 [17], updated it in 1998 [18], and most recently in 2005 [19]. However, these reviews have not focused specifically on the use of diagnostic tests.

In this current update, we had the opportunity to partner with local hospital administration, clinical staff, and representatives of our regional health authority, in anticipation of major institutional investments in health information technology. Many new studies have been published in this field since our previous work in 2005 [19] allowing us to focus on randomized controlled trials (RCTs), with their lessened risk of bias. To better address the information needs of our decision-making partners, we focused on six separate topics for review: diagnostic test ordering, primary preventive care, drug prescribing, acute medical care, chronic disease management, and therapeutic drug monitoring and dosing. In this paper, we determine if CCDSSs improve practitioners' diagnostic test ordering behavior.

Methods

We previously published detailed methods for conducting this systematic review available at http://www.implementationscience.com/content/5/1/12[20]. These methods are briefly summarized here, along with details specific to this review of CCDSSs for diagnostic test ordering.

Research question

Do CCDSSs improve practitioners' diagnostic test ordering behavior?

Partnering with decision makers

The research team engaged key decision makers early in the project to guide its design and endorse its funding application. Direction for the overall review was provided by senior administrators at Hamilton Health Sciences (one of Canada's largest teaching hospitals) and our regional health authority. JY (Department of Medicine) and DK (Chair, Department of Radiology) provided specific guidance for the area of diagnostic test ordering by selecting from each study the outcomes relevant to diagnostic testing. HIRU research staff searched for and selected trials for inclusion, as well as extracted and synthesised pertinent data. All partners worked together through the review process to facilitate knowledge translation, that is, to define whether and how to transfer findings into clinical practice.

Search strategy

We previously published the details of our search strategy [20]. Briefly, we examined citations retrieved from MEDLINE, EMBASE, Ovid's Evidence-Based Medicine Reviews, and Inspec bibliographic databases up to 6 January 2010, and hand-searched the reference lists of included articles and relevant systematic reviews.

Study selection

In pairs, our reviewers independently evaluated each study's eligibility for inclusion, and a third observer resolved disagreements. We first included all RCTs that assessed a CCDSS's effect on healthcare processes in which the system was used by healthcare professionals and provided patient-specific assessments or recommendations. We then selected trials of systems that gave direct recommendations to order or not to order a diagnostic test, or presented testing options, and measured impact on diagnostic processes. Trials of systems that simply gave advice for interpreting test results were excluded (such as Poels et al.[21]), as were trials of diagnostic systems that only reasoned through patient characteristics to suggest a diagnosis without making test recommendations (such as Bogusevicius et al.[22]). Systems that provided only information, such as cost of testing [23] or past test results [24] without actionable recommendations or options were also excluded.

Data extraction

Pairs of reviewers independently extracted data from all eligible trials, including a wide range of system design and implementation characteristics, study methods, setting, funding sources, patient/provider characteristics, and effects on care process and clinical outcomes, adverse effects, effects on workflow, costs, and practitioner satisfaction. Disagreements were resolved by a third reviewer or by consensus. We attempted to contact primary authors of all included trials to confirm extracted data and to provide missing data, receiving a response from 69% (24/35).

Assessment of study quality

We assessed the methodological quality of eligible trials with a 10-point scale consisting of five potential sources of bias, including concealment of allocation, appropriate unit of allocation, appropriate adjustment for baseline differences, appropriate blinding of assessment, and adequate follow-up [20]. For each source of bias, a score of 0 indicated the highest potential for bias, whereas a score of 2 indicated the lowest, generating a range of scores from 0 (lowest study quality) to 10 (highest study quality). We used a 2-tailed Mann-Whitney U test to assess whether the quality of trials has improved with time, comparing methodologic scores between trials published before the year 2000 and those published later.

Assessment of CCDSS intervention effects

In determining effectiveness, we focused exclusively on diagnostic testing measures and defined these broadly to include performing physical examinations (e.g., eye and foot exams), blood pressure measurements, as well as ordering laboratory, imaging, and functional tests. Patient outcomes were excluded from this study because, in general, they are most directly affected by treatment action and could not be attributed solely to diagnostic testing advice, especially in systems that also recommended therapy. Impact on patient outcomes and other process outcomes was assessed in our other current reviews on primary preventive care, drug prescribing, acute medical care, chronic disease management, and therapeutic drug monitoring and dosing.

Whenever possible, we classified systems as serving at least one of three purposes: disease monitoring (e.g., measuring HbA1c in diabetes), treatment monitoring (e.g., measuring liver enzymes at time of statin prescription), and diagnosis (e.g., laboratory tests to detect source of fever). We classified trials in each area depending on whether they gave recommendations for that purpose and measured the outcome of those recommendations. Trials of systems for monitoring of medications with narrow therapeutic indexes, such as insulin or warfarin, are the focus of a separate report on CCDSSs for toxic drug monitoring and dosing and are not discussed here.

We looked for the intended direction of impact: to increase or to decrease testing. We considered a system effective if it changed, in the intended direction, a pre-specified primary outcome measuring use of diagnostic tests (2-tailed p < 0.05). If multiple pre-specified primary outcomes were reported, we considered a change in ≥50% of outcomes to represent effectiveness. We considered primary those outcomes reported by the author as 'primary' or 'main,' or if no such statements could be found, we considered the outcome used for sample size calculations to be primary. In the absence of a relevant primary outcome, we looked for a change in ≥50% of multiple pre-specified secondary outcomes. If there were no relevant pre-specified outcomes, systems that changed ≥50% of reported diagnostic process outcomes were considered effective. We included studies with multiple CCDSS arms in the count of 'positive' studies if any of the CCDSS arms showed a benefit over the control arm. These criteria are more specific than those used in our previous review [19]; therefore, some studies included in our earlier review [19] were re-categorised with respect to their effectiveness in this review.

Data synthesis and analysis

We summarized data using descriptive measures, including proportions, medians, and ranges. Denominators vary in some proportions because not all trials reported relevant information. We conducted our analyses using SPSS, version 15.0. Given study-level differences in participants, clinical settings, disease conditions, interventions, and outcomes measured, we did not attempt a meta-analysis.

A sensitivity analysis was conducted to assess the possibility of biased results in studies with a mismatch between the unit of allocation (e.g., clinicians) and the unit of analysis (e.g., individual patients without adjustment for clustering). Success rates comparing studies with matched and mismatched analyses were compared using chi-square for comparisons. No differences in reported success were found for diagnostic process outcomes (Pearson X2 = 0.44, p = 0.51). Accordingly, results have been reported without distinction for mismatch.

Results

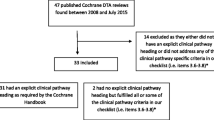

Figure 1 shows the flow of included and excluded trials. Across all clinical indications, we identified 166 RCTs of CCDSSs and inter-reviewer agreement on study eligibility was high (unweighted Cohen's kappa, 0.93; 95% confidence interval [CI], 0.91 to 0.94). In this review, we included 35 trials described in 45 publications because they measured the impact on test ordering behavior of CCDSSs that gave suggestions for ordering or performing diagnostic tests [15, 25–57, 57–67]. Thirty-two included studies contributed outcomes to both this review and other CCDSS interventions in the series; four studies [34, 37, 41, 68] to four reviews, 11 studies [25, 32, 33, 35, 36, 39, 40, 42–44, 46–49, 51, 57, 61] to three reviews, and 17 studies [26–31, 38, 45, 50, 52–56, 58–60, 62–65] to two reviews; but we focused here only on diagnostic test ordering process outcomes.

Flow diagram of included and excluded studies for the update 1 January 2004 to 6 January 2010 with specifics for diagnostic test ordering*. *Details provided in: Haynes RB et al. [20]. Two updating searches were performed, for 2004 to 2009 and to 6 January 2010 and the results of the search process are consolidated here.

Our assessment of trial quality is summarized in Additional file 1, Table S1; system characteristics in Additional file 2, Table S2; study characteristics in Additional file 3, Table S3; outcome data in Table 1 and Additional file 4, Table S4; and other CCDSS-related outcomes in Additional file 5, Table S5.

Study quality

Details of our methodological quality assessment can be found in Additional file 1, Table S1. Fifty-four percent of trials concealed group allocation [26, 27, 30, 32–35, 37–40, 42–44, 50, 52–55, 60–63, 66–68]; 51% allocated clusters (e.g., entire clinics or wards) to minimize contamination between study groups [15, 25, 28–30, 34, 36, 38–44, 46, 50–53, 60, 62–64, 68]; 77% either showed no differences in baseline characteristics between study groups or adjusted accordingly [15, 26–37, 39, 40, 45–55, 58, 59, 61–66, 68]; 69% of trials achieved ≥90% follow-up for the appropriate unit of analysis [15, 25, 28–35, 37, 39–41, 45, 50–56, 58–61, 66, 67]; and all but one used blinding or an objective outcome [45].

Most studies had good methodological quality (median quality score, 8; ranging from 2 to 10) and 63% (22/35) [15, 25–38, 50–55, 58–61, 65] were published after our previous search in September 2004. Study quality improved with time (median score before versus after year 2000, 7 versus 8, 2-tailed Mann-Whitney U = 44.5; p = 0.002), mainly because early trials did not conceal allocation and failed to achieve adequate follow-up.

CCDSS and study characteristics

Systems' design and implementation characteristics are presented in Additional file 2, Table S2, but not all trials reported these details. CCDSSs in 80% of trials (28/35) gave advice at the time of care [25–27, 30, 31, 34–37, 39–51, 54, 56–64, 66–68]; most were integrated with electronic medical records (82%; 27/33) [15, 26, 27, 30–34, 36, 37, 39, 40, 42–51, 54, 56–58, 60–64, 66–68] and some were integrated with computerized physician order entry (CPOE) systems (26%; 7/27) [31–33, 37, 50, 54, 67, 68]; 77% (24/31) automatically obtained data needed to trigger recommendations from electronic medical records [15, 26, 27, 30–34, 36, 37, 39, 40, 45, 46, 50, 51, 54, 56–58, 60–64, 66–68], while others relied on practitioners, existing non-prescribing staff, or research staff to enter data. In most trials (61%; 20/33) advice was delivered on a desktop or laptop computer [15, 26, 27, 31, 34, 36–41, 50, 51, 54, 58–63, 66–68], but other methods included personal digital assistants, email, or existing staff. Seventy-four percent (26/35) of systems were implemented in primary care [15, 25–40, 42–45, 50–54, 58, 60–65, 67]; 56% (14/25) were pilot tested [25, 28–33, 36, 38, 42–45, 51, 54, 62, 63, 66, 67]; and users of 59% (17/29) were trained [25–29, 31–33, 35, 37, 39–44, 46, 51, 54, 58–60, 67]. Eighty-three percent of trials (29/35) declared that at least one author was involved in the development of the system [15, 25–33, 36, 37, 39–41, 45–53, 55–60, 62–68]. In general, user interfaces were not described in detail. Additional file 3, Table S3 gives further description of the setting and method of CCDSS implementation.

The 35 trials included a total of 4,212 practitioners (median, 132; ranging from 14 to 600, when reported) caring for 626,382 patients (median, 2,765; ranging from 164 to 400,000, when reported) in 835 clinics (median, 15; ranging from 1 to 142, when reported) across 545 distinct sites (median, 4.5; ranging from 1 to 112, when reported).

Three trials did not declare a funding source [31, 57, 60]. Of those that did, 78% (25/32) were publically funded [15, 25–30, 36–38, 41–51, 54, 56, 58, 59, 61–64, 66–68], 9% (3/32) received both private and public funding [39, 40, 55, 61], and 13% (4/32) were conducted with private funds only [32–35, 65].

CCDSS effectiveness

Each system's impact on the use of diagnostic tests is summarized in Table 1, and Additional file 4, Table S4 provides a detailed description of test ordering outcomes. These outcomes were primary in 37% (13/35) of trials [15, 25–27, 31, 41, 50–53, 55, 58, 61–63, 66].

Fifty-six percent (18/33) of evaluated trials demonstrated an impact on the use of diagnostic tests [15, 25–31, 41, 52, 53, 55–57, 59–64, 66, 67] Two studies [65, 68] met all eligibility criteria and included diagnostic process measures but were excluded from the assessment of effectiveness because they did not provide statistical comparisons of these measures.

Disease monitoring

Systems in 49% (17/35) of trials (median quality score, 7; ranging from 4 to 10) gave recommendations for monitoring active conditions, all focusing on chronic diseases [25–49]. Their effectiveness for improving all processes of care and patient outcomes was assessed in our review on chronic disease management. Here we looked specifically for their impact on monitoring activity and found that 35% (6/17) increased appropriate monitoring [25–31, 41].

In the context of diabetes, four of eight trials successfully increased timely monitoring of common targets such as HbA1c, blood lipids, blood pressure, urine albumin, and foot and eye health [26–30, 41]. One of two systems that focused primarily on monitoring of hypertension was effective at increasing the frequency of appropriate blood pressure measurement [31]. One of three trials that focused on dyslipidemia improved monitoring of blood lipids [25]. Another three systems gave suggestions for monitoring of asthma [35, 37, 39, 40], angina [39, 40], chronic obstructive pulmonary disease (COPD) [37], and one for a combination of renal disease, obesity, and hypertension [47–49], but all failed to change testing behavior.

Treatment monitoring

Systems in 23% of trials (8/35) [34, 50–57] provided suggestions for laboratory monitoring of drug therapy. Trials in this area were generally recent and of high quality (median score, 8.5; range, 2 to 10; 75% (6/8) published since 2005). They targeted a wide range of medications (described in Additional file 4, Table S4) and are discussed in detail in our review of CCDSSs for drug prescribing, which looked for effects on prescribing behavior and patient outcomes. Focusing on their effectiveness for improving laboratory monitoring, we found that 63% (5/8) improved practices such as timely monitoring for adverse effects of medical therapy [34, 52, 53, 55–57]. However, two of the trials demonstrating an impact were older and had low methodologic scores [56, 57].

Diagnosis

Systems in 17% of trials (6/35) [58–64] gave recommendations for ordering tests intended to aid diagnosis (median quality score, 7.5; ranging from 6 to 9) and 67% (4/6) were published since 2005 [58–61]. Eighty-three percent (5/6) successfully improved test ordering behavior [59–64]. Systems suggested tests to investigate suspected dementia in primary practice [60], to detect the source of fever for children in the emergency room [59], to increase bone mineral density measurements for diagnosing osteoporosis [61], to reduce unnecessary laboratory tests for diagnosing urinary tract infections or sore throats [62, 63], to diagnose HIV [58], and to diagnose a host of conditions, including cancer, thyroid disorders, anemia, tuberculosis, and others [64].

Other

Finally, five trials did not specify the clinical purpose of recommended tests [15, 65–68], or suggested tests for several purposes but without data necessary to isolate the effects on testing for any one purpose. Three of five focused on reducing ordering rates and were successful [15, 66, 67]. Javitt et al. intended to increase test ordering and measured compliance with suggestions, but did not evaluate the outcome due to technical problems [65]. Overhage et al. meant to increase 'corollary orders' (tests to monitor the effects of other tests or treatments), but did not present statistical comparisons of their data on diagnostic process outcomes [68].

Costs and practical process-related outcomes

Potentially important factors such as user satisfaction, adverse events, and impact on cost and workflow were rarely studied ( see Additional file 5, Table S5). Because most systems also gave recommendations for therapy, we were usually unable to isolate the effects of test-ordering suggestions on these factors, and here we discuss systems that gave only testing advice.

Two trials estimated statistically significant reductions in the cost of care, but estimates were small in one study [37] and imprecise (large confidence interval) in the other [28, 29]. A third study estimated a relatively small reduction in annual laboratory costs ($35,000), but presented no statistical comparisons [66].

Three trials formally evaluated user satisfaction. One study found mixed satisfaction with a system for monitoring of diabetes and postulated that this was due to technical difficulties [26, 27]. Another found that 78% of users felt CCDSS suggestion for ordering of HIV tests had an effect on their test-ordering practices, despite failing to show an effect of the CCDSS in the study [58]. The third study found that, regardless of high satisfaction with the local CPOE system, satisfaction with reminders about potentially redundant laboratory tests was lower (3.5 on a scale of 1 to 7) [66].

Only one study formally looked for adverse events caused by the CCDSS [66]. The system was designed to reduce potentially redundant clinical laboratory tests by giving reminders. Researchers assessed the potential for adverse events by checking for new abnormal test results for the same test performed after initial cancellation. Fifty-three percent of accepted reminders for a redundant test were followed by the same type of test within 72 hours, and 24% were abnormal, although only 4% provided new information and 1% led to changes in clinical management.

One study made a formal attempt to measure impact on user workflow and found that use of the CCDSS did not increase length of clinical encounters [45]. However, this outcome was not prespecified and the study may not have had adequate statistical power to detect an effect.

Discussion

Our systematic review of RCTs of CCDSSs for diagnostic test ordering found that overall testing behavior was improved in just over one-half of trials. We considered studies 'positive' if they showed a statistically significant improvement in at least 50% of diagnostic process outcomes.

While the earliest RCT of a system for this purpose was published in 1976, most examples have appeared in the past five years, and evaluation methods have improved with time. Systems' diagnostic test ordering advice was most often intended to increase the ordering of certain tests in specific situations. Most systems suggested tests to diagnose new conditions, to monitor existing ones, or to monitor recently initiated drug treatments. Trials often demonstrated benefits in the areas of diagnosis and treatment monitoring, but were seldom effective for disease monitoring. All four systems that were explicitly meant to decrease unnecessary testing were successful [15, 62, 63, 66, 67]. CCDSSs may be better suited for some purposes than for others, but we need more trials and more detailed reporting of potential confounders, such as system design and implementation characteristics, to reliably assess the relationship between purpose and effectiveness.

Previous reviews have separately synthesized the literature on ways of improving diagnostic testing practice and on the effectiveness of CCDSSs [2, 12–14, 17–19, 69]. Our current systematic review combines these areas and isolates the impact of CCDSS on diagnostic test ordering. However, several factors limited our analysis. Importantly, we chose not to evaluate effects on patient outcomes because many systems also gave treatment suggestions that affect these outcomes more directly than does test ordering advice. Some systems gave recommendations for testing but their respective studies did not measure the impact on test ordering practice and were, therefore, excluded from this review [70–72]. Only 37% of trials assessed impact on test ordering activity as a primary outcome, and others may not have had adequate statistical power to detect testing effects.

We did not determine the magnitude of effect in each study, there being no common metric for this, but simply considered studies 'positive' if they showed a statistically significant improvement in at least 50% of diagnostic process outcomes. As a result, some of the systems considered ineffective by our criteria reported statistically significant findings, but only for a minority of secondary or non-prespecified outcomes. Indeed, the limitations of this 'vote counting' [73] are well established and include increased risk of underestimating effect. However, our results remain essentially unchanged from our 2005 review [19] and are comparable to another major review [74], and a recent 'umbrella' review of high-quality systematic reviews of CCDSSs in hospital settings [75].

Vote counting prevented us from assessing publication bias but we believe that, along with selective outcome reporting, publication bias is a real issue in this literature because most systems were tested by their own developers.

We observed an improvement in trial quality over time, but this may simply reflect better reporting after standards such as Consolidated Standards of Reporting Trials (CONSORT) were widely adopted. Thirty-one percent of the authors we attempted to contact did not respond, and this may have particularly affected the quality of our extraction from older, less standardised reports.

While the number of RCTs has increased, the majority of these studies did not investigate or describe potentially important factors, including details of system design and implementation, costs and effects on user satisfaction, and workflow. Reporting such information is difficult under the space constraints of a trial publication, but supplementary reports may be an effective way to communicate these important details. One example comes from Flottorp et al.[62, 63] who reported a process evaluation exploring the factors that affected the success of their CCDSS for management of sore throat and urinary tract infections. Feedback from practices showed that they were generally satisfied with installing and using the software, its technical performance, and with entering data. It also showed where they faced implementation challenges and which components of the intervention they used.

Our systematic review uncovered only three studies evaluating CCDSSs that give advice for the use of diagnostic imaging tests [35, 61, 64]. Effective decision support for ordering of imaging tests may be particularly relevant for the delivery of high quality, sustainable, modern healthcare, given the high cost and rapidly increasing use of such tests, and emerging concerns about cancer risk associated with exposure to medical radiation [11, 76, 77].

Conclusions

Some CCDSSs improve practitioners' diagnostic test ordering behavior, but the determinants of success and failure remain unclear. CCDSSs may be better suited to improve testing for some purposes than others, but more trials and more detailed descriptions of system features and implementation are needed to evaluate this relationship reliably. Factors of interest to innovators who develop CCDSSs and decision makers considering local deployment are under-investigated or under-reported. To support the efforts of system developers, researchers should rigorously measure and report adverse effects of their system and impacts on user workflow and satisfaction, as well as details of their systems' design (e.g., user interface characteristics and integration with other systems). To inform decision makers, researchers should report costs of design, development, and implementation.

References

Miller RA: Medical diagnostic decision support systems--past, present, and future: a threaded bibiiography and brief commentary. J Am Med Inform Assoc. 1994, 1 (1): 8-27. 10.1136/jamia.1994.95236141.

Solomon DH, Hashimoto H, Daltroy L, Liang MH: Techniques to improve physicians' use of diagnostic tests: a new conceptual framework. JAMA. 1998, 280 (23): 2020-2027. 10.1001/jama.280.23.2020.

Wennberg JE: Dealing with medical practice variations: a proposal for action. Health Aff (Millwood). 1984, 3 (2): 6-32. 10.1377/hlthaff.3.2.6.

Daniels M, Schroeder SA: Variation among physicians in use of laboratory tests II. Relation to clinical productivity and outcomes of care. Med Care. 1977, 15 (6): 482-487. 10.1097/00005650-197706000-00004.

Casscells W, Schoenberger A, Graboys TB: Interpretation by physicians of clinical laboratory results. N Engl J Med. 1978, 299 (18): 999-1001. 10.1056/NEJM197811022991808.

McDonald CJ: Protocol-based computer reminders, the quality of care and the non-perfectability of man. N Engl J Med. 1976, 295 (24): 1351-1355. 10.1056/NEJM197612092952405.

Kanouse DE, Jacoby I: When does information change practitioners' behavior?. Int J Technol Assess Health Care. 2009, 4 (01): 27-33.

Davis DA, Thomson MA, Oxman AD, Haynes RB: Changing physician performance. A systematic review of the effect of continuing medical education strategies. JAMA. 1995, 274 (9): 700-705. 10.1001/jama.274.9.700.

Freemantle N: Are decisions taken by health care professionals rational? A non systematic review of experimental and quasi experimental literature. Health Policy. 1996, 38 (2): 71-81. 10.1016/0168-8510(96)00837-8.

McKinlay JB, Potter DA, Feldman HA: Non-medical influences on medical decision-making. Soc Sci Med. 1996, 42 (5): 769-776. 10.1016/0277-9536(95)00342-8.

Iglehart JK: Health insurers and medical-imaging policy--a work in progress. N Engl J Med. 2009, 360 (10): 1030-1037. 10.1056/NEJMhpr0808703.

Axt-Adam P, van der Wouden JC, van der Does E: Influencing behavior of physicians ordering laboratory tests: a literature study. Med Care. 1993, 31 (9): 784-794. 10.1097/00005650-199309000-00003.

Buntinx F, Winkens R, Grol R, Knottnerus JA: Influencing diagnostic and preventive performance in ambulatory care by feedback and reminders. A review. Fam Pract. 1993, 10 (2): 219-228. 10.1093/fampra/10.2.219.

Gama R, Hartland AJ, Holland MR: Changing clinicians' laboratory test requesting behaviour: can the poacher turn gamekeeper?. Clin Lab. 2001, 47 (1-2): 57-66.

Thomas RE, Croal BL, Ramsay C, Eccles M, Grimshaw J: Effect of enhanced feedback and brief educational reminder messages on laboratory test requesting in primary care: a cluster randomised trial. Lancet. 2006, 367 (9527): 1990-1996. 10.1016/S0140-6736(06)68888-0.

Shojania KG, Jennings A, Mayhew A, Ramsay C, Eccles M, Grimshaw J: Effect of point-of-care computer reminders on physician behaviour: a systematic review. CMAJ. 2010, 182 (5): E216-E225. 10.1503/cmaj.090578.

Johnston ME, Langton KB, Haynes RB, Mathieu A: Effects of computer-based clinical decision support systems on clinician performance and patient outcome: a critical appraisal of research. Ann Intern Med. 1994, 120 (2): 135-142.

Hunt DL, Haynes RB, Hanna SE, Smith K: Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998, 280 (15): 1339-1346. 10.1001/jama.280.15.1339.

Garg AX, Adhikari NK, McDonald H, Rosas-Arellano M, Devereaux PJ, Beyene J, Sam J, Haynes RB: Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005, 293 (10): 1223-1238. 10.1001/jama.293.10.1223.

Haynes RB, Wilczynski NL, the Computerized Clinical Decision Support System (CCDSS) Systematic Review Team: Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: methods of a decision-maker-research partnership systematic review. Implement Sci. 2010, 5: 12-

Poels PJ, Schermer TR, Thoonen BP, Jacobs JE, Akkermans RP, de Vries Robbe PF, Quanjer PH, Bottema BJ, van Weel C: Spirometry expert support in family practice: a cluster-randomised trial. Prim Care Respir J. 2009, 18 (3): 189-197. 10.4104/pcrj.2009.00047.

Bogusevicius A, Maleckas A, Pundzius J, Skaudickas D: Prospective randomised trial of computer-aided diagnosis and contrast radiography in acute small bowel obstruction. Eur J Surg. 2002, 168 (2): 78-83. 10.1080/11024150252884287.

Tierney WM, Miller ME, McDonald CJ: The Effect on Test Ordering of Informing Physicians of the Charges for Outpatient Diagnostic Tests. N Engl J Med. 1990, 322 (21): 1499-1504. 10.1056/NEJM199005243222105.

Tierney : Computerized display of past test results. Effect on outpatient testing. Ann Intern Med. 1987, 107 (4): 569-574.

Gilutz H, Novack L, Shvartzman P, Zelingher J, Bonneh DY, Henkin Y, Maislos M, Peleg R, Liss Z, Rabinowitz G, Vardy D, Zahger D, Ilia R, Leibermann N, Porath A: Computerized community cholesterol control (4C): meeting the challenge of secondary prevention. Israel Med Assoc J. 2009, 11 (1): 23-29.

Holbrook A, Thabane L, Keshavjee K, Dolovich L, Bernstein B, Chan D, Troyan S, Foster G, Gerstein H: Individualized electronic decision support and reminders to improve diabetes care in the community: COMPETE II randomized trial. CMAJ. 2009, 181 (1-2): 37-44. 10.1503/cmaj.081272.

Holbrook A, Keshavjee K, Lee H, Bernstein B, Chan D, Thabane L, Gerstein H, Troyan S, COMPETE II Investigators: Individualized electronic decision support and reminders can improve diabetes care in the community. AMIA Annu Symp Proc. 2005, 982-

MacLean CD, Gagnon M, Callas P, Littenberg B: The Vermont Diabetes Information System: a cluster randomized trial of a population based decision support system. J Gen Intern Med. 2009, 24 (12): 1303-1310. 10.1007/s11606-009-1147-x.

MacLean CD, Littenberg B, Gagnon M: Diabetes decision support: initial experience with the Vermont diabetes information system. Am J Public Health. 2006, 96 (4): 593-595. 10.2105/AJPH.2005.065391.

Peterson KA, Radosevich DM, O'Connor PJ, Nyman JA, Prineas RJ, Smith SA, Arneson TJ, Corbett VA, Weinhandl JC, Lange CJ, Hannan PJ: Improving diabetes care in practice: findings from the TRANSLATE trial. Diabetes Care. 2008, 31 (12): 2238-2243. 10.2337/dc08-2034.

Borbolla D, Giunta D, Figar S, Soriano M, Dawidowski A, de Quiros FG: Effectiveness of a chronic disease surveillance systems for blood pressure monitoring. Stud Health Technol Inform. 2007, 129 (Pt 1): 223-227.

Lester WT, Grant RW, Barnett GO, Chueh HC: Randomized controlled trial of an informatics-based intervention to increase statin prescription for secondary prevention of coronary disease. J Gen Intern Med. 2006, 21 (1): 22-29. 10.1111/j.1525-1497.2005.00268.x.

Lester WT, Grant R, Barnett GO, Chueh H: Facilitated lipid management using interactive e-mail: preliminary results of a randomized controlled trial. Stud Health Technol Inform. 2004, 107 (Pt 1): 232-236.

Cobos A, Vilaseca J, Asenjo C, Pedro-Botet J, Sanchez E, Val A, Torremade E, Espinosa C, Bergonon S: Cost effectiveness of a clinical decision support system based on the recommendations of the European Society of Cardiology and other societies for the management of hypercholesterolemia: Report of a cluster-randomized trial. Dis Manag Health Out. 2005, 13 (6): 421-432. 10.2165/00115677-200513060-00007.

Plaza V, Cobos A, Ignacio-Garcia JM, Molina J, Bergonon S, Garcia-Alonso F, Espinosa C, Grupo Investigador A: [Cost-effectiveness of an intervention based on the Global INitiative for Asthma (GINA) recommendations using a computerized clinical decision support system: a physicians randomized trial]. Med Clin (Barc). 2005, 124 (6): 201-206. 10.1157/13071758.

Sequist TD, Gandhi TK, Karson AS, Fiskio JM, Bugbee D, Sperling M, Cook EF, Orav EJ, Fairchild DG, Bates DW: A randomized trial of electronic clinical reminders to improve quality of care for diabetes and coronary artery disease. J Am Med Inform Assoc. 2005, 12 (4): 431-437. 10.1197/jamia.M1788.

Tierney WM, Overhage JM, Murray MD, Harris LE, Zhou XH, Eckert GJ, Smith FE, Nienaber N, McDonald CJ, Wolinsky FD: Can computer-generated evidence-based care suggestions enhance evidence-based management of asthma and chronic obstructive pulmonary disease? A randomized, controlled trial. Health Serv Res. 2005, 40 (2): 477-497. 10.1111/j.1475-6773.2005.0t369.x.

Mitchell E, Sullivan F, Watt G, Grimshaw JM, Donnan PT: Using electronic patient records to inform strategic decision making in primary care. Stud Health Technol Inform. 2004, 107 (Pt2): 1157-1161.

Eccles M, McColl E, Steen N, Rousseau N, Grimshaw J, Parkin D, Purves I: Effect of computerised evidence based guidelines on management of asthma and angina in adults in primary care: cluster randomised controlled trial. BMJ. 2002, 325 (7370): 941-10.1136/bmj.325.7370.941.

Rousseau N, McColl E, Newton J, Grimshaw J, Eccles M: Practice based, longitudinal, qualitative interview study of computerised evidence based guidelines in primary care. BMJ. 2003, 326 (7384): 314-10.1136/bmj.326.7384.314.

Demakis JG, Beauchamp C, Cull WL, Denwood R, Eisen SA, Lofgren R, Nichol K, Woolliscroft J, Henderson WG: Improving residents' compliance with standards of ambulatory care: results from the VA Cooperative Study on Computerized Reminders. JAMA. 2000, 284 (11): 1411-1416. 10.1001/jama.284.11.1411.

Hetlevik I, Holmen J, Krüger O: Implementing clinical guidelines in the treatment of hypertension in general practice. Evaluation of patient outcome related to implementation of a computer-based clinical decision support system. Scand J Prim Health Care. 1999, 17 (1): 35-40. 10.1080/028134399750002872.

Hetlevik I, Holmen J, Kruger O, Kristensen P, Iversen H: Implementing clinical guidelines in the treatment of hypertension in general practice. Blood Press. 1998, 7 (5-6): 270-276. 10.1080/080370598437114.

Hetlevik I, Holmen J, Krüger O, Kristensen P, Iversen H, Furuseth K: Implementing clinical guidelines in the treatment of diabetes mellitus in general practice. Evaluation of effort, process, and patient outcome related to implementation of a computer-based decision support system. Int J Technol Assess. 2000, 16 (1): 210-227. 10.1017/S0266462300161185.

Lobach DF, Hammond W: Computerized decision support based on a clinical practice guideline improves compliance with care standards. Am J Med. 1997, 102 (1): 89-98. 10.1016/S0002-9343(96)00382-8.

Mazzuca SA, Vinicor F, Einterz RM, Tierney WM, Norton JA, Kalasinski LA: Effects of the clinical environment on physicians' response to postgraduate medical education. Am Educ Res J. 1990, 27 (3): 473-488.

Rogers JL, Haring OM, Goetz JP: Changes in patient attitudes following the implementation of a medical information system. QRB Qual Rev Bull. 1984, 10 (3): 65-74.

Rogers JL, Haring OM: The impact of a computerized medical record summary system on incidence and length of hospitalization. Med Care. 1979, 17 (6): 618-630. 10.1097/00005650-197906000-00006.

Rogers JL, Haring OM, Wortman PM, Watson RA, Goetz JP: Medical information systems: assessing impact in the areas of hypertension, obesity and renal disease. Med Care. 1982, 20 (1): 63-74. 10.1097/00005650-198201000-00005.

Lo HG, Matheny ME, Seger DL, Bates DW, Gandhi TK: Impact of non-interruptive medication laboratory monitoring alerts in ambulatory care. J Am Med Inform Assoc. 2009, 16 (1): 66-71.

Matheny ME, Sequist TD, Seger AC, Fiskio JM, Sperling M, Bugbee D, Bates DW, Gandhi TK: A randomized trial of electronic clinical reminders to improve medication laboratory monitoring. J Am Med Inform Assoc. 2008, 15 (4): 424-429. 10.1197/jamia.M2602.

Feldstein AC, Smith DH, Perrin N, Yang X, Rix M, Raebel MA, Magid DJ, Simon SR, Soumerai SB: Improved therapeutic monitoring with several interventions: a randomized trial. Arch Intern Med. 2006, 166 (17): 1848-1854. 10.1001/archinte.166.17.1848.

Smith DH, Feldstein AC, Perrin NA, Yang X, Rix MM, Raebel MA, Magid DJ, Simon SR, Soumerai SB: Improving laboratory monitoring of medications: an economic analysis alongside a clinical trial. Am J Manag Care. 2009, 15 (5): 281-289.

Palen TE, Raebel M, Lyons E, Magid DM: Evaluation of laboratory monitoring alerts within a computerized physician order entry system for medication orders. Am J Manag Care. 2006, 12 (7): 389-395.

Raebel MA, Lyons EE, Chester EA, Bodily MA, Kelleher JA, Long CL, Miller C, Magid DJ: Improving laboratory monitoring at initiation of drug therapy in ambulatory care: A randomized trial. Arch Intern Med. 2005, 165 (20): 2395-2401. 10.1001/archinte.165.20.2395.

McDonald CJ, Wilson GA, McCabe GP: Physician response to computer reminders. JAMA. 1980, 244 (14): 1579-1581. 10.1001/jama.244.14.1579.

McDonald CJ: Use of a computer to detect and respond to clinical events: its effect on clinician behavior. Ann Intern Med. 1976, 84 (2): 162-167.

Sundaram V, Lazzeroni LC, Douglass LR, Sanders GD, Tempio P, Owens DK: A randomized trial of computer-based reminders and audit and feedback to improve HIV screening in a primary care setting. Int J STD AIDS. 2009, 20 (8): 527-533. 10.1258/ijsa.2008.008423.

Roukema J, Steyerberg EW, van der Lei J, Moll HA: Randomized trial of a clinical decision support system: impact on the management of children with fever without apparent source. J Am Med Inform Assoc. 2008, 15 (1): 107-113.

Downs M, Turner S, Bryans M, Wilcock J, Keady J, Levin E, O'Carroll R, Howie K, Iliffe S: Effectiveness of educational interventions in improving detection and management of dementia in primary care: cluster randomised controlled study. BMJ. 2006, 332 (7543): 692-696. 10.1136/bmj.332.7543.692.

Feldstein A, Elmer PJ, Smith DH, Herson M, Orwoll E, Chen C, Aickin M, Swain MC: Electronic medical record reminder improves osteoporosis management after a fracture: a randomized, controlled trial. J Am Geriatr Soc. 2006, 54 (3): 450-457. 10.1111/j.1532-5415.2005.00618.x.

Flottorp S, Oxman AD, Havelsrud K, Treweek S, Herrin J: Cluster randomised controlled trial of tailored interventions to improve the management of urinary tract infections in women and sore throat. BMJ. 2002, 325 (7360): 367-10.1136/bmj.325.7360.367.

Flottorp S, Havelsrud K, Oxman AD: Process evaluation of a cluster randomized trial of tailored interventions to implement guidelines in primary care--why is it so hard to change practice?. Fam Pract. 2003, 20 (3): 333-339. 10.1093/fampra/cmg316.

McDonald CJ, Hui SL, Smith DM, Tierney WM, Cohen SJ, Weinberger M, McCabe GP: Reminders to physicians from an introspective computer medical record. A two-year randomized trial. Ann Intern Med. 1984, 100 (1): 130-138.

Javitt JC, Steinberg G, Locke T, Couch JB, Jacques J, Juster I, Reisman L: Using a claims data-based sentinel system to improve compliance with clinical guidelines: results of a randomized prospective study. Am J Manag Care. 2005, 11 (2): 93-102.

Bates DW, Kuperman GJ, Rittenberg E, Teich JM, Fiskio J, Ma'Luf N, Onderdonk A, Wybenga D, Winkelman J, Brennan TA: A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999, 106 (2): 144-150. 10.1016/S0002-9343(98)00410-0.

Tierney WM, McDonald CJ, Hui SL, Martin DK: Computer predictions of abnormal test results: effects on outpatient testing. JAMA. 1988, 259 (8): 1194-1198. 10.1001/jama.259.8.1194.

Overhage JM, Tierney WM, Zhou XH, McDonald CJ: A randomized trial of 'corollary orders' to prevent errors of omission. J Am Med Inform Assoc. 1997, 4 (5): 364-375. 10.1136/jamia.1997.0040364.

Berner ES: Clinical decision support systems: State of the Art. 2009, Rockville, Maryland: Agency for Healthcare Research and Quality

Tierney WM, Miller ME, Overhage JM, McDonald CJ: Physician inpatient order writing on microcomputer workstations. Effects on resource utilization. JAMA. 1993, 269 (3): 379-383. 10.1001/jama.269.3.379.

Field TS, Rochon P, Lee M, Gavendo L, Baril JL, Gurwitz JH: Computerized clinical decision support during medication ordering for long-term care residents with renal insufficiency. J Am Med Inform Assoc. 2009, 16 (4): 480-485. 10.1197/jamia.M2981.

Thomas JC, Moore A, Qualls PE: The effect on cost of medical care for patients treated with an automated clinical audit system. J Med Syst. 1983, 7 (3): 307-313. 10.1007/BF00993294.

Hedges LV, Olkin I: Statistical methods for meta-analysis. 1985, Orlando: Academic Press

Kawamoto K, Houlihan CA, Balas EA, Lobach DF: Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005, 330 (7494): 765-10.1136/bmj.38398.500764.8F.

Jaspers MW, Smeulers M, Vermeulen H, Peute LW: Effects of clinical decision-support systems on practitioner performance and patient outcomes: a synthesis of high-quality systematic review findings. J Am Med Inform Assoc. 2011, 18 (3): 327-334. 10.1136/amiajnl-2011-000094.

Brenner DJ, Hall EJ: Computed tomography--an increasing source of radiation exposure. N Engl J Med. 2007, 357 (22): 2277-2284. 10.1056/NEJMra072149.

Fazel R, Krumholz HM, Wang Y, Ross JS, Chen J, Ting HH, Shah ND, Nasir K, Einstein AJ, Nallamothu BK: Exposure to low-dose ionizing radiation from medical imaging procedures. N Engl J Med. 2009, 361 (9): 849-857. 10.1056/NEJMoa0901249.

Acknowledgements

The research was funded by a Canadian Institutes of Health Research Synthesis Grant: Knowledge Translation KRS 91791. The members of the Computerized Clinical Decision Support System (CCDSS) Systematic Review Team included the Principal Investigator, Co-Investigators, Co-Applicants/Senior Management Decision-makers, Co-Applicants/Clinical Service Decision-Makers, and Research Staff. The following were involved in collection and/or organization of data: Jeanette Prorok, MSc, McMaster University; Nathan Souza, MD, MMEd, McMaster University; Brian Hemens, BScPhm, MSc, McMaster University; Robby Nieuwlaat, PhD, McMaster University; Shikha Misra, BHSc, McMaster University; Jasmine Dhaliwal, BHSc, McMaster University; Navdeep Sahota, BHSc, University of Saskatchewan; Anita Ramakrishna, BHSc, McMaster University; Pavel Roshanov, BSc, McMaster University; Tahany Awad, MD, McMaster University. Nicholas Hobson, Dipl.T., Chris Cotoi, BEng, EMBA, and Rick Parrish, Dipl.T., at McMaster University provided programming and information technology support.

Author information

Authors and Affiliations

Consortia

Corresponding author

Additional information

Competing interests

RBH, NLW, PSR, JJY, DK, JD, JAM, LWK, TN received support through the Canadian Institutes of Health Research Synthesis Grant: Knowledge Translation KRS 91791 for the submitted work. PSR was also supported by an Ontario Graduate Scholarship, a Canadian Institutes of Health Research Strategic Training Fellowship, and a Canadian Institutes of Health Research 'Banting and Best' Master's Scholarship. Additionally, PSR is a co-applicant for a patent concerning computerized decision support for anticoagulation, which was not discussed in this review, and has recently received awards from organizations that may benefit from the notion that information technology improves healthcare, including COACH (Canadian Organization for Advancement of Computers in Healthcare), the National Institutes of Health Informatics, and Agfa HealthCare Corp. JJY received funding to his institution through an Ontario Ministry of Health and Long-Term Care Career Scientist award; as well as funds paid to him for travel and accommodation for participation in a workshop sponsored by the Institute for Health Economics in Alberta, regarding optimal use of diagnostic imaging for low back pain. RBH is acquainted with several CCDSS developers and researchers, including authors of papers included in this review.

Authors' contributions

RBH was responsible for study conception and design; acquisition, analysis and interpretation of data; critical revision of the manuscript; obtaining funding; and study supervision. He is the guarantor. PSR acquired, analyzed, and interpreted data; drafted and critically revised the manuscript; and provided statistical analysis. JJY acquired, analyzed, and interpreted data; and critically revised the manuscript. JD acquired data and drafted the manuscript. DK analyzed and interpreted data, and critically revised the manuscript. JAM acquired, analyzed, and interpreted data; drafted the manuscript; and provided statistical analysis as well as administrative, technical, or material support. LWK and TN acquired data and drafted the manuscript. NLW acquired, analyzed, and interpreted data; drafted the manuscript; and provided administrative, technical, or material support, as well as study supervision. All authors read and approved the final manuscript.

Electronic supplementary material

13012_2011_407_MOESM1_ESM.DOCX

Additional File 1: Study methods scores for trials of diagnostic test ordering. Methods scores for the included studies. (DOCX 21 KB)

13012_2011_407_MOESM2_ESM.DOCX

Additional File 2: CCDSS characteristics for trials of diagnostic test ordering. CCDSS characteristics of the included studies. (DOCX 32 KB)

13012_2011_407_MOESM3_ESM.DOCX

Additional File 3: Study characteristics for trials of diagnostic test ordering. Study characteristics of the included studies. (DOCX 34 KB)

13012_2011_407_MOESM4_ESM.DOCX

Additional File 4: Results for CCDSS trials of diagnostic test ordering. Details results of the included studies. (DOCX 54 KB)

13012_2011_407_MOESM5_ESM.DOCX

Additional File 5: Costs and CCDSS process-related outcomes for trials of diagnostic test ordering. Cost and CCDSS process-related outcomes for the included studies. (DOCX 27 KB)

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article is distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Roshanov, P.S., You, J.J., Dhaliwal, J. et al. Can computerized clinical decision support systems improve practitioners' diagnostic test ordering behavior? A decision-maker-researcher partnership systematic review. Implementation Sci 6, 88 (2011). https://doi.org/10.1186/1748-5908-6-88

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/1748-5908-6-88