Abstract

Background

Given the current emphasis on networks as vehicles for innovation and change in health service delivery, the ability to conceptualise and measure organisational enablers for the social construction of knowledge merits attention. This study aimed to develop a composite tool to measure the organisational context for evidence-based practice (EBP) in healthcare.

Methods

A structured search of the major healthcare and management databases was conducted for measurement tools from four domains: research utilisation (RU), research activity (RA), knowledge management (KM), and organisational learning (OL). Included studies were reports of the development or use of measurement tools that included organisational factors. Tools were appraised for face and content validity, plus development and testing methods. Measurement tool items were extracted, merged across the four domains, and categorised within a constructed framework describing the absorptive and receptive capacities of organisations.

Results

Thirty measurement tools were identified and appraised. Eighteen tools from the four domains were selected for item extraction and analysis. The constructed framework consists of seven categories relating to three core organisational attributes of vision, leadership, and a learning culture, and four stages of knowledge need, acquisition of new knowledge, knowledge sharing, and knowledge use. Measurement tools from RA or RU domains had more items relating to the categories of leadership and acquisition of new knowledge, while tools from KM or OL domains had more items relating to vision, learning culture, knowledge need, and knowledge sharing. There was equal emphasis on knowledge use in the different domains.

Conclusion

If the translation of evidence into knowledge is viewed as socially mediated, tools to measure the organisational context of EBP in healthcare could be enhanced by consideration of related concepts from the organisational and management sciences. Comparison of measurement tools across domains suggests that there is scope within EBP for supplementing the current emphasis on human and technical resources to support information uptake and use by individuals. Consideration of measurement tools from the fields of KM and OL shows more content related to social mechanisms to facilitate knowledge recognition, translation, and transfer between individuals and groups.

Background

The context of managing the knowledge base for healthcare is complex. Healthcare organizations are composed of multi-level and multi-site interlacing networks that, despite central command and control structures, have strong front-line local micro-systems involved in interpreting policy direction [1]. The nature of healthcare knowledge is characterized by proliferation of information, fragmentation, distribution, and high context dependency. Healthcare practice requires coordinated action in uncertain, rapidly changing situations, with the potential for high failure costs [2]. The public sector context includes the influence of externally imposed performance targets and multiple stakeholder influences and values, the imperative to share good practice across organisational boundaries, and a complex and diverse set of boundaries and networks [3]. Having strong mechanisms and processes for transferring information, developing shared meanings, and the political negotiation of action [4, 5] are therefore crucially important in public sector/healthcare settings, but it is not surprising that there are reports of problems in the organizational capacity of the public sector to effectively manage best practice innovation [6–11], particularly around issues of power and politics between different professional groups [12–17].

The development of capacity to implement evidence-based innovations is a central concept in UK government programmes in healthcare [18]. Strategies to improve evidence-based decision making in healthcare have only recently shifted emphasis away from innovation as a linear and technical process dominated by psychological and cognitive theories of individual behaviour change [19], toward organisational level interventions [20], with attention shifting toward the development of inter-organisational clinical, learning, and research networks for sharing knowledge and innovation [21–23], and attempts to improve capacity for innovation within the public sector [24].

Organisational capacity refers to the organisation's ability to take effective action, in this context for the purpose of continually renewing and improving its healthcare practices. Absorptive and receptive capacities are theorized as important antecedents to innovation in healthcare [25]. Broadly, the concept of absorptive capacity is the organization's ability to recognise the value of new external knowledge and to assimilate it, while receptive capacity is the ability to facilitate the transfer and use of new knowledge [26–31]. Empirical studies have identified some general antecedent conditions [32–34], and have tested application of the concept of absorptive capacity to healthcare [35, 36], although receptive capacities are less well studied. Empirically supported features of organisational context that impact on absorptive and receptive capacities in healthcare include processes for identifying, interpreting, and sharing new knowledge; a learning organisation culture; network structures; strong leadership, vision, and management; and supportive technologies [25].

Public sector benchmarking is widely promoted as a tool for enhancing organisational capacity via a process of collaborative learning [37]. Benchmarking requires the collation and construction of best practice indicators for institutional audit and comparison. Tools are available to measure the organizational context for evidence-based healthcare practice [38–41], and components of evidence-based practice (EBP) including implementation of organisational change [42–45], research utilization (RU) [46], or research activity (RA) [47]. While organisational learning (OL) and knowledge management (KM) frameworks are increasingly being claimed in empirical studies in healthcare [48–53], current approaches to assessing organisational capacity are more likely to be underpinned by diffusion of innovation or change management frameworks [54].

Nicolini and colleagues [2] draw attention to the similarity between the KM literature and the discourse on supporting knowledge translation and transfer in healthcare [55–57], as well as between concepts of OL and the emphasis on collective reflection on practice in the UK National Health Service [58, 59], but suggest that 'ecological segregation' between these disciplines and literatures means that cross-fertilisation has not occurred to any great extent. OL and KM literatures could be fruitful sources for improving our understanding of dimensions of organizational absorptive and receptive capacity in healthcare. We therefore aimed to support the development of a metric to audit the organizational conditions for effective evidence-based change by consulting the wider OL and knowledge literatures, where the development of metrics is also identified as a major research priority [60], including the use of existing tools in healthcare [2].

Definitions of KM vary, but many include the core processes of creation or development of knowledge, its movement, transfer, or flow through the organisation, and its application or use for performance improvement or innovation [61]. Early models of KM focused on the measurement of knowledge assets and intellectual capital, with later models focusing on processes of managing knowledge in organisations, split into models where technical-rationality and information technology solutions were central and academic models focusing on human factors and transactional processes [62]. The more emergent view is of the organisation as 'milieu' or community of practice, where the focus on explanatory variables shifts away from technology towards the level of interactions between individuals, and the potential for collective learning. However, technical models and solutions are also still quite dominant in healthcare [63].

Easterby-Smith and Lyles [64] consider KM to focus on the content of the knowledge that an organisation acquires, creates, processes, and uses, and OL to focus on the process of learning from new knowledge. Nutley, Davies and Walker [54] define OL as the way organisations build and organise knowledge and routines and use the broad skills of their workforce to improve organisational performance. Early models of OL focused on cognitive-behavioural processes of learning at individual, group, and organisational levels [65–67], and the movement of information in social or activity systems [68]. More recent practice-based theories see knowledge as embedded in culture, practice, and process, conceptualising knowing and learning as dynamic, emergent social accomplishment [69–72]. Organisational knowledge is also seen as fragmented into specialised and localised communities of practice, 'distributed knowledge systems' [73], or networks with different interpretive frameworks [74], where competing conceptions of what constitutes legitimate knowledge can occur [75], making knowledge sharing across professional and organization boundaries problematic.

While the two perspectives of KM and OL have very different origins, Scarbrough and Swan [76] suggest that differences are mainly due to disciplinary homes and source perspectives, rather than conceptual distinctiveness. More recently, there have been calls for cognitive and practice-based theories to be integrated in explanatory theories of how practices are constituted, and the practicalities of how socially shared knowledge operates [77, 78]. Similarly, there have been calls for integrative conceptual frameworks for OL and knowledge [79, 80], with learning increasingly defined in terms of knowledge processes [81, 82].

Practice models have their limitations, particularly in relation to weaknesses in explaining how knowledge is contested and legitimated [83]. In a policy context that requires clinical decisions to be based on proof from externally generated research evidence, a comprehensive model for healthcare KM would need to reflect the importance of processes to verify and legitimate knowledge. Research knowledge then needs to be integrated with knowledge achieved from shared interpretation and meaning within the specific social, political, and cultural context of practice, and with the personal values-based knowledge of both the individual professional and the patient [84]. Much public sector innovation also originates from practice and practitioners, as well as external scientific knowledge [85, 86]. New understandings generated from practice then require re-externalising into explicit and shared formal statements and procedures, so that actions can be defended in a public system of accountability.

Our own preference is for a perspective where multiple forms of knowledge are recognised, and where emphasis is placed on processes of validating and warranting knowledge claims. Attention also needs to be directed towards the interrelationship between organisational structures of knowledge governance, such as leadership, incentive and reward structures, or the allocation of authority and decision rights, and the conditions for individual agency [87–89]. Our own focus is therefore on identifying the organizational conditions that are perceived to support or hinder organizational absorptive or receptive capacities, as a basis for practical action by individuals.

The indicators for supportive organisational conditions are to be developed by extracting items from existing tools, as in previous tools developed to measure OL capability [90]. Existing tools are used because indicators are already empirically supported, operationalised, and easily identified and compared, and because our primary focus is one of utility for practice [91], by specifying 'the different behavioural and organisational conditions under which knowledge can be managed effectively' [92, p ix]. Measurement tools that were based on reviews of the literature in the respective fields of KM and learning organisations were chosen as comparison sources to assess the comprehensiveness of the current tools in healthcare, and to improve the delineation of the social and human aspects of EBP in healthcare. If this preliminary stage proves fruitful in highlighting the utility of widening the pool for benchmark items, future work aims to compare the source literatures for confirming empirical evidence, with further work to test the validity and reliability of the benchmark items.

Methods

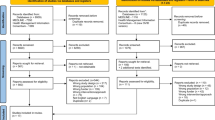

A structured literature review was undertaken to collate measurement tools for organisational context from the domains of research use or RA in healthcare, or for KM or OL in the management or organisational science literature.

Search and screening

A search of electronic databases from inception to March 2006 was carried out on MEDLINE, CINAHL, AMED, ZETOC, IBSS, Web of Science, National Research Register, Ingenta, Business Source Premier, and Emerald. Measurement tools were included if they were designed to measure contextual features of whole organisations, or sub-units such as teams or departments. Tools needed to include at least one item relating to organisational factors influencing RU, RA, KM, or OL. To be included, papers had to report a structured method of tool development and psychometric testing.

Data extraction and analysis

Individual reviewers (BF, PB, LT) extracted items relating to organisational context from each measurement tool. Items were excluded if they focused solely on structural organisational factors not amenable to change (e.g., organisational design, size; inter-organisational factors) and environment (e.g., political directives); or characteristics of the commercial context that were not applicable in a public service context. Some tools had items expressed as staff competencies (e.g., 'Staff in our organization have critical appraisal skills...') or organisational processes (e.g., 'Our organization has arrangements with external expertise...' [93]). Items such as these were included and interpreted in terms of the availability of an organisational resource (e.g., facilities for learning critical appraisal skills, or availability of external expertise). However, some items were not expressed in a way that could be inferred as an organisational characteristic (e.g., 'Our employees resist changing to new ways of doing things' [94]), and were excluded.

Category analysis

Initially, similar items from different measurement tools were grouped together, e.g., 'I often have the opportunity to talk to other staff about successful programmes...' [95] and 'employees have the chance to talk among themselves about new ideas...' [96]. After an initial failed attempt to categorize all items using an existing diffusion of innovation framework [25], the review team constructed categories of organisational attributes by grouping items from across all the measurement instruments, and refining, expanding, or collapsing the groupings until a fit was achieved for all extracted items. The material is illustrated in Table 1 by items allocated to two attributes: involving the individual, and shared vision/goals (tool source in brackets – see Table 2 [97–104]). While broadly similar, it can be seen that items from the different domains are expressed differently, and there was some judgement involved in determining the similarity of meaning across domains. It can also be seen that for some categories, particular domains of tool did not contribute any items, while other domains contributed multiple items.

We conducted three rounds of checking agreement on the fit of items to categories: an initial round using categories derived from the diffusion of innovation framework by Greenhalgh and colleagues [25], which was rejected because of the lack of fit for numerous items; a second round with our own constructed categorization framework built from grouping items; and a third and final round for reviewers to check back that all items from their measurement tools had been included and adequately categorized in the constructed framework. Between each round, joint discussions were held to agree refinements to categories and discuss any disagreement. Using this process, agreement was reached between all reviewers on the inclusion and categorization of all items. An independent reviewer (LP) then checked validity of extraction, categorization, and merging by tracing each composite attribute back to the original tool, agreeing its categorization, then reviewing each tool to ensure that all relevant items were incorporated. Items queried were re-checked.

Results

Thirty tools were identified and appraised [see Additional file 1]. Based on the inclusion criteria for tool development and testing, 18 tools with 649 items in total were selected. These are listed in Table 2, with information on development and psychometric testing [see Additional file 2]. The number of the tool from Table 2 will be used in subsequent tables.

In total, 261 items related to organisational context were extracted from the measurement tools. For two tools [105, 106], the full text of each item was not available, so the names of the categories of measurement for which results were reported were used as items, e.g., organisational climate for change.

Final model

Figure 1 illustrates the final category structure constructed to account for all of the items from the measurement tools. Seven broad categories gave a best fit for the items. The central white circle of the diagram shows three core categories of vision, leadership, and a learning culture. The middle ring shows four categories of activity: 'knowledge need and capture' and 'acquisition of new knowledge' (relating to organisational absorptive capacity); and 'knowledge sharing' and 'knowledge use' (related to organisational receptive capacity). The outer ring illustrates the organisational attributes contributing to each category.

Tool item analysis

Table 3 summarises the organisational attributes for each category. Attributes are based on a composite of items extracted from the tools across the four domains. An example of a single tool item is given to illustrate the source material for each attribute.

The marked areas in Table 4 identify the measurement tool source of each organisational attribute. The percentages are derived from the number of times an item is included in a category, compared with the total possible in each domain, e.g., there were two items from RA tools included in the learning culture category, out of a possible total of 16 items. The results for each category are discussed below:
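As a worked illustration of the percentages in Table 4, the calculation can be sketched as follows. The function name, and the reading of 'total possible' as the number of tools in a domain multiplied by the number of attributes in a category, are assumptions made for illustration rather than details reported in the study. Only the figures 2 and 16 come from the text.

```python
def coverage_percent(marked: int, possible: int) -> float:
    """Percentage of possible tool-by-attribute cells that are marked.

    `marked` is the number of times items from a domain's tools appear in a
    category; `possible` is the maximum number of such appearances.
    Both names are hypothetical and used only for this sketch.
    """
    if possible <= 0:
        raise ValueError("possible must be a positive integer")
    if not 0 <= marked <= possible:
        raise ValueError("marked must lie between 0 and possible")
    return 100.0 * marked / possible


# Example from the text: RA tools in the learning culture category,
# two items out of a possible total of 16.
ra_learning_culture = coverage_percent(marked=2, possible=16)
print(f"RA tools, learning culture: {ra_learning_culture:.1f}%")
```

Expressing each cell as a share of its own possible total allows domains with different numbers of tools to be compared on the same scale.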

Learning culture

OL and KM tools were the most frequent source of these attributes, with seven out of nine tools covering attributes in this category, although none of the tools covered all of the attributes. Three RA/RU tools covered the attribute of 'involving the individual', with one of the RU tools also including the attribute of 'valuing the individual'. Each attribute was sourced from between three and five tools across all domains. The most representation was sourced from KM tools.

Vision

Eight out of nine of the OL/KM tools, and five out of nine RA/RU tools included attributes from this category. The most common attribute was 'shared vision/goals' (eight tools), and the least common was 'policies and infrastructures' (three tools). The most representation was sourced from OL tools.

Leadership

All of the domains included some reference to attributes of management or leadership. Five out of nine RA/RU tools and four out of nine KM/OL tools included items related to leadership. The most representation was in RA tools.

Knowledge need

All of the OL tools and three out of four of the KM tools included items related to attributes of this category. They were less commonly sourced from RA and RU tools. The most common attribute was 'learning from experience' (seven tools). The most representation was sourced from OL tools.

Acquiring new knowledge

Attributes in this category were more commonly sourced in RA/RU tools. Attributes were sourced from between five and nine tools out of the total of 18 tools across all domains, and each attribute was covered in each domain, except 'accessing information', which was not covered in any KM tool. The most representation was sourced from RU tools.

Knowledge sharing

Most OL/KM tools included multiple attributes from this category; all RA tools included one or two items, but only two out of five RU tools included one attribute. 'Promoting internal knowledge transfer' was the most common attribute, included in 13 out of 18 tools, with 'promoting external contacts' included in seven tools. The other items were included in five tools. The most representation for this category was sourced from OL tools.

Knowledge use

Overall, this was the largest and most populated category. The most common attributes referred to were 'encouraging innovation', included in 14 out of 18 tools, and 'role recognition/reward', referred to in 13 tools. Each of the other attributes was also referred to in at least eight tools. All attributes were sourced from all domains. The most representation for this category was sourced from RA tools.

Analysis of tool coverage

Table 4 also summarises how well each tool domain covers the constructed categories and attributes. The results for each domain are discussed below:

RA tools

The category with the most representation in the RA tools was 'knowledge use', with items in the categories of 'acquiring new knowledge' and 'vision' also well represented. The categories of 'knowledge need' and 'knowledge sharing' were less well reflected across the RA tools. Two attributes, 'recognising and valuing existing knowledge' and 'knowledge transfer technology', did not appear in any RA tool. Five attributes appeared in only one of the tools. Four attributes, 'developing expertise', 'role recognition and reward', 'support/access to expertise', and 'access to resources', were common to all tools. Two tools had relatively good coverage of the attributes: the ABC survey [107], with 14 out of 26 attributes covered, and the KEYS Questionnaire [93], with 15 out of 26 attributes covered.

RU tools

This was the domain with the least coverage overall, commonly centered in the categories of 'acquiring new knowledge' and 'knowledge use'. The other categories were poorly represented. The attribute of 'accessing information' was common to all tools, with 'role recognition/reward', and 'support/access to expertise' common to four out of five tools. The tool which covered the most attributes (10 out of 26) was the RU Survey Instrument [105, 108].

KM tools

The KM tools covered all of the categories, with more common representation in the categories of 'learning culture', 'knowledge need', 'knowledge sharing', and 'knowledge use', but individual tools varied in their emphasis. The categories of 'leadership' and 'acquisition of new knowledge' were the least well represented. Two attributes were included in all four tools: 'promoting internal knowledge transfer' and 'encouraging innovation'. 'Learning climate' and 'access to resources' were included in three out of four tools. Five attributes were not represented in any tool: 'involving the individual', 'policies and infrastructures', 'managerial attributes', 'accessing information', and 'supporting teamwork'. The tool with the best overall coverage of the attributes (13 out of 26) was the KM Questionnaire [109].

OL tools

OL tools covered all categories, and generally had more consistent coverage than other domains of the categories 'vision', 'knowledge need', and 'knowledge sharing'. Single attributes relating to 'promoting internal knowledge transfer' and 'encouraging innovation' were covered in all five tools, with the attributes of 'communication', 'shared vision and goals', 'learning from experience', and 'promoting external contacts/networks' covered in four out of five tools. 'Key strategic aims', 'policies and infrastructures', 'questioning culture', 'accessing information', and 'exposure to new information' were only covered in one out of the five tools. The OL Scale [110] covered 17 out of the 26 possible attributes. The other four tools covered between 8 and 11 attributes.
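The attribute counts reported above for the best-covering tool in each domain can be put on a common scale with a short calculation. The sketch below is illustrative only: the counts and the total of 26 attributes are taken from the text, while the dictionary layout, the function name, and the domain labels in brackets are assumptions added for readability.

```python
TOTAL_ATTRIBUTES = 26  # attributes in the constructed framework, as reported

# Attributes covered by the named tools, as reported in the text.
attributes_covered = {
    "ABC survey (RA)": 14,
    "KEYS Questionnaire (RA)": 15,
    "RU Survey Instrument (RU)": 10,
    "KM Questionnaire (KM)": 13,
    "OL Scale (OL)": 17,
}


def coverage_share(covered: int, total: int = TOTAL_ATTRIBUTES) -> float:
    """Return the percentage of framework attributes a tool covers."""
    return round(100 * covered / total, 1)


# Rank the tools from most to least comprehensive coverage.
ranked = sorted(attributes_covered.items(), key=lambda kv: kv[1], reverse=True)
for tool, covered in ranked:
    print(f"{tool}: {covered}/{TOTAL_ATTRIBUTES} = {coverage_share(covered)}%")
```

On these figures, even the most comprehensive tool covers roughly two thirds of the attributes, which is consistent with the observation that no single tool covers the whole framework.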

Comparison of support for benchmark items: what can EBP tools learn from the KM and OL literature?

While each of the composite attributes is supported by items extracted from at least three measurement tools, there are differences in emphasis across the domains. To consider the potential contribution of the newer domains of KM/OL, the numbers of items from these domains have been pooled and compared against the number of items sourced from the domains commonly represented in the healthcare literature, i.e., RA/RU. Figure 2 illustrates that the KM and OL literatures focus more on 'learning culture', 'vision', 'knowledge need', and 'knowledge sharing'. The RA and RU literatures have a stronger emphasis on 'leadership', 'acquiring new knowledge', and 'knowledge use'.

Discussion

The importance of understanding context has been reiterated by the High Level Clinical Effectiveness group [18]. This project was developed in response to perceived limitations in the conceptualisation and measurement of organisational context for EBP in healthcare. We wanted to move away from the rather narrow focus on RU and change management to include wider process and practice-based perspectives from the KM and OL literature. Our analysis of existing measurement tools has confirmed differences in emphasis across the domains. Measurement tools for RA and RU focus more on access to new information, leadership, and resources for change, and less on recognizing, valuing, and building shared knowledge. This is congruent with the culture of 'rationality, verticality, and control' [6, p660] in healthcare, but the lack of attention to social context may be one reason why attempts to improve practice by influencing the behaviour of individual practitioners have variable results [111].

The emphasis in KM and OL tools on shared vision, learning culture, and sharing existing knowledge reflects a more socially mediated view of knowledge. If it is groups and networks that generate the meaning and value to be attached to evidence, organisational efforts to improve EBP would need to do more to shift towards supporting horizontal knowledge transfer. Networks have emerged as a recent UK government strategy for moving health research into action by creating clusters that break down disciplinary, sectoral, and geographic boundaries, but communication structures alone are unlikely to be successful for knowledge transfer across specialized domains [4, 112] without additional mechanisms to support the transfer of practice and process knowledge [73].

Since this search was conducted, three additional tools to measure organisational context in quality improvement related areas have been reported [113–115]. Each of these tools has strengths, including attributes such as feedback that are not included in our model, but none have comprehensive coverage of all of the attributes identified in this study.

A potential weakness in using existing tools as sources in this study is that they might not reflect the latest theories and concepts, because tool development tends to lag behind conceptual development. This might result in inadvertent bias towards earlier, more technical models, and we acknowledge that the existing tools do largely adopt a structuralist perspective. While the items contained in the existing measurement tools can only ever provide a rather simplistic reflection of complex phenomena, we felt that including them was better than not attempting to express them at all. However, while new perspectives are worth investigating, the unquestioning interdisciplinary transfer of theory also needs care. The commercial settings in which much KM theory was developed differ from the public sector in the types of problems, the availability of information and resources, and the motivations for evidence uptake and use. KM theory supposes an identified knowledge need, scarce information, and a workforce motivated by external incentive in a resource-rich environment. EBP, on the other hand, requires compliance with externally produced information for predominantly intrinsic reward, with high innovation costs in a resource-limited environment. A number of studies have identified some of the difficulties of knowledge sharing in the public sector [1, 6, 11, 16, 48, 116, 117]. Organisational theory may not transfer well into healthcare if EBP is viewed as a process of social and political control to promote compliance with centrally derived policy, rather than a generative process to make best use of available knowledge.

Conclusion

Assessing organisational absorptive and receptive capacity with the aim of improving organizational conditions is postulated as a first step in supporting a research informed decision-making culture. Foss [87] suggests the emergence of a new approach referred to as knowledge governance: the management of the mechanisms that mediate between the micro-processes of individual knowledge and the outcomes of organisational performance. But what would this mean in practice? The kinds of support which KM and OL tools include as standard, but that are not well reflected in existing tools to measure context in healthcare, would include effort to detect and support emergent and existing communities of practice; encourage and reward individuals and groups to ask questions; discuss and share ideas across knowledge communities; and support the progression, testing, and adopting of new ideas by embedding them in systems and processes.

The processes by which individual- and group-level knowledge are collated into organisational level capability to improve care are less clear. If social networks of individuals are to be facilitated to undertake repeated, ongoing, and routine uptake of evidence within their daily practice, we also need to extend our thinking even further toward considering the organisational contextual features that would support the collective sense-making processes of key knowledge workers.

References

Pope C, Robert G, Bate P, le May A, Gabbay J: Lost in translation: a multi-level case study of the metamorphosis of meanings and action in public sector organizational innovation. Public Admin. 2006, 84: 59-79. 10.1111/j.0033-3298.2006.00493.x.

Nicolini D: Managing knowledge in the healthcare sector: a review. Int J Manag Rev. 2008, 10: 245-263. 10.1111/j.1468-2370.2007.00219.x.

Rashman L, Withers E, Hartley J: Organizational Learning, Knowledge and Capacity: a systematic literature review for policy-makers, managers and academics. 2008, London: Department for Communities and Local Government

Carlile PR: Transferring, translating, and transforming: An integrative framework for managing knowledge across boundaries. Organ Sci. 2004, 15: 555-568. 10.1287/orsc.1040.0094.

Carlile PR: A pragmatic view of knowledge and boundaries: boundary objects in new product development. Organ Sci. 2002, 13: 442-455. 10.1287/orsc.13.4.442.2953.

Bate SP, Robert G: Knowledge management and communities of practice in the private sector: Lessons for modernizing the national health service in England and Wales. Public Admin. 2002, 80: 643-663. 10.1111/1467-9299.00322.

Newell S, Edelman L, Scarbrough H, Swan J, Bresnen M: 'Best practice' development and transfer in the NHS: the importance of process as well as product knowledge. Health Serv Manage Res. 2003, 16: 1-12. 10.1258/095148403762539095.

Dopson S, Fitzgerald L: Knowledge to Action: evidence-based healthcare in context. 2005, Oxford: Oxford University Press

Hartley J, Benington J: Copy and paste, or graft and transplant? Knowledge sharing through inter-organizational networks. Public Money Manage. 2006, 26: 101-108. 10.1111/j.1467-9302.2006.00508.x.

Rashman L, Downe J, Hartley J: Knowledge creation and transfer in the Beacon Scheme: improving services through sharing good practice. Local Government Studies. 2005, 31: 683-700. 10.1080/03003930500293732.

Martin GP, Currie G, Finn R: Reconfiguring or reproducing the intra-professional boundaries of expertise? Generalist and specialist knowledge in the modernization of genetics provision in England. International Conference on Organizational Learning, Knowledge and Capabilities; 28. 2008, University of Aarhus, Denmark, April

Haynes P: Managing Complexity in the Public Services. 2003, Maidenhead: Open University Press

Currie G, Waring J, Finn R: The limits of knowledge management for public services modernisation: the case of patient safety and service quality. Public Admin. 2007, 86: 363-385. 10.1111/j.1467-9299.2007.00705.x.

Ferlie E, Fitzgerald L, Wood M, Hawkins C: The nonspread of innovations: the mediating role of professionals. Acad Manage J. 2005, 48: 117-134.

Currie G, Finn R, Martin G: Accounting for the 'dark side' of new organizational forms: the case of healthcare professionals. Hum Relat. 2008, 61: 539-564. 10.1177/0018726708091018.

Currie G, Suhomlinova O: The impact of institutional forces upon knowledge sharing in the UK NHS: the triumph of professional power and the inconsistency of policy. Public Admin. 2006, 84: 1-30. 10.1111/j.0033-3298.2006.00491.x.

Currie G, Kerrin M: The limits of a technological fix to knowledge management: epistemological, political and cultural issues in the case of intranet implementation. Manage Learn. 2004, 35: 9-29. 10.1177/1350507604042281.

Department of Health: Report of the High Level Group on Clinical Effectiveness. 2007, London: Department of Health

Godin G, Belanger-Gravel A, Eccles M, Grimshaw J: Healthcare professionals' intentions and behaviours: a systematic review of studies based on social cognitive theories. Implement Sci. 2008, 3: 36. 10.1186/1748-5908-3-36.

Wensing M, Wollersheim H, Grol R: Organizational interventions to implement improvements in patient care: a structured review of reviews. Implement Sci. 2006, 1: 2. 10.1186/1748-5908-1-2.

Department of Health: A Guide to Promote a Shared Understanding of the Benefits of Local Managed Networks. 2005, London: Department of Health

Transitions Team Department of Health: The Way Forward: the NHS Institute for Learning, Skills and Innovation. 2005, London: Department of Health

Research and Development Directorate Department of Health: Best Research for Best Health: a new national health research strategy. 2006, London: Department of Health

Pablo AL, Reay T, Dewald JR, Casebeer AL: Identifying, enabling and managing dynamic capabilities in the public sector. J Manage Stud. 2007, 44: 687-708. 10.1111/j.1467-6486.2006.00675.x.

Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O: Diffusion of innovations in service organizations: Systematic review and recommendations. Milbank Q. 2004, 82: 581-629. 10.1111/j.0887-378X.2004.00325.x.

Cohen WM, Levinthal DA: Absorptive capacity – a new perspective on learning and innovation. Adm Sci Q. 1990, 35: 128-152. 10.2307/2393553.

Zahra SA, George G: Absorptive capacity: A review, reconceptualization, and extension. Acad Manage Rev. 2002, 27: 185-203. 10.2307/4134351.

Van den Bosch FAJ, van Wijk R, Volberda HW: Absorptive capacity: antecedents, models, and outcomes. The Blackwell Handbook of Organizational Learning and Knowledge Management. Edited by: Easterby-Smith M, Lyles MA. 2005, Oxford: Blackwell, 279-301.

Lane PJ, Koka BR, Pathak S: The reification of absorptive capacity: A critical review and rejuvenation of the construct. Acad Manage Rev. 2006, 31: 833-863.

Jones O: Developing absorptive capacity in mature organizations: the change agent's role. Manage Learn. 2006, 37: 355-376. 10.1177/1350507606067172.

Todorova G, Durisin B: Absorptive capacity: valuing a reconceptualization. Acad Manage Rev. 2007, 32: 774-786.

Van den Bosch FAJ, Volberda HW, de Boer M: Coevolution of firm absorptive capacity and knowledge environment: organizational forms and combinative capabilities. Organ Sci. 1999, 10: 551-568. 10.1287/orsc.10.5.551.

Jansen JJ, Van den Bosch FA, Volberda HW: Managing potential and realized absorptive capacity: How do organizational antecedents matter?. Acad Manage J. 2005, 48: 999-1015.

Fosfuri A, Tribó JA: Exploring the antecedents of potential absorptive capacity and its impact on innovation performance. Omega. 2008, 36: 173-187. 10.1016/j.omega.2006.06.012.

Easterby-Smith M, Graça M, Antonacopoulou E, Ferdinand J: Absorptive capacity: a process perspective. Manage Learn. 2008, 39: 483-501. 10.1177/1350507608096037.

Scarbrough H, Laurent S, Bresnen M, Edelman L, Newell S, Swan J: Learning from projects: the interplay of absorptive and reflective capacity. Organizational Learning and Knowledge 5th International Conference; 20. 2003, Lancaster University, May

Braadbaart O, Yusnandarshah B: Public sector benchmarking: a survey of scientific articles, 1990–2005. Int Rev Adm Sci. 2008, 74: 421-433. 10.1177/0020852308095311.

McColl A, Smith H, White P, Field J: General practitioners' perceptions of the route to evidence based medicine: a questionnaire survey. BMJ. 1998, 316: 361-365.

Newman K, Pyne T, Leigh S, Rounce K, Cowling A: Personal and organizational competencies requisite for the adoption and implementation of evidence-based healthcare. Health Serv Manage Res. 2000, 13: 97-110.

Feasey S, Fox C: Benchmarking evidence-based care. Paediatr Nurs. 2001, 13: 22-25.

Fanning MF, Oakes DW: A tool for quantifying organizational support for evidence-based practice change. J Nurs Care Qual. 2006, 21: 110-113.

Wallin L, Ewald U, Wikblad K, Scott-Findlay S, Arnetz BB: Understanding work contextual factors: A short-cut to evidence-based practice?. Worldviews Evid Based Nurs. 2006, 3: 153-164. 10.1111/j.1741-6787.2006.00067.x.

Baker GR, King H, MacDonald JL, Horbar JD: Using organizational assessment surveys for improvement in neonatal intensive care. Pediatrics. 2003, 111: e419-e425. 10.1542/peds.111.3.579.

Weiner BJ, Amick H, Lee SYD: Conceptualization and measurement of organizational readiness for change: a review of the literature in health services research and other fields. Med Care Res Rev. 2008, 65: 379-436. 10.1177/1077558708317802.

Helfrich CD, Li YF, Mohr DC, Meterko M, Sales AE: Assessing an organizational culture instrument based on the Competing Values Framework: exploratory and confirmatory factor analyses. Implement Sci. 2007, 2: 13. 10.1186/1748-5908-2-13.

Funk SG, Champagne MT, Wiese RA, Tornquist EM: BARRIERS: the Barriers to Research Utilization Scale. Appl Nurs Res. 1991, 4: 39-45. 10.1016/S0897-1897(05)80052-7.

Watson B, Clarke C, Swallow V, Forster S: Exploratory factor analysis of the research and development culture index among qualified nurses. J Clin Nurs. 2005, 14: 1042-1047. 10.1111/j.1365-2702.2005.01214.x.

Addicott R, McGivern G, Ferlie E: Networks, organizational learning and knowledge management: NHS cancer networks. Public Money Manage. 2006, 26: 87-94. 10.1111/j.1467-9302.2006.00506.x.

Gabbay J, le May A: Evidence based guidelines or collectively constructed "mindlines?" – Ethnographic study of knowledge management in primary care. Br Med J. 2004, 329: 1013-1016. 10.1136/bmj.329.7473.1013.

Tucker AL, Nembhard IM, Edmondson AC: Implementing new practices: An empirical study of organizational learning in hospital intensive care units. Manage Sci. 2007, 53: 894-907. 10.1287/mnsc.1060.0692.

Berta W, Teare GF, Gilbart E, Ginsburg LS, Lemieux-Charles L, Davis D, Rappolt S: The contingencies of organizational learning in long-term care: factors that affect innovation adoption. Health Care Manage Rev. 2005, 30: 282-292.

Finn R, Waring J: Organizational barriers to architectural knowledge and teamwork in operating theatres. Public Money Manage. 2006, 26: 117-124. 10.1111/j.1467-9302.2006.00510.x.

Reardon JL, Davidson E: An organizational learning perspective on the assimilation of electronic medical records among small physician practices. Eur J Inform Sys. 2007, 16: 681-694. 10.1057/palgrave.ejis.3000714.

Nutley S, Davies H, Walter I: Learning From Knowledge Management. 2004, University of St. Andrews, Research Unit for Research Utilisation

Estabrooks CA, Thompson DS, Lovely JJE, Hofmeyer A: A guide to knowledge translation theory. J Contin Educ Health Prof. 2006, 26: 25-36. 10.1002/chp.48.

Mitton C, Adair CE, McKenzie E, Patten SB, Perry BW: Knowledge transfer and exchange: review and synthesis of the literature. Milbank Q. 2007, 85: 729-768.

Woolf SH: The meaning of translational research and why it matters. JAMA-J Am Med Assoc. 2008, 299: 211-213. 10.1001/jama.2007.26.

Lockyer J, Gondocz ST, Thivierge RL: Knowledge translation: the role and place of practice reflection. J Contin Educ Health Prof. 2004, 24: 50-56. 10.1002/chp.1340240108.

Ghaye T: Building the Reflective Healthcare Organisation. 2008, Oxford: Blackwell

Lyles MA, Easterby-Smith M: Organizational learning and knowledge management: agendas for future research. The Blackwell Handbook of Organizational Learning and Knowledge Management. Edited by: Easterby-Smith M, Lyles MA. 2005, Oxford: Blackwell Publishing

Ricceri F: Intellectual Capital and Knowledge Management: strategic management of knowledge resources. 2008, London: Routledge

Lloria MB: A review of the main approaches to knowledge management. Knowledge Management Research & Practice. 2008, 6: 77-89. 10.1057/palgrave.kmrp.8500164.

Bali RK, Dwivedi AN: Healthcare knowledge management: issues, advances and successes. 2007, Berlin: Springer Verlag

Easterby-Smith M, Lyles MA: Introduction: Watersheds of organizational learning and knowledge management. The Blackwell Handbook of Organizational Learning and Knowledge Management. Edited by: Easterby-Smith M, Lyles MA. 2005, Oxford: Blackwell Publishing

Nonaka I, Takeuchi H: The Knowledge Creating Company: how Japanese companies create the dynamics of innovation. 1995, New York: Oxford University Press

Crossan MM, Lane HW, White RE: An organizational learning framework: From intuition to institution. Acad Manage Rev. 1999, 24: 522-537. 10.2307/259140.

Zollo M, Winter SG: Deliberate learning and the evolution of dynamic capabilities. Organ Sci. 2002, 13: 339-351. 10.1287/orsc.13.3.339.2780.

Boisot MH: The creation and sharing of knowledge. The Strategic Management of Intellectual Capital and Organizational Knowledge. Edited by: Choo CW, Bontis N. 2002, New York: Oxford University Press, 65-77.

Cook SDN, Brown JS: Bridging epistemologies: The generative dance between organizational knowledge and organizational knowing. Organ Sci. 1999, 10: 381-400. 10.1287/orsc.10.4.381.

Orlikowski WJ: Knowing in practice: enacting a collective capability in distributed organizing. Organ Sci. 2002, 13: 249-273. 10.1287/orsc.13.3.249.2776.

Nicolini D, Gherardi S, Yanow D: Knowing in Organizations: a practice-based approach. 2003, New York: M E Sharpe

Gherardi S: Organizational Knowledge: the texture of workplace learning. 2006, Oxford: Blackwell

Blackler F, Crump N, McDonald S: Organizing processes in complex activity networks. Organization. 2000, 7: 277-300. 10.1177/135050840072005.

Brown JS, Duguid P: Knowledge and organization: a social-practice perspective. Organ Sci. 2001, 12: 198-213. 10.1287/orsc.12.2.198.10116.

Blackler F: Knowledge, knowledge work, and organizations: an overview and interpretation. The Strategic Management of Intellectual Capital and Organizational Knowledge. Edited by: Choo CW, Bontis N. 2002, Oxford: Oxford University Press, 47-77.

Scarbrough H, Swan J: Discourses of knowledge management and the learning organization: their production and consumption. The Blackwell Handbook of Organizational Learning and Knowledge Management. Edited by: Easterby-Smith M, Lyles MA. 2005, Oxford: Blackwell

Marshall N: Cognitive and practice-based theories of organizational knowledge and learning: incompatible or complementary?. Manage Learn. 2008, 39: 413-435. 10.1177/1350507608093712.

Spender JC: Organizational learning and knowledge management: whence and whither?. Manage Learn. 2008, 39: 159-176. 10.1177/1350507607087582.

Chiva R, Alegre J: Organizational learning and organizational knowledge – toward the integration of two approaches. Manage Learn. 2005, 36: 49-68. 10.1177/1350507605049906.

Bapuji H, Crossan M, Jiang GL, Rouse MJ: Organizational learning: a systematic review of the literature. International Conference on Organizational Learning, Knowledge and Capabilities (OLKC). 2007, London, Ontario, Canada, 14 June 2007

Vera D, Crossan M: Organizational learning and knowledge management: towards an integrative framework. The Blackwell Handbook of Organizational Learning and Knowledge Management. Edited by: Easterby-Smith M, Lyles MA. 2005, Malden, MA: Blackwell

Nonaka I, Peltokorpi V: Objectivity and subjectivity in knowledge management: a review of 20 top articles. Knowledge and Process Management. 2006, 13: 73-82. 10.1002/kpm.251.

Kuhn T, Jackson MH: A framework for investigating knowing in organizations. Management Communication Quarterly. 2008, 21: 454-485. 10.1177/0893318907313710.

Sheffield J: Inquiry in health knowledge management. Journal of Knowledge Management. 2008, 12: 160-172. 10.1108/13673270810884327.

Savory C: Knowledge translation capability and public-sector innovation processes. International Conference on Organizational Learning, Knowledge and Capabilities (OLKC); 20. 2006, Coventry, University of Warwick, March

Mulgan G: Ready or Not? Taking innovation in the public sector seriously. 2007, London: NESTA

Foss NJ: The emerging knowledge governance approach: Challenges and characteristics. Organization. 2007, 14: 29-52. 10.1177/1350508407071859.

Martin B, Morton P: Organisational conditions for knowledge creation – a critical realist perspective. Annual Conference of the International Association for Critical Realism (IACR); 11. 2008, King's College, London, July

Kontos PC, Poland BD: Mapping new theoretical and methodological terrain for knowledge translation: contributions from critical realism and the arts. Implement Sci. 2009, 4: 1. 10.1186/1748-5908-4-1.

Chiva R, Alegre J, Lapiedra R: Measuring organisational learning capability among the workforce. Int J Manpower. 2007, 28: 224-242. 10.1108/01437720710755227.

van Aken JE: Management research based on the paradigm of the design sciences: the quest for field-tested and grounded technological rules. J Manage Stud. 2004, 41: 219-246. 10.1111/j.1467-6486.2004.00430.x.

Newell S, Robertson M, Scarbrough H, Swan J: Making Knowledge Work. 2002, London: Palgrave

Canadian Health Services Research Foundation: Is research working for you? A self assessment tool and discussion guide for health services management and policy organizations. 2005, Ottawa, Canadian Health Services Research Foundation

Templeton GF, Lewis BR, Snyder CA: Development of a measure for the organizational learning construct. J Manage Inform Syst. 2002, 19: 175-218.

Goh S, Richards G: Benchmarking the learning capability of organizations. European Management Journal. 1997, 15: 575-583. 10.1016/S0263-2373(97)00036-4.

Jerez-Gomez P, Cespedes-Lorente J, Valle-Cabrera R: Organizational learning capability: a proposal of measurement. J Bus Res. 2005, 58: 715-725. 10.1016/j.jbusres.2003.11.002.

van den Hooff B, Vijvers J, de Ridder J: Foundations and applications of a knowledge management scan. European Management Journal. 2003, 21: 237-246. 10.1016/S0263-2373(03)00018-5.

Metcalfe CJ, Hughes C, Perry S, Wright J, Closs J: Research in the NHS: a survey of four therapies. British Journal of Therapy and Rehabilitation. 2000, 7: 168-175.

Champion VL, Leach A: The relationship of support, availability, and attitude to research utilization. J Nurs Adm. 1986, 16: 19-

Champion VL, Leach A: Variables related to research utilization in nursing: an empirical investigation. J Adv Nurs. 1989, 14: 705-710. 10.1111/j.1365-2648.1989.tb01634.x.

Alcock D, Carroll G, Goodman M: Staff nurses' perceptions of factors influencing their role in research. Can J Nurs Res. 1990, 22: 7-18.

Sveiby KE, Simons R: Collaborative climate and effectiveness of knowledge work – an empirical study. Journal of Knowledge Management. 2002, 6: 420-433. 10.1108/13673270210450388.

American Productivity and Quality Center (APQC): The Knowledge Management Assessment Tool (KMAT). 2008

Hult GTM, Ferrell OC: Global organizational learning capacity in purchasing: Construct and measurement. J Bus Res. 1997, 40: 97-111. 10.1016/S0148-2963(96)00232-9.

Horsley JA, Crane J, Bingle JD: Research utilization as an organizational process. J Nurs Adm. 1978, 4-6.

Brett JDL: Organizational and Integrative Mechanisms and Adoption of Innovation by Nurses. PhD Thesis. 1986, University of Pennsylvania, Philadelphia

Clarke HF: A.B.C. Survey. 1991, Vancouver, Registered Nurses Association of British Columbia

Crane J: Factors Associated with the Use of Research-Based Knowledge in Nursing. PhD Thesis. 1990, Ann Arbor, University of Michigan

Darroch J: Developing a measure of knowledge management behaviors and practices. Journal of Knowledge Management. 2003, 7: 41-54. 10.1108/13673270310505377.

Lopez SP, Peon JMM, Ordas CJV: Managing knowledge: the link between culture and organizational learning. Journal of Knowledge Management. 2004, 8: 93-104. 10.1108/13673270410567657.

Grimshaw JM, Shirran L, Thomas R, Mowatt G, Fraser C, Bero L, Grilli R, Harvey E, Oxman A, O'Brien MA: Changing provider behavior: an overview of systematic reviews of interventions. Med Care. 2001, 39: II-2-II-45. 10.1097/00005650-200108002-00002.

Swan J: Managing knowledge for innovation: production, process and practice. Knowledge Management: from knowledge objects to knowledge processes. Edited by: McInerney CR, Day RE. 2007, Berlin: Springer

Gerrish K, Ashworth P, Lacey A, Bailey J, Cooke J, Kendall S, McNeilly E: Factors influencing the development of evidence-based practice: a research tool. J Adv Nurs. 2007, 57: 328-338. 10.1111/j.1365-2648.2006.04112.x.

Kelly DR, Lough M, Rushmer R, Wilkinson JE, Greig G, Davies HTO: Delivering feedback on learning organization characteristics – using a Learning Practice Inventory. J Eval Clin Pract. 2007, 13: 734-740. 10.1111/j.1365-2753.2006.00746.x.

McCormack B, McCarthy G, Wright J, Coffey A, Slater P: Development of the Context Assessment Index (CAI). 2008, Northern Ireland, University of Ulster, [http://www.science.ulster.ac.uk/inr/pdf/ContinenceStudy.pdf]

Taylor WA, Wright GH: Organizational readiness for knowledge sharing: challenges for public sector managers. Information Resources Management Journal. 2004, 17: 22-37.

Matthews J, Shulman AD: Competitive advantage in public-sector organizations: explaining the public good/sustainable competitive advantage paradox. J Bus Res. 2005, 58: 232-240. 10.1016/S0148-2963(02)00498-8.

Acknowledgements

We wish to acknowledge the support of the North West Strategic Health Authority for funding the continuation of this work into the development and piloting of a benchmark tool for the organisational context of EBP.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

BF, LHT and PB undertook searching, data extraction and categorisation. LP undertook external auditing of data analysis. BF drafted the final paper. CRB, LP and HR contributed to the conceptual design of the overall project, and acted as critical readers for this paper. All authors approved the final version of the manuscript.

Electronic supplementary material

13012_2008_160_MOESM1_ESM.doc

Additional file 1: Measurement tools identified by the search. Titles and bibliographic reference for all measurement tools identified as potentially relevant. (DOC 102 KB)

13012_2008_160_MOESM2_ESM.doc

Additional file 2: Details of development and psychometric testing of included measurement tools. Provides background information on psychometric properties of included measurement tools. (DOC 72 KB)

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

French, B., Thomas, L.H., Baker, P. et al. What can management theories offer evidence-based practice? A comparative analysis of measurement tools for organisational context. Implementation Sci 4, 28 (2009). https://doi.org/10.1186/1748-5908-4-28