Abstract

Background

The aim of the present study is to demonstrate, through tests with healthy volunteers, the feasibility of assisting individuals with neurological disorders via a portable assistive technology for the upper extremities (UE). For this purpose the task of independently drinking a glass of water was selected, as it is one of the most basic and vital activities of daily living and is unfortunately not achievable by individuals severely affected by stroke.

Methods

To accomplish the aim of this study we introduce a wearable and portable system consisting of a novel lightweight Robotic Arm Orthosis (RAO), a Functional Electrical Stimulation (FES) system, and a simple wireless Brain-Computer Interface (BCI). This system is able to process electroencephalographic (EEG) signals and translate them into motions of the impaired arm. Five healthy volunteers participated in this study and were asked to simulate stroke patient symptoms with no voluntary control of their hand and arm. The setup was designed such that the volitional movements of the healthy volunteers’ UE did not interfere with the evaluation of the proposed assistive system. The drinking task was split into eleven phases, of which seven were executed by detecting EEG-based signals through the BCI. The user was asked to imagine the UE motion related to the specific phase of the task to be assisted. Once the BCI detected the imagined motion, the phase was initiated. Each phase was then terminated when the BCI detected the volunteers clenching their teeth.

Results

The drinking task was completed by all five participants with an average time of 127 seconds and a standard deviation of 23 seconds. The incremental motions of elbow extension and elbow flexion were the primary limiting factors for completing this task faster. The BCI control along with the volitional motions also depended upon the users’ pace, hence the noticeable deviation from the average time.

Conclusion

Through tests conducted with healthy volunteers, this study showed that our proposed system has the potential for successfully assisting individuals with neurological disorders and hemiparetic stroke to independently drink from a glass.

Background

Much time and effort in recent years has been devoted to restoring function to limbs paralyzed by hemiparetic stroke [1–3]. Traditional rehabilitative techniques require numerous sessions with a physiotherapist. These sessions are limited by the time and capabilities of the therapist; this in turn possibly limits the recovery of the patient [2]. Robotic aided rehabilitation [3–7] removes many of these limitations by performing the same rehabilitative and assistive motions accurately and without fatigue of the therapist. This potentially allows greater access to rehabilitative care for post-stroke patients. An example is the ArmeoPower [8], a commercially available rehabilitative exercise device which provides intelligent arm support in a 3D workspace for individuals with neurological disorders.

Another popular method, based on a different form of technology, involves electrical stimulation of the user. Electrical stimulation of muscle groups has been used both as a purely rehabilitative technique to restore strength to atrophied muscles and to manipulate the paralyzed limbs of stroke patients and tetraplegics [9, 10]. A voltage difference generated between pairs of electrodes causes safe levels of current to flow through the region, activating the respective muscle groups. A recent study [11] proposed using electrical therapy for performing tasks of daily living.

In addition to robot-aided rehabilitation and electrical stimulation therapy, brain computer interfaces have shown promising results in aiding stroke recovery [12–14]. Since the damage resulting from hemiparetic stroke is specifically limited to the brain itself, it is an intriguing solution to use a brain computer interface to help induce neuroplasticity [15]. Research has shown that simply imagining movement of a limb activates the same regions of the motor cortex as actually performing the movement [16]. Moreover, mental practice alone post stroke can help produce functional improvement [17].

Unfortunately each method alone has associated disadvantages, which prevent assisting activities of daily living and performing rehabilitation exercises in a comfortable setting such as the patient's home. Both rehabilitative and assistive robots are traditionally large and cumbersome, which makes them impractical to use outside of the laboratory environment [18]. The ArmeoPower, a prime example, is not portable and is currently not affordable for most patients [19]. With regard to FES, there are different concerns, the primary one being fatigue in the respective muscle groups, which may occur very quickly [20]. Standard brain computer interfaces face a similar setback: they cannot be used outside of the laboratory environment due to their high cost and lengthy setup and training times.

Despite their disadvantages, each of the three technologies shows distinct promising aspects, which suggests combining them. Previous studies have in fact explored this concept and introduced combinational systems [21–28]. The work performed by Pfurtscheller et al. [29] is particularly relevant, as it investigated a BCI-controlled FES system used to restore hand grasp function in a tetraplegic volunteer. Another relevant study incorporating both BCI and FES focused on elbow extension and flexion [30]. A more thorough rehabilitative study used a BCI system to control a neuroprosthesis [31].

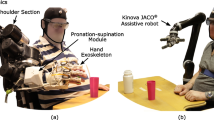

In this article, we propose a unique wearable and portable system that combines all three technologies for assisting functional movement of the upper limb and that can potentially be used outside of the laboratory environment. Our proposed system consists of a wearable robotic arm orthosis (RAO) with functional electrical stimulation (FES), which is controlled through a BCI system. The RAO is an exoskeleton capable of providing active force assistance for elbow flexion/extension and forearm pronation/supination. The RAO is made of lightweight plastic with a compact design, yet is powerful enough to effectively assist the arm motion. The RAO does not assist shoulder motion because 88% of stroke patients have voluntary control over this region [32]. The FES is incorporated with the RAO to assist the hand in grasping/releasing an object. The use of FES is limited to the hand motion only, which reduces fatigue and maintains the compactness of the system. Lastly, we seek to control the entire system using an affordable and portable BCI, which comprises an inexpensive electroencephalography headset (Emotiv EEG headset) to acquire the brain signals and an open-source software processing system, BCI2000. In order to evaluate the proposed system, a functional task of daily living, drinking a cup of water [33], is investigated. The drinking task consists of reaching for and grasping a cup from a table, taking a drink, and returning the cup to the table.

Methods

Robotic Arm Orthosis (RAO)

The goal was to design a system that was wearable and portable for enabling its future use in most activities of daily living (ADL). The robotic arm orthosis was developed to actuate the user’s elbow in flexion/extension as well as forearm pronation/supination. All structural components were fabricated out of an ABS derivative using rapid prototyping techniques.

The elbow joint, as seen in Figure 1, was designed to generate 10 Nm of output torque by a brushless DC motor with customized gearbox, which is sufficient to lift the forearm if the user doesn't apply strong resistance force. Due to safety considerations the range of motion of the elbow assembly was mechanically limited to 110 degrees.

The pronation/supination joint of the RAO, as seen in Figure 1, consists of two semi-cylindrical interlocking components. The upper component is fixed to the elbow joint while the lower component rotates freely within it and affixes to the user’s wrist. The lower component has a flexible chain wrapped around the outer surface that meshes with a pair of aluminum sprockets affixed to a motor and shaft assembly contained within the upper component. The system is capable of producing 75 degrees of rotation in both pronation and supination for a total of 150 degrees of movement. A brushed DC motor is coupled to a semi-cylindrical component to generate torque on the wrist.

As shown in Figure 1, the device is worn on the user’s right arm rather than the left, as most functional tasks are conducted with the right arm. Further, the feasibility scope of the study allowed us to assume that the right extremity was the one affected by the stroke. The orthosis is affixed using two straps across the upper arm, one strap across the proximal end of the forearm, one strap across the distal end of the forearm, and a final strap (yellow color in Figure 1) going over the user’s right shoulder and underneath the left arm. Donning the device takes less than 30 seconds when aided by another party and less than 60 seconds for an unaided healthy individual. Most of the weight is supported by the shoulder strap when the arm is relaxed at the user’s side. Both joints are positioned so as not to interfere with the user’s natural arm position whether relaxed or while performing tasks. The portability of the battery operated system allows the device to be used either as a rehabilitative aid in the laboratory, in the comfort of the patient’s home, or potentially as a functional device wherever the user may desire.

Functional electrical stimulation

Stroke patients often have spasticity in their hands, which is an involuntary constant contraction of the muscles [34]. They are unable to voluntarily open their hands but are instead able to contract, as they desire. Therefore, hand opening was achieved by placing two electrodes on the distal and proximal ends of the extensor digitorum muscles of the forearm [35]. This provided the necessary contraction of the muscles to ensure the hand opened to a minimum degree required to grasp the cup.

For the purposes of this study an EMPI300 functional electrical stimulator from DJO Global was chosen in order to facilitate hand opening in patients who are otherwise incapable. The device itself is portable, battery operated, and capable of producing up to 50 volts at 100 mA. In addition, it is one of the few devices in which an external hand or foot switch may be attached to trigger stimulation. Extra circuitry was developed to interface the FES unit with the computer for control. When stimulation is externally triggered, the device can be set to ramp up to a predefined intensity within a preset period of time.

Brain computer interface

In order to maintain the portability of the entire system, a wearable wireless EEG headset from EMOTIV was chosen to acquire data for the brain computer interface. The EMOTIV headset has 14 active electrodes operating at 2048 Hz before filtering. Amplification, buffering, and filtering are performed in the headset itself before the data are transmitted over a Bluetooth connection at 128 samples per second to a HP ENVY m6 laptop computer running an AMD A10 processor at 2.3 GHz with 8.0 GB of random-access memory (RAM). Hardware digital notch filters at 50 Hz and 60 Hz were utilized to filter out power line interference. Additional processing comprised software filters, which include a spatial filter and a linear classifier. The objective of the spatial filter was to focus on the activity of the electrodes located over the sensorimotor cortex, while the linear classifier was used to output actions corresponding to inputs.

For the purpose of this study we used the BCI2000 software, an open source system capable of data acquisition, stimulus presentation, and brain monitoring [36]. The four prominent modules accessible in this software include the source module (for data acquisition and storage), the signal processing unit, a user application, and the operator interface. BCI2000 can use sensorimotor rhythm patterns to discriminate between motor movement and relaxation states. The sensorimotor rhythms were of significance in this project, as alterations in the frequency and amplitude of these waves dictated actuation of the orthosis and FES. Sensorimotor rhythms consist of waves in the frequency range of 7 – 13 Hz (i.e. μ) and 13 – 30 Hz (i.e. β) and are evident in most adults, typically around the primary sensorimotor cortices [37]. A decrease in the amplitude of the μ and/or β rhythm wave, known as Event Related Desynchronization (ERD) [38], occurs upon both motor movement and imagined motor movement. Identifying this change then allowed us to classify the action as an imagined motor movement and activate the corresponding actuator.
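The ERD effect can be expressed as a relative change in band power. The following is a minimal sketch under stated assumptions, not the BCI2000 processing chain: it estimates μ-band (7 – 13 Hz) power with a plain discrete Fourier transform on a signal sampled at 128 samples per second and reports the percentage change against a rest reference. The function names, the one-second window, and the synthetic sinusoids are hypothetical and serve only as illustration.

```python
import cmath
import math

FS = 128  # samples per second delivered by the headset (from the text)


def band_power(samples, low_hz, high_hz, fs=FS):
    """Mean squared DFT magnitude over bins whose frequency lies in [low_hz, high_hz]."""
    n = len(samples)
    total, count = 0.0, 0
    for k in range(1, n // 2):
        freq = k * fs / n
        if low_hz <= freq <= high_hz:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                        for i, x in enumerate(samples))
            total += abs(coeff) ** 2 / n ** 2
            count += 1
    return total / count if count else 0.0


def erd_percent(reference_power, active_power):
    """Relative power change in percent; negative values indicate desynchronization."""
    return 100.0 * (active_power - reference_power) / reference_power


def sine(freq_hz, amplitude, n=FS, fs=FS):
    """Hypothetical test signal: a pure sinusoid lasting n samples."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n)]


rest = sine(10, 2.0)      # synthetic 10 Hz mu rhythm at rest
imagery = sine(10, 1.0)   # synthetic mu rhythm with halved amplitude during imagery
mu_rest = band_power(rest, 7, 13)
mu_imagery = band_power(imagery, 7, 13)
change = erd_percent(mu_rest, mu_imagery)
```

Because power scales with the square of amplitude, halving the amplitude of the synthetic rhythm reduces the band power to one quarter of the reference, which this measure reports as a strongly negative change.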

One of the most prominent disadvantages of all BCI systems is the difficulty in differentiating between more than three classes in real-time sessions [39]. Classification accuracy in BCI systems decreases with the addition of classes. High-end BCI systems use complex algorithms and EEG caps with over 100 sensors in order to increase data resolution and therefore classification accuracy. This comes at the expense of cost, setup time, and portability [40]. The highlight of our BCI is therefore the ability to dynamically activate and deactivate pre-trained classes in real-time. This allows us to configure the system so as to minimize the number of classes that must be distinguished at any given moment. Thus, the system was designed to distinguish between only two cognitive classes (rest and motor imagery) and one artifact class (jaw clench).
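Dynamic activation of pre-trained classes can be pictured as masking the classifier outputs so that only the classes relevant to the current phase compete. The sketch below is an illustrative assumption rather than the authors' implementation: the class names, the scores, and the 0.5 decision threshold are all hypothetical.

```python
def select_class(scores, active_classes, threshold=0.5):
    """Return the best-scoring active class, or "rest" if none reaches the threshold.

    scores: dict mapping every pre-trained class name to a classifier score.
    active_classes: names enabled for the current phase; all others are ignored.
    """
    candidates = {name: s for name, s in scores.items() if name in active_classes}
    if not candidates:
        return "rest"
    best = max(candidates, key=candidates.get)
    return best if candidates[best] >= threshold else "rest"


# Hypothetical scores for five pre-trained imagery classes plus the clench artifact.
scores = {
    "elbow_extension": 0.62,
    "elbow_flexion": 0.71,
    "hand_open": 0.40,
    "pronation": 0.15,
    "supination": 0.10,
    "jaw_clench": 0.05,
}

# During an elbow extension phase only one imagery class and the clench are enabled,
# so the higher-scoring but inactive "elbow_flexion" class cannot trigger a motion.
decision = select_class(scores, {"elbow_extension", "jaw_clench"})
```

With this gating, the decision at any moment stays a choice between rest, one imagery class, and the clench artifact, regardless of how many classes were trained.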

Since the jaw clench may interfere with other motions of the jaw during activities of daily living, a preliminary test to assess the robustness of classifying this artifact was performed. Specifically, a test was designed to determine the accuracy of the clench artifact in which a volunteer was asked to alternate between talking, resting, eating, swallowing, and jaw clenching. The goal was to determine the accuracy of classifying clenching versus the other four jaw conditions. A two-class problem was therefore formulated, in which one class was clenching and the other included talking, resting, eating and swallowing. Talking consisted of repeating an arbitrarily chosen phrase (i.e. "The quick brown fox jumped over the lazy dog", which includes a large number of letters of the alphabet); clenching of the jaw was performed by firmly grinding the rear molar teeth; resting was simply no talking and no jaw motions; eating consisted of chewing some food (a banana was arbitrarily selected); and swallowing entailed either gulping saliva or swallowing the chewed food. Figure 2 shows the EEG voltage-time signal visible during the five different motions considered in this test, namely resting, talking, eating, swallowing and clenching. In the performed test, a volunteer was asked to perform each of the five tasks for 10 seconds. The order of each task was randomized. Each 10-second phase was repeated 10 times for a total of 50 times. The entire procedure was performed five times. The average real-time classification accuracy obtained with the inexpensive headset was 98%. Results therefore suggest that jaw clenching can be robustly distinguished from other motions of the jaw typically performed in activities of daily living.

Drinking task experiment

Experimental setup

As illustrated in Figure 3, the system setup required the user to wear the EEG headset on their head, the RAO on their arm, and the FES electrodes on their lower arm. An embedded potentiometer in the wrist pronation/supination joint provided measurable data on the wrist rotation angle, while the encoder in the elbow motor was used to determine the elbow angle. A custom designed glove with a fitted bend sensor along the middle finger was also worn by the individuals to track their hand configuration. A curled fist was calibrated to be 90° while a fully open hand was 0°. Data for measuring shoulder movement was recorded via a Microstrain Gyroscope affixed to the upper arm of the RAO. Vertical acceleration indicated motion of the arm as it was lifted up or brought back down to rest on the table.
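The two-point glove calibration (0° for a fully open hand, 90° for a curled fist) can be sketched as a linear map from raw sensor readings to flexion angle. This is only an illustration: the linear sensor response, the raw count values, and the function names are assumptions, since the article does not describe the sensor electronics.

```python
def make_bend_calibration(raw_open, raw_fist, angle_open=0.0, angle_fist=90.0):
    """Build a linear map from raw bend-sensor readings to finger flexion in degrees."""
    if raw_open == raw_fist:
        raise ValueError("calibration readings must differ")
    slope = (angle_fist - angle_open) / (raw_fist - raw_open)

    def to_degrees(raw):
        angle = angle_open + slope * (raw - raw_open)
        # Clamp so that sensor noise cannot report angles outside the calibrated range.
        return max(angle_open, min(angle_fist, angle))

    return to_degrees


# Hypothetical raw counts recorded with the hand fully open and with a curled fist.
to_degrees = make_bend_calibration(raw_open=200, raw_fist=800)
half_closed = to_degrees(500)
```

A reading halfway between the two calibration points maps to roughly 45°, and readings beyond either calibration point are clamped to the 0° to 90° range.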

BCI familiarization and demonstration

Prior to experimentation all users were first introduced to the drinking task protocol, which consists of the steps shown in Figures 4A-4H. The trial consisted of the presentation of Figures 4A through 4H, with a blank white screen in between transitions. Each image and blank screen was displayed for 5 seconds, during which the volunteers were asked to imagine the corresponding motion for each displayed action and to hold a neutral thought for the blank screen. This procedure was completed 2 times, after which a feature plot (Figure 5) was generated. Parameters for the real-time task were configured based on the brain signal features that differed the most between the two thoughts. As expected, the most distinguishable features were in the mu and beta band over the left sensorimotor cortex. Following this initial phase, the volunteers were asked to demonstrate their BCI control skill. The objective during the demonstration phase was to navigate the virtual cursor towards a virtual target as shown on the screen (see Figure 6). The target would either be visible at the top of the screen or to the right of the screen. Control of the cursor in the vertical direction towards the top target was induced by imagining an active thought, while a facial clench controlled horizontal movement of the cursor towards the target on the right side of the screen. A neutral thought caused the virtual cursor to remain stationary.

Drinking Task Protocol. Subjects were provided visual cues during the training phase to assist them in formulating their cognitive thoughts for each task. (A) Elbow extension. (B) Hand open. (C) Hand close. (D) Elbow flexion. (E) Supination. (F) Pronation. (G) Elbow extension. (H) Hand open.

A maximum duration of 5 seconds was provided to navigate the virtual cursor towards the target. The user was asked to demonstrate proficient BCI control (at least 80% accuracy) for each cognitive phase (elbow extension, hand open, elbow flexion, wrist pronation, wrist supination), before the subject was permitted to proceed onto the next segment of the experiment. In the case of extremely poor performance (accuracy less than 80%), another session at a later date would be conducted before the subject would be withdrawn from the study. This would be a rare possibility given that a similar field study conducted at an exposition in Austria with ninety-nine subjects indicated positive results [41].

FES intensity tuning

Following the BCI operation, the intensity of the FES system had to be tuned for each volunteer. Symmetrical biphasic pulses at a fixed frequency of 25 Hz were applied to all participants. This provided sufficient contraction of the digitorum muscles responsible for opening the hand. Studies have shown that higher frequencies (50 Hz) result in rapid fatigue [42], while low frequencies (15 Hz) are not adequate to recruit motor nerve units and only affect the sensory nerves [43]. The pulse width was also fixed at 200 μs for all subjects, leaving the intensity as the only variable parameter. The intensity of the stimulation was tailored to each individual and was determined prior to initiating tests. The chosen values, as shown in Table 1, were programmed into the system and consequently applied during the experiment. A ramp up time of 1.0 second was followed by continuous stimulation until the individual clenched their jaw to indicate termination of the task. No complaints of fatigue were expressed by the volunteers at any time during the experiment.
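The stimulation timing implied by these settings can be sketched as follows. The 25 Hz rate and the 1.0 second ramp come from the text; the linear ramp shape, the target intensity of 30 (arbitrary device units), and the function names are assumptions for illustration and do not describe the stimulator's firmware.

```python
PULSE_RATE_HZ = 25   # fixed stimulation frequency (from the text)
RAMP_TIME_S = 1.0    # ramp-up time before continuous stimulation (from the text)


def intensity_at(t_s, target, ramp_s=RAMP_TIME_S):
    """Stimulation intensity at time t_s, assuming a linear ramp up to the target."""
    if t_s <= 0:
        return 0.0
    return target * min(t_s / ramp_s, 1.0)


def pulse_schedule(duration_s, target, rate_hz=PULSE_RATE_HZ):
    """List (onset time in seconds, intensity) for each pulse within duration_s."""
    count = int(round(duration_s * rate_hz))
    return [(i / rate_hz, intensity_at(i / rate_hz, target)) for i in range(count)]


# Hypothetical per-participant target intensity in arbitrary device units.
schedule = pulse_schedule(duration_s=2.0, target=30.0)
pulses_during_ramp = sum(1 for onset, _ in schedule if onset < RAMP_TIME_S)
```

At 25 Hz the pulses are spaced 40 ms apart, so 25 pulses fall within the one-second ramp before the intensity settles at its target value.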

Drinking task protocol

The protocol for the drinking task was split up into eleven sections (Figure 7), of which seven required the BCI and the rest consisted of voluntary movements. The voluntary movements were clenching of the jaw, hand contraction, and shoulder movement all of which can be performed by the majority of the stroke patients as discussed earlier. The transition from each section to the next required the subject to perform a clenching of the jaw. This artifact was used as it could be detected with high accuracy through the Emotiv EEG headset. Clenching of the jaw signified the end of the present phase and initiation of the next one.

Upon initialization of the drinking task, the elbow joint of the RAO automatically rotated to a “rest” position in which the user’s forearm was approximately parallel with the ground while resting on the table. The first motion of elbow extension (Figure 4A) was initiated by an imagined movement of the user extending their arm toward the cup. Once the thought was detected by the BCI, the elbow joint angle increased by a fixed increment, which was empirically selected to be 18° in this application. Repetition of the thought caused another incremental increase in the elbow joint angle. Once the volunteers were satisfied with their degree of elbow extension, they clenched their jaw to move to the second phase. The second phase (Figure 4B) entailed opening the user’s hand by electrical stimulation. The electrical stimulation was initiated via an imagined hand open thought. A jaw clench turned off the FES, which initiated the third phase of the task (Figure 4C). The user grasped the cup volitionally and the phase was completed once a finger flexion angle of 15° was detected. The angle of 15° was empirically selected. The fourth phase (Figure 4D) prompted the user to flex their elbow so as to bring their arm towards their body. Increments of 18° were also used in this phase, which was terminated via a clenching of the jaw. The fifth phase was to bring the hand up towards the mouth. This required the user to utilize their shoulder voluntarily. Clenching of the jaw was used to indicate the end of the current phase. The sixth phase (Figure 4E) was wrist pronation, once again triggered by an imagined movement and terminated via a jaw clench. During this interval the user was expected to drink from the cup, but this segment was omitted so as to minimize the risk of any fluid spillage. Instead the users simply touched the cup to their lips. The seventh phase (Figure 4F) was wrist supination, actuated in a similar manner but by imagining a supination motion. Each wrist rotation was empirically selected to be 60°. The eighth phase required the user to return their arm to the table using their shoulder and to clench their jaw when done. The ninth phase (Figure 4G) was once again elbow extension, but this time with the cup in the hand. The corresponding imagined thought was the trigger mechanism. Incremental extension was terminated by a jaw clench. The tenth phase (Figure 4H) required the user to place the cup on the table by activating the FES unit. Once the user’s hand was opened, a clench terminated this phase and turned off the FES. The eleventh and final phase simply required the user to voluntarily clench their hand. This motion indicated successful completion of the drinking task.
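The eleven-phase sequence can be summarized as a table-driven state machine. The sketch below is an assumption-laden illustration, not the authors' control software: the phase order, triggers, and the 18° elbow increment follow the description above, while the event names (`imagery`, `jaw_clench`, `finger_flexion_15deg`, `hand_closed`) and the `run_protocol` helper are hypothetical.

```python
from dataclasses import dataclass

ELBOW_STEP_DEG = 18  # elbow increment per detected imagery event (from the text)


@dataclass(frozen=True)
class Phase:
    number: int
    action: str
    trigger: str      # "imagery" for BCI-driven phases, "volitional" otherwise
    terminator: str   # event that ends the phase


PROTOCOL = [
    Phase(1, "elbow extension", "imagery", "jaw_clench"),
    Phase(2, "hand open (FES)", "imagery", "jaw_clench"),
    Phase(3, "hand close", "volitional", "finger_flexion_15deg"),
    Phase(4, "elbow flexion", "imagery", "jaw_clench"),
    Phase(5, "raise hand to mouth", "volitional", "jaw_clench"),
    Phase(6, "wrist pronation", "imagery", "jaw_clench"),
    Phase(7, "wrist supination", "imagery", "jaw_clench"),
    Phase(8, "return arm to table", "volitional", "jaw_clench"),
    Phase(9, "elbow extension with cup", "imagery", "jaw_clench"),
    Phase(10, "hand open (FES)", "imagery", "jaw_clench"),
    Phase(11, "hand close", "volitional", "hand_closed"),
]


def run_protocol(events):
    """Replay event names; return (phases completed, elbow increments per phase)."""
    index = 0
    elbow_steps = {}
    for event in events:
        if index >= len(PROTOCOL):
            break
        phase = PROTOCOL[index]
        if event == phase.terminator:
            index += 1
        elif event == "imagery" and phase.trigger == "imagery" and "elbow" in phase.action:
            elbow_steps[phase.number] = elbow_steps.get(phase.number, 0) + 1
    return index, elbow_steps


bci_phases = [p.number for p in PROTOCOL if p.trigger == "imagery"]
# Three imagery detections followed by a clench: the elbow extends by 3 * 18 degrees.
completed, steps = run_protocol(["imagery", "imagery", "imagery", "jaw_clench"])
extension_deg = steps[1] * ELBOW_STEP_DEG
```

Listing the phases as data makes it easy to check that seven of the eleven phases are BCI-driven and that every phase has exactly one terminating event.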

Participants

The goal of this study was to determine the capabilities of the complete RAO/FES/BCI system by performing a functional drinking task with healthy individuals simulating stroke patients. The users were asked to simulate the common spastic condition and allowed shoulder movements which parallel the abilities of stroke patients. The volunteers were also encouraged to not provide any volitional movement necessary for the task.

It should however be noted that possible undesired volitional movements of the participants could not compromise the validity of the performed tests. In fact, the RAO was not back-drivable, and therefore did not allow movements of the elbow and pronation/supination without correct use of the BCI. In addition, electromyographic (EMG) signals were also used to monitor the activity of the different muscles of the participants’ hand to make sure they did not interfere during the phase in which FES was used to clench their hand.

Five healthy individuals (mean age equal to 21 ± 1 yr) with no prior experience with EEG-based BCI systems volunteered to participate in the research (project approved by the Office of Research Ethics, Simon Fraser University).

Results and discussion

Drinking task results

Once the volunteers demonstrated competency in controlling their μ rhythm brain activity, they were then asked to perform a complete drinking task motion. A snapshot of the real-time data captured over the sensorimotor cortex during the elbow extension phase of the drinking task illustrates the ERD effect (Figure 8). A decrease in the amplitude of the mu band and the beta band was visible prior to each motion for all subjects. Rather than specifying a constant numerical threshold for the amplitude, the system computed the average EEG power decrease relative to a reference value over fixed time intervals. This approach compensated for each individual and eliminated the need for any manual adjustments. Data from the elbow joint, middle finger joint, wrist, and shoulder were all recorded during their respective active phases and are illustrated in Figures 9A – 9E. Each BCI phase of the task is indicated with a different shade of green while the un-shaded parts are the volitional movements. Additional numbers are labeled to indicate the exact phase sequences which were defined earlier.
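A relative criterion of this kind can be sketched as below. The article does not give the window length, the required drop, or how the reference was obtained, so the four-sample window, the 30% drop, the rest-based reference, and the synthetic power values are all assumptions chosen for illustration.

```python
def rest_reference(rest_samples):
    """Per-user reference: mean band power recorded while the user is at rest."""
    return sum(rest_samples) / len(rest_samples)


def detect_erd(power_samples, reference, window=4, drop_fraction=0.3):
    """Return start indices of non-overlapping windows whose mean power falls
    at least drop_fraction below the reference."""
    hits = []
    for start in range(0, len(power_samples) - window + 1, window):
        mean_power = sum(power_samples[start:start + window]) / window
        if mean_power <= reference * (1.0 - drop_fraction):
            hits.append(start)
    return hits


# Synthetic band-power values (arbitrary units) for two hypothetical users whose
# absolute power levels differ by a factor of ten.
user_a_rest = [10.0, 11.0, 9.0, 10.0]
user_a_task = [10.0, 10.5, 9.5, 10.0, 6.0, 5.5, 6.5, 6.0]
user_b_rest = [1.0, 1.1, 0.9, 1.0]
user_b_task = [1.0, 1.05, 0.95, 1.0, 0.6, 0.55, 0.65, 0.6]

hits_a = detect_erd(user_a_task, rest_reference(user_a_rest))
hits_b = detect_erd(user_b_task, rest_reference(user_b_rest))
```

Both synthetic users trigger a detection in the same window even though their absolute power differs tenfold, which is the property that removes the need for a manually tuned threshold per individual.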

Presentation of extremity throughout the drinking task. Elbow movements are incremental. Finger flexion and wrist rotation are continuous. Shoulder acceleration is only during motion. Class 1 – Elbow Extension. Class 2 – Elbow Flexion. Class 3 – Hand Open. Class 4 – Pronation. Class 5 – Supination. Unshaded – Volitional. (A) Subject 1. (B) Subject 2. (C) Subject 3. (D) Subject 4. (E) Subject 5. Each phase of the task is labeled in the respective regions.

As indicated in Figures 9A – 9E, elbow extension and flexion for all users occurs in increments. The incremental method was chosen to provide users with a controllable range of motion as opposed to a full continuous extension or flexion. This was necessary as seen by the results of the user in Figure 9D. This user did not fully extend the arm as opposed to other individuals yet was still able to grasp the cup and fully complete the task. Full flexion of the elbow (71°) on the contrary was necessary for all individuals as to ensure that the cup would be able to make contact with the lips.

Wrist pronation and supination on the other hand occurred in a single smooth motion with a rotation of approximately 70°. This provided the necessary tilt to allow drinking from the cup. Although users were not drinking in these trials due to safety concerns mentioned earlier, the simulation imitated the action reasonably.

Analysis of the results provided in Figures 9A – 9E further indicates that each user performed the task at their own pace. For example, comparison of the elbow extension phase for Figure 9A and Figure 9B illustrates the variance in BCI control. Although both users fully extended their elbow during this phase, one user took 40 seconds while the other user took 28 seconds (see Table 2). The time taken to complete each of the other phases also varied between volunteers throughout the protocol. This applies to both the BCI controlled motions and the volitional motions. As a consequence, the total time for completion varied with the individual.

As stated earlier, the time required to complete the whole drinking motion depended upon how well the subject was able to control their cognitive thoughts and at what pace they conducted their voluntary movements. Overall, the duration ranged from 100 seconds to 160 seconds for the individuals to complete the task. The average time to complete the motion was 127 seconds with a standard deviation of 23 seconds.

The results attained further indicate the practicality of the system in terms of the time it takes to complete a drinking task. In future tests with stroke or spinal cord injury patients, completion times can be tracked over a series of trials to visualize improvements in the patients’ abilities.

Limitations of study

Although optimistic results were presented in this study, some assumptions were made during the project design. Firstly, determining the true intention of the users when operating the BCI was based on the users’ word. In fact, to the best of the authors’ knowledge, there is no verification method to establish that the user was indeed imagining ‘reach’ during the elbow extension phase and not imagining a different motion. It is understandable to assume, as we did, that the users will follow the guidelines when using the system for both an intuitive experience and maximal benefit for themselves.

Secondly, while the study is in fact designed for stroke and spinal cord injury patients, it currently lacks that portion. The next step would be to test the system with individuals who would actually benefit from the operation.

Lastly, the current study was limited to the sole functional task of drinking a glass of water, as the main focus was to highlight the feasibility of such a combinational system of an RAO, FES, and BCI for a single functional task of the arm. Future versions, while maintaining the inexpensive and portable nature of the setup, may include other functional tasks. It should be noted that the RAO and FES could already potentially assist a large variety of tasks in their current configurations. In fact, they could assist a number of functional tasks including reaching for an object, turning the handle of a door, supinating the arm to see the time on a wrist watch, pulling a drawer, and grasping an object to move it from one location to another. The main challenge in obtaining a multi-task system is therefore the identification of the user’s intention using the BCI. A potential approach is the use of a cascaded classification strategy [44]. In fact, similarly to a decision tree [45], a binary classifier could be used in each internal node of the tree. This approach would enable selecting among a number of tasks corresponding to the number of leaves of the tree. An example of a feasible binary classification consists of determining whether the volunteer intends to move her/his right or left extremities; such a classification was proven to yield high accuracy [46–49]. This node would be of interest in case the volunteer wears assistive devices on both arms. Another potential node would distinguish between the “drinking task”, as analyzed in this manuscript, and another functional task, such as a “time check task”, consisting of supinating the forearm to check the time on a wristwatch. The functional mental task used to identify the intention of the user to drink from a glass of water would be to imagine being thirsty and wanting to reach out for a glass placed in front of the volunteer. Initiation of the time check task would instead be triggered by imagining a ticking clock where the user is asked to continuously add the numbers on the dials. This two-task problem has strong potential for being classified with high accuracy, as research showed that motor and arithmetic tasks could clearly be distinguished [50–52]. An example of the suggested cascaded classification to be investigated in future research is presented in Figure 10. In addition to the above-mentioned cascaded approach, the advancement of BCI systems could also enable the future extension of the proposed two-class problem to multi-class problems. Research has shown strong potential in this regard. For instance, Schlögl et al. demonstrated the feasibility of classifying four motor imagery tasks [53]. Future research aimed at on-line classification of motor tasks using inexpensive headsets could therefore further facilitate the use of the proposed technology for assisting multiple tasks.
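The cascaded strategy can be sketched as a binary tree in which every internal node holds a two-class decision and every leaf names a task. This is a hypothetical illustration of the idea only: the feature names and the stand-in decision functions are assumptions, and the tree does not claim to reproduce the structure of Figure 10.

```python
class Leaf:
    """Terminal node naming the functional task to be assisted."""

    def __init__(self, task):
        self.task = task

    def decide(self, features):
        return self.task


class Node:
    """Internal node holding a binary classifier that routes to one of two children."""

    def __init__(self, classifier, if_true, if_false):
        self.classifier = classifier
        self.if_true = if_true
        self.if_false = if_false

    def decide(self, features):
        branch = self.if_true if self.classifier(features) else self.if_false
        return branch.decide(features)


# Stand-in binary classifiers; each inspects hypothetical feature values.
def is_right_arm(features):
    return features["lateralization"] > 0.0


def is_motor_imagery(features):
    return features["motor_score"] > features["arithmetic_score"]


def task_subtree(side):
    """Second-level node: drinking (motor imagery) versus time check (arithmetic)."""
    return Node(is_motor_imagery,
                Leaf(f"{side} drinking task"),
                Leaf(f"{side} time check task"))


tree = Node(is_right_arm, task_subtree("right"), task_subtree("left"))
choice = tree.decide(
    {"lateralization": 0.4, "motor_score": 0.2, "arithmetic_score": 0.9}
)
```

Two levels of binary decisions yield four selectable tasks while each classifier only ever separates two classes, which is the property that makes the cascade attractive for low-cost headsets.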

Conclusion

The goal of this study was to explore an inexpensive and fully portable assistive technology for individuals with neurological disorders in their pursuit of independently drinking from a glass. A combination of a robotic arm orthosis, a functional electrical stimulation system, and a brain-computer interface was utilized for this task. The task consisted of a drinking maneuver broken down into eleven phases, seven of which were triggered by the respective imagined movement and terminated by a soft clench of the jaw. The aim of the study was met with five healthy volunteers who simulated stroke patients with spasticity. The volunteers completed the drinking maneuver in an average time of 127 seconds with a standard deviation of 23 seconds. The next step would be to expand the capabilities of the system by including additional functional tasks and then to conduct tests with stroke and spinal cord injury patients to assess the benefit of the system.

References

Kwakkel G, Wagenaar RC, Koelman TW, Lankhorst GJ, Koetsie JC: Effects of intensity of rehabilitation after stroke: a research synthesis. Stroke 1997, 28: 1550-1556. 10.1161/01.STR.28.8.1550

Duncan PW, Zorowitz R, Bates B, Choi JY, Glasberg JJ, Graham GD, Katz RC, Lamberty K, Reker D: Management of adult stroke rehabilitation care: a clinical practice guideline. Stroke 2005, 36: e100-e143. 10.1161/01.STR.0000180861.54180.FF

Loureiro Rui CV, Harwin WS, Nagai K, Johnson M: Advances in upper limb stroke rehabilitation: a technology push. Med Biol Eng Comput 2011, 49: 1103-1118. 10.1007/s11517-011-0797-0

Colombo R, Pisano F, Micera S, Mazzone A, Delconte C, Carrozza MC, Dario P, Minuco G: Robotic techniques for upper limb evaluation and rehabilitation of stroke patients. IEEE Trans Neural Syst Rehabil Eng 2005, 13: 311-324.

Adamovich SV, Merians AS, Boian R, Lewis JA, Tremaine M, Burdea GS, Recce M, Poizner H: A virtual reality-based exercise system for hand rehabilitation post-stroke. Presence: Teleop Virt Environments 2005, 14: 161-174. 10.1162/1054746053966996

Fazekas G, Horvath M, Toth A: A novel robot training system designed to supplement upper limb physiotherapy of patients with spastic hemiparesis. Int J Rehabil Res 2006, 29: 251-254. 10.1097/01.mrr.0000230050.16604.d9

Lo AC, Guarino PD, Richards LG, Haselkorn JK, Wittenberg GF, Federman DG, Ringer RJ, Wagner TH, Krebs HI, Volpe BT, Bever CT, Bravata DM, Duncan PW, Corn BH, Maffucci AD, Nadeau SE, Conroy SS, Powell JM, Huang GD, Peduzzi P: Robot-assisted therapy for long-term upper-limb impairment after stroke. N Engl J Med 2010, 362: 1772-1783. 10.1056/NEJMoa0911341

Guidali M, Duschau-Wicke A, Broggi S, Klamroth-Marganska V, Nef T, Riener R: A robotic system to train activities of daily living in a virtual environment. Med Biol Eng Comput 2011, 49: 1213-1223. 10.1007/s11517-011-0809-0

Pfurtscheller G, Müller GR, Pfurtscheller J, Gerner HJ, Rupp R: ‘Thought’–control of functional electrical stimulation to restore hand grasp in a patient with tetraplegia. Neurosci Lett 2003, 351: 33-36. 10.1016/S0304-3940(03)00947-9

Kimberley TJ, Lewis SM, Auerbach EJ, Dorsey LL, Lojovich JM, Carey JR: Electrical stimulation driving functional improvements and cortical changes in subjects with stroke. Exp Brain Res 2004, 154: 450-460. 10.1007/s00221-003-1695-y

Popovic MB, Popovic DB, Sinkjær T, Stefanovic A, Schwirtlich L: Restitution of reaching and grasping promoted by functional electrical therapy. Artif Organs 2002, 26: 271-275. 10.1046/j.1525-1594.2002.06950.x

Ang KK, Guan C, Chua KSG, Ang BT, Kuah C, Wang C, Phua KS, Chin ZY, Zhang H: Clinical study of neurorehabilitation in stroke using EEG-based motor imagery brain-computer interface with robotic feedback. In Engineering in Medicine and Biology Society (EMBC), 2010 Annual International Conference of the IEEE. Buenos Aires, Argentina: IEEE; 2010.

Prange GB, Jannink MJ, Groothuis-Oudshoorn CG, Hermens HJ, IJzerman MJ: Systematic review of the effect of robot-aided therapy on recovery of the hemiparetic arm after stroke. J Rehabil Res Dev 2006, 43: 171. 10.1682/JRRD.2005.04.0076

Prasad G, Herman P, Coyle D, McDonough S, Crosbie J: Applying a brain-computer interface to support motor imagery practice in people with stroke for upper limb recovery: a feasibility study. J Neuroeng Rehabil 2010, 7: 60. 10.1186/1743-0003-7-60

Grosse-Wentrup M, Mattia D, Oweiss K: Using brain–computer interfaces to induce neural plasticity and restore function. J Neural Eng 2011, 8: 025004. 10.1088/1741-2560/8/2/025004

Schaechter JD: Motor rehabilitation and brain plasticity after hemiparetic stroke. Prog Neurobiol 2004, 73: 61. 10.1016/j.pneurobio.2004.04.001

Prasad G, Herman P, Coyle D, McDonough S, Crosbie J: Using motor imagery based brain-computer interface for post-stroke rehabilitation. In 4th International IEEE/EMBS Conference on Neural Engineering (NER'09). Antalya, Turkey: IEEE; 2009.

Lum PS, Burgar CG, Shor PC, Majmundar M, Van der Loos M: Robot-assisted movement training compared with conventional therapy techniques for the rehabilitation of upper-limb motor function after stroke. Arch Phys Med Rehabil 2002, 83: 952-959. 10.1053/apmr.2001.33101

NeuroSolutions. [http://www.neuro-solutions.ca/hocoma/hocoma-armeo/]

Mizrahi J: Fatigue in muscles activated by functional electrical stimulation. Critical Reviews™ in Physical and Rehabilitation Medicine 1997, 9: 93.

Meng F, Tong K, Chan S, Wong W, Lui K, Tang K, Gao X, Gao S: BCI-FES training system design and implementation for rehabilitation of stroke patients. In IEEE Int Jt Conf Neural Networks (IEEE World Congr Comput Intell). IEEE; 2008:4103-4106.

Gollee H, Volosyak I, McLachlan AJ, Hunt KJ, Gräser A: An SSVEP-based brain-computer interface for the control of functional electrical stimulation. IEEE Trans Biomed Eng 2010, 57: 1847-55.

Do AH, Wang PT, King CE, Abiri A, Nenadic Z: Brain-computer interface controlled functional electrical stimulation system for ankle movement. J Neuroeng Rehabil 2011, 8: 49. 10.1186/1743-0003-8-49

Belda-Lois J-M, Mena-del Horno S, Bermejo-Bosch I, Moreno JC, Pons JL, Farina D, Iosa M, Molinari M, Tamburella F, Ramos A, Caria A, Solis-Escalante T, Brunner C, Rea M: Rehabilitation of gait after stroke: a review towards a top-down approach. J Neuroeng Rehabil 2011, 8: 66. 10.1186/1743-0003-8-66

Rahman K, Ibrahim B: Fundamental study on brain signal for BCI-FES system development. Sci (IECBES) 2012, 2012: 195-198.

Takahashi M, Takeda K, Otaka Y, Osu R, Hanakawa T, Gouko M, Ito K: Event related desynchronization-modulated functional electrical stimulation system for stroke rehabilitation: a feasibility study. J Neuroeng Rehabil 2012, 9: 56. 10.1186/1743-0003-9-56

Liu Y, Li M, Zhang H, Li J, Jia J, Wu Y, Cao J, Zhang L: Single-trial discrimination of EEG signals for stroke patients: a general multi-way analysis. Conf Proc IEEE Eng Med Biol Soc 2013, 2013: 2204-7.

Zhang H, Liu Y, Liang J, Cao J, Zhang L: Gaussian mixture modeling in stroke patients’ rehabilitation EEG data analysis. Conf Proc IEEE Eng Med Biol Soc 2013, 2013: 2208-11.

Pfurtscheller G, Müller-Putz GR, Pfurtscheller J, Rupp R: EEG-based asynchronous BCI controls functional electrical stimulation in a tetraplegic patient. EURASIP J Adv Sig Pr 2005, 2005: 3152-3155. 10.1155/ASP.2005.3152

Rupp R, Müller-Putz GR: BCI-controlled grasp neuroprosthesis in high spinal cord injury. In Converging Clinical and Engineering Research on Neurorehabilitation. 2013:1253-1258.

Rohm M, Schneiders M, Müller C, Kreilinger A, Kaiser V, Müller-Putz GR, Rupp R: Hybrid brain–computer interfaces and hybrid neuroprostheses for restoration of upper limb functions in individuals with high-level spinal cord injury. Artif Intell Med 2013, 59: 133-142. 10.1016/j.artmed.2013.07.004

Lindgren I, Jönsson AC, Norrving B, Lindgren A: Shoulder pain after stroke: a prospective population-based study. Stroke 2007, 38: 343-348. 10.1161/01.STR.0000254598.16739.4e

Alt Murphy M, Willén C, Sunnerhagen KS: Kinematic variables quantifying upper-extremity performance after stroke during reaching and drinking from a glass. Neurorehabil Neural Repair 2011, 25: 71-80. 10.1177/1545968310370748

Sommerfeld DK, Eek EUB, Svensson AK, Holmqvist LW, Von Arbin MH: Spasticity after stroke: its occurrence and association with motor impairments and activity limitations. Stroke 2004, 35: 134-139. 10.1161/01.STR.0000126608.41606.5c

Ijzerman MJ, Stoffers TS, Klatte MAP, Snoek GJ, Vorsteveld JHC, Nathan RH, Hermens HJ: The NESS Handmaster orthosis. J Rehabil Sci 1996, 9: 86-89.

Schalk Lab. [http://www.schalklab.org/research/bci2000]

McFarland DJ, Miner LA, Vaughan TM, Wolpaw JR: Mu and beta rhythm topographies during motor imagery and actual movements. Brain Topogr 2000, 12: 177-186. 10.1023/A:1023437823106

Pfurtscheller G, da Silva FHL: Event-related EEG/MEG synchronization and desynchronization: basic principles. Clin Neurophysiol 1999,110(11):1842-1857.

Shenoy P, Krauledat M, Blankertz B, Rao RP, Müller KR: Towards adaptive classification for BCI. J Neural Eng 2006, 3: R13. 10.1088/1741-2560/3/1/R02

Popescu F, Blankertz B, Müller K-R: Computational challenges for noninvasive brain computer interfaces. IEEE Intell Syst 2008, 23: 78-79.

Guger C, Edlinger G, Harkam W, Niedermayer I, Pfurtscheller G: How many people are able to operate an EEG-based brain-computer interface (BCI)? IEEE Trans Neural Syst Rehabil Eng 2003, 11: 145-147.

Bigland-Ritchie B, Jones DA, Woods JJ: Excitation frequency and muscle fatigue: electrical responses during human voluntary and stimulated contractions. Exp Neurol 1979,64(2):414-427. 10.1016/0014-4886(79)90280-2

Kebaetse MB, Turner AE, Binder-Macleod SA: Effects of stimulation frequencies and patterns on performance of repetitive, nonisometric tasks. J Appl Physiol 2002,92(1):109-116.

Lee P-L, Chen LF, Wu YT, Chiu HF, Shyu KK, Yeh TC, Hsieh JC: Classifying MEG 20Hz rhythmic signals of left, right index finger movement and resting state using cascaded radial basis function networks. J Med Biol Eng 2002,22(3):147-152.

Yuan Y, Shaw MJ: Induction of fuzzy decision trees. Fuzzy Sets Syst 1995,69(2):125-139. 10.1016/0165-0114(94)00229-Z

Pfurtscheller G, Neuper C, Flotzinger D, Pregenzer M: EEG-based discrimination between imagination of right and left hand movement. Electroencephalogr Clin Neurophysiol 1997, 103: 642-51. 10.1016/S0013-4694(97)00080-1

Ozmen NG, Gumusel L: Classification of Real and Imaginary Hand Movements for a BCI Design. Rome, Italy: 2013 36th Int Conf Telecommun Signal Process; 2013:607-611.

Kayagil TA, Bai O, Henriquez CS, Lin P, Furlani SJ, Vorbach S, Hallett M: A binary method for simple and accurate two-dimensional cursor control from EEG with minimal subject training. J Neuroeng Rehabil 2009, 6: 14. 10.1186/1743-0003-6-14

Medina-Salgado B, Duque-Munoz L, Fandino-Toro H: Characterization of EEG signals using wavelet transform for motor imagination tasks in BCI systems. In Image, Signal Processing, and Artificial Vision (STSIVA) 2013 XVIII Symposium of IEEE: 11-13 September. Bogota, Colombia; 2013:1-4.

Volavka J, Matoušek M, Roubíček J: Mental arithmetic and eye opening. An EEG frequency analysis and GSR study. Electroencephalogr Clin Neurophysiol 1967,22(2):174-176.

Hamada T, Murata T, Takahashi T, Ohtake Y, Saitoh M, Kimura H, Wada Y, Yoshida H: Changes in autonomic function and EEG power during mental arithmetic task and their mutual relationship. Rinsho byori Jap J Clin Path 2006,54(4):329.

Wang Q, Sourina O: Real-time mental arithmetic task recognition from EEG signals. IEEE Trans Neural Syst Rehabil Eng 2013, 21: 225-32.

Schlögl A, Lee F, Bischof H, Pfurtscheller G: Characterization of four-class motor imagery EEG data for the BCI-competition 2005. J Neural Eng 2005,2(4):L14.

Acknowledgements

This work was supported by the Michael Smith Foundation for Health Research, the Canadian Institutes of Health Research, and the Natural Sciences and Engineering Research Council of Canada. The authors would like to thank Gil Herrnstadt for his assistance in setting up the devices, and Axis Prototypes for their support and assistance in the fabrication of the robotic arm orthosis.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

CM conceived the work, proposed the overall protocol, and directed the development of the robotic system. ZGX assisted in selecting the components of the system, programming the robot, and interpreting the recorded data. JB designed the robot, interfaced the Emotiv EEG headset to the robot, and contributed to the design of the protocol. RL interfaced the Emotiv EEG headset to the BCI2000 software and to the FES, conducted the tests with the volunteers, and finalized the protocol. All authors were involved in drafting the paper and read and approved the final manuscript.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Looned, R., Webb, J., Xiao, Z.G. et al. Assisting drinking with an affordable BCI-controlled wearable robot and electrical stimulation: a preliminary investigation. J NeuroEngineering Rehabil 11, 51 (2014). https://doi.org/10.1186/1743-0003-11-51

DOI: https://doi.org/10.1186/1743-0003-11-51