Abstract

During the extended clinically latent period associated with Human Immunodeficiency Virus (HIV) infection the virus itself is far from latent. This phase of infection generally comes to an end with the development of symptomatic illness. Understanding the factors affecting disease progression can aid treatment commencement and therapeutic monitoring decisions. An example of this is the clear utility of CD4+ T-cell count and HIV-RNA for disease stage and progression assessment.

Elements of the immune response such as the diversity of HIV-specific cytotoxic lymphocyte responses and cell-surface CD38 expression correlate significantly with the control of viral replication. However, the relationship between soluble markers of immune activation and disease progression remains inconclusive. In patients on treatment, sustained virological rebound to >10 000 copies/mL is associated with poor clinical outcome. However, the same is not true of transient elevations of HIV RNA (blips). Another virological factor, drug resistance, is becoming a growing problem around the globe and monitoring must play a part in the surveillance and control of the epidemic worldwide. The links between chemokine receptor tropism and rate of disease progression remain uncertain and the clinical utility of monitoring viral strain is yet to be determined. The large number of confounding factors has made investigation of the roles of race and viral subtype difficult, and further research is needed to elucidate their significance.

Host factors such as age, HLA and CYP polymorphisms and psychosocial factors remain important, though often unalterable, predictors of disease progression. Although gender and mode of transmission have a lesser role in disease progression, they may impact other markers such as viral load. Finally, readily measurable markers of disease such as total lymphocyte count, haemoglobin, body mass index and delayed type hypersensitivity may come into favour as ART becomes increasingly available in resource-limited parts of the world. The influence of these, and other factors, on the clinical progression of HIV infection are reviewed in detail, both preceding and following treatment initiation.

Review

Throughout the clinically latent period associated with Human Immunodeficiency Virus (HIV) infection the virus continues to actively replicate, usually resulting in symptomatic illness [1–3]. Highly variable disease progression rates between individuals are well-recognised, with progression categorised as rapid, typical (intermediate), or late (long-term non-progression) [1, 4]. The majority of infected individuals (70–80%) experience intermediate disease progression in which they have HIV-RNA rise, CD4+ T-cell decline and development of AIDS-related illnesses within 6–10 years of acquiring HIV. Ten to 15% are rapid progressors who have a fast CD4+ T-cell decline and occurrence of AIDS-related events within a few years after infection. The late progressors (5%) can remain healthy without significant changes in CD4 count or HIV-RNA for over 10 years [4].

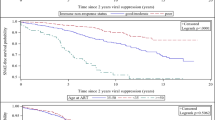

While Figure 1 [5] demonstrates the existence of a relationship between high plasma HIV-RNA, low peripheral CD4+ T-cell count and rapidity of disease progression, many of the determinants of this variation in progression are only partially understood. Knowledge of prognostic determinants is important to guide patient management and treatment. Research has focussed on many different facets of HIV pathogenesis and possible predictive factors, covering immunological, virological and host genetic aspects of disease. Current therapeutic guidelines take many of these into account but their individual significance warrants review [6].

Immunological factors

T-cell count and function

CD4+ T-cells

CD4+ T-cells are fundamental to the development of specific immune responses to infection, particularly intracellular pathogens. As the primary target of HIV, their depletion severely limits the host response capacity. HIV largely infects activated cells, causing the activated T-cells directed against the virus to be at greatest risk of infection [7]. The ability of the immune system to mount a specific response against HIV is a key factor in the subsequent disease course [8]. Long-term non-progressors appear to have better lymphoproliferative responses to HIV-specific antigens than those with more rapid progression [8].

The CD4+ T-cell count is the most significant predictor of disease progression and survival [9–15], and the US Department of Health and Human Services (DHHS) ART treatment guidelines recommend that treatment commencement be based on CD4+ T-cell count in preference to any other single marker [6]. Table 1 shows the results of the CASCADE collaboration (see Appendix 1 for details) analysis of an international cohort of 3226 ART-naïve individuals with estimable dates of seroconversion. Each CD4 count was considered to hold predictive value for no more than the subsequent 6 month period, with individual patients contributing multiple 6 month periods of follow up [10]. Lower CD4 counts are associated with greater risk of disease progression. CD4 counts from 350–500 cells/mm3 are associated with risks of ≤5% across all age and HIV-RNA strata, while the risk of progression to AIDS increases substantially at CD4 counts <350 cells/mm3, the greatest risk increase occurring as CD4 counts fall below 200 cells/mm3. The risk of disease progression at 200 cells/mm3, the threshold for ART initiation in resource-limited settings, is generally double the risk at 350 cells/mm3, the treatment threshold in resource-rich countries [10].

Use of the CD4 count as a means of monitoring ART efficacy is well established [6, 16]. In particular, measurement of the early response in the first six months of therapy has strong predictive value for future immunological progression [17, 18]. Baseline CD4 count is predictive of virological failure: Van Leth et al. [19] found a statistically significant correlation between a baseline CD4 count of <200 cells/mm3 and HIV-RNA >50 copies/mL at week 48 of therapy. Figure 2 shows the importance of baseline CD4 count as a predictor of disease progression, with each successively lower stratum of CD4 count <200 cells/mm3 at time of HAART initiation associated with an increasingly worse prognosis [20]. Immunological recovery is largely dependent on baseline CD4 count and thus the timing of ART initiation is important in order to maximise the CD4+ T-cell response to therapy [20].

It is important to note that within-patient variability in CD4+ T-cell quantification can occur and so care must be taken to ensure measurements are consistently performed by the same method for each patient [9].

CD8 T-lymphocyte function

The influence of CD8+ T-lymphocyte function on HIV disease progression is of considerable interest as cytotoxic T-lymphocytes (CTLs) are the main effector cells of the specific cellular immune response. Activated by CD4+ T-helper cells, anti-HIV specific CD8+ T-cells have a crucial role to play in the control of viremia [21], increasing in response to ongoing viral replication [22]. Further, the diversity of HIV-specific CTL responses correlates with the control of viral replication and CD4 count, indicating the need for a response to a broad range of antigens to achieve a maximum effect [23, 24]. Low absolute numbers of HIV-specific CD8+ T-cells correlate with poor survival outcomes in both ART-naïve and experienced patients, providing additional evidence for the significance of the CTL response [23, 25, 26].

Immune activation

Chronic immune activation is a characteristic of HIV disease progression. Immune-activation-driven apoptosis of CD4+ cells, more than a direct virological pathogenic effect, is responsible for the decline in CD4+ T-lymphocytes seen in HIV infection [27]. HIV triggers polyclonal B cell activation, increased T cell turnover, production of proinflammatory cytokines and increased numbers of activated T cells [28]. CD4+ T cells that express activation markers such as CD69, CD25, and MHC class II are a prime target for HIV infection and a source of active HIV replication. Increased numbers of these activated T cells correlate with HIV disease progression [29–31]. Another important surface marker of cell activation is CD38. In HIV negative individuals, CD38 is expressed in relatively greater numbers by naïve lymphocytes, while in HIV infection, memory T-cells, particularly CD8+ memory T-cells, express the largest quantities of CD38 [32, 33]. CD8+CD38+T-cell levels correlate strongly with HIV-RNA levels, decreasing with ART-induced virological suppression and increasing with transient viremia, suggesting that continuously high levels of CD38+ cells may be an indicator of ongoing viral replication [32–35]. Indeed, HIV replication has been nominated the main driving force behind CD8+ T-cell activation [32, 33]. Similar to the stabilization of HIV-RNA levels following initial infection, an immune activation "set point" has also been described and shown to have prognostic value [36].

Despite the strength of the relationship with HIV-RNA, the search for a clear association between CD8+ T-cell activation and CD4 count has resulted in conflicting findings [32, 33, 35, 37]. In contrast, CD4+ T-cell activation has a considerable influence on CD4+ T-cell decline [27, 34, 35]. One prospective study of 102 seroconverters found that CD8+CD38+ proportions lose their prognostic significance over time and only elevated CD4+CD38+ percentage is associated with clinical deterioration at 5 years follow up [27]. The clinical value of monitoring CD38 expression is yet to be clarified. However, there is no doubt that disease progression is related to both CD4+ and CD8+ T-cell activation as indicated by expression of CD38.

A switch from T-Helper Type 1 (TH1) to T-Helper Type 2 (TH2) cytokine response is seen in HIV-related immune dysfunction and is associated with HIV disease progression. TH1 cytokines such as interleukin (IL)-2, IL-12, IL-18 and interferon-γ promote strong cellular responses and early HIV viremic control while TH2 cytokines, predominantly modulated by IL-1β, IL-4, IL-6, IL-10 and tumor necrosis factor-α (TNF-α), promote HIV viral replication and dampen cellular response to HIV [8, 38]. HIV-positive individuals have high plasma IL-10 levels, reduced production of IL-12 and poor proliferation of IL-2 producing CD4+ central memory T-cells [30, 39, 40]. Levels of pro-inflammatory cytokines such as TNF-α are also increased, causing CD8+ T-cell apoptosis [1, 40].

Soluble markers of immunological activity have been the focus of many studies over the years in the hope that they will show utility as prognostic indicators. Unfortunately, the product of these endeavours is a large number of studies with apparently conflicting results, some studies linking elevated levels of these markers with more rapid disease progression [41–46], and others finding no correlation [46–48]. Factors investigated include neopterin [41–43, 45, 46, 48], β2-microglobulin [42, 46–48], tumour necrosis factor type II receptor [41, 46], tumour necrosis factor receptor 75 [45], endogenous interferon [43] and tumour necrosis factor-α [25]. The lack of specificity of these markers for HIV infection appears to curtail their utility. Current treatment guidelines make no mention of their use for either disease or therapeutic monitoring [6, 49, 50]. As the immune response to HIV is clarified with further research, the utility of monitoring these immune modulators may become more apparent.

Virological factors

HIV-RNA

The value of HIV-RNA quantification as a prognostic marker has long been established [6, 51, 52]. An approximately inverse relationship to the CD4+ T-cell count and survival time has been observed in around 80% of patients [53, 54]. Higher HIV-RNA levels are associated with more rapid decline of CD4+ T-cells, assisting prediction of the rate of CD4 count decline and disease progression. However, once the CD4 count is very low (<50–100 cells/mm3), the disease progression risk is so great that HIV-RNA levels add little prognostic information [25, 54–56]. The correlation between CD4 count and disease progression seen clearly in Table 1 has already been described [10]. Further highlighting the risk of AIDS in those with CD4 counts of 200–350 cells/mm3 (the current threshold for ART initiation), a four-fold risk increase can be seen between those with a HIV-RNA of 3000 copies/mL and those with ≥300 000 copies/mL, even within the same age bracket. Additionally, there is a considerable increase in risk of disease progression in those with HIV-RNA >100 000 copies/mL across all age and CD4 strata.

Higher baseline HIV-RNA levels in early infection have been associated with faster CD4+ T-cell decline over the first two years of infection [15, 57]. Research has suggested that HIV-RNA levels at later time points are better indicators of long term disease progression than levels at seroconversion, with the viral load reaching a stable mean or 'set point' around one year after infection [4, 12, 52, 58, 59]. Indicative of the efficiency of immunological control of viral replication, this set point is strongly associated with the rate of disease progression as can be inferred from Figure 1 [1, 52, 59–62]. Desquilbet et al. [63] studied the effect of early ART on the virological set point by starting treatment during Primary HIV Infection (PHI) and then ceasing it soon after. They found the virological set point was mainly determined by pre-treatment viral load, early treatment having minimal reducing effect.

Treatment response has been strongly linked to the baseline HIV-RNA level; Van Leth et al. [19] finding that patients with a HIV-RNA >100 000 copies/mL were almost 1.5 times more likely to experience virological failure (HIV-RNA >50 copies/mL) after 48 weeks of treatment than those with HIV-RNA <100 000 copies/mL.

An analysis of a subgroup (6814 participants) of the EuroSIDA study cohort verified that clinical outcome correlates strongly with most recent CD4+ T-cell or HIV RNA level, regardless of ART regimen used. In particular, it is worth noting that those with CD4 count ≤350 cells/mm3 were at greater risk of AIDS or death than those with higher CD4 counts (rate ratio ≥3.39 vs ≤1.57), while the risk of these outcomes was substantially lower at HIV-RNA <500 copies/mL compared to >50 000 copies/mL (rate ratio 0.22 vs 0.61) [64]. These findings support the continued use of HIV-RNA and CD4 count as markers of disease progression on any HAART regimen [6, 51, 65].

There is no doubt that monitoring viral load is critical to assessing the efficacy of ART [65–68]. Findings from multiple studies reinforce the association between greater virological suppression and sustained virological response to ART [6, 50]. Guidelines define virological failure as either a failure to achieve an undetectable HIV-RNA (<50 copies/mL) after 6 months or a sustained HIV-RNA >50 copies/mL or >400 copies/mL following suppression below this level [6]. A greater than threefold increase in viral load has been associated with increased risk of clinical deterioration and so this value is recommended to guide therapeutic regimen change in the developed world [69, 70]. Several studies have shown a significant correlation between HIV-RNA >10 000 copies/mL and increased mortality and morbidity, and therapeutic switching should occur prior to this point [49]. The World Health Organisation recommends that this level be considered the definition of virological failure in resource limited settings [49].

Some patients experience intermittent episodes of low-level viremia followed by re-suppression below detectable levels, known as "blips". A very detailed study by Nettles et al. [71] defined a typical blip as lasting about 2.5 days, of low magnitude (79 copies/ml) and requiring no change in therapy to return to <50 copies/ml. Havlir et al. [72] and Martinez et al. [73] demonstrated that there was no association between intermittent low-level viremia and virological failure. Intermittent viremia does not appear to be a significant risk factor for disease progression. Nevertheless, it must be distinguished from true virological failure, which is consistently elevated HIV-RNA, as defined above. Continued follow up is essential.
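The distinction between a blip and sustained rebound can be illustrated with a minimal sketch. It assumes a chronological list of on-treatment HIV-RNA results and, as a purely illustrative convention that is not taken from the guidelines, treats two or more consecutive results at or above 50 copies/mL as "sustained". The function name and labels are hypothetical.

```python
from typing import Sequence

DETECTION_LIMIT = 50  # copies/mL, the assay threshold quoted in the text


def classify_viremia(loads: Sequence[float], limit: float = DETECTION_LIMIT) -> str:
    """Label a chronological series of on-treatment HIV-RNA results.

    Assumption (not from the guidelines): "sustained" is operationalised
    as two or more consecutive results at or above the limit.
    """
    detectable = [value >= limit for value in loads]
    if not any(detectable):
        return "suppressed"
    # Two consecutive detectable results are treated as sustained rebound.
    for earlier, later in zip(detectable, detectable[1:]):
        if earlier and later:
            return "sustained"
    # A detectable final result has no later value confirming re-suppression.
    if detectable[-1]:
        return "unconfirmed"
    return "blip"


# Hypothetical series; 40 stands in for a result reported as <50 copies/mL.
print(classify_viremia([40, 40, 79, 40]))      # isolated low-level result
print(classify_viremia([40, 120, 800, 5000]))  # consecutive elevated results
```

The "unconfirmed" label reflects the point made above that continued follow up is needed before an isolated detectable result can be called a blip.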

Even in patients achieving apparently undetectable HIV-RNA levels, HIV persists through the infection of memory T-cells [74, 75]. This 'viral reservoir' has an extremely long half life and remains remarkably stable even in prolonged virological suppression [74, 76, 77]. Responsible for the failure of ART to eradicate infection, regardless of therapy efficacy, it may also contribute to the 'blips' described above [76]. Like intermittent viremia, its effect on disease progression appears trivial, being mainly of therapeutic importance [78, 79]. However, as the long-term clinical outcomes of viral resistance and sub-detection viral replication become clearer, its significance may increase [15].

Resistance mutations

Drug resistance is a strong predictor of virological failure after HAART, with a clear relationship seen between the number of mutations and virological outcome [56, 80–84]. Hence maximal suppression of viral replication, with the parallel effect of preventing the development of resistance, is essential to optimise both response to treatment and improvement in disease progression [85, 86].

The transmission of resistant virus is a serious reality, with implications for the efficacy of initial regimens in ART naïve patients. Prevalence of resistance mutations amongst seroconverters varies according to geographic location, with inter-country prevalence varying from 3–26%. This reinforces the need to gain local data, especially as resistance increases with increasing HAART use [87]. In the USA, the prevalence of primary (transmitted) resistance was 24.1% in 2003–2004, an almost two-fold increase from the 13.2% prevalence recorded in 1995–1998 [88]. European prevalence for 2001–2002 was 10.4%, which, although lower than the US figures, remains quite high [86, 87]. Pre-treatment resistance testing has been shown to reduce the risk of virological failure in patients with primary drug resistance [6, 50]. The DHHS guidelines suggest that pre-treatment resistance testing in ART naïve patients may be considered if the risk of resistance is high (ie: population prevalence ≥5%) while the British HIV Association (BHIVA) recommends testing for transmitted resistance in all newly diagnosed patients and prior to initiating ART in chronically infected patients [49]. In resource-limited settings, resistance testing may not be readily available; however, in such locations, primary resistance is likely to be rare, and need for pre-treatment resistance testing is lower [89]. An exception to this rule is the case of child-bearing women who have received intrapartum nevirapine, in whom poorer virological responses to post-partum nevirapine based regimens have been seen (49% vs 68% achieved HIV-RNA <50 copies/mL at 6 months) [49, 89]. Regardless of the setting, there is a need for surveillance of local drug resistance prevalence [1, 2, 90].
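The prevalence figures quoted above can be checked with simple arithmetic. The sketch below computes the fold change between the two US periods and compares a prevalence against the ≥5% level quoted from the DHHS guidance; the function names are hypothetical and the code is an illustration of the arithmetic only.

```python
def fold_change(earlier_pct: float, later_pct: float) -> float:
    """Ratio of the later prevalence to the earlier one."""
    if earlier_pct <= 0:
        raise ValueError("earlier prevalence must be positive")
    return later_pct / earlier_pct


def meets_testing_threshold(prevalence_pct: float, threshold_pct: float = 5.0) -> bool:
    """True when prevalence reaches the >=5% level quoted in the text."""
    return prevalence_pct >= threshold_pct


# Figures from the text: 13.2% (1995-1998) and 24.1% (2003-2004) in the USA.
us_fold = fold_change(13.2, 24.1)
print(f"US primary resistance rose {us_fold:.2f}-fold")
# European prevalence for 2001-2002 from the text.
print("Europe 2001-2002 at or above 5%:", meets_testing_threshold(10.4))
```

The ratio of roughly 1.8 is consistent with the "almost two-fold" description.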

Chemokine receptor tropism

CCR5 and CXCR4 chemokine receptors act as co-receptors for HIV virions. Proportionately greater tropism for one or the other of these receptors has been associated with different rates of disease progression. Slowly progressing phases of infection are associated with predominance of the "R5 virus strains" that ligate the CCR5 receptor, mainly present on activated immune cell surfaces (including macrophages). "X4 strains" showing tropism for CXCR4, expressed by naïve or resting T-cells, and dual-tropic R5X4 strains, increase proportionately in the later stages of disease and are associated with more rapid clinical and immunological deterioration [1, 2, 90]. "X4 strains" have been associated with greater immune activation, suggesting a possible mechanism for their effects on disease progression [27]. Patients with predominantly X4 strains have been found to have lower CD4 counts, but correlations with viral load have been inconsistent [90–92]. Some host genetic phenotypes, namely CCR5-Δ32 and SDF-1'A, affect R5 strain binding and are associated with delayed disease progression [93–95].

It is evident that even under effective HAART suppression [96], the predominant viral strain can change from R5 to X4 [90, 92, 97]. Additionally, about 50% of triple therapy experienced patients have been found to harbour X4 strains, a far greater proportion than the 18.2% seen in an ART naïve population [98]. The evidence for a difference in survival between those on HAART with X4 strains and those with R5 strains is difficult to interpret. Brumme et al. [98] suggested that a group of patients with the 11/25 envelope sequence (a highly specific predictor of the presence of X4 strains) had higher mortality and poorer immunological response to HAART despite similar virological responses to those without the 11/25 sequence. In contrast, a later study indicated that after adjustment for baseline characteristics, X4 strains were not associated with a difference in survival or response to HAART [99]. As can be seen, the effects of chemokine receptor tropism remain controversial and as yet, there is no clear evidence that monitoring or measuring these parameters will be useful clinically.

Viral subtype and race

Complicating the assessment of the effect of viral subtype on disease progression are the potential confounders such as race, prevalence of various opportunistic infections and access to health care. Subtype C affects 50% of people with HIV and is seen mostly in Southern and Eastern Africa, India and China. Subtype D is found in East Africa and Subtype CRF_01 AE is seen mainly in Thailand. Caucasians are predominantly infected by subtype B, seen in 12% of the global HIV infected population [99]. The majority of research on all aspects of HIV has been performed amongst subtype B-affected individuals. The implications for treatment practice are obvious should differences in viral pathogenicity or disease progression exist between subtypes [99, 100].

Rangsin et al. [100] noted median survival times in young Thai men (Type E 97%) of only 7.4 years, significantly shorter than the 11.0 years reported by the mainly Caucasian CASCADE cohort (Type B ≥50%). Hu et al. [101] found differences in early viral load between those with Type E (n = 103) when compared to Type B (n = 27) in Thai injecting drug users. Kaleebu et al. [102] studied a large cohort in Uganda, providing the strongest evidence for a difference in survival between A and D subtypes. However, analysis of a small cohort in Sweden reported no difference in survival rates between subtypes A-D [103]. Only Rangsin et al. [100] and Hu et al. [101] studied cohorts with estimable seroconversion dates.

It is difficult to control for the multiple potential confounding factors in research measuring the influence of subtype on disease progression. Geretti [99] remarked that the evidence for survival and disease progression rate differences between subtypes is currently inadequate to draw any definitive conclusions. Ongoing research is essential not only to determine the effect of subtype on disease progression but also to evaluate response to therapy.

Many of the confounding factors affecting subtype investigation also confound research into the effect of race on disease progression. Studies with clinical endpoints have found no significant relationship between race and disease progression [104, 105], while another study of clinical response to HAART suggested disease progression appears to correlate more strongly with other factors (eg: depression, drug toxicity) than with race per se [106]. In support of this, data from the TAHOD database (see Appendix 1 for details) suggest that responses to HAART among Asians are comparable to those seen in other races [11]. Evidence for racial variation in viral loads and CD4 counts has not been consistent and confounders have been difficult to exclude [107–109]. Morgan et al. [110] reported a median survival time of 9.8 years amongst HIV infected Ugandans which does not differ greatly from the 11.0 years reported by the mainly Caucasian CASCADE cohort. Race does not appear to influence the rate of disease progression independently of confounders such as psychosocial factors, access to care and genetically driven response to therapy.

Host genetics

An understanding of the effect of host genetics on disease susceptibility and progression has significant implications for the development of therapies and vaccines [95]. Host genetics impact HIV infection at two main points: (i) cell-virion fusion, mediated primarily by the chemokine receptors CXCR4 and CCR5 and their natural ligands, and (ii) the host immune response, mediated by Human Leukocyte Antigen (HLA) molecules [95, 111].

Polymorphisms of the genes controlling these two pathways have been extensively studied and multiple genetic alleles have been found to correlate with either delayed or accelerated disease progression [95, 111, 112].

HLA molecules provide the mechanism by which the immune system generates a specific response to a pathogen. As has been described earlier, the diversity of HIV-specific immune responses plays a crucial role in containment of the virus and it is HLA molecules that control that diversity. Thus, HLA polymorphisms should affect disease progression. Investigation of the effect of specific alleles has found that heterozygosity of any MHC Class I HLA alleles appears to delay progression, while rapid progression has been associated with some alleles in particular, for example, HLA-B35 and Cw4 [95]. The HLA-B57 allele, present in 11% of the US population and around 10% of HIV-positive individuals, has been linked to long-term non-progression, a lower viral set-point and fewer symptoms of primary HIV infection [95, 112].

In addition to the effect of genetic polymorphisms on the natural history of infection, host genetic profile can influence the response to HAART [113]. In Australia, the presence of the HLA-B*5701 allele accounts for nearly 90% of patients with abacavir hypersensitivity. Drug clearance also varies significantly between racial groups due to genetic variations in CYP enzyme isoforms [114]. For example, polymorphisms of CYP2B6 occurring more frequently in people of African origin are associated with three-fold greater plasma efavirenz concentrations, leading to a greater incidence of central nervous system toxicity amongst this group [115]. Potential outcomes of such phenomena include treatment discontinuation in the case of toxicity or hypersensitivity, and drug resistance when medications are ceased simultaneously, leaving the drug with the prolonged half life as effective monotherapy [114]. Genetic screening in order to guide choice of therapy is already underway in Australia for HLA-B57 alleles related to abacavir hypersensitivity [114]. Studies of host genetics appear likely to significantly influence the clinical management of HIV in the future.

Other host factors

Studies of many of these factors usually assume equality of access to care for members of the study population. A survey of people living with AIDS in New York City found that female gender, older age, non-Caucasian race and transmission via injecting drug use or heterosexual intercourse were all associated with significantly higher mortality. This most likely reflects the poorer access to health care and other sociological disparities experienced by these groups [116].

Age

Age at seroconversion has repeatedly been found to have considerable impact on the future progression of disease. Concurring with earlier studies, the CASCADE collaboration [62] found a considerable age effect correlating with CD4 count and HIV-RNA, across all exposure categories, CD4 count and HIV-RNA strata in an analysis of multiple international seroconversion cohorts, reinforcing these findings again recently [10, 117, 118]. Table 1 clearly demonstrates the importance of stratification by age, CD4 count and HIV-RNA as predictive of the short term risk of AIDS. There is a clear relationship between increasing age and increasing risk. For example, a 25 year old with a CD4 count of 200 cells/mm3 and HIV-RNA level of 3000 copies/mL has one third the risk of disease progression when compared to a 55 year old with the same values. This raises the issue of whether or not older patients should be treated at higher CD4+ T-cell counts [10].

Older age is associated with lower CD4 counts at similar time from seroconversion which may explain the relationship between age and disease progression [57, 119]. However, age disparities seem to diminish with HAART treatment; CD4 counts and HIV-RNA levels becoming more useful prognostic indicators [119]. It appears that the age effect seen on HAART treatment is closer to the natural effect of aging rather than the pre-treatment, HIV-related increase in mortality, suggesting that HAART attenuates the effect of age at seroconversion on HIV disease progression [120].

Gender

Mean HIV-RNA has been found to vary between men and women for given CD4 count strata [107, 121–123]. Low levels of CD4+ T-cells (<50 cells/mm3) are associated with higher mean HIV-RNA in women (of the order of 1.3 log10copies/mL) than in men within the same CD4 count stratum. Conversely, at higher CD4+ T-cell levels (>350 cells/mm3), mean HIV-RNA has been noted to be 0.2–0.5 log10copies/mL lower in women [124]. Despite HIV-RNA variation, disease progression has not been seen to differ between the genders for given CD4 counts [121, 124, 125]. On this basis, the current DHHS Guidelines for the use of Antiretroviral Drugs in HIV-1 Infected Adults and Adolescents state that there is no need for sex-specific treatment guidelines for the initiation of treatment given that antiretroviral therapy initiation is guided primarily by CD4 count [6].
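Because the sex differences above are expressed in log10 copies/mL, it can help to convert them to fold differences on the linear scale. The sketch below is a minimal illustration of that conversion; the function name is hypothetical.

```python
def log10_diff_to_fold(diff_log10: float) -> float:
    """Convert a difference in log10 copies/mL to a linear fold difference."""
    return 10 ** diff_log10


# Differences quoted in the text: 0.2-0.5 log10 lower and about 1.3 log10 higher.
for diff in (0.2, 0.5, 1.3):
    fold = log10_diff_to_fold(diff)
    print(f"{diff} log10 copies/mL corresponds to about {fold:.1f}-fold")
```

On this scale a 0.2–0.5 log10 difference is roughly a 1.6- to 3.2-fold difference in copies/mL, while 1.3 log10 is roughly 20-fold.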

Mode of transmission

Comparing disease progression rates between transmission risk groups has led to conflicting findings. An early study found significantly faster progression amongst homosexuals than heterosexuals [126]. However, more recent studies analysing much larger cohorts reported no difference in disease progression rates following adjustment for age and exclusion of Kaposi's sarcoma as an AIDS defining illness [62, 127, 128]. Prins et al. [127] noted that injecting drug users have a very high other-cause mortality rate that could confound results failing to take this into account.

The CASCADE collaboration [120] examined the change in morbidity and mortality between the pre- and post-HAART periods. They found a reduction in mortality in the post-HAART era amongst homosexual and heterosexual risk groups but no such change in injecting drug users. This apparently higher risk of death than other groups may be related to poor therapy adherence, less access to HAART and the higher rate of co-morbid illnesses such as Hepatitis C. Other factors may have a larger role to play in clinical deterioration than the mode of transmission.

Psychosocial factors

Understanding the interaction between physical and psychosocial factors in disease progression is important to maximise holistic care for the patient. Several studies have found significant relationships between poorer clinical outcome and lack of satisfaction with social support, stressful life events, depression and denial-based coping strategies [129–132]. Other studies have found strong correlations between poorer adherence to therapy and depression, singleness and homelessness [106, 133]. Patient management should include consideration of the psychosocial context and aim to provide assistance in problem areas.

Resource-limited settings

The three elements of host, immunological and virological factors synergise to influence the progression of HIV infection; however, a few additional factors may hold prognostic value. While CD4 count and HIV-RNA are the gold standard markers for disease monitoring, surrogate markers become important when measurement of these parameters is not possible. Simple markers investigated for their utility in predicting disease progression in resource-limited settings include delayed type hypersensitivity (DTH) responses, total lymphocyte count (TLC), haemoglobin and body mass index (BMI).

Delayed type hypersensitivity

Mediated by CD4+ T-lymphocytes, DTH responses give an indication of CD4+ T-cell function in vivo. DTH responses have been shown to decline in parallel with CD4+ T-cell counts, with a corresponding increase in mortality [134, 135]. Failure to respond to a given number of antigens has been suggested as a marker for the initiation of ART in resource-limited settings [135, 136].

Improved DTH responses have been noted with ART, although the degree of improvement appears dependent on the CD4+ nadir prior to HAART initiation [137–139]. This holds implications for the timing of initiation of treatment, as delayed treatment and hence low nadir CD4 counts may cause long-term immune deficits [139]. There is a need for further research in resource-limited settings to determine the utility of DTH testing as both a marker for HAART initiation and a means of monitoring its efficacy.

Total lymphocyte count

Another marker available in resource-limited countries, total lymphocyte count (TLC), has been investigated as an alternative to CD4+ T-cell count. Current WHO guidelines recommend using a TLC of 1200 cells/mm3 or below as a substitute marker for ART initiation in symptomatic patients [140]. Evidence for the predictive worth of this TLC level is encouraging, with several large studies confirming the significant association between a TLC of <1200 cells/mm3 and subsequent disease progression or mortality [135, 141, 142]. Others propose that the rate of TLC decline should be used in disease monitoring, as a rapid decline (33% per year) precedes the onset of AIDS by 1–2 years [142, 143]. Disappointingly, there is generally a poor correlation between TLC and CD4 count at any given value.
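The two TLC criteria described above, an absolute threshold and a rate of decline, can be sketched in a few lines of Python. This is an illustrative sketch only, not a clinical tool: the function names are hypothetical, and the simple proportional definition of "decline per year" is an assumption, since the text does not specify how the cited studies calculated the rate.

```python
WHO_TLC_THRESHOLD = 1200.0  # cells/mm3, threshold quoted in the text
RAPID_DECLINE_PCT = 33.0    # % per year, "rapid decline" quoted in the text


def tlc_at_or_below_threshold(tlc: float, threshold: float = WHO_TLC_THRESHOLD) -> bool:
    """Return True if the TLC (cells/mm3) is at or below the threshold."""
    return tlc <= threshold


def annual_percent_decline(tlc_start: float, tlc_end: float, years: float) -> float:
    """Percentage fall in TLC per year, relative to the starting value.

    Assumption: a simple proportional decline averaged over the interval.
    """
    if tlc_start <= 0 or years <= 0:
        raise ValueError("tlc_start and years must be positive")
    return (tlc_start - tlc_end) / tlc_start / years * 100.0


def is_rapid_decline(tlc_start: float, tlc_end: float, years: float) -> bool:
    """Return True if the annualised decline reaches the 33% per year figure."""
    return annual_percent_decline(tlc_start, tlc_end, years) >= RAPID_DECLINE_PCT


if __name__ == "__main__":
    # Hypothetical patient: TLC falls from 1800 to 1200 cells/mm3 over one year.
    print(tlc_at_or_below_threshold(1200))
    print(round(annual_percent_decline(1800, 1200, 1.0), 1))
    print(is_rapid_decline(1800, 1200, 1.0))
```

Keeping the threshold and the rate as named constants makes it straightforward to substitute locally validated values.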

While TLC measurement has been validated as a means of monitoring disease progression in ART-naïve patients, its use for therapeutic monitoring is questionable and not recommended [49, 144–146].

Body mass index

The body mass index (BMI) is a simple and commonly used measure of nutritional status. Its relationship to survival in HIV infection is important for two main reasons. Firstly, 'wasting syndrome' (>10% involuntary weight loss in conjunction with chronic diarrhoea and weakness, with or without fever) is considered an AIDS defining illness according to the CDC classification of disease [1]. Secondly, the ease of measurement of this parameter makes it potentially highly useful as a marker for the initiation of ART in resource-limited countries.

As with TLC, long-term monitoring of BMI is predictive of disease progression. A rapid decline has been noted in the 6 months preceding AIDS, although the sensitivity of this measure was only 33% [145, 146]. A baseline BMI of <20.3 kg/m2 for men and <18.5 kg/m2 for women is predictive of increased mortality, even in racially diverse cohorts, with a BMI of 17–18 kg/m2 and <16 kg/m2 being associated with a 2-fold and 5-fold risk of AIDS respectively [147–149].
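BMI is weight in kilograms divided by the square of height in metres, so the cut-offs quoted above are simple to apply. The Python sketch below is illustrative only and is not a validated clinical rule. The function names are hypothetical, and BMI values outside the ranges reported in the text are deliberately left unclassified rather than interpolated.

```python
from typing import Optional

# Baseline cut-offs (kg/m2) reported as predictive of increased mortality.
BASELINE_CUTOFF = {"male": 20.3, "female": 18.5}


def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index in kg/m2."""
    if height_m <= 0:
        raise ValueError("height_m must be positive")
    return weight_kg / height_m ** 2


def below_baseline_cutoff(bmi_value: float, sex: str) -> bool:
    """Return True if BMI is below the sex-specific baseline cut-off."""
    return bmi_value < BASELINE_CUTOFF[sex]


def reported_aids_risk_fold(bmi_value: float) -> Optional[int]:
    """Fold risk of AIDS quoted in the text, or None if no figure is given."""
    if bmi_value < 16:
        return 5
    if 17 <= bmi_value <= 18:
        return 2
    return None  # the text gives no figure for other BMI ranges


if __name__ == "__main__":
    value = bmi(52.0, 1.72)  # hypothetical patient
    print(round(value, 1), below_baseline_cutoff(value, "male"),
          reported_aids_risk_fold(value))
```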

In combination with other simple markers such as haemoglobin, clinical staging and TLC, a BMI <18.5 kg/m2 shows similar utility to CD4 count and HIV-RNA based guidelines for the initiation of HAART [150, 151]. A sustained BMI <17 kg/m2 6 months after HAART initiation has been associated with a two-fold increase in risk of death [152].

As can be seen, measurement of the body mass index is a simple and useful predictor of disease progression. A BMI of <18.5 kg/m2 is consistently and strongly associated with increased risk of disease progression and may prove to be a valuable indicator of the need for HAART.

Haemoglobin

Haemoglobin levels reflect the rapidity of disease progression and independently predict prognosis across demographically diverse cohorts [151, 153]. Rates of haemoglobin decrease also correlate with falling CD4 counts [135, 141].

There have been suggestions that increases in haemoglobin are predictive of treatment success when combined with a TLC increase [143]. While racial variation in normal haemoglobin ranges and the side effects of antiretroviral agents such as zidovudine on the HIV infected bone marrow must be taken into account [144], monitoring haemoglobin levels shows utility in predicting disease progression both before and following HAART initiation.

Conclusion

The evolution of HIV infection from the fusion of the first virion with a CD4+ T-cell to AIDS and death is influenced by a multitude of interacting factors. However, in gaining an understanding of the prognostic significance of just a few of these elements it may be possible to improve the management and long-term outcome for individuals. Host factors, although unalterable, remain important in considering the prognosis of the patient and guiding therapeutic regimens. Furthermore, research into host-virus interactions has great potential to enhance the development of new therapeutic strategies.

Immunological parameters such as levels of CD38 expression and the diversity of HIV-specific cytotoxic lymphocyte responses allow insight into the levels of autologous control of the virus. Virological monitoring, including drug resistance surveillance, will continue to play a considerable role in the management of HIV infection. Additionally, as access to antiretroviral therapy improves around the world, the utility of, and need for, low-cost readily available markers of disease is evident. As with any illness of such magnitude, it is clear that a multitude of factors must be taken into account in order to ensure optimum quality of life and treatment results.

Appendix 1

i The "Concerted Action of Seroconversion to AIDS and Death in Europe" (CASCADE) collaboration includes cohorts in France, Germany, Italy, Spain, Greece, Netherlands, Denmark, Norway, UK, Switzerland, Australia and Canada.

ii The "TREAT Asia HIV Observational Database" (TAHOD) contains observational information collected from 11 sites in the Asia-Pacific region, encompassing groups from Australia, India, the Philippines, Malaysia, China, Singapore and Thailand.

References

Fauci AS, Lane HC: Human Immunodeficiency Virus Disease: AIDS and Related Disorders. Harrison's Principles of Internal Medicine. Edited by: Kasper DL, Braunwald E, Fauci AS, Hauser SL, Longo DL, Jameson JL, Isselbacher KJ. 2005, McGraw-Hill, 1: 1076-1139. 16th edition, Chapter 173.

Murray PR, Rosenthal KS, Pfaller MA: Medical Microbiology. 2005, 1: 963-. Philadelphia, USA, Elsevier Mosby, 5th edition.

Miedema F: T cell dynamics and protective immunity in HIV infection: a brief history of ideas. Current Opinion in HIV & AIDS. 2006, 1 (1): 1-2.

Pantaleo G, Fauci AS: Immunopathogenesis of HIV infection. Annual Review of Microbiology. 1996, 50 (1): 825-854. 10.1146/annurev.micro.50.1.825

Osmond DH: Figure 1. Generalized time course of HIV infection and disease. Edited by: HIV EDP. 1998, Modified from: Centers for Disease Control and Prevention. Report of the NIH Panel to Define Principles of Therapy of HIV Infection and Guidelines for the Use of Antiretroviral Agents in HIV-Infected Adults and Adolescents. MMWR 1998;47(No. RR-5): Figure 1, page 34. HIV InSite Knowledge Base Chapter.

Bartlett JG, Lane HC: Guidelines for the use of Antiretroviral Drugs in HIV-1-Infected Adults and Adolescents. Clinical Guidelines for the Treatment and Management of HIV Infection. Edited by: Panel on Clinical Practices for Treatment of HIV Infection. 2005, 1-118. USA, Department of Health and Human Services.

Stebbing J, Gazzard B, Douek DC: Mechanisms of disease - Where does HIV live?. New England Journal of Medicine. 2004, 350 (18): 1872-1880. 10.1056/NEJMra032395

Chinen J, Shearer WT: Molecular virology and immunology of HIV infection. Journal of Allergy and Clinical Immunology. 2002, 110 (2): 189-198. 10.1067/mai.2002.126226.

Phillips AN, Lundgren JD: The CD4 lymphocyte count and risk of clinical progression. Current Opinion in HIV & AIDS. 2006, 1 (1): 43-49.

CASCADE collaboration: Short-term risk of AIDS according to current CD4 cell count and viral load in antiretroviral drug-naive individuals and those treated in the monotherapy era. AIDS. 2004, 18 (1): 51-58. 10.1097/00002030-200401020-00006

Zhou J, Kumarasamy N: Predicting short-term disease progression among HIV-infected patients in Asia and the Pacific region: preliminary results from the TREAT Asia HIV Observational Database (TAHOD). HIV Medicine. 2005, 6 (3): 216-223. 10.1111/j.1468-1293.2005.00292.x

de Wolf F, Spijkerman I, Schellekens P, Langendam M, Kuiken C, Bakker M, Roos M, Coutinho R, Miedema F, Goudsmit J: AIDS prognosis based on HIV-1 RNA, CD4+ T-cell count and function: markers with reciprocal predictive value over time after seroconversion. AIDS. 1997, 11 (15): 1799-1806. 10.1097/00002030-199715000-00003

Lepri AC, Katzenstein TL, Ullum H, Phillips AN, Skinhoj P, Gerstoft J, Pedersen B: The relative prognostic value of plasma HIV RNA levels and CD4 lymphocyte counts in advanced HIV infection. AIDS. 1998, 12 (13): 1639-1643. 10.1097/00002030-199813000-00011

Hogg RS, Yip B, Chan KJ, Wood E, Craib KJP, O'Shaughnessy MV, Montaner JSG: Rates of Disease Progression by Baseline CD4 Cell Count and Viral Load After Initiating Triple-Drug Therapy. JAMA. 2001, 286 (20): 2568-2577. 10.1001/jama.286.20.2568

Goujard C, Bonarek M, Meyer L, Bonnet F, Chaix ML, Deveau C, Sinet M, Galimand J, Delfraissy JF, Venet A, Rouzioux C, Morlat P: CD4 cell count and HIV DNA level are independent predictors of disease progression after primary HIV type 1 infection in untreated patients. Clinical Infectious Diseases. 2006, 42 (5): 709-715. 10.1086/500213

Moore RD, Chaisson RE: Natural history of HIV infection in the era of combination antiretroviral therapy. AIDS. 1999, 13 (14): 1933-1942. 10.1097/00002030-199910010-00017

Duncombe C, Kerr SJ, Ruxrungtham K, Dore GJ, Law MG, Emery S, Lange JA, Phanuphak P, Cooper DA: HIV disease progression in a patient cohort treated via a clinical research network in a resource limited setting. Aids. 2005, 19 (2): 169-178. 10.1097/00002030-200501280-00009

Grabar S, Moing VL, Goujard C, Leport C, Kazatchkine MD, Costagliola D, Weiss L: Clinical Outcome of Patients with HIV-1 Infection according to Immunologic and Virologic Response after 6 Months of Highly Active Antiretroviral Therapy. Ann Intern Med. 2000, 133 (6): 401-410.

van Leth F, Andrews S, Grinsztejn B, Wilkins E, Lazanas MK, Lange JMA, Montaner J: The effect of baseline CD4 cell count and HIV-1 viral load on the efficacy and safety of nevirapine or efavirenz-based first-line HAART. Aids. 2005, 19 (5): 463-471. 10.1097/01.aids.0000162334.12815.5b

Battegay M, Nuesch R, Hirschel B, Kaufmann GR: Immunological recovery and antiretroviral therapy in HIV-1 infection. Lancet Infectious Diseases. 2006, 6 (5): 280-287. 10.1016/S1473-3099(06)70463-7

Ogg GS, Jin X, Bonhoeffer S, Dunbar PR, Nowak MA, Monard S, Segal JP, Cao Y, Rowland-Jones SL, Cerundolo V, Hurley A, Markowitz M, Ho DD, Nixon DF, McMichael AJ: Quantitation of HIV-1-specific cytotoxic T lymphocytes and plasma load of viral RNA. Science. 1998, 279 (5359): 2103-2106. 10.1126/science.279.5359.2103

Keoshkerian E, Ashton LJ, Smith DG, Ziegler JB, Kaldor JM, Cooper DA, Stewart GJ, Ffrench RA: Effector HIV-specific cytotoxic T-lymphocyte activity in long-term nonprogressors: Associations with viral replication and progression. Journal of Medical Virology. 2003, 71 (4): 483-491. 10.1002/jmv.10525

Oxenius A, Price DA, Hersberger M, Schlaepfer E, Weber R, Weber M, Kundig TM, Böni J, Joller H, Phillips RE, Flepp M, Opravil M, Speck RF: HIV-specific cellular immune response is inversely correlated with disease progression as defined by decline of CD4+ T cells in relation to HIV RNA load. The journal of infectious diseases. 2004, 189 (7): 1199-1208. 10.1086/382028

Chouquet C, Autran B, Gomard E, Bouley JM, Calvez V, Katlama C, Costagliola D, Rivière Y: Correlation between breadth of memory HIV-specific cytotoxic T cells, viral load and disease progression in HIV infection. AIDS. 2002, 16 (18): 2399-2407. 10.1097/00002030-200212060-00004

Macias J, Leal M, Delgado J, Pineda JA, Munoz J, Relimpio F, Rubio A, Rey C, Lissen E: Usefulness of route of transmission, absolute CD8+T-cell counts, and levels of serum tumor necrosis factor alpha as predictors of survival of HIV-1-infected patients with very low CD4+T-cell counts. European Journal of Clinical Microbiology & Infectious Diseases. 2001, 20 (4): 253-259.

Bonnet F, Thiebaut R, Chene G, Neau D, Pellegrin JL, Mercie P, Beylot J, Dabis F, Salamon R, Morlat P: Determinants of clinical progression in antiretroviral-naive HIV-infected patients starting highly active antiretroviral therapy. Aquitaine Cohort, France, 1996-2002. Hiv Medicine. 2005, 6 (3): 198-205. 10.1111/j.1468-1293.2005.00290.x

Hazenberg MD, Otto SA, van Benthem BHB, Roos MTL, Coutinho RA, Lange JMA, Hamann D, Prins M, Miedema F: Persistent immune activation in HIV-1 infection is associated with progression to AIDS. Aids. 2003, 17 (13): 1881-1888. 10.1097/00002030-200309050-00006

Brenchley JM, Price DA, Schacker TW, Asher TE, Silvestri G, Rao S, Kazzaz Z, Bornstein E, Lambotte O, Altmann D, Blazar BR, Rodriguez B, Teixeira-Johnson L, Landay A, Martin JN, Hecht FM, Picker LJ, Lederman MM, Deeks SG, Douek DC: Microbial translocation is a cause of systemic immune activation in chronic HIV infection. Nature Medicine. 2006, 12 (12): 1365-1371. 10.1038/nm1511

Kaushik S, Vajpayee M, Sreenivas V, Seth P: Correlation of T-lymphocyte subpopulations with immunological markers in HIV-1-infected Indian patients. Clinical Immunology. 2006, 119 (3): 330-338. 10.1016/j.clim.2005.12.014

Scriba TJ, Zhang HT, Brown HL, Oxenius A, Tamm N, Fidler S, Fox J, Weber JN, Klenerman P, Day CL, Lucas M, Phillips RE: HIV-1–specific CD4+ T lymphocyte turnover and activation increase upon viral rebound. Journal of Clinical Investigation. 2005, 115 (2): 443-450. 10.1172/JCI200523084

Deeks SG, Walker BD: The immune response to AIDS virus infection: good, bad or both?. Journal of Clinical Investigation. 2004, 113 (6): 808-810. 10.1172/JCI200421318

Benito JM, Lopez M, Lozano S, Ballesteros C, Martinez P, Gonzalez-Lahoz J, Soriano V: Differential upregulation of CD38 on different T-cell subsets may influence the ability to reconstitute CD4(+) T cells under successful highly active antiretroviral therapy. Jaids-Journal of Acquired Immune Deficiency Syndromes. 2005, 38 (4): 373-381. 10.1097/01.qai.0000153105.42455.c2.

Benito JM, Lopez M, Lozano S, Martinez P, Gonzalez-Lahoz J, Soriano V: CD38 expression on CD8(+) T lymphocytes as a marker of residual virus replication in chronically HIV-infected patients receiving antiretroviral therapy. Aids Research and Human Retroviruses. 2004, 20 (2): 227-233. 10.1089/088922204773004950.

Giorgi JV, Hultin LE, McKeating JA, Johnson TD, Owens B, Jacobson LP, Shih R, Lewis J, Wiley DJ, Phair JP, Wolinsky SM, Detels R: Shorter survival in advanced human immunodeficiency virus type 1 infection is more closely associated with T lymphocyte activation than with plasma virus burden or virus chemokine coreceptor usage. Journal of Infectious Diseases. 1999, 179 (4): 859-870. 10.1086/314660

Eggena MP, Barugahare B, Okello M, Mutyala S, Jones N, Ma YF, Kityo C, Mugyenyi P, Cao H: T cell activation in HIV-seropositive Ugandans: Differential associations with viral load, CD4(+) T cell depletion, and coinfection. Journal of Infectious Diseases. 2005, 191 (5): 694-701. 10.1086/427516

Deeks SG, Kitchen CMR, Liu L, Guo H, Gascon R, Narvaez AB, Hunt P, Martin JN, Kahn JO, Levy J, McGrath MS, Hecht FM: Immune activation set point during early HIV infection predicts subsequent CD4(+) T-cell changes independent of viral load. Blood. 2004, 104 (4): 942-947. 10.1182/blood-2003-09-3333

Wilson CM, Ellenberg JH, Douglas SD, Moscicki AB, Holland CA: CD8(+)CD38(+) T cells but not HIV type 1 RNA viral load predict CD4(+) T cell loss in a predominantly minority female HIV+ adolescent population. Aids Research and Human Retroviruses. 2004, 20 (3): 263-269. 10.1089/088922204322996482.

Connolly NC, Ridder SA, Rinaldo CR: Proinflammatory cytokines in HIV disease - a review and rationale for new therapeutic approaches. AIDS Reviews. 2005, 7: 168-180.

Spear GT, Alves MEAF, Cohen MH, Bremer J, Landay AL: Relationship of HIV RNA and cytokines in saliva from HIV-infected individuals. FEMS Immunology and Medical Microbiology. 2005, 45: 129-136. 10.1016/j.femsim.2005.03.002.

Majumder B, Janket ML, Schafer EA, Schaubert K, Huang XL, Kan-Mitchell J, Rinaldo J, Ayyavoo V: Human Immunodeficiency Virus Type 1 Vpr Impairs Dendritic Cell Maturation and T-Cell Activation: Implications for Viral Immune Escape. Journal of Virology. 2005, 79 (13): 7990-8003. 10.1128/JVI.79.13.7990-8003.2005.

Fahey JL, Taylor JM, Detels R, Hofmann B, Melmed R, Nishanian P, Giorgi JV: The prognostic value of cellular and serologic markers in infection with human immunodeficiency virus type 1. N Engl J Med. 1990, 322 (3): 166-172.

Fahey JL, Taylor JMG, Manna B, Nishanian P, Aziz N, Giorgi JV, Detels R: Prognostic significance of plasma markers of immune activation, HIV viral load and CD4 T-cell measurements. AIDS. 1998, 12 (13): 1581-1590. 10.1097/00002030-199813000-00004

Mildvan D, Spritzler J, Grossberg SE, Fahey JL, Johnston DM, Schock BR, Kagan J: Serum neopterin, an immune activation marker, independently predicts disease progression in advanced HIV-1 infection. Clinical Infectious Diseases. 2005, 40 (6): 853-858. 10.1086/427877

Shi MG, Taylor JMG, Fahey JL, Hoover DR, Munoz A, Kingsley LA: Early levels of CD4, neopterin, and beta(2)-microglobulin indicate future disease progression. Journal of Clinical Immunology. 1997, 17 (1): 43-52. 10.1023/A:1027336428736

Zangerle R, Steinhuber S, Sarcletti M, Dierich MP, Wachter H, Fuchs D, Most J: Serum HIV-1 RNA levels compared to soluble markers of immune activation to predict disease progression in HIV-1-infected individuals. International Archives of Allergy and Immunology. 1998, 116 (3): 228-239. 10.1159/000023949.

Stein DS, Lyles RH, Graham NMH, Tassoni CJ, Margolick JB, Phair JP, Rinaldo C, Detels R, Saah A, Bilello J: Predicting clinical progression or death in subjects with early-stage human immunodeficiency virus (HIV) infection: A comparative analysis of quantification of HIV RNA, soluble tumor necrosis factor type II receptors, neopterin, and beta(2)-microglobulin. Journal of Infectious Diseases. 1997, 176 (5): 1161-1167.

O'Brien WA, Hartigan PM, Martin D, Esinhart J, Hill A, Benoit S, Rubin M, Simberkoff MS, Hamilton JD: Changes in Plasma HIV-1 RNA and CD4+ Lymphocyte Counts and the Risk of Progression to AIDS. N Engl J Med. 1996, 334 (7): 426-431. 10.1056/NEJM199602153340703

Mellors JW, Munoz A, Giorgi JV, Margolick JB, Tassoni CJ, Gupta P, Kingsley LA, Todd JA, Saah AJ, Detels R, Phair JP, Rinaldo CR: Plasma Viral Load and CD4+ Lymphocytes as Prognostic Markers of HIV-1 Infection. Annals of Internal Medicine. 1997, 126 (12): 946-954.

Hammer S, WHO Guidelines Development Group: Antiretroviral therapy for HIV infection in Adults and Adolescents in resource-limited settings: Towards Universal Access Recommendations for a public health approach (2006 revision). Antiretroviral therapy for HIV infection in Adults and Adolescents in resource-limited settings: Towards Universal Access. Edited by: Hammer S. 2006, Geneva, WHO.

Gazzard B, BHIVA writing committee: British HIV Association (BHIVA) guidelines for the treatment of HIV-infected adults with antiretroviral therapy (2005). HIV medicine. 2005, 6 (Supplement 2): 1-61. 10.1111/j.1468-1293.2005.0311b.x.

Bhatia R, Narain JP: Guidelines for HIV Diagnosis and Monitoring of Antiretroviral Therapy - South East Asia Regional Branch. World Health Organisation Publications. 2005

Lyles RH, Munoz A, Yamashita TE, Bazmi H, Detels R, Rinaldo CR, Margolick JB, Phair JP, Mellors JW: Natural history of human immunodeficiency virus type 1 viremia after seroconversion and proximal to AIDS in a large cohort of homosexual men. Journal of Infectious Diseases. 2000, 181 (3): 872-880. 10.1086/315339

Thiebaut R, Pellegrin I, Chene G, Viallard JF, Fleury H, Moreau JF, Pellegrin JL, Blanco P: Immunological markers after long-term treatment interruption in chronically HIV-1 infected patients with CD4 cell count above 400 x 10(6) cells/l. Aids. 2005, 19 (1): 53-61.

Arnaout RA, Lloyd AL, O'Brien TR, Goedert JJ, Leonard JM, Nowak MA: A simple relationship between viral load and survival time in HIV-1 infection. Proceedings of the National Academy of Sciences of the United States of America. 1999, 96 (20): 11549-11553. 10.1073/pnas.96.20.11549

Arduino JM, Fischl MA, Stanley K, Collier AC, Spiegelman D: Do HIV type 1 RNA levels provide additional prognostic value to CD4(+) T lymphocyte counts in patients with advanced HIV type 1 infection?. Aids Research and Human Retroviruses. 2001, 17 (12): 1099-1105. 10.1089/088922201316912709.

Ledergerber B: Predictors of trend in CD4-positive T-cell count and mortality among HIV-1-infected individuals with virological failure to all three antiretroviral-drug classes. The Lancet. 2004, 364 (9428): 51-62. 10.1016/S0140-6736(04)16589-6.

Touloumi G, Hatzakis A, Rosenberg PS, O'Brien TR, Goedert JJ: Effects of age at seroconversion and baseline HIV RNA level on the loss of CD4+ cells among persons with hemophilia. Aids. 1998, 12 (13): 1691-1697. 10.1097/00002030-199813000-00018

Ho DD: Dynamics of HIV-1 replication in vivo. Journal of Clinical Investigation. 1997, 99 (11): 2565-2567.

Hubert JB, Burgard M, Dussaix E, Tamalet C, Deveau C, Le Chenadec J, Chaix ML, Marchadier E, Vilde JL, Delfraissy JF, Meyer L, Rouzioux C: Natural history of serum HIV-1 RNA levels in 330 patients with a known date of infection. Aids. 2000, 14 (2): 123-131. 10.1097/00002030-200001280-00007

Relucio K, Holodniy M: HIV-1 RNA and viral load. Clinics in Laboratory Medicine. 2002, 22 (3): 593-+. 10.1016/S0272-2712(02)00008-2

Bajaria SH, Webb G, Cloyd M, Kirschner D: Dynamics of naive and memory CD4(+) T lymphocytes in HIV-1 disease progression. Journal of Acquired Immune Deficiency Syndromes. 2002, 30 (1): 41-58. 10.1097/00126334-200205010-00006

CASCADE collaboration, Babiker A, Darby S, De Angelis D, Kwart D, Porter K, Beral V, Darbyshire J, Day N, Gill N, Coutinho R, Prins M, van Benthem B, Coutinho R, Dabis F, Marimoutou C, Ruiz I, Tusell J, Altisent C, Evatt B, Jaffe H, Kirk O, Pedersen C, Rosenberg P, Goedert J, Biggar R, Melbye M, Brettie R, Downs A, Hamouda O, Touloumi G, Karafoulidou A, Katsarou O, Donfield S, Gomperts E, Hilgartner M, Hoots K, Schoenbaum E, Beral V, Zangerle R, Del Amo J, Pezzotti P, Rezza G, Hutchinson S, Day N, De Angelis D, Gore S, Kingsley L, Schrager L, Rosenberg P, Goedert J, Melnick S, Koblin B, Eskild A, Bruun J, Sannes M, Evans B, Lepri AC, Sabin C, Buchbinder S, Vittinghoff E, Moss A, Osmond D, Winkelstein W, Goldberg D, Boufassa F, Meyer L, Egger M, Francioli P, Rickenbach M, Cooper D, Tindall B, Sharkey T, Vizzard J, Kaldor J, Cunningham P, Vanhems P, Vizzard J, Kaldor J, Learmont J, Farewell V, Berglund O, Mosley J, Operskalski E, van den Berg M, Metzger D, Tobin D, Woody G, Rusnak J, Hendrix C, Garner R, Hawkes C, Renzullo P, Garland F, Darby S, Ewart D, Giangrande P, Lee C, Phillips A, Spooner R, Wilde J, Winter M, Babiker A, Darbyshire J, Evans B, Gill N, Johnson A, Phillips A, Porter K, Lorenzo JI, Schechter M: Time from HIV-1 seroconversion to AIDS and death before widespread use of highly-active antiretroviral therapy: a collaborative re-analysis. Lancet. 2000, 355 (9210): 1131-1137. 10.1016/S0140-6736(00)02061-4

Desquilbet L, Goujard C, Rouzioux C, Sinet M, Deveau C, Chaix ML, Sereni D, Boufassa F, Delfraissy JF, Meyer L: Does transient HAART during primary HIV-1 infection lower the virological set-point?. Aids. 2004, 18 (18): 2361-2369.

Olsen CH, Gatell J, Ledergerber B, Katlama C, Friis-Moller N, Weber J, Horban A, Staszewski S, Lundgren JD, Phillips AN, for the EuroSIDA Study Group: Risk of AIDS and death at given HIV-RNA and CD4 cell count, in relation to specific antiretroviral drugs in the regimen. AIDS. 2005, 19 (3): 319-330.

Raboud JM, Rae S, Hogg RS, Yip B, Sherlock CH, Harrigan PR, O'Shaughnessy MV, Montaner JS: Suppression of plasma virus load below the detection limit of a human immunodeficiency virus kit is associated with longer virologic response than suppression below the limit of quantitation. The journal of infectious diseases. 1999, 180 (4): 1347-1350. 10.1086/314998

Raboud JM, Montaner JSG, Conway B, Rae S, Reiss P, Vella S, Cooper D, Lange O, Harris M, Wainberg MA, Robinson P, Myers M, Hall D: Suppression of plasma viral load below 20 copies/ml is required to achieve a long-term response to therapy. Aids. 1998, 12 (13): 1619-1624. 10.1097/00002030-199813000-00008

Kempf DJ, Rode RA, Xu Y, Sun E, Heath-Chiozzi ME, Valdes J, Japour AJ, Danner S, Boucher C, Molla A, Leonard JM: The duration of viral suppression during protease inhibitor therapy for HIV-1 infection is predicted by plasma HIV-1 RNA at the nadir. AIDS. 1998, 12 (5): F9-F14. 10.1097/00002030-199805000-00001

Tarwater PM, Gallant JE, Mellors JW, Gore ME, Phair JP, Detels R, Margolick JB, Munoz A: Prognostic value of plasma HIV RNA among highly active antiretroviral therapy users. Aids. 2004, 18 (18): 2419-2423.

Raffanti SP, Fusco JS, Sherrill BH, Hansen NI, Justice AC, D' Aquila R, Mangialardi WJ, Fusco GP: Effect of persistent moderate viremia on disease progression during HIV therapy. Jaids-Journal of Acquired Immune Deficiency Syndromes. 2004, 37 (1): 1147-1154. 10.1097/01.qai.0000136738.24090.d0.

Murri R, Lepri AC, Cicconi P, Poggio A, Arlotti M, Tositti G, Santoro D, Soranzo ML, Rizzardini G, Colangeli V, Montroni M, Monforte AD: Is moderate HIV viremia associated with a higher risk of clinical progression in HIV-Infected people treated with highly active Antiretroviral therapy - Evidence from the Italian Cohort of Antiretroviral-Naive Patients Study. Jaids-Journal of Acquired Immune Deficiency Syndromes. 2006, 41 (1): 23-30. 10.1097/01.qai.0000188337.76164.7a.

Nettles RE, Kieffer TL, Kwon P, Monie D, Han YF, Parsons T, Cofrancesco J, Gallant JE, Quinn TC, Jackson B, Flexner C, Carson K, Ray S, Persaud D, Siliciano RF: Intermittent HIV-1 viremia (blips) and drug resistance in patients receiving HAART. Jama-Journal of the American Medical Association. 2005, 293 (7): 817-829. 10.1001/jama.293.7.817.

Havlir DV, Bassett R, Levitan D, Gilbert P, Tebas P, Collier AC, Hirsch MS, Ignacio C, Condra J, Gunthard HF, Richman DD, Wong JK: Prevalence and Predictive Value of Intermittent Viremia With Combination HIV Therapy. JAMA. 2001, 286 (2): 171-179. 10.1001/jama.286.2.171

Martinez V, Marcelin AG, Morini JP, Deleuze J, Krivine A, Gorin I, Yerly S, Perrin L, Peytavin G, Calvez V, Dupin N: HIV-1 intermittent viraemia in patients treated by non-nucleoside reverse transcriptase inhibitor-based regimen. AIDS. 2005, 19 (10): 1065-1069. 10.1097/01.aids.0000174453.55627.de

Siliciano JD, Kajdas J, Finzi D, Quinn TC, Chadwick K, Margolick JB, Kovacs C, Gange SJ, Siliciano RF: Long-term follow-up studies confirm the stability of the latent reservoir for HIV-1 in resting CD4(+) T cells. Nature Medicine. 2003, 9 (6): 727-728. 10.1038/nm880

Haggerty CM, Pitt E, Siliciano RF: The latent reservoir for HIV-1 in resting CD4+ T cells and other viral reservoirs during chronic infection: insights from treatment and treatment-interruption trials. Current Opinion in HIV & AIDS. 2006, 1 (1): 62-68.

Siliciano JD, Siliciano RF: The latent reservoir for HIV-1 in resting CD4+ T cells: a barrier to cure. Current Opinion in HIV and AIDS. 2006, 1 (2): 121-128.

Chun TW, Nickle DC, Justement JS, Large D, Semerjian A, Curlin ME, O'Shea MA, Hallahan CW, Daucher M, Ward DJ, Moir S, Mullins JI, Kovacs C, Fauci AS: HIV-infected individuals receiving effective antiviral therapy for extended periods of time continually replenish their viral reservoir. The journal of clinical investigation. 2005, 115 (11): 3250-3255. 10.1172/JCI26197

Anton PA, Mitsuyasu RT, Deeks SG, Scadden DT, Wagner B, Huang C, Macken C, Richman DD, Christopherson C, Borellini F, Lazar R, Hege KM: Multiple measures of HIV burden in blood and tissue are correlated with each other but not with clinical parameters in aviremic subjects. AIDS. 2003, 17 (1): 53-63. 10.1097/00002030-200301030-00008

Vitone F, Gibellini D, Schiavone P, Re MC: Quantitative DNA proviral detection in HIV-1 patients treated with antiretroviral therapy. Journal of Clinical Virology. 2005, 33 (3): 194-200.

Vray M, Meynard JL, Dalban C, Morand-Joubert L, Clavel F, Brun-Vezinet F, Peytavin G, Costagliola D, Girard PM, Group NT: Predictors of the virological response to a change in the antiretroviral treatment regimen in HIV-1-infected patients enrolled in a randomized trial comparing genotyping, phenotyping and standard of care (Narval trial, ANRS 088). Antiviral therapy. 2003, 8 (5): 427-434.

Rodes B, Garcia F, Gutierrez C, Martinez-Picado J, Aguilera A, Saumoy M, Vallejo A, Domingo P, Dalmau D, Ribas MA, Blanco JL, Pedreira J, Perez-Elias MJ, Leal M, de Mendoza C, Soriano V: Impact of drug resistance genotypes on CD4+counts and plasma viremia in heavily antiretroviral-experienced HIV-infected patients. Journal of Medical Virology. 2005, 77 (1): 23-28. 10.1002/jmv.20395

Deeks SG: Determinants of virological response to antiretroviral therapy: Implications for long-term strategies. Clinical Infectious Diseases. 2000, 30: S177-S184. 10.1086/313855

Lucas GM: Antiretroviral adherence, drug resistance, viral fitness and HIV disease progression: a tangled web is woven. Journal of Antimicrobial Chemotherapy. 2005, 55 (4): 413-416. 10.1093/jac/dki042

Deeks SG: Treatment of antiretroviral-drug-resistant HIV-1 infection. Lancet. 2003, 362 (9400): 2002-2011. 10.1016/S0140-6736(03)15022-2

Vandamme AM, Sonnerborg A, Ait-Khaled M, Albert J, Asjo B, Bacheler L, Banhegyi D, Boucher C, Brun-Vezinet F, Camacho R, Clevenbergh P, Clumeck N, Dedes N, De Luca A, Doerr HW, Faudon JL, Gatti G, Gerstoft J, Hall WW, Hatzakis A, Hellmann N, Horban A, Lundgren JD, Kempf D, Miller M, Miller V, Myers TW, Nielsen C, Opravil M, Palmisano L, Perno CF, Phillips A, Pillay D, Pumarola T, Ruiz L, Salminen M, Schapiro J, Schmidt B, Schmit JC, Schuurman R, Shulse E, Soriano V, Staszewski S, Vella S, Youle M, Ziermann R, Perrin L: Updated European recommendations for the clinical use of HIV drug resistance testing. Antiviral Therapy. 2004, 9 (6): 829-848.

Oette M, Kaiser R, Daumer M, Petch R, Fatkenhetter G, Carls H, Rockstroh JK, Schmaloer D, Stechel J, Feldt T, Pfister H, Haussinger D: Primary HIV drug resistance and efficacy of first-line antiretroviral therapy guided by resistance testing. Jaids-Journal of Acquired Immune Deficiency Syndromes. 2006, 41 (5): 573-581. 10.1097/01.qai.0000214805.52723.c1.

Shet A, Berry L, Mohri H, Mehandru S, Chung C, Kim A, Jean-Pierre P, Hogan C, Simon V, Boden D, Markowitz MT: Tracking the prevalence of transmitted antiretroviral drug-resistant HIV-1 - A decade of experience. Jaids-Journal of Acquired Immune Deficiency Syndromes. 2006, 41 (4): 439-446. 10.1097/01.qai.0000219290.49152.6a.

Wensing AMJ, van de Vijver DA, Angarano G, Asjo B, Balotta C, Boeri E, Camacho R, Chaix ML, Costagliola D, De Luca A, Derdelinckx I, Grossman Z, Hamouda O, Hatzakis A, Hemmer R, Hoepelman A, Horban A, Korn K, Kucherer C, Leitner T, Loveday C, MacRae E, Maljkovic I, de Mendoza C, Meyer L, Nielsen C, de Coul ELO, Ormaasen V, Paraskevis D, Perrin L, Puchhammer-Stockl E, Ruiz L, Salminen M, Schmit JC, Schneider F, Schuurman R, Soriano V, Stanczak G, Stanojevic M, Vandamme AM, Van Laethem K, Violin M, Wilbe K, Yerly S, Zazzi M, Boucher CA: Prevalence of drug-resistant HIV-1 variants in untreated individuals in Europe: Implications for clinical management. Journal of Infectious Diseases. 2005, 192 (6): 958-966. 10.1086/432916

Jourdain G, Ngo-Giang-Huong N, Le Coeur S, Bowonwatanuwong C, Kantipong P, Leechanachai P, Ariyadej S, Leenasirimakul P, Hammer S, Lallemant M: Intrapartum exposure to nevirapine and subsequent maternal responses to nevirapine-based antiretroviral therapy. New England Journal of Medicine. 2004, 351 (3): 229-240. 10.1056/NEJMoa041305

Melby T, DeSpirito M, DeMasi R, Heilek-Snyder G, Greenberg ML, Graham N: HIV-1 coreceptor use in triple-class treatment experienced patients: Baseline prevalence, correlates, and relationship to enfuvirtide response. Journal of Infectious Diseases. 2006, 194 (2): 238-246. 10.1086/504693

Daar ES, Lynn HS, Donfield SM, Lail A, O'Brien SJ, Huang W, Winkler CA: Stromal cell-derived factor-1 genotype, coreceptor tropism, and HIV type 1 disease progression. Journal of Infectious Diseases. 2005, 192 (9): 1597-1605. 10.1086/496893

Brumme ZL, Goodrich J, Mayer HB, Brumme CJ, Henrick BM, Wynhoven B, Asselin JJ, Cheung PK, Hogg RS, Montaner JSG, Harrigan PR: Molecular and clinical epidemiology of CXCR4-using HIV-1 in a large population of antiretroviral-naive individuals. Journal of Infectious Diseases. 2005, 192 (3): 466-474. 10.1086/431519

Kaslow RA, Dorak T, Tang J: Influence of host genetic variation on susceptibility to HIV type 1 infection. Journal of Infectious Diseases. 2005, 191: S68-S77. 10.1086/425269

Liu HL, Hwangbo Y, Holte S, Lee J, Wang CH, Kaupp N, Zhu HY, Celum C, Corey L, McElrath MJ, Zhu TF: Analysis of genetic polymorphisms in CCR5, CCR2, stromal cell-derived factor-1, RANTES, and dendritic cell-specific intercellular adhesion molecule-3-grabbing nonintegrin in seronegative individuals repeatedly exposed to HIV-1. Journal of Infectious Diseases. 2004, 190 (6): 1055-1058. 10.1086/423209

Hogan CM, Hammer SM: Host determinants in HIV infection and disease - Part 2: Genetic factors and implications for antiretroviral therapeutics. Annals of Internal Medicine. 2001, 134 (10): 978-996.

Delobel P, Sandres-Saune K, Cazabat M, Pasquier C, Marchou B, Massip P, Izopet J: R5 to X4 switch of the predominant HIV-1 population in cellular reservoirs during effective highly active antiretroviral therapy. Journal of Acquired Immune Deficiency Syndromes. 2005, 38 (4): 382-392. 10.1097/01.qai.0000152835.17747.47

Skrabal K, Trouplin V, Labrosse B, Obry V, Damond F, Hance AJ, Clavel F, Mammano F: Impact of antiretroviral treatment on the tropism of HIV-1 plasma virus populations. AIDS. 2003, 17 (6): 809-814. 10.1097/00002030-200304110-00005

Brumme ZL, Dong WWY, Yip B, Wynhoven B, Hoffman NG, Swanstrom R, Jensen MA, Mullins JI, Hogg RS, Montaner JSG, Harrigan PR: Clinical and immunological impact of HIV envelope V3 sequence variation after starting initial triple antiretroviral therapy. AIDS. 2004, 18 (4): F1-F9. 10.1097/00002030-200403050-00001

Geretti AM: HIV-1 subtypes: epidemiology and significance for HIV management. Current Opinion in Infectious Diseases. 2006, 19 (1): 1-7. 10.1097/01.qco.0000200293.45532.68

Rangsin R, Chiu J, Khamboonruang C, Sirisopana N, Eiumtrakul S, Brown AE, Robb M, Beyrer C, Ruangyuttikarn C, Markowitz LE, Nelson KE: The Natural History of HIV-1 Infection in Young Thai Men After Seroconversion. Journal of Acquired Immune Deficiency Syndromes. 2004, 36 (1): 622-629. 10.1097/00126334-200405010-00011

Hu DJ, Vanichseni S, Mastro TD, Raktham S, Young NL, Mock PA, Subbarao S, Parekh BS, Srisuwanvilai L, Sutthent R, Wasi C, Heneine W, Choopanya K: Viral load differences in early infection with two HIV-1 subtypes. AIDS. 2001, 15 (6): 683-691. 10.1097/00002030-200104130-00003

Kaleebu P, French N, Mahe C, Yirrell D, Watera C, Lyagoba F, Nakiyingi J, Rutebemberwa A, Morgan D, Weber J, Gilks C, Whitworth J: Effect of human immunodeficiency virus (HIV) type 1 envelope subtypes A and D on disease progression in a large cohort of HIV-1-positive persons in Uganda. Journal of Infectious Diseases. 2002, 185 (9): 1244-1250. 10.1086/340130

Alaeus A, Lidman K, Bjorkman A, Giesecke J, Albert J: Similar rate of disease progression among individuals infected with HIV-1 genetic subtypes A-D. AIDS. 1999, 13 (8): 901-907. 10.1097/00002030-199905280-00005

Gray L, Newell ML, Cortina-Borja M, Thorne C: Gender and race do not alter early-life determinants of clinical disease progression in HIV-1 vertically infected children - European Collaborative Study. AIDS. 2004, 18 (3): 509-516. 10.1097/00002030-200402200-00018

Stephenson JM, Griffioen A, Woronowski H, Phillips AN, Petruckevitch A, Keenlyside R, Johnson AM, Anderson J, Melville R, Jeffries DJ, Norman J, Barton S, Chard S, Sibley K, Mitchelmore M, Brettle R, Morris S, O'Dornen P, Russell J, Overington-Hickford L, O'Farrell N, Chappell J, Mulcahy RF, Moseley J, Lyons F, Welch J, Graham D, Fadojutimi M, Kitchen V, Wells C, Byrne G, Tobin J, Tucker L, Harindra V, Mercey DE, Allason-Jones E, Campbell L, French R, Johnson MA, Reid A, Farmer D, Saint N, Olaitan A, Madge S, Forster G, Phillips M, Sampson K, Nayagam A, Edlin J, Bradbeer C, de Ruiter A, Hargreaves L, Doyle C: Survival and progression of HIV disease in women attending GUM/HIV clinics in Britain and Ireland. Sexually Transmitted Infections. 1999, 75 (4): 247-252.

Anastos K, Schneider MF, Gange SJ, Minkoff H, Greenblatt RM, Feldman J, Levine A, Delapenha R, Cohen M: The association of race, sociodemographic, and behavioral characteristics with response to highly active antiretroviral therapy in women. Journal of Acquired Immune Deficiency Syndromes. 2005, 39 (5): 537-544.

Anastos K, Gange SJ, Lau B, Weiser B, Detels R, Giorgi JV, Margolick JB, Cohen M, Phair J, Melnick S, Rinaldo CR, Kovacs A, Levine A, Landesman S, Young M, Munoz A, Greenblatt RM: Association of race and gender with HIV-1 RNA levels and immunologic progression. Journal of Acquired Immune Deficiency Syndromes. 2000, 24 (3): 218-226.

Saul J, Erwin J, Sabin CA, Kulasegaram R, Peters BS: The relationships between ethnicity, sex, risk group, and virus load in human immunodeficiency virus type 1 antiretroviral-naive patients. Journal of Infectious Diseases. 2001, 183 (10): 1518-1521. 10.1086/320191

Brown AE, Malone JD, Zhou SY, Lane JR, Hawkes CA: Human immunodeficiency virus RNA levels in US adults: a comparison based upon race and ethnicity. Journal of Infectious Diseases. 1997, 176 (3): 794-797.

Morgan D, Mahe C, Mayanja B, Okongo JM, Lubega R, Whitworth JAG: HIV-1 infection in rural Africa: is there a difference in median time to AIDS and survival compared with that in industrialized countries?. AIDS. 2002, 16 (4): 597-603. 10.1097/00002030-200203080-00011

Julg B, Goebel FD: Susceptibility to HIV/AIDS: An individual characteristic we can measure?. Infection. 2005, 33 (3): 160-162. 10.1007/s15010-005-6305-4

Altfeld M, Addo MA, Rosenberg ES, Hecht FA, Lee PK, Vogel M, Yu XG, Draenert R, Johnston MN, Strick D, Allen TA, Feeney ME, Kahn JO, Sekaly RP, Levy JA, Rockstroh JK, Goulder PJR, Walker BD: Influence of HLA-B57 on clinical presentation and viral control during acute HIV-1 infection. AIDS. 2003, 17 (18): 2581-2591. 10.1097/00002030-200312050-00005

Boffito M, Winston A, Owen A: Host determinants of antiretroviral drug activity. Current Opinion in Infectious Diseases. 2005, 18 (6): 543-549. 10.1097/01.qco.0000191507.48481.10

Phillips EJ: The pharmacogenetics of antiretroviral therapy. Current Opinion in HIV and AIDS. 2006, 1 (3): 249-256.

Haas DW: Pharmacogenomics and HIV therapeutics. Journal of Infectious Diseases. 2005, 191 (9): 1397-1400. 10.1086/429303

Nash D, Katyal M, Shah S: Trends in predictors of death due to HIV-related causes among persons living with AIDS in New York City: 1993-2001. Journal of Urban Health: Bulletin of the New York Academy of Medicine. 2005, 82 (4): 584-600. 10.1093/jurban/jti123

Darby SC, Ewart DW, Giangrande PLF, Spooner RJD, Rizza CR: Importance of age at infection with HIV-1 for survival and development of AIDS in UK haemophilia population. Lancet. 1996, 347 (9015): 1573-1579.

Operskalski EA, Stram DO, Lee H, Zhou Y, Donegan E, Busch MP, Stevens CE, Schiff ER, Dietrich SL, Mosley JW: Human Immunodeficiency Virus Type 1 Infection - Relationship of Risk Group and Age to Rate of Progression to AIDS. Journal of Infectious Diseases. 1995, 172 (3): 648-655.

Geskus RB, Meyer L, Hubert JB, Schuitemaker H, Berkhout B, Rouzioux C, Theodorou ID, Delfraissy JF, Prins M, Coutinho RA: Causal pathways of the effects of age and the CCR5-Delta32, CCR2-64I, and SDF-1 3'A alleles on AIDS development. Journal of Acquired Immune Deficiency Syndromes. 2005, 39 (3): 321-326. 10.1097/01.qai.0000142017.25897.06

CASCADE collaboration, Porter K, Babiker AG, Darbyshire JH, Pezzotti P, Bhaskaran K, Walker AS: Determinants of survival following HIV-1 seroconversion after the introduction of HAART. Lancet. 2003, 362 (9392): 1267-1274. 10.1016/S0140-6736(03)14570-9

Touloumi G, Pantazis N, Babiker AG, Walker SA, Katsarou O, Karafoulidou A, Hatzakis A, Porter K: Differences in HIV RNA levels before the initiation of antiretroviral therapy among 1864 individuals with known HIV-1 seroconversion dates. AIDS. 2004, 18 (12): 1697-1705. 10.1097/01.aids.0000131395.14339.f5

Sterling TR, Vlahov D, Astemborski J, Hoover DR, Margolick JB, Quinn TC: Initial Plasma HIV-1 RNA Levels and Progression to AIDS in Women and Men. New England Journal of Medicine. 2001, 344 (10): 720-725. 10.1056/NEJM200103083441003

Farzadegan H, Hoover DR, Astemborski J, Lyles CM, Margolick JB, Markham RB, Quinn TC, Vlahov D: Sex differences in HIV-1 viral load and progression to AIDS. Lancet. 1998, 352: 1510-1514. 10.1016/S0140-6736(98)02372-1

Donnelly CA, Bartley LM, Ghani AC, Le Fevre AM, Kwong GP, Cowling BJ, van Sighem AI, de Wolf F, Rode RA, Anderson RM: Gender difference in HIV-1 RNA viral loads. HIV Medicine. 2005, 6 (3): 170-178. 10.1111/j.1468-1293.2005.00285.x

Sterling TR, Chaisson RE, Moore RD: HIV-1 RNA, CD4 T-lymphocytes, and clinical response to highly active antiretroviral therapy. AIDS. 2001, 15 (17): 2251-2257. 10.1097/00002030-200111230-00006

Carre N, Deveau C, Belanger F, Boufassa F, Persoz A, Jadand C, Rouzioux C, Delfraissy JF, Bucquet D, Dellamonica P, Gallais H, Dormont J, Lefrere JJ, Cassuto JP, Dupont B, Vittecoq D, Herson S, Gastaut JA, Sereni D, Vilde JL, Brucker G, Katlama C, Sobel A, Duval J, Kazatchine M, Lebras P, Even P, Guillevin L: Effect of Age and Exposure Group on the Onset of AIDS in Heterosexual and Homosexual HIV-Infected Patients. AIDS. 1994, 8 (6): 797-802.

Prins M, Veugelers PJ: Comparison of progression and non-progression in injecting drug users and homosexual men with documented dates of HIV-1 seroconversion. AIDS. 1997, 11 (5): 621-631. 10.1097/00002030-199705000-00010

Rezza G: Determinants of progression to AIDS in HIV-infected individuals: An update from the Italian Seroconversion Study. Journal of Acquired Immune Deficiency Syndromes and Human Retrovirology. 1998, 17: S13-S16.

Ironson G, O'Cleirigh C, Fletcher MA, Laurenceau JP, Balbin E, Klimas N, Schneiderman N, Solomon G: Psychosocial Factors Predict CD4 and Viral Load Change in Men and Women With Human Immunodeficiency Virus in the Era of Highly Active Antiretroviral Treatment. Psychosomatic Medicine. 2005, 67 (6): 1013-1021. 10.1097/01.psy.0000188569.58998.c8

Leserman J, Petitto JM, Golden RN, Gaynes BN, Gu HB, Perkins DO, Silva SG, Folds JD, Evans DL: Impact of stressful life events, depression, social support, coping, and cortisol on progression to AIDS. American Journal of Psychiatry. 2000, 157 (8): 1221-1228. 10.1176/appi.ajp.157.8.1221

Leserman J, Petitto JM, Gu H, Gaynes BN, Barroso J, Golden RN, Perkins DO, Folds JD, Evans DL: Progression to AIDS, a clinical AIDS condition and mortality: psychosocial and physiological predictors. Psychological Medicine. 2002, 32 (6): 1059-1073. 10.1017/S0033291702005949

Ashton E, Vosvick M, Chesney M, Gore-Felton C, Koopman C, O'Shea K, Maldonado J, Bachmann MH, Israelski D, Flamm J, Spiegel D: Social support and maladaptive coping as predictors of the change in physical health symptoms among persons living with HIV/AIDS. AIDS Patient Care and STDs. 2005, 19 (9): 587-598. 10.1089/apc.2005.19.587

Parruti G, Manzoli L, Toro PM, D'Amico G, Rotolo S, Graziani V, Schioppa F, Consorte A, Alterio L, Toro GM, Boyle BA: Long-term adherence to first-line highly active antiretroviral therapy in a hospital-based cohort: Predictors and impact on virologic response and relapse. AIDS Patient Care and STDs. 2006, 20 (1): 48-57. 10.1089/apc.2006.20.48

Blatt SP, Hendrix CW, Butzin CA, Freeman TM, Ward WW, Hensley RE, Melcher GP, Donovan DJ, Boswell RN: Delayed-Type Hypersensitivity Skin Testing Predicts Progression to AIDS in HIV-Infected Patients. Annals of Internal Medicine. 1993, 119 (3): 177-184.

Anastos K, Shi Q, French AL, Levine A, Greenblatt RM, Williams C, DeHovitz J, Delapenha R, Hoover DR: Total lymphocyte count, hemoglobin, and delayed-type hypersensitivity as predictors of death and AIDS illness in HIV-1-infected women receiving highly active antiretroviral therapy. Journal of Acquired Immune Deficiency Syndromes. 2004, 35 (4): 383-392. 10.1097/00126334-200404010-00008

Jones-Lopez EC, Okwera A, Mayanja-Kizza H, Ellner JJ, Mugerwa RD, Whalen CC: Delayed-type hypersensitivity skin test reactivity and survival in HIV-infected patients in Uganda: Should anergy be a criterion to start antiretroviral therapy in low-income countries?. American Journal of Tropical Medicine and Hygiene. 2006, 74 (1): 154-161.

Lange CG, Lederman MM, Madero JS, Medvik K, Asaad R, Pacheko C, Carranza C, Valdez H: Impact of suppression of viral replication by highly active antiretroviral therapy on immune function and phenotype in chronic HIV-1 infection. Journal of Acquired Immune Deficiency Syndromes. 2002, 30 (1): 33-40. 10.1097/00126334-200205010-00005

Lederman HM, Williams PL, Wu JW, Evans TG, Cohn SE, McCutchan JA, Koletar SL, Hafner R, Connick E, Valentine FT, McElrath MJ, Roberts NJ, Currier JS: Incomplete immune reconstitution after initiation of highly active antiretroviral therapy in human immunodeficiency virus-infected patients with severe CD4(+) cell depletion. Journal of Infectious Diseases. 2003, 188 (12): 1794-1803. 10.1086/379900