Abstract

Background

Verbal Autopsy (VA) is widely viewed as the only immediate strategy for registering cause of death in much of Africa and Asia, where routine physician certification of deaths is not widely practiced. VA involves a lay interview with family or friends after a death, to record essential details of the circumstances. These data can then be processed automatically to arrive at standardized cause of death information.

Methods

The Population Health Metrics Research Consortium (PHMRC) undertook a study at six tertiary hospitals in low- and middle-income countries which documented over 12,000 deaths clinically and subsequently undertook VA interviews. This dataset, now in the public domain, was compared with the WHO 2012 VA standard and the InterVA-4 interpretative model.

Results

The PHMRC data covered 70% of the WHO 2012 VA input indicators, and categorized cause of death according to PHMRC definitions. After eliminating some problematic or incomplete records, 11,984 VAs were compared. Some of the PHMRC cause definitions, such as ‘preterm delivery’, differed substantially from the International Classification of Diseases, version 10 equivalent. There were some appreciable inconsistencies between the hospital and VA data, including 20% of the hospital maternal deaths being described as non-pregnant in the VA data. A high proportion of VA cases (66%) reported respiratory symptoms, but only 18% of assigned hospital causes were respiratory-related. Despite these issues, the concordance correlation coefficient between hospital and InterVA-4 cause of death categories was 0.61.

Conclusions

The PHMRC dataset is a valuable reference source for VA methods, but has to be interpreted with care. Inherently inconsistent cases should not be included when using these data to build other VA models. Conversely, models built from these data should be independently evaluated. It is important to distinguish between the internal and external validity of VA models. The effects of using tertiary hospital data, rather than the more usual application of VA to all-community deaths, are hard to evaluate. However, it would still be of value for VA method development to have further studies of population-based post-mortem examinations.

Background

Verbal Autopsy (VA, the practice of interviewing witnesses of a death and processing the information into likely causes of death) is recognized as an important strategy for widening the scope of cause-specific mortality data, particularly in countries where most deaths pass unregistered and unattended by medical professionals. There has been considerable development in recent years of automated procedures for systematically coding VA data into cause of death outcomes, which are seen as a necessary strategy to underpin widespread, rapid and cost-effective approaches to registering cause of death [1].

Inevitably, one of the major challenges to developing fit-for-purpose VA interpretative models is capturing the necessary knowledge in a valid and usable manner. Some models have sought to be entirely based on data, including in some cases the application of machine learning techniques to construct the model’s evidence base [2, 3]. Others have developed systematic approaches to incorporating human medical expertise as the basis of model building [4]. In both cases, there is also an obvious desire to be able to test models with high-quality VA data in order to demonstrate validity in terms of relationships between the input side (VA interview material) and the output side (cause of death assignment) [5, 6].

To meet this need, the Population Health Metrics Research Consortium (PHMRC) undertook a data collection exercise between 2007 and 2010 in four countries (India, Mexico, Tanzania and the Philippines) which documented 12,542 deaths in high-level hospitals where excellent diagnostic facilities were available during final illnesses, and then followed up the deaths with VA interviews [7]. This dataset was dubbed ‘gold standard’ VA data in findings published in 2011. In October 2013 the dataset was released into the public domain. It is examined here in terms of its compatibility with the WHO 2012 VA standard [8, 9], its completeness and quality, and the consistency between the hospital cause of death categorization and the InterVA-4 model (which is exactly compatible with the WHO 2012 VA standard) [10]. The objectives in undertaking these analyses are to arrive at a better understanding of how useful the PHMRC dataset might be for developing general VA methods and to explore the external validity of the dataset.

Methods

The PHMRC public-domain dataset [11] contains tertiary hospital assignments of cause of death and responses to subsequent VA interviews. The data are divided into three sections according to variations in the VA instrument used for the neonatal period (including stillbirths), childhood (one month to eleven years) and adulthood (twelve years and older). Two separate sites were covered in India (Andhra Pradesh and Uttar Pradesh) and Tanzania (Dar es Salaam and Pemba island), with one each in the Philippines (Bohol) and Mexico (Mexico City). The public domain dataset contains slightly fewer cases than were originally reported (12,530 versus 12,542). An overall summary of the data is presented in Table 1 by site, age group and cause of death according to the hospital diagnosis. No hospital data other than the cause of death category are available in the dataset, and the cause categories were pre-defined as previously described [7].

The VAs that were subsequently performed on the PHMRC hospital deaths used a set of standard forms covering each of the three age groups, yielding databases with structures defined in a spreadsheet [12]. In addition, the original VAs contained an open narrative section which is not available in the public domain dataset, but the dataset does include some machine-extracted variables indicating the presence of certain key words within those narratives. However, in the present analyses it was not possible to make use of those key word variables because no clear distinction was made between narratives containing, for example, the word ‘heartbeat’ in the phrases ‘the baby’s heartbeat was normal’ or ‘the baby had no heartbeat’.
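The limitation of the key word variables can be illustrated with a minimal sketch. It assumes that such a variable is a simple presence flag for a word in the narrative; the function name `keyword_flag` is hypothetical and is not part of the PHMRC dataset or codebook.

```python
def keyword_flag(narrative: str, keyword: str) -> int:
    """Return 1 if the keyword occurs anywhere in the narrative, else 0.

    Hypothetical stand-in for a machine-extracted key word variable.
    """
    return int(keyword.lower() in narrative.lower())


# The two example phrases quoted in the text, with opposite clinical meaning.
normal = "the baby's heartbeat was normal"
absent = "the baby had no heartbeat"

flags = [keyword_flag(text, "heartbeat") for text in (normal, absent)]
print(flags)  # the same flag value is produced for both narratives
```

Because both phrases produce the same flag, a presence-only variable cannot be mapped reliably onto a yes/no symptom indicator.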

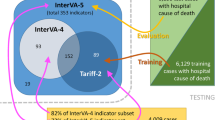

The WHO 2012 VA standard [8] and InterVA-4 [10] define 244 yes/no indicators covering VAs relating to various age and sex groups. A correspondence table between the WHO 2012 indicators and the PHMRC database is available in Additional file 1. Overall 170/244 (69.7%) of the WHO indicators were available in the PHMRC dataset, and these variables were extracted into a dataset for processing using the InterVA-4 model (version 4.02) [13]. Some problems were encountered in the age and sex variables in the PHMRC dataset. Dates of birth and death were recorded in two duplicate sets of variables (g1_01/6 and g5_01/3) as well as duplicate variables for age at death (g1_07 and g5_04). For some individuals there were inconsistencies between these age-related variables, and cases were dropped where these inconsistencies resulted in ambiguities between WHO 2012 age groups. Overall, 494 cases (3.9%) in the PHMRC dataset had no valid age or sex recorded and were excluded from InterVA-4 processing. A further 36 cases (0.3%) reported none of the WHO 2012 symptom indicators and were likewise excluded. Analyses of InterVA-4 outputs are, therefore, based on 11,984 cases.

The InterVA-4 model requires estimates of HIV/AIDS and malaria levels among deaths in what would normally be a population (rather than a group of hospital cases), as described in the User Guide [14]. In the PHMRC study, there was no intention that the deaths recorded were in any way representative of local populations, and so these parameters were set from the overall levels of HIV/AIDS and malaria among the hospital causes of death. In Andhra Pradesh, Dar es Salaam and Uttar Pradesh malaria levels were high (>1% of all deaths) while in the remaining sites malaria levels were very low (<0.01%). Similarly for HIV/AIDS, in Bohol and Pemba HIV/AIDS levels were very low (<0.01%), while in the remaining sites the level was high (>1%). InterVA-4 generates from zero to three most likely causes per case, each with an associated likelihood; cases with zero causes reflect complete uncertainty. The sum of the likelihoods for each case was always taken to be one in these analyses, including, where necessary, an indeterminate cause component.
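The treatment of likelihoods described above can be sketched as follows. This is an illustration under stated assumptions, not the InterVA-4 implementation: the function name `complete_likelihoods`, the label `"indeterminate"` and the example cause names are all hypothetical.

```python
from typing import Dict


def complete_likelihoods(causes: Dict[str, float]) -> Dict[str, float]:
    """Pad zero to three cause likelihoods with an indeterminate share.

    The returned likelihoods sum to one; a case with no assigned causes
    becomes wholly indeterminate.
    """
    if len(causes) > 3:
        raise ValueError("at most three causes per case are expected")
    total = sum(causes.values())
    if total > 1.0 + 1e-9:
        raise ValueError("likelihoods must not exceed one in total")
    completed = dict(causes)
    residual = max(0.0, 1.0 - total)
    if residual > 1e-9:
        # Assumed label for the indeterminate cause component.
        completed["indeterminate"] = residual
    return completed


# Toy cases: no causes at all, and two causes that leave a residual.
no_cause = complete_likelihoods({})
two_causes = complete_likelihoods({"pneumonia": 0.6, "pulmonary TB": 0.3})
```

Completing every case in this way means that each death contributes exactly one unit to any cause-specific mortality fractions derived from the output.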

The WHO 2012 VA standard defines 62 cause of death categories (including stillbirths) with corresponding International Classification of Diseases, 10th revision (ICD-10) codes. These differ in some respects from the PHMRC cause categories, and for the purposes of comparison the correspondence between the WHO and PHMRC categories (34 cause version as previously published [7]) is shown in Table 2. The concordance correlation coefficient between cause-specific mortality fractions (CSMF) was calculated using Stata, according to the method described by Lin [15].
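For readers without access to Stata, the concordance correlation coefficient can be sketched in a few lines of Python. This follows the definition given by Lin [15], using divisors of n for the variances and covariance; it is not the Stata routine used for the analyses here, and the function name `concordance_correlation` is an assumption for illustration. Confidence intervals are not computed.

```python
from typing import Sequence


def concordance_correlation(x: Sequence[float], y: Sequence[float]) -> float:
    """Lin's concordance correlation coefficient for paired values.

    rho_c = 2*s_xy / (s_x^2 + s_y^2 + (mean_x - mean_y)^2),
    with variances and covariance computed using a divisor of n.
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must be paired and contain at least two values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    var_x = sum((a - mean_x) ** 2 for a in x) / n
    var_y = sum((b - mean_y) ** 2 for b in y) / n
    cov_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / n
    denominator = var_x + var_y + (mean_x - mean_y) ** 2
    if denominator == 0:
        raise ValueError("coefficient is undefined for identical constant series")
    return 2 * cov_xy / denominator


# Toy CSMF vectors (not taken from the PHMRC data).
perfect = concordance_correlation([0.1, 0.3, 0.6], [0.1, 0.3, 0.6])
shifted = concordance_correlation([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```

Unlike the Pearson coefficient, this measure penalizes systematic departures from the line of equivalence: the shifted toy series is perfectly correlated but has a concordance of 4/7.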

A separate experiment was carried out using the DHS 2010 Afghanistan national mortality survey [16] database as an independent source, to estimate the effect of losing the missing PHMRC VA variables on the overall mortality cause distribution as determined by InterVA-4. This involved running InterVA-4 separately on a dataset extracted from the DHS and then re-running with the non-PHMRC indicators removed.
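One way of summarizing such a comparison is sketched below. It assumes that each run is reduced to a single most likely cause per case and that the two runs list the same cases in the same order; the function name `reassigned_fraction` and the toy cause labels are hypothetical, and the analysis reported here may have been summarized differently.

```python
from typing import Sequence


def reassigned_fraction(full_run: Sequence[str], reduced_run: Sequence[str]) -> float:
    """Fraction of cases whose most likely cause differs between two runs.

    full_run: cause per case using all available indicators.
    reduced_run: cause per case after removing the non-PHMRC indicators.
    """
    if len(full_run) != len(reduced_run) or not full_run:
        raise ValueError("runs must be non-empty and cover the same cases")
    changed = sum(a != b for a, b in zip(full_run, reduced_run))
    return changed / len(full_run)


# Toy data for four cases (not taken from the DHS dataset).
full = ["pneumonia", "birth asphyxia", "COPD", "diarrhoeal disease"]
reduced = ["pneumonia", "neonatal pneumonia", "asthma", "diarrhoeal disease"]
fraction = reassigned_fraction(full, reduced)
```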

No specific ethical clearance was required for this study, since it only used the public domain anonymized PHMRC VA dataset, the public domain DHS 2010 Afghanistan dataset and the public domain InterVA-4 model.

Results

From the overall 12,530 cases in the PHMRC VA dataset, 11,984 cases met the basic minimum requirements of the WHO 2012 VA standard (valid age, sex and some symptom data). Among these cases, 1,688 (14.1%) of the PHMRC causes of death fell into categories described as ‘Other…’ which do not represent specific causes of death.

Deaths among women aged 12 to 49 accounted for 1,884/11,984 (15.7%) of the cases, and 458 (24.3%) of these deaths were classified as ‘Maternal’ in the PHMRC dataset. However, 17/1,884 (0.9%) of these deaths had contradictory pregnancy status information in the VA data (for example, both pregnant and recently delivered at death). Of the 458 PHMRC ‘Maternal’ deaths, 90 (19.7%) were not reported in the VA interview to have been pregnant at or within six weeks of death. Hospital diagnoses reported 1,603 neonatal deaths, 107 (6.7%) of which were said to be stillbirths in the VA interviews. The remaining 1,496 neonatal deaths are presented by cause and time in Figure 1. In 147/1,001 (14.7%) deaths which were recorded as stillbirths in hospitals, the circumstances were recorded as neonatal deaths in VA interviews, mostly during the first day of life. However, the symptoms reported in many of these discrepant cases were contradictory, leading to many being categorized as indeterminate in cause by InterVA-4.

A high proportion of cases (7,898/11,984; 65.9%) were reported as having respiratory symptoms in the VA interviews, although only 2,134/11,984 (17.8%) had respiratory-related PHMRC causes of death assigned. Table 3 shows the proportions of cases reporting various respiratory symptoms for respiratory-related PHMRC causes (comprising asthma, birth asphyxia, COPD, lung cancer, pneumonia and TB) and for other PHMRC causes of death.

CSMFs are shown in Table 4 by age group and for all ages combined for 43 cause-of-death categories as determined by InterVA-4 and PHMRC. Differences in CSMF and 95% confidence intervals (CIs) of those differences are shown for the all-age CSMFs. For 22/43 causes (51.2%), CSMF differences were less than 1%. Figure 2 illustrates the correlation between PHMRC and InterVA-4 CSMFs in the 11,984 cases and 43 cause categories analyzed. The concordance correlation coefficient was 0.612 (95% CI 0.454 to 0.770). Excluding the residual categories, the concordance correlation coefficient increased to 0.665 (95% CI 0.502 to 0.827).
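The derivation of CSMFs from case-level output, and of the differences between two sets of CSMFs, can be sketched as follows. The sketch assumes that each case is represented by a mapping from cause to likelihood that sums to one; the function names `csmf` and `csmf_differences` are hypothetical, and the confidence intervals reported in Table 4 are not reproduced.

```python
from collections import defaultdict
from typing import Dict, List


def csmf(cases: List[Dict[str, float]]) -> Dict[str, float]:
    """Cause-specific mortality fractions from per-case cause likelihoods."""
    if not cases:
        raise ValueError("at least one case is required")
    totals: Dict[str, float] = defaultdict(float)
    for case in cases:
        for cause, likelihood in case.items():
            totals[cause] += likelihood
    return {cause: total / len(cases) for cause, total in totals.items()}


def csmf_differences(model: Dict[str, float], reference: Dict[str, float]) -> Dict[str, float]:
    """Model CSMF minus reference CSMF for every cause present in either set."""
    causes = set(model) | set(reference)
    return {c: model.get(c, 0.0) - reference.get(c, 0.0) for c in causes}


# Toy example with two cases and two causes.
model_csmf = csmf([{"a": 1.0}, {"a": 0.5, "b": 0.5}])
reference_csmf = csmf([{"a": 1.0}, {"b": 1.0}])
differences = csmf_differences(model_csmf, reference_csmf)
```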

Figure 2. Correlation between cause-specific mortality fractions as determined by PHMRC hospital data and the InterVA-4 model for 11,984 verbal autopsy cases in 43 cause categories. Data points are labelled with the WHO 2012 VA cause of death category codes and shown against the line of equivalence. PHMRC, Population Health Metrics Research Consortium.

In an empirical comparison of the DHS 2010 Afghanistan dataset (3,349 deaths) run through InterVA-4 with and without the input indicators not available in the PHMRC dataset, overall 16.2% of causes of death were re-assigned due to the missing indicators. There was a net shift of 2.5% of all deaths into residual categories. Specific causes which increased most appreciably due to the missing indicators included neonatal pneumonia, acute abdomen and asthma, while the greatest decreases due to the missing indicators were seen in birth asphyxia, COPD and diarrheal diseases. However, the top six ranked causes of death were the same in both cases.

Discussion

The PHMRC VA dataset undoubtedly represents an important attempt to collect a set of reference data for VA that have corresponding causes of death established in high-level facilities offering good diagnostic procedures. Obviously, since the commencement of the PHMRC study preceded the WHO 2012 VA standard, the dataset could not correspond directly to that standard. In the analyses presented here, equivalence between the PHMRC and WHO 2012 VA cause of death categories and VA indicators has been established as transparently and completely as possible, given the inherent differences. Empirical findings from the Afghan data comparison suggest that the WHO 2012 indicators which are missing from the PHMRC dataset may have some effect in terms of decreased certainty of cause attribution and redistribution of certain causes, although the overall cause of death profile may not change markedly in consequence. The relatively robust nature of the InterVA model has also been demonstrated in a previous sensitivity analysis [17].

Nevertheless, there are some differences both of principle and detail that cannot be totally reconciled between the PHMRC and WHO 2012 approaches. The PHMRC study set out to rigorously define causes of death, but this involved imposing some hierarchies which may not be universally recognized and are not always consistent with ICD-10 coding. For example, prematurity as cause of death can be applied according to the PHMRC definitions if a baby is born at <33 weeks gestational age and experienced ‘death from another medically documented neonatal condition’ irrespective of that condition [7]. This differs appreciably from ICD-10 coding: ‘The mode of death, e.g. heart failure, asphyxia or anoxia, should not be entered… [as the most important cause] …unless it was the only fetal or infant condition known. This also holds true for prematurity’ [18]. As can be seen from the PHMRC causes of death in Figure 1, prematurity is consequently the dominant component of mortality throughout the neonatal period. InterVA-4, on the other hand, ascribes more of these later neonatal deaths among premature babies to infectious causes, based on their subsequently reported symptoms. Neither of these two approaches is necessarily right or wrong, and both may actually be different views of the same reality; but the differences are very important in statistical descriptions of neonatal mortality and in assessing the comparability of different methods of cause of death ascertainment.

Unfortunately, the PHMRC public-domain dataset does not include any clinical details from the hospital work-ups in addition to the assigned cause of death. It would add considerable value to have basic clinical parameters for these deaths irrespective of cause of death. As has been shown in a multicenter analysis of VA cause of death against HIV serostatus, being infected with HIV has consequences for cause of death far beyond the number of classic AIDS deaths [19], and this may also be true for other factors routinely recorded as clinical parameters, such as malaria parasitemia, hemoglobin levels, and so on. It would also be of interest to have included the PHMRC local physicians’ interpretation of the VAs in order to see how those related to VA data, even though the PHMRC group previously concluded that physicians did not perform particularly well against the hospital causes of death [20].

The very high proportion of VA interviews reporting respiratory symptoms in the PHMRC dataset is also worth noting. Table 3 shows rather non-specific relationships between respiratory symptoms and respiratory causes of death in the PHMRC data, which need further investigation and explanation. One possibility is that many patients in tertiary facilities may receive oxygen therapy in the final stages of an illness leading to death, which may be interpreted as respiratory difficulties by family and friends when later responding to a VA interview. In any case, the high proportion of VAs reporting respiratory symptoms leads InterVA-4 to assign high fractions of respiratory-related causes of death. Again, further clinical details in terms of treatment received in the final illness would add value to the utility of the PHMRC dataset. By extension, this and similar issues also raise questions of the validity of using tertiary facility deaths as the evidence base for VA methods. For example, since all the injury cases in the PHMRC data were hospitalized, clearly none of them could have been instantaneous or near-instantaneous fatalities. On closer examination, many of the VAs for injury cases also reported a range of symptoms not obviously associated with their injuries by the time they died in hospital; but, according to the PHMRC protocols, any ‘third-party written account’ of an injury makes that injury the cause of death, irrespective of further clinical details [7].

There are some significant omissions in the PHMRC indicators compared with the WHO 2012 standard. Many of the detailed items relating to obstetric causes of death were missing from the PHMRC instrument, for example. Other items were recorded more precisely by PHMRC, such as details around loss of consciousness and tobacco consumption. Inevitably the comparability of cause of death between the PHMRC cause categories and the WHO 2012 VA cause categories generated by InterVA-4 is somewhat compromised by the differences in cause definitions and by missing 30% of the WHO 2012 VA indicators. Given these sources of variation, the overall concordance correlation coefficient of 0.61, as shown in Figure 2 for specific cause categories, represents reasonably good agreement. Some particular cause categories, such as pneumonia, some neonatal causes and certain residual groupings, accounted for much of the non-concordance.

Unsurprisingly, there were cases within the PHMRC database which showed blatant inconsistencies between hospital cause of death and responses to some VA questions. The most obvious were the 147 cases recorded as stillbirths in hospital but which were described as neonatal deaths in VA interviews, and the 90 hospital maternal deaths described as neither pregnant nor recently delivered in VA interviews. There is no way of knowing from the dataset whether these reflected data quality issues either in the hospital causes of death or VA material, or whether VA respondents were actually, either knowingly or unwittingly, presenting a different picture. It is, of course, likely that there were similar phenomena relating to other parameters which cannot be so easily checked. However, it raises an important issue if such a dataset is to be used to build an evidence-based model for VA interpretation, because any data-driven model based solely on these data would ‘learn’, for example, that a proportion of stillbirths show signs of life and that a proportion of maternal deaths occur in non-pregnant women. These effects are of particular concern in the case of, for example, the random forest and tariff models which the PHMRC group has built exclusively from this dataset and then evaluated using the same data [2, 3]. Earlier work with artificial neural networks showed that VA models could be built with extremely high internal validity but had very limited external validity [21]. PHMRC’s earlier conclusions that independent methods (physician interpretation [20] and InterVA-3 [22]) performed less well against the PHMRC dataset than their internally-derived methods essentially confirm that the internal and external validities of automated VA methods can be very different. These findings are also reflected in a systematic review of automated VA methods [23].

There may be an argument for an expert panel to censor unreliable or contradictory cases from any VA reference database before using it to build VA models, in order to improve external validity. In addition, including residual cause of death categories in data for building models is unlikely to be very productive, given that residual categories are likely to include multifarious symptomatology, with no clear mapping between symptoms and cause of death categories.
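As a purely illustrative sketch, the two most obvious contradictions described above could be screened for automatically before any expert review. The record structure, field names and cause labels below are hypothetical and do not correspond to PHMRC variable names; a rule-based screen of this kind is a simple stand-in for, not a substitute for, the expert panel suggested here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ReferenceCase:
    hospital_cause: str                    # hospital-assigned cause category
    va_pregnant_or_recent_delivery: bool   # VA: pregnant at or within six weeks of death
    va_reported_neonatal_death: bool       # VA: circumstances described as a live birth that died


def is_inherently_inconsistent(case: ReferenceCase) -> bool:
    """Flag the two hospital/VA contradictions named in the text."""
    if case.hospital_cause == "Maternal" and not case.va_pregnant_or_recent_delivery:
        return True
    if case.hospital_cause == "Stillbirth" and case.va_reported_neonatal_death:
        return True
    return False


def screen_cases(cases: List[ReferenceCase]) -> List[ReferenceCase]:
    """Keep only cases that pass both consistency checks."""
    return [c for c in cases if not is_inherently_inconsistent(c)]


# Toy records (not taken from the PHMRC data).
toy_cases = [
    ReferenceCase("Maternal", True, False),
    ReferenceCase("Maternal", False, False),
    ReferenceCase("Stillbirth", False, True),
    ReferenceCase("Stillbirth", False, False),
]
retained = screen_cases(toy_cases)
```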

Conclusions

The PHMRC VA dataset is a unique resource in terms of offering mappings from causes of death determined in tertiary hospitals to corresponding VA data. The dataset would be all the more valuable if it included clinical diagnostic data, details of hospital treatment and local physicians’ interpretation of the VA material. In addition, a more usable presentation of terms included in the VA narratives might add further insights.

Such a dataset has two potential uses in relation to VA methods in general. Firstly, it is potentially a source of reference VA data which can be used to evaluate various approaches intended for interpreting other VA material, as done here in Figure 2. When used in that way, it should not be applied to data-driven models that have themselves been built from this same reference dataset, in order to avoid confusion between the internal and external validity of models. Secondly, it is potentially a source of evidence between established causes of death and VA data that can be used to build evidence-based models for interpreting other VA data. Used in this context, it would be important to censor obviously misleading cases and probably also to exclude residual cause of death categories before extracting evidence from the dataset into models. In both uses, it is impossible to completely discount the effect of taking only tertiary hospital cases and cause of death assignments, which clearly differ in some important respects from more usual all-population applications of VA. Nevertheless, this effect has to be weighed against the advantages of having hospital causes of death based on sound clinical evidence.

It would still be very interesting to see studies of all-population post-mortem series from settings in low- and middle-income countries in order to build a more definitive evidence base from which to construct and validate VA methods [24].

References

Fottrell E, Byass P: Verbal Autopsy – methods in transition. Epidemiol Rev. 2010, 32: 38-55. 10.1093/epirev/mxq003.

Flaxman AD, Vahdatpoor A, Green S, James SL, Murray CJL, Population Health Metrics Research Consortium (PHMRC): Random forests for verbal autopsy analysis: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. 2011, 9: 29. 10.1186/1478-7954-9-29.

James SL, Flaxman AD, Murray CJL, Population Health Metrics Research Consortium (PHMRC): Performance of the Tariff Method: validation of a simple additive algorithm for analysis of verbal autopsies. Popul Health Metr. 2011, 9: 31. 10.1186/1478-7954-9-31.

Byass P, Fottrell E, Huong DL, Berhane Y, Corrah T, Kahn K, Muhe L, Do DV: Refining a probabilistic model for interpreting verbal autopsy data. Scand J Public Health. 2006, 34: 26-31. 10.1080/14034940510032202.

Tensou B, Araya T, Telake DS, Byass P, Berhane Y, Kebebew T, Sanders EJ, Reniers G: Evaluating the InterVA model for determining AIDS mortality from verbal autopsies in the adult population of Addis Ababa. Trop Med Int Health. 2010, 15: 547-553.

Byass P, Kahn K, Fottrell E, Collinson MA, Tollman SM: Moving from data on deaths to public health policy in Agincourt, South Africa: approaches to analysing and understanding verbal autopsy findings. PLoS Med. 2010, 7: e1000325. 10.1371/journal.pmed.1000325.

Murray CJL, Lopez AD, Black R, Ahuja R, Ali S, Baqui A, Dandona L, Dantzer E, Das V, Dhingra U, Dutta A, Fawzi W, Flaxman AD, Gómez S, Hernández B, Joshi R, Kalter H, Kumar A, Kumar V, Lozano R, Lucero M, Mehta S, Neal B, Ohno S, Prasad R, Praveen D, Premji Z, Ramírez-Villalobos D, Remolador H, Riley I, et al: Population Health Metrics Research Consortium gold standard verbal autopsy validation study: design, implementation, and development of analysis datasets. Popul Health Metr. 2011, 9: 27-10.1186/1478-7954-9-27.

World Health Organization: Verbal Autopsy Standards: the 2012 WHO Verbal Autopsy Instrument. 2012, WHO: Geneva, Available at: http://www.who.int/healthinfo/statistics/WHO_VA_2012_RC1_Instrument.

Leitao J, Chandramohan D, Byass P, Jakob R, Bundhamcharoen K, Choprapawon C, de Savigny D, Fottrell E, França E, Froen F, Gewaifel G, Hodgson A, Hounton S, Kahn K, Krishnan A, Kumar V, Masanja H, Nichols E, Notzon F, Rasooly MH, Sankoh O, Spiegel P, Abouzahr C, Amexo M, Kebede D, Alley WS, Marinho F, Ali M, Loyola E, Chikersal J, et al: Revising the WHO verbal autopsy instrument to facilitate routine cause-of-death monitoring. Global Health Action. 2013, 6: 21518.

Byass P, Chandramohan D, Clark SJ, D'Ambruoso L, Fottrell E, Graham WJ, Herbst AJ, Hodgson A, Hounton S, Kahn K, Krishnan A, Leitao J, Odhiambo F, Sankoh OA, Tollman SM: Strengthening standardised interpretation of verbal autopsy data: the new InterVA-4 tool. Global Health Action. 2012, 5: 19281.

Population Health Metrics Research Consortium: PHMRC Gold Standard Verbal Autopsy Data 2005–2011. [http://ghdx.healthmetricsandevaluation.org/search/site/phmrc]

Population Health Metrics Research Consortium: IHME PHMRC VA Data Codebook. [http://ghdx.healthmetricsandevaluation.org/sites/ghdx/files/record-attached-files/IHME_PHMRC_VA_DATA_CODEBOOK_Y2013M09D11_0.xlsx]

InterVA-4 (version 4.02) verbal autopsy interpretation model. [http://www.interva.net]

InterVA-4 User Guide. [http://www9.umu.se/phmed/epidemi/interva/interva4_130706.zip]

Lin LI-K: A concordance correlation coefficient to evaluate reproducibility. Biometrics. 1989, 45: 255-268. 10.2307/2532051.

Afghan Public Health Institute: Afghanistan Mortality Survey 2010. 2011, Calverton, Maryland, USA, Available at: http://measuredhs.com/pubs/pdf/FR248/FR248.pdf.

Fottrell E, Byass P: Probabilistic methods for verbal autopsy interpretation: InterVA robustness in relation to variations in a priori probabilities. PLoS One. 2011, 6: e27200. 10.1371/journal.pone.0027200.

World Health Organization: International Statistical Classification of Diseases and Related Health Problems, 10th Revision. Volume 2: Instruction Manual. 2011, WHO Geneva, Available at: http://apps.who.int/classifications/icd10/browse/Content/statichtml/ICD10Volume2_en_2010.pdf.

Byass P, Calvert C, Miiro-Nakiyingi J, Lutalo T, Michael D, Crampin A, Gregson S, Takaruza A, Robertson A, Herbst K, Todd J, Zaba B: InterVA-4 as a public health tool for measuring HIV/AIDS mortality: a validation study from five African countries. Global Health Action. 2013, 6: 22448.

Lozano R, Lopez AD, Atkinson C, Naghavi M, Flaxman AD, Murray CJL, Population Health Metrics Research Consortium (PHMRC): Performance of physician-certified verbal autopsies: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. 2011, 9: 32. 10.1186/1478-7954-9-32.

Boulle A, Chandramohan D, Weller P: A case study of using artificial neural networks for classifying cause of death from verbal autopsy. Int J Epidemiol. 2001, 30: 515-520. 10.1093/ije/30.3.515.

Lozano R, Freeman MK, James SL, Campbell B, Lopez AD, Flaxman AD, Murray CJ, Population Health Metrics Consortium (PHMRC): Performance of InterVA for assigning causes of death to verbal autopsies: multisite validation study using clinical diagnostic gold standards. Popul Health Metr. 2011, 9: 50. 10.1186/1478-7954-9-50.

Leitao J, Desai N, Aleksandrowicz L, Byass P, Miasnikof P, Tollman S, Alam D, Lu Y, Rathi SK, Singh A, Suraweera W, Ram F, Jha P: Comparison of physician-certified verbal autopsy with computer-coded verbal autopsy for cause of death assignment in hospitalized patients in low- and middle-income countries: systematic review. BMC Med. 2014, 12: 22.

Fligner CL, Murray J, Roberts DJ: Synergism of verbal autopsy and diagnostic pathology autopsy for improved accuracy of mortality data. Popul Health Metr. 2011, 9: 25. 10.1186/1478-7954-9-25.

Acknowledgments

This work was undertaken within the Umeå Centre for Global Health Research, with support from Forte, the Swedish Research Council for Health, Working Life and Welfare (grant no. 2006–1512). Thanks are due to the Population Health Metrics Research Consortium for making their VA dataset available in the public domain, and to Measure DHS for making available the VA dataset from the Afghanistan 2010 national mortality survey.

Additional information

Competing interests

The author declares that he has no competing interests, and points out that the InterVA models which he has developed are entirely public domain assets.

Electronic supplementary material

12916_2013_911_MOESM1_ESM.pdf

Additional file 1: Correspondence between WHO 2012 verbal autopsy indicators and Population Health Metrics Research Consortium variables.(PDF 274 KB)

About this article

Cite this article

Byass, P. Usefulness of the Population Health Metrics Research Consortium gold standard verbal autopsy data for general verbal autopsy methods. BMC Med 12, 23 (2014). https://doi.org/10.1186/1741-7015-12-23

DOI: https://doi.org/10.1186/1741-7015-12-23