Abstract

This article proposed a novel human identification method based on retinal images. The proposed system composed of two main parts, feature extraction component and decision-making component. In feature extraction component, first blood vessels extracted and then they have been thinned by a morphological algorithm. Then, two feature vectors are constructed for each image, by utilizing angular and radial partitioning. In previous studies, Manhattan distance has been used as similarity measure between images. In this article, a fuzzy system with Manhattan distances of two feature vectors as input and similarity measure as output has been added to decision-making component. Simulations show that this system is about 99.75% accurate which make it superior to a great extent versus previous studies. In addition to high accuracy rate, rotation invariance and low computational overhead are other advantages of the proposed systems that make it ideal for real-time systems.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.1. Introduction

Biometric is composed of two Greek roots, Bios is meaning life and Metron is meaning measure. Biometrics refers to human identification methods which based on physical or behavioral characteristics. Finger prints, palm vein, face, iris, retina, voice, DNA and so on are some examples of these characteristics. In biometric, usually we use body organs that have simpler and healthier usage. Each method has its own advantages and disadvantages and we could combine them with other security methods to resolve their drawbacks. These systems have been designed so that they use people natural characteristics instead of using keys or ciphers, these characteristics never been lost, robbed, or forgotten, they are available anytime and anywhere and coping them or forging them are so difficult [1, 2].

Characteristics which could be used in biometric system must have two important uniqueness and repeatability properties. This means that the characteristic must be so that it could recognize all people from each other and also it must infinitely be measurable for all peoples.

Humans are familiar with biometric for a long time but it become popular in the last two centuries. In 1870, a French researcher first introduced human identification system based on measurement of body skeleton parts. This system was used in United States until 1920. Also, in 1880, fingerprint and face were proposed for human identification. Another usage of biometric goes back to World War II, when Germans record people's fingerprint on their ID. Also retina vessels first have been used in 1980. Iris image is another biometric that has been used so far. Although use of them has been suggested in 1936 but due to technological limitations they have not been used until 1993.

Biometric features are divided into physical, behavioral, and chemical categories based on their essence. Using physical characteristics is one of the oldest identification methods which get more diverse by technological advancements. Fingerprint, face, iris, and retina are examples of the most popular physical biometrics. The most important advantages of this category are their high uniqueness and their stability over time.

Behavioral techniques evaluate doing of some task by the user. Signature modes, walking style, or expression style of some statement are examples of these features. Moreover, typing or writing style or voice could be classified as behavioral characteristics too. Lack of stability over time is a great drawback of these features because people's habits and behaviors are being changed over time and therefore these characteristics will be changed accordingly. For resolving this problem, database of human features must be updated frequently.

Chemical techniques measure chemical properties of the user's body like body smell or blood glucose, these features are not stable in all conditions and situations therefore they are not dependable so much.

Blood vessel's pattern of retina is unique among people and forms a good differentiation between peoples. Owing to this property retina images could be one of the best choices for biometric systems. In this article, a novel human identification system based on retinal images has been proposed. The proposed system has two main phases like other pattern recognition system; these phases are feature extraction and decision-making phases. In feature extraction phase, we extracts feature vectors for all images of our database by utilizing angular and radial partitioning. Then, in decision-making phase, we compute Manhattan distance of all images with each other and make final decision using fuzzy system. It is noted that we have used 1D Fourier transform for rotation invariance.

The rest of the article is organized as follows: in Section 2, we investigate retina and corresponding technologies. In Section 3, we described the proposed algorithms with its details. Simulation results and comparison of them with previous studies have been represented in Section 4 and finally, Section 5 is the conclusion and suggestion for some future studies.

2. Overview of retinal technology

Retina is one of the most dependable biometric features because of its natural characteristics and low possibility of fraud because pattern of human's retinas rarely changes during their life and also it is stable and could not be manipulated. Retina-based identification and recognition systems have uniqueness and stability properties because pattern of retina's vessels is unique and stable. Despite of these appropriate attributes, retina has not been used so much in recent decades because of technological limitations and its expensive corresponding devices [3–6]. Therefore, a few identification studies based on retina images have been performed until now [7–10]. Nowadays, because of various technological advancements and cheapen of retina scanners, these restrictions have been eliminated [6, 11]. EyeDentify Company has marketed the first commercial identification tool (EyeDentification 7.5) in 1976 [6].

Xu et al. [9] used the green grayscale retinal image and obtained vector curve of blood vessel skeleton. The major drawback of this algorithm is its computational cost, since a number of rigid motion parameters should be computed for all possible correspondences between the query and enrolled images in the database [12]. They have applied their algorithm on a database which consists of 200 different images and obtained zero false recognition against 38 false rejections.

Farzin et al. [12] have suggested another method based on wavelet transform. Their proposed system consists of blood vessel segmentation, feature generation, and feature matching parts. They have evaluated their system using 60 images of DRIVE [13] and STARE [14] databases and have reported 99% as the average success rate of their system in identification.

Ortega et al. [10] used a fuzzy circular Hough transform to localize the optical disk (OD) in the retinal image. Then, they defined feature vectors based on the ridge endings and bifurcations from vessels obtained from a crease model of the retinal vessels inside the OD. They have used a similar approach given in [9] for pattern matching. Although their algorithm is more efficient than that of [9], they have evaluated their system using a database which only includes 14 images.

2.1. Anatomy of the retina

The retina covers the inner side at the back of the eye and it is about 0.5 mm thick [8]. Optical nerve or OD with about 2 × 1.5 mm across is laid inside the central part of the retina. Figure 1 shows a side view of the eye [15]. Blood vessels form a connected pattern like a tree with OD as root over the surface of retina. The average thickness of these vessels is about 250 μm [15].

Anatomy of the human eye [16].

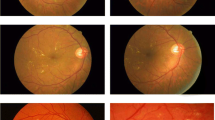

These vessels form a unique pattern for each people which could be used for identification. Figure 2 shows different patterns of the blood vessels for four peoples.

Retina images from four different subjects [12].

Two studies are more complete and more impressive among the various studies that have been done about uniqueness of the people's blood vessels pattern [12]. In 1935, Simon and Goldstein [7] first introduced uniqueness of the pattern of vessels among peoples; they also have suggested using of retina images for identification in their subsequent articles. The next study has been done by Tower in 1950 which showed that pattern of retina's blood vessels is different even for twins [16, 17].

2.2. The strengths and weaknesses of retinal recognition

Pattern of retina's blood vessels rarely changes during people's lives. In addition, retina has not contact with environment unlike the other biometrics such as finger print; therefore, it is protected from external changes. Moreover, people have not access to their retina and hence they could not deceive identification systems. Small size of the feature vector is another advantage of retina to the other biometrics; this property leads to faster identification and authentication than other biometrics [18].

Despite of its advantages, use of retina has some disadvantages that limit application of it [12]. People may suffer from eye diseases like cataract or glaucoma, these diseases complicate identification task to a great extent. Also scanning process needs to a lot of cooperation from the user that could be unfavorable. In addition, retina images could reveal people diseases like blood pressure; this maybe unpleasant for people and it could be harmful for popularity of retina-based identification systems.

3. The proposed system

In thisarticle, we explain a new identification method based on retina images. In this section, we review the proposed algorithm and its details. We examine simulation results in the next section. These results are obtained using DRIVE standard database, as we could see later the proposed system has about 99.75% accuracy.

In addition to its high accuracy, the suggested system has two other advantages as well. First, it is computationally inexpensive so it is very favorable for using in real-time systems. Also the proposed algorithm is resistant to rotation of the images. Rotation invariance is very important for retina-based identification systems because people may turn their head slightly during scanning time. In the proposed algorithm, a suitable resistance to the rotation has been formed using 1D Fourier transform.

As we mentioned earlier, our system composed of two feature extraction and decision-making components. In feature extraction phase, two feature vectors are extracted by angular and radial partitioning. In decision-making phase, first two Manhattan distances are obtained for images and then individual is identified by utilizing the fuzzy system. We will explain angular and radial partitioning along with the proposed systems and its parts in the following sections.

3.1. Angular partitioning

Angular sections defined as φ degree pieces on the Ω image [19]. Number of pieces is k and the φ = 2π/K equation is true (see Figure 3).

According to Figure 3, if any rotation has been made on the image then pixels in section S i will be moved to section S j so that Equation 1 will be true.

Number of edges pixels in each slice considered as a feature of that slice. The scale and translation invariant image feature is then {f(i)} where

where R is the radius of the surrounding circle of the image.

When the considered image rotates to τ = l 2π/K radians (l = 0, 1, 2, ...) then its corresponding feature vector shifts circularly. To demonstrate this subject, let Ω τ as counter counterclockwise rotated image of Ω to τ radians

So, the feature element of a considered section will be obtained from Equation 4.

Also we can express f τ as:

Since fτ(i) = f(i - l) is true, we could conclude that the feature vector has been circularly shifted.

If we apply 1D Fourier transform to the images, Equation 6 will be obtained.

Based on the property |F(u)| = |F τ (u)|, the scale, translation, and rotation invariant image feature are chosen as Ψ = {|F(u)|} for u = 0, 1, 2, ..., K-1. The extracted features are robust against translation because of the aforementioned normalization process. Choosing a medium-size slice makes the extracted features more vigorous against local variations. This is based on the fact that the number of pixels in such slices varies slowly with local translations. The features are rotation invariant because of the Fourier transform applied [19].

3.2. Radial partitioning

In radial partitioning, the image I is divided into several concentric circles. The number of circles may be changed to get to best results. In radial partitioning, features are determined like angular partitioning, it means that we let the number of the edges pixels in each circle as a feature element. According to structure of this type of partitioning and because the centers of circles are one point, local information and feature values are not changed if a rotation happened. Figure 4 shows an example of radial partitioning.

3.3. Feature extraction

Figure 5 shows overview of the proposed system's feature extraction part. This process is done for all images in database and query images.

As one can see from Figure 5, feature extraction has some phases. In preprocessing phase, at first, useless margins are removed and images are limited to the retina's edges. Also in this step, all images are saved in a J × J array. A sample output of this step is depicted in Figure 6b.

Steps of feature vector extraction in the proposed system. (a) Initial image of the retina. (b) Retina's image after preprocessing step. (c) Pattern of blood vessels extracted by the algorithm in [13]. (d) Thinned pattern of vessels using a morphological algorithm. (e) Angular portioning. (f) Radial partitioning.

In next step, we must extract patterns of blood vessels from the retina images. Until now, various algorithms and methods have been suggested for recognition of these patterns; in our system, we have used a method like in [13] (see Figure 6c). Also we have used a morphological algorithm [20] for thinning the extracted patterns. A sample output of the morphological algorithm has shown in Figure 6d. In fact we have used only thicker and more significant vessels for identification and have eliminated thinner ones.

In next step, we generate two separate feature vectors for each image using angular and radial partitioning simultaneously (see Figure 6e, f). The procedure is as follows: first we partition the image based on type of the partitioning and then we let number of sketch pixels within each section as feature value of that segment. After finishing this step, we have two feature vectors correspond to angular and radial partitioning which will be used on decision-making phase.

3.4. Decision-making phase

Pattern matching is a key point in all pattern-recognition algorithms. Searching and finding similar images to a requested image in database is one of the most important tasks in image-based identification systems. Feature vectors of the query image and images in the database are compared to each other and nearest image to the query image returned as a result. In suggested algorithms for pattern matching, various distance criterions have been used as similarity measure. Manhattan distance and Euclidian distance are two of the most important similarity measures used until now [21–23]. Also some systems have used weighted Manhattan and Euclidian distances as their similarity measures [24, 25].

As we stated in feature extraction section, in the proposed system, two feature vectors have been extracted for each image by applying angular and radial partitioning. Applying 1D Fourier transform to the feature vectors could eliminate rotation effects. We used Manhattan distance as similarity measure between images. So, compute Manhattan distance between the query image and all images in database. Since we have two feature vectors, we have two Manhattan distances too. In some cases, angular partitioning may be better and in some other cases radial partitioning works better. This means that if we rely only on angular partitioning, may be we misjudge on some images and vice versa. Angular partitioning only system gives 98% accuracy [26] and radial partitioning only system is 91.5% accurate. In previous study [27], for resolving this problem, we have used sum of the two Manhattan distances in our system. So, our similarity measure is as given in Equation 7.

Details of this similarity measure computation have depicted in Figure 7. Finally, we choose nearest database image to the query image as result. This method has obtained 98.75% accuracy which is superior to both of them.

Decision making component used in [27].

Although using sum of the two distances, we reached to a better accuracy but summation could not be the best solution. In this article, we have used a fuzzy system in decision-making phase. The obtained distances of previous step form input of the fuzzy system and the output is similarity between two images. Membership functions of the input and output variables are showed in Figures 8 and 9, respectively.

Fuzzy rules of our proposed system are as follow.

-

1.

If (AP is Low) and (RP is Low) then (Similarity is High)

-

2.

If (AP is Low) and (RP is Medium) then (Similarity is High)

-

3.

If (AP is Low) and (RP is High) then (Similarity is Medium)

-

4.

If (AP is Medium) and (RP is Low) then (Similarity is High)

-

5.

If (AP is Medium) and (RP is Medium) then (Similarity is Low)

-

6.

If (AP is Medium) and (RP is High) then (Similarity is Low)

-

7.

If (AP is High) and (RP is Low) then (Similarity is Medium)

-

8.

If (AP is High) and (RP is Medium) then (Similarity is Low)

-

9.

If (AP is High) and (RP is High) then (Similarity is Low)

It is noted that we have mapped Manhattan distances to the range of [0 1000]. The value of the output is in the range [0 1], when the value is close to 1 it means that two images are very similar. Finally, we consider closest image to the query image as result. Using this fuzzy system, we reached to 99.75 accuracy that is superior to previous studies.

4. Simulation results

The proposed system is implemented on MATLAB platform and has been tested on DRIVE [13] standard database. The DRIVE database contains retina images of 40 people. In our simulations, we have set image sizes to 512 × 512 (J = 512). We have tested different angles for performing angular partitioning and finally we conclude that 5-degree angle produces better results. Therefore, each image has divided into 72 pieces (360°/5° = 72) and its corresponding feature vector has 72 elements. On the other hand in radial partitioning, we have divided the circle into eight concentric circles, so the achieved feature vector has eight elements. We rotated each image 11 times to generate 440 images. Simulation results have been demonstrated in Table 1.

Also, proposed system experimented against scale and rotation variations. First, images rotated on arbitrary degrees and then these new images are used as system input (results shown in Table 2).

Then, different scales of images used as input and system performance were experimented. Results have been shown in Table 3.

5. Conclusion and future works

We have proposed an identification system based on retina image in thisarticle. The suggested system uses angular and radial partitioning for feature extraction. After feature extraction step, Manhattan distances between the query image and database images are computed and final decision is made based on the proposed fuzzy system. Simulation results show high accuracy of our system in comparison with similar systems. More over rotation invariance and low computational overhead are other advantages of system that make it suitable for use in real-time systems.

As mentioned earlier, the best results obtained when we used 5-degree angle for angular partitioning. We could use other angles as well so we may have different feature vectors with different lengths for each image. Hence, we can generate various feature vectors for images and use them to train a neural network. Then, we can use the trained neural network for decision making. Use of neural network for improving results will be considered in future studies.

References

Jain A, Bolle R, Pankanti S: Biometrics: Personal Identification in a Networked Society. Kluwer Academic Publishers, Dordrecht, The Netherlands; 1999.

Zhang D: Automated Biometrics: Technologies and Systems. Kluwer Academic Publishers, Dordrecht, Netherlands; 2000.

Hill RB: Rotating beam ocular identification apparatus and method. US Patent 4393366 1983.

Hill RB: Fovea-centered eye fundus scanner. US Patent 4620318 1986.

Johnson JC, Hill RB: Eye fundus optical scanner system and method. US Patent 5532771 1990.

Hill RB: Biometrics: Personal Identification in Networked Society. Edited by: Jain A, Bolle R, Pankati S. Springer, Berlin; 1999:126.

Simon C, Goldstein I: A new scientific method of identification. N Y J Med 1935,35(18):901-906.

Tabatabaee H, Milani Fard A, Jafariani H: A novel human identifier system using retina image and fuzzy clustering approach. In Proceedings of the 2nd IEEE International Conference on Information and Communication Technologies (ICTTA '06). Damascus, Syria; 2006:1031-1036.

Xu ZW, Guo XX, Hu XY, Cheng X: The blood vessel recognition of ocular fundus. In Proceedings of the 4th International Conference on Machine Learning and Cybernetics (ICMLC '05). Guangzhou, China; 2005:4493-4498.

Ortega M, Marino C, Penedo MG, Blanco M, Gonzalez F: Biometric authentication using digital retinal images. In Proceedings of the 5th WSEAS International Conference on Applied Computer Science (ACOS '06). Hangzhou, China; 2006:422-427.

Farzin H, Moghaddam HA, Moin MS: A novel retinal identification system. EURASIP J Adv Signal Process 2008.,2008(280635):

Staal J, Abramoff MD, Niemeijer M, Viergever MA, van Ginneken B: Ridge-based vessel segmentation in color images of the retina. IEEE Trans Med Imag 2004,23(4):501-509. 10.1109/TMI.2004.825627

Hoover A, Kouznetsova V, Goldbaum M: Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans Med Imag 2000,19(3):203-210. 10.1109/42.845178

Goh KG, Hsu W, Lee ML: Medical Data Mining and Knowledge Discovery. Springer, Berlin, Germany; 2000:181-210.

Chaudhuri S, Chatterjee S, Katz N, Nelson M, Goldbaum M: Detection of blood vessels in retinal images using two-dimensional matched filters. IEEE Trans Med Imag 1989,8(3):263-269. 10.1109/42.34715

Tower P: The fundus oculi in monozygotic twins: report of six pairs of identical twins. Arch Ophthalmol 1955,54(2):225-239. 10.1001/archopht.1955.00930020231010

Chen WS, Chih KH, Shih SW, Hsieh CM: Personal identification technique based on human Iris recognition with wavelet transform. In Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP '05). Volume 2. Philadelphia, PA, USA; 2005:949-952.

Chalechale A: Content-based retrieval from image databases using sketched queries. PhD thesis, School of Electrical, Computer, and Telecommunication Engineering, University of Wollongong 2005.

Gonzalez RC, Woods RE: Digital Image Processing. Addison-Wesley; 1992.

Pass G, Zabih R: Histogram refinement for content-based image retrieval. Proceedings 3rd IEEE Workshop on Applications of Computer Vision 1996, 96-102.

Del Bimbo A: Visual Information Retrieval. Morgan Kaufmann Publishers; 1999.

Jacobs CE, Finkelstein A, Salesin DH: Fast multiresolution image querying. Proceedings ACM Computer Graphics (IGGRAPH 95). USA 1995, 277-286.

Bober M: MPEG-7 Visual shape description. IEEE Trans Circ Syst Video Technol 2001,11(6):716-719. 10.1109/76.927426

Won CS, Park DK, Park S: Efficient use of MPEG-7 edge histogram descriptor. Etri J 2002,24(1):23-30. 10.4218/etrij.02.0102.0103

Barkhoda W, Tab FA, Amiri MD: Rotation invariant retina identification based on the sketch of vessels using angular partitioning. In Proceedings International Multiconference on Computer Science and Information Technology (IMCSIT'09). Mragowo, Poland; 2009:3-6.

Amiri MD, Tab FA, Barkhoda W: Retina identification based on the pattern of blood vessels using angular and radial partitioning. In Proceedings Advanced Concepts for Intelligent Vision Systems (ACIVS 2009). LNCS 5807, Bordeaux, France; 2009:732-739.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ original submitted files for images

Below are the links to the authors’ original submitted files for images.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Barkhoda, W., Akhlaqian, F., Amiri, M.D. et al. Retina identification based on the pattern of blood vessels using fuzzy logic. EURASIP J. Adv. Signal Process. 2011, 113 (2011). https://doi.org/10.1186/1687-6180-2011-113

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/1687-6180-2011-113