Abstract

In this paper, the problem of adaptive almost surely asymptotically synchronization for stochastic delayed neural networks with Markovian switching is considered. By utilizing a new nonnegative function and the M-matrix approach, we derive a sufficient condition that ensures adaptive almost surely asymptotically synchronization for stochastic delayed neural networks. Appropriate parameters and update laws are found via adaptive feedback control techniques. We also present an illustrative numerical example to demonstrate the effectiveness of the M-matrix-based synchronization condition derived in this paper.

1 Introduction

Stochastic delayed neural networks (SDNNs) with Markovian switching play an important role in science and engineering because of their many practical applications, including image processing, pattern recognition, associative memory, and optimization problems [1, 2]. In the past several decades, the characteristics of SDNNs with Markovian switching, such as various forms of stability [3, 4], have received a lot of attention from scholars in various fields of nonlinear science. Wang et al. [5] considered exponential stability for delayed recurrent neural networks with Markovian jumping parameters. Zhang et al. [6] investigated stochastic stability for Markovian jumping genetic regulatory networks with mixed time delays. Huang et al. [7] investigated robust stability for stochastic delayed additive neural networks with Markovian switching. A number of sufficient conditions have been presented for the global asymptotic stability and exponential stability of SDNNs with Markovian switching [8–11]. As is well known, time delays, as a source of instability and oscillations, always appear in various aspects of neural networks. Recently, the time delays of neural networks have received a lot of attention [12–15]. The linear matrix inequality (LMI, for short) approach is one of the most extensively used techniques in recent publications [16, 17].

In recent years, it has been found that the synchronization of coupled neural networks has potential applications in many fields such as biology and engineering [18–21]. In coupled nonlinear dynamical systems, many neural networks may experience abrupt changes in their structure and parameters caused by phenomena such as component failures or repairs, changing subsystem interconnections, and abrupt environmental disturbances. Synchronization may help to protect interconnected neurons from the influence of random perturbations which affect all neurons in the system. Therefore, from the neurophysiological as well as the theoretical point of view, it is important to investigate the impact of synchronization on the SDNNs. Moreover, in the adaptive synchronization of neural networks, the control law needs to be adapted or updated in real time. Thus, adaptive synchronization has been used in the control of real neural networks, for example in parameter estimation adaptive control and model reference adaptive control. Some stochastic synchronization results have been reported. For example, in [22], an adaptive feedback controller is designed to achieve complete synchronization for unidirectionally coupled delayed neural networks with stochastic perturbation. In [23], via adaptive feedback control techniques with suitable parameter update laws, several sufficient conditions are derived to ensure lag synchronization for unknown delayed neural networks with or without noise perturbation. In [24], a class of chaotic neural networks is discussed, and based on the Lyapunov stability method and the Halanay inequality lemma, a delay-independent sufficient condition for exponential synchronization is derived. A simple adaptive feedback scheme has been used for the synchronization of neural networks with or without time-varying delay in [25]. A general model of an array of N linearly coupled delayed neural networks with Markovian jumping hybrid coupling is introduced in [26], and some sufficient criteria have been derived to ensure synchronization in mean square in an array of jump neural networks with mixed delays and hybrid coupling.

It should be pointed out that, to the best of our knowledge, the adaptive almost surely asymptotically synchronization for the SDNNs with Markovian switching is seldom mentioned although it is of practical importance. Motivated by the above statements, in this paper, we aim to analyze the adaptive almost surely asymptotically synchronization for the SDNNs with Markovian switching. M-matrix-based criteria are developed for determining whether the SDNNs with Markovian switching achieve adaptive almost surely asymptotically synchronization. An adaptive feedback controller is proposed for the SDNNs with Markovian switching. A numerical simulation is given to show the validity of the developed results.

The rest of this paper is organized as follows: in Section 2, the problem is formulated and some preliminaries are given; in Section 3, a sufficient condition to ensure the adaptive almost surely asymptotically synchronization for the SDNNs with Markovian switching is derived; in Section 4, an example of numerical simulation is given to illustrate the validity of the results; Section 5 gives the conclusion of the paper.

2 Problem formulation and preliminaries

Throughout this paper, ℰ stands for the mathematical expectation operator, $|x|$ is used to denote a vector norm defined by $|x|^2 = \sum_{i=1}^{n} x_i^2$, ‘T’ represents the transpose of a matrix or a vector, and $I_n$ is the n-dimensional identity matrix.

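Let $r(t)$, $t \ge 0$, be a right-continuous Markov chain on the probability space $(\Omega, \mathcal{F}, P)$ taking values in a finite state space $S = \{1, 2, \ldots, N\}$ with generator $\Gamma = (\gamma_{ij})_{N \times N}$ given by

$$
P\{r(t+\delta) = j \mid r(t) = i\} =
\begin{cases}
\gamma_{ij}\delta + o(\delta), & \text{if } i \ne j,\\
1 + \gamma_{ii}\delta + o(\delta), & \text{if } i = j,
\end{cases}
$$

where $\delta > 0$ and $\gamma_{ij} \ge 0$ is the transition rate from i to j if $i \ne j$, while $\gamma_{ii} = -\sum_{j \ne i} \gamma_{ij}$.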

We denote .
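As an illustration of how a right-continuous Markov chain is generated from its generator, the following is a minimal sketch, assuming NumPy is available. The function name `simulate_ctmc`, the two-mode generator `GAMMA`, and the 0-based state labels are hypothetical choices made for this sketch and are not taken from the paper. The chain holds each state for an exponentially distributed time whose rate is the negative diagonal entry, then jumps according to the normalized off-diagonal rates of that row.

```python
import numpy as np


def simulate_ctmc(gamma, r0, t_end, rng):
    """Sample one path of a right-continuous Markov chain with generator `gamma`.

    Returns the jump times and the state held from each jump time onward.
    States are labeled 0, ..., N-1 (an assumption of this sketch).
    """
    gamma = np.asarray(gamma, dtype=float)
    # A generator has nonnegative off-diagonal rates and zero row sums.
    off_diag = gamma - np.diag(np.diag(gamma))
    if np.any(off_diag < 0) or not np.allclose(gamma.sum(axis=1), 0.0):
        raise ValueError("gamma is not a valid generator")

    times, states = [0.0], [r0]
    t, i = 0.0, r0
    while True:
        rate = -gamma[i, i]
        if rate <= 0.0:  # absorbing state: no further jumps
            break
        t += rng.exponential(1.0 / rate)  # holding time with mean 1/rate
        if t >= t_end:
            break
        probs = off_diag[i] / rate  # jump distribution out of state i
        i = int(rng.choice(len(probs), p=probs))
        times.append(t)
        states.append(i)
    return np.array(times), np.array(states)


# Hypothetical two-mode generator, chosen only for illustration.
GAMMA = np.array([[-1.2, 1.2],
                  [0.5, -0.5]])
times, states = simulate_ctmc(GAMMA, r0=0, t_end=50.0,
                              rng=np.random.default_rng(0))
```

The path is piecewise constant: the state `states[k]` is held on the interval from `times[k]` up to the next jump time, which matches right-continuity.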

In this paper, we consider the neural network called drive system and represented by the compact form as follows:

where is the time, is the state vector associated with n neurons, denote the activation functions of the neurons, is the transmission delay satisfying that and , where , are constants. As a matter of convenience, for , we denote and , , , , respectively. In model (1), furthermore, , (i.e., is a diagonal matrix) has positive and unknown entries , and are the connection weight and the delayed connection weight matrices, respectively. is the constant external input vector.

For the drive system (1), a response system is constructed as follows:

where is the state vector of the response system (2), is a control input vector with the form of

is an n-dimensional Brownian motion defined on a complete probability space with a natural filtration (i.e., is a σ-algebra) and is independent of the Markovian process , and is the noise intensity matrix and can be regarded as a result of the occurrence of external random fluctuation and other probabilistic causes.

Let . For the purpose of simplicity, we mark and . From the drive system (1) and the response system (2), the error system can be represented as follows:

The initial condition associated with system (4) is given in the following form:

for any , where is the family of all -measurable -value random variables satisfying that , and denotes the family of all continuous -valued functions on with the norm .

To obtain the main result, we need the following assumptions.

Assumption 1 The activation functions of the neurons satisfy the Lipschitz condition. That is, there exists a constant such that

Assumption 2 The noise intensity matrix satisfies the linear growth condition. That is, there exist two positives and such that

for all .

Assumption 3 In the drive system (1)

Remark 1 Under Assumption 1∼Assumption 3, the error system (4) admits an equilibrium point (or trivial solution) , .

The following stability concept and synchronization concept are needed in this paper.

Definition 1 The trivial solution of the error system (4) is said to be almost surely asymptotically stable if

for any .

The response system (2) and the drive system (1) are said to be almost surely asymptotically synchronized if the error system (4) is almost surely asymptotically stable.

The main purpose of the rest of this paper is to establish a criterion for the adaptive almost surely asymptotically synchronization of the drive system (1) and the response system (2) by using adaptive feedback control and M-matrix techniques.

To this end, we introduce some concepts and lemmas which will be frequently used in the proofs of our main results.

Definition 2 [27]

A square matrix $M$ is called a nonsingular M-matrix if M can be expressed in the form $M = sI - G$ with some $G \ge 0$ (i.e., each element of G is nonnegative) and $s > \rho(G)$, where $I$ is the identity matrix and $\rho(G)$ is the spectral radius of G.

Lemma 1 [8]

If $M = (m_{ij})$ is a square matrix with $m_{ij} \le 0$ ($i \ne j$), then the following statements are equivalent:

(1) M is a nonsingular M-matrix.

(2) Every real eigenvalue of M is positive.

(3) M is positive stable. That is, $M^{-1}$ exists and $M^{-1} > 0$ (i.e., $M^{-1} \ge 0$ and at least one element of $M^{-1}$ is positive).
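The M-matrix property is straightforward to check numerically. The following is a minimal sketch, assuming NumPy; the function name `is_nonsingular_m_matrix`, the tolerance, and the two test matrices are hypothetical and serve only as an illustration. It applies the definition directly: after confirming that the off-diagonal entries are nonpositive, it writes the matrix as sI − G with s equal to the largest diagonal entry (so that G is nonnegative) and compares s with the spectral radius of G. For a matrix with nonpositive off-diagonal entries, the gap s − ρ(G) does not depend on which admissible s is used, so one choice suffices.

```python
import numpy as np


def is_nonsingular_m_matrix(M, tol=1e-12):
    """Check whether M = s*I - G with G >= 0 and s > rho(G)."""
    M = np.asarray(M, dtype=float)
    if M.ndim != 2 or M.shape[0] != M.shape[1]:
        return False
    n = M.shape[0]
    off_diag = M - np.diag(np.diag(M))
    if np.any(off_diag > tol):  # off-diagonal entries must be nonpositive
        return False
    # Smallest s for which G = s*I - M is entrywise nonnegative.
    s = float(np.max(np.diag(M)))
    G = s * np.eye(n) - M
    rho = float(np.max(np.abs(np.linalg.eigvals(G))))  # spectral radius of G
    return s > rho + tol


# Hypothetical test matrices, not taken from the paper.
M_good = np.array([[2.0, -1.0], [-1.0, 2.0]])  # eigenvalues 1 and 3
M_bad = np.array([[1.0, -2.0], [-2.0, 1.0]])   # eigenvalues -1 and 3

print(is_nonsingular_m_matrix(M_good))  # True
print(is_nonsingular_m_matrix(M_bad))   # False
print(np.linalg.inv(M_good))            # entrywise nonnegative inverse
```

For `M_good` the inverse is entrywise nonnegative, in line with statement (3) of Lemma 1, while `M_bad` has a negative real eigenvalue and therefore fails statement (2).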

Lemma 2 [5]Let , . Then

for any .

Consider an n-dimensional stochastic delayed differential equation (SDDE, for short) with Markovian switching

on with the initial data given by

If , define an operator ℒ from to R by

where

For the SDDE with Markovian switching, we have the Dynkin formula as follows.

Lemma 3 (Dynkin formula) [8, 28]

Let and , be bounded stopping times such that a.s. (i.e., almost surely). If and are bounded on with probability 1, then

For the SDDE with Markovian switching again, the following hypothesis is imposed on the coefficients f and g.

Assumption 4 Both f and g satisfy the local Lipschitz condition. That is, for each , there is an such that

for all and those with . Moreover,

Now we cite a useful result given by Yuan and Mao [29].

Lemma 4 [29]

Let Assumption 4 hold. Assume that there are functions , and such that

and

Then the solution of Eq. (5) is almost surely asymptotically stable.

3 Main results

In this section, we give a criterion of the adaptive almost surely asymptotically synchronization for the drive system (1) and the response system (2).

Theorem 1 Assume that M is a nonsingular M-matrix, where

Let and (in this case, , i.e., all elements of are positive by Lemma 1). Assume also that

where .

Under Assumptions 1∼3, the noise-perturbed response system (2) can be adaptive almost surely asymptotically synchronized with the delayed neural network (1) if the update law of the feedback control gain of the controller (3) is chosen as

where () are arbitrary constants.

Proof Under Assumptions 1∼3, it can be seen that the error system (4) satisfies Assumption 4.

For each , choose a nonnegative function as follows:

Then it is obvious that condition (8) holds.

Computing along the trajectory of the error system (4), and using (10), one can obtain that

Now, using Assumptions 1∼2 together with Lemma 2 yields

and

Substituting (12)∼(15) into (11) yields

where by .

Let , . Then inequalities (6) and (7) hold by using (9), where in (6). By Lemma 4, the error system (4) is adaptive almost surely asymptotically stable, and hence the noise-perturbed response system (2) can be adaptive almost surely asymptotically synchronized with the drive delayed neural network (1). This completes the proof. □

Remark 2 In Theorem 1, condition (9) for the adaptive almost surely asymptotically synchronization of the SDNN with Markovian switching, obtained by using the M-matrix and Lyapunov functional methods, is generator-dependent and differs substantially from conditions obtained by other methods such as the linear matrix inequality method. The condition is easy to check once the drive system and the response system are given and the positive constant m is suitably chosen. To the best of the authors’ knowledge, this is the first use of this method in the research area of synchronization for neural networks.

Now, we are in a position to consider two special cases of the drive system (1) and the response system (2).

Special case 1 The Markovian jumping parameters are removed from the neural networks (1) and the response system (2). In this case, and the drive system, the response system and the error system can be represented, respectively, as follows:

and

For this case, one can get the following result that is analogous to Theorem 1.

Corollary 1 Let

Assume that

and

Under Assumptions 1∼3, the noise-perturbed response system (18) can be adaptive almost surely asymptotically synchronized with the delayed neural network (17) if the update law of the feedback gain of the controller (3) is chosen as

where () are arbitrary constants.

Proof Choose the following nonnegative function:

The rest of the proof is similar to that of Theorem 1, and hence omitted. □

Special case 2 The noise-perturbation is removed from the response system (2), which yields the noiseless response system

and the error system

respectively.

In this case, one can get the following results.

Corollary 2 Assume that M is a nonsingular M-matrix, where

Let and (in this case, by Lemma 1). Assume also that

where .

Under Assumptions 1∼3, the noiseless response system (22) can be adaptive almost surely asymptotically synchronized with the unknown drive delayed neural network (1) if the update law of the feedback gain of the controller (3) is chosen as

where are arbitrary constants.

Proof For each , choose a nonnegative function as follows:

The rest of the proof is similar to that of Theorem 1, and hence omitted. □

4 Numerical example

In this section, an illustrative example is given to support our main results.

Example 1 Consider a delayed neural network (1), and its response system (2) with Markovian switching and the following network parameters:

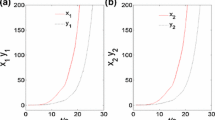

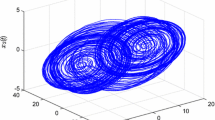

It can be checked that Assumptions 1∼3 and inequality (9) are satisfied and that the matrix M is a nonsingular M-matrix. So, by Theorem 1, the noise-perturbed response system (2) can be adaptive almost surely asymptotically synchronized with the drive delayed neural network (1). The simulation results are given in Figures 1∼2. Figure 1 shows that the state responses of the error system converge to zero. Figure 2 shows the dynamic curves of the feedback gains. From the simulations, it can be seen that the stochastic delayed neural networks with Markovian switching are adaptive almost surely asymptotically synchronized.
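A simulation of this kind can be sketched with the Euler–Maruyama scheme. The block below is a minimal illustration under stated assumptions and is not the authors' code or the setup of Example 1. It assumes a Hopfield-type drift −Cx + A f(x) + B f(x(t − τ)) with f = tanh, a constant delay τ, a controller acting as k_j(t) e_j(t) on each error component, a generic update law dk_j/dt = −α e_j² (without the mode-dependent weights a generator-dependent criterion would use), and a noise intensity that vanishes at zero error. All matrices, rates, initial values, and the function name `simulate` are hypothetical.

```python
import numpy as np


def simulate(t_end=20.0, dt=0.005, tau=1.0, alpha=0.2, seed=0):
    """Euler-Maruyama sketch of drive-response synchronization (assumed model)."""
    rng = np.random.default_rng(seed)
    # Hypothetical parameters: two neurons, two Markov modes.
    C = np.array([np.diag([1.0, 1.0]), np.diag([1.2, 1.1])])
    A = np.array([[[2.0, -0.1], [-5.0, 3.0]], [[1.8, 0.2], [-4.0, 2.5]]])
    B = np.array([[[-1.5, -0.1], [-0.2, -2.5]], [[-1.2, 0.1], [-0.3, -2.0]]])
    GAMMA = np.array([[-1.2, 1.2], [0.5, -0.5]])  # generator of the mode process
    f = np.tanh

    d = int(round(tau / dt))        # delay measured in time steps
    n_steps = int(round(t_end / dt))
    x = np.empty((d + n_steps + 1, 2))
    y = np.empty_like(x)
    x[: d + 1] = [0.4, 0.6]         # constant initial history on [-tau, 0]
    y[: d + 1] = [-1.0, 1.2]
    k = np.zeros((n_steps + 1, 2))  # adaptive feedback gains, k(0) = 0
    mode = 0

    for n in range(n_steps):
        c = d + n                   # index of the current time; n is the delayed one
        e, e_tau = y[c] - x[c], y[n] - x[n]
        drift_x = -C[mode] @ x[c] + A[mode] @ f(x[c]) + B[mode] @ f(x[n])
        drift_y = (-C[mode] @ y[c] + A[mode] @ f(y[c]) + B[mode] @ f(y[n])
                   + k[n] * e)      # controller: gain times error, componentwise
        # Assumed noise intensity: zero at zero error, linear growth in e.
        sigma = np.diag([0.2 * e[0], 0.2 * e_tau[1]])
        dw = rng.normal(0.0, np.sqrt(dt), size=2)
        x[c + 1] = x[c] + drift_x * dt
        y[c + 1] = y[c] + drift_y * dt + sigma @ dw
        k[n + 1] = k[n] - alpha * e**2 * dt  # assumed update law
        # First-order one-step transition probabilities of the mode process.
        p = GAMMA[mode] * dt
        p[mode] += 1.0
        mode = int(rng.choice(2, p=p))

    return y[d:] - x[d:], k


err, k = simulate()
print("final error norm:", np.linalg.norm(err[-1]), "final gains:", k[-1])
```

Plotting the columns of `err` and `k` against time gives curves of the same kind as those described for Figures 1 and 2. The gains are nonincreasing by construction, and the step size should be kept small relative to the magnitude the gains reach, since the explicit scheme loses stability otherwise.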

5 Conclusions

In this paper, we have proposed the concept of adaptive almost surely asymptotically synchronization for the stochastic delayed neural networks with Markovian switching. Making use of the M-matrix and Lyapunov functional method, we have obtained a sufficient condition under which the response stochastic delayed neural network with Markovian switching can be adaptive almost surely asymptotically synchronized with the drive delayed neural network with Markovian switching. The method used to obtain the sufficient condition for the adaptive synchronization of neural networks is different from the linear matrix inequality technique. The condition obtained in this paper depends on the generator of the Markovian jumping model and can be easily checked. A numerical example with simulation results is provided to demonstrate the effectiveness of our theoretical results and analytical tools.

References

Sevgen S, Arik S: Implementation of on-chip training system for cellular neural networks using iterative annealing optimization method. Int. J. Reason.-Based Intell. Syst. 2010, 2: 251-256.

Lütcke H, Helmchen F: Two-photon imaging and analysis of neural network dynamics. Rep. Prog. Phys. 2010, 74: Article ID 086602

Xu Y, Li B, Zhou W, Fang J: Mean square function synchronization of chaotic systems with stochastic effects. Nonlinear Dyn. 2012. 10.1007/s11071-011-0217-x

Zhao L, Hu J, Fang J, Zhang W: Studying on the stability of fractional-order nonlinear system. Nonlinear Dyn. 2012. 10.1007/s11071-012-0469-0

Wang Z, Liu Y, Liu X: Exponential stability of delayed recurrent neural networks with Markovian jumping parameters. Phys. Lett. A 2006, 356: 346-352. 10.1016/j.physleta.2006.03.078

Zhang W, Fang J, Tang Y: Stochastic stability of Markovian jumping genetic regulatory networks with mixed time delays. Appl. Math. Comput. 2011, 17: 7210-7225.

Huang H, Ho D, Qu Y: Robust stability of stochastic delayed additive neural networks with Markovian switching. Neural Netw. 2007, 20: 799-809. 10.1016/j.neunet.2007.07.003

Mao X, Yuan C: Stochastic Differential Equations with Markovian Switching. Imperial College Press, London; 2006.

Wang Z, Ho D, Liu Y, Liu X: Robust control for a class of nonlinear discrete time-delay stochastic systems with missing measurements. Automatica 2010, 45: 1-8.

Wang Z, Liu Y, Liu G, Liu X: A note on control of discrete-time stochastic systems with distributed delays and nonlinear disturbances. Automatica 2010, 46: 543-548. 10.1016/j.automatica.2009.11.020

Zhou W, Lu H, Duan C: Exponential stability of hybrid stochastic neural networks with mixed time delays and nonlinearity. Neurocomputing 2009, 72: 3357-3365. 10.1016/j.neucom.2009.04.012

Tang Y, Fang J, Miao Q: Synchronization of stochastic delayed neural networks with Markovian switching and its application. Int. J. Neural Syst. 2009, 19: 43-56. 10.1142/S0129065709001823

Min X, Ho D, Cao J: Time-delayed feedback control of dynamical small-world networks at Hopf bifurcation. Nonlinear Dyn. 2009, 58: 319-344. 10.1007/s11071-009-9485-0

Xu Y, Zhou W, Fang J: Topology identification of the modified complex dynamical network with non-delayed and delayed coupling. Nonlinear Dyn. 2012, 68: 195-205. 10.1007/s11071-011-0217-x

Wang Z, Liu Y, Liu X: Exponential stabilization of a class of stochastic system with Markovian jump parameters and mode-dependent mixed time-delays. IEEE Trans. Autom. Control 2010, 55: 1656-1662.

Hassouneh M, Abed E: Lyapunov and LMI analysis and feedback control of border collision bifurcations. Nonlinear Dyn. 2007, 50: 373-386. 10.1007/s11071-006-9169-y

Tang Y, Leung S, Wong W, Fang J: Impulsive pinning synchronization of stochastic discrete-time networks. Neurocomputing 2010, 73: 2132-2139. 10.1016/j.neucom.2010.02.010

Zhang W, Tang Y, Fang J, Zhu W: Exponential cluster synchronization of impulsive delayed genetic oscillators with external disturbances. Chaos 2011, 21: 37-43.

Tang Y, Gao H, Zou W, Kurths J: Identifying controlling nodes in neuronal networks in different scales. PLoS ONE 2012, 7: Article ID e41375

Tang Y, Wang Z, Gao H, Swift S, Kurths J: A constrained evolutionary computation method for detecting controlling regions of cortical networks. IEEE/ACM Trans. Comput. Biol. Bioinform. 2012, 9: 1569-1581.

Ma Q, Xu S, Zou Y, Shi G: Synchronization of stochastic chaotic neural networks with reaction-diffusion terms. Nonlinear Dyn. 2012, 67: 2183-2196. 10.1007/s11071-011-0138-8

Li X, Cao J: Adaptive synchronization for delayed neural networks with stochastic perturbation. J. Franklin Inst. 2008, 354: 779-791.

Sun Y, Cao J: Adaptive lag synchronization of unknown chaotic delayed neural networks with noise perturbation. Phys. Lett. A 2007, 364: 277-285. 10.1016/j.physleta.2006.12.019

Chen G, Zhou J, Liu Z: Classification of chaos in 3-D autonomous quadratic systems - I: basic framework and methods. Int. J. Bifurc. Chaos 2006, 16: 2459-2479. 10.1142/S0218127406016203

Cao J, Lu J: Adaptive synchronization of neural networks with or without time-varying delays. Chaos 2006, 16: Article ID 013133

Tang Y, Fang J: Adaptive synchronization in an array of chaotic neural networks with mixed delays and jumping stochastically hybrid coupling. Commun. Nonlinear Sci. Numer. Simul. 2009, 14: 3615-3628. 10.1016/j.cnsns.2009.02.006

Berman A, Plemmons R: Nonnegative Matrices in Mathematical Sciences. Academic Press, New York; 1979.

Øksendal B: Stochastic Differential Equations: An Introduction with Applications. Springer, Berlin; 2005.

Yuan C, Mao X: Robust stability and controllability of stochastic differential delay equations with Markovian switching. Automatica 2004, 40: 343-354. 10.1016/j.automatica.2003.10.012

Acknowledgements

We would like to thank the referees and the editor for their valuable comments and suggestions, which have led to a better presentation of this paper. This work is supported by the National Natural Science Foundation of China (61075060), the Innovation Program of Shanghai Municipal Education Commission (12zz064) and the Fundamental Research Funds for the Central Universities.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Ding, X., Gao, Y., Zhou, W. et al. Adaptive almost surely asymptotically synchronization for stochastic delayed neural networks with Markovian switching. Adv Differ Equ 2013, 211 (2013). https://doi.org/10.1186/1687-1847-2013-211

DOI: https://doi.org/10.1186/1687-1847-2013-211