Abstract

We present an explicit solution to an optimal stopping problem for the stochastic Gilpin-Ayala population model by applying the smooth pasting technique (Dixit in The Art of Smooth Pasting, 1993 and Dixit and Pindyck in Investment under Uncertainty, 1994). The problem is to find a stopping time and a stopping boundary that maximize the expected discounted reward; both are given explicitly in this paper.

1 Introduction

Optimal stopping problems for stochastic systems play an important role in stochastic control theory. Such problems attract particular interest in fields such as finance and biological modelling.

The aim of an optimal stopping problem is to find a random time at which a stochastic process should be stopped so that the expected value of a given reward functional is optimal. Most explicitly solvable stopping problems with exponential discounting are those for one-dimensional diffusion processes. In these problems the optimal stopping time is the first time at which the underlying process exits a region bounded by constant boundaries.

In this paper, we formulate an optimal stopping problem for the stochastic Gilpin-Ayala model [1–4], whose solution is a diffusion process, and obtain explicit expressions for the value function and the stopping boundary. To the best of our knowledge, there have been few attempts to study optimal harvesting problems by means of optimal stopping. Many scholars have studied stochastic logistic models, such as [5, 6], but there are only a few results for the corresponding stochastic Gilpin-Ayala model, which is our motivation.

The Gilpin-Ayala population model is one of the most important classic mathematical bio-economic models because of its theoretical and practical significance. In 1973, Gilpin and Ayala [1] proposed the following model:

where denotes the density of resource population at time t, is called the intrinsic growth rate and , K is the environmental carrying capacity. It is obvious that (1.1) becomes the classic logistic population model when .

Recently, Eq. (1.1) has been extensively studied and many important results have been obtained; see, e.g., [8–11].

However, in the real world, population systems are affected by random disturbances such as environmental effects and financial events. To describe this more realistically, many researchers have introduced white noise into population systems [2, 3, 12–14]. In this paper, we study the optimal stopping problem for the stochastic Gilpin-Ayala population model

where the constants r, b are mentioned in (1.1) and is one-dimensional Brownian motion [15].
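The displayed stochastic equation is not reproduced in this text, so the following is only a minimal simulation sketch under an assumed form of the model, dX = X (r - b X^θ) dt + σ X dB. The symbols `theta` and `sigma`, the parameter values, and the function name `simulate_gilpin_ayala` are illustrative assumptions, not taken from the paper. The Euler-Maruyama step is applied to log X so that the simulated path stays positive.

```python
import math
import random


def simulate_gilpin_ayala(x0, r, b, theta, sigma, dt, n_steps, seed=None):
    """Simulate one path of the ASSUMED SDE
        dX = X (r - b X**theta) dt + sigma X dB
    with an Euler-Maruyama step applied to log X, which keeps X positive."""
    rng = random.Random(seed)
    log_x = math.log(x0)
    path = [x0]
    for _ in range(n_steps):
        x = math.exp(log_x)
        # Ito's formula for log X: drift is r - b X**theta - sigma**2 / 2.
        drift = r - b * x ** theta - 0.5 * sigma ** 2
        d_b = rng.gauss(0.0, math.sqrt(dt))  # Brownian increment over dt
        log_x += drift * dt + sigma * d_b
        path.append(math.exp(log_x))
    return path


# Hypothetical parameter values, chosen only for illustration.
noisy = simulate_gilpin_ayala(0.5, r=1.0, b=0.5, theta=2.0, sigma=0.3,
                              dt=0.01, n_steps=3000, seed=1)
# With sigma = 0 the scheme reduces to the deterministic model, whose
# positive equilibrium is (r / b) ** (1 / theta).
calm = simulate_gilpin_ayala(0.5, r=1.0, b=0.5, theta=2.0, sigma=0.0,
                             dt=0.01, n_steps=3000)
```

Working on the logarithmic scale is a design choice of this sketch: a plain Euler-Maruyama step on X itself can produce negative values for large noise increments.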

The outline of this paper is as follows. Section 2 formulates the general problem of choosing an optimal stopping time for the stochastic Gilpin-Ayala population model. In Section 3, a closed-form candidate for the value function is given; we then verify that the candidate is optimal and express the optimal stopping boundary by means of the smooth pasting technique.

2 Formulation of the problem

Let the probability space satisfy the usual conditions. Suppose the population with size at time t is given by the stochastic Gilpin-Ayala population model

It can be proved that if and , then the stochastic Gilpin-Ayala equation (2.1) has a global, continuous positive solution defined by

for all , is one-dimensional Brownian motion (see [16]), and note that .

The optimal stopping problem here is to find an optimal value function Φ and an optimal stopping time such that

The sup is taken over all stopping times τ of the process and the reward function

where the discounting exponent , is the profit at time τ and a represents a fixed fee and it is natural to assume that . The positive constant w represents the permanent assets. denotes the expectation with respect to the probability law of the process , starting at .

Note that it is trivial that the initial value . So we further assume that and the stopping time τ is bounded since .
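To make the formulation concrete, the sketch below estimates the expected discounted reward of a simple threshold rule (stop the first time the population reaches a fixed boundary) by Monte Carlo. It rests on several assumptions that are not taken from the text: the SDE form dX = X (r - b X^θ) dt + σ X dB, a reward of the form e^{-ρτ}(X_τ - a) that ignores the asset term w, zero reward for paths that do not reach the boundary before `t_max`, and all parameter values.

```python
import math
import random


def threshold_value(x0, boundary, r, b, theta, sigma, rho, a,
                    dt=0.01, t_max=20.0, n_paths=200, seed=0):
    """Monte Carlo estimate of E[exp(-rho * tau) * (X_tau - a)], where tau is
    the first time the simulated path reaches `boundary`.
    ASSUMPTIONS: SDE dX = X (r - b X**theta) dt + sigma X dB, the reward
    form above, and zero reward for paths that never reach the boundary
    before t_max."""
    rng = random.Random(seed)
    n_steps = int(round(t_max / dt))
    total = 0.0
    for _ in range(n_paths):
        log_x = math.log(x0)
        for k in range(n_steps + 1):
            x = math.exp(log_x)
            if x >= boundary:  # stopping rule: first entry into [boundary, inf)
                total += math.exp(-rho * k * dt) * (x - a)
                break
            drift = r - b * x ** theta - 0.5 * sigma ** 2
            log_x += drift * dt + sigma * rng.gauss(0.0, math.sqrt(dt))
    return total / n_paths


# Hypothetical parameter values, chosen only for illustration.
params = dict(r=1.0, b=0.5, theta=2.0, sigma=0.3, rho=0.1, a=0.2)
stop_now = threshold_value(1.0, boundary=1.0, **params)  # tau = 0
wait = threshold_value(1.0, boundary=1.3, **params)      # wait for growth
```

Evaluating `threshold_value` over a range of boundaries gives a rough numerical picture of the quantity that the supremum in the problem maximizes, although the estimate is restricted to threshold rules and carries discretization and sampling error.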

3 Analysis

Let us start with the infinitesimal generator [15] of the Itô diffusion , which is defined by

By the application of Itô formula, we have

which is based on

And

for all , [15]. In order to find the unknown value function Φ from (2.3) and the unknown boundary , we consider

If we try a solution of (3.5) of the form

and substitute (3.6) into (3.5), we obtain

The general solution ϕ of (3.7) is

by setting

and

where , are arbitrary constants. Here is the confluent hypergeometric function, whose integral representation is

for and (see [7, 17, 18]). is the Kummer hypergeometric function and Γ denotes the gamma function.
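As a numerical companion to these special functions, the sketch below evaluates the Kummer function M(a, b, z) from its power series and the Tricomi function U(a, b, z) from the standard integral representation U(a, b, z) = (1/Γ(a)) ∫₀^∞ e^{-zt} t^{a-1} (1+t)^{b-a-1} dt. Which of the two functions the integral representation in the text refers to is an assumption here, and the function names, truncation point, and step count are illustrative choices.

```python
import math


def kummer_m(a, b, z, terms=200):
    """Kummer function M(a, b, z) from its power series
    sum_n (a)_n / (b)_n * z**n / n!."""
    total, term = 0.0, 1.0
    for n in range(terms):
        total += term
        term *= (a + n) / (b + n) * z / (n + 1)
    return total


def tricomi_u(a, b, z, t_max=40.0, n=4000):
    """Tricomi function U(a, b, z) for a >= 1 and z > 0 from
    U = 1/Gamma(a) * int_0^inf exp(-z t) t**(a-1) (1+t)**(b-a-1) dt,
    using composite Simpson's rule on the truncated range [0, t_max]."""
    def f(t):
        return math.exp(-z * t) * t ** (a - 1) * (1 + t) ** (b - a - 1)

    h = t_max / n  # n must be even for Simpson's rule
    s = f(0.0) + f(t_max)
    s += 4 * sum(f((2 * i - 1) * h) for i in range(1, n // 2 + 1))
    s += 2 * sum(f(2 * i * h) for i in range(1, n // 2))
    return s * h / 3 / math.gamma(a)


# Sanity checks against two standard closed forms:
# M(a, a, z) = exp(z) and U(a, a + 1, z) = z**(-a).
m_val = kummer_m(1.5, 1.5, 0.7)
u_val = tricomi_u(2.0, 3.0, 1.5)
```

The quadrature is restricted to a ≥ 1 so that the integrand has no singularity at t = 0; smaller values of a would need a change of variable or a dedicated library routine.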

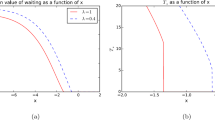

If goes to ∞ as , we must have since is bounded. Then we define the candidate for the optimal value function Φ in (2.3) by

where

and

We observe that the constant

is determined by

and

(2) smooth pasting condition

(3.15)

In fact, is shown to be the unique solution of (3.15) under the following assumptions, by Lemma 3.1.
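The mechanics of the two boundary conditions can be illustrated on a toy problem. Writing the candidate as C ψ(x) below the boundary and g(x) at or above it, continuity gives C ψ(x*) = g(x*) and smooth pasting gives C ψ'(x*) = g'(x*); eliminating C leaves the single equation ψ(x*) g'(x*) = ψ'(x*) g(x*). The sketch below solves that equation by bisection. The functions ψ(x) = x^γ and g(x) = x - a are stand-ins chosen because the answer is known in closed form (x* = γa/(γ - 1)); they are not the paper's hypergeometric solution or reward, and all names are hypothetical.

```python
def smooth_pasting_boundary(psi, dpsi, g, dg, lo, hi, tol=1e-12):
    """Solve psi(x) g'(x) - psi'(x) g(x) = 0 on [lo, hi] by bisection.
    This is what remains after eliminating the constant C from
    C psi(x*) = g(x*) (continuity) and C psi'(x*) = g'(x*) (smooth pasting)."""
    def h(x):
        return psi(x) * dg(x) - dpsi(x) * g(x)

    if h(lo) * h(hi) > 0:
        raise ValueError("no sign change on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if h(lo) * h(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return 0.5 * (lo + hi)


# Stand-in ingredients (NOT the paper's): psi(x) = x**gamma, g(x) = x - a.
gamma, a = 2.0, 1.0
x_star = smooth_pasting_boundary(
    psi=lambda x: x ** gamma, dpsi=lambda x: gamma * x ** (gamma - 1),
    g=lambda x: x - a, dg=lambda x: 1.0, lo=1.0, hi=10.0)
c_star = (x_star - a) / x_star ** gamma  # constant fixed by continuity

# The same point maximises C(x) = g(x) / psi(x) over a grid.
grid = [1.0 + 0.001 * k for k in range(9001)]
x_best = max(grid, key=lambda x: (x - a) / x ** gamma)
```

In this toy case the smooth pasting root and the maximizer of the constant C(x) = g(x)/ψ(x) coincide, which is the same kind of characterization that Lemma 3.1 gives for the boundary in the paper's setting.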

We assume the following.

Assumption 1

Assumption 2

The following lemma provides an optimal stopping boundary.

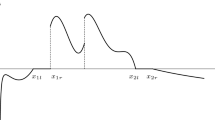

Lemma 3.1 is the maximum value point of given by (3.12) with respect to , for fixed , .

Proof Let for arbitrary , , then we derive

by setting and together with

and

Since increases on the interval with and , and is an increasing function on the interval with and , we can deduce that is a decreasing function on with . In fact, we only need to check that decreases on with and . is trivial due to the fact that as . To prove , we take the change-of-variable formula to (3.11), then it follows

which directly implies

for . Next, with the help of the integral representation (3.21), we observe that

where , , with some normalizing constant A for . Then by applying the Jensen inequality and considering the obvious fact that , we deduce , which gives the monotonicity of on (similar discussion can be found in [21]).

Then we conclude that

(1) There exists a unique solution, which satisfies , of (3.16) on and note that on under Assumption 2.

(2) The maximum value is given by

(3.24)

under (3.9) and Assumption 1 on the interval . The proof is completed.

□

Now, let us give the following lemma for our main Theorem 3.3.

Lemma 3.2 Under Assumptions 1 and 2, the function satisfies the following properties (1)-(3):

(1) given by (2.4) for all , .

(2) For , ,

(3.25)

(3) , , .

Proof It is clear that by construction, for , . We check that

(1) for , i.e., for and

(2) for .

This is easily done by routine calculation under Assumptions 1 and 2.

□

Let us give our main theorem.

Theorem 3.3 Under Assumptions 1 and 2, setting and , the function defined by

is the optimal value function. Moreover, the optimal stopping region F and the optimal stopping time are given by

and

Proof Let τ be any stopping time with for the process and any , then by Dynkin’s formula [15]

Therefore, by (1) and (2) in Lemma 3.2, we get

Taking of both sides of (3.29), we have by the Fatou lemma [22]

Since τ is arbitrary with , we conclude that

We proceed to prove .

(a) If , then . So, we have by (3.31) and is optimal for .

(b) Next, suppose . By Dynkin’s formula [15] and the fact that a.s. for , we have

(3.32)

So, by (1), (3) in Lemma 3.2 and the fact that a.s. for and [18], we get

Combining the two cases (a), (b) and (3.31), we obtain

So, and is optimal, .

We conclude that for all and the stopping time is defined by

□

4 Conclusion and further studies/research

This paper formulates the optimal harvesting problem for the stochastic Gilpin-Ayala population model as an optimal stopping problem, which is our first attempt in this direction. We obtain the explicit optimal value function and optimal stopping time by using the smooth pasting technique, and we prove their optimality. Our work may open a new approach to optimal harvesting problems in the real world. In future work, optimal harvesting problems for the stochastic predator-prey model and related stochastic models will be considered.

References

Gilpin ME, Ayala FJ: Global models of growth and competition. Proc. Natl. Acad. Sci. USA 1973, 70: 3590–3593. 10.1073/pnas.70.12.3590

Lian B, Hu S: Stochastic delay Gilpin-Ayala competition models. Stoch. Dyn. 2006, 6: 561–576. 10.1142/S0219493706001888

Lian B, Hu S: Asymptotic behaviour of the stochastic Gilpin-Ayala competition models. J. Math. Anal. Appl. 2008, 339: 419–428. 10.1016/j.jmaa.2007.06.058

Liu M, Wang K: Stationary distribution, ergodicity and extinction of a stochastic generalized logistic system. Appl. Math. Lett. 2012, 25: 1980–1985. 10.1016/j.aml.2012.03.015

Liu M, Wang K: Persistence and extinction in stochastic non-autonomous logistic systems. J. Math. Anal. Appl. 2011, 375: 443–457. 10.1016/j.jmaa.2010.09.058

Liu M, Wang K: Asymptotic properties and simulations of a stochastic logistic model under regime switching. Math. Comput. Model. 2011, 54: 2139–2154. 10.1016/j.mcm.2011.05.023

Abramowitz M, Stegun IA: Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Wiley, New York; 1972. National Bureau of Standards

Clark CW: Mathematical Bioeconomics: the Optimal Management of Renewable Resources. Wiley, New York; 1976.

Clark CW: Mathematical Bioeconomics: the Optimal Management of Renewable Resources. 2nd edition. Wiley, New York; 1990.

Fan M, Wang K: Optimal harvesting policy for single population with periodic coefficients. Math. Biosci. 1998, 152: 165–177. 10.1016/S0025-5564(98)10024-X

Zhang X, Shuai Z, Wang K: Optimal impulsive harvesting policy for single population. Nonlinear Anal., Real World Appl. 2003, 4: 639–651. 10.1016/S1468-1218(02)00084-6

Alvarez LHR, Shepp LA: Optimal harvesting of stochastically fluctuating populations. J. Math. Biol. 1998, 37: 155–177.

Alvarez LHR: Optimal harvesting under stochastic fluctuations and critical depensation. Math. Biosci. 1998, 152: 63–85. 10.1016/S0025-5564(98)10018-4

Lungu EM, Øksendal B: Optimal harvesting from a population in a stochastic crowded environment. Math. Biosci. 1997, 145: 47–75. 10.1016/S0025-5564(97)00029-1

Øksendal B: Stochastic Differential Equations. 6th edition. Springer, New York; 2005.

Wang K: Stochastic Biomathematics Models. Science Press, Beijing; 2010.

Muller KE: Computing the confluent hypergeometric function, M(a,b,x). Numer. Math. 2001, 90: 179–196. 10.1007/s002110100285

Olver FWJ, Lozier DW, Boisvert RF, Clark CW: NIST Handbook of Mathematical Functions. Cambridge University Press, Cambridge; 2010.

Dixit A: The Art of Smooth Pasting. Harwood Academic, Switzerland; 1993.

Dixit A, Pindyck R: Investment under Uncertainty. Princeton University Press, New Jersey; 1994.

Gapeev PV, Reiß M: An optimal stopping problem in a diffusion-type model with delay. Stat. Probab. Lett. 2006, 76: 601–608. 10.1016/j.spl.2005.09.006

Halmos P: Measure Theory. Springer, Berlin; 1974.

Acknowledgements

We are grateful to Prof. Wang Ke for a number of helpful suggestions for improving the article. The second author was supported by the Natural Science Foundation of the Education Department of Heilongjiang Province (Grant No. 12521116).

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

XHA and YS carried out the proof of the main part of this article; XHA corrected the manuscript. All authors have read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Ai, X., Sun, Y. An optimal stopping problem in the stochastic Gilpin-Ayala population model. Adv Differ Equ 2012, 210 (2012). https://doi.org/10.1186/1687-1847-2012-210

DOI: https://doi.org/10.1186/1687-1847-2012-210