Abstract

Background

The evaluation of interventions and policies designed to promote resilience, and research to understand the determinants and associations, require reliable and valid measures to ensure data quality. This paper systematically reviews the psychometric rigour of resilience measurement scales developed for use in general and clinical populations.

Methods

Eight electronic abstract databases and the internet were searched and reference lists of all identified papers were hand searched. The focus was to identify peer reviewed journal articles where resilience was a key focus and/or was assessed. Two authors independently extracted data and performed a quality assessment of the scale psychometric properties.

Results

Nineteen resilience measures were reviewed; four of these were refinements of the original measure. All the measures had some missing information regarding the psychometric properties. Overall, the Connor-Davidson Resilience Scale, the Resilience Scale for Adults and the Brief Resilience Scale received the best psychometric ratings. The conceptual and theoretical adequacy of a number of the scales was questionable.

Conclusion

We found no current 'gold standard' amongst 15 measures of resilience. A number of the scales are in the early stages of development, and all require further validation work. Given increasing interest in resilience from major international funders, key policy makers and practice, researchers are urged to report relevant validation statistics when using the measures.

Background

International research on resilience has increased substantially over the past two decades [1], following dissatisfaction with 'deficit' models of illness and psychopathology [2]. Resilience is now also receiving increasing interest from policy and practice [3, 4] in relation to its potential influence on health, well-being and quality of life and how people respond to the various challenges of the ageing process. Major international funders, such as the Medical Research Council and the Economic and Social Research Council in the UK [5] have identified resilience as an important factor for lifelong health and well-being.

Resilience could be the key to explaining resistance to risk across the lifespan and how people 'bounce back' and deal with various challenges presented from childhood to older age, such as ill-health. Evaluation of interventions and policies designed to promote resilience requires reliable and valid measures. However, the complexity of defining the construct of resilience has been widely recognised [6–8], which has created considerable challenges when developing an operational definition of resilience.

Different approaches to measuring resilience across studies have led to inconsistencies relating to the nature of potential risk factors and protective processes, and in estimates of prevalence [1, 6]. Vanderbilt-Adriance and Shaw's review [9] notes that the proportions found to be resilient varied from 25% to 84%. This creates difficulties in comparing prevalence across studies, even if study populations experience similar adversities. This diversity also raises questions about the extent to which resilience researchers are measuring resilience, or an entirely different experience.

One of the main tasks of the Resilience and Healthy Ageing Network, funded by the UK Cross-Council programme for Life Long Health and Wellbeing (of which the authors are members), was to contribute to the debate regarding definition and measurement. As part of the work programme, the Network examined how resilience could best be defined and measured in order to better inform research, policy and practice. An extensive review of the literature and concept analysis of resilience research adopts the following definition. Resilience is the process of negotiating, managing and adapting to significant sources of stress or trauma. Assets and resources within the individual, their life and environment facilitate this capacity for adaptation and 'bouncing back' in the face of adversity. Across the life course, the experience of resilience will vary [10].

This definition, derived from a synthesis of over 270 research articles, provides a useful benchmark for understanding the operationalisation of resilience for measurement. This parallel paper reports a methodological review focussing on the measurement of resilience.

One way of ensuring data quality is to use only resilience measures which have been validated. This requires the measure to undergo a validation procedure, demonstrating that it accurately measures what it aims to measure, regardless of who responds (if for all the population), when they respond, and to whom they respond. The validation procedure should establish the range of and reasons for inaccuracies and potential sources of bias. It should also demonstrate that the measure is well accepted by responders and that items accurately reflect the underlying concepts and theory. Ideally, an independent 'gold standard' should be available when developing the questionnaire [11, 12].

Other research has clearly demonstrated the need for reliable and valid measures. For example, Marshall et al. [13] found that clinical trials evaluating interventions for people with schizophrenia were almost 40% more likely to report that treatment was effective when they used unpublished scales as opposed to validated measures. Thus there is a strong case for the development, evaluation and utilisation of valid measures.

Although a number of scales have been developed for measuring resilience, they are not widely adopted and no one scale is preferable over the others [14]. Consequently, researchers and clinicians have little robust evidence to inform their choice of a resilience measure and may make an arbitrary and inappropriate selection for the population and context. Methodological reviews aim to identify, compare and critically assess the validity and psychometric properties of conceptually similar scales, and make recommendations about the most appropriate use for a specific population, intervention and outcome. Fundamental to the robustness of a methodological review are the quality criteria used to distinguish the measurement properties of a scale to enable a meaningful comparison [15].

An earlier review of instruments measuring resilience compared the psychometric properties and appropriateness of six scales for the study of resilience in adolescents [16]. Although their search strategy was thorough, their quality assessment criteria were found to have weaknesses. The authors reported the psychometric properties of the measures (e.g. reliability, validity, internal consistency). However, they did not use explicit quality assessment criteria to demonstrate what constitutes good measurement properties, which in turn would distinguish what an acceptable internal consistency coefficient might be, or what proportion of the lowest and highest scores might indicate floor or ceiling effects. On that basis, the review fails to identify where any of the scales might lack specific psychometric evidence, as that judgement is left to the reader.

The lack of a robust evaluation framework in the work of Ahern et al. [16] creates difficulties for interpreting overall scores awarded by the authors to each of the measures. Each measure was rated on a scale of one to three according to the psychometric properties presented, with a score of one reflecting a measure that is not acceptable, two indicating that the measure may be acceptable in other populations, but further work is needed with adolescents, and three indicating that the measure is acceptable for the adolescent population on the basis of the psychometric properties. Under these criteria only one measurement scale, the Resilience Scale [17], achieved the maximum score.

Although the Resilience Scale has been applied to younger populations, it was developed using qualitative data from older women. More rigorous approaches to content validity advocate that the target group should be involved with the item selection when measures are being developed [11, 15]. Thus applying a more rigorous criterion for content validity could lead to different conclusions.

In order to address known methodological weaknesses in the current evidence informing practice, this paper reports a methodological systematic review of resilience measurement scales, using published quality assessment criteria to evaluate psychometric properties [15]. The comprehensive set of quality criteria was developed for the purpose of evaluating psychometric properties of health status measures and addresses content validity, internal consistency, criterion validity, construct validity, reproducibility, responsiveness, floor and ceiling effects and interpretability (see Table 1). In addition to strengthening the previous review, it updates it to the present, and by identifying scales that have been applied to all populations (not just adolescents) it contributes an important addition to the current evidence base.

The aims are to:

- Identify resilience measurement scales and their target population

- Assess the psychometric rigour of measures

- Identify research and practice implications

- Ascertain whether a 'gold standard' resilience measure currently exists

Methods

Design

We conducted a quantitative methodological review using systematic principles [18] for searching, screening, appraising quality criteria and data extraction and handling.

Search strategy

The following electronic databases were searched: Social Sciences CSA (ASSIA, Medline, PsycInfo); Web of Science (SSCI, SCI, AHCI); Greenfile; and the Cochrane Database of Systematic Reviews. The search strategy was run in the CSA databases and adapted for the others. The focus was to identify peer reviewed journal articles where resilience was a key focus and/or was assessed. The search strategy was developed so as to encompass other related project research questions in addition to the information required for this paper.

- A. (DE = resilien*) and ((KW = biol*) or (KW = geog*) or (KW = community))

- B. (DE = resilien*) and ((KW = Interven*) or (KW = promot*) or (KW = associat*) or (KW = determin*) or (KW = relat*) or (KW = predict*) or (KW = review) or (definition))

- C. (DE = resilien*) and ((KW = questionnaire) or (KW = assess*) or (KW = scale) or (KW = instrument))

Table 2 defines the evidence of interest for this methodological review.

For this review all the included papers were searched to identify, in the first instance, the original psychometric development studies. The search was then further expanded and the instrument scale names were used to search the databases for further studies which used the respective scales. A general search of the internet using the Google search engine was undertaken to identify any other measures, with single search terms 'resilience scale', 'resilience questionnaire', 'resilience assessment', 'resilience instrument.' Reference lists of all identified papers were hand searched. Authors were contacted for further information regarding papers that the team were unable to obtain.

Inclusion criteria

Peer reviewed journal articles where resilience measurement scales were used; the population of interest is human (not animal research); publications covering the last twenty years (1989 to September 2009). This time-frame was chosen so as to capture research to answer other Resilience and Healthy Ageing project questions, which required the identification of some of the earlier definitive studies of resilience, to address any changes in meaning over time and to be able to provide an accurate count of resilience research as applied to the different populations across the life course. All population age groups were considered for inclusion (children, adolescents/youth, working age adults, older adults).

Exclusion criteria

Papers were excluded if only the title was available, or the project team were unable to get the full article due to the limited time frame for the review.

Studies that claimed to measure resilience, but did not use a resilience scale were excluded from this paper. Papers not published in English were excluded from review if no translation was readily available.

Data extraction and quality assessment

All identified abstracts were downloaded into RefWorks and duplicates removed. Abstracts were screened according to the inclusion criteria by one person and checked by a second. On completion, full articles that met the inclusion criteria were retrieved and reviewed by one person and checked by a second, again applying the inclusion criteria. The psychometric properties were evaluated using the quality assessment framework, including content validity, internal consistency, criterion validity, construct validity, reproducibility, responsiveness, floor and ceiling effects and interpretability (see Table 1). A positive rating (+) was given when the study was adequately designed, executed and analysed, had appropriate sample sizes and results. An intermediate rating (?) was given when there was an inadequate description of the design, inadequate methods or analyses, the sample size was too small or there were methodological shortfalls. A negative rating (-) was given when unsatisfactory results were found despite adequate design, execution, methods, analysis and sample size. If no information regarding the relevant criteria was provided, the lowest score (0) was awarded.

Study characteristics (the population(s) the instrument was developed for, validated with, and subsequently applied to, the mode of completion) and psychometric data addressing relevant quality criteria were extracted into purposively developed data extraction tables. This was important as a review of quality of life measures indicates that the application to children of adult measures without any modification may not capture the salient aspects of the construct under question [19].

An initial pilot phase was undertaken to assess the rigour of the data extraction and quality assessment framework. Two authors (GW and KB) independently extracted study and psychometric data and scored responses. Discrepancies in scoring were discussed and clarified. JN assessed the utility of the data extraction form to ensure all relevant aspects were covered. At a further meeting of the authors (GW, KB and JN) it was acknowledged that methodologists, researchers and practitioners may require outcomes from the review presented in various accessible ways to best inform their work. For example, methodologists may be most interested in the outcome of the quality assessment framework, whereas researchers and practitioners needing to select the most appropriate measure for clinical use may find helpful an additional overall aggregate score to inform decision making. To accommodate all audiences we have calculated and reported outcomes from the quality assessment framework and an aggregate numerical score (see table 1).

To provide researchers and practitioners with a clear overall score for each measure, a validated scoring system ranging from 0 (low) to 18 (high) was applied. This approach to calculating an overall score has been utilised in other research [20], where a score of 2 points is awarded if there is prima facie evidence for each of the psychometric properties being met; 1 point if the criterion is partially met; and 0 points if there is no evidence and/or the measure failed to meet the respective criteria. In line with the application of these quality criteria in another methodological assessment [21], a score was awarded under the 'responsiveness' criterion to scales that reported change scores over time.
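The aggregate score described above can be illustrated with a minimal sketch. The criterion names follow the nine headings reported in the Results; the mapping from the framework ratings (+, ?, -, 0) to points is an assumption made for illustration, as are the function and variable names, and none of this reproduces the authors' actual scoring sheet.

```python
# Nine quality criteria, following the headings used in the Results.
CRITERIA = [
    "content validity",
    "internal consistency",
    "criterion validity",
    "construct validity",
    "reproducibility: agreement",
    "reproducibility: reliability",
    "responsiveness",
    "floor/ceiling effects",
    "interpretability",
]

# ASSUMPTION: '+' counts as criterion met (2 points), '?' as partially met
# (1 point), '-' and '0' as not met or no information (0 points).
POINTS = {"+": 2, "?": 1, "-": 0, "0": 0}


def aggregate_score(ratings):
    """Sum points over the nine criteria; unrated criteria count as '0'."""
    unknown = set(ratings) - set(CRITERIA)
    if unknown:
        raise ValueError(f"Unknown criteria: {sorted(unknown)}")
    return sum(POINTS[ratings.get(criterion, "0")] for criterion in CRITERIA)


# Hypothetical ratings for an illustrative measure (not taken from Table 4).
example = {
    "content validity": "+",
    "internal consistency": "+",
    "construct validity": "?",
}
print(aggregate_score(example))  # 2 + 2 + 1 = 5 out of a possible 18
```

With nine criteria and a maximum of 2 points each, the ceiling of the sketch matches the stated 0 to 18 range.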

A number of studies that had used some of the measures provided further data additional to the validation papers, mainly on internal consistency and construct validity. In these cases a score was awarded and an overall score calculated for the relevant criteria. Data regarding the extent to which the measure was theoretically grounded was extracted for critical evaluation by discussion.

Results

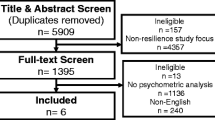

The search yielded a large number of potentially relevant papers. Figure 1 summarises the process of the review. Seventeen resilience measurement scales were initially identified, and a further 38 papers were identified that had used the scales (see additional file 1). Of these, five papers were unobtainable. One of the measures - the Resiliency Attitudes Scale [22] - was identified through its application in one of the included papers. Although a website exists for the measure, there does not appear to be any published validation work of the original scale development; therefore it was excluded from the final review. Another measure excluded at a later stage after discussion between the authors was the California Child Q-Set (CCQ-Set). Designed to measure ego-resiliency and ego-control, the CCQ-Set does not represent an actual measurement scale, but an assessment derived from 100 observer rated personality characteristics. The final number of measures reviewed was fifteen, with an additional four being reported on that were reductions/refinements of the original measure.

Table 3 provides a description of included measures [14, 17, 23–42]. In some instances, further development of measures led to reduced or refined versions of the same scale. In these instances results are presented separately for each version of the scale. The mode of completion for all of the measures was self report. The majority (9) focused on assessing resilience at the level of individual characteristics/resources only.

Overall quality

Table 4 presents the overall quality score of the measures and scores for each quality criterion. With the exception of the Adolescent Resilience Scale and the California Healthy Kids Survey, all of the measures received the highest score for one criterion. Six measures (the RSA, Brief Resilience Scale, Resilience Scale, Psychological Resilience, READ, CD-RISC-10) scored high on two criteria.

Content validity

Four measures (Resiliency Attitudes and Skills Profile; CYRM; Resilience Scale; READ) achieved the maximum score for content validity, as the target population was involved in the item selection. One measure (California Healthy Kids Survey) scored 0 as the paper did not describe any of the relevant criteria for content validity. The remainder generally specified the target population and had clear aims and concepts, but either did not involve the target population in the development or did not undertake pilot work.

Internal consistency

With the exception of Bromley, Johnson and Cohen's examination of Ego Resilience [42], all measures had acceptable Cronbach's alphas reported for the whole scales. That study does not present figures for the whole scale. Alphas were not reported for subscales of the Resilience Scale, the California Healthy Kids Survey, Ego Resiliency and the CD-RISC.

For the Resiliency Attitudes and Skills Profile only one subscale was >0.70. For the RSA, two separate analyses report one of the six subscales to be <0.70. For the 30 item version of the Dispositional Resilience Scale, the challenge subscale alpha = 0.32, and the author recommends that the full scale is used. In the 15 item version, the challenge subscale alpha = 0.70. Bromley et al.'s examination of ego resilience [42] notes that two of the four sub-scales had α < 0.70. One of the five subscales of the READ had α < 0.70. Across four different samples the Brief Resilience Scale had alphas >0.70 and <0.95. The YR:ADS, Psychological Resilience and the Adolescent Resilience Scale report α > 0.70 and <0.95 for all subscales; however, no factor analysis is reported for the Adolescent Resilience Scale.
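For readers wishing to check internal consistency in their own data, the following is a minimal sketch of how Cronbach's alpha can be computed from item responses and compared with the 0.70 to 0.95 band referred to above. The function names and the toy data are hypothetical, and treating the band as inclusive at both ends is an assumption.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    if len(rows) < 2 or len(rows[0]) < 2:
        raise ValueError("Need at least two respondents and two items")
    k = len(rows[0])
    # Sample variance of each item (column) and of the summed scale score.
    item_variances = [variance(column) for column in zip(*rows)]
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("Total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def alpha_in_band(alpha, low=0.70, high=0.95):
    """True if alpha lies within the band used in this review (assumed inclusive)."""
    return low <= alpha <= high


# Hypothetical responses: four respondents, three items.
responses = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 2],
]
alpha = cronbach_alpha(responses)
print(round(alpha, 2), alpha_in_band(alpha))
```

A very high alpha is flagged as well as a low one because values above the upper bound may indicate redundant items rather than better measurement.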

Criterion validity

There is no apparent 'gold standard' available for criterion validity and resilience, and most authors did not provide this information. The Ego Resiliency scale [40] was developed as a self report version of an observer rated version of Ego Resiliency [43] and the latter is stated as the criterion. From two different samples, coefficients of 0.62 and 0.59 are reported. Smith et al. [36] report correlations between the Brief Resilience Scale and the CD-RISC of 0.59 and the ER-89 of 0.51. Bartone [24] reports a correlation of -0.71 between the 30 item Dispositional Resilience Scale and an earlier version of the measure.

Construct validity

In the absence of a 'gold standard', validity can be established by indirect evidence, such as construct validity [21]. Eight measures achieved the maximum score on this criterion (ER-89, CD-RISC (both 25 and 10 item versions), RSA (37 and 33 item versions), Brief Resilience Scale, RS, Psychological Resilience, the READ and Ego-Resiliency). Evidence for construct validity was lacking in the Dispositional Resilience Scale, YR:ADS, California Healthy Kids Survey and the CYRM.

Reproducibility - agreement

Information on agreement was not present in any of the papers.

Reproducibility - reliability (test-retest)

Reliability was investigated for five measures. Three did not specify the type of analysis. Test-retest coefficients are reported for the 15 item Dispositional Resilience Scale, with correlations of 0.78 for commitment, 0.58 for control and 0.81 for challenge. The ER-89 test-retest correlations were 0.67 and 0.51 for two different groups (females and males); however, information was lacking about the procedure. For the 37-item RSA the test-retest correlations were >0.70 for all the subscales except social support (0.69); for the 33 item RSA test-retest correlations were >0.70 for all the subscales. The ICC was 0.87 for the CD-RISC, but the sample size was <50 (n = 24) and the type of ICC is not specified. The ICC for agreement for the Brief Resilience Scale was 0.69 in one sample (n = 48) and 0.62 in another (n = 61).
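The way sample size and coefficient size jointly affect a reliability rating can be sketched as follows. This is an illustration only: the 0.70 and n = 50 cut-offs are taken from the figures quoted above, the rule that combines them is an assumption, and a Pearson correlation is used as a simple stand-in because the type of ICC is not specified in several of the papers. All names are hypothetical.

```python
from math import sqrt


def pearson_r(test, retest):
    """Pearson correlation between scores at two time points."""
    n = len(test)
    if n != len(retest) or n < 2:
        raise ValueError("Need two equal-length samples with at least two scores")
    mean_t = sum(test) / n
    mean_r = sum(retest) / n
    sxy = sum((t - mean_t) * (r - mean_r) for t, r in zip(test, retest))
    sxx = sum((t - mean_t) ** 2 for t in test)
    syy = sum((r - mean_r) ** 2 for r in retest)
    if sxx == 0 or syy == 0:
        raise ValueError("Scores at one time point have zero variance")
    return sxy / sqrt(sxx * syy)


def reliability_rating(coefficient, n, min_coefficient=0.70, min_n=50):
    """ASSUMED rule: '?' if the sample is too small, else '+' or '-' by coefficient."""
    if n < min_n:
        return "?"
    return "+" if coefficient >= min_coefficient else "-"


# Figures quoted in the text above.
print(reliability_rating(0.87, 24))  # CD-RISC: high ICC but n = 24
print(reliability_rating(0.62, 61))  # Brief Resilience Scale, second sample
```

Under this assumed rule a high coefficient from a small sample is treated as doubtful rather than positive, which mirrors the caution expressed about the CD-RISC result.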

Responsiveness

Changes over time were examined in the CD-RISC only. Pre and post treatment CD-RISC scores were compared in PTSD treatment responders and non-responders. The patients were receiving drug treatments as part of PTSD clinical trials. No MIC was specified, although they note that response was defined by a score of Clinical Global Improvement with a score of 1 (very much improved); 2 (much improved); 3 or more (minimal or no improvement). It appears that the CD-RISC scores increased significantly with overall clinical improvement, and that this improvement was in proportion to the degree of global clinical improvement. Some limited results are available for the Resilience Scale in Hunter & Chandler [44], who note that post-test scores were significantly higher than pre-test, however the data presented is incomplete and unclear.

Floor/ceiling effects

The extent of floor or ceiling effects was not reported for any measures.
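Authors who hold raw scale scores could report these effects with very little effort. The sketch below computes the proportion of respondents at the lowest and highest possible scores. The 15% cut-off is an assumption based on a commonly cited rule of thumb for health status questionnaires and is not stated in this paper; the function name and data are hypothetical.

```python
def floor_ceiling(scores, min_score, max_score, threshold=0.15):
    """Proportion of respondents at the scale minimum and maximum.

    ASSUMPTION: an effect is flagged when more than `threshold` of
    respondents obtain the lowest or the highest possible score.
    """
    n = len(scores)
    if n == 0:
        raise ValueError("No scores supplied")
    floor = sum(1 for s in scores if s == min_score) / n
    ceiling = sum(1 for s in scores if s == max_score) / n
    return {
        "floor": floor,
        "ceiling": ceiling,
        "floor_effect": floor > threshold,
        "ceiling_effect": ceiling > threshold,
    }


# Hypothetical total scores on a 0 to 10 scale.
result = floor_ceiling([0, 0, 5, 10, 7, 3, 4, 6, 8, 9], min_score=0, max_score=10)
print(result)
```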

Interpretability

For eight measures (RSA 37 items; CD-RISC 25 items; Brief Resilience Scale; Psychological Resilience; The Resilience Scale, the ER-89; the Adolescent Resilience Scale; the Dispositional Resilience Scale), information on sub-groups that were expected to differ was available and in most cases means and standard deviations were presented, although information on what change in scores would be clinically meaningful (MIC) was not specified. Sub group analysis information for the Resilience Scale was available in Lundman, Strandberg, Eisemann, Gustafason and Brulin [45] and Rew, Taylor-Seehafer, Thomas and Yockey [46].

Discussion

Fifteen measures were identified that propose to measure resilience. All of these measures had some missing information regarding the psychometric properties. Overall, the CD-RISC (25 items), the RSA (37 items) and the Brief Resilience Scale received the highest ratings, although when considering all quality criteria, the quality of these questionnaires might be considered as only moderate. These three aforementioned questionnaires have been developed for use with an adult population.

All but one of the identified resilience scales reflect the availability of assets and resources that facilitate resilience, and as such may be more useful for measuring the process leading to a resilient outcome, or for clinicians and researchers who are interested in ascertaining the presence or absence of these resources. The Brief Resilience Scale states its aim is to assess resilience as an outcome; that is, the ability to 'bounce back'. Even so, items in the Brief Resilience Scale, although corresponding with the ability to recover and cope with difficulties, all reflect a sense of personal agency, e.g. 'I usually come through difficult times with little trouble' or 'I have a hard time making it through stressful events'. Most of the measures focus on resilience at the level of the individual only. Two of these (the ER-89 and Psychological Resilience) presented a good theoretical basis to justify the item selection.

Whilst a strong sense of personal agency is important for negotiating adversity, the availability of resources at the level of family and community is also important. The conceptual definition of resilience in the introduction reflects this multi-level perspective of resilience. The development of a measurement instrument capable of assessing a range of protective mechanisms within multiple domains provides an approach to operationalising resilience as a dynamic process of adaptation to adversity [47]. Ideally, measures of resilience should be able to reflect the complexity of the concept and the temporal dimension. Adapting to change is a dynamic process [48]. However, only five measures (the CYRM, the RSA, the Resilience Scale of the California Healthy Kids Survey, the READ and the YR:ADS) examine resilience across multiple levels, reflecting conceptual adequacy.

Strengths and weaknesses

To our knowledge, no previous study has systematically addressed the psychometric properties of resilience measures using well-defined criteria. The previous review [16] described a limited number of psychometric properties and did not evaluate them against clear criteria. The improved quality assessment applied in this paper has contributed new evidence to the findings of the previous review. Likewise, extending the inclusion criteria to include all populations, not just adolescents, has increased the number of measures identified and presents more options for a researcher seeking a measure of resilience. However, as yet there is no single measure currently available that we would recommend for studies which run across the lifespan.

Another point relates to the extent to which the measures are culturally appropriate. One scale in particular, the CYRM, received extensive development and piloting within eleven countries, although the authors note that "definitions of resilience are ambiguous when viewed across cultures" (p.174). Thus the meaning of resilience may be culturally and contextually dependent [38].

It is important to identify what the benchmark for 'success' might be for different cultures, who might place different values on such criteria. In terms of the community as a facilitator of resilience, most of the measures for children and adolescents identified in this review have an emphasis on school based resources. This may be appropriate for Western cultures, but be far less so in a country where children do not have automatic access to education. Ungar et al. [38] refer to the 'emic' perspective, which "seeks to understand a concept from within the cultural frame from which the concept emerges" (p.168). From this perspective, the concept of resilience may not necessarily be comparable across cultures. Having said that, Ungar et al. [38] found that the key factors underlying resilience were universally accepted across their participating countries, but they were perceived differently by the youths completing the questionnaire. Nevertheless, the setting and circumstances in which a questionnaire is administered play an important role. A good questionnaire seeks to minimise situational effects [12].

As well as reviewing original papers on the psychometric development and validation of resilience measures, this review also sought to identify studies that had used or adapted the respective scales, or contributed to further validation. A further 38 papers were identified, but most studies focussed on the application of scales, and tended to only report information relating to internal consistency. The exceptions here related to four studies that focussed on scale refinement.

The potential limitations of our search strategy should also be considered. As with many reviews, a restriction was placed on the time frame within which to identify potential studies. If readers wish to be certain no other measures have been developed or new evidence on existing measures published, they should run the search strategy from October 2009 onwards. Likewise, we placed a lower limit of 1989 on the searches, for which the rationale is outlined in the inclusion criteria. We aimed to develop a search strategy sensitive enough to identify relevant articles, and specific enough to exclude unwanted studies. Although we searched 8 databases, we fully acknowledge the issue of potentially missing studies; this is one of the challenges of undertaking a review such as this. Whiting et al. [49] recommend undertaking supplementary methods such as reference screening. We hope that conducting a general internet search in addition to database searching helps to alleviate the potential for overlooking relevant studies.

It should also be noted that the rating of the measurement scales was hampered by the lack of psychometric information, so it was impossible to give a score on a number of quality criteria, such as reproducibility and responsiveness. We wish to emphasise that this does not necessarily mean that the scale is poor, but would urge researchers to report as much information as possible so as to inform further reviews.

On the other hand, the quality assessment criteria used for this paper could be considered to be overly constraining. However it is one of the few available for evaluating measurement scales, and clearly identifies the strengths and weaknesses of respective measures.

Recommendations for further research

Our analyses indicate the need for better reporting of scale development and validation, and a requirement for this information to be freely available. Further development and reporting by the authors of the measurement scales could improve the assessments reported here.

Most of the measures advocated application where assessment of change would be required, for example in a clinical setting, or in response to an intervention. An important aspect of three of the criteria (agreement, responsiveness and interpretability) was whether a minimal important change (MIC) was defined. However, none of the measures reported a MIC, and it was impossible to receive the maximum score for these criteria. Only one validation paper (the CD-RISC) examined change scores and reported their statistical significance. However, it has been noted that statistical significance in change scores does not always correspond to the clinical relevance of effect, which often is due to the influence of sample size [50]. Thus developers of measurement scales should indicate how much change is regarded as clinically meaningful.

As some of the scales are relatively new, and are unlikely as yet to have been adopted into practice, there is scope to improve here. Qualitative research with different patient groups/populations would enable an understanding of how any quantitative changes match with qualitative perspectives of significance. There is also a need for researchers who examine change scores to present effect sizes, or as a minimum, ensure that data on means, standard deviations and sample sizes are presented. This will enable others who may be considering using a resilience measure in a clinical trial to perform a sample size calculation. However, what is lacking from most measures is information on the extent to which measures are responsive to change in relation to an intervention. It is difficult to ascertain whether or not an intervention might be theoretically adequate and able to facilitate change, and whether the measure is able to accurately detect this change.
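To show why reported means, standard deviations and sample sizes matter, the sketch below derives a standardised effect size (Cohen's d with a pooled standard deviation) and an approximate sample size per group for a two-arm comparison. It uses the normal approximation for a two-sided test, so the result is slightly lower than a t-based calculation; the default alpha and power, the function names and the example figures are all assumptions for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist


def cohens_d(mean1, sd1, n1, mean2, sd2, n2):
    """Standardised mean difference using the pooled standard deviation."""
    pooled_sd = sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))
    return (mean1 - mean2) / pooled_sd


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate participants per group for a two-sided, two-sample comparison.

    Uses the normal approximation n = 2 * ((z_(1-alpha/2) + z_power) / d) ** 2.
    """
    if d == 0:
        raise ValueError("Effect size must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return ceil(2 * ((z_alpha + z_power) / d) ** 2)


# Hypothetical pre/post group summaries (not taken from any reviewed paper).
d = cohens_d(mean1=10.0, sd1=2.0, n1=30, mean2=9.0, sd2=2.0, n2=30)
print(round(d, 2), n_per_group(d))
```

Without the three summary statistics for each group, neither quantity can be recovered from a reported p-value alone.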

Also important to note is the absence of a conceptually sound and psychometrically robust measure of resilience for children aged under 12. Only one of the measures, the Resilience Scale of the California Healthy Kids Survey, has been applied to primary school children (mean ages 8.9, 10.05, 12.02); however, this measure scored poorly according to our quality assessment. Resilience research with children has tended to operationalise resilience by looking at ratings of adaptive behaviour by other people, such as teachers, parents, etc. A common strategy is to use task measures which reflect developmental stages [6]. For example, Cicchetti and Rogosch [51] examined resilience in abused children and used a composite measure of adaptive functioning to indicate resilience. The composite consists of 7 indicators: different aspects of interpersonal behaviour important for peer relations, indicators of psychopathology and an index of risk for school difficulties.

Implications for practice

Making recommendations about the use of resilience measures is difficult due to the lack of psychometric information available for our review. As with recommendations in other reviews [21], consideration should be given to the aim of the measurement; in other words, 'what do you want to use it for?' Responsiveness analyses are especially important for evaluating change in response to an intervention [21]. Unfortunately only one measure, the CD-RISC, has been used to look at change in response to an intervention. This measure also scored highest on the total quality assessment, but would benefit from further theoretical development.

However, five measures (the RSA, the CD-RISC, the Brief Resilience Scale, the ER-89 and the Dispositional Resilience Scale) provided test-retest information, and the RSA scored the maximum for this criterion. This provides some indication of the measure's stability, and an early indication of its potential to detect clinically important change, as opposed to measurement error. For researchers interested in using another resilience measure to ascertain change, we would recommend that, in the first instance, test-retest reliability for the measure is ascertained prior to inclusion in an evaluation.
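The link between test-retest reliability and the ability to separate real change from measurement error can be sketched as follows. The sketch assumes the widely used formulas SEM = SD × √(1 − reliability) and smallest detectable change = 1.96 × √2 × SEM; the function names and the example values (SD of 10, reliability of 0.84) are hypothetical and are not drawn from any of the reviewed scales.

```python
import math

def standard_error_of_measurement(sd, reliability):
    """SEM from the score SD and a test-retest reliability coefficient."""
    return sd * math.sqrt(1 - reliability)

def smallest_detectable_change(sd, reliability, z=1.96):
    """Change in an individual's score that exceeds measurement error (95% level)."""
    return z * math.sqrt(2) * standard_error_of_measurement(sd, reliability)

# Hypothetical scale: SD = 10 points, test-retest reliability = 0.84
print(round(standard_error_of_measurement(10.0, 0.84), 2))  # 4.0
print(round(smallest_detectable_change(10.0, 0.84), 2))     # 11.09
```

Under these assumptions an individual change of fewer than about 11 points could not be distinguished from measurement error, which is why a MIC smaller than the smallest detectable change would be difficult to detect in practice.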

None of the adolescent resilience measures scored more than 5 on the quality assessment. The higher scoring RS has been applied to populations across the lifespan from adolescence upwards. However as development was undertaken with older women, it is questionable as to how appropriate this measure is for younger people. Given the limitations, in the first instance, consideration should perhaps be given to measures that achieved the highest score on at least two of the criteria. On that basis the READ may be an appropriate choice for adolescents.

A further important point not covered in the quality assessment criteria relates to the applicability of the questionnaire. Questionnaires that require a considerable length of time to complete may result in high rates of non-response and missing data. Initial piloting/consultation with qualitative feedback could help identify the questionnaire design that is most likely to be positively received by the target group. As noted above, from a cultural perspective, care needs to be taken that the chosen measure is meaningful for the population to which it is to be applied. One measure (the CYRM) was developed simultaneously across eleven countries, and may be the best choice for a cross-national survey.

In terms of our findings, for researchers undertaking cross-sectional surveys, especially if undertaking multivariate data analysis, consideration could be given to measures that demonstrate good content and construct validity and good internal consistency. This could provide some assurances that the concept being measured is theoretically robust, that any sub-scales are sufficiently correlated to indicate they are measuring the same construct and that analyses will be able to sufficiently discriminate between and/or soundly predict other variables of interest. The Brief Resilience Scale could be useful for assessing the ability of adults to bounce back from stress, although it does not explain the resources and assets that might be present or missing that could facilitate this outcome. In practice, it is likely that a clinician would need to know an individual's strengths and weaknesses in the availability of assets and resources in order to facilitate interventions to promote development of resilience. Assessing a range of resilience promoting processes would allow key research questions about human adaptation to adversity to be addressed [52]. Identifying protective or vulnerability factors can guide a framework for intervention, for example a preventative focus that aims to develop personal coping skills and resources before specific encounters with real life adversity [47].
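Internal consistency, mentioned above as a consideration for cross-sectional use, is commonly summarised with Cronbach's alpha. The following is a minimal sketch of that calculation using sample variances; the function name and the response data (five respondents answering four items) are hypothetical and serve only to show the arithmetic.

```python
from statistics import variance

def cronbach_alpha(responses):
    """responses: one list per respondent, holding that respondent's item scores."""
    k = len(responses[0])                      # number of items
    items = list(zip(*responses))              # regroup scores by item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - item_var_sum / total_var)

# Hypothetical data: 5 respondents x 4 items scored 1-5
data = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [5, 4, 5, 5],
    [1, 2, 1, 2],
]
print(round(cronbach_alpha(data), 2))
```

A high alpha indicates that the items vary together, but it does not by itself show that the scale is unidimensional or conceptually adequate, which is why content and construct validity are considered alongside it.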

Conclusions

We found no current 'gold standard' amongst 15 measures of resilience. On the whole, the measures developed for adults tended to achieve higher quality assessment scores. Future research needs to focus on reporting further validation work with all the identified measures. A choice of valid resilience measures for use with different populations is urgently needed to underpin commissioning of new research in a public health, human-wellbeing and policy context.

Authors' information

Gill Windle PhD is a Research Fellow in Gerontology with expertise in mental health and resilience in later life, and quantitative research methods.

Kate Bennett PhD is a Senior Lecturer in Psychology with expertise in bereavement and widowhood.

Jane Noyes PhD is Professor of Nursing Research with expertise in health services research and evaluation.

References

Haskett ME, Nears K, Ward CS, McPherson AV: Diversity in adjustment of maltreated children: Factors associated with resilient functioning. Clin Psychol Rev 2006,26(6):796–812. 10.1016/j.cpr.2006.03.005

Fergus S, Zimmerman MA: Adolescent resilience: a framework for understanding healthy development in the face of risk. Annu Rev Public Health 2005, 26: 399–419. 10.1146/annurev.publhealth.26.021304.144357

Friedli L: Mental health, resilience and inequalities. Denmark: World Health Organisation; 2009. [http://www.euro.who.int/document/e92227.pdf]

The Scottish Government: Equally well. Report of the Ministerial Task Force on Health Inequalities. Edinburgh: The Scottish Government; 2008., 2: [http://www.scotland.gov.uk/Publications/2008/06/09160103/0]

Medical Research Council: Lifelong health and well-being. 2010. [http://www.mrc.ac.uk/Ourresearch/ResearchInitiatives/LLHW/index.htm#P61_3876]

Luthar S, Cicchetti D, Becker B: The Construct of Resilience: A Critical Evaluation and Guidelines for Future Work. Child Dev 2000,71(3):543–562. 10.1111/1467-8624.00164

Kaplan HB: Toward an understanding of resilience: A critical review of definitions and models. In Resilience and development: Positive life adaptations. Edited by: Glantz MD, Johnson JR. New York: Plenum; 1999:17–83.

Masten A: Resilience in developing systems: Progress and promise as the fourth wave rises. Dev Psychopathol 2007, 19: 921–930. 10.1017/S0954579407000442

Vanderbilt-Adriance E, Shaw DS: Conceptualizing and re-evaluating resilience across levels of risk, time, and domains of competence. Clinical Child and Family Psychology Review 2008,11(1–2):30–58. 10.1007/s10567-008-0031-2

Windle G, The Resilience Network: What is resilience? A systematic review and concept analysis. Reviews in Clinical Gerontology 2010, 21: 1–18. 10.1017/S0959259810000420

Streiner DL, Norman GR: Health Measurement Scales: A practical guide to their development and use. Oxford: Oxford University Press; 2008.

The IEA European questionnaires Group: Epidemiology Deserves Better Questionnaires. 2005. [http://www.iea-europe.org/download/Questionnaires.pdf]

Marshall M, Lockwood A, Bradley C, Adams C, Joy C, Fenton M: Unpublished rating scales: A major source of bias in randomised controlled trials of treatments for schizophrenia. Br J Psychiatry 2000, 176: 249–252. 10.1192/bjp.176.3.249

Connor KM, Davidson JRT: Development of a new resilience scale: The Connor-Davidson resilience scale (CD-RISC). Depress Anxiety 2003,18(2):76–82. 10.1002/da.10113

Terwee CB, Bot SDM, de Boer MR, van der Windt D, Knol DL, Dekker J, Bouter LM, de Vet H: Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol 2007, 60: 34–42. 10.1016/j.jclinepi.2006.03.012

Ahern NR, Kiehl EM, Sole ML, Byers J: A review of instruments measuring resilience. Issues in Comprehensive Pediatric Nursing 2006,29(2):103–125. 10.1080/01460860600677643

Wagnild GM, Young HM: Development and psychometric evaluation of the resilience scale. Journal of Nursing Measurement 1993,1(2):165–178.

NHS Centre for Reviews and Dissemination: Systematic reviews. CRD's guidance for undertaking reviews in health care. University of York; 2008. [http://www.york.ac.uk/inst/crd/pdf/Systematic_Reviews.pdf]

Eiser C, Morse M: Quality of life measures of chronic diseases in childhood. Health Technology Assessment England: Health Technology Assessment Programme; 2000.,5(4): [http://www.hta.ac.uk/project/1040.asp]

Russell I, Di Blasi Z, Lambert M, Russell D: Systematic reviews and meta-analyses: opportunities and threats. In Evidence based Fertility Treatment. Edited by: Templeton A, O'Brien P. RCOG Press, London, UK; 1998.

Sikkes SAM, de Lange-de Klerk ESM, Pijnenburg YAL, Scheltens P, Uitdehaag BMJ: A systematic review of instrumental activities of daily living scales in dementia: room for improvement. J Neurol Neurosurg Psychiatry 2008,80(7):7–12.

Biscoe, Harris: The Resiliency Attitudes Scale. [http://www.dataguru.org/ras/]

Bartone PT, Ursano RJ, Wright KM, Ingraham LH: The impact of a military air disaster on the health of assistance workers: A prospective study. J Nerv Ment Dis 1989, 177: 317–328. 10.1097/00005053-198906000-00001

Bartone P: Development and validation of a short hardiness measure. Paper presented at the annual convention of the American Psychological Society. Washington DC 1991.

Bartone PT: A short hardiness scale. Paper presented at the annual convention of the American Psychological Society. New York 1995.

Bartone P: Test-retest reliability of the dispositional resilience scale- 15, a brief hardiness scale. Psychol Rep 2007, 101: 943–944.

Block J, Kremen AM: IQ and ego-resiliency: Conceptual and empirical connections and separateness. J Pers Soc Psychol 1996, 70: 349–361. 10.1037/0022-3514.70.2.349

Campbell-Sills L, Stein MB: Psychometric analysis and refinement of the Connor-Davidson Resilience Scale (CD-RISC): Validation of a 10 item measure of resilience. J Trauma Stress 2007,20(6):1019–1028. 10.1002/jts.20271

Connor KM, Davidson JRT, Lee LC: Spirituality, resilience, and anger in survivors of violent trauma: A community survey. J Trauma Stress 2003,16(5):487–494. 10.1023/A:1025762512279

Donnon T, Hammond W, Charles G: Youth resiliency: assessing students' capacity for success at school. Teaching and Learning, Winter 2003, 23–28.

Donnon T, Hammond W: A psychometric assessment of the self reported youth resiliency assessing developmental strengths questionnaire. Psychol Rep 2007, 100: 963–978. 10.2466/pr0.100.3.963-978

Friborg O, Hjemdal O, Rosenvinge JH, Martinussen M: A new rating scale for adult resilience: What are the central protective resources behind healthy adjustment? International Journal of Methods in Psychiatric Research 2003, 12: 65–76. 10.1002/mpr.143

Friborg O, Barlaug D, Martinussen M, Rosenvinge JH, Hjemdal O: Resilience in relation to personality and intelligence. International Journal of Methods in Psychiatric Research 2005,14(1):29–42. 10.1002/mpr.15

Hurtes KP, Allen LR: Measuring resiliency in youth: The resiliency attitudes and skills profile. Therapeutic Recreation Journal 2001,35(4):333–347.

Oshio A, Kaneko H, Nagamine S, Nakaya M: Construct validity of the adolescent resilience scale. Psychol Rep 2003, 93: 1217–1222.

Sun J, Stewart D: Development of population-based resilience measures in the primary school setting. Health Education 2007,7(6):575–599. 10.1108/09654280710827957

Smith BW, Dalen J, Wiggins K, Tooley E, Christopher P, Bernard J: The brief resilience scale: assessing the ability to bounce back. International Journal of Behavioural Medicine 2008, 15: 194–200. 10.1080/10705500802222972

Ungar M, Liebenberg L, Boothroyd R, Kwong WM, Lee TY, Leblanc J, Duque L, Maknach A: The study of youth resilience across cultures: Lessons from a pilot study of measurement development. Research in Human Development 2008,5(3):166–180. 10.1080/15427600802274019

Windle G, Markland DA, Woods B: Examination of a theoretical model of psychological resilience in older age. Aging & Mental Health 2008,12(3):285–292.

Klohnen EC: Conceptual analysis and measurement of the construct of ego-resiliency. J Pers Soc Psychol 1996,70(5):1067–1079. 10.1037/0022-3514.70.5.1067

Hjemdal O, Friborg O, Stiles TC, Martinussen M, Rosenvinge J: A new rating scale for adolescent resilience. Grasping the central protective resources behind healthy development. Measurement and Evaluation in Counseling and Development 2006, 39: 84–96.

Bromley E, Johnson JG, Cohen P: Personality strengths in adolescence and decreased risk of developing mental health problems in early adulthood. Compr Psychiatry 2006,47(4):317–326. 10.1016/j.comppsych.2005.11.003

Block JH, Block J: The California Child Q Set. Berkeley: University of California, Institute of Human Development; 1969.

Hunter AJ, Chandler GE: Adolescent resilience. Image: Journal of Nursing Scholarship 1999,31(2):243–247. 10.1111/j.1547-5069.1999.tb00488.x

Lundman B, Strandberg G, Eisemann M, Gustafson Y, Brulin C: Psychometric properties of the Swedish version of the resilience scale. Scandinavian Journal of Caring Sciences 2007,21(2):229–237. 10.1111/j.1471-6712.2007.00461.x

Rew L, Taylor-Seehafer M, Thomas NY, Yockey RD: Correlates of resilience in homeless adolescents. Journal of Nursing Scholarship 2001,33(1):33–40. 10.1111/j.1547-5069.2001.00033.x

Olsson CA, Bond L, Burns JM, Vella-Brodrick DA, Sawyer SM: Adolescent resilience: A concept analysis. Journal of Adolescence 2003,26(1):1–11. 10.1016/S0140-1971(02)00118-5

Donoghue EM, Sturtevant VE: Social science constructs in ecosystem assessments: Revisiting community capacity and community resiliency. Society & Natural Resources 2007,20(10):899–912.

Whiting P, Westwood M, Burke M, Sterne J, Glanville J: Systematic reviews of test accuracy should search a range of databases to identify primary studies. Journal of Clinical Epidemiology 61: 357–364.

de Vet HC, Terwee CB, Ostelo RW, Beckerman H, Knol DL, Bouter LM: Minimal changes in health status questionnaires: distinction between minimally detectable change and minimally important change. Health Qual Life Outcomes 2006, 4: 54. 10.1186/1477-7525-4-54

Cicchetti D, Rogosch FA: The role of self-organization in the promotion of resilience in maltreated children. Dev Psychopathol 1997,9(4):797–815. 10.1017/S0954579497001442

Allen JR: Of resilience, vulnerability, and a woman who never lived. Child and Adolescent Psychiatric Clinics of North America 1998, 7: 53–71.

Booth A: Formulating research questions. In Evidence based practice: A handbook for information professionals. Edited by: Booth A, Brice A. London: Facet; 2003.

Acknowledgements

This paper has been developed as part of the work of the Resilience and Healthy Ageing Network, funded through the UK Lifelong Health and Wellbeing Cross-Council Programme. The LLHW Funding Partners are: Biotechnology and Biological Sciences Research Council, Engineering and Physical Sciences Research Council, Economic and Social Research Council, Medical Research Council, Chief Scientist Office of the Scottish Government Health Directorates, National Institute for Health Research/The Department of Health, The Health and Social Care Research & Development of the Public Health Agency (Northern Ireland), and Wales Office of Research and Development for Health and Social Care, Welsh Assembly Government.

The authors would like to thank the network members for their inspiring discussions on the topic; Jenny Perry, Eryl Roberts and Marta Ceisla (Bangor University) for their assistance with abstract screening and identification of papers; and the reviewers of the original manuscript for their constructive and helpful comments.

Additional information

Competing interests

The lead author is also the developer of one of the scales included in the review (Psychological Resilience). To ensure the fidelity of the review, the measure was reviewed by JN and KB.

Authors' contributions

GW led the work-programme of the Resilience Network and was responsible for the search strategy and conceptualisation of the manuscript. She led the production of the manuscript and reviewed each of the included papers with KB.

KB reviewed the included papers and contributed to the writing of the manuscript.

JN provided methodological oversight and expertise for the review and contributed to the writing of the manuscript.

All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Windle, G., Bennett, K.M. & Noyes, J. A methodological review of resilience measurement scales. Health Qual Life Outcomes 9, 8 (2011). https://doi.org/10.1186/1477-7525-9-8

DOI: https://doi.org/10.1186/1477-7525-9-8