Abstract

Background

Based on sensitivity analysis of the MacDonald-Ross model, it has long been argued that the best way to reduce malaria transmission is to target adult female mosquitoes with insecticides that can reduce the longevity and human-feeding frequency of vectors. However, these analyses have ignored a fundamental biological difference between mosquito adults and the immature stages that precede them: adults are highly mobile flying insects that can readily detect and avoid many intervention measures whereas mosquito eggs, larvae and pupae are confined within relatively small aquatic habitats and cannot readily escape control measures.

Presentation of the hypothesis

We hypothesize that the control of adult but not immature mosquitoes is compromised by their ability to avoid interventions such as excito-repellent insecticides.

Testing the hypothesis

We apply a simple model of intervention avoidance by mosquitoes and demonstrate that this can substantially reduce effective coverage, in terms of the proportion of the vector population that is covered, and overall impact on malaria transmission. We review historical evidence that larval control of African malaria vectors can be effective and conclude that the only limitations to the effective coverage of larval control are practical rather than fundamental.

Implications of the hypothesis

Larval control strategies against the vectors of malaria in sub-Saharan Africa could be highly effective, complementary to adult control interventions, and should be prioritized for further development, evaluation and implementation as an integral part of Rolling Back Malaria.

Background

Domestic insecticide interventions such as pyrethroid-treated bednets can substantially lower morbidity and mortality [1] and remain the most commonly advocated methods for malaria prevention. Bednets have revitalized interest in vector control of malaria in sub-Saharan Africa where high transmission levels result in extremely stable malaria prevalence, incidence and clinical burden [2–4]. Insecticide-treated nets protect their occupants by diverting host-seeking vectors to look for a blood meal elsewhere and by killing those that attempt to feed [5, 6]. Treated nets can therefore also prevent malaria in unprotected individuals by suppressing vector numbers [7–9], survival [7–9], human blood indices [10, 11] and feeding frequency [11] in local populations. However, the results of individual studies often differ and although some trials with African vectors have demonstrated substantial reductions of vector density, survival and sporozoite prevalence [7–9], others have found little or no effects on the vector population as a whole [12–14]. These instances where bednets appear to have little effect upon vector populations have been attributed to various factors, including behavioural adaptation and dispersal between control and treatment villages [13, 15, 16], but here we explore the possibility that the ability of vectors to avoid interventions [17, 18] may also contribute to such apparent shortcomings.

Presentation of the Hypothesis

Suppression of transmission over large areas depends upon population-level exposure of vectors to the intervention and this, in turn, depends upon the level of coverage within the human community. Adult vectors, however, can avoid many commonly used insecticides [17], so effective coverage may not necessarily be equivalent to the absolute coverage of humans but may be considerably less if vectors evade it. By avoiding covered humans, vectors may redistribute their biting activity towards those who are not covered by personal protection measures such as treated bednets. Larval stages of mosquitoes have relatively low mobility compared with flying adults, and it is humans who must bring the control to them rather than vice versa. We therefore hypothesize that the control of adult but not immature aquatic-stage mosquitoes is compromised by the ability of the former to avoid interventions such as excito-repellent insecticides, including bednet impregnation treatments or indoor residual sprays.

Testing the Hypothesis

For the purposes of this analysis, we define effective coverage as the proportion of the vector population that will be exposed to the intervention under given levels of absolute coverage and at a given ability to detect and avoid the intervention. We consider that at any given level of coverage, the vector population equilibrates between covered humans (C) and uncovered humans (U = 1 - C), in accordance with their propensity to avoid (α) the intervention measure, resulting in a steady-state proportion of the vector population that is covered (C*) and uncovered (U* = 1 - C*):

$$\frac{U^*}{C^*} = \alpha \, \frac{U}{C}$$

Substituting U = 1 - C and U* = 1 - C*, and solving for C* in terms of C, yields:

$$C^* = \frac{\dfrac{C}{\alpha (1 - C)}}{1 + \dfrac{C}{\alpha (1 - C)}} = \frac{C}{C + \alpha (1 - C)}$$
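The relationship between absolute and effective coverage can be sketched in a few lines of Python. This is only an illustration of the steady-state expression, rearranged algebraically to C* = C / (C + α(1 - C)); the function name `effective_coverage` and the coverage and α values in the loop are assumptions chosen for demonstration, not values taken from the analysis.

```python
def effective_coverage(coverage: float, alpha: float) -> float:
    """Return C*, the proportion of the vector population that is covered.

    coverage: absolute coverage C, the proportion of humans covered (0 to 1).
    alpha:    propensity of vectors to avoid the intervention
              (alpha = 1 means no avoidance).
    """
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must lie between 0 and 1")
    if alpha <= 0.0:
        raise ValueError("alpha must be positive")
    # U*/C* = alpha * U/C, with U = 1 - C and U* = 1 - C*,
    # rearranges to C* = C / (C + alpha * (1 - C)).
    return coverage / (coverage + alpha * (1.0 - coverage))


if __name__ == "__main__":
    # Illustrative grid only; these alpha and C values are assumptions.
    for alpha in (1, 2, 5, 10):
        row = ", ".join(
            f"C={c:.2f} -> C*={effective_coverage(c, alpha):.3f}"
            for c in (0.25, 0.50, 0.75)
        )
        print(f"alpha={alpha}: {row}")
```

With α = 1 the function returns C unchanged, which matches the case of no avoidance; larger α pulls C* below C, most strongly at low and intermediate coverage.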

Here we model the effects typically expected from insecticide-impregnated bednets in African settings, using the Kilombero valley region of Tanzania as an example with a well-studied vectorial system dominated by An. arabiensis Patton. On the basis of detailed experimental hut trials [5, 6], we consider that bednets could approximately halve the baseline values for both the proportion surviving per feeding cycle (Pf) and the proportion of blood meals taken from humans (Qh) for vectors effectively covered by the intervention. Thus, these key determinants of entomological inoculation rate (EIR) are estimated as weighted averages of those expected for the covered and uncovered populations:

$$P_f^* = P_f (1 - C^*) + 0.5 \, P_f \, C^*$$

$$Q_h^* = Q_h (1 - C^*) + 0.5 \, Q_h \, C^*$$
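The weighted averages above can be expressed as a single helper. The sketch below is a minimal illustration, not the authors' implementation: the function name `adjusted_parameter` is hypothetical, and the baseline is set to 1.0 so that results read as a fraction of the baseline value rather than assuming any particular Pf or Qh.

```python
def adjusted_parameter(baseline: float, c_star: float, reduction: float = 0.5) -> float:
    """Weighted average of a parameter over uncovered and covered vectors.

    baseline:  value without intervention (Pf or Qh).
    c_star:    effective coverage C*, the proportion of vectors covered (0 to 1).
    reduction: multiplier applied to covered vectors; 0.5 reflects the
               approximate halving assumed in the text.
    """
    if not 0.0 <= c_star <= 1.0:
        raise ValueError("c_star must lie between 0 and 1")
    uncovered_part = baseline * (1.0 - c_star)
    covered_part = reduction * baseline * c_star
    return uncovered_part + covered_part


if __name__ == "__main__":
    # Baseline of 1.0 is a placeholder so the output is relative to baseline.
    for c_star in (0.0, 0.1, 0.5, 1.0):
        print(f"C*={c_star:.1f}: fraction of baseline = {adjusted_parameter(1.0, c_star):.3f}")
```

The same helper applies to both survival per feeding cycle and human blood index, since both equations share the same form.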

Based on these estimates we calculate the expected human biting rate, sporozoite prevalence and EIR for Namawala, a well characterized holoendemic village as previously described [4], at varying levels of coverage with bednets and varying levels of avoidance by vectors.

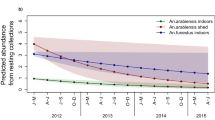

The predictions of our model indicate that avoidance behaviour by vectors could severely undermine the effective coverage achievable by bednet programmes, particularly at low and intermediate levels of coverage (Figure 1). Given the robustness of clinical malaria burden to reductions of transmission intensity [3], such attenuation is of appreciable epidemiological significance. For example, in the absence of any avoidance behaviour (α = 1) bednets at an absolute coverage of 50% were predicted to reduce annual EIR from 246 to 22 infectious bites per year, whereas the same level of coverage with a ten-fold preference of vectors for uncovered versus covered areas (α = 10) would be expected to yield EIR of 161 with only minor reductions of biting rate and sporozoite prevalence (Figure 1). In simple terms, this makes the difference between a programme that can significantly lower risk of clinical malaria in unprotected individuals and one that cannot [3]. This trend is also clear in examining the major underlying determinants of EIR: avoidance can almost completely negate the effects of bednets upon vector survival (Pf*) and human blood index (Qh*) at absolute coverage levels of up to 50%. Although less attenuation is observed at higher levels of absolute coverage, such levels are rarely achieved in real programmes and, even then, avoidance can still considerably undermine the ability of bednets to lower or destabilize transmission in an endemic area (Figure 1).
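As a rough check on the 50% coverage example, the covered fraction and the survival term can be worked through by hand, using the steady-state expression rearranged to C* = C / (C + α(1 - C)). This is only arithmetic on the equations given in this section; it does not reproduce the EIR values, which depend on the full transmission model described in [4].

$$\alpha = 10: \quad C^* = \frac{0.5}{0.5 + 10 \times 0.5} = \frac{1}{11} \approx 0.09, \qquad \frac{P_f^*}{P_f} = 1 - 0.5 \, C^* = \frac{21}{22} \approx 0.95$$

$$\alpha = 1: \quad C^* = 0.5, \qquad \frac{P_f^*}{P_f} = 1 - 0.5 \times 0.5 = 0.75$$

The same ratios apply to the human blood index, since its equation has the same form. With ten-fold avoidance, both parameters therefore stay within about 5% of their baseline values at 50% absolute coverage, compared with a 25% reduction when there is no avoidance.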

Figure 1. The predicted effects of insecticide-treated bednets upon vector bionomics and malaria transmission as a function of the ability of mosquitoes to avoid them. The effects of increasing absolute coverage (C) upon effective coverage (C*), survival per feeding cycle (Pf*), human blood index (Qh*), annual human biting rate (Bh), sporozoite prevalence (S) and annual entomological inoculation rate (EIR) are depicted as a function of increasing ability to avoid the intervention (increasing α).

The impacts we predicted for vector populations with moderate to high levels of avoidance appear more realistic than those without. Indeed our predictions for mosquitoes which do not avoid bednets are more dramatic than even the most successful field trials [7–9] and remarkably similar to those used to justify the Global Malaria Eradication Campaign based on indoor residual spraying [19, 20]. Large differences in the excito-repellency of pyrethroid formulations have been reported [6] and may help explain the contrasting effects of bednet programmes which do exert community-level effects [7–9] and those which do not [12–14], supporting the view that insecticide formulations should minimize excito-repellency to maximize effects at the community level.

The huge number of lives that bednets could save remains difficult to realize in practice because of difficulties in maintaining high absolute coverage [21, 22]. Furthermore, vector dispersal can often spread the effects of bednets over wide areas, sometimes making their impact difficult to measure [15, 16, 23]. On the basis of the modeling analysis presented here, we conclude that the effectiveness of bednets may also be restricted by the limiting effects of vector avoidance upon effective coverage. The effectiveness of malaria control programmes is crucially dependent upon not only the extent of coverage but also the ability to target the most intense foci of transmission [24, 25]. Thus, adulticide-based control may be limited because of constantly shifting distributions of biting vectors [26–28] and their ability to avoid interventions. A number of field studies have shown that vectors prevented from feeding upon individuals protected by treated nets are not diverted to unprotected humans in the same dwelling or those immediately nearby [5, 29, 30]. However, excito-repellent bednet treatments and indoor residual sprays are known to lower human blood indices in vector populations when applied at the community level [7, 10, 11, 18], so mosquitoes that are deterred from covered homes probably do feed elsewhere upon whatever unprotected humans and alternative hosts are available. Thus it seems that vector biting density may be redistributed to unprotected humans and livestock but over longer distances than have been tested thus far. Nevertheless, this concentration of bites upon unprotected people may not manifest itself as an increased biting rate because it could be counterbalanced by the reduction in the total number of bites taken by the shorter-lived vector population at reasonable levels of bednet coverage (Figure 1).
In conclusion, we suggest that vector avoidance of excito-repellent insecticides may considerably limit the impacts of treated bednets and residual sprays on the vector populations and curtail their ability to suppress malaria transmission at the community level.

In this context it may be worthwhile considering alternative methods of malaria control that can complement intra-domiciliary insecticide interventions and augment transmission suppression by integrated programmes. Transmission-blocking vaccines and genetically modified mosquitoes will not be available for several years and their chances of success have been seriously questioned [31–33]. In contrast, the campaigns that eradicated accidentally introduced An. gambiae from the north east coast of Brazil [34] and the Nile Valley of Egypt [35], six decades ago, are the only ones that have ever completely eliminated an African malaria vector species from a large area. In both these cases, 100% effective coverage was evidently achieved, because no specimen of An. gambiae has since been recorded at either site. Both campaigns were executed almost exclusively by ruthless, well-managed larval control [34, 35]. It has been reasoned that these examples are misleading because An. gambiae had colonized areas to which it was not well adapted [17]. Egypt was indeed the northernmost limit of the range of An. gambiae, but the ecological conditions in Brazil seemed ideally suited to it. Descriptions of the flooding valley of the Jaguaribe River are remarkably similar to those of many holoendemic parts of Africa, including the Kilombero valley, upon which we have based our modeling analysis [4]. Furthermore, adult density reached hundreds per house and their exceptional levels of infection could only have been possible with well-adapted, healthy, long-lived mosquitoes [34].

The kind of exhaustive and complete control applied during these intensive eradication campaigns could not be sustained indefinitely, especially in the poorest parts of sub-Saharan Africa. However, a clearly documented example of sustained and successful malaria prevention through larval control in sub-Saharan Africa has recently come to light and, once again, this successful endeavour pre-dates the advent of dichlorodiphenyltrichloroethane (DDT) [36]. An. funestus and An. gambiae were predominantly controlled by environmental management and regular larviciding, based on simple but rational entomological surveys. Malaria mortality, morbidity and incidence were reduced by 70–95% for two decades at quite reasonable expense [36]. There are many other examples of how larval control using standard insecticides and biological control agents [37] have contributed to malaria control in Africa and its associated islands [38–42], including Mauritius where local transmission has been sustainably eliminated [43, 44]. However, these are largely descriptive evaluations of operational programmes and larval control has never been evaluated in Africa through rigorous and specific trials similar to those which bednets have been put through [1].

Implications of the hypothesis

The Global Malaria Eradication Campaign marked a notable departure from larval control and focused on adult control with DDT, based on overly confident interpretation of models that failed to account for the mobility of adult mosquitoes as well as the plasticity and inter-species variability of their behaviour [17]. Larval control does not suffer from such drawbacks and should be integrated with more commonly used approaches such as improved access to screening and treatment, bednets or indoor-spraying [4, 25, 45, 46]. Controlling aquatic stages of malaria vectors depends upon finding where and when they occur and targeting them with appropriate intervention measures on a regular and indefinite basis. Given the extensive, diverse and sometimes obscure nature of breeding sites chosen by Afrotropical vectors, this represents a formidable challenge but one that has proven tractable to organized, well-supported efforts [34–36], [38–44]. Although the historically proven autocratic approaches applied in Brazil, Egypt and Zambia may not be applicable in the increasingly democratic post-colonial Africa of today, relevant administrative capacity and organizational tools, notably mobile phones, geographic information systems and remote sensing data, have become more widely available and could facilitate well-managed abatement programmes in sub-Saharan Africa [25]. Those who eradicated An. gambiae from Brazil and Egypt fully appreciated and exploited its notoriously anthropophilic behaviour. Although the innate preference of this species for human hosts [47] and for larval habitats that are near them [48, 49] makes An. gambiae a devastatingly efficient vector, it also renders its larvae vulnerable to control because they are often relatively easy to locate in association with human settlements and activities [25, 34, 35]. 
Surely with the advent of modern environmentally-friendly larvicides [42, 50–52] and geographic information technology [25], similar success can be achieved by determined efforts on the African continent in the near future? The largest obstacles to the implementation of effective larval control in Africa are practical rather than fundamental because of its dependence on well-organized vertical management and reliable infrastructure. We therefore suggest that rather than constantly looking for methods that do not have to wait upon economic and political development in Africa, those concerned with malaria control need to actively participate in this process so that malaria research and control capacity can be nurtured as an integral part of infrastructure in endemic nations [53].

Perhaps the most depressing indicator of just how much larval control of African malaria vectors has been neglected is that almost all the greatest successes were reported more than half a century ago. Most of the questions that were asked about the larval ecology of these deadly insects over 50 years ago [17] remain unanswered. We propose that larval control strategies against the vectors of malaria in sub-Saharan Africa should be seriously reconsidered and prioritized for development, evaluation and implementation.

Abbreviations

- α: Propensity of adult mosquitoes to avoid the intervention measures
- Bh: Annual vector biting rate experienced by humans
- C: Absolute coverage; the proportion of the human population covered by an intervention programme
- C*: Effective coverage; the proportion of the vector population covered by an intervention programme
- DDT: Dichlorodiphenyltrichloroethane
- EIR: Entomological inoculation rate experienced by humans
- Pf: Baseline survival probability per feeding cycle for vectors without any intervention programme
- Pf*: Survival probability per feeding cycle for vectors under an intervention programme
- Qh: Baseline proportion of vector bloodmeals taken from humans without any intervention programme
- Qh*: Proportion of vector bloodmeals taken from humans under an intervention programme
- S: Sporozoite prevalence in the vector population
- U: Proportion of the human population not covered by an intervention programme
- U*: Proportion of the vector population not covered by an intervention programme

References

Lengeler C: Insecticide treated bednets and curtains for malaria control. Cochrane Library Reports. 1998, 3: 1-70.

Beier JC, Killeen GF, Githure J: Short report: Entomologic inoculation rates and Plasmodium falciparum malaria prevalence in Africa. Am J Trop Med Hyg. 1999, 61: 109-113.

Smith TA, Leuenberger R, Lengeler C: Child mortality and malaria transmission intensity in Africa. Trends Parasitol. 2001, 17: 145-9. 10.1016/S1471-4922(00)01814-6.

Killeen GF, McKenzie FE, Foy BD, Schieffelin C, Billingsley PF, Beier JC: The potential impacts of integrated malaria transmission control on entomologic inoculation rate in highly endemic areas. Am J Trop Med Hyg. 2000, 62: 545-551.

Lines JD, Myamba J, Curtis CF: Experimental hut trials of permethrin-impregnated mosquito nets and eave curtains against malaria vectors in Tanzania. Med Vet Entomol. 1987, 1: 37-51.

Pleass RJ, Armstrong JRM, Curtis CF, Jawara M, Lindsay SW: Comparison of permethrin treatments for bednets in The Gambia. Bull Entomol Res. 1993, 83: 133-140.

Magesa SM, Wilkes TJ, Mnzava AEP, Njunwa KJ, Myamba J, Kivuyo MDP, Hill N, Lines JD, Curtis CF: Trial of pyrethroid impregnated bednets in an area of Tanzania holoendemic for malaria. Part 2 Effects on the malaria vector population. Acta Trop. 1991, 49: 97-108. 10.1016/0001-706X(91)90057-Q.

Robert V, Carnevale P: Influence of deltamethrin treatment of bednets on malaria transmission in the Kou valley, Burkina Faso. Bull Wld Hlth Org. 1991, 69: 735-740.

Carnevale P, Robert V, Boudin C, Halna JM, Pazart L, Gazin P, Richard A, Mouchet J: La lutte contre le paludisme par des moustiquaires impregnées de pyréthroides au Burkina Faso. Bull Soc Path Exot. 1988, 81: 832-846.

Bogh C, Pedersen EM, Mukoko DA, Ouma JH: Permethrin-impregnated bednet effects on resting and feeding behaviour of lymphatic filariasis vector mosquitoes in Kenya. Med Vet Entomol. 1998, 12: 52-59. 10.1046/j.1365-2915.1998.00091.x.

Charlwood JD, Graves PM: The effect of permethrin-impregnated bednets on a population of Anopheles farauti in coastal Papua New Guinea. Med Vet Entomol. 1987, 1: 319-327.

Quinones ML, Lines J, Thomson M, Jawara M, Greenwood BM: Permethrin-treated bednets do not have a "mass-killing effect" on village populations of Anopheles gambiae. Trans R Soc Trop Med Hyg. 1998, 92: 373-378.

Mbogo CNM, Baya NM, Ofulla AVO, Githure JI, Snow RW: The impact of permethrin-impregnated bednets on malaria vectors of the Kenyan coast. Med Vet Entomol. 1996, 10: 251-259.

Lindsay SW, Alonso PL, Armstrong Schellenberg JRM, Hemingway J, Adiamah JH, Shenton FC, Jawa M, Greenwood BM: A malaria control trial using insecticide-treated bednets and targeted chemoprophylaxis in a rural area of The Gambia, West Africa. 7. Impact of permethrin-impregnated bednets on malaria vectors. Trans R Soc Trop Med Hyg. 1993, 87 (Supplement 2): 45-51.

Thomson MC, Connor SJ, Quinones ML, Jawara M, Todd J, Greenwood BM: Movement of Anopheles gambiae s.l. vectors between villages in The Gambia. Med Vet Entomol. 1995, 9: 413-419.

Thomas CJ, Lindsay SW: Local-scale variation in malaria infection amongst rural Gambian children estimated by satellite remote sensing. Trans R Soc Trop Med Hyg. 2000, 94: 159-163.

Muirhead-Thomson RC: Mosquito behaviour in relation to malaria transmission and control in the tropics. London: Edward Arnold & Co.; 1951.

Muirhead-Thomson RC: The significance of irritability, behaviouristic avoidance and allied phenomena in malaria eradication. Bull Wld Hlth Org. 1960, 22: 721-734.

MacDonald G: The epidemiology and control of malaria. London: Oxford University Press; 1957.

Garrett-Jones C: Prognosis for interruption of malaria transmission through assessment of the mosquito's vectorial capacity. Nature. 1964, 204: 1173-1175.

Snow RW, McCabe E, Mbogo CNM, Molyneux CS, Some ES, Mung'ala VO, Nevill CG: The effect of delivery mechanism on the uptake of bednet re-impregnation in Kilifi district, Kenya. Hlth Pol Plan. 1999, 14: 18-25. 10.1093/heapol/14.1.18.

Lines J, Harpham T, Leake C, Schofield C: Trends, priorities and policy directions in the control of vector-borne diseases in urban environments. Hlth Pol Plan. 1994, 9: 113-129.

Hii JLK, Smith T, Vounatsou P, Alexander N, Mai A, Ibam E, Alpers MP: Area effects of bednet use in a malaria-endemic area in Papua New Guinea. Trans R Soc Trop Med Hyg. 2001, 95: 7-13.

Woolhouse MEJ, Dye C, Etard JF, Smith T, Charlwood JD, Garnett GP, Hagan P, Hii JLK, Ndhlovu PD, Quinnell RJ, Watts CH, Chaniawana SK, Anderson RM: Heterogeneities in the transmission of infectious agents: implications for the design of control programmes. Proc Natl Acad Sci USA. 1997, 94: 338-342. 10.1073/pnas.94.1.338.

Carter R, Mendis KN, Roberts D: Spatial targeting of interventions against malaria. Bull Wld Hlth Org. 2000, 78: 1401-1411.

Smith T, Charlwood JD, Takken W, Tanner M, Spiegelhalter DJ: Mapping densities of malaria vectors within a single village. Acta Trop. 1995, 59: 1-18. 10.1016/0001-706X(94)00082-C.

Lindsay SW, Armstrong Schellenberg JRM, Zeiler HA, Daly RJ, Salum FM, Wilkins HA: Exposure of Gambian children to Anopheles gambiae vectors in an irrigated rice production area. Med Vet Entomol. 1995, 9: 50-58.

Ribeiro JMC, Seulu F, Abose T, Kidane G, Teklehaimanot A: Temporal and spatial distribution of anopheline mosquitoes in an Ethiopian village: implications for malaria control strategies. Bull Wld Hlth Org. 1996, 74: 299-305.

Hewitt S, Ford E, Urhaman H, Muhammad N, Rowland M: The effect of bednets on unprotected people: open-air studies in an Afghan refugee village. Bull Entomol Res. 1997, 87: 455-459.

Lindsay SW, Adiamah JH, Armstrong JRM: The effect of permethrin-impregnated bednets on house entry by mosquitoes in The Gambia. Bull Entomol Res. 1992, 82: 49-55.

Boete C, Koella JC: A theoretical approach to predicting the success of genetic manipulation of malaria mosquitoes in malaria control. Malar J. 2002, 1: 3. 10.1186/1475-2875-1-3. [http://www.malariajournal.com/content/1/1/3]

Kiszewski AE, Spielman A: Spatially explicit model of transposon-based genetic drive mechanisms for displacing fluctuating populations of anopheline vector mosquitoes. J Med Entomol. 1998, 35: 584-90.

Saul A: Minimal efficacy requirements for malaria vaccines to significantly lower transmission in epidemic or seasonal malaria. Acta Trop. 1993, 52: 283-296. 10.1016/0001-706X(93)90013-2.

Soper FL, Wilson DB: Anopheles gambiae in Brazil: 1930 to 1940. New York: The Rockefeller Foundation; 1943.

Shousha AT: Species-eradication. The eradication of Anopheles gambiae from Upper Egypt, 1942–1945. Bull Wld Hlth Org. 1948, 1: 309-353.

Utzinger J, Tozan Y, Singer BH: Efficacy and cost effectiveness of environmental management for malaria control. Trop Med Intl Hlth. 2001, 6: 677-687. 10.1046/j.1365-3156.2001.00769.x.

Rozendaal JA: Vector Control. Methods for use by individuals and communities. Geneva: WHO; 1997.

Gopaul R: Surveillance entomologique à Maurice. Santé. 1995, 5: 401-405.

Fletcher M, Teklehaimanot A, Yemane G: Control of mosquito larvae in the port city of Assab by an indigenous larvivorous fish, Aphanius dispar. Acta Trop. 1992, 52: 155-166. 10.1016/0001-706X(92)90032-S.

Sabatinelli G, Blanchy S, Majori G, Papakay M: Impact of the use of the larvivorous fish, Poecilia reticulata in the transmission of malaria in the Federal Islamic Republic of Comoros. Ann Parasitol Hum Comp. 1991, 66: 84-88.

Louis JP, Albert JP: Malaria in the Republic of Djibouti. Strategy for control using a biological antilarval campaign: indigenous larvivorous fishes (Aphanius dispar) and bacterial toxins. Méd Trop. 1988, 48: 127-131.

Barbazan P, Baldet T, Darriet F, Escaffre H, Djoda DH, Hougard JM: Impact of treatments with Bacillus sphaericus on Anopheles populations and the transmission of malaria in Maroua, a large city in a savannah region of Cameroon. J Am Mosq Control Assoc. 1998, 14: 33-9.

Ragavoodoo C: Situation du paludisme à Maurice. Santé. 1995, 5: 371-375.

Julvez J: Historique du paludisme insulaire dans l'océan Indien (Sud-Ouest). Une approche éco-épidémiologique. Santé. 1995, 5: 353-357.

Shiff C: Integrated approach to malaria control. Clin Microbiol Rev. 2002, 15: 278-293. 10.1128/CMR.15.2.278-293.2002.

Marsh K, Snow RW: Malaria transmission and morbidity. Parassitologia. 1999, 41:

Killeen GF, McKenzie FE, Foy BD, Bogh C, Beier JC: The availability of potential hosts as a determinant of feeding behaviours and malaria transmission by mosquito populations. Trans R Soc Trop Med Hyg. 2001, 95: 469-476.

Minakawa N, Mutero CM, Githure JI, Beier JC, Yan G: Spatial distribution and habitat characterization of Anopheline mosquito larvae in Western Kenya. Am J Trop Med Hyg. 1999, 61: 1010-1016.

Charlwood JD, Edoh D: Polymerase chain reaction used to describe larval habitat use by Anopheles gambiae complex (Diptera: Culicidae) in the environs of Ifakara, Tanzania. J Med Entomol. 1996, 33: 202-204.

Romi R, Ravoniharimelina B, Ramiakajato M, Majori G: Field trials of Bacillus thuringiensis H-14 and Bacillus sphaericus (strain 2362) formulations against Anopheles arabiensis in the central highlands of Madagascar. J Amer Mosq Control Assoc. 1993, 9: 325-329.

Seyoum A, Abate D: Larvicidal efficacy of Bacillus thuringiensis var. israelensis and Bacillus sphaericus on Anopheles arabiensis in Ethiopia. World J Microbiol Biotechnol. 1997, 13: 21-24.

Karch S, Asidi N, Manzambi ZM, Salaun JJ: Efficacy of Bacillus sphaericus against the malaria vector Anopheles gambiae and other mosquitoes in swamps and rice fields in Zaire. J Amer Mosq Control Assoc. 1992, 8: 376-380.

Lerer LB: Cost-effectiveness of malaria control in sub-Saharan Africa. Lancet. 1999, 354: 1123-1124.

Acknowledgements

We thank Prof. Steven W. Lindsay for insightful and constructive suggestions for improving the manuscript. Financial support was provided by NIH (awards U19AI4511 and D43 TW01142) and by Valent BioSciences Corporation.

Additional information

Competing Interests

One of the authors (UF) has been supported over the last two years by Valent Biosciences Corporation, a commercial manufacturer of microbial larvicides.

Authors' contributions

GFK formulated the hypothesis and model, following which the literature reviews and drafting of the manuscript were carried out with the participation of UF and BGJK. All authors read and approved the final manuscript.

About this article

Cite this article

Killeen, G.F., Fillinger, U. & Knols, B.G. Advantages of larval control for African malaria vectors: Low mobility and behavioural responsiveness of immature mosquito stages allow high effective coverage. Malar J 1, 8 (2002). https://doi.org/10.1186/1475-2875-1-8

DOI: https://doi.org/10.1186/1475-2875-1-8