Abstract

Background

Knowledge of the extent to which patient characteristics are systematically associated with variation in patient evaluations will enable us to adjust for differences between practice populations and thereby compare GPs. Whether this is appropriate depends on the purpose for which the patient evaluation was conducted. Associations between evaluations and patient characteristics may reflect gaps in the quality of care or may be due to inherent characteristics of the patients. This study aimed to determine such associations in a setting with a comprehensive list system and gate-keeping.

Methods

We conducted a nationwide Danish patient evaluation survey among voluntarily participating GPs using the EUROPEP questionnaire, which produced 28,260 patient evaluations (response rate 77.3%) of 365 GPs. In our analyses we compared the prevalence of positive evaluations in groups of patients.

Results

We found a positive GP assessment to be strongly associated with increasing patient age and increasing frequency of attendance. Patients reporting a chronic condition were more positive, whereas a low self-rated health was strongly associated with less positive scores also after adjustment. The association between patient gender and assessment was weak and inconsistent and depended on the focus. We found no association either with the patients' educational level or with the duration of listing with the GP even after adjusting for patient characteristics.

Conclusion

Adjustment for patient differences may produce a more fair comparison between GPs, but may also blur the assessment of GPs' ability to meet the needs of the populations actually served. On the other hand, adjusted results will enable us to describe the significance of specific patient characteristics to patients' experience of care.

Background

Patient evaluations of care are increasingly being included in systematic assessments aimed at improving the quality of general practice. The extent to which patients are able to assess the technical quality of care is being debated [1, 2]; however, when the focus is on doctor-patient relationships, patients' experience is an obvious and valuable assessment tool [3, 4]. Besides, WHO defines a high degree of patient satisfaction as one of five criteria for good health care quality [5].

Variation in patient evaluations of general practice reflects differences between the evaluated general practitioners (GPs) and their practices, and between the patients themselves.

A patient's evaluation is shaped by the actual contents and quality of the contacts over time, the patient's experience of the contact, his or her expectations, former experience, needs and the reason for the encounter. These aspects are also influenced by external factors such as family and friends, press and official (health) authorities and the cultural and historical setting at the time of the patient's life [6, 7].

Knowledge of the extent to which patient characteristics are systematically associated with variation in patient evaluations will help to discover possible gaps in the quality of care and also enable us to adjust for differences between practice populations and thereby compare GPs. In addition, we will be able to calculate the variation attributable to GP factors. On the other hand, adjustment for patient characteristics will blur the GPs' ability to tailor care according to the individual patient [8, 9].

Many studies and reviews have explored associations between patients' evaluation of care and their characteristics. The most consistent finding is that older patients and patients with a low educational level rate care higher than younger patients and better educated patients [2, 4, 6, 10–14]. While many studies find no gender difference in the assessment of the GP, a few studies report women to be more satisfied with care than men [4, 10]. Similar results are reported regarding patients' socioeconomic status with a few studies reporting patients with a high socioeconomic status being a little more satisfied compared with less well off patients [6, 11]. Diverging associations between patients' health and their care assessment have also been described [14].

Earlier studies have been carried out in different settings such as hospitals, outpatient clinics and general practice, thus making comparisons irrelevant. The applied instruments have been of varying quality and study populations have often been small [15]. Some authors have set out to measure patients' satisfaction with care, although a proper theory for the construct "satisfaction" has never been worked out [1]. A European tool for patients' assessment of specific aspects of general practice care, the EUROPEP instrument, was developed through the 1990s [16, 17] on the basis of patients' care priorities [18] and refined through a strict validation procedure [19]. The 23 items are displayed in [see Additional file 1].

Patients' assessment of specific aspects of care may be shaped by the context including the health care organisation which reduces the transferability of standards between organisations. The Danish health care system is based on self-employed GPs working as gatekeepers for the public health services on a contract basis serving patients on their lists (a brief introduction to the Danish general practice is given in [see Additional file 2]).

This study aimed to determine to what extent variations in patients' evaluation of the GPs were associated with the patients' gender, age, health, educational level, frequency of attendance and adherence to the GP in a setting with a comprehensive list system and gate-keeping.

Methods

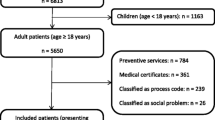

Study population

In 2002–4 all 2181 GPs from ten Danish counties were invited to carry out patient evaluations of their practices. A total of 365 GPs (16–34% of all GPs in these counties) signed up. The participating GPs handed out questionnaires to 100 successive patients seen in the surgery or at home visits. The patients were at least 18 years of age, were listed in the practice and were able to read and write Danish. They were informed that their replies were anonymous to the doctor. Each questionnaire was identified by a serial number connecting it with the GP who handed it out and to the patient. If the GP had not handed out all questionnaires within two weeks, (s)he returned the rest to the project secretariat for registration.

The patients were asked to assess the GP they considered to be their personal GP based on their contact experience over the past 12 months. They were also asked to write the GP's name on the questionnaire to confirm which GP was assessed and to allow individual assessment of GPs in partnership practices. The questionnaires were returned by the patients in prepaid envelopes to the project secretariat.

In order to be able to carry out the reminder procedure the GPs registered the names, addresses and serial numbers from the questionnaires handed out. Reminders with new questionnaires were sent to non-responding patients three to five weeks after the GPs' distribution of the patient-questionnaires and the patient lists were thereafter destroyed.

The questionnaire

The questionnaire contained the 23 items forming the EUROPEP instrument [16, 17]. These questions covered specific aspects of general practice care and were grouped into five dimensions: doctor-patient relationship (6 questions), medical care (5 questions), information and support (4 questions), organisation of care (2 questions) and accessibility (6 questions). The answers were marked on a 5-point Likert scale ranging from "poor" to "excellent", with "acceptable" as the middle value. Alternatively, the patients could choose a sixth category "not able to answer/not relevant". The questionnaire also included questions about the patient's gender, age, educational level, frequency of attendance to a general practice for the previous 12 months, time listed with the GP, self-rated health and chronic conditions.

In the project secretariat we coded the diagnoses reported by patients with chronic conditions according to the major ICPC-2 groups [20] with the label K for cardiovascular, R for respiratory and T for endocrine diseases along with two ad hoc-groups labelled C for patients reporting cancer diagnoses and M for patients with multiple diagnoses.

Assessments of the GPs

Within each dimension, a patient's evaluation was included only if 50% or more of the items had been answered in one of the six categories. An answer was considered positive if it fell in one of the two most favourable categories. The assessment of the dimension was categorised as 100%, 50–99% or 0–49% positive depending on how many of the items marked on the 5-point Likert scale were positive. We compared the prevalences of assessments in the 100%-category between strata and the prevalences in the 0–49%-category, respectively. We excluded responses from patients not indicating which GP they assessed or assessing non-participating GPs from our analyses.
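The scoring rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code used in the study: the numeric answer coding (1 to 5 for the Likert scale, 6 for "not able to answer/not relevant", `None` for a blank item), the function name `classify_dimension`, and the handling of a dimension with no Likert answers at all are hypothetical choices made for the example.

```python
from typing import Optional, Sequence

# Assumed coding for illustration: 1 = "poor" ... 5 = "excellent",
# 6 = "not able to answer/not relevant", None = item left blank.
NOT_RELEVANT = 6


def classify_dimension(answers: Sequence[Optional[int]]) -> Optional[str]:
    """Return "100%", "50-99%" or "0-49%" positive, or None if excluded."""
    answered = [a for a in answers if a is not None]
    # Inclusion rule: at least 50% of the items answered in any of the six categories.
    if 2 * len(answered) < len(answers):
        return None
    # Only items marked on the 5-point Likert scale count towards the share.
    likert = [a for a in answered if a != NOT_RELEVANT]
    if not likert:
        return None  # assumed handling: nothing was rated, so nothing to classify
    # An answer is positive if it falls in one of the two most favourable categories.
    positive = sum(1 for a in likert if a >= 4)
    share = positive / len(likert)
    if share == 1.0:
        return "100%"
    if share >= 0.5:
        return "50-99%"
    return "0-49%"


if __name__ == "__main__":
    print(classify_dimension([5, 4, 5, 4, 5, 5]))
    print(classify_dimension([5, 4, 3, 6, None, 5]))
    print(classify_dimension([1, 2, None, None, None, None]))
```

Under these assumptions, a patient who rates every answered item "very good" or "excellent" falls in the 100% category, while a patient who answers fewer than half of the items in a dimension is left out of the analysis of that dimension.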

Patient characteristics

The patients' age was calculated on the basis of their year of birth and the year of assessment. The patients were grouped into 13 five-year age categories. The patients' level of further education (theoretical education or formalized vocational training after grammar or high school) was categorised as none, less than 2, 2–4 or 5 or more years. Patients undergoing education and patients who could not specify the length of their education formed a separate category. The frequency of attending a GP and the duration of the patient's listing with a particular GP or practice were included as categorical variables (Table 1). Self-rated health was included with its five categories (excellent, very good, good, poor and bad [21]). Patients with chronic conditions were grouped according to the ICPC main category.

Statistics

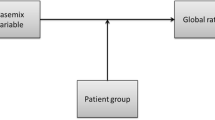

We investigated univariate associations between the patient characteristics and the assessment scores for each of the five dimensions, accounting for the clustering of patients by GPs [22]. Prevalence ratios (PR) with 95%-confidence intervals (95% CI) were preferred to odds ratios (OR) which would tend to overestimate the associations because the prevalence of the variables was high [23, 24]. We used generalised linear models (GLM) with log link for Bernoulli family, i.e., modelling the PR. Because of the high prevalence, some of the adjusted GLM analyses could not converge with the Bernoulli family. In these situations we used Poisson regression with robust variance [25]. Furthermore, we adjusted for confounders associated with both assessment and patient characteristics. We found correlations (Pearson's correlation coefficient) between age and frequency of attendance and self-rated health, and accordingly adjusted for these three variables adding gender to the multiple regression analyses. Even though significantly associated with assessment, educational level was not correlated with other patient characteristics and we chose not to adjust for it in the model. Due to relatively high collinearity, we chose to adjust for self-rated health rather than for chronic conditions. In these analyses we also accounted for the clustering of patients. Analyses were performed using complete data only, i.e., the univariate and the GLM analyses were performed using the same data set. We used Stata 9.1 for data processing [26].
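The reason for preferring prevalence ratios when the outcome is common can be illustrated with a small calculation. The counts below are invented for the example and are not study data; the function names are likewise hypothetical. With 900 of 1000 positive evaluations in one group and 800 of 1000 in another, the PR is 0.9/0.8 = 1.125, whereas the OR is (900/100)/(800/200) = 2.25. With a rare outcome (90 and 80 per 1000), the two measures nearly coincide.

```python
def prevalence_ratio(pos_a: int, n_a: int, pos_b: int, n_b: int) -> float:
    """Ratio of the prevalence of a positive outcome in group A versus group B."""
    return (pos_a / n_a) / (pos_b / n_b)


def odds_ratio(pos_a: int, n_a: int, pos_b: int, n_b: int) -> float:
    """Ratio of the odds of a positive outcome in group A versus group B."""
    odds_a = pos_a / (n_a - pos_a)
    odds_b = pos_b / (n_b - pos_b)
    return odds_a / odds_b


if __name__ == "__main__":
    # Hypothetical counts: a common outcome, then a rare outcome.
    for label, counts in [("common", (900, 1000, 800, 1000)),
                          ("rare", (90, 1000, 80, 1000))]:
        pr = prevalence_ratio(*counts)
        or_ = odds_ratio(*counts)
        print(f"{label}: PR = {pr:.3f}, OR = {or_:.3f}")
```

The sketch covers only crude two-group comparisons; it does not reproduce the adjusted, cluster-aware regression models described above.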

Ethical approval

Questionnaire surveys such as the present study do not fall within the scope of The Danish National Committee on Biomedical research Ethics. Therefore, we did not need any ethical approval to carry out this study.

Informed consent

The participation in this study of both the doctors and the patients was voluntary. The data in this study derive entirely from the evaluation of doctors who had responded to an individual invitation to be evaluated by the patients. The patients were asked to answer the questionnaire and as such were free not to do so. The patients knew that aggregated and anonymised replies were fed back to the doctors.

Results

The GPs distributed a total of 36,561 questionnaires. Valid responses were obtained from 28,260 patients (response rate 77.3%). More than twice as many respondents were female (Table 1) which reflects that women attend a GP twice as often as men [27] and that women are more prone to respond to questionnaires than men (Heje et al., submitted).

Gender differences in assessments (Tables 2, 3, 4, 5, 6 (one table per dimension)) were statistically significant, but numerically quite small. Adjusted analysis showed that male patients assessed "medical care" and "information and support" less favourably, but "organisation of care" more favourably than female patients. In all dimensions the scores increased statistically significantly with increasing patient age. This trend was robust to adjusting. In all dimensions but "medical care", crude PRs for positive evaluations tended to decline with a rising level of education, but this association was eliminated by adjusting.

Scores for all dimensions rose with an increasing frequency of attendance. Adjustment for patient characteristics eliminated this association for "medical care", but not for the other dimensions. Scores also tended to increase the longer the patients had been listed with the GP, but not after adjusting for confounding patient characteristics.

We found consistent, statistically significantly decreasing scores with decreasing self-rated health in all dimensions which was even more pronounced after controlling for confounders.

Patients reporting a chronic condition gave more positive assessments of their GP – the most positive being the patients with "KRTC-conditions". The associations were modified but not eliminated upon adjustment except for "accessibility" where we found no association.

Discussion

We found a positive GP assessment to be associated with increasing patient age and increasing frequency of attendance. Patients reporting a chronic condition were more positive, whereas a poor self-rated health was strongly associated with less positive scores also after adjustment. The association between patient gender and assessment was weak and inconsistent and depended on the focus. We found no association either with the patients' educational level or with the duration of listing with the GP even after adjusting for patient characteristics.

This project was part of a larger national patient evaluation project, which may have introduced some sources of bias. Thus, all GPs in the involved counties were invited and those who signed up may not necessarily be a representative sample. The method for patient inclusion would ideally secure a random sample of the doctor-seeking part of the listed patients where frequently attending patients, evidently, would be overrepresented. We do not know to what extent GPs forgot to hand out questionnaires or even if they more systematically left some patients out. However, in this study we focused on adjusted associations between assessments and patient characteristics. Selection bias would therefore seem to have a smaller impact than if we had studied actual levels of assessment.

Duration of further education is only a rough indicator of education. We chose this simple indicator because it lends itself better to use in a self-administered questionnaire than more complex indicators. A certain recall bias may have affected indications of frequency of attendance and duration of listing with the GP. Such information bias may be differential, which would tend to overestimate the magnitude, but not the direction, of the associations found [24].

We learned from our pilot-study that many patients did not understand the expression "chronic illness", so we added "or a serious disease lasting more than three months". This probably enhanced the sensitivity of the question but lowered its specificity, resulting in overrepresentation of more trivial conditions. In order to be able to compare evaluations by patients with a genuine chronic disease with that of those with no chronic conditions, we divided the respondents into three groups: those reporting no chronic condition, those reporting a cardiovascular, respiratory, endocrine or cancer diagnosis (ICPC-2-categories K, R and T and the ad hoc category C), and those reporting other or multiple diagnoses. This may have resulted in the exclusion of some very ill patients with for instance cardiovascular disease in addition to diabetes thus tending to underestimate the significance of suffering from a chronic condition.

This study enjoyed a very high statistical power with over 27,000 cases included. We were therefore able to detect quite small, statistically significant associations. Some statistically significant associations were so small that their clinical relevance could be questioned. However, the considerable power of our analyses is accompanied by an almost negligible risk of overlooking associations (type II-error).

While some earlier studies have presented diverging results on the association between patient gender and assessment [6, 13, 28], we found only small and inconsistent associations, which is in concordance with a meta-analysis performed by Hall et al. [11]. This finding may be rooted in the absence of any gender influence on the way patients experience health care or in the GPs' possible intuitive adjustment of their care to the different needs of different patients [9].

The adjusted analyses showed a strong positive association between patient age and assessment level, which is also a consistent finding in other studies [6, 11]. This association may be rooted not only in the long-standing relationship with their GP and a higher age-related morbidity, but also in a more realistic view on health, health care expectancies and doctors' skills due to the patients' life experience. The finding may also be due to a general positivity of older people, or what some may call a more mellow way of judging [29]. However, we could also be facing a cohort effect, although this is less probable considering the linearity of the association.

Crude analysis showed an expected negative association between educational level and assessment scores, which is in concordance with earlier findings [11]. In our study, however, the association was eliminated after adjustment except for the heterogeneous group of patients undergoing education and patients who were unable to report the length of their education, which indicates that the association may have been confounded by other characteristics. This difference may be due to the use of different methods for measuring educational level, but it may also reflect that associations found in one cultural setting may not necessarily be valid in another.

Frequency of attendance is a multifaceted variable. For example, we do not know if a high number of encounters is the result of the patient's or the GP's initiative (e.g. half-yearly control appointments for chronic disease). Still, it is an indicator of the intensity of the doctor-patient relationship, just as the duration of listing with the GP is an indicator of the relational continuity between the GP and his or her patient [30]. Patients' age, time on the GP's list, frequency of attendance and health are closely interconnected. Our adjusted PRs therefore capture a "cleaner" effect on the assessment of being listed for years with the same GP and of the frequency of attending the GP. Adjustment of the latter for health ensured that the positive assessment was not an expression of the ill patient relying on the quality of the GP care [28].

Continuity is one of the core qualities of the doctor-patient relationship in a health care system where the GP is the patient's primary contact with the health care system [31]. The possible migration of dissatisfied patients from the GPs' lists favours a positive association between assessment and time on the GP's list. However, unlike Hjortdahl and Laerum [32] we found no association with the duration of the relationship but a positive association with the intensity. This may indicate that the positive association between continuity and assessment demonstrated in earlier studies [10, 33, 34] may be correlated with other characteristics which we adjusted for in the present study.

We found diverging results regarding the association between health and assessment of the care depending on whether we looked at self-rated health or diagnosed chronic illness. In a paper by Rahmqvist [14] this was very well illustrated. The health indicator used in that study was a mix of self and physician ratings and the study found no association between the patients' health and their rating of the care. Hall et al. [35] also used a mixed health indicator with a seeming emphasis on self-rated health indicators and found that poor health was associated with dissatisfaction.

Both Hall et al. [36, 37] and Wensing et al. [38] found an association between less positive assessments and poor self-rated health. We also found this strong negative association between self-rated health and assessment after adjusting for confounders, but we also found that patients who reported a chronic condition gave more positive assessments in all dimensions except accessibility, with patients suffering from cardiovascular, respiratory, endocrine and cancer diseases giving the most positive assessments. This is an assessment paradox because GPs received more negative assessments from patients with a poor self-rated health, but more positive assessments from patients with chronic illness. This was also found by Zapka et al. [39]. This may be due to our adjusting for self-rated health, which may be somewhat risky when dealing with chronic illness. On the other hand, a possible explanation may be that the GPs were more capable of handling patients with exact diagnoses, and maybe even capable of improving their self-rated health, than of handling patients who rated their health as poor but did not fit into a specific disease category (e.g. patients with somatization disorders [40]). We may have been demonstrating an effect of the clinical recommendations on the handling of different chronic diseases that have been implemented in Danish general practice through the past few years. All in all, these results illustrate how the use of different health indicators may affect the association between health and assessment and that it is crucial to specify how health is measured whenever this parameter is being used.

We only included a limited number of patient variables. Inclusion of more and specific variables reflecting psycho-socio-cultural aspects might have added value to the study; in particular, it might have helped explain the oppositely directed associations between self-rated health, chronic conditions and assessments.

If patients' demands, expectations and experience, which are determinants of satisfaction in most models [6, 7], were always in balance we would probably see no assessment variation between patients with different characteristics. But, as we have demonstrated, assessments do vary with patient characteristics. In this paper we chose to publish the crude as well as the adjusted results of the analyses of possible associations between patient characteristics and evaluations. Results of this kind serve to point out possible quality deficits in general, and to serve this purpose the results need to be adjusted for possible confounders. However, our study offers no possibility for deciding whether the source of evaluation differences between groups of patients lies with the patient or with the care and hence with the GP.

Whether or not the results from patient evaluations of care providers should be adjusted for uneven distribution of patient characteristics also depends on the purpose for which they are produced. Adjustment for patient differences may produce a more fair comparison between GPs, and when patient evaluations are used for accreditation purposes it may also seem fair to adjust for differences in the evaluated GPs' patient populations. Yet, adjustment may also blur the assessment of GPs' ability to meet the needs of the populations actually served [9, 11] and thus render quality improvement at a GP level difficult.

Conclusion

In a setting with a comprehensive list system and gatekeeping, we performed a patient evaluation study using a validated international questionnaire among voluntarily participating GPs and a large number of patients, thus producing results with high statistical precision. After adjusting for patients' gender, age, frequency of attending a GP and self-rated health we confirmed findings from earlier studies that there is a positive association between patients' age and frequency of attendance and their assessment of their GP and a weak and inconsistent association with patients' gender. We also showed that patients reporting a chronic condition were more positive in their assessment of the GP than patients without a chronic condition, whereas in the same population assessing the same aspects of practice, the assessments turned out less positive with decreasing self-rated health. We were not able to demonstrate associations with patients' level of education or the time the patients had been listed with the GP.

In this study we demonstrated statistically significant yet minor associations between patients' characteristics and their assessment of the GP. Some of the variation may also be associated with GP and practice characteristics as we have demonstrated in a related, already published paper [41]. The results from this study may lead to further investigations into the causes behind the associations found and to improvement activities in case they are due to quality deficits. We will leave it to the relevant parties to discuss and decide whether or not they should be used for standardising future quality assessments for comparison purposes.

References

Williams B: Patient satisfaction: a valid concept?. Soc Sci Med. 1994, 38: 509-516. 10.1016/0277-9536(94)90247-X.

Lewis JR: Patient views on quality care in general practice: literature review. Soc Sci Med. 1994, 39: 655-670. 10.1016/0277-9536(94)90022-1.

Donabedian A: Quality assurance in health care: Consumers' role. Quality in Health Care. 1992, 1: 247-251.

Cleary PD, McNeil BJ: Patient satisfaction as an indicator of quality care. Inquiry. 1988, 25 (1): 25-36.

Shaw CD, Kalo I: A background for national quality policies in health systems. 2002, Copenhagen, WHO, 1-48.

Sitzia J, Wood N: Patient satisfaction: a review of issues and concepts. Soc Sci Med. 1997, 45: 1829-1843. 10.1016/S0277-9536(97)00128-7.

Thompson AG, Sunol R: Expectations as determinants of patient satisfaction: concepts, theory and evidence. Int J Qual Health Care. 1995, 7: 127-141. 10.1016/1353-4505(95)00008-J.

Fox JG, Storms DM: A different approach to sociodemographic predictors of satisfaction with health care. Soc Sci Med [A]. 1981, 15: 557-564.

Perneger TV: Adjustment for patient characteristics in satisfaction surveys. Int J Qual Health Care. 2004, 16: 433-435. 10.1093/intqhc/mzh090.

Pascoe GC: Patient satisfaction in primary health care: A literature review and analysis. Evaluation and program planning. 1983, 6: 185-210. 10.1016/0149-7189(83)90002-2.

Hall JA, Dornan MC: Patient sociodemographic characteristics as predictors of satisfaction with medical care: a meta-analysis. Soc Sci Med. 1990, 30: 811-818. 10.1016/0277-9536(90)90205-7.

Mainz J, Vedsted P, Olesen F: [How do patients evaluate their general practitioners? Danish results from a European study] (in Danish). Ugeskr Laeger. 2000, 162: 654-658.

Campbell JL, Ramsay J, Green J: Age, gender, socioeconomic, and ethnic differences in patients' assessments of primary health care. Qual Health Care. 2001, 10: 90-95. 10.1136/qhc.10.2.90.

Rahmqvist M: Patient satisfaction in relation to age, health status and other background factors: a model for comparisons of care units. Int J Qual Health Care. 2001, 13: 385-390. 10.1093/intqhc/13.5.385.

Sitzia J: How valid and reliable are patient satisfaction data? An analysis of 195 studies. Int J Qual Health Care. 1999, 11: 319-328. 10.1093/intqhc/11.4.319.

Grol R, Wensing M, Mainz J, Jung HP, Ferreira P, Hearnshaw H, Hjortdahl P, Olesen F, Reis S, Ribacke M, Szecsenyi J: Patients in Europe evaluate general practice care: an international comparison. Br J Gen Pract. 2000, 50: 882-887.

Grol R, Wensing M: Patients evaluate general/family practice. The EUROPEP instrument. EQuiP, WONCA Region Europe. 2000

Wensing M, Mainz J, Ferreira PL, Hearnshaw H, Hjortdahl P, Olesen F, Reis S, Ribacke M, Szecsenyi J, Grol R: General practice care and patients' priorities in Europe: an international comparison. Health Policy. 1998, 45: 175-186. 10.1016/S0168-8510(98)00040-2.

Wensing M, Mainz J, Grol R: A standardised instrument for patient evaluations of general practice care in Europe. European Journal of General Practice. 2000, 6: 82-87.

Lamberts H, Wood M: ICPC. [International Classification for Primary Care], Danish version. 1990, Oxford Medical Publications

Bjørner JB, Damsgaard MT, Watt T, Bech P, Rasmussen NK, Kristensen TS, Modvig J, Thunedborg K: [The Danish manual for SF-36. A health status questionnaire], (in Danish). 1997, Copenhagen: Lif

Stata Statistical Software: Release 8.0. 2003, College Station, TX: Stata Corporation

Clayton D, Hills M: Statistical Models in Epidemiology. 1993, Oxford: Oxford University Press

Rothman KJ, Greenland S: Modern Epidemiology. 1998, Philadelphia: Lippincott-Raven Publishers, Second

Barros AJ, Hirakata VN: Alternatives for logistic regression in cross-sectional studies: an empirical comparison of models that directly estimate the prevalence ratio. BMC Med Res Methodol. 2003, 3: 21-10.1186/1471-2288-3-21.

Stata Statistical Software: Release 9.0. 2005, College Station, TX: StataCorp LP

Statistics Denmark, Statbank Denmark. (Accessed November 2005), [http://www.statbank.dk]

Hall JA, Dornan MC: What patients like about their medical care and how often they are asked: a meta-analysis of the satisfaction literature. Soc Sci Med. 1988, 27: 935-939. 10.1016/0277-9536(88)90284-5.

Isaacowitz DM, Vaillant GE, Seligman ME: Strengths and satisfaction across the adult lifespan. Int J Aging Hum Dev. 2003, 57: 181-201. 10.2190/61EJ-LDYR-Q55N-UT6E.

Haggerty JL, Reid RJ, Freeman GK, Starfield BH, Adair CE, McKendry R: Continuity of care: a multidisciplinary review. BMJ. 2003, 327: 1219-1221. 10.1136/bmj.327.7425.1219.

Freeman GK, Olesen F, Hjortdahl P: Continuity of care: an essential element of modern general practice?. Fam Pract. 2003, 20: 623-627. 10.1093/fampra/cmg601.

Hjortdahl P, Laerum E: Continuity of care in general practice: effect on patient satisfaction. BMJ. 1992, 304: 1287-1290.

Baker R, Mainous AG, Gray DP, Love MM: Exploration of the relationship between continuity, trust in regular doctors and patient satisfaction with consultations with family doctors. Scand J Prim Health Care. 2003, 21: 27-32. 10.1080/02813430310002995.

Donahue KE, Ashkin E, Pathman DE: Length of patient-physician relationship and patients' satisfaction and preventive service use in the rural south: a cross-sectional telephone study. BMC Fam Pract. 2005, 6: 40-10.1186/1471-2296-6-40.

Hall JA, Milburn MA, Roter DL, Daltroy LH: Why are sicker patients less satisfied with their medical care? Tests of two explanatory models. Health Psychol. 1998, 17: 70-75. 10.1037/0278-6133.17.1.70.

Hall JA, Feldstein M, Fretwell MD, Rowe JW, Epstein AM: Older patients' health status and satisfaction with medical care in an HMO population. Med Care. 1990, 28: 261-270. 10.1097/00005650-199003000-00006.

Hall JA, Milburn MA, Epstein AM: A causal model of health status and satisfaction with medical care. Med Care. 1993, 31: 84-94. 10.1097/00005650-199301000-00007.

Wensing M, Grol R, Asberg J, van Montfort P, van Weel C, Felling A: Does the health status of chronically ill patients predict their judgements of the quality of general practice care?. Qual Life Res. 1997, 6: 293-299. 10.1023/A:1018405207552.

Zapka JG, Palmer RH, Hargraves JL, Nerenz D, Frazier HS, Warner CK: Relationships of patient satisfaction with experience of system performance and health status. J Ambul Care Manage. 1995, 18: 73-83.

Smith GR, Monson RA, Ray DC: Patients with multiple unexplained symptoms. Their characteristics, functional health, and health care utilization. Arch Intern Med. 1986, 146: 69-72. 10.1001/archinte.146.1.69.

Heje HN, Vedsted P, Sokolowski I, Olesen F: Doctor and practice characteristics associated with differences in patient evaluations of general practice. BMC Health Serv Res. 2007, 7 (46): 46-10.1186/1472-6963-7-46.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6963/8/178/prepub

Acknowledgements

This study was carried out as part of the national project on patient evaluations, DanPEP. We wish to thank all the GPs and patients whose evaluation provided this project with valuable data and Ms. Gitte Hove, cand. scient. bibl., for her competent management of the data. The DanPEP study was supported by grants from the Central Committee on Quality Development and Informatics in General Practice and the Danish Ministry of the Interior and Health. Direct expenses incurred by the participating GPs were refunded by the local Committees for Quality Improvement in General Practice in the counties of Aarhus, Frederiksborg, Funen, Ribe, Southern Jutland, Vejle and Western Zealand and the municipalities of Bornholm, Copenhagen and Frederiksberg.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

HNH, PV and FO planned the study, and HNH carried out the patient evaluation survey assisted by the research secretariat. IS, PV and HNH planned the statistical analyses, which were performed by IS. HNH drafted the manuscript, which was rewritten by HNH, PV, FO and IS. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Heje, H.N., Vedsted, P., Sokolowski, I. et al. Patient characteristics associated with differences in patients' evaluation of their general practitioner. BMC Health Serv Res 8, 178 (2008). https://doi.org/10.1186/1472-6963-8-178

DOI: https://doi.org/10.1186/1472-6963-8-178