Abstract

Background

Computerized decision support systems (CDSS) are believed to have the potential to improve the quality of health care delivery, although results from high quality studies have been mixed. We conducted a systematic review to evaluate whether certain features of prescribing decision support systems (RxCDSS) predict successful implementation, change in provider behaviour, and change in patient outcomes.

Methods

A literature search of Medline, EMBASE, CINAHL and INSPEC databases (earliest entry to June 2008) was conducted to identify randomized controlled trials involving RxCDSS. Each citation was independently assessed by two reviewers for outcomes and 28 predefined system features. Statistical analysis of associations between system features and success of outcomes was planned.

Results

Of 4534 citations returned by the search, 41 met the inclusion criteria. Of these, 37 reported successful system implementations, 25 reported success at changing health care provider behaviour, and 5 noted improvements in patient outcomes. Studies mentioned a mean of 17 features each. The statistical analysis could not be completed, primarily because of the small number of studies and the lack of diversity of outcomes. Descriptive analysis did not confirm any feature to be more prevalent in successful trials relative to unsuccessful ones for implementation, provider behaviour or patient outcomes.

Conclusion

While RxCDSSs have the potential to change health care provider behaviour, very few high quality studies show improvement in patient outcomes. Furthermore, the features of the RxCDSS associated with success (or failure) are poorly described, thus making it difficult for system design and implementation to improve.

Background

Prescribing skills are core to the practice of medicine. As in most developed countries, prescription drugs are currently the fastest growing cost category in Canadian healthcare, exceeding $22 billion annually and increasing at 10.5% yearly [1]. This increase in medication prescribing brings with it the potential for adverse drug events, including prescribing errors. It is estimated that medication errors occur in 57 per 1000 orders, with 18.7–57.7% of these errors having the potential for harm [2]. The suggestion that detection of preventable errors by health care professionals could improve patient safety and reduce the cost of adverse drug events [3] has been sufficient to spawn a multitude of medication safety initiatives, with limited rigorous evaluation of their benefits and harms. Although electronic health records (EHR) and computerized decision support systems (CDSS) have several uses, the main interest in them is to improve patient outcomes by influencing the decision making process of providers [4–6]. CDSS provide patient-specific advice by using algorithms to compare patient characteristics against a knowledge base [7–9]. Prescribing CDSS (RxCDSS) specifically deal with medications and can support functions ranging from basic (e.g. checking for drug-drug interactions) to complex (e.g. integrating patient-specific diagnoses, risk factors, and prior treatments to make a drug recommendation) [10]. These systems may include, but do not require, a formal e-prescribing link with pharmacies.

Reviews evaluating the literature surrounding decision support have noted that these technologies have the potential to improve practitioner performance, but effects on patient outcomes are still unclear [11–15]. Several features have been linked with successful clinical decision support. These include use of a computer to generate the decision support based on automated EHR data analysis, provision of recommendations instead of just assessments, and provision of the decision support at the time and location of decision-making and in synchrony with usual clinician workflow [11, 12, 14, 15].

However, only one of these reviews [14] limited its analysis to high quality evidence (randomized controlled trials). None of the reviews systematically separated outcomes by their natural hierarchy of difficulty (system implementation, provider behaviour change and patient outcomes) or focused on features predicting success versus failure for each outcome domain. Finally, while one review was limited to drug order entry systems [14], no study to date has examined all RxCDSS irrespective of the presence of this system feature.

Our objective was to conduct a systematic review of randomized trials, to evaluate the effectiveness of RxCDSS using a hierarchical approach to defining success, and to determine which features of system design or implementation were associated with the success or failure of RxCDSS implementation, change in provider behaviour, and change in patient outcomes.

Methods

The two primary research questions of this review were: (1) When evaluated rigorously in randomized controlled trials, have current RxCDSS successfully been implemented and altered physician prescribing or patient outcomes? Furthermore, (2) what features of these RxCDSS are associated with success versus failure? Based on the literature and our own experience, we hypothesized that: a) high quality studies of RxCDSS may report successful implementation, but fewer have changed prescriber behaviour and fewer still have demonstrated improved patient outcomes and b) a number of RxCDSS features will be associated with successful versus unsuccessful outcomes as defined above.

RxCDSS Features

Potentially important features were identified primarily from our own e-health research program and clinical experience [16] as well as reviews of the literature [11, 12, 17–19]. A list of 40 features was generated; 12 features were ultimately removed during the review process due to lack of reporting or inability to assess. The remaining 28 features were grouped into 4 categories: Pure technical features, Technical/user interactions, Logic of decision support, and Developmental and Administrative environment (see Figure 1; Additional File 2).

Study Inclusion/Exclusion

We included reports of RCTs of RxCDSS published in English. We considered a RxCDSS to be an intervention which utilized a computer to analyze patient-specific information to advise a prescriber (primarily a physician) or pharmacist when they were writing or filling a prescription, respectively. Although the decision support itself had to be generated electronically, the support could be delivered by any means (e.g. computer terminal, fax, mail, patient record insert). We only considered a system to be a RxCDSS if it intervened before a drug therapy had been chosen by a physician, or had the ability to suggest alternate therapies (i.e. a drug different than that initially prescribed). These are the more challenging decisions in which to intervene and effect change. Systems whose sole purpose was to offer 'fine-tuning' advice on a pre-defined therapy (usually dose modification) were not included in this review. Systems primarily focused on diagnosis, vaccination, or nutrition were also excluded.

Search Strategy

We searched the databases Medline, EMBASE, CINAHL, and INSPEC for articles published since the earliest entry to June 2008. The detailed search strategy is shown in Additional File 3. The search was individually tailored for each database, with search terms from domains of study methodology, general CDSS terms and RxCDSS identifiers. These included: randomized controlled trial, artificial intelligence, decision support systems, computer-assisted therapy, computerized medical records system, reminder systems, hospital information systems, computer systems, decision support techniques, ambulatory care information systems, computer assisted decision making, medical errors, therapeutic uses, drug therapy, drug information services, drug interactions, drug monitoring, guideline adherence, medication systems, drug administration schedule, drug costs, drug dose-response relationship, and computer assisted drug therapy. A pilot test was completed to ensure that known relevant studies were identified. All citations obtained were downloaded into Reference Manager, version 11.0.

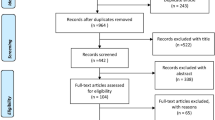

Study Selection

The titles of all returned studies were reviewed, and those potentially matching our definition of a RxCDSS were kept. Next, the abstracts were assessed independently by two reviewers to determine whether the studies met the inclusion criteria. Disagreements between reviewers were resolved by consensus and, if necessary, by arbitration of a third reviewer. If it was uncertain whether a study met the inclusion criteria, the study moved to the next stage of assessment in order to decrease the likelihood that a relevant study was overlooked.

During full-text review, articles were once again reviewed independently using detailed data extraction forms which extracted details on methods, study validity, study outcomes and features. Before use, the data extraction forms were critiqued for face validity by a panel of methodologists experienced in systematic reviews and CDSS. The forms were also piloted to improve usability.

Analysis

Methodological quality of each RCT was assessed using a modified scale adapted from Garg et al [11]. Our rating system assessed studies on four potential sources of bias: unit of allocation, presence of baseline differences between groups potentially linked to study outcomes, objectiveness of outcome, and completeness of follow-up. Each source of bias was rated on a scale of 0 to 2, with 2 indicating the highest methodological quality. The results of this evaluation were summed with a maximum total possible score of 8.
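The scoring rule described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic (four items, each rated 0 to 2, summed to a maximum of 8); the class and field names are hypothetical and are not taken from the original extraction forms.

```python
from dataclasses import dataclass


@dataclass
class QualityRating:
    """One study's ratings on the four sources of bias (hypothetical field names)."""
    unit_of_allocation: int
    baseline_differences: int
    outcome_objectivity: int
    follow_up_completeness: int

    def total(self) -> int:
        """Sum the four item scores; each must be 0, 1 or 2, so the maximum is 8."""
        items = (
            self.unit_of_allocation,
            self.baseline_differences,
            self.outcome_objectivity,
            self.follow_up_completeness,
        )
        for score in items:
            if score not in (0, 1, 2):
                raise ValueError(f"item score must be 0, 1 or 2, got {score}")
        return sum(items)


# Illustrative ratings only, not data from any included trial.
example = QualityRating(2, 1, 2, 1)
print(example.total())  # 6
```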

Study outcomes were assessed for success in each of our three domains of focus: 'Implementation', 'Change in Health Care Provider Behaviour', and 'Change in Patient Outcomes'. Implementation was considered successful if the RxCDSS was successfully introduced and utilized by the clinical staff. A successful change in provider behaviours required reporting of changes such as a decrease in inappropriate prescribing or a change to a more cost-effective therapy. Lastly, impact on patient outcomes was considered successful if the study reported improvements in patients' health (e.g. decreases in morbidity or mortality). These domains of outcomes were hypothesized to be conditional and hierarchical, with success required in implementation before changes in provider behaviour would be noted, and so on. Since the concept of minimal clinically important difference in this area of research remains undefined, outcomes were assessed for statistical significance as reported by the original study [20].

Each RCT report was reviewed several times independently to ensure complete abstraction of features of interest. Each feature on our list was rated for each study as present, absent, or could not assess. 'Could not assess' was used when, even after extensive discussion, reviewers could not agree that a feature was present or absent. For the purposes of analysis, features that could not be assessed were considered absent.

Consensus was obtained as described above for methodological quality scores, RxCDSS success and presence/absence of features. Descriptive statistics were used to characterize the studies included, their degree of success, and the number of features reported. Inter-rater reliability for selected ratings (methodological quality score, success, and presence or absence of features) was calculated and reported as a kappa statistic. We planned to measure the association between our three-tier definition of success of the individual studies and the feature list using univariate binary logistic regression. This method requires roughly equivalent numbers of successful and unsuccessful studies per outcome. Statistical analyses were conducted using SAS 9.1 (Cary, North Carolina).

Results

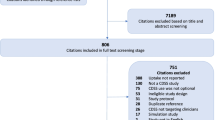

Our search protocol returned 4534 unique citations (1179 from Medline, 1072 from EMBASE, 1053 from CINAHL, and 1204 from INSPEC plus an additional 26 from the reference lists). Of these, 332 abstracts were evaluated, and 110 were chosen for full text review (see study flow diagram in Figure 2). At this stage, 33 (30%) were removed for not meeting initial inclusion criteria (18 did not deal with prescribing, 7 were not randomized controlled trials, 3 were not drug-related, 3 were extension studies or interim analysis, 1 was a foreign language study, and 1 did not use a computer to offer the decision support). In addition, 36 (32.7%) were deemed to be a drug dosing CDSS and were excluded. The final review sample consisted of 41 studies (see Additional File 4) [9, 21–60].
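As a quick consistency check, the counts reported in this paragraph can be re-added in Python. All numbers below are copied from the text; only the variable names and the short labels for the exclusion reasons are our own.

```python
# Citations returned per database, plus those found in reference lists.
database_hits = {"Medline": 1179, "EMBASE": 1072, "CINAHL": 1053, "INSPEC": 1204}
reference_list_hits = 26
total_citations = sum(database_hits.values()) + reference_list_hits

# Full-text stage: reasons for failing the initial inclusion criteria.
full_text_reviewed = 110
exclusion_reasons = {
    "not about prescribing": 18,
    "not a randomized controlled trial": 7,
    "not drug-related": 3,
    "extension study or interim analysis": 3,
    "foreign language": 1,
    "decision support not computer-generated": 1,
}
excluded_not_eligible = sum(exclusion_reasons.values())
excluded_dosing_only = 36

included = full_text_reviewed - excluded_not_eligible - excluded_dosing_only

print(total_citations, excluded_not_eligible, included)  # 4534 33 41
print(round(100 * excluded_not_eligible / full_text_reviewed, 1))  # 30.0
print(round(100 * excluded_dosing_only / full_text_reviewed, 1))   # 32.7
```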

Key ratings, such as study quality and successful implementation, showed good agreement, with kappa estimates of 0.62 (95% CI 0.50–0.73; weighted) and 0.77 (95% CI 0.48–1.00) respectively. However, the features in the individual studies were more difficult to rate (see Additional File 5). Although the proportion of agreement for the presence of a feature was substantial, varying from 58.1% to 93%, the nature of the kappa statistic resulted in low scores for some features, such as 0.14 (95% CI -0.18 to 0.46) for 'CDSS supports the user's task at hand'. Ultimately, the consensus rating for each variable was used for final data analysis.
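The combination of high raw agreement and a low kappa can be reproduced with a small worked example. The sketch below computes unweighted Cohen's kappa from a 2 × 2 agreement table. The counts are hypothetical, chosen only to show the effect when both raters mark a feature as present in most studies; they are not the review's actual rating data.

```python
def cohen_kappa_2x2(both_present, a_only, b_only, both_absent):
    """Return (observed agreement, unweighted Cohen's kappa) for two raters.

    both_present / both_absent: studies where the raters agreed.
    a_only / b_only: studies where only rater A (or only rater B) rated
    the feature as present.
    """
    n = both_present + a_only + b_only + both_absent
    observed = (both_present + both_absent) / n
    a_present = (both_present + a_only) / n
    b_present = (both_present + b_only) / n
    # Agreement expected by chance, given each rater's marginal rates.
    expected = a_present * b_present + (1 - a_present) * (1 - b_present)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical counts for 41 studies: the raters agree on 35 of them.
observed, kappa = cohen_kappa_2x2(both_present=34, a_only=3, b_only=3, both_absent=1)
print(round(observed, 3))  # 0.854
print(round(kappa, 2))     # 0.17
```

Because almost every study is rated 'present' by both raters, chance agreement is already about 82%, which leaves little room for kappa to rise even when the raters agree on 85% of studies.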

Description of Studies

The 41 RCTs involved a total of 612,556 patients (range 169–407,460 per study) and 2963 providers (range 17–334 per study). The mean methodological score was 5.9 with a range of 2–8, indicating generally good quality studies. Twenty-three studies (56.1%) used a RxCDSS in an outpatient general practice or internal medicine setting, 10 (24.4%) in inpatient hospital wards or emergency rooms, 5 (12.2%) in pharmacies and 3 (7.3%) in specialty clinics (2 for paediatrics and 1 for diabetes). The systems addressed a variety of problems: cardiovascular care (36.6%), general/internal medicine (29.3%), diabetes (9.8%), respiratory disease (9.8%), otitis media (7.3%), depression, osteoporosis and infectious disease (2.4% each). Nineteen (46.3%) of the RxCDSS were integrated with drug order entry, 16 (39.0%) with management/electronic health record (EHR) software and 9 (22.0%) also printed the suggestions. While 20 (48.8%) of these systems appeared to be developed by independent vendors, 21 (51.2%) were developed within an (experienced) home EHR environment such as the Regenstrief Medical System [61] and the Veterans Health Information System [62].

The studies most often employed a traditional 2-arm parallel design (31, or 75.6%), although 6 studies (14.6%) used a 3-arm design and 4 (9.8%) used a 2 × 2 factorial design. The nature of the control arm of studies was also mixed but most often was labelled as usual care (35 or 85.4%). The remaining 6 studies employed a control group intervention that was felt to be much less effective such as distribution of general treatment guidelines or education, reminders regarding an unrelated condition or a RxCDSS that operated with reduced functionality.

Systems were successfully implemented in 37 trials (90.2%) [21–23, 25–30, 32, 33, 35–60], provider behaviour changed in 25 (61.0%) [21–23, 25, 26, 28, 29, 32, 33, 35, 37, 41, 43–45, 47, 48, 50, 51, 53, 55–57, 59, 60], and patient outcomes improved in 5 (12.2%) [28, 44–46, 59]. Only 23 RCTs reported on patient outcomes, and all of the studies reporting success with a patient outcome were published in 2005 or later. Of those studies reporting improvements in patient outcomes, Kucher et al. [44] noted that a computer program encouraging prophylaxis against deep-vein thrombosis and pulmonary embolism reduced the risk of these two events by 41% at 90 days (P = 0.001). Javitt et al. [28] found that a system that scanned administrative claims and clinical data to detect physician errors reduced hospital admissions by 19% in the intervention group relative to the control group (P < 0.001). Feldstein et al. [59] used patient-specific guideline reminders within the primary care physician's EHR to improve osteoporosis management for HMO patients who had suffered a previous fracture. Lester et al. [45] used e-mail reminders to physicians to encourage the increased use of lipid-lowering medication for patients with coronary artery disease. Roumie et al. [46] examined strategies to improve blood pressure control and found that patient education combined with computerized patient-specific alerts to providers was superior to provider education alone.

Association Between CDSS Features and Outcomes

These associations are shown in detail in Additional File 1. Overall, studies mentioned an average of 17 features each (see Additional File 6). No trial concentrated on the features and their relationship with success or failure of the intervention nor did any trial systematically identify all of the features of their intervention. Only one study [21] isolated a specific RxCDSS feature through their randomization procedure. In this study, no benefit was found by adding bibliographic citations to electronic reminders. Another study [42] randomized two groups to guideline-based suggestions for treating congestive heart failure versus these suggestions plus others based on symptoms gleaned from the linked EHR, and found that the intervention group fared worse in terms of more hospitalizations. As this study did not have a control arm without a RxCDSS, it was not considered further in the analysis of features.

Because of the small number of studies, the lack of rigorous attention to feature descriptions by the trials and, especially, the lack of diversity of outcomes across the 3 domains, we were unable to statistically evaluate whether any features were more associated with success than with failure using logistic regression as originally planned. In general, the features most prevalent across the 40 remaining studies included: support of the user's task at hand (95%), provision of decision support at the time and place of decision-making (85%), provision of a recommendation rather than just an assessment (85%), automatic provision of decision support as part of clinician workflow (78%), integration with charting or order entry (75%), and convenient locations for the computers (68%). However, with few exceptions, the prevalence of these features was similar between successful and unsuccessful studies when examining implementation, provider behaviour and patient outcomes. For patient outcomes, there was reasonable separation for the prevalence of a few features. The features which all of the successful studies [28, 44–46, 59] shared and which most of the unsuccessful studies did not included: provision of a recommendation rather than just an assessment, justification of decision support via provision of research evidence, and use of data standards that support integration. However, these results should be considered hypothesis-generating at best, given the low number of studies which successfully altered patient outcomes and the poor general reporting of features.
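The descriptive comparison described above amounts to tabulating, for each feature, its prevalence among successful and among unsuccessful studies. A minimal sketch in Python follows. The study records and feature labels are invented for illustration and do not correspond to the included trials; features rated 'could not assess' are simply left out of a study's feature set, which mirrors the rule of treating them as absent.

```python
def prevalence_by_outcome(studies, feature):
    """Return (prevalence in successful studies, prevalence in unsuccessful studies).

    Each study is a dict with a boolean "success" and a set of "features".
    A group with no studies yields None rather than a misleading 0.
    """
    groups = {True: [], False: []}
    for study in studies:
        groups[study["success"]].append(feature in study["features"])

    def share(flags):
        return sum(flags) / len(flags) if flags else None

    return share(groups[True]), share(groups[False])


# Hypothetical records for one outcome domain (e.g. patient outcomes).
studies = [
    {"success": True, "features": {"recommendation", "evidence"}},
    {"success": True, "features": {"recommendation"}},
    {"success": False, "features": {"recommendation"}},
    {"success": False, "features": set()},
]

print(prevalence_by_outcome(studies, "recommendation"))  # (1.0, 0.5)
print(prevalence_by_outcome(studies, "evidence"))        # (0.5, 0.0)
```

With only five successful studies for patient outcomes, any such difference in prevalence rests on very few observations, which is why the comparison is treated as hypothesis-generating.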

Discussion

We have systematically reviewed the literature surrounding RxCDSS. The distribution of success in these 41 studies (the majority successfully implemented, more than half reporting changes in provider process, but only five successfully improving patient-related outcomes) appears to validate our hierarchical definition of success. The primary finding of the review is the continued poor reporting of and, by implication, the poor attention to system design and implementation features [12]. The lack of rigorous attention to and reporting of intervention features severely hampers progress in this field. All CDSS are, by definition, complex interventions, meaning multifactorial, multidisciplinary and usually multi-staged [20, 63].

If the ideal set of features were known, these could be highlighted and those more likely to be wasteful of time and resources could be dropped. For example, an activity with enormous cost in time and effort, such as training and support of users, would rapidly change if high quality evidence suggested that only selected components and timing were the key to success.

The small number of trials and the lack of consistent reporting of features in the individual studies prevented statistical analysis of associations of features with outcomes. The descriptive examination of feature prevalence and their association with success versus failure returned no clear message.

The strengths of this review include a detailed search protocol tailored to four individual databases, the explicit use of a comprehensive features list, and a multi-level evaluation of system success. However, our study was limited, as mentioned, by the small sample size of included studies and the lack of systematic reporting of system features. Publication bias is always a possibility and is difficult to refute – in this case there may be an under-representation of negative studies. Many studies were excluded because the interventions dealt only with drug dosing suggestions. This group of studies should be systematically reviewed separately; it may well be that the simplicity of dosing decision support is an easier area to build success than complex processes of connecting diagnosis with therapy in light of contraindications, allergies and co-medications.

Despite the substantial interest and investment in developing electronic decision aids [64–68], our review supports the results of others who have noted a lack of demonstrated impact on clinically important patient outcomes [11–13]. Only 23 studies reported on patient outcomes; of these, 5 were successful. In the limited literature that evaluated such endpoints, patient outcomes were frequently secondary outcomes, with a resultant lack of power to detect a difference between the intervention and control groups. This speaks to the lack of mature research programs in this field as well as the challenges of organizing and completing these complex intervention trials.

Conclusion

This systematic review suggests that electronic prescribing decision support systems can be implemented and have the potential to change clinician behaviours, but there is no consistent translation into improved patient outcomes. We have demonstrated that trials do not adequately report and may not give sufficient attention to features of their system design and implementation. We believe that the lack of attention to evidence-based optimization of RxCDSS interventions continues to hamper the development and implementation of these essential systems.

References

Canadian Institute for Health Information: Drug Expenditure in Canada 1985 to 2004. 2005, [http://dsp-psd.pwgsc.gc.ca/Collection/H115-27-2004E.pdf]

Von Laue NC, Schwappach DLB, Koeck CM: The epidemiology of preventable adverse drug events: A review of the literature. Wien Klin Wochenschr. 2003, 115: 407-415.

Bemt van den PM, Postma MJ, van Roon EN, Chow MC, Fijn R, Brouwers JR: Cost-benefit analysis of the detection of prescribing errors by hospital pharmacy staff. Drug Saf. 2002, 25: 135-143. 10.2165/00002018-200225020-00006.

Bates DW, Gawande AA: Improving safety with information technology. N Engl J Med. 2003, 348: 2526-2534. 10.1056/NEJMsa020847.

Bates DW, Evans RS, Murff H, Stetson PD, Pizziferri L, Hripcsak G: Detecting adverse events using information technology. J Am Med Inform Assoc. 2003, 10: 115-128. 10.1197/jamia.M1074.

Delaney BC, Fitzmaurice DA, Riaz A, Hobbs FD: Can computerised decision support systems deliver improved quality in primary care? Interview by Abi Berger. BMJ. 1999, 319: 1281-

Friedman C, Wyatt J: Evaluation methods in medical informatics. 1997, New York: Springer-Verlag

Grimshaw J, Freemantle N, Wallace S, Russell I, Hurwitz B, Watt I: Developing and implementing clinical practice guidelines. Qual Health Care. 1995, 4: 55-64. 10.1136/qshc.4.1.55.

Eccles M, McColl E, Steen N, Rousseau N, Grimshaw J, Parkin D: Effect of computerised evidence based guidelines on management of asthma and angina in adults in primary care: cluster randomised controlled trials. BMJ. 2002, 325: 941-944. 10.1136/bmj.325.7370.941.

Coiera E: Clinical Decision Support Systems. Guide to Health Informatics. 2003, Australia: Arnold Publishers

Garg AX, Adhikari NKJ, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J: Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005, 293: 1223-1238. 10.1001/jama.293.10.1223.

Kawamoto K, Houlihan CA, Balas EA, Lobach DF: Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005, 330: 765. 10.1136/bmj.38398.500764.8F.

Kaushal R, Shojania KG, Bates DW: Effects of computerized physician order entry and clinical decision support systems on medication safety: a systematic review. Arch Intern Med. 2003, 163: 1409-1416. 10.1001/archinte.163.12.1409.

Kawamoto K, Lobach DF: Clinical decision support provided within physician order entry systems: A systematic review of features effective for changing clinician behavior. AMIA Annu Symp Proc. 2003, 361-365.

Niès J, Colombet I, Degoulet P, Durieux P: Determinants of success for computerized clinical decision support systems integrated into CPOE systems: A systematic review. AMIA Annu Symp Proc. 2006, 594-598.

COMPETE: Computerization of medical practice for the enhancement of therapeutic effectiveness. [http://www.compete-study.com/]

Wears RL, Berg M: Computer technology and clinical work: still waiting for Godot. JAMA. 2005, 293: 1261-1263. 10.1001/jama.293.10.1261.

Koppel R, Metlay JP, Cohen A, Abaluck B, Localio AR, Kimmel SE: Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005, 293: 1197-1203. 10.1001/jama.293.10.1197.

Holbrook AM, Xu S, Banting J: What factors determine the success of clinical decision support systems?. AMIA Annu Symp Proc. 2003, 862-

Holbrook AM, Thabane L, Shcherbatykh IY, O'Reilly D: E-Health interventions as complex interventions: improving the quality of methods of assessment. AMIA Annu Symp Proc. 2006, 952-

McDonald CJ, Wilson GA, McCabe GP: Physician response to computer reminders. JAMA. 1980, 244: 1579-1581. 10.1001/jama.244.14.1579.

Hershey CO, Porter DK, Breslau D, Cohen DI: Influence of simple computerized feedback on prescription charges in an ambulatory clinic. A randomized clinical trial. Med Care. 1986, 24: 472-481. 10.1097/00005650-198606000-00002.

Tierney WM, Miller ME, Overhage JM, McDonald CJ: Physician inpatient order writing on microcomputer workstations. Effects on resource utilization. JAMA. 1993, 269: 379-383. 10.1001/jama.269.3.379.

Rotman BL, Sullivan AN, McDonald TW, Brown BW, DeSmedt P, Goodnature D: A randomized controlled trial of a computer-based physician workstation in an outpatient setting: implementation barriers to outcome evaluation. J Am Med Inform Assoc. 1996, 3: 340-348.

Overhage JM, Tierney WM, Zhou XH, McDonald CJ: A randomized trial of "corollary orders" to prevent errors of omission. J Am Med Inform Assoc. 1997, 4: 364-375.

Dexter PR, Perkins S, Overhage JM, Maharry K, Kohler RB, McDonald CJ: A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med. 2001, 345: 965-970. 10.1056/NEJMsa010181.

Tamblyn R, Huang A, Perreault R, Jacques A, Roy D, Hanley J: The medical office of the 21st century (MOXXI): effectiveness of computerized decision-making support in reducing inappropriate prescribing in primary care. CMAJ. 2003, 169: 549-556.

Javitt JC, Steinberg G, Locke T, Couch JB, Jacques J, Juster I: Using a claims data-based sentinel system to improve compliance with clinical guidelines: results of a randomized prospective study. Am J Manag Care. 2005, 11: 93-102.

Berner ES, Houston TK, Ray MN, Allison JJ, Heudebert GR, Chatham WW: Improving ambulatory prescribing safety with a handheld decision support system: a randomized controlled trial. J Am Med Inform Assoc. 2006, 13: 171-179. 10.1197/jamia.M1961.

Judge J, Field TS, DeFlorio M, Laprino J, Auger J, Rochon P: Prescribers' Responses to Alerts During Medication Ordering in the Long Term Care Setting. J Am Med Inform Assoc. 2006, 13: 385-390. 10.1197/jamia.M1945.

Martens J, Weijden van der T, Severens J, de Clercq P, de Bruijn D, Kester A: The effect of computer reminders on GPs' prescribing behaviour: A cluster-randomised trial. Int J Med Inform. 2007, 76S: S403-S416. 10.1016/j.ijmedinf.2007.04.005.

Raebel MA, Carroll NM, Kelleher JA, Chester EA, Berga S, Magid DJ: Randomized trial to improve prescribing safety during pregnancy. J Am Med Inform Assoc. 2007, 14: 440-450. 10.1197/jamia.M2412.

McAlister NH, Covvey HD, Tong C, Lee A, Wigle ED: Randomised controlled trial of computer assisted management of hypertension in primary care. Br Med J (Clin Res Ed). 1986, 293: 670-674. 10.1136/bmj.293.6548.670.

Hobbs FD, Delaney BC, Carson A, Kenkre JE: A prospective controlled trial of computerized decision support for lipid management in primary care. Fam Pract. 1996, 13: 133-137. 10.1093/fampra/13.2.133.

Rossi RA, Every NR: A computerized intervention to decrease the use of calcium channel blockers in hypertension. J Gen Intern Med. 1997, 12: 672-678. 10.1046/j.1525-1497.1997.07140.x.

Murray MD, Harris LE, Overhage JM, Zhou XH, Eckert GJ, Smith FE: Failure of computerized treatment suggestions to improve health outcomes of outpatients with uncomplicated hypertension: results of a randomized controlled trial. Pharmacotherapy. 2004, 24: 324-337. 10.1592/phco.24.4.324.33173.

Selker HP, Beshansky JR, Griffith JL: Use of the electrocardiograph-based thrombolytic predictive instrument to assist thrombolytic and reperfusion therapy for acute myocardial infarction. A multicenter, randomized, controlled, clinical effectiveness trial. Ann Intern Med. 2002, 137: 87-95.

Ansari M, Shlipak MG, Heidenreich PA, Van Ostaeyen D, Pohl EC, Browner WS: Improving guideline adherence: a randomized trial evaluating strategies to increase beta-blocker use in heart failure. Circulation. 2003, 107: 2799-2804. 10.1161/01.CIR.0000070952.08969.5B.

Tierney WM, Overhage JM, Murray MD, Harris LE, Zhou XH, Eckert GJ: Effects of computerized guidelines for managing heart disease in primary care. J Gen Intern Med. 2003, 18: 967-976. 10.1111/j.1525-1497.2003.30635.x.

Weir CJ, Lees KR, Sim I, Erwin L, McAlpine C, Rodger J: Cluster-randomized, controlled trial of computer-based decision support for selecting long-term anti-thrombotic therapy after acute ischaemic stroke. QJM. 2003, 96: 143-153. 10.1093/qjmed/hcg008.

Murray MD, Loos B, Tu W, Eckert GJ, Zhou XH, Tierney WM: Work patterns of ambulatory care pharmacists with access to electronic guideline-based treatment suggestions. Am J Health Syst Pharm. 1999, 56: 225-232.

Subramanian U, Fihn SD, Weinberger M, Plue L, Smith FE, Udris EM: A controlled trial of including symptom data in computer-based care suggestions for managing patients with chronic heart failure. Am J Med. 2004, 116: 375-384. 10.1016/j.amjmed.2003.11.021.

Cobos A, Vilaseca J, Asenjo C, Pedro-Botet J, Sanchez E, Val A: Cost effectiveness of a clinical decision support system based on the recommendations of the European Society of Cardiology and other societies for the management of hypercholesterolemia: Report of a cluster-randomized trial. Dis Manag Health Outcomes. 2005, 13:

Kucher N, Koo S, Quiroz R, Cooper JM, Paterno MD, Soukonnikov B: Electronic alerts to prevent venous thromboembolism among hospitalized patients. N Engl J Med. 2005, 352: 969-977. 10.1056/NEJMoa041533.

Lester WT, Grant RW, Barnett GO, Chueh HC: Randomized controlled trial of an informatics-based intervention to increase statin prescription for secondary prevention of coronary disease. J Gen Intern Med. 2006, 21: 22-29. 10.1111/j.1525-1497.2005.00268.x.

Roumie CL, Elasy TA, Greevy R, Griffin MR, Liu X, Stone WJ: Improving blood pressure control through provider education, provider alerts, and patient education: a cluster randomized trial. Ann Intern Med. 2006, 145: 165-175.

Hicks LS, Sequist TD, Ayanian JZ, Shaykevich S, Fairchild DG, Orav EJ: Impact of computerized decision support on blood pressure management and control: a randomized controlled trial. J Gen Intern Med. 2007, 23: 429-441. 10.1007/s11606-007-0403-1.

McDonald CJ: Use of a computer to detect and respond to clinical events: its effect on clinician behavior. Ann Intern Med. 1976, 84: 162-167.

Hetlevik I, Holmen J, Kruger O, Kristensen P, Iversen H, Furuseth K: Implementing clinical guidelines in the treatment of diabetes mellitus in general practice: Evaluation of effort, process, and patient outcome related to implementation of a computer-based decision support system. Int J Technol Assess Health Care. 2000, 16: 210-227. 10.1017/S0266462300161185.

Filippi A, Sabatini A, Badioli L, Samani F, Mazzaglia G, Catapano A: Effects of an automated electronic reminder in changing the antiplatelet drug-prescribing behavior among Italian general practitioners in diabetic patients: an intervention trial. Diabetes Care. 2003, 26: 1497-1500. 10.2337/diacare.26.5.1497.

Sequist TD, Gandhi TK, Karson AS, Fiskio JM, Bugbee D, Sperling M: A randomized trial of electronic clinical reminders to improve quality of care for diabetes and coronary artery disease. J Am Med Inform Assoc. 2005, 12: 431-437. 10.1197/jamia.M1788.

McCowan C, Neville RG, Ricketts IW, Warner FC, Hoskins G, Thomas GE: Lessons from a randomized controlled trial designed to evaluate computer decision support software to improve the management of asthma. Med Inform Internet Med. 2001, 26: 191-201. 10.1080/14639230110067890.

Samore MH, Bateman K, Alder SC, Hannah E, Donnelly S, Stoddard GJ: Clinical decision support and appropriateness of antimicrobial prescribing: a randomized trial. JAMA. 2005, 294: 2305-2314. 10.1001/jama.294.18.2305.

Tierney WM, Overhage JM, Murray MD, Harris LE, Zhou XH, Eckert GJ: Can computer-generated evidence-based care suggestions enhance evidence-based management of asthma and chronic obstructive pulmonary disease? A randomized, controlled trial. Health Serv Res. 2005, 40: 477-497. 10.1111/j.1475-6773.2005.0t369.x.

Kuilboer MM, van Wijk MA, Mosseveld M, van der Does E, de Jongste JC, Overbeek SE: Computed critiquing integrated into daily clinical practice affects physicians' behavior – a randomized clinical trial with AsthmaCritic. Methods Inf Med. 2006, 45: 447-454.

Davis RL, Wright J, Chalmers F, Levenson L, Brown JC, Lozano P: A cluster randomized clinical trial to improve prescribing patterns in ambulatory pediatrics. PLoS Clinical Trials. 2007, 2: e25. 10.1371/journal.pctr.0020025.

Christakis DA, Zimmerman FJ, Wright JA, Garrison MM, Rivara FP, Davis RL: A randomized controlled trial of point-of-care evidence to improve the antibiotic prescribing practices for otitis media in children. Pediatrics. 2001, 107: E15. 10.1542/peds.107.2.e15.

Rollman BL, Hanusa BH, Lowe HJ, Gilbert T, Kapoor WN, Schulberg HC: A randomized trial using computerized decision support to improve treatment of major depression in primary care. J Gen Intern Med. 2002, 17: 493-503. 10.1046/j.1525-1497.2002.10421.x.

Feldstein A, Elmer PJ, Smith DH, Herson M, Orwoll E, Chen C: Electronic medical record reminder improves osteoporosis management after a fracture: a randomized, controlled trial. J Am Geriatr Soc. 2006, 54: 450-457. 10.1111/j.1532-5415.2005.00618.x.

Paul M, Andreassen S, Tacconelli E, Nielsen AD, Almanasreh N, Frank U: Improving empirical antibiotic treatment using TREAT, a computerized decision support system: cluster randomized trial. J Antimicrob Chemother. 2006, 58: 1238-1245. 10.1093/jac/dkl372.

McDonald C, Overhage J, Tierney W, Dexter PR, Martin D, Suico J: The Regenstrief Medical Record System: a quarter century experience. Int J Med Inform. 1999, 54: 225-253. 10.1016/S1386-5056(99)00009-X.

Brown SH, Lincoln MJ, Groen PJ, Kolodner RM: VistA – U.S. Department of Veterans Affairs national-scale HIS. Int J Med Inform. 2003, 69: 135-156. 10.1016/S1386-5056(02)00131-4.

Campbell M, Fitzpatrick R, Haines A, Kinmonth AL, Sandercock P, Spiegelhalter D: Framework for design and evaluation of complex interventions to improve health. BMJ. 2000, 321: 694-696. 10.1136/bmj.321.7262.694.

Bates DW: Computerized physician order entry and medication errors: finding a balance. J Biomed Inform. 2005, 38: 259-261. 10.1016/j.jbi.2005.05.003.

Teich JM, Osheroff JA, Pifer EA, Sittig DF, Jenders RA, The CDS Expert Review Panel: Clinical decision support in electronic prescribing: recommendations and an action plan: report of the joint clinical decision support workgroup. J Am Med Inform Assoc. 2005, 12: 365-376.

Canada Health Infoway: 2003–2004 annual report and corporate plan summary 2004–2005. [http://www.infoway-inforoute.ca]

Health Canada: A 10-year plan to strengthen health care. [http://www.hc-sc.gc.ca/hcs-sss/delivery-prestation/fptcollab/2004-fmm-rpm/index-eng.php]

Baker M, Robson B, Shears J: Clinical decision support in the NHS – the clinical element. The Journal of Clinical Governance. 2002, 10: 77-82.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6947/9/11/prepub

Acknowledgements

This study was funded by a grant from the Ontario Ministry of Health's Primary Healthcare Transition Fund. Dr. Holbrook is a recipient of a Canadian Institutes of Health Career Investigator Award.

The authors would like to thank Gaf Zardasht and Eleanor Pullyenayegum for their assistance with the literature review update and reliability statistics, respectively.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

BM and JJRC were involved in all aspects of study design, literature search, data extraction, descriptive statistical analysis, and manuscript preparation. AMH was responsible for the study's conception and funding, participated in its design and execution, and helped to draft the manuscript and its revisions. MS was involved in the review and revision of the manuscript and led the latest update of results. LT was involved in the design of the statistical analysis and aided in the interpretation of the results. GF was responsible for conducting the statistical analysis and aided in the interpretation of the results.

Electronic supplementary material

12911_2008_253_MOESM1_ESM.xls

Additional file 1: Table 1 – Prevalence of 28 features in 40 successful and unsuccessful randomized trials. This table provides a detailed look at the prevalence of 28 RxCDSS features in 40 randomized controlled trials (XLS 127 KB)

12911_2008_253_MOESM2_ESM.xls

Additional file 2: Appendix A – Description of features thought to impact clinical decision support system success. This table describes features which might impact success in clinical decision support systems, and provides references and examples for each. (XLS 44 KB)

12911_2008_253_MOESM3_ESM.doc

Additional file 3: Appendix B – In-depth online search strategy. This document provides a detailed protocol for the online search strategy utilized in this systematic review. (DOC 30 KB)

12911_2008_253_MOESM4_ESM.xls

Additional file 4: Appendix C – Summary of studies included in the review. This table summarizes the intention and outcomes of the randomized controlled trials included in this systematic review. (XLS 76 KB)

12911_2008_253_MOESM5_ESM.xls

Additional file 5: Appendix D – Reliability scores when evaluating feature prevalence. This table reports the reliability scores for the reviewers' evaluation of feature prevalence in the studies included in this systematic review. (XLS 39 KB)

12911_2008_253_MOESM6_ESM.xls

Additional file 6: Appendix E – Detailed assessment of features from studies included in the review. This table summarizes the reviewers' assessment of the presence or absence of features in the studies included in this review. (XLS 42 KB)

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Mollon, B., Chong, J.J., Holbrook, A.M. et al. Features predicting the success of computerized decision support for prescribing: a systematic review of randomized controlled trials. BMC Med Inform Decis Mak 9, 11 (2009). https://doi.org/10.1186/1472-6947-9-11

DOI: https://doi.org/10.1186/1472-6947-9-11