Abstract

Background

Asthma is one of the most common childhood illnesses. Guideline-driven clinical care positively affects patient outcomes. Several asthma guidelines exist, along with several reminder methods that help integrate them into clinical workflow. Our goal was to determine the most prevalent method of guideline implementation; establish which methods significantly improved clinical care; and identify the factors most commonly associated with a successful and sustainable implementation.

Methods

Data Sources: PUBMED (MEDLINE), OVID CINAHL, ISI Web of Science, and EMBASE.

Study Selection: Studies were included if they evaluated an asthma protocol or prompt, evaluated an intervention, were a clinical trial of a protocol implementation, or were a qualitative study conducted as part of a protocol intervention. Studies were excluded if they had non-human subjects, studied the efficacy and effectiveness of drugs, did not include an evaluation component, studied an educational intervention only, or were a case report, survey, editorial, or letter to the editor.

Results

From 14,478 abstracts, we included 101 full-text articles in the analysis. The most frequent study design was pre-post, followed by prospective, population-based case series or consecutive case series, and randomized trials. Paper-based reminders were the most frequent, followed by fully computerized, then computer-generated, and other modalities. No study reported a decrease in health care practitioner performance or declining patient outcomes. The most common primary outcome measure was compliance with provided or prescribing guidelines, followed by key clinical indicators such as patient outcomes or quality of life, and length of stay.

Conclusions

Paper-based implementations are by far the most popular approach to implement a guideline or protocol. The number of publications on asthma protocol reminder systems is increasing. The number of computerized and computer-generated studies is also increasing. Asthma guidelines generally improved patient care and practitioner performance regardless of the implementation method.

Background

Asthma disease burden

Asthma is the most common chronic childhood disease in the U.S., affecting 9 million individuals under 18 years of age (12.5%) [1, 2]. Approximately 4 million children experience an asthma exacerbation annually resulting in more than 1.8 million emergency department (ED) visits and an estimated 14 million missed school days each year [2, 3]. In the U.S., asthma is the third leading cause for hospitalizations among patients <18 years of age [4]. Asthma exacerbations leading to ED encounters and hospitalizations account for >60% of asthma-related costs [5].

Characteristics of clinical guidelines

Guideline-driven clinical care, in which providers follow evidence-based treatment recommendations for given medical conditions, positively affects patient outcomes for routine clinical care as well as asthma treatment in particular [6–9]. Care providers, payors, federal agencies, healthcare institutions, and patient organizations support the development, implementation, and application of clinical guidelines in order to standardize treatments and quality of care. Consequently, the number of nationally endorsed and locally developed guidelines has grown, with 2331 active guidelines found on the U.S. Department of Health and Human Services website [10].

Several guidelines exist to support clinicians in providing adequate asthma treatment, including Global Initiative for Asthma Guidelines [11], the British Thoracic Society Guidelines [12], Australian national guidelines [13], and the guideline from the U.S. National Heart Lung and Blood Institute (NHLBI) [14].

There are several reminder methods for integrating guidelines into clinical workflow. Depending on the particular clinic, reminders can be paper-based, computer-generated, or computerized. Reminder methods are defined as follows:

a) Paper-based implementation approaches included the use of paper within the patient’s chart in the form of stickers, tags, or sheets of paper, and patients were identified manually by office staff.

b) Computer-generated implementations included the application of computerized algorithms to identify eligible patients, but the reminder or protocol was printed out and placed in the patient chart or given to the clinician during the visit.

c) Computerized reminders included prompts that were entirely electronic, i.e., computerized algorithms identified eligible patients, and prompts were provided upon access to the electronic clinical information system [15].

However, time and guideline initiation can limit the integration of guidelines in the daily routine of practicing clinicians [6], and many implementation efforts have shown little effect [16]. We believe that asthma guidelines would be used more frequently if clinicians were aware of the best published implementation methods. The objective of our systematic literature review was to determine the most prevalent method of guideline implementation (paper, computer-generated, or computerized), as reported in the literature; establish which methods significantly improved clinical care; and identify the factors most commonly associated with a successful and sustainable asthma guideline implementation.

Methods

Literature search

We conducted a systematic literature review to identify articles that studied the impact of implementing paper-based and computerized asthma care protocols and guidelines in any clinical setting, including treatment protocols, clinical pathways, and guidelines. We did not create a central review protocol but followed the PRISMA guidelines; however, we were unable to perform a meta-analysis [17]. Studies were eligible for inclusion if they examined asthma protocol implementation for clinicians or patients, evaluated an intervention and not just the design, were a clinical trial of a protocol implementation, or were a qualitative study conducted as part of a protocol intervention. Studies were excluded if they enrolled non-human subjects, studied the efficacy and effectiveness of drugs, lacked an evaluation component, tested no intervention, studied a clinician or patient educational intervention only, or were a case report, survey, editorial, letter to the editor, or non-English language report.

We searched the electronic literature databases PUBMED® (MEDLINE®) [18], OVID CINAHL® [19], ISI Web of Science™ [20], and EMBASE® [19] from their respective inception dates to December 2010. In MEDLINE, all search terms were defined as keywords and Medical Subject Headings (MeSH®) unless otherwise noted; in the remaining databases, the search terms were defined only as keywords. The search strategy was based on the concept “asthma” combined with concepts representing any kind of asthma protocol implementation. Search terms included ‘asthma’ and any combination of the terms ‘checklist’, ‘reminder systems’, ‘reminder’, ‘guideline’, ‘pathway’, ‘flow diagram’, ‘guideline adherence’, ‘protocol’, ‘care map’, ‘computer’, ‘medical informatics’, ‘informatics’ and relevant plurals. The exact PubMed query is shown below:

asthma AND (medical informatics OR computers OR computer OR informatics OR checklist OR checklists OR reminder systems OR reminder OR guideline OR pathway OR pathways OR “flow diagram” OR guidelines OR guideline adherence OR protocol OR protocols OR “care map” OR “care maps”)
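As an illustration only, the query above can be assembled programmatically from the concept and the term list, which makes it easier to reuse the same terms across databases. The following is a minimal sketch; the helper name `build_query` and the constants are hypothetical and are not part of the review's actual tooling. Straight quotes are used in place of the typographic quotes shown above.

```python
# Hypothetical helper: assemble the boolean search string from a term list.
# Only the phrases that are quoted in the published query are quoted here.
QUOTED_PHRASES = {"flow diagram", "care map", "care maps"}

# Terms in the order in which they appear in the published PubMed query.
TERMS = [
    "medical informatics", "computers", "computer", "informatics",
    "checklist", "checklists", "reminder systems", "reminder",
    "guideline", "pathway", "pathways", "flow diagram", "guidelines",
    "guideline adherence", "protocol", "protocols", "care map", "care maps",
]


def build_query(concept, terms, quoted=QUOTED_PHRASES):
    """Combine one core concept with a set of OR-ed implementation terms."""
    parts = [f'"{term}"' if term in quoted else term for term in terms]
    return f"{concept} AND ({' OR '.join(parts)})"


query = build_query("asthma", TERMS)
print(query)
```

Keeping the terms in a list makes it straightforward to add plurals or to drop the quoting for databases that treat phrases differently.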

Review of identified studies

The title and abstract of all articles identified using the keyword searches were retrieved and reviewed by two of three independent reviewers (JWD, KWC, DA). Disagreements between two reviewers were resolved by consensus among all three participating reviewers. The bibliographies of identified review articles were examined and additional relevant studies were included. All included studies were examined for redundancy (e.g., findings of one study reported in two different reports) and duplicate results were removed. The full text of included articles was obtained and two reviewers (JWD, DA) screened the articles independently for inclusion. Disagreements were resolved by consensus. Data were abstracted by one reviewer (JWD) into a central database. To obtain a better understanding of implementation approaches, studies were further categorized as “paper-based,” “computer-generated,” or “computerized” [15].

Analysis

We collected basic demographic data from each study, including reminder type [15], setting, study design, randomization, patient and clinician populations, the number of centers (multicenter or single center), and the factors described below. We looked at all included studies to determine similar characteristics associated with implementing guidelines, study design, and study scoring. We assessed study quality following the methodology of Wang et al., which grades study design on a 5-point scale with Level 1 studies being the most scientifically rigorous and Level 5 studies having a more lenient study design [21]. The study levels were adapted as follows:

1. Level 1 studies were primary prospective studies, case–control groups of consecutive or random patients.

2. Level 2 studies were similar to Level 1 but with a smaller sample size.

3. Level 3 studies were retrospective studies, non-random designs, or non-consecutive comparison groups.

4. Level 4 studies had a reference standard or convenience sample of patients who have the target illness.

5. Level 5 studies were comparisons of clinical findings with a reference or convenience of unknown or uncertain validity.

The effects of the implementation on performance were graded based on Hunt et al. [22]. The intervention effects on health care practitioner performance and patient outcomes were examined. Studies were classified as having no change, a decreased change, or an increased change. A positive improvement in reported patient outcomes was considered an increased change; a negative effect, such as a decrease in the number of action plans given after implementation, was considered a decreased change. A positive improvement in a reported measure of health care practitioner performance, such as guideline compliance or increased charting, was considered an increased change in performance; a reported decrease in the measurement was considered a decreased change.

We assessed success factors following the methodology of Kawamoto et al. [23]. The success factors for each study were determined from the article’s text. If the success factors of the implementation could not be determined or were not present in the article, we contacted the authors. The success factors were designed for, and are intended to be applied to, clinical decision support systems. We applied the factors to all three study types. The success factors are listed in Table 1. When the prompt information was unavailable, the study authors were contacted in an attempt to obtain it.

Agreement among reviewers to consider articles based on title and abstract was high (0.972 to 0.996), as determined by Yule’s Q [24].
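For readers unfamiliar with the statistic, Yule’s Q for two reviewers making include/exclude decisions is computed from the 2×2 agreement table as Q = (ad − bc) / (ad + bc), where a and d count the concordant decisions and b and c the discordant ones. The sketch below illustrates the calculation; the function name and the counts are hypothetical and are not the review's data.

```python
def yules_q(a, b, c, d):
    """Yule's Q for a 2x2 table of two reviewers' screening decisions.

    a: both reviewers include      b: only reviewer 1 includes
    c: only reviewer 2 includes    d: both reviewers exclude
    """
    concordant = a * d
    discordant = b * c
    if concordant + discordant == 0:
        raise ValueError("Yule's Q is undefined when ad + bc equals zero")
    return (concordant - discordant) / (concordant + discordant)


# Illustrative counts only (assumed, not taken from the review).
q = yules_q(a=40, b=5, c=4, d=4951)
print(f"Yule's Q = {q:.3f}")
```

Q ranges from −1 (complete disagreement) to +1 (complete agreement), with 0 indicating no association between the two reviewers' decisions.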

Results

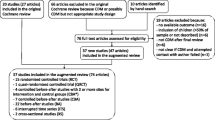

The literature searches resulted in 27,995 abstracts during the search period (Figure 1). After excluding 13,477 duplicates, 14,384 articles were further excluded based on a review of the title and abstract, leaving 134 articles for further consideration. We retrieved the full text of the 134 articles and added 13 articles for full-text review that were identified from the bibliographies of the 104 full text studies. From the 147 articles we excluded 43 studies not meeting inclusion criteria based on the full-text information.

The 43 articles we removed from the study set included resource utilization studies (2 articles), implementation/design/development/system descriptions without evaluations (10 articles), drug trial publications (7), surveys (7), no intervention, descriptive or protocol descriptions (6), no guideline implementation (5), reviews (1), overviews of asthma (1), studies of education-only interventions (1), simulation studies (1), abstracts (1), and one study that only provided data on the efficacy of guidelines (not an intervention). We included 104 full-text articles for evaluation (Figure 1). We extracted data from 101 articles. Two sets of articles each described the same intervention, so only one article from each set was included in the analysis; these sets comprised 3 articles [25–27] and 2 articles [28, 29].
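The selection flow described above can be checked with simple arithmetic. The sketch below uses only the counts reported in the two preceding paragraphs; the variable names and the shortened exclusion labels are ours.

```python
# Counts as reported in the text; names and labels are illustrative.
retrieved = 27_995
duplicates = 13_477
excluded_on_title_abstract = 14_384
added_from_bibliographies = 13

# Itemized full-text exclusions.
full_text_exclusions = {
    "resource utilization": 2,
    "description without evaluation": 10,
    "drug trial": 7,
    "survey": 7,
    "no intervention / descriptive": 6,
    "no guideline implementation": 5,
    "review": 1,
    "overview of asthma": 1,
    "education-only intervention": 1,
    "simulation": 1,
    "abstract": 1,
    "guideline efficacy only": 1,
}

unique_abstracts = retrieved - duplicates
full_text_candidates = unique_abstracts - excluded_on_title_abstract
full_text_reviewed = full_text_candidates + added_from_bibliographies
excluded_full_text = sum(full_text_exclusions.values())
included = full_text_reviewed - excluded_full_text

# Two sets of reports (3 articles and 2 articles) each described a single
# intervention, so each set counts once in the analysis.
redundant_reports = (3 - 1) + (2 - 1)
analyzed = included - redundant_reports

print(full_text_candidates, full_text_reviewed, excluded_full_text, included, analyzed)
```

The itemized exclusions sum to 43, which is consistent with 104 included articles and 101 analyzed articles.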

We identified the guideline implementation method, study setting, study design, randomization, patient population, clinician population, and study center count for all 101 articles (Table 2). Study publication years ranged from 1986 to 2010, with a peak of 10 studies in 2010 (Figure 2). Of the studies that reported a guideline, 75 used site-specific guidelines, 66 used national guidelines, and 1 used another protocol. Forty-eight studies adapted a national guideline to be site-specific. Study periods ranged from 2 months to 114 months. Patient follow-up ranged from half a day to 730 days. In 59 studies the physician was the clinician studied, nurses were studied in 25 studies, respiratory therapists in 8, and other clinicians in 3 studies. Of the studies that mentioned the clinician population, the range of participants was 8 to 377. Of studies that mentioned the total patient population size, the range of participants was 18 to 27,725.

Studies were performed in the United States (48 studies), the United Kingdom (10 studies), Canada (9 studies), Australia (8 studies), the Netherlands (6 studies), Singapore (5 studies), New Zealand (2 studies), Brazil (2 studies), Saudi Arabia (2 studies), Germany (2 studies), and 1 study each in France, Oman, Switzerland, Italy, Iran, Japan, Taiwan, Korea, Thailand, and the United Arab Emirates.

The most frequent study designs included a pre-post design (61 studies), followed by 56 studies that applied a prospective design, 27 population-based case series, 23 consecutive case series, 13 randomized trials, 15 non-blinded trials, 16 nonconsecutive case series, 5 double-blinded trials, and 6 best-case series. Studies could be classified as having more than one design element. Six studies were descriptive and one looked at quality improvement. Most studies were performed at academic institutions (57 studies), with 42 studies performed at non-academic institutions; 3 did not describe the setting. Studies looked at outpatients most frequently (50 studies), followed by the emergency department (39 studies) and inpatients (20 studies), with 7 studies looking at patients in other settings (e.g., the home). Some studies involved multiple settings. Most studies were performed in a single center (64 studies) versus a multi-center environment (38 studies).

Reminders consisted of paper-based (82 studies), computer generated (8 studies), fully computerized (12 studies), and other modalities (10 studies). The interventions were protocol-based (61 studies), treatment-based (53 studies), focused on the continuity of care (17 studies), scoring based (19 studies), and included an educational component (48 studies). Fifty studies reported or described using an asthma scoring metric that was applied to guide treatment decisions. Seventy-three studies listed some or all of the medications suggested for use in asthma management. Forty-two studies included clinician education and 30 studies included patient education (e.g., inhaler technique, asthma education and teaching). If the intervention method was described, 67 described measuring protocol adherence including chart review, severity scoring, checking orders, and the use of the physical protocol. Ten described work-flow interventions, and 2 looked at the timing of care during the patient’s visit.

The effects of the intervention are shown in Table 2. No study reported a decrease in health care practitioner performance or declining patient outcomes. Sixty-six (63%) studies improved health care practitioner performance and 32 (31%) studies had no change in performance. Thirty-four (33%) studies improved patient outcomes and 37 (36%) reported no change in outcomes.

Among the 12 computerized studies, 5 reported no change in health care practitioner performance and 7 reported improved performance; 3 reported no change in patient outcomes and 9 reported improved patient outcomes. Among the 8 computer-generated studies, 4 reported no change in health care practitioner performance and 4 reported improved performance; 5 reported no change in patient outcomes and 3 reported improved patient outcomes. Among the paper-based studies, 24 reported no change in health care practitioner performance and 56 reported improved performance; 31 reported no change in patient outcomes and 51 reported improved patient outcomes.

Study quality is shown in Figure 3. The largest share of studies (41%) was assessed as level 3 quality, i.e., retrospective studies, non-random designs, or non-consecutive comparison groups.

The success factors for each study are in Table 3. The number of success factors implemented ranged from 0 to 15, out of a maximum of 22 possible. Computerized studies implemented an average of 7.1 success factors (range: 2 to 15); computer-generated studies implemented an average of 5.7 success factors (range: 3 to 11); and paper-based studies implemented an average of 3.7 success factors (range: 0 to 12). The paper-based implementations most often had a computer help to generate the decision support; the computer-generated and computerized implementations most often had clear and intuitive interfaces or prompts.

The most common primary outcome measure was compliance with provided guidelines or prescribing guidelines (32 studies); key clinical indicators such as patient outcomes or quality of life were used in 20 studies, and hospital or emergency length of stay in 19 studies. Admission was used as a primary outcome in 8 studies, and medication use was examined in 8 studies, including the use of a spacer, timing of medication administration, and use of oxygen. Relapse to either the inpatient unit or the emergency department was used in 4 studies, and educational outcomes were used in 2 studies. The administration of an action plan, filling prescriptions, quality improvement, documentation of severity, ED visits, and cost were examined as primary outcomes in only one study each. One qualitative study was included.

Of the 16 studies that reported the percentage of patients going home on take-home medications (either beta-agonists or inhaled corticosteroids), the mean initial reported value was 57% (range: 0.53%, 92%) with a mean final reported value of 69% (range: 14%, 100%). Of the 18 studies that reported the percentage of patients with an asthma action plan or asthma care plan, the mean initial reported value was 20% (range: 0%, 62%) with a mean final reported value of 46% (range: 7%, 100%). The 49 studies that looked at admission rates between groups reported an initial mean value of 11% (range: 0%, 55%) with a mean final reported value of 9% (range: 0%, 37%), but rates were highly variable depending on the selected population. The 38 studies that looked at ED visit rates between groups reported an initial mean value of 9% (range: 0%, 47%) with a mean final reported value of 8% (range: 0%, 46%), which also varied by the population chosen.

Discussion

Paper-based implementations are by far the most prevalent method to implement a guideline or protocol. All of the methods implemented either improved clinical care or had no change. Of those that improved patient care, 94 were paper-based, 9 were computerized and only 3 were computer-generated. The paper-based implementation was the most likely to report improving patient care. The studies that reported improving patient care reported an average of 4.5 success factors, with “Clear and intuitive user interface with prominent display of advice” (50%), “Active involvement of local opinion leaders” (41%), and “Local user involvement in development process” (41%) being the most common success factors reported; 52 (83%) of these studies also improved practitioner performance. They were most often prospective (59%) and pre-post (63%) study designs. These characteristics are reported as our “best” implementation methods since they improved patient care. Due to the disparate nature of the results across manuscripts, we did not perform a meta-analysis but presented the descriptive data in aggregate form.

Clinical decision support research is difficult to perform. Alerting methodologies and their effectiveness have been studied in the literature but are frequently limited in scope in terms of time and conditions [126–129]. The results suggest that reminder systems are effective at changing behavior and improving care, and they are more successful when designed for a specific environment [127]. This individualized design, together with the study design demands it creates, makes clinical decision support more difficult to evaluate homogeneously.

The double-blinded randomized controlled trial is considered the gold standard for study design, but it is difficult to implement any kind of reminder system that can be effectively blinded and randomized. While blinding is frequently difficult, decision support implementations can be blinded if the interventions occur at different locations or for different providers. Randomized controlled trials are not well-presented in the informatics literature [130], and many potential issues exist in implementation research, such as the unit of randomization (e.g. by patient, physician, day, clinic) and the choice of outcome measures (e.g. informatics-centric or patient outcomes centric). Failure to consider clinical workflow when implementing reminder systems has impeded guideline adoption, and workflow issues can be barriers to adoption [131, 132].

Pediatric and adult populations were studied equally. Because asthma is a chronic condition, outpatient studies were most frequent, followed by ED-based studies and finally inpatient studies. Few studies reported randomization, and a pre-post design was most common. Seventy percent of the studies had a level 3 or higher. The studies were designed optimally for the disparate locations, settings, and factors that needed to be considered. We excluded studies looking at just an educational component for either clinicians or patients because these covered general asthma and guideline knowledge, not implementation or adherence.

No interventions reported decreasing the quality of clinician care or patient care. “No change” in care or an improvement in care or performance was reported in all published studies. This may be due to negative studies not being published. Because of the disparate outcome measures used, no single characteristic could be used to decide which implementation methodology was best or most effective. Choosing the best implementation method from paper-based, computerized, and computer-generated approaches depends on the situation, and medical record and workflow considerations for specific settings should be taken into account.

The computerized studies had no change in clinician performance in 42% of the interventions; this may be due to the prompts not being integrated into the clinician’s workflow. Most computerized studies (75%) reported improving patient outcomes, and the remainder reported no change in patient outcomes. The computer-generated studies were evenly split between no change and improvement in practitioner performance, but 62% of them reported no change in patient outcomes. Among the paper-based studies, 70% reported an improvement in clinician performance and 62% reported an improvement in patient outcomes. There were more paper-based than computer-based studies, but paper can be an effective way to implement a protocol reminder. However, as hospitals increase their use of computerized decision support and electronic medical records, it is likely that the efficacy of computer-based protocol implementations will also improve.
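The percentages in the preceding paragraph follow from the per-modality counts given in the Results. The short sketch below reproduces them; the dictionary layout and the helper name `share` are ours, and each denominator is the sum of the "no change" and "improved" counts for that modality and measure.

```python
# (no_change, improved) counts per modality, as reported in the Results.
counts = {
    "computerized": {"performance": (5, 7), "outcomes": (3, 9)},
    "computer-generated": {"performance": (4, 4), "outcomes": (5, 3)},
    "paper-based": {"performance": (24, 56), "outcomes": (31, 51)},
}


def share(part, total):
    """Percentage of `total` represented by `part`, rounded to an integer."""
    # Python's round() rounds exact halves to the nearest even integer,
    # so 62.5 becomes 62.
    return round(100 * part / total)


for modality, measures in counts.items():
    for measure, (no_change, improved) in measures.items():
        total = no_change + improved
        print(
            f"{modality:>18} {measure:<11} "
            f"no change {share(no_change, total)}%, "
            f"improved {share(improved, total)}% (n={total})"
        )
```

Note that the paper-based denominators differ between the two measures (80 for performance, 82 for outcomes), so the percentages for that modality are not computed over the same set of studies.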

Many studies did not implement or report many success factors [33]. These success factors were created for computerized decision support implementations, so they may not be as valuable a scoring tool for the paper-based studies. We applied them to the paper-based and computer-generated studies as well as possible (e.g., a paper-based form with check boxes would have required minimal time to use compared to a paper-based form that required writing out entirely new orders by hand). Automatically prompting providers increases adherence to recommendations [133]; however, in a newer systematic review, effective decision support is still provided to both the patients and physicians and is lower for electronic systems [134]. The benefits of decision support still remain small [135].

The analysis is limited by the results that were reported in the manuscripts. Although an attempt was made to contact the corresponding authors, some manuscripts were 20 years old or more and details about the exact intervention may have been lost. Because we only included published manuscripts, a publication bias may exist where studies with positive results are more likely to be published. Given the tendency to publish and emphasize favorable outcomes, decision support systems have the potential to increase adverse outcomes; however, these are rarely reported [136].

The outcomes varied across studies and were too disparate to combine. In conclusion, asthma guidelines generally improved patient care and practitioner performance regardless of the implementation method.

Conclusion

The number of publications on asthma protocol reminder systems is increasing. The number of computerized and computer-generated studies is also increasing. There appears to be a moderate increase in the use of information technology in guideline implementation, and this use will probably continue to rise as electronic health records become more widespread. Asthma guidelines improved patient care and practitioner performance regardless of the implementation method.

Abbreviations

- ED: Emergency department

- NHLBI: National Heart, Lung, and Blood Institute

References

FastStats - Asthma. [http://www.cdc.gov/nchs/fastats/asthma.htm]

QuickStats: Percentage of Children Aged < 18 years Who Have Ever Had Asthma Diagnosed, by Age Group --- United States. 2003, [http://www.cdc.gov/mmwr/preview/mmwrhtml/mm5416a5.htm]

Allergy & Asthma Advocate: Quarterly newsletter of the American Academy of Allergy, Asthma and Immunology. [http://www.aaaai.org/home.aspx]

Eder W, Ege MJ, Von Mutius E: The asthma epidemic. N Engl J Med. 2006, 355: 2226-2235. 10.1056/NEJMra054308.

Lozano P, Sullivan SD, Smith DH, Weiss KB: The economic burden of asthma in US children: estimates from the National Medical Expenditure Survey. J Allergy Clin Immunol. 1999, 104: 957-963. 10.1016/S0091-6749(99)70075-8.

Grimshaw JM, Eccles MP, Walker AE, Thomas RE: Changing physicians' behavior: what works and thoughts on getting more things to work. J Contin Educ Health Prof. 2002, 22: 237-243. 10.1002/chp.1340220408.

Kuilboer MM, Van Wijk MA, Mosseveld M, van der Does E, De Jongste JC, Overbeek SE, Ponsioen B, van der Lei J: Computed critiquing integrated into daily clinical practice affects physicians' behavior–a randomized clinical trial with AsthmaCritic. Methods Inf Med. 2006, 45: 447-454.

Scribano PV, Lerer T, Kennedy D, Cloutier MM: Provider adherence to a clinical practice guideline for acute asthma in a pediatric emergency department. Acad Emerg Med. 2001, 8: 1147-1152. 10.1111/j.1553-2712.2001.tb01131.x.

Shiffman RN, Freudigman M, Brandt CA, Liaw Y, Navedo DD: A guideline implementation system using handheld computers for office management of asthma: effects on adherence and patient outcomes. Pediatrics. 2000, 105: 767-773. 10.1542/peds.105.4.767.

National Guideline Clearinghouse. [http://www.guidelines.gov/]

Global Strategy for Asthma Management and Prevention. [http://www.ginasthma.org/uploads/users/files/GINA_Report_2011.pdf]

Turner S, Paton J, Higgins B, Douglas G: British guidelines on the management of asthma: what's new for 2011?. Thorax. 2011, 66: 1104-1105. 10.1136/thoraxjnl-2011-200213.

National Asthma Council Australia: Australian Asthma Handbook, Version 1.0. 2014, Melbourne: National Asthma Council Australia, Website. Available from: http://www.asthmahandbook.org.au [Accessed: 8 September 2014]

National Heart Lung and Blood Institute (NHLBI), National Asthma Education and Prevention Program: Expert Panel Report 3 (EPR-3): Guidelines for the Diagnosis and Management of Asthma–Summary Report 2007. J Allergy Clin Immunol. 2007, 120: S94-S138.

Dexheimer JW, Talbot TR, Sanders DL, Rosenbloom ST, Aronsky D: Prompting clinicians about preventive care measures: a systematic review of randomized controlled trials. J Am Med Inform Assoc. 2008, 15: 311-320. 10.1197/jamia.M2555.

Porter SC, Cai Z, Gribbons W, Goldmann DA, Kohane IS: The asthma kiosk: a patient-centered technology for collaborative decision support in the emergency department. J Am Med Inform Assoc. 2004, 11: 458-467. 10.1197/jamia.M1569.

Moher D, Liberati A, Tetzlaff J, Altman DG, Group P: Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Int J Surg. 2010, 8: 336-341. 10.1016/j.ijsu.2010.02.007.

PUBMED. [http://www.ncbi.nlm.nih.gov/pubmed/]

ISI Web of Knowledge. [http://apps.webofknowledge.com]

Wang CS, FitzGerald JM, Schulzer M, Mak E, Ayas NT: Does this dyspneic patient in the emergency department have congestive heart failure?. JAMA. 2005, 294: 1944-1956. 10.1001/jama.294.15.1944.

Hunt DL, Haynes RB, Hanna SE, Smith K: Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA. 1998, 280: 1339-1346. 10.1001/jama.280.15.1339.

Kawamoto K, Houlihan CA, Balas EA, Lobach DF: Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ. 2005, 330: 765-10.1136/bmj.38398.500764.8F.

Alamoudi OS: The efficacy of a management protocol in reducing emergency visits and hospitalizations in chronic asthmatics. Saudi Med J. 2002, 23: 1373-1379.

Doherty SR, Jones P, Stevens H, Davis L, Ryan N, Treeve V: 'Evidence-based implementation' of paediatric asthma guidelines in a rural emergency department. J Paediatr Child Health. 2007, 43: 611-616. 10.1111/j.1440-1754.2007.01151.x.

Doherty SR, Jones PD: Use of an 'evidence-based implementation' strategy to implement evidence-based care of asthma into rural district hospital emergency departments. Rural Remote Health. 2006, 6: 529-

Doherty SR, Jones PD, Davis L, Ryan NJ, Treeve V: Evidence-based implementation of adult asthma guidelines in the emergency department: a controlled trial. Emerg Med Australas. 2007, 19: 31-38. 10.1111/j.1742-6723.2006.00910.x.

To T, Cicutto L, Degani N, McLimont S, Beyene J: Can a community evidence-based asthma care program improve clinical outcomes?: a longitudinal study. Med Care. 2008, 46: 1257-1266. 10.1097/MLR.0b013e31817d6990.

To T, Wang C, Dell SD, Fleming-Carroll B, Parkin P, Scolnik D, Ungar WJ: Can an evidence-based guideline reminder card improve asthma management in the emergency department?. Respir Med. 2010, 104: 1263-1270. 10.1016/j.rmed.2010.03.028.

Abisheganaden J, Chee CB, Goh SK, Yeo LS, Prabhakaran L, Earnest A, Wang YT: Impact of an asthma carepath on the management of acute asthma exacerbations. Ann Acad Med Singapore. 2001, 30: 22-26.

Abisheganaden J, Ng SB, Lam KN, Lim TK: Peak expiratory flow rate guided protocol did not improve outcome in emergency room asthma. Singapore Med J. 1998, 39: 479-484.

Ables AZ, Godenick MT, Lipsitz SR: Improving family practice residents' compliance with asthma practice guidelines. Fam Med. 2002, 34: 23-28.

Akerman MJ, Sinert R: A successful effort to improve asthma care outcome in an inner-city emergency department. J Asthma. 1999, 36: 295-303. 10.3109/02770909909075414.

Baddar S, Worthing EA, Al-Rawas OA, Osman Y, Al-Riyami BM: Compliance of physicians with documentation of an asthma management protocol. Respir Care. 2006, 51: 1432-1440.

Bailey R, Weingarten S, Lewis M, Mohsenifar Z: Impact of clinical pathways and practice guidelines on the management of acute exacerbations of bronchial asthma. Chest. 1998, 113: 28-33. 10.1378/chest.113.1.28.

Baker R, Fraser RC, Stone M, Lambert P, Stevenson K, Shiels C: Randomised controlled trial of the impact of guidelines, prioritized review criteria and feedback on implementation of recommendations for angina and asthma. Br J Gen Pract. 2003, 53: 284-291.

Bell LM, Grundmeier R, Localio R, Zorc J, Fiks AG, Zhang X, Stephens TB, Swietlik M, Guevara JP: Electronic health record-based decision support to improve asthma care: a cluster-randomized trial. Pediatrics. 2010, 125: e770-e777. 10.1542/peds.2009-1385.

Boskabady MH, Rezaeitalab F, Rahimi N, Dehnavi D: Improvement in symptoms and pulmonary function of asthmatic patients due to their treatment according to the Global Strategy for Asthma Management (GINA). BMC Pulm Med. 2008, 8: 26. 10.1186/1471-2466-8-26.

Callahan CW, Chan DS, Brads-Pitt T, Underwood GHJ, Imamura DT: Performance feedback improves pediatric residents' adherence to asthma clinical guidelines. J Clin Outcomes Manag. 2003, 10: 317-322.

Cerci Neto A, Ferreira Filho OF, Bueno T, Talhari MA: Reduction in the number of asthma-related hospital admissions after the implementation of a multidisciplinary asthma control program in the city of Londrina, Brazil. J Bras Pneumol. 2008, 34: 639-645.

Chamnan P, Boonlert K, Pasi W, Yodsiri S, Pong-on S, Khansa B, Yongkulwanitchanan P: Implementation of a 12-week disease management program improved clinical outcomes and quality of life in adults with asthma in a rural district hospital: pre- and post-intervention study. Asian Pac J Allergy Immunol. 2010, 28: 15-21.

Chan DS, Callahan CW, Hatch-Pigott VB, Lawless A, Proffitt HL, Manning NE, Schweikert M, Malone FJ: Internet-based home monitoring and education of children with asthma is comparable to ideal office-based care: results of a 1-year asthma in-home monitoring trial. Pediatrics. 2007, 119: 569-578. 10.1542/peds.2006-1884.

Chee CB, Wang SY, Poh SC: Department audit of inpatient management of asthma. Singapore Med J. 1996, 37: 370-373.

Cho SH, Jeong JW, Park HW, Pyun BY, Chang SI, Moon HB, Kim YY, Choi BW: Effectiveness of a computer-assisted asthma management program on physician adherence to guidelines. J Asthma. 2010, 47: 680-686. 10.3109/02770903.2010.481342.

Chouaid C, Bal JP, Fuhrman C, Housset B, Caudron J: Standardized protocol improves asthma management in emergency department. J Asthma. 2004, 41: 19-25. 10.1081/JAS-120024589.

Cloutier MM, Wakefield DB, Sangeloty-Higgins P, Delaronde S, Hall CB: Asthma guideline use by pediatricians in private practices and asthma morbidity. Pediatrics. 2006, 118: 1880-1887. 10.1542/peds.2006-1019.

Cloutier MM, Hall CB, Wakefield DB, Bailit H: Use of asthma guidelines by primary care providers to reduce hospitalizations and emergency department visits in poor, minority, urban children. J Pediatr. 2005, 146: 591-597. 10.1016/j.jpeds.2004.12.017.

Colice GL, Carnathan B, Sung J, Paramore LC: A respiratory therapist-directed protocol for managing inpatients with asthma and COPD incorporating a long-acting bronchodilator. J Asthma. 2005, 42: 29-34. 10.1081/JAS-200044765.

Cunningham S, Logan C, Lockerbie L, Dunn MJ, McMurray A, Prescott RJ: Effect of an integrated care pathway on acute asthma/wheeze in children attending hospital: cluster randomized trial. J Pediatr. 2008, 152: 315-320. 10.1016/j.jpeds.2007.09.033.

Dalcin PDR, Da Rocha PM, Franciscatto E, Kang SH, Menegotto DM, Polanczyk CA, Barreto SS: Effect of clinical pathways on the management of acute asthma in the emergency department: five years of evaluation. J Asthma. 2007, 44: 273-279. 10.1080/02770900701247020.

Davies B, Edwards N, Ploeg J, Virani T: Insights about the process and impact of implementing nursing guidelines on delivery of care in hospitals and community settings. BMC Health Serv Res. 2008, 8: 29. 10.1186/1472-6963-8-29.

Davis AM, Cannon M, Ables AZ, Bendyk H: Using the electronic medical record to improve asthma severity documentation and treatment among family medicine residents. Fam Med. 2010, 42: 334-337.

Duke T, Kellermann A, Ellis R, Arheart K, Self T: Asthma in the emergency department: impact of a protocol on optimizing therapy. Am J Emerg Med. 1991, 9: 432-435. 10.1016/0735-6757(91)90208-2.

Eccles M, McColl E, Steen N, Rousseau N, Grimshaw J, Parkin D, Purves I: Effect of computerised evidence based guidelines on management of asthma and angina in adults in primary care: cluster randomised controlled trial. BMJ. 2002, 325: 941. 10.1136/bmj.325.7370.941.

Emond SD, Woodruff PG, Lee EY, Singh AK, Camargo CA: Effect of an emergency department asthma program on acute asthma care. Ann Emerg Med. 1999, 34: 321-325. 10.1016/S0196-0644(99)70125-3.

Feder G, Griffiths C, Highton C, Eldridge S, Spence M, Southgate L: Do clinical guidelines introduced with practice based education improve care of asthmatic and diabetic patients? A randomised controlled trial in general practices in east London. BMJ. 1995, 311: 1473-1478. 10.1136/bmj.311.7018.1473.

Fifield J, McQuillan J, Martin-Peele M, Nazarov V, Apter AJ, Babor T, Burleson J, Cushman R, Hepworth J, Jackson E, Reisine S, Sheehan J, Twiggs J: Improving pediatric asthma control among minority children participating in medicaid: providing practice redesign support to deliver a chronic care model. J Asthma. 2010, 47: 718-727. 10.3109/02770903.2010.486846.

Gentile NT, Ufberg J, Barnum M, McHugh M, Karras D: Guidelines reduce x-ray and blood gas utilization in acute asthma. Am J Emerg Med. 2003, 21: 451-453. 10.1016/S0735-6757(03)00165-7.

Gibson PG, Wilson AJ: The use of continuous quality improvement methods to implement practice guidelines in asthma. J Qual Clin Pract. 1996, 16: 87-102.

Gildenhuys J, Lee M, Isbister GK: Does implementation of a paediatric asthma clinical practice guideline worksheet change clinical practice?. Int J Emerg Med. 2009, 2: 33-39. 10.1007/s12245-008-0063-x.

Goh AE, Tang JP, Ling H, Hoe TO, Chong NK, Moh CO, Huak CY: Efficacy of metered-dose inhalers for children with acute asthma exacerbations. Pediatr Pulmonol. 2011, 46 (5). 10.1002/ppul.21384.

Goldberg R, Chan L, Haley P, Harmata-Booth J, Bass G: Critical pathway for the emergency department management of acute asthma: effect on resource utilization. Ann Emerg Med. 1998, 31: 562-567. 10.1016/S0196-0644(98)70202-1.

Guarnaccia S, Lombardi A, Gaffurini A, Chiarini M, Domenighini S, D'Agata E, Schumacher RF, Spiazzi R, Notarangelo LD: Application and implementation of the GINA asthma guidelines by specialist and primary care physicians: a longitudinal follow-up study on 264 children. Prim Care Respir J. 2007, 16: 357-362.

Van Den Berg NJ, Van Der Palen J, Van Aalderen WM, Bindels PJ, Hagmolen of ten Have W: Implementation of an asthma guideline for the management of childhood asthma in general practice: a randomised controlled trial. Prim Care Respir J. 2008, 17: 90-96. 10.3132/pcrj.2008.00011.

Halterman JS, Fisher S, Conn KM, Fagnano M, Lynch K, Marky A, Szilagyi PG: Improved preventive care for asthma: a randomized trial of clinician prompting in pediatric offices. Arch Pediatr Adolesc Med. 2006, 160: 1018-1025. 10.1001/archpedi.160.10.1018.

Heaney LG, Conway E, Kelly C, Johnston BT, English C, Stevenson M, Gamble J: Predictors of therapy resistant asthma: outcome of a systematic evaluation protocol. Thorax. 2003, 58: 561-566. 10.1136/thorax.58.7.561.

Jans MP, Schellevis FG, Le Coq EM, Bezemer PD, Van Eijk JT: Health outcomes of asthma and COPD patients: the evaluation of a project to implement guidelines in general practice. Int J Qual Health Care. 2001, 13: 17-25. 10.1093/intqhc/13.1.17.

Jans MP, Schellevis FG, Van Hensbergen W, Dukkers Van Emden T, Van Eijk JT: Management of asthma and COPD patients: feasibility of the application of guidelines in general practice. Int J Qual Health Care. 1998, 10: 27-34. 10.1093/intqhc/10.1.27.

Joe RH, Kellermann A, Arheart K, Ellis R, Self T: Emergency department asthma treatment protocol. Ann Pharmacother. 1992, 26: 472-476.

Johnson KB, Blaisdell CJ, Walker A, Eggleston P: Effectiveness of a clinical pathway for inpatient asthma management. Pediatrics. 2000, 106: 1006-1012. 10.1542/peds.106.5.1006.

Jones CA, Clement LT, Morphew T, Kwong KY, Hanley-Lopez J, Lifson F, Opas L, Guterman JJ: Achieving and maintaining asthma control in an urban pediatric disease management program: the Breathmobile Program. J Allergy Clin Immunol. 2007, 119: 1445-1453. 10.1016/j.jaci.2007.02.031.

Kelly AM, Clooney M: Improving asthma discharge management in relation to emergency departments: The ADMIRE project. Emerg Med Australas. 2007, 19: 59-62. 10.1111/j.1742-6723.2006.00929.x.

Kelly CS, Andersen CL, Pestian JP, Wenger AD, Finch AB, Strope GL, Luckstead EF: Improved outcomes for hospitalized asthmatic children using a clinical pathway. Ann Allergy Asthma Immunol. 2000, 84: 509-516. 10.1016/S1081-1206(10)62514-8.

Kwan-Gett TS, Lozano P, Mullin K, Marcuse EK: One-year experience with an inpatient asthma clinical pathway. Arch Pediatr Adolesc Med. 1997, 151: 684-689. 10.1001/archpedi.1997.02170440046008.

Kwok R, Dinh M, Dinh D, Chu M: Improving adherence to asthma clinical guidelines and discharge documentation from emergency departments: implementation of a dynamic and integrated electronic decision support system. Emerg Med Australas. 2009, 21: 31-37. 10.1111/j.1742-6723.2008.01149.x.

Lehman HK, Lillis KA, Shaha SH, Augustine M, Ballow M: Initiation of maintenance antiinflammatory medication in asthmatic children in a pediatric emergency department. Pediatrics. 2006, 118: 2394-2401. 10.1542/peds.2006-0871.

Lesho EP, Myers CP, Ott M, Winslow C, Brown JE: Do clinical practice guidelines improve processes or outcomes in primary care?. Mil Med. 2005, 170: 243-246.

Lierl MB, Pettinichi S, Sebastian KD, Kotagal U: Trial of a therapist-directed protocol for weaning bronchodilator therapy in children with status asthmaticus. Respir Care. 1999, 44: 497-505.

Lim TK, Chin NK, Lee KH, Stebbings AM: Early discharge of patients hospitalized with acute asthma: a controlled study. Respir Med. 2000, 94: 1234-1240. 10.1053/rmed.2000.0958.

Lougheed MD, Olajos-Clow J, Szpiro K, Moyse P, Julien B, Wang M, Day AG: Multicentre evaluation of an emergency department asthma care pathway for adults. CJEM. 2009, 11: 215-229.

Lukacs SL, France EK, Baron AE, Crane LA: Effectiveness of an asthma management program for pediatric members of a large health maintenance organization. Arch Pediatr Adolesc Med. 2002, 156: 872-876. 10.1001/archpedi.156.9.872.

Maa SH, Chang YC, Chou CL, Ho SC, Sheng TF, Macdonald K, Wang Y, Shen YM, Abraham I: Evaluation of the feasibility of a school-based asthma management programme in Taiwan. J Clin Nurs. 2010, 19: 2415-2423. 10.1111/j.1365-2702.2010.03283.x.

Mackey D, Myles M, Spooner CH, Lari H, Tyler L, Blitz S, Senthilselvan A, Rowe BH: Changing the process of care and practice in acute asthma in the emergency department: experience with an asthma care map in a regional hospital. CJEM. 2007, 9: 353-365.

Martens JD, van der Weijden T, Severens JL, De Clercq PA, De Bruijn DP, Kester AD, Winkens RA: The effect of computer reminders on GPs' prescribing behaviour: a cluster-randomised trial. Int J Med Inform. 2007, 76 (Suppl 3): S403-S416.

Martin E: The CGHA asthma management program and its effect upon pediatric asthma admission rates. Clin Pediatr (Phila). 2001, 40: 425-434. 10.1177/000992280104000801.

Massie J, Efron D, Cerritelli B, South M, Powell C, Haby MM, Gilbert E, Vidmar S, Carlin J, Robertson CF: Implementation of evidence based guidelines for paediatric asthma management in a teaching hospital. Arch Dis Child. 2004, 89: 660-664. 10.1136/adc.2003.032110.

McCowan C, Neville RG, Ricketts IW, Warner FC, Hoskins G, Thomas GE: Lessons from a randomized controlled trial designed to evaluate computer decision support software to improve the management of asthma. Med Inform Internet Med. 2001, 26: 191-201.

McDowell KM, Chatburn RL, Myers TR, O'Riordan MA, Kercsmar CM: A cost-saving algorithm for children hospitalized for status asthmaticus. Arch Pediatr Adolesc Med. 1998, 152: 977-984.

McFadden ER, Elsanadi N, Dixon L, Takacs M, Deal EC, Boyd KK, Idemoto BK, Broseman LA, Panuska J, Hammons T, Smith B, Caruso F, McFadden CB, Shoemaker L, Warren EL, Hejal R, Strauss L, Gilbert I: Protocol therapy for acute asthma: therapeutic benefits and cost savings. Am J Med. 1995, 99: 651-661. 10.1016/S0002-9343(99)80253-8.

Mitchell EA, Didsbury PB, Kruithof N, Robinson E, Milmine M, Barry M, Newman J: A randomized controlled trial of an asthma clinical pathway for children in general practice. Acta Paediatr. 2005, 94: 226-233. 10.1080/08035250410020235.

Nelson KA, Freiner D, Garbutt J, Trinkaus K, Bruns J, Sterkel R, Smith SR, Strunk RC: Acute asthma management by a pediatric after-hours call center. Telemed J E Health. 2009, 15: 538-545. 10.1089/tmj.2009.0005.

Newcomb P: Results of an asthma disease management program in an urban pediatric community clinic. J Spec Pediatr Nurs. 2006, 11: 178-188. 10.1111/j.1744-6155.2006.00064.x.

Norton SP, Pusic MV, Taha F, Heathcote S, Carleton BC: Effect of a clinical pathway on the hospitalisation rates of children with asthma: a prospective study. Arch Dis Child. 2007, 92: 60-66. 10.1136/adc.2006.097287.

Patel PH, Welsh C, Foggs MB: Improved asthma outcomes using a coordinated care approach in a large medical group. Dis Manag. 2004, 7: 102-111. 10.1089/1093507041253235.

Porter SC, Forbes P, Feldman HA, Goldmann DA: Impact of patient-centered decision support on quality of asthma care in the emergency department. Pediatrics. 2006, 117: e33-e42. 10.1542/peds.2005-0906.

Press S, Lipkind RS: A treatment protocol of the acute asthma patient in a pediatric emergency department. Clin Pediatr (Phila). 1991, 30: 573-577. 10.1177/000992289103001001.

Qazi K, Altamimi SA, Tamim H, Serrano K: Impact of an emergency nurse-initiated asthma management protocol on door-to-first-salbutamol-nebulization-time in a pediatric emergency department. J Emerg Nurs. 2010, 36: 428-433. 10.1016/j.jen.2009.11.003.

Quint DM, Teach SJ: IMPACT DC: Reconceptualizing the Role of the Emergency Department for Urban Children with Asthma. Clin Pediatr Emerg Med. 2009, 10: 115-121. 10.1016/j.cpem.2009.03.003.

Renzi PM, Ghezzo H, Goulet S, Dorval E, Thivierge RL: Paper stamp checklist tool enhances asthma guidelines knowledge and implementation by primary care physicians. Can Respir J. 2006, 13: 193-197.

Robinson SM, Harrison BD, Lambert MA: Effect of a preprinted form on the management of acute asthma in an accident and emergency department. J Accid Emerg Med. 1996, 13: 93-97. 10.1136/emj.13.2.93.

Rowe BH, Chahal AM, Spooner CH, Blitz S, Senthilselvan A, Wilson D, Holroyd BR, Bullard M: Increasing the use of anti-inflammatory agents for acute asthma in the emergency department: experience with an asthma care map. Can Respir J. 2008, 15: 20-26.

Ruoff G: Effects of flow sheet implementation on physician performance in the management of asthmatic patients. Fam Med. 2002, 34: 514-517.

Schneider A, Wensing M, Biessecker K, Quinzler R, Kaufmann-Kolle P, Szecsenyi J: Impact of quality circles for improvement of asthma care: results of a randomized controlled trial. J Eval Clin Pract. 2008, 14: 185-190. 10.1111/j.1365-2753.2007.00827.x.

Schneider SM: Effect of a treatment protocol on the efficiency of care of the adult acute asthmatic. Ann Emerg Med. 1986, 15: 703-706. 10.1016/S0196-0644(86)80429-2.

Shelledy DC, McCormick SR, LeGrand TS, Cardenas J, Peters JI: The effect of a pediatric asthma management program provided by respiratory therapists on patient outcomes and cost. Heart Lung. 2005, 34: 423-428. 10.1016/j.hrtlng.2005.05.004.

Sherman JM, Capen CL: The Red Alert Program for life-threatening asthma. Pediatrics. 1997, 100: 187-191. 10.1542/peds.100.2.187.

Stead L, Whiteside T: Evaluation of a new EMS asthma protocol in New York City: a preliminary report. Prehosp Emerg Care. 1999, 3: 338-342. 10.1080/10903129908958965.

Stell IM: Asthma management in accident and emergency and the BTS guidelines–a study of the impact of clinical audit. J Accid Emerg Med. 1996, 13: 392-394. 10.1136/emj.13.6.392.

Steurer-Stey C, Grob U, Jung S, Vetter W, Steurer J: Education and a standardized management protocol improve the assessment and management of asthma in the emergency department. Swiss Med Wkly. 2005, 135: 222-227.

Stormon MO, Mellis CM, Van Asperen PP, Kilham HA: Outcome evaluation of early discharge of asthmatic children from hospital: a randomized control trial. J Qual Clin Pract. 1999, 19: 149-154. 10.1046/j.1440-1762.1999.00305.x.

Sucov A, Veenema TG: Implementation of a disease-specific care plan changes clinician behaviors. Am J Emerg Med. 2000, 18: 367-371. 10.1053/ajem.2000.7321.

Suh DC, Shin SK, Okpara I, Voytovich RM, Zimmerman A: Impact of a targeted asthma intervention program on treatment costs in patients with asthma. Am J Manag Care. 2001, 7: 897-906.

Sulaiman ND, Barton CA, Liaw ST, Harris CA, Sawyer SM, Abramson MJ, Robertson C, Dharmage SC: Do small group workshops and locally adapted guidelines improve asthma patients' health outcomes? A cluster randomized controlled trial. Fam Pract. 2010, 27: 246-254. 10.1093/fampra/cmq013.

Suzuki T, Kaneko M, Saito I, Kokubu F, Kasahara K, Nakajima H, Adachi M, Shimbo T, Sugiyama E, Sato H: Comparison of physicians' compliance, clinical efficacy, and drug cost before and after introduction of Asthma Prevention and Management Guidelines in Japan (JGL2003). Allergol Int. 2010, 59: 33-41. 10.2332/allergolint.09-OA-0102.

Szilagyi PG, Rodewald LE, Savageau J, Yoos L, Doane C: Improving influenza vaccination rates in children with asthma: a test of a computerized reminder system and an analysis of factors predicting vaccination compliance. Pediatrics. 1992, 90: 871-875.

Thomas KW, Dayton CS, Peterson MW: Evaluation of internet-based clinical decision support systems. J Med Internet Res. 1999, 1: E6.

Tierney WM, Overhage JM, Murray MD, Harris LE, Zhou XH, Eckert GJ, Smith FE, Nienaber N, McDonald CJ, Wolinsky FD: Can computer-generated evidence-based care suggestions enhance evidence-based management of asthma and chronic obstructive pulmonary disease? A randomized, controlled trial. Health Serv Res. 2005, 40: 477-497. 10.1111/j.1475-6773.2005.0t369.x.

Touzin K, Queyrens A, Bussières JF, Languérand G, Bailey B, Labergne N: Management of asthma in a pediatric emergency department: asthma pathway improves speed to first corticosteroid and clinical management by RRT. Can J Respir Ther. 2008, 44: 22-26.

Town I, Kwong T, Holst P, Beasley R: Use of a management plan for treating asthma in an emergency department. Thorax. 1990, 45: 702-706. 10.1136/thx.45.9.702.

van der Meer V, Van Stel HF, Bakker MJ, Roldaan AC, Assendelft WJ, Sterk PJ, Rabe KF, Sont JK: Weekly self-monitoring and treatment adjustment benefit patients with partly controlled and uncontrolled asthma: an analysis of the SMASHING study. Respir Res. 2010, 11: 74. 10.1186/1465-9921-11-74.

Vandeleur M, Chroinin MN: Implementation of spacer therapy for acute asthma in children. Ir Med J. 2009, 102: 264-266.

Wazeka A, Valacer DJ, Cooper M, Caplan DW, DiMaio M: Impact of a pediatric asthma clinical pathway on hospital cost and length of stay. Pediatr Pulmonol. 2001, 32: 211-216. 10.1002/ppul.1110.

Webb LZ, Kuykendall DH, Zeiger RS, Berquist SL, Lischio D, Wilson T, Freedman C: The impact of status asthmaticus practice guidelines on patient outcome and physician behavior. QRB Qual Rev Bull. 1992, 18: 471-476.

Welsh KM, Magnusson M, Napoli L: Asthma clinical pathway: an interdisciplinary approach to implementation in the inpatient setting. Pediatr Nurs. 1999, 25: 79-80, 83-87.

Wright J, Warren E, Reeves J, Bibby J, Harrison S, Dowswell G, Russell I, Russell D: Effectiveness of multifaceted implementation of guidelines in primary care. J Health Serv Res Policy. 2003, 8: 142-148. 10.1258/135581903322029485.

Bright TJ, Wong A, Dhurjati R, Bristow E, Bastian L, Coeytaux RR, Samsa G, Hasselblad V, Williams JW, Musty MD, Wing L, Kendrick AS, Sanders GD, Lobach D: Effect of clinical decision-support systems: a systematic review. Ann Intern Med. 2012, 157: 29-43. 10.7326/0003-4819-157-1-201207030-00450.

Cheung A, Weir M, Mayhew A, Kozloff N, Brown K, Grimshaw J: Overview of systematic reviews of the effectiveness of reminders in improving healthcare professional behavior. Syst Rev. 2012, 1: 36. 10.1186/2046-4053-1-36.

Klatt TE, Hopp E: Effect of a best-practice alert on the rate of influenza vaccination of pregnant women. Obstet Gynecol. 2012, 119: 301-305. 10.1097/AOG.0b013e318242032a.

McCoy AB, Waitman LR, Lewis JB, Wright JA, Choma DP, Miller RA, Peterson JF: A framework for evaluating the appropriateness of clinical decision support alerts and responses. J Am Med Inform Assoc. 2012, 19: 346-352. 10.1136/amiajnl-2011-000185.

Augestad KM, Berntsen G, Lassen K, Bellika JG, Wootton R, Lindsetmo RO, Study Group of Research Quality in Medical Informatics and Decision Support: Standards for reporting randomized controlled trials in medical informatics: a systematic review of CONSORT adherence in RCTs on clinical decision support. J Am Med Inform Assoc. 2012, 19: 13-21. 10.1136/amiajnl-2011-000411.

Mayo-Smith MF, Agrawal A: Factors associated with improved completion of computerized clinical reminders across a large healthcare system. Int J Med Inform. 2007, 76: 710-716. 10.1016/j.ijmedinf.2006.07.003.

Saleem JJ, Patterson ES, Militello L, Render ML, Orshansky G, Asch SM: Exploring barriers and facilitators to the use of computerized clinical reminders. J Am Med Inform Assoc. 2005, 12: 438-447. 10.1197/jamia.M1777.

Garg AX, Adhikari NK, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, Sam J, Haynes RB: Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA. 2005, 293: 1223-1238. 10.1001/jama.293.10.1223.

Roshanov PS, Fernandes N, Wilczynski JM, Hemens BJ, You JJ, Handler SM, Nieuwlaat R, Souza NM, Beyene J, Van Spall HG, Garg AX, Haynes RB: Features of effective computerised clinical decision support systems: meta-regression of 162 randomised trials. BMJ. 2013, 346: f657. 10.1136/bmj.f657.

Shojania KG, Jennings A, Mayhew A, Ramsay C, Eccles M, Grimshaw J: Effect of point-of-care computer reminders on physician behaviour: a systematic review. CMAJ. 2010, 182: E216-E225. 10.1503/cmaj.090578.

Carling CL, Kirkehei I, Dalsbo TK, Paulsen E: Risks to patient safety associated with implementation of electronic applications for medication management in ambulatory care–a systematic review. BMC Med Inform Decis Mak. 2013, 13: 133. 10.1186/1472-6947-13-133.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1472-6947/14/82/prepub

Acknowledgements

The first author was supported by a training grant from the National Library of Medicine [LM T15 007450–03]. This work was supported by [LM 009747–01] (JWD, DA).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed materially to the production of this manuscript. JD participated in the design, acquisition of data, drafting of the manuscript, and critical revision, and provided technical and material support. EB was involved in drafting the manuscript and in critical revisions. KC participated in the design, article review, and revisions. KJ was involved in the conceptual design and revisions. DA participated in article review, conception, design, and critical revisions. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly credited.

About this article

Cite this article

Dexheimer, J.W., Borycki, E.M., Chiu, KW. et al. A systematic review of the implementation and impact of asthma protocols. BMC Med Inform Decis Mak 14, 82 (2014). https://doi.org/10.1186/1472-6947-14-82

DOI: https://doi.org/10.1186/1472-6947-14-82