Abstract

Background

Increased serum urate levels are associated with poor outcomes including but not limited to gout. It is unclear whether serum urate levels are the sole predictor of incident hyperuricemia or whether demographic and clinical risk factors also predict the development of hyperuricemia. The goal of this study was to identify risk factors for incident hyperuricemia over 9 years in a population-based study, ARIC.

Methods

ARIC recruited individuals from 4 US communities; 8,342 participants who had urate levels <7.0 mg/dL were included in this analysis. Risk factors (including baseline, 3-year, and change in urate level over 3 years) for 9-year incident hyperuricemia (urate level of >7.0 mg/dL) were identified using an AIC-based selection approach in a modified Poisson regression model.

Results

The 9-year cumulative incidence of hyperuricemia was 4%; men = 5%; women = 3%; African Americans = 6% and whites = 3%. The adjusted model included 9 predictors for incident hyperuricemia over 9 years: male sex (RR = 1.73, 95% CI: 1.36-2.21), African-American race (RR = 1.79, 95% CI: 1.37-2.33), smoking (RR = 1.27, 95% CI: 0.97-1.67), less than high school education (RR = 1.27, 95% CI: 0.99-1.63), hypertension (RR = 1.65, 95% CI: 1.30-2.09), CHD (RR = 1.57, 95% CI: 0.99-2.50), obesity (class I RR = 2.37, 95% CI: 1.65-3.41 and ≥ class II RR = 3.47, 95% CI: 2.33-5.18), eGFR < 60 ml/min/1.73 m2 (RR = 2.85, 95% CI: 1.62-5.01) and triglycerides (quartile 4 vs. quartile 1: RR = 2.00, 95% CI: 1.38-2.89). In separate models, urate levels at baseline (RR for a 1 mg/dL increase = 2.33, 95% CI: 1.94-2.80) and 3 years after baseline (RR for a 1 mg/dL increase = 1.92, 95% CI: 1.78-2.07) were associated with incident hyperuricemia after accounting for demographic and clinical risk factors.

Conclusion

Demographic and clinical risk factors that are routinely collected as part of regular medical care are jointly associated with the development of hyperuricemia.

Background

Increased serum urate levels are associated with poor outcomes including but not limited to gout [1–11]. There are clear differences in serum urate levels by age, sex, and race. Young adult women are known to have lower serum urate levels than young adult men, [12] which is primarily attributable to sex hormone effects on renal urate transport [13]. The onset of menopause is associated with increased serum urate levels [13, 14]. Additionally, young African Americans have lower serum urate levels than whites; although African American women are at a higher risk than white women of developing hyperuricemia [12]. Previous studies have identified individual risk factors for increased serum urate levels and incident hyperuricemia in US populations in addition to age, sex, and race: diet, alcohol intake, medication use, and chronic conditions [12, 13, 15–22].

In current clinical practice, patients with asymptomatic hyperuricemia are not treated. Emerging research suggests that treatment of asymptomatic hyperuricemia may reduce the risk of some adverse health outcomes, such as mortality and cardiovascular disease; [23–27] therefore, treatment of asymptomatic hyperuricemia is currently being discussed to prevent adverse health outcomes [28, 29]. Better understanding of which patients are at risk of developing hyperuricemia may aid in clinical decision-making about treatment of asymptomatic hyperuricemia. However, hyperuricemia risk prediction has been limited because previously published studies were cross-sectional, [12, 14, 17, 21, 22] and thus, only correlates of prevalent serum urate were identified in these studies. These cross-sectional studies cannot distinguish whether the clinical factors associated with serum urate level predated the onset of hyperuricemia or whether hyperuricemia led to the onset of these clinical factors. Prospective studies of both men and women without hyperuricemia that follow participants until they develop this outcome are needed for accurate risk prediction to inform clinical decision-making. Additionally, these previous studies focused only on a single correlate of serum urate level [14, 19–21]. However, optimal risk prediction for hyperuricemia requires the simultaneous assessment of the risk conferred by a combination of risk factors.

It is unclear what demographic and clinical risk factors contribute to the development of high uric acid levels and whether serum urate levels are the sole predictor of incident hyperuricemia or whether demographic and clinical risk factors also predict the development of hyperuricemia (>7.0 mg/dL). Therefore, we utilized a population-based cohort study that included prospective measurement of a range of demographic and clinical risk factors among African American and white, middle-aged men and women to identify risk factors for the development of hyperuricemia over 9 years.

Methods

Setting and participants

The Atherosclerosis Risk in Communities study (ARIC) is a prospective population-based cohort study of 15,792 individuals recruited from 4 US communities (Washington County, Maryland; Forsyth County, North Carolina; Jackson, Mississippi; and suburbs of Minneapolis, Minnesota). The Institutional Review Board of the participating institutions (Johns Hopkins University, University of North Carolina at Chapel Hill, Wake Forest Baptist Medical Center, University of Mississippi Medical Center, and University of Minnesota) approved the ARIC study protocol and study participants provided written informed consent. Participants aged 45 to 64 years were recruited to the cohort in 1987–1989. This cohort was established to study the natural history of atherosclerosis, and the study consisted of 1 baseline visit (visit 1) between 1987 and 1989 and 3 follow-up visits (visits 2, 3, and 4) administered 3 years apart. Details of the study design have been previously published [30].

We excluded participants with prevalent hyperuricemia at cohort entry, defined as a measured serum urate level >7.0 mg/dL at the baseline visit (n = 2,455), to ensure that the study participants included in the analysis were at risk of developing hyperuricemia during follow-up. We additionally excluded those who were missing baseline serum urate level (n = 77) because we were unsure whether or not they had hyperuricemia at baseline. This analysis was limited to participants who were Caucasian or African American; few participants reported other races (n = 48). Additionally, participants who did not have a subsequent urate measure were not included in this study (n = 4,288). Results were similar when multiple imputation was used to include participants with missing serum or plasma urate levels. Finally, we only included participants with complete ascertainment of demographic and clinical risk factors (n = 582 excluded). There were 8,342 participants included in our study; however, 8,211 participants were included in the analyses that adjusted for serum urate level at visit 2 because 131 participants in the analytic cohort did not have a measure of serum urate level at visit 2. Participants who were excluded from this analysis did not differ on sex or age. However, they were more likely to be African-American (37% vs. 19%). Additionally, participants with missing plasma urate at visit 4 differed from those with available plasma urate based on baseline smoking status (39% vs. 23%), hypertension (38% vs. 26%), diabetes (17% vs. 8%), CHD (7% vs. 3%), and obesity (27% vs. 22%).
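The exclusion counts above can be checked against the reported cohort sizes with simple arithmetic. The sketch below assumes the exclusions were applied sequentially and are mutually exclusive, which the text does not state explicitly; the function and variable names are illustrative only.

```python
# Cohort derivation using only the counts reported in the text.
ENROLLED = 15_792

# Exclusions in the order described (assumed sequential and non-overlapping).
EXCLUSIONS = {
    "prevalent hyperuricemia at baseline": 2_455,
    "missing baseline serum urate": 77,
    "race other than white or African American": 48,
    "no subsequent urate measure": 4_288,
    "incomplete demographic or clinical risk factors": 582,
}


def apply_exclusions(start, steps):
    """Return (reason, n_excluded, n_remaining) for each exclusion step."""
    remaining = start
    trail = []
    for reason, n_excluded in steps.items():
        remaining -= n_excluded
        trail.append((reason, n_excluded, remaining))
    return trail


trail = apply_exclusions(ENROLLED, EXCLUSIONS)
analytic_cohort = trail[-1][2]

# 131 participants in the analytic cohort lacked a visit 2 urate measure.
visit2_cohort = analytic_cohort - 131

for reason, n_excluded, remaining in trail:
    print(f"-{n_excluded:>6,}  {reason:<50} -> {remaining:,}")
print(f"analytic cohort: {analytic_cohort:,}; with visit 2 urate: {visit2_cohort:,}")
```

Under these assumptions the counts reproduce the 8,342 and 8,211 participants reported in the text.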

Hyperuricemia

Serum urate concentrations were measured in mg/dL with the uricase method at visits 1 and 2 [31]. The reliability coefficient of serum urate was 0.91, and the coefficient of variation was 7.2% in a sample of 40 individuals with repeated measures taken at least 1 week apart [32]. At visit 4, plasma urate level was measured in mg/dL, and hyperuricemia was defined as a plasma urate level >7.0 mg/dL at this visit. Participants who were free of hyperuricemia at visit 1 (by study design) and who had hyperuricemia at visit 4 were considered to have 9-year incident hyperuricemia.
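The outcome definition can be written as a small classification rule. This is a minimal sketch of the definition as described, not the study's analysis code; the function names, and the use of `None` for participants who are not at risk or have a missing measure, are assumptions made for illustration.

```python
HYPERURICEMIA_THRESHOLD_MG_DL = 7.0  # primary definition; 6.8 in sensitivity analysis


def is_hyperuricemic(urate_mg_dl, threshold=HYPERURICEMIA_THRESHOLD_MG_DL):
    """Hyperuricemia is a urate level strictly above the threshold."""
    return urate_mg_dl > threshold


def incident_hyperuricemia(visit1_urate, visit4_urate,
                           threshold=HYPERURICEMIA_THRESHOLD_MG_DL):
    """Classify 9-year incident hyperuricemia.

    Returns True or False for participants at risk at visit 1, and None
    when a measure is missing or hyperuricemia was already present at
    visit 1 (such participants were excluded from the analysis).
    """
    if visit1_urate is None or visit4_urate is None:
        return None
    if is_hyperuricemic(visit1_urate, threshold):
        return None  # prevalent case, not at risk of incident disease
    return is_hyperuricemic(visit4_urate, threshold)


# Hypothetical participants: (visit 1 urate, visit 4 urate) in mg/dL.
for v1, v4 in [(6.1, 7.4), (5.3, 5.5), (7.5, 8.0), (6.9, 7.0)]:
    print(v1, v4, incident_hyperuricemia(v1, v4))
```

Passing `threshold=6.8` applies the cut-point used in the sensitivity analysis.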

Demographic and clinical risk factors

All demographic and clinical risk factors were assessed at baseline and included: sex, age (in years), race (white or African American), smoking status, education (less than high school or high school and higher), diabetes, hypertension (>140/90 mm Hg or use of an anti-hypertensive treatment), diuretic use, coronary heart disease (CHD), total/HDL cholesterol, triglyceride level (mg/dL, categorized as quartiles), congestive heart failure (use of a medication for heart failure or fulfillment of the Gothenburg Criteria), body mass index (BMI, kg/m2), early adult obesity (from self-reported weight at age 25), weight change from age 25 to baseline, waist-to-hip ratio, alcohol intake (grams/week), animal protein/fat intake (grams/day), and menopausal status (self-reported for women; pre- and peri- vs. post-menopausal). Estimated glomerular filtration rate (eGFR) was calculated using the CKD-EPI equation [33] and categorized as ≥90, 60–90, or <60 ml/min/1.73 m2. Categories were chosen to reduce residual confounding. All risk factors were categorized based on empirical categorizations to reflect the distribution in the cohort.
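For readers unfamiliar with the eGFR calculation, the following is a sketch of the 2009 CKD-EPI creatinine equation from reference [33] together with the three categories used here. It assumes that serum creatinine in mg/dL was the input and that the middle category covers 60 to <90 ml/min/1.73 m2; neither detail is stated in the text, and the function names are illustrative.

```python
def ckd_epi_2009(scr_mg_dl, age_years, female, black):
    """eGFR (ml/min/1.73 m2) from the 2009 CKD-EPI creatinine equation."""
    kappa = 0.7 if female else 0.9
    alpha = -0.329 if female else -0.411
    ratio = scr_mg_dl / kappa
    egfr = (141.0
            * min(ratio, 1.0) ** alpha
            * max(ratio, 1.0) ** -1.209
            * 0.993 ** age_years)
    if female:
        egfr *= 1.018
    if black:
        egfr *= 1.159
    return egfr


def egfr_category(egfr):
    """Map eGFR to the categories used in the analysis (boundaries assumed)."""
    if egfr >= 90:
        return ">=90"
    if egfr >= 60:
        return "60-90"
    return "<60"


# Hypothetical participant: 50-year-old non-Black man, creatinine 0.9 mg/dL.
value = ckd_epi_2009(0.9, 50, female=False, black=False)
print(round(value, 1), egfr_category(value))
```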

Additionally, serum urate levels at visits 1 and 2 were considered predictors of the future development of hyperuricemia. Baseline serum urate level, follow-up serum urate level (measured 3-years after baseline), and 3-year change in serum urate level were considered predictors of hyperuricemia.

Analysis

First, the mean and standard deviation (SD) as well as the prevalence of the covariates were calculated and compared by incident hyperuricemia status. The mean of each continuous variable in those with incident hyperuricemia was compared with the mean in those who did not develop hyperuricemia using a t-test; the prevalence of categorical factors was compared using Fisher’s exact test. For categorical factors with more than 2 levels, a test for trend was conducted using a nominal value for each category.
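As an illustration of the categorical comparison, the sketch below computes a two-sided Fisher's exact test for a 2×2 table from the hypergeometric distribution. The table used is a small hypothetical example, not study data, and the two-sided rule applied here (summing all tables no more probable than the observed one) is one common convention, assumed for illustration.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact p-value for the 2x2 table [[a, b], [c, d]]."""
    row1, row2, col1 = a + b, c + d, a + c
    denom = comb(row1 + row2, col1)

    def pmf(x):
        # Probability of x in the top-left cell with all margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = pmf(a)
    # Sum every table that is no more probable than the observed one;
    # the small relative tolerance guards against floating-point ties.
    total = sum(p for p in (pmf(x) for x in range(lowest, highest + 1))
                if p <= p_observed * (1 + 1e-9))
    return min(1.0, total)


# Hypothetical table: rows = exposure, columns = outcome (yes / no).
p_value = fisher_exact_two_sided(3, 1, 1, 3)
print(round(p_value, 4))
```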

Using modified Poisson regression, [34] the relative risk (RR) of 9-year incident hyperuricemia was calculated. All potential demographic and clinical risk factors, except serum urate measures, were considered in the initial model. We used an Akaike’s Information Criterion (AIC)-based selection criterion to create a final model of predictors of 9-year incident hyperuricemia. Then, baseline serum urate level, 3-year serum urate level, and 3-year change in serum urate level were each included as a predictor of incident hyperuricemia in separate models that were adjusted for demographic and clinical risk factors. In sensitivity analysis, the threshold for hyperuricemia was set at a serum urate level of 6.8 mg/dL.
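To make the RR estimate concrete, the sketch below covers only the simplest case: one binary risk factor and no adjustment. In that special case the modified Poisson point estimate is the ratio of the two observed risks, and the robust variance of log RR is understood to coincide with the usual large-sample formula 1/a − 1/n1 + 1/c − 1/n0. It is not the multivariable model fitted in this study, and the counts are hypothetical.

```python
from math import exp, log, sqrt


def risk_ratio(cases_exposed, n_exposed, cases_unexposed, n_unexposed, z=1.96):
    """Unadjusted risk ratio with a Wald confidence interval on the log scale."""
    risk_exposed = cases_exposed / n_exposed
    risk_unexposed = cases_unexposed / n_unexposed
    rr = risk_exposed / risk_unexposed
    # Large-sample standard error of log(RR) for a 2x2 table.
    se_log_rr = sqrt(1 / cases_exposed - 1 / n_exposed
                     + 1 / cases_unexposed - 1 / n_unexposed)
    lower = exp(log(rr) - z * se_log_rr)
    upper = exp(log(rr) + z * se_log_rr)
    return rr, lower, upper


# Hypothetical counts: 50 of 1,000 exposed vs. 25 of 1,000 unexposed develop the outcome.
rr, lower, upper = risk_ratio(50, 1000, 25, 1000)
print(f"RR = {rr:.2f}, 95% CI: {lower:.2f}-{upper:.2f}")
```

A fully adjusted analysis would instead fit a Poisson model with a log link and a sandwich variance estimator in a statistical package, as described in [34].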

Results

Study cohort characteristics

There were 8,342 participants included in our study; 63% were female and 19% were African-American. The 9-year cumulative incidence of hyperuricemia was 4% and subgroup cumulative incidence rates were: 5% for men; 3% for women; 6% for African Americans and 3% for whites.

Cohort demographic and clinical risk factors differed by incident hyperuricemia status (Table 1). Overall, participants who developed hyperuricemia were more likely to be male (49% vs. 37%, p-value < 0.001), and African American (33% vs. 18%, p-value < 0.001), and were less likely to have a high school education or higher (73% vs. 83%, p-value < 0.001). Furthermore, participants who developed hyperuricemia were more likely to have chronic conditions at baseline: hypertension (44% vs. 24%, p-value < 0.001), obesity (BMI ≥ 30 kg/m2; 37% vs. 21%, p-value < 0.001), diabetes (11% vs. 8%, p-value < 0.05), and CHD (6% vs. 3%, p-value < 0.05). Participants with incident hyperuricemia were more likely to have an eGFR <60 ml/min/1.73 m2 (4% vs. 1%) or 60–90 ml/min/1.73 m2 (40% vs. 39%; p-value for trend < 0.001) than those without hyperuricemia. Among female participants, who were free of hyperuricemia by design, there was no difference in menopausal status at baseline and incidence of hyperuricemia, which may be the result of the exclusion of those with hyperuricemia at baseline (Table 1).

Participants who developed hyperuricemia were more likely to have a higher serum urate at baseline, even though the level did not cross the threshold of hyperuricemia by design (6.1 vs. 5.3 mg/dL, p-value < 0.001). This trend persisted during follow-up (3 years after baseline) (7.0 vs. 5.5 mg/dL, p-value < 0.001). Furthermore, there was a greater change in serum urate levels over 3 years (0.87 vs. 0.19, p-value < 0.001) for those who developed hyperuricemia.

Demographic and clinical risk factors for incident hyperuricemia

The final adjusted model included 9 demographic and clinical risk factors for incident hyperuricemia over 9 years (Table 2). Men were at 1.73-fold (95% CI: 1.36-2.21) increased risk of developing hyperuricemia and African-American participants were at 1.79-fold (95% CI: 1.37-2.33) increased risk. Additional participant demographics such as current smoker (RR = 1.27, 95% CI: 0.97-1.67) and less than a high school education (RR = 1.27, 95% CI: 0.99-1.63) were associated with an increased risk of developing hyperuricemia. Participants with hypertension were at 1.65-fold (95% CI: 1.30-2.09) increased risk of developing hyperuricemia and CHD was associated with an increased but not significant risk of hyperuricemia (RR = 1.57, 95% CI: 0.99-2.50). Additionally, BMI was a strong predictor of incident hyperuricemia; there was an increased risk of developing hyperuricemia for participants who were overweight (RR = 2.01, 95% CI: 1.46-2.78), or had 30 ≤ BMI < 35 kg/m2 (RR = 2.37, 95% CI: 1.65-3.41) and BMI ≥ 35 kg/m2 (RR = 3.47, 95% CI: 2.33-5.18). eGFR < 60 (RR = 2.85, 95% CI: 1.62-5.01) at baseline was associated with incident hyperuricemia. Finally, high triglyceride levels were a predictor of incident hyperuricemia (quartile 4 vs. quartile 1: RR = 2.00, 95% CI: 1.38-2.89). There were no differences in the association of smoking status, education, hypertension, BMI, or kidney function between men and women as well as between African Americans and white participants (all p-values for these interactions >0.05).
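Because these confidence intervals are computed on the log scale, each reported RR should sit at roughly the geometric mean of its interval bounds, and the standard error of log RR can be recovered from the interval width. The sketch below applies that check to values quoted in the paragraph above; it assumes Wald-type 95% intervals with z = 1.96, which the text does not state, and the helper name is illustrative.

```python
from math import log, sqrt

# (RR, lower bound, upper bound) as reported in the text.
REPORTED = {
    "male sex": (1.73, 1.36, 2.21),
    "African-American race": (1.79, 1.37, 2.33),
    "hypertension": (1.65, 1.30, 2.09),
    "CHD": (1.57, 0.99, 2.50),
    "eGFR < 60": (2.85, 1.62, 5.01),
}


def log_scale_summary(lower, upper, z=1.96):
    """Return the geometric midpoint of the CI and the implied SE of log(RR)."""
    midpoint = sqrt(lower * upper)
    se_log_rr = (log(upper) - log(lower)) / (2 * z)
    return midpoint, se_log_rr


for name, (rr, lower, upper) in REPORTED.items():
    midpoint, se = log_scale_summary(lower, upper)
    print(f"{name:<22} reported RR = {rr:.2f}  CI midpoint = {midpoint:.2f}  SE(log RR) = {se:.3f}")
```

Midpoints that match the reported RR to within rounding suggest the interval and point estimate were transcribed consistently.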

Serum urate level and incident hyperuricemia

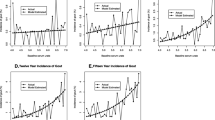

Serum urate levels at baseline (RR for a 1 mg/dL increase =2.33, 95% CI: 1.94-2.80) and at visit 2 (RR for a 1 mg/dL increase =1.92, 95% CI: 1.78-2.07) were associated with the development of hyperuricemia after accounting for demographic and clinical risk factors (Table 2). Adjusting for baseline and follow-up (3 years after baseline) serum urate levels attenuated the associations of the demographic and clinical risk factors for hyperuricemia. Additionally, the 3-year change in serum urate level was associated with an increased risk of hyperuricemia, such that for every 1 mg/dL increase in serum urate, participants were 1.60-times (95% CI: 1.47-1.74) more likely to develop hyperuricemia after accounting for demographic and clinical risk factors. The association of serum urate level did not differ by sex (women RR = 2.49, 95% CI: 1.96-3.17; men RR = 2.41, 95% CI: 1.77-3.27; p-value for interaction = 0.98).

The results did not materially change when we defined incident hyperuricemia as a serum urate level >6.8 mg/dL. Additionally, the results were similar when the analysis was restricted to post-menopausal women (urate level at visit 1: RR = 2.36, 95% CI: 1.65-3.37; urate level at visit 2: RR = 1.96, 95% CI: 1.74-2.20; change in urate level RR = 1.73, 95% CI: 1.50-2.01).

Discussion

The results from this large population-based cohort of middle-aged, African American and White, men and women followed over 9 years help define significant predictors of the onset of hyperuricemia. Although baseline serum urate level was a strong predictor of the incidence of hyperuricemia, it was not the sole factor. Other risk factors, including sex, race and chronic conditions, were important predictors of incident hyperuricemia above and beyond serum urate levels.

Our findings support and extend previous observations concerning risk factors for gout and hyperuricemia. Similar to prior studies, we determined that African Americans have an increased risk of incident hyperuricemia relative to whites. The effect was similar for African American men and women [35]. Congestive heart failure (RR = 1.67, 95% CI: 1.21-2.23) and diuretic use (RR = 3.32, 95% CI: 3.06-3.61) were associated with hyperuricemia among men with a high cardiovascular risk profile in the Multiple Risk Factor Intervention Trial [19]. However, the ARIC cohort is a low cardiovascular disease risk population and we did not identify these factors as predictors of incident hyperuricemia. Our study supports previous ones that identified modifiable risk factors as correlates of serum urate level [15, 17, 36]. In our study, BMI was a strong predictor, although alcohol intake was not independently associated with incident hyperuricemia. In contrast to previous cross-sectional studies, [16] our multivariate analyses did not support an association of dietary factors with incident hyperuricemia in this cohort. One possible explanation for why the dietary factors were not associated with incident hyperuricemia is that by middle age, chronic conditions such as obesity, hypertension, and kidney disease are more likely to influence the development of hyperuricemia than the transient effects of dietary changes. Additionally, our measure of usual dietary intake may not fully capture the variation in purine-rich foods and thus, including these dietary factors may not improve our ability to predict who develops hyperuricemia. Finally, among women, we did not observe an association between menopausal status and incident hyperuricemia, as has been noted in other studies [13]. This finding may reflect the fact that our study population was middle-aged at enrollment and few female participants were pre-menopausal at the time of outcome ascertainment.

In current clinical practice, patients with asymptomatic hyperuricemia are not treated. This practice was strongly recommended in 1978 based on limited evidence at the time that hyperuricemia was associated with hypertension, atherosclerosis, cerebrovascular disease, as well as renal stones, and gouty nephropathy [37]. However, since 1978, many research studies have focused on the consequences of hyperuricemia providing strong evidence that links hyperuricemia directly to not only gout but other poor outcomes such as heart failure, atherosclerosis, endothelial dysfunction, sleep disordered breathing, diabetic nephropathy, metabolic syndrome, acute myocardial infarction, stroke, ischemic heart disease, hypertension, chronic kidney disease, acute kidney injury, and death [3–11, 23, 38–50]. Additionally, there is growing evidence that treatment of asymptomatic hyperuricemia improves health outcomes for patients. Treatment of asymptomatic hyperuricemia is currently being discussed to prevent adverse health outcomes [28, 29].

Treatment of asymptomatic hyperuricemia has been found to improve health outcomes in patients. There is emerging evidence that reductions in serum urate levels have led to improved health including improvements in endothelial dysfunction, inflammation, and kidney function in asymptomatic patients [23–26] and cardiovascular events and mortality among those with prescribed allopurinol [27]. Evidence from clinical trials suggests that treatment of asymptomatic hyperuricemia controls essential hypertension [51]. In obese adolescents with prehypertension, urate lowering therapy reduced systolic BP by 10.2 mm Hg and diastolic BP by 9.0 mm Hg in treated patients compared with a rise of 1.7 mm Hg and 1.6 mm Hg in systolic and diastolic blood pressure for patients on placebo [52]. Therefore, urate lowering therapy reduces systemic vascular resistance [52]. Our findings may help guide clinical decision-making to help identify which patients are at highest risk of developing hyperuricemia based on patient characteristics, chronic conditions, and serum urate levels.

There are a few notable limitations to this study. First, we do not have measures of serum urate level prior to enrollment in ARIC. Therefore, we cannot be certain that incident hyperuricemia over follow-up is truly the first occurrence of hyperuricemia. Next, not all participants had a measure of serum urate level after baseline. There is limited evidence of differential missing plasma urate measures and thus, the potential for survival bias is minimal. There was no serum measure of sex hormones, which have been associated with serum urate level [53]. Therefore we were unable to assess the impact of sex hormones on the incidence of hyperuricemia. Furthermore, there were too few pre- or peri-menopausal women with hyperuricemia (n = 51) to identify risk factors in this subgroup. Additionally, there were no participants who had received kidney transplantation, a strong risk factor for hyperuricemia, and thus our results are not generalizable to this clinical population [54]. The strengths of this study include multiple measures of serum urate, which allows for the analysis of incident hyperuricemia rather than correlates of serum urate level. Furthermore, ARIC is a rich cohort with detailed data collection from African American and white, men and women.

Conclusions

These study findings extend the previous research on correlates of serum urate level to the development of incident hyperuricemia. Our results suggest that serum urate levels alone do not predict incident hyperuricemia; patient characteristics and chronic conditions are also predictors of the development of hyperuricemia. The factors identified as predictors of incident hyperuricemia are routinely collected as part of regular medical care and may be readily incorporated into risk prediction. Future work should establish the clinical effectiveness of predicting and treating incident hyperuricemia to prevent the onset of gout and other adverse outcomes.

References

Campion EW, Glynn RJ, DeLabry LO: Asymptomatic hyperuricemia. Risks and consequences in the Normative Aging Study. Am J Med. 1987, 82 (3): 421-426. 10.1016/0002-9343(87)90441-4.

Krishnan E, Pandya BJ, Chung L, Hariri A, Dabbous O: Hyperuricemia in young adults and risk of insulin resistance, prediabetes, and diabetes: a 15-year follow-up study. Am J Epidemiol. 2012, 176 (2): 108-116. 10.1093/aje/kws002.

Liu WC, Hung CC, Chen SC, Yeh SM, Lin MY, Chiu YW, Kuo MC, Chang JM, Hwang SJ, Chen HC: Association of hyperuricemia with renal outcomes, cardiovascular disease, and mortality. Clin J Am Soc Nephrol. 2012, 7 (4): 541-548. 10.2215/CJN.09420911.

Tomita M, Mizuno S, Yamanaka H, Hosoda Y, Sakuma K, Matuoka Y, Odaka M, Yamaguchi M, Yosida H, Morisawa H: Does hyperuricemia affect mortality? A prospective cohort study of Japanese male workers. J Epidemiol. 2000, 10 (6): 403-409. 10.2188/jea.10.403.

Krishnan E, Hariri A, Dabbous O, Pandya BJ: Hyperuricemia and the echocardiographic measures of myocardial dysfunction. Congest Heart Fail. 2012, 18 (3): 138-143. 10.1111/j.1751-7133.2011.00259.x.

Chiou WK, Huang DH, Wang MH, Lee YJ, Lin JD: Significance and association of serum uric acid (UA) levels with components of metabolic syndrome (MS) in the elderly. Arch Gerontol Geriatr. 2012, 55 (3): 724-728. 10.1016/j.archger.2012.03.004.

Goncalves JP, Oliveira A, Severo M, Santos AC, Lopes C: Cross-sectional and longitudinal associations between serum uric acid and metabolic syndrome. Endocrine. 2012, 41 (3): 450-457. 10.1007/s12020-012-9629-8.

Lapsia V, Johnson RJ, Dass B, Shimada M, Kambhampati G, Ejaz NI, Arif AA, Ejaz AA: Elevated uric acid increases the risk for acute kidney injury. Am J Med. 2012, 125 (3): 302 e309-317-

Chuang SY, Chen JH, Yeh WT, Wu CC, Pan WH: Hyperuricemia and increased risk of ischemic heart disease in a large Chinese cohort. Int J Cardiol. 2012, 154 (3): 316-321. 10.1016/j.ijcard.2011.06.055.

Kim SY, Guevara JP, Kim KM, Choi HK, Heitjan DF, Albert DA: Hyperuricemia and coronary heart disease: a systematic review and meta-analysis. Arthrit Care Res. 2010, 62 (2): 170-180.

Gaffo AL, Jacobs DR, Sijtsma F, Lewis CE, Mikuls TR, Saag KG: Serum urate association with hypertension in young adults: analysis from the Coronary Artery Risk Development in Young Adults cohort. Ann Rheum Dis. 2012, 72 (28): 1321-1327.

Gaffo AL, Jacobs DR, Lewis CE, Mikuls TR, Saag KG: Association between being African-American, serum urate levels and the risk of developing hyperuricemia: findings from the Coronary Artery Risk Development in Young Adults cohort. Arthrit Res Therapy. 2012, 14 (1): R4-10.1186/ar3552.

Hak AE, Choi HK: Menopause, postmenopausal hormone use and serum uric acid levels in US women–the Third National Health and Nutrition Examination Survey. Arthrit Res Therapy. 2008, 10 (5): R116-10.1186/ar2519.

Stockl D, Doring A, Thorand B, Heier M, Belcredi P, Meisinger C: Reproductive factors and serum uric acid levels in females from the general population: the KORA F4 study. PLoS One. 2012, 7 (3): e32668-10.1371/journal.pone.0032668.

Choi HK, Curhan G: Beer, liquor, and wine consumption and serum uric acid level: the Third National Health and Nutrition Examination Survey. Arthritis Rheum. 2004, 51 (6): 1023-1029. 10.1002/art.20821.

Choi HK, Liu S, Curhan G: Intake of purine-rich foods, protein, and dairy products and relationship to serum levels of uric acid: the Third National Health and Nutrition Examination Survey. Arthritis Rheum. 2005, 52 (1): 283-289. 10.1002/art.20761.

Gaffo AL, Roseman JM, Jacobs DR, Lewis CE, Shikany JM, Mikuls TR, Jolly PE, Saag KG: Serum urate and its relationship with alcoholic beverage intake in men and women: findings from the Coronary Artery Risk Development in Young Adults (CARDIA) cohort. Ann Rheum Dis. 2010, 69 (11): 1965-1970. 10.1136/ard.2010.129429.

Thompson FE, McNeel TS, Dowling EC, Midthune D, Morrissette M, Zeruto CA: Interrelationships of added sugars intake, socioeconomic status, and race/ethnicity in adults in the United States: National Health Interview Survey, 2005. J Am Diet Assoc. 2009, 109 (8): 1376-1383. 10.1016/j.jada.2009.05.002.

Misra D, Zhu Y, Zhang Y, Choi HK: The independent impact of congestive heart failure status and diuretic use on serum uric acid among men with a high cardiovascular risk profile: a prospective longitudinal study. Semin Arthritis Rheu. 2011, 41 (3): 471-476. 10.1016/j.semarthrit.2011.02.002.

Cohen E, Krause I, Fraser A, Goldberg E, Garty M: Hyperuricemia and metabolic syndrome: lessons from a large cohort from Israel. Isr Med Assoc J. 2012, 14 (11): 676-680.

Lee JM, Kim HC, Cho HM, Oh SM, Choi DP, Suh I: Association between serum uric acid level and metabolic syndrome. J Prev Med Public Health. 2012, 45 (3): 181-187. 10.3961/jpmph.2012.45.3.181.

Zhu Y, Pandya BJ, Choi HK: Comorbidities of gout and hyperuricemia in the US general population: NHANES 2007–2008. Am J Med. 2012, 125 (7): 679-687. 10.1016/j.amjmed.2011.09.033. e671

Yelken B, Caliskan Y, Gorgulu N, Altun I, Yilmaz A, Yazici H, Oflaz H, Yildiz A: Reduction of uric acid levels with allopurinol treatment improves endothelial function in patients with chronic kidney disease. Clin Nephrol. 2012, 77 (4): 275-282. 10.5414/CN107352.

Melendez-Ramirez G, Perez-Mendez O, Lopez-Osorio C, Kuri-Alfaro J, Espinola-Zavaleta N: Effect of the treatment with allopurinol on the endothelial function in patients with hyperuricemia. Endocr Res. 2012, 37 (1): 1-6. 10.3109/07435800.2011.566235.

Kanbay M, Huddam B, Azak A, Solak Y, Kadioglu GK, Kirbas I, Duranay M, Covic A, Johnson RJ: A randomized study of allopurinol on endothelial function and estimated glomular filtration rate in asymptomatic hyperuricemic subjects with normal renal function. Clin J Am Soc Nephrol. 2011, 6 (8): 1887-1894. 10.2215/CJN.11451210.

Goicoechea M, de Vinuesa SG, Verdalles U, Ruiz-Caro C, Ampuero J, Rincon A, Arroyo D, Luno J: Effect of allopurinol in chronic kidney disease progression and cardiovascular risk. Clin J Am Soc Nephrol. 2010, 5 (8): 1388-1393. 10.2215/CJN.01580210.

Wei L, Mackenzie IS, Chen Y, Struthers AD, MacDonald TM: Impact of allopurinol use on urate concentration and cardiovascular outcome. Br J Clin Pharmacol. 2011, 71 (4): 600-607. 10.1111/j.1365-2125.2010.03887.x.

Gaffo AL, Saag KG: Drug treatment of hyperuricemia to prevent cardiovascular outcomes: are we there yet?. Am J Cardiovasc Drugs. 2012, 12 (1): 1-6. 10.2165/11594580-000000000-00000.

Nakaya I, Namikoshi T, Tsuruta Y, Nakata T, Shibagaki Y, Onishi Y, Fukuhara S: Management of asymptomatic hyperuricaemia in patients with chronic kidney disease by Japanese nephrologists: a questionnaire survey. Nephrology (Carlton). 2011, 16 (5): 518-521. 10.1111/j.1440-1797.2011.01446.x.

The ARIC investigators: The Atherosclerosis Risk in Communities (ARIC) Study: design and objectives. Am J Epidemiol. 1989, 129 (4): 687-702.

Iribarren C, Folsom AR, Eckfeldt JH, McGovern PG, Nieto FJ: Correlates of uric acid and its association with asymptomatic carotid atherosclerosis: the ARIC Study. Atherosclerosis Risk in Communities. Ann Epidemiol. 1996, 6 (4): 331-340. 10.1016/S1047-2797(96)00052-X.

Eckfeldt JH, Chambless LE, Shen YL: Short-term, within-person variability in clinical chemistry test results. Experience from the Atherosclerosis Risk in Communities Study. Arch Pathol Lab Med. 1994, 118 (5): 496-500.

Levey AS, Stevens LA, Schmid CH, Zhang YL, Castro AF, Feldman HI, Kusek JW, Eggers P, Van Lente F, Greene T: A new equation to estimate glomerular filtration rate. Ann Intern Med. 2009, 150 (9): 604-612. 10.7326/0003-4819-150-9-200905050-00006.

Zou G: A modified poisson regression approach to prospective studies with binary data. Am J Epidemiol. 2004, 159 (7): 702-706. 10.1093/aje/kwh090.

Gaffo AL, Jacobs DR, Lewis CE, Mikuls TR, Saag KG: Association between being African-American, serum urate levels, and the risk of developing hyperuricemia: findings from the coronary artery risk development in young adults (CARDIA) cohort. Arthritis Res Ther. 2012, 14 (1): R4-10.1186/ar3552.

Shiraishi H, Une H: The effect of the interaction between obesity and drinking on hyperuricemia in Japanese male office workers. J Epidemiol. 2009, 19 (1): 12-16. 10.2188/jea.JE20080016.

Liang MH, Fries JF: Asymptomatic hyperuricemia: the case for conservative management. Ann Intern Med. 1978, 88 (5): 666-670. 10.7326/0003-4819-88-5-666.

Reunanen A, Takkunen H, Knekt P, Aromaa A: Hyperuricemia as a risk factor for cardiovascular mortality. Acta Med Scand Suppl. 1982, 668: 49-59.

Zafrir B, Goren Y, Paz H, Wolff R, Salman N, Merhavi D, Lavi I, Lewis BS, Amir O: Risk score model for predicting mortality in advanced heart failure patients followed in a heart failure clinic. Congest Heart Fail. 2012, 18 (5): 254-261. 10.1111/j.1751-7133.2012.00286.x.

Wiener RC, Shankar A: Association between Serum Uric Acid Levels and Sleep Variables: Results from the National Health and Nutrition Survey 2005–2008. Int J Inflam. 2012, 2012: 363054-

Kohagura K, Kochi M, Miyagi T, Kinjyo T, Maehara Y, Nagahama K, Sakima A, Iseki K, Ohya Y: An association between uric acid levels and renal arteriolopathy in chronic kidney disease: a biopsy-based study. Hypertens Res. 2012, 36 (1): 43-49.

Ito S, Naritomi H, Ogihara T, Shimada K, Shimamoto K, Tanaka H, Yoshiike N: Impact of serum uric acid on renal function and cardiovascular events in hypertensive patients treated with losartan. Hypertens Res. 2012, 35 (8): 867-873. 10.1038/hr.2012.59.

Takayama S, Kawamoto R, Kusunoki T, Abe M, Onji M: Uric acid is an independent risk factor for carotid atherosclerosis in a Japanese elderly population without metabolic syndrome. Cardiovasc Diabetol. 2012, 11: 2-10.1186/1475-2840-11-2.

Neri L, Rocca Rey LA, Lentine KL, Hinyard LJ, Pinsky B, Xiao H, Dukes J, Schnitzler MA: Joint association of hyperuricemia and reduced GFR on cardiovascular morbidity: a historical cohort study based on laboratory and claims data from a national insurance provider. Am J Kidney Dis. 2011, 58 (3): 398-408. 10.1053/j.ajkd.2011.04.025.

Seki S, Tsutsui K, Fujii T, Yamazaki K, Anzawa R, Yoshimura M: Association of uric acid with risk factors for chronic kidney disease and metabolic syndrome in patients with essential hypertension. Clin Exp Hypertens. 2010, 32 (5): 270-277. 10.3109/10641960903265220.

Wen CP, David Cheng TY, Chan HT, Tsai MK, Chung WS, Tsai SP, Wahlqvist ML, Yang YC, Wu SB, Chiang PH: Is high serum uric acid a risk marker or a target for treatment? Examination of its independent effect in a large cohort with low cardiovascular risk. Am J Kidney Dis. 2010, 56 (2): 273-288. 10.1053/j.ajkd.2010.01.024.

Ho WJ, Tsai WP, Yu KH, Tsay PK, Wang CL, Hsu TS, Kuo CT: Association between endothelial dysfunction and hyperuricaemia. Rheumatology (Oxford). 2010, 49 (10): 1929-1934. 10.1093/rheumatology/keq184.

Hamaguchi S, Furumoto T, Tsuchihashi-Makaya M, Goto K, Goto D, Yokota T, Kinugawa S, Yokoshiki H, Takeshita A, Tsutsui H: Hyperuricemia predicts adverse outcomes in patients with heart failure. Int J Cardiol. 2011, 151 (2): 143-147. 10.1016/j.ijcard.2010.05.002.

Shah A, Keenan RT: Gout, hyperuricemia, and the risk of cardiovascular disease: cause and effect? Curr Rheumatol Rep. 2010, 12 (2): 118-124. 10.1007/s11926-010-0084-3.

Kuo CF, Yu KH, Luo SF, Ko YS, Wen MS, Lin YS, Hung KC, Chen CC, Lin CM, Hwang JS: Role of uric acid in the link between arterial stiffness and cardiac hypertrophy: a cross-sectional study. Rheumatology (Oxford). 2010, 49 (6): 1189-1196. 10.1093/rheumatology/keq095.

Feig DI, Soletsky B, Johnson RJ: Effect of allopurinol on blood pressure of adolescents with newly diagnosed essential hypertension: a randomized trial. JAMA. 2008, 300 (8): 924-932. 10.1001/jama.300.8.924.

Soletsky B, Feig DI: Uric Acid Reduction Rectifies Prehypertension in Obese Adolescents. Hypertension. 2012, 60 (5): 1148-1156. 10.1161/HYPERTENSIONAHA.112.196980.

Nishida Y, Akaoka I, Nishizawa T: Effect of sex hormones on uric acid metabolism in rats. Experientia. 1975, 31 (10): 1134-1135. 10.1007/BF02326752.

Numakura K, Satoh S, Tsuchiya N, Saito M, Maita S, Obara T, Tsuruta H, Inoue T, Narita S, Horikawa Y: Hyperuricemia at 1 year after renal transplantation, its prevalence, associated factors, and graft survival. Transplantation. 2012, 94 (2): 145-151. 10.1097/TP.0b013e318254391b.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2474/14/347/prepub

Acknowledgements

The authors thank the staff and participants of the ARIC study for their important contributions. Additionally, we thank Takeda Pharmaceuticals U.S.A., Inc. for the grant that funded this project. Takeda Pharmaceuticals U.S.A., Inc. was not involved in the writing, study design, analysis, or interpretation of the data.

Funding

This work was supported by Takeda Pharmaceuticals North America, Inc. The Atherosclerosis Risk in Communities Study is carried out as a collaborative study supported by National Heart, Lung, and Blood Institute contracts (HHSN268201100005C, HHSN268201100006C, HHSN268201100007C, HHSN268201100008C, HHSN268201100009C, HHSN268201100010C, HHSN268201100011C, and HHSN268201100012C). Dr. McAdams-DeMarco was jointly funded by the Arthritis National Research Foundation and the American Federation for Aging Research.

Additional information

Competing interest

The authors declare that they have no competing interests.

Authors’ contributions

MMD made substantial contributions to conception and design, acquisition of data, analysis and interpretation of data; and was involved in drafting the manuscript and revising it critically for important intellectual content. JM made substantial contributions to conception and design, interpretation of data, and was involved in revising the manuscript critically for important intellectual content. AL made substantial contributions to design, acquisition of data, analysis and interpretation of data, and was involved in drafting the manuscript and revising it critically for important intellectual content. JC made substantial contributions to conception and design, acquisition of data, analysis and interpretation of data, and was involved in drafting the manuscript or revising it critically for important intellectual content. AB made substantial contributions to conception and design, analysis and interpretation of data, and was involved in drafting the manuscript and revising it critically for important intellectual content. All authors gave final approval of the version to be published.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an open access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

McAdams-DeMarco, M.A., Law, A., Maynard, J.W. et al. Risk factors for incident hyperuricemia during mid-adulthood in African American and White men and women enrolled in the ARIC cohort study. BMC Musculoskelet Disord 14, 347 (2013). https://doi.org/10.1186/1471-2474-14-347

DOI: https://doi.org/10.1186/1471-2474-14-347