Abstract

Background

Bloodstream Infections (BSIs) in neutropenic patients often cause considerable morbidity and mortality. Therefore, the surveillance and early identification of patients at high risk for developing BSIs might be useful for the development of preventive measures. The aim of the current study was to assess the predictive power of three scoring systems: Infection Probability Score (IPS), APACHE II and KARNOFSKY score for the onset of Bloodstream Infections in hematology-oncology patients.

Methods

A total of 102 patients who were hospitalized for more than 48 hours in a hematology-oncology department in Athens, Greece, between April 1st and October 31st 2007 were included in the study. Data were collected using an anonymous standardized recording form. Source materials included medical records, temperature charts, information from nursing and medical staff, and results of microbiological testing. Patients were followed daily until hospital discharge or death.

Results

Among the 102 patients, Bloodstream Infections occurred in 17 (16.7%) patients. The incidence density of Bloodstream Infections was 7.74 per 1,000 patient-days or 21.99 per 1,000 patient-days at risk. Compared with patients without a Bloodstream Infection, patients who developed one were mainly female (p = 0.004), had a twofold longer mean length of hospital stay (p < 0.001) and a fourfold longer mean duration of neutropenia (p < 0.001), had neutrophil counts < 500/μl (p < 0.001), suffered mainly from acute myeloid leukemia (p < 0.001), had been exposed to antibiotics (p = 0.045) and chemotherapy (p = 0.023), and had undergone surgery (p = 0.048) and had a Hickman catheter (p = 0.025). The best cut-off value of IPS for the prediction of a Bloodstream Infection was 10, with a sensitivity of 75% and a specificity of 70.9%.

Conclusion

Among the three prognostic scoring systems, the Infection Probability Score had the best sensitivity in predicting Bloodstream Infections.

Background

Bloodstream infections are an important cause of morbidity and mortality in immunocompromised populations [1]. Patients with hematologic malignancies such as acute leukemia, malignant lymphoma and multiple myeloma are at increased risk of healthcare-associated bloodstream infections because intensive cytotoxic chemotherapy often renders them pancytopenic for long periods of time [2]. Several clinical trials have shown improved survival with immediate empirical broad-spectrum antimicrobial therapy at the onset of fever, which has become standard practice [3, 4]. Despite such an immediate approach, Bloodstream Infections (BSIs) in neutropenic patients still cause considerable morbidity and mortality. The high mortality is partly related to BSIs caused by microorganisms resistant to broad-spectrum antibiotics [5].

Laboratory-based surveillance data on bloodstream infections in the hematology department should be reported periodically and used for longitudinal assessment [6]. Such data can then inform decisions about screening, prophylaxis and empirical therapy. If clinical data are also collected for surveillance purposes (e.g., presence of a CVC or risk factors for the development of bacteraemia), an understanding of the required resources and prevention strategies should also become available [7]. In patients with suspected bloodstream infections and new skin lesions, urgent biopsy of necrotizing skin lesions should be performed to aid in the diagnosis of disseminated mould infection. Non-culture-based diagnostic techniques have been used for the early detection of fungaemia in hematology patients but are not recommended for routine use in clinical practice [8]. In addition, the benefit of prophylaxis in reducing disease incidence must be weighed against the potential for selecting resistant isolates [9]. Implementation of infection control practices is required, particularly to control the transmission of multi-resistant organisms [10]. Surveillance may be justified during hospital outbreaks or periods of increasing endemicity, to facilitate isolation and barrier precautions for patients who are colonized or infected with multi-resistant organisms. For clusters or outbreaks of hospital infections, appropriate investigation, including environmental sampling, clinical screening and risk factor analysis, may be required [11].

In Greece, data on BSIs occurring in haematology/oncology units are sparse. Therefore, the surveillance and early identification of patients at high risk for developing BSIs might be useful for the development of preventive measures. The objective of this study was to assess the predictive power of three scoring systems, the Infection Probability Score (IPS), APACHE II and the Karnofsky score, for the onset of BSIs in hematology/oncology patients, which may provide a basis for designing more effective guidelines for the prevention and treatment of these infections.

Methods

A retrospective review of all records over a seven-month period (1/4/2007-30/10/2007) was conducted in the hematology-oncology unit of a general hospital in Athens, Greece. All patients who were hospitalized for more than 48 hours in the unit were included in the analysis. Surveillance of nosocomial BSI was performed by the physician in charge of the patient and a specifically trained nurse. It included daily clinical examination of the patients and daily review of the patients' medical records, Kardex data, temperature charts, information from the nursing and medical staff, and all microbiological data from positive blood cultures.

Data were collected using an anonymous standardized case-record form. Patient data consisted of (i) demographic characteristics: age, length of stay and outcome (survivors or non-survivors); and (ii) risk factors: underlying malignancy; underlying diseases (infection on admission, diabetes mellitus); short- or long-term presence of an indwelling central venous catheter (CVC); total parenteral nutrition; duration of neutropenia; exposure to chemotherapy, antibiotics or corticosteroid therapy; and prior surgical procedure.

The diagnosis and source of bloodstream infection were determined by the physician in charge and a specifically trained nurse, based on objective clinical evidence, microbiological data, clinical judgment and the definitions proposed by the Centers for Disease Control and Prevention (CDC) [12]. Blood cultures were performed in aerobic and anaerobic bottles and incubated in an automated system. Bacteria were identified using standard microbiological methods. Antimicrobial therapy was prescribed by the physician in charge of the patient and adapted according to susceptibility testing. Patients were followed daily for BSI from admission until discharge or death, and BSI was dated to the day of the first positive blood culture.

Ethical considerations

The research protocol was approved by the Ethics Committee of the hospital in which the study was conducted. The anonymity and confidentiality of the patients' data were ensured by the research team.

Case definitions

Neutropenia was defined as an absolute blood neutrophil count of less than 500/μl. A laboratory-confirmed bloodstream infection (LCBI) must meet at least one of the following criteria:

1. The patient has a recognized pathogen cultured from one or more blood cultures, and the organism cultured from blood is not related to an infection at another site.

2. The patient has at least one of the following signs or symptoms: fever (≥38°C), chills or hypotension; the signs and symptoms and positive laboratory results are not related to an infection at another site; and a common skin contaminant (i.e., diphtheroids [Corynebacterium spp.], Bacillus spp. [not B. anthracis], Propionibacterium spp., coagulase-negative staphylococci [including S. epidermidis], viridans group streptococci, Aerococcus spp., Micrococcus spp.) is cultured from two or more blood cultures drawn on separate occasions.

The incidence rate (incidence density) was defined as the number of new cases of BSI divided by the total number of patient-days, or alternatively by the number of patient-days at risk (neutropenic days), in the population studied, expressed per 1,000 patient-days.
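The two rates defined above differ only in their denominators. The minimal sketch below computes the incidence density and the cumulative incidence (proportion of patients affected). It assumes one BSI episode per affected patient, so that the 17 patients with BSI are also 17 new cases, and reuses the overall counts reported in the Results (102 patients, 2,197 patient-days). The number of neutropenic days is not reported, so the at-risk rate is not recomputed here.

```python
def incidence_density(new_cases, patient_days, per=1000.0):
    """New cases per `per` patient-days (incidence density)."""
    if patient_days <= 0:
        raise ValueError("patient_days must be positive")
    return new_cases / patient_days * per


def cumulative_incidence(new_cases, patients):
    """Percentage of patients who developed the event (assumes one case per patient)."""
    if patients <= 0:
        raise ValueError("patients must be positive")
    return 100.0 * new_cases / patients


rate = incidence_density(17, 2197)    # per 1,000 patient-days
risk = cumulative_incidence(17, 102)  # percent of patients
print(f"{rate:.2f} per 1,000 patient-days; cumulative incidence {risk:.1f}%")
# 7.74 per 1,000 patient-days; cumulative incidence 16.7%
```

The same `incidence_density` call with the number of neutropenic days as the denominator would give the rate per 1,000 patient-days at risk.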

Instruments

The IPS is a simple scoring system that assesses the probability of infection in critically ill patients. It was developed and validated in the ICU [13]. IPS uses six simple, commonly measured variables and ranges from 0 to 26 points (0-2 for temperature, 0-12 for heart rate, 0-1 for respiratory rate, 0-3 for white blood cell count, 0-6 for C-reactive protein and 0-2 for the Sequential Organ Failure Assessment (SOFA) score) [14].
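Because the IPS total is simply the sum of six component scores, its structure can be sketched as a small validation-and-sum helper. This is a hypothetical helper, not the published scoring procedure: it assumes the component points have already been assigned from the IPS table in [13] (whose variable thresholds are not reproduced in this article) and only enforces the per-component ranges stated above.

```python
# Hypothetical helper: component names are illustrative; ranges are those
# stated in the text. Variable thresholds from the IPS table are NOT encoded.
IPS_COMPONENT_RANGES = {
    "temperature": (0, 2),
    "heart_rate": (0, 12),
    "respiratory_rate": (0, 1),
    "white_blood_cell_count": (0, 3),
    "c_reactive_protein": (0, 6),
    "sofa_score": (0, 2),
}


def ips_total(points):
    """Sum the six IPS component points after checking each stated range."""
    expected = set(IPS_COMPONENT_RANGES)
    given = set(points)
    if given != expected:
        raise ValueError(f"expected components {sorted(expected)}, got {sorted(given)}")
    total = 0
    for name, (low, high) in IPS_COMPONENT_RANGES.items():
        value = points[name]
        if not low <= value <= high:
            raise ValueError(f"{name}={value} is outside {low}-{high}")
        total += value
    return total


# Upper bound of the scale: 2 + 12 + 1 + 3 + 6 + 2 = 26 points.
max_points = {name: high for name, (_, high) in IPS_COMPONENT_RANGES.items()}
print(ips_total(max_points))  # 26
```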

APACHE II is a severity-of-disease classification system that uses a point score based on the initial values of 12 routine physiological measurements, age and previous health status to provide a general measure of disease severity. The maximum APACHE II score is 71, the sum of the acute physiology score, the age score and the chronic health evaluation [15].
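The composition of the APACHE II total can be sketched as follows. The acute physiology score (0-60) is taken as an input because its 12 variable-level point tables are not reproduced here; the age bands (0, 2, 3, 5 or 6 points) and chronic health points (2 for elective postoperative, 5 for nonoperative or emergency postoperative patients with severe organ insufficiency or immunocompromise) follow the published table [15]. The function names are hypothetical and the sketch is illustrative, not a validated calculator.

```python
def apache_ii_age_points(age_years):
    """Age points from the APACHE II table (Knaus et al., 1985)."""
    if age_years <= 44:
        return 0
    if age_years <= 54:
        return 2
    if age_years <= 64:
        return 3
    if age_years <= 74:
        return 5
    return 6


def apache_ii_total(acute_physiology, age_years, chronic_health):
    """APACHE II = acute physiology (0-60) + age (0-6) + chronic health (0, 2 or 5)."""
    if not 0 <= acute_physiology <= 60:
        raise ValueError("acute physiology score must lie in 0-60")
    if chronic_health not in (0, 2, 5):
        raise ValueError("chronic health points must be 0, 2 or 5")
    return acute_physiology + apache_ii_age_points(age_years) + chronic_health


print(apache_ii_total(60, 80, 5))  # 71, the maximum quoted in the text
```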

The KARNOFSKY Performance Scale Index allows patients to be classified according to their functional impairment. The lower the KARNOFSKY score, the worse the survival for most serious illnesses [16]. The KARNOFSKY score runs from 100 to 0, where 100 is "perfect" health and 0 is death. Although the score has been described in intervals of 10, a practitioner may choose intermediate values if he or she feels that a patient's situation lies between two marks [17]. Table 1 displays the score calculation of the three scales.

Data analysis

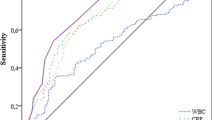

All items were coded and scored, and the completed forms were included in the data analysis set. SPSS-17 (SPSS, Chicago, IL, US) was used to analyze the data. Continuous variables were compared using Student's t-test. The χ² test or Fisher's exact test was used to compare categorical variables. Values are expressed as mean ± SD or median for continuous variables and as percentages of the group from which they were derived for categorical variables. ROC curve calculations were performed using MedCalc statistical software. Receiver operating characteristic (ROC) curves compared the sensitivity and specificity of the three scoring systems (APACHE II, KARNOFSKY and IPS) over a wide range of cut-off values for predicting the onset of BSI. The area under the ROC curve (AUC) is a convenient way of comparing classifiers: a random classifier has an area of 0.5, while an ideal one has an area of 1. The ROC curve was used to choose the best operating point. All p values < 0.05 were considered statistically significant unless otherwise stated.
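The AUC has a direct probabilistic reading: it equals the probability that a randomly chosen patient with BSI has a higher score than a randomly chosen patient without BSI, with ties counted as one half (the Mann-Whitney formulation). The minimal sketch below computes it by comparing every pair of patients. The scores are invented for illustration and are not study data; MedCalc's own computation is not reproduced here.

```python
from itertools import product


def roc_auc(scores_pos, scores_neg):
    """AUC as P(random positive scores higher than random negative), ties = 1/2."""
    if not scores_pos or not scores_neg:
        raise ValueError("need at least one positive and one negative case")
    wins = 0.0
    for p, n in product(scores_pos, scores_neg):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Invented scores, for illustration only (not study data).
bsi = [12, 15, 9]
no_bsi = [5, 8, 11, 3]
print(round(roc_auc(bsi, no_bsi), 3))  # 0.917 (11 of 12 pairs ranked correctly)
```

A score that ranks patients at random gives 0.5 and a perfect one gives 1, matching the description above. The pairwise loop is quadratic in sample size, which is ample for a cohort of this size.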

Results

Over the seven-month period, a total of 102 patients with 2,197 patient-days were admitted to the hematology/oncology unit in Athens, Greece, and were prospectively evaluated. Among the participants, 53 (52%) were male and 49 (48%) were female. The baseline clinical characteristics of the patients are shown in Table 1. Patients had a mean age of 53.30 ± 18.59 years (median 56 years, range 17-85 years). The mean duration of neutropenia was significantly longer in patients with BSI than in patients without BSI (21.06 ± 8.33 vs 5.07 ± 8.32 days, p < 0.001). The largest group of patients (33.3%) had acute myeloid leukemia (Tables 1 and 2).

As shown in Table 2, the mean APACHE II score did not differ significantly (p = 0.876) between patients with and without BSI. In contrast, the IPS score was significantly higher (p = 0.010) in patients with BSI (11.06 ± 5.10) than in those without BSI (6.59 ± 6.41).
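Student's t-test, named in the data analysis, compares two means using a pooled standard deviation. The sketch below computes the t statistic from summary statistics such as the IPS means and SDs above. The group sizes (17 with BSI, 85 without) are an assumption: the text does not state how many patients in each group had an IPS recorded, so this illustrates the calculation rather than reproducing the reported p value.

```python
import math


def pooled_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Student's (pooled-variance) two-sample t statistic and its degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / se, df


# IPS summaries from the Results; group sizes 17 and 85 are ASSUMED.
t, df = pooled_t_from_summary(11.06, 5.10, 17, 6.59, 6.41, 85)
print(f"t = {t:.2f} on {df} df")
```

The p value follows from referring t to a t distribution with `df` degrees of freedom; with SciPy installed, `scipy.stats.ttest_ind_from_stats` performs the same pooled-variance calculation from summary statistics.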

Compared with patients without BSI, patients who developed a BSI were mainly female (p = 0.004), had a twofold longer mean length of hospital stay (p < 0.001) and a fourfold longer mean duration of neutropenia (p < 0.001), had neutrophil counts < 500/μl (p < 0.001), suffered mainly from acute myeloid leukemia (p < 0.001), had been exposed to antibiotics (p = 0.045) and chemotherapy (p = 0.023), and had undergone surgery (p = 0.048) and had a Hickman catheter (p = 0.025) (Table 2).

The cumulative incidence of BSI was 16.7%, and the incidence rate of BSI was 7.74 per 1,000 patient-days or 21.99 per 1,000 patient-days at risk. The most common isolates were Gram-negative (44.4%) and Gram-positive (44.4%) microorganisms.

Determination of the best cut-off point for the prediction of BSI onset

Table 3 shows the predictive values of the three scoring systems calculated at the best cut-off point according to the ROC curve. The IPS predicted the onset of a BSI better than the other two scoring systems: its discriminative power (AUC) was acceptable, whereas that of APACHE II and KARNOFSKY was poor. The best cut-off value of IPS for the prediction of a BSI was 10, with a sensitivity of 75% and a specificity of 70.9% (Table 3).
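The text reports a "best" cut-off without naming the selection criterion. A common choice, and the one assumed in this sketch, is Youden's J = sensitivity + specificity - 1. The function scans every observed score as a candidate cut-off, calls a score at or above the cut-off positive (consistent with patients scoring below 10 being considered unlikely to develop a BSI), and returns the cut-off with the highest J. The scores are invented for illustration and are not study data.

```python
def sens_spec(scores_pos, scores_neg, cutoff):
    """Sensitivity and specificity when a score >= cutoff is called positive."""
    tp = sum(s >= cutoff for s in scores_pos)
    tn = sum(s < cutoff for s in scores_neg)
    return tp / len(scores_pos), tn / len(scores_neg)


def best_cutoff_youden(scores_pos, scores_neg):
    """Return (cutoff, sensitivity, specificity) maximizing J = sens + spec - 1."""
    best = None
    for cutoff in sorted(set(scores_pos) | set(scores_neg)):
        sens, spec = sens_spec(scores_pos, scores_neg, cutoff)
        j = sens + spec - 1
        if best is None or j > best[0]:  # ties keep the lowest cut-off
            best = (j, cutoff, sens, spec)
    _, cutoff, sens, spec = best
    return cutoff, sens, spec


# Invented scores, for illustration only (not study data).
bsi = [12, 15, 9, 10]
no_bsi = [5, 8, 11, 3, 7]
print(best_cutoff_youden(bsi, no_bsi))  # (9, 1.0, 0.8)
```

Other criteria (for example, fixing a minimum sensitivity) can select a different operating point from the same ROC curve.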

Logistic regression analysis revealed that IPS was the only significant predictor of BSI onset (OR = 5.60; 95% CI = 1.66-18.88; p = 0.003), compared with APACHE II (OR = 0.71; 95% CI = 0.22-2.28; p = 0.546) and KARNOFSKY (OR = 0.39; 95% CI = 0.13-1.18; p = 0.121).
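An odds ratio from logistic regression is the exponentiated coefficient, OR = exp(β). Assuming the reported intervals are Wald intervals, exp(β ± 1.96·SE), which the text does not state, the OR is the geometric mean of its two CI bounds; this gives a quick consistency check on reported results. The sketch converts in both directions and applies the check to the reported IPS interval.

```python
import math

# Two-sided 95% standard normal quantile.
Z_95 = 1.959963984540054


def odds_ratio_ci(beta, se, z=Z_95):
    """Odds ratio and Wald confidence interval from a logistic coefficient."""
    return math.exp(beta), math.exp(beta - z * se), math.exp(beta + z * se)


def beta_se_from_ci(lower, upper, z=Z_95):
    """Recover the coefficient and its standard error from a reported Wald CI."""
    beta = (math.log(lower) + math.log(upper)) / 2
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return beta, se


# Reported IPS interval: 95% CI 1.66-18.88.
beta, se = beta_se_from_ci(1.66, 18.88)
odds_ratio, lower, upper = odds_ratio_ci(beta, se)
print(f"OR = {odds_ratio:.2f} (95% CI {lower:.2f}-{upper:.2f})")
# OR = 5.60 (95% CI 1.66-18.88)
```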

Discussion and Conclusion

A retrospective study was conducted to examine the predictive power of three different scoring systems for BSI in a hematology unit. The literature on the predictive power of IPS in hematology/oncology patients is sparse. Most information about the epidemiology and outcome of BSIs is extrapolated from studies of hospitalized patients in general or university hospitals [18] or, more specifically, from studies of neutropenic cancer patients [19]. In our study, the incidence density of BSIs (21.99 per 1,000 patient-days at risk) was higher than that reported previously (11.2 per 1,000 patient-days at risk) [20]. Furthermore, the crude mortality in the current study (5.9%) was lower than that reported elsewhere [21]. One of the major problems with such comparisons is the difficulty of interpretation caused by variability in study designs and in efficacy or outcome measures [22]. Moreover, many patients with neutropenia have complex medical profiles, leading to increased physician concern and a tendency to modify regimens during the treatment of febrile episodes. Such modifications are generally made with inadequate information or without a definitive diagnosis. It has been estimated that empiric regimens are modified in 40% to 60% of cases [23].

We also found that the most common isolates were Gram-negative (44.4%) and Gram-positive (44.4%) microorganisms, findings very similar to those reported in other studies [24]. Notably, a considerable shift in the spectrum of pathogens isolated from blood cultures of febrile neutropenic cancer patients in the USA has emerged since 1995, with Gram-positive cocci increasing from 62% to 76% of isolates [21], Gram-negative bacilli declining from 21.5% to 14% and fungi declining from 15% to 8%. Consecutive clinical trials conducted by the European Organization for Research and Treatment of Cancer (EORTC) between 1973 and 2000 also showed a shift toward the predominance of Gram-positive bacteria; however, during the most recent period (1998-2000), Gram-positive and Gram-negative isolates were involved to the same extent [24].

A number of epidemiological factors may have contributed to this changing pattern. First, this period corresponds to the widespread uptake of empiric antibiotic therapy for febrile neutropenia (especially fluoroquinolones and third/fourth-generation cephalosporins), with resulting selection pressure for Gram-positive bacteria [25]. Second, more intensive treatments causing more severe oral mucositis may provide a frequent portal of entry for Gram-positive organisms. Third, the increased use of medium- to long-term venous access devices in patients with malignancy may increase Gram-positive bloodstream infections (particularly staphylococcal infections) [26]. Finally, local infection control practices may affect the number of infections and the spectrum of causative organisms. Further analysis of the data of patients with BSIs showed that 53% had received third/fourth-generation cephalosporins and 35.3% quinolones, and that they had central venous (11%) and Hickman catheters. Our findings highlight that ongoing cooperation between haematologists, oncologists and infectious disease specialists is important to detect epidemiological trends, which can be used to design empirical antibiotic regimens and guide infection control policies [27].

The mean IPS score was higher in patients with a BSI. This finding is consistent with two previous studies supporting the discriminative adequacy of the tool [13, 28], both of which confirmed the predictive power of the IPS for the onset of a healthcare-associated infection (HCAI). The current study extends the use of IPS to the prediction of BSI onset. Additionally, the IPS has been shown to separate infected from non-infected patients as early as the first day of evaluation [28]. This would enable the therapeutic team to detect infected patients early and to avoid administering unnecessary antibiotics to patients who are unlikely to be infected. Further studies with a larger sample would be useful to provide more evidence for this argument.

Among the three prognostic scoring systems, IPS had the best sensitivity in predicting BSI. Notably, IPS has not previously been studied as a risk factor for BSIs in cancer patients. Previous studies have used IPS to predict the need for and duration of mechanical ventilation [29] and to predict HCAI in ICU patients [28]. In the study by Martini et al. [28], the cut-off point for the prediction of an HCAI was 14, with a positive predictive value of 53.6% and a negative predictive value of 89.5%. In our study, the cut-off point for the prediction of a BSI was 10, with a positive predictive value of 37% and a negative predictive value of 92.5%. Patients were considered unlikely to develop a BSI if they had a score < 10 points. An IPS score < 10 therefore represents an argument against BSI onset in hematology-oncology patients, and the IPS should be considered a complementary tool for the early detection of a BSI. The best cut-off value of 10 in the present study contrasts with the value of 14 previously reported by Martini et al. [28] and Peres-Bota et al. [13]. One possible explanation is that the present study was conducted in hematology/oncology patients, whose nosological profile differs from that of ICU patients. Furthermore, Martini et al. [28] used the cut-off value of 14 a priori without testing its sensitivity and specificity, whereas our study used ROC analysis to find the best cut-off value. Further research is needed to compare IPS scores across different categories of patients. A multicenter study would be useful to test the reliability of the tool in patients with similar pathology.
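Positive and negative predictive values depend not only on sensitivity and specificity but also on how common the outcome is. The sketch below applies Bayes' theorem to derive PPV and NPV from sensitivity, specificity and prevalence. It is a general illustration with invented inputs; the predictive values quoted above were reported from the study itself and are not recomputed here.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """PPV and NPV implied by Bayes' theorem for a given prevalence."""
    for name, value in (("sensitivity", sensitivity),
                        ("specificity", specificity),
                        ("prevalence", prevalence)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1]")
    tp = sensitivity * prevalence              # true positives (as fractions)
    fp = (1 - specificity) * (1 - prevalence)  # false positives
    tn = specificity * (1 - prevalence)        # true negatives
    fn = (1 - sensitivity) * prevalence        # false negatives
    return tp / (tp + fp), tn / (tn + fn)


# Invented inputs, for illustration only.
ppv, npv = predictive_values(0.8, 0.9, 0.5)
print(f"PPV = {ppv:.3f}, NPV = {npv:.3f}")  # PPV = 0.889, NPV = 0.818
```

At a fixed cut-off, lowering the prevalence lowers the PPV and raises the NPV, which is why a score below the cut-off can be more informative for ruling out an uncommon outcome than a score above it is for ruling it in.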

The AUC of IPS is above 0.70, indicating an acceptable test; it means that there is a 72% probability that a randomly selected patient with BSI has a higher IPS than a randomly selected patient without BSI. A larger sample of patients with BSI would allow firmer conclusions. It could also be argued that the tool is more sensitive in predicting the onset of an HCAI than the onset of a BSI. In conclusion, the IPS is simple to calculate, uses variables that are obtained routinely without additional cost, and is easy for clinicians to use.

To reduce the risk of BSIs and associated mortality in the hematology population, a number of strategies may be implemented, including the routine use of IPS. Changes in the spectrum and antimicrobial susceptibility of isolates should be monitored continuously so that they are identified early. A multidisciplinary approach is often recommended to facilitate interventions such as typing of isolates, ward cleaning and structural modification of clinical areas. Guidelines for empirical antibiotic therapy for febrile neutropenia must be based on the risk of infection in specific hematology patients and on the local epidemiology and susceptibility of infecting isolates.

References

[1] Cherif H, Kronvall G, Bjorkholm M, Kalin M: Bacteraemia in hospitalized patients with malignant blood disorders: a retrospective study of causative agents and their resistance profiles during a 14-year period without antibacterial prophylaxis. Hematol J. 2003, 4: 420-426. 10.1038/sj.thj.6200334.

[2] Madani TA: Clinical infections and bloodstream isolates associated with fever in patients undergoing chemotherapy for acute myeloid leukemia. Infection. 2000, 28: 367-373. 10.1007/s150100070007.

[3] Hughes WT, Armstrong D, Bodey GP, Bow EJ, Brown AE, Calandra T, Feld R, Pizzo PA, Rolston KV, Shenep JL, Young LS: Guidelines for the use of antimicrobial agents in neutropenic patients with cancer. Clin Infect Dis. 2002, 34: 730-751. 10.1086/339215.

[4] Link H, Böhme A, Cornely OA, Höffken K, Kellner O, Kern WV, Mahlberg R, Maschmeyer G, Nowrousian MR, Ostermann H, Ruhnke M, Sezer O, Schiel X, Wilhelm M, Auner HW, Diseases Working Party (AGIHO) of the German Society of Hematology and Oncology (DGHO); Group Interventional Therapy of Unexplained Fever, Arbeitsgemeinschaft Supportivmassnahmen in der Onkologie (ASO) of the Deutsche Krebsgesellschaft (DKG-German Cancer Society): Antimicrobial therapy of unexplained fever in neutropenic patients-guidelines of the infectious diseases working party of the German Society of Hematology and Oncology. Ann Hematol. 2003, 82: 105-117. 10.1007/s00277-003-0764-4.

[5] Kirby JT, Fritsche TR, Jones RN: Influence of patient age on the frequency of occurrence and antimicrobial resistance patterns of isolates from hematology/oncology patients: report from the Chemotherapy Alliance for Neutropenics and Control of Emerging Resistance Program. Diagn Microbiol Infect Dis. 2006, 56: 75-82. 10.1016/j.diagmicrobio.2006.06.004.

[6] Mutnick AH, Kirby JT, Jones RN: Cancer resistance surveillance program: initial results from haematology-oncology centers in North America. Chemotherapy alliance for neutropenia and the control of emerging resistance. Ann Pharmacother. 2003, 37: 47-56. 10.1345/aph.1C292.

[7] Worth LJ, Slavin MA, Brown GV, Black J: Catheter-related bloodstream infections in hematology: time for standardized surveillance? Cancer. 2007, 109: 1215-1226. 10.1002/cncr.22527.

[8] Morrissey CO, Bardy PG, Slavin MA, Ananda-Rajah MR, Chen SC, Kirsa SW, Ritchie DS, Upton A: Diagnostic and therapeutic approach to persistent or recurrent fevers of unknown origin in adult stem cell transplantation and haematological malignancy. Intern Med J. 2008, 38: 477-495. 10.1111/j.1445-5994.2008.01724.x.

[9] Rolston KV: Management of infections in the neutropenic patient. Annu Rev Med. 2004, 55: 519-526. 10.1146/annurev.med.55.091902.103826.

[10] Coia JE, Duckworth GJ, Edwards DI, Farrington M, Fry C, Humphreys H, Mallaghan C, Tucker DR: Guidelines for the control and prevention of methicillin-resistant Staphylococcus aureus (MRSA) in healthcare facilities. J Hosp Infect. 2006, 63 (Suppl 1): S1-S44. 10.1016/j.jhin.2006.01.001.

[11] Shaw BE, Boswell T, Byrne JL, Yates C, Russell NH: Clinical impact of MRSA in a stem cell transplant unit: analysis before, during and after an MRSA outbreak. Bone Marrow Transplant. 2007, 39: 623-629. 10.1038/sj.bmt.1705654.

[12] Horan TC, Andrus M, Dudeck MA: CDC/NHSN surveillance definition of health care-associated infection and criteria for specific types of infections in the acute care setting. Am J Infect Control. 2008, 36: 309-332. 10.1016/j.ajic.2008.03.002.

[13] Peres-Bota D, Melot C, Lopes Ferreira F, Vincent JL: Infection probability score (IPS): a simple method to help assess the probability of infection in critically ill patients. Crit Care Med. 2003, 31: 2579-2584. 10.1097/01.CCM.0000094223.92746.56.

[14] Tillett WS, Francis T: Serological reactions in pneumonia with a nonprotein somatic fraction of pneumococcus. J Exp Med. 1930, 52: 561-571. 10.1084/jem.52.4.561.

[15] Knaus WA, Draper EA, Wagner DP, Zimmerman JE: APACHE II: a severity of disease classification system. Crit Care Med. 1985, 13: 818-829. 10.1097/00003246-198510000-00009.

[16] Karnofsky DA, Burchenal JH: The clinical evaluation of chemotherapeutic agents. 1949, New York: Columbia University Press.

[17] Schag CC, Heinrich RL, Ganz PA: Karnofsky performance status revisited: reliability, validity, and guidelines. J Clin Oncol. 1984, 2: 187-193.

[18] Weinstein MP, Towns ML, Quartey SM, Mirrett S, Reimer LG, Parmigiani G, Reller LB: The clinical significance of positive blood cultures in the 1990's: a prospective comprehensive evaluation of the microbiology, epidemiology, and outcome of bacteremia and fungemia in adults. Clin Infect Dis. 1997, 24: 584-602.

[19] Gonzalez-Barca E, Fernandez-Sevilla A, Carratala J, Salar A, Peris J, Granena A, Gudiol F: Prognostic factors influencing mortality in cancer patients with neutropenia and bacteremia. Eur J Clin Microbiol Infect Dis. 1999, 18: 539-544. 10.1007/s100960050345.

[20] Engelhart S, Glasmacher A, Exner M, Kramer MH: Surveillance for nosocomial infections and fever of unknown origin among adult hematology-oncology patients. Infect Control Hosp Epidemiol. 2002, 23: 244-248. 10.1086/502043.

[21] Wisplinghoff H, Seifert H, Wenzel RP, Edmond MB: Current trends in the epidemiology of nosocomial bloodstream infections in patients with haematological malignancies and solid neoplasms in hospitals in the United States. Clin Infect Dis. 2003, 36: 1103-1110. 10.1086/374339.

[22] Feld R: Criteria for response in patients in clinical trials of empiric antibiotic regimens for febrile neutropenia: is there agreement? Support Care Cancer. 1998, 6: 444-448. 10.1007/s005200050192.

[23] De Pauw BE, Raemaekers JM, Schattenberg T, Donnelly JP: Empirical and subsequent use of antibacterial agents in the febrile neutropenic patient. J Intern Med. 1997, 242: 69-77.

[24] Viscoli C, EORTC International Antimicrobial Therapy Group: Management of infection in cancer patients. Studies of the EORTC International Antimicrobial Therapy Group. Eur J Cancer. 2002, 38: 82-87. 10.1016/S0959-8049(01)00461-0.

[25] Oppenheim BA: The changing pattern of infection in neutropenic patients. J Antimicrob Chemother. 1998, 41 (Suppl D): 7-11. 10.1093/jac/41.suppl_4.7.

[26] Zinner SH: Changing epidemiology of infections in patients with neutropenia and cancer: emphasis on gram-positive and resistant bacteria. Clin Infect Dis. 1999, 29: 490-494. 10.1086/598620.

[27] Jugo J, Kennedy R, Crowe MJ, Lamrock G, McClurg RB, Rooney PJ, Morris T, Johnston PG: Trends in bacteraemia on the haematology and oncology units of a UK tertiary referral hospital. J Hosp Infect. 2002, 50: 48-55. 10.1053/jhin.2001.1101.

[28] Martini A, Gottin L, Melot C, Vincent JL: A prospective evaluation of the Infection Probability Score (IPS) in the intensive care unit. J Infect. 2008, 56: 313-318. 10.1016/j.jinf.2008.02.015.

[29] Safavi M, Honarmand A: Comparison of infection probability score, APACHE II and APACHE III scoring systems in predicting need for ventilator and ventilation duration in critically ill patients. Arch Iran Med. 2007, 10: 354-360.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2334/10/135/prepub

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

EA wrote the manuscript and was involved in the study design. VR wrote the manuscript, analyzed the data, interpreted the results, revised the manuscript and was involved in the study design. KT collected the data, and contributed to the literature review. IE read the manuscript. All the authors read and approved the final manuscript.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Apostolopoulou, E., Raftopoulos, V., Terzis, K. et al. Infection Probability Score, APACHE II and KARNOFSKY scoring systems as predictors of bloodstream infection onset in hematology-oncology patients. BMC Infect Dis 10, 135 (2010). https://doi.org/10.1186/1471-2334-10-135

DOI: https://doi.org/10.1186/1471-2334-10-135