Abstract

Background

Many studies conducted in health and social sciences collect individual level data as outcome measures. Usually, such data have a hierarchical structure, with patients clustered within physicians, and physicians clustered within practices. Large survey data, including national surveys, have a hierarchical or clustered structure; respondents are naturally clustered in geographical units (e.g., health regions) and may be grouped into smaller units. Outcomes of interest in many fields not only reflect continuous measures, but also binary outcomes such as depression, presence or absence of a disease, and self-reported general health. In the framework of multilevel studies an important problem is calculating an adequate sample size that generates unbiased and accurate estimates.

Methods

In this paper simulation studies are used to assess the effect of varying sample size at both the individual and group level on the accuracy of the estimates of the parameters and variance components of multilevel logistic regression models. In addition, the influence of prevalence of the outcome and the intra-class correlation coefficient (ICC) is examined.

Results

The results show that the estimates of the fixed effect parameters are unbiased for 100 groups with group size of 50 or higher. The estimates of the variance covariance components are slightly biased even with 100 groups and group size of 50. The biases for both fixed and random effects are severe for group size of 5. The standard errors for fixed effect parameters are unbiased while for variance covariance components are underestimated. Results suggest that low prevalent events require larger sample sizes with at least a minimum of 100 groups and 50 individuals per group.

Conclusion

We recommend using a minimum group size of 50 with at least 50 groups to produce valid estimates for multi-level logistic regression models. Group size should be adjusted under conditions where the prevalence of events is low such that the expected number of events in each group should be greater than one.

Similar content being viewed by others

Background

The idea that individual action is shaped by macro-level forces was evident in sociological theories of psychiatric illness and delinquency arising out of the Chicago School [1, 2]. These theories suggest that while individual risk factors can affect individual health and delinquent behavior, so also can the structure of the social environment in which we live. It is only in the last 20 years that these theories could be truly tested, when statistical models were developed that allowed researchers to examine the additive and interactive effects of individual-level and contextual features that affect sociological outcomes at the individual level. In the last ten years the use of multilevel models has burgeoned in epidemiology. These models are highly appropriate in assessing how context affects individual-level health risks and outcomes [3].

Many kinds of data, including national surveys, have a hierarchical or clustered structure. For example, respondents in a complex large survey are naturally clustered in geographical units (e.g., health regions) and may be grouped into smaller units (e.g. census tracts). Over the last two decades, researchers have developed a class of statistical models designed for data with hierarchical structure. These models are variously known as mixed, hierarchical linear, random coefficient, and multilevel models. Hierarchical data routinely arise in many fields where multilevel models can be used as an extended version of the more traditional statistical techniques either to adjust for the dependency of the observations within clusters by using variables at higher levels or assessing the impact of higher level characteristics on the outcome after controlling for individual characteristics at the base level. An important feature of this class of models is the ability to estimate the cross-level interaction which provides a measure of the joint effect of a variable at the individual level in conjunction with a variable at the group level.

The robustness issue and the choice of sample size and power in multilevel modeling for continuous dependent variables has been studied by several authors [4–13]. Austin [14] used Monte Carlo simulation to assess the impact of misspecification of the distribution of random effects on estimation of and inference about both the fixed effects and the random effects in multilevel logistic regression models. He concluded that estimation and inference concerning the fixed effects were insensitive to misspecification of the distribution of the random effects, but estimation and inferences concerning the random effects were affected by model misspecification. Simulation studies indicate that a larger number of groups is more important than a larger number of individuals per group [4, 5]. The overall conclusion from these studies is that the estimates of the regression coefficients are unbiased, but the standard errors and the variance components tend to be biased downward (underestimated) when the number of level 2 units is small (e.g. less than 30) [4, 11].

Outcomes of interest in many fields do not only reflect continuous measures. Binary outcomes such as depression, presence or absence of a disease, and poor versus good self-reported general health are also of interest. Few studies have examined the accuracy of estimates, sample size or power analysis in binary multilevel regression [5, 15]. Although Sastry et al. [15] calculate power and sample size in multilevel logistic regression models for their survey of children, families and communities in Los Angeles, they used a test of proportions between two comparison groups to calculate preliminary total sample size for a given baseline proportion and minimum detectable differences. After adjusting the calculated preliminary sample size for design effect, a total sample size of 3,250 was adopted. Finally based on simulation studies with total sample size of 3,250 and group sizes of 51, 66, 75, and 81 they decided to sample 65 groups (tracts) each of size 50.

We are unaware of any studies to date that have focused on these issues in multilevel logistic regression in a more comprehensive manner. In this paper simulation studies based on multilevel logistic regression models are used to assess the impact of varying sample size at both the individual and group level on the accuracy of the estimates of the parameters and their corresponding variance components.

Methods

Simulation models

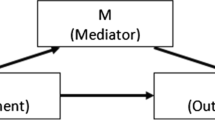

We focus on the following multilevel logistic model with one explanatory variable at level 1 (individual level) and one explanatory variable at level 2 (group level):

where .

Here P ij is the probability that individual i in group j will experience the outcome, x ij is an explanatory variable on the respondent level, and z j is a group level explanatory variable. Model (1) can be written in the following single equation:

logit(p ij ) = γ 00 + γ 10 x ij + γ 01 z j + γ 11 x ij z j + u 0j + u 1j x ij

In equation (2) the segment γ 00 + γ 10 x ij + γ 01 z j + γ 11 x ij z j is the fixed effect part and the segment u 0j + u 1j x ij is the random part of the model. An important feature of equation (2) is the presence of a cross-level interaction term represented by γ 11 z j x ij in which the coefficient γ 11 shows how π 1j , the slope of equation (1), varies with z j , the group level variable.

The size of the intra-class correlation coefficient (ICC) may also affect the accuracy of the estimates [16]. The ICC for the logistic model is defined as where and is the variance of the random intercept in a fully unconditional multilevel logistic model logit(p ij ) = γ 00 + u 0j where u 0j ~ N(0, ) [17].

The accuracy of the parameter estimates is quantified by the percentage relative bias [11]. Let stand for the estimate of the population parameter θ, then indicates the percentage relative bias for parameter θ. The accuracy of the standard error of the parameter estimate is assessed by analyzing the observed coverage of the 95% confidence interval created by using the asymptotic standard normal distribution [11].

Following the simulation conditions used by Maas and Hox [11] we set the following conditions for our simulation studies: (i) the number of individuals per group, j, n j , was set at 5, 30, and 50, (ii) the number of groups was set at 30, 50, and 100, and (iii) the variances of the random intercept were set at 0.13, 0.67, and 2.0, corresponding to intra-class correlation coefficients (ICC) of 0.04, 0.17, and 0.38, respectively. The individual and group explanatory variables x ij and z j are generated from the standard normal distribution. The group random components u 0j and u 1j are independent normal variables with mean zero and standard deviations σ 0 and σ 1where σ 1 = 1 in all simulations and σ 0 follows from the ICC and is set to 0.36, 0.82, and 1.42. We set the fixed effect parameters for all simulated models as: γ 00 = -1.0, γ 01 = 0.3, γ 10 = 0.3, and γ 11 = 0.3. To generate the outcome, a Bernoulli distribution with probability is used. The overall prevalence of the outcome is close to 30 percent.

For practical purposes we generated 1000 data sets for each combination since a larger number of replications would have substantially increased processing time. The software SAS 9.1 (SAS Institute, North Carolina, US) was used for simulating observations and estimating the parameters. The SAS procedure NLMIXED with default options was used for estimation. This procedure only allows full maximum likelihood estimation. If convergence was not achieved the estimated parameters were not included in calculating summary statistics. We set initial values as the "true" values of each parameter. Distributions for random effects were normal, the optimization technique was Dual Quasi-Newton, and the integration method was Adaptive Gaussian Quadrature (AGQ). The number of quadrature points in AGQ was selected automatically. The absolute value for parameter convergence criterion was 10-8 and the maximum number of iterations was 200.

Results

Convergence

The overall rate of model convergence varied from 56% to 100%. There were no negative variance estimates in converged models. Logistic regression was used to investigate the impact of ICC, number of groups and group size on the convergence. The rate of convergence (percent converged) significantly improved with either an increase in the number of groups or an increase in the group size. The overall rate of convergence for groups of sizes 5, 30, and 50 was 80.4%, 99.3, and 99.9%, respectively. For group of sizes 30, 50, and 100, the rate of convergence was 87.7%, 93.8%, and 98.1%. For the three ICC conditions of 0.04, 0.17, and 0.38 the rate of convergence was 89.2%, 94.5% and 95.9%. When we compared the samples that did and did not converge findings indicate no significant differences in prevalence (30.4% vs. 30.0%), mean and standard deviation of z j (0.01 and 1.01 versus 0.02 and 1.00), or mean and standard deviation of x ij (0.00 and 1.00 vs. 0.00 and 1.00). To further explore the non-convergent samples we examined 168 non-convergent simulated data sets with 30 groups and group size of 5. We first fitted a logistic model with random intercept only and then a logistic model with random slope only to each of these data sets. The estimated random intercepts and random slopes were classified as significant if the corresponding p-value was less that 0.05; otherwise each was classified as non-significant. Both the random intercept and random slope were statistically significant in only a small proportion of these data sets (2.4%). A closer investigation showed that when both random intercept and random slope were statistically significant either the random slope or the random intercept was severely underestimated. This suggests that non-convergence result from lack of sufficient variation in both the intercept and slope and further suggests that simplifying the model is appropriate; for example either a random intercept or a random slope is estimated, but not both.

Distribution of parameter estimates

P-values and confidence intervals given by the NLMIXED procedure are based on asymptotic normality which may not be accurate for small sample sizes. The Shapiro-Wilk test calculates a W statistic that tests whether a random sample of size n comes from a normal distribution. The Shapiro-Wilk test for normality was used to test the normality of the distribution of fixed effect estimates for different combinations. Logistic regression was used to assess the effects of each factor on normality. The ICC was not associated with normality of the parameter estimates. The number of groups was associated with normality of the estimates for γ 10 and γ 01 group size was associated with normality of the estimates for γ 00, γ 10, and γ 11. The majority of estimates from simulations with a group size of 5 were non-normal even with 100 groups. For simulations with a group size of 30 a few estimates were non-normal even with 50 groups. All estimates were normally distributed with 100 groups and group size of 50.

Parameter estimates

In simulation studies of multilevel regression with continuous outcomes, Maas and Hox [11] found negligible bias for the fixed effect parameter estimates. They reported an average bias less than 0.05% for the fixed parameter estimates, intercept and the regression slopes. Our simulations show that the overall biases for the fixed effect parameters γ 00, γ 01, γ 10, and γ 11 were 0.6%, 2.6%, 1.4%, and 3.7% respectively (data not shown). The cross-level interaction parameter (γ 11) had the largest overall bias.

Table 1 shows the percent relative bias and rate of convergence (percent converged) for different simulation conditions. For the fixed effect parameters, the largest biases (8.8%, 11.1%, 15.8%, and 13.3% for γ 00, γ 01, γ 10, and γ 11) were found under conditions where of the smallest variance for the random intercept (0.13), the smallest group size (5), and the lowest number of groups (30). When the size of the group was increased to 30 with 30 groups, the bias was reduced to less than 6%. These biases were reduced to less than 4% when the size of the group was 30 and the number of groups was 50. Even further reductions occurred (bias of 1% or less) when the size of the group was 30 and there were 100 groups.

The estimates of the random intercept and random slope have larger biases compared to the fixed effect parameters. The overall biases (data not shown) for σ 0 and σ 1 were 6.9% and 5.0%. The bias for σ 1 remained at the level of 5% for different values of σ 0, however the estimates for σ 0 had the largest bias (21.2%) for σ 0 = 0.36.

The relative bias for the variance components was less than 4% when the size of the group was 50 and there were 100 groups. The variance-covariance parameter estimates are positively biased in all cases when the group size was 5 regardless of the number of groups (some exceeded 100%). The variance components were consistently underestimated when with a group size of 30 or more regardless of the number of groups. This problem of underestimation has been noted previously in simulation studies of multilevel models for continuous outcomes [11].

The overall relative bias for the random intercept was 21%, 0.5%, 0.1% for ICC 0.04, 0.17, 0.38, respectively. For the random slope, the overall relative biases for the three ICC conditions were not statistically different, ranging from 4% to 6%. There were no statistically significant differences in bias for the fixed effect parameters for any of the ICC conditions.

Standard errors

We adopted the method used by Maas and Hox [11] to assess the accuracy of the standard errors. For each parameter in each simulated data set the 95% Wald confidence interval is established. For each parameter a non-coverage indicator variable is set to zero if the confidence interval contains the true value, otherwise if the true value lies outside the 95% confidence interval it is set to 1. The effect of number of groups, group size, and ICC on the non-coverage is presented in Tables 2 and 3, respectively. Logistic regression was used to assess the effect of the different simulated conditions on non-coverage.

As shown in Table 2 the effect of number of groups on the standard errors of the fixed effect parameters is small with non-coverage rates ranging from 5% and 6%. The nominal non-coverage rate is 5%. The effect of number of groups on the standard errors of the variance component was larger than the nominal 5%, with non-coverage ranging from 7% to 11%. With 30 groups the non-coverage rate was 11% for the random intercept and 10% for the random slope. These non-coverage rates were reduced to 9% and 7% percent, respectively, for 100 groups. The extent of non-coverage implies that the standard error for the variance components is underestimated, a phenomenon reported by Maas and Hox [11] in their simulation studies of two-level linear regression models. The rate of non-coverage decreased as number of groups increased however, the non-convergence cannot be ignored.

The rates of non-coverage for the fixed effect parameters varied between 4 to 6% which is close to 5% nominal (Table 2). The effect of group size on the standard error of the estimates of the random intercept (close to 10%) was not significant; however the rate of non-coverage for the random slope increased as the group size increased. Table 2 shows that ICC had no effect on the non-coverage rates for the fixed effects or the random slope. Similar to findings for the number of groups and group size, the rate of non-coverage is close to 5% for the fixed effect parameters and over 5% for the random effect parameters. The rate of non-coverage for the random intercept decreased as ICC increased.

Table 3 shows the rates of non-coverage for each simulation condition. The minimum and maximum rates of non-coverage for the fixed effect parameters, γ 00, γ 01, γ 10, and γ 11, range from 3% and 7%. The rates of non-coverage for the variance-covariance components range from 7% and 17%. These findings indicate that the estimates of the standard errors are acceptable for the fixed effect parameters but not acceptable for the variance covariance components.

Prevalence

The accuracy of the estimates of the parameters at the individual level depends on the prevalence of the outcome. To assess the relationship between the prevalence of the outcome and the sample size we repeated our simulations with prevalence rates of 0.10, 0.34, and 0.45. We set the parameters, γ 00, γ 01, γ 10, and γ 11, at -3.0, 0.3, 0.3, and 0.3 for prevalence of 10%, at -1.0, 0.3, 0.3, and 0.3 for prevalence of 34% and -0.3, -0.3, -0.3, and -0.3 for prevalence of 45%. The variances of the random intercepts and random slopes were 1 for all simulations. Table 4 shows that for both fixed and random effect parameters the simulated data with 10% prevalence had the largest bias.

The overall effect of prevalence on the non-coverage rates was not significant (data not shown). As shown in Table 4 the rate of non-coverage for all fixed effect parameter estimates ranged from 5 to 6%. The rate of non-coverage for the random intercept and random slope variance estimates ranged from 8 to 11%. This suggests that a larger sample size is necessary to minimize bias for low-prevalent outcomes. The largest bias was observed under conditions when the size of the group was 5 and the prevalence of the outcome was 10% (12% for fixed effect and 50% for random effect). Similarly, with 30 groups and a 10% prevalence the largest bias was 9% for the fixed effect parameters and 15% for the random slope. The rate of convergence was lowest with 10% prevalence.

Discussion and conclusion

In this paper we investigated the impact of varying sample size at both the group and individual level on the accuracy of the parameter estimates and variance components using multilevel modeling for logistic regression. We also examined the effect of prevalence of the outcome on the accuracy of the estimates. The number of replications was restricted to 1000 due to extensive computer processing time.

Previous research has indicated that a sample of 50 groups and 30 units per group is sufficient to produce reliable parameter estimates for linear multilevel regression models [11]. Our findings suggest this may not be the case for logistic regression. Simulations presented in this paper suggest that the number of level two groups and the number of individuals in each group should be adjusted for prevalence of the outcome. Low prevalent events require a larger number of individuals per group.

We did not study the effect of different estimation procedures on the accuracy of the parameter estimates. However Rodriguez and Goldman [18, 19] showed that the marginal quasi likelihood with first order Taylor expansion underestimates both the fixed effect and the variance-covariance components. The data set that formed the basis for these conclusions was extreme in the sense that the variance components were large and the sample size at the lowest level was quite small. In less extreme cases it appears that predictive quasi likelihood with second order Taylor expansion usually provides accurate estimates for both fixed and random parameters [5]. Simulations by Callens and Croux [20] compared penalized quasi-likelihood (PQL) with adaptive Gaussian quadrature (AGQ) and non-adaptive Gaussian quadrature (NGQ) and showed that PQL suffers from large bias but performs better in terms of mean-squared error (MSE) than standard versions of quadrature methods. They also showed that automatic selection of the number of quadrature points in AGQ (the default of the NLMIXED procedure) might be inadequate and lead to a loss in MSE. Thus, numerical results may change slightly depending on the statistical package, number of iterations, or algorithm used to estimate the parameters.

In multilevel analysis non-convergence can occur when estimating too many random components that are close to zero. Hox [5] suggests a solution to this problem which is to remove some random components, thereby simplifying the model. In our case non-convergence was a significant problem when group size was 5 and the number of groups was 30. There were no significant differences in the prevalence of the outcome or in the distribution of the explanatory variables among the converged versus non-converged samples. When the sample size is small there may not be sufficient variation to estimate a random effect, thus leading to non-convergence.

Simulation studies come with their own set of limitations. This said, our results are comparable with simulation results for multilevel regression models as reported by other researchers [11]. We focused on the impact of sample size at the individual and group level on the bias and accuracy of parameter estimates. We did not consider the impact of varying the distribution and variance of the individual and group level explanatory variables for practical reasons, specifically due to the large number of conditions that would have to be considered and which would result in extensive computer processing time. For a discussion of the impact of misspecification of the distribution of random effects on estimation of and inference about both the fixed effects and the random effects in multilevel logistic regression models see Austin [14].

Our results and recommendations are based on extensive simulation studies from data which are generated from normal distributions. Since the normal distribution assumption may be violated in real study applications we conducted further simulations with 100 replications for each model and relaxed the normal distribution assumption. This allowed us to compare convergence, coverage, and bias of the simulated non-normal models with the simulated normal model. Comparisons were done by each parameter, number of groups, and group size. The distributions for generating z j , u 0j , u 1j , and x ij for 8 simulated models are as follows: Model 1: N(0,1), N(0,1), N(0,1), N(0,1); Model 2: N(0,1), N(0,1), N(0,4), N(0,4); Model 3: N(0,4), N(0,4), N(0,4), N(0,4); Model 4: U(-0.5,0.5), N(0,1), N(0,1), U(-0.5,0.5); Model 5: U(-0.5,0.5), U(-2,2), U(-2,2), U(-0.5,0.5); Model 6: N(0,1), t(df = 3), t(df = 3), N(0,1); Model 7: t(df = 3), t(df = 3), t(df = 3), t(df = 3); Model 8: t(df = 5), t(df = 5), t(df = 5), t(df = 5) where N(a, b) stands for a normal distribution with mean a and variance b, U(a, b) represents uniform distribution over the interval (a, b), and t(df = k) stands for t-student distribution with k degrees of freedom. Table 5 shows the convergence, coverage and relative bias for above 8 models.

Logistic regression was used to compare the rate of convergence of models 2 to 8 with model 1 (the model with 4 normal standard distributions). Models 3, 6, 7, and 8 were more likely to converge while models 4 and 5 were less likely to converge (p-value < 0.01) when group size was 5. When group size was greater than 5 there was no significant difference between the rates of convergence for all models.

The comparisons (Ttest) between the fixed effect parameter estimates of models 2–8 with model 1 did not show any significant differences. For models 6, 7, and 8 the random intercept and random slope were underestimated compare to model 1 (p < 0.01). This phenomenon was also observed and reported by Austin [14] for a logistic model with random intercept.

Fisher exact test was used to test the rate of coverage of models 2–8 with model 1 for each parameter, number of groups, and group size. There was no significant difference between the rates of coverage for the fixed effect parameters when group size was greater than 5 using groups of 50 or more. The rates of coverage for the random components of models 6 and 7 were significantly lower than the coverage rates of model 1 (p < 0.01). Austin [14] reported similar conclusions for a logistic model with random intercept.

Although the misspecification of random components significantly affected the estimates and standard errors of the random intercepts and random slopes when either group size or number of groups was small, the estimates and standard errors of all models were statistically the same as those estimates and standard errors for model 1 when the number of groups and group size was 50 or more; the exception being for a t-distribution with 3 degrees of freedom.

Despite the limitations of simulation studies [see for example 21] our findings can offer some suggestions for sample size selection in multilevel logistic regression. In practice a group size of 30 is often recommended in educational research and a group size of 5 is recommended in family and longitudinal research studies [11]. Based on our findings we recommend a minimum group size of at least 50 and a minimum of 50 groups to produce valid estimates for multilevel logistic regression models. We offer a caveat here such that the group size must be adjusted properly for low-prevalent outcomes; specifically the expected number of outcomes in each group should be greater than one. This caveat is offered as a caution to researchers using multilevel logistic regression in conjunction with small data sets; under these conditions researchers can expect to encounter convergence problems, large biases in their model estimates and inadequate statistical inference procedures. Our findings suggest that when choosing a sample size, researchers should base their decision on the level of bias that they consider acceptable for that particular study.

The main findings from this research can be summarized as follows: (i) convergence problems arise when prevalence is low, the number of groups is small, or the group size is small; (ii) the estimates of the fixed effect parameters are unbiased when the number of groups is relatively large (more than 50) and with moderate group size; (iii) when group size is small (e.g. 5) the estimates of the random slope and random intercept are severely overestimated, and; (iv) the standard errors of the variance component estimates are underestimated even with 100 groups and group size of 50.

References

Faris REL, Dunham HW: Mental disorders in urban areas: an ecological study of schizophrenia and other psychoses,. Edited by: Dunham HW. 1939, Chicago, University of Chicago Press

Shaw CR, McKay HD: Juvenile delinquency and urban areas;: a study of rates of delinquency in relation to differential characteristics of local communities in American cities. Edited by: McKay HD. 1969, Chicago, University Press

O'Campo P: Invited Commentary: Advancing Theory and Methods for Multilevel Models of Residential Neighborhoods and Health. Am J Epidemiol. 2003, 157: 9-13. 10.1093/aje/kwf171.

Maas CJM, Hox JJ: Robustness issues in multilevel regression analysis. Statistica Neerlandica. 2004, 58: 127-137. 10.1046/j.0039-0402.2003.00252.x.

Hox JJ: Multilevel analysis: techniques and applications. 2002, Mahwah, N.J., Lawrence Erlbaum Publishers

Snijders TAB, Bosker RJ: Standard Errors and Sample Sizes for 2-Level Research. Journal of Educational Statistics. 1993, 18: 237-259. 10.2307/1165134.

Leyland AH, Goldstein H: Multi-Level Modeling of Health Statistics. 2001, London, John Wiley

Raudenbush SW, Liu XF: Effects of study duration, frequency of observation, and sample size on power in studies of group differences in polynomial change. Psychological Methods. 2001, 6: 387-401.

Bingenheimer JB, Raudenbush SW: Statistical and substantive inferences in public health: Issues in the application of multilevel models. Annual Review of Public Health. 2004, 25: 53-77. 10.1146/annurev.publhealth.25.050503.153925.

Atkins DC: Using multilevel models to analyze couple and family treatment data: Basic and advanced issues. Journal of Family Psychology. 2005, 19: 98-110. 10.1037/0893-3200.19.1.98.

Maas CJM, Hox JJ: Sufficient Sample Sizes for Multilevel Modeling. Methodology: European Journal of Research Methods for the Behavioral and Social Sciences. 2005, 1: 85-91. 10.1027/1614-2241.1.3.86.

Shieh YY, Fouladi RT: The Effect of Multicollinearity on Multilevel Modeling Parameter Estimates and Standard Errors. Educational and Psychological Measurement. 2003, 63: 951-985. 10.1177/0013164403258402.

Dickinson LM, Basu A: Multilevel Modeling and Practice-Based Research. Ann Fam Med. 2005, 3: S52-S60. 10.1370/afm.340.

Austin PC: Bias in Penalized Quasi-Likelihood Estimation in Random Effects Logistic Regression Models When the Random Effects are not Normally Distributed. Communications in Statistics: Simulation & Computation. 2005, 34: 549-565. 10.1081/SAC-200068364.

Sastry N, Ghosh-Dastidar B, Adams J, Pebley AR: The Design of a Multilevel Survey of Children, Families, and Communities: The Los Angeles Family and Neighborhood Survey. 2003, California, RAND, Working Paper Series 03-21: 1-55.

Goldstein H: Multilevel statistical models. 2003, New York, Distributed in the United States of America by Oxford University Press, 3rd ed.

Guo G, Zhao H: Multilevel Modeling for Binary Data. Annual Review of Sociology. 2000, 26: 441-462. 10.1146/annurev.soc.26.1.441.

Rodriguez G, Goldman N: An Assessment of Estimation Procedures for Multilevel Models with Binary Responses. Journal of the Royal Statistical Society Series A (Statistics in Society). 1995, 158: 73-89. 10.2307/2983404.

Rodriguez G, Goldman N: Improved estimation procedures for multilevel models with binary response: a case-study. Journal of the Royal Statistical Society: Series A (Statistics in Society). 2001, 164: 339-355. 10.1111/1467-985X.00206.

Callens M, Croux C: Performance of likelihood-based estimation methods for multilevel binary regression models. Journal of Statistical Computation and Simulation. 2005, 75: 1003-1017. 10.1080/00949650412331321070.

Kreft IGG: Are multilevel techniques necessary? An overview, including simulation studies. 1996

Pre-publication history

The pre-publication history for this paper can be accessed here:http://www.biomedcentral.com/1471-2288/7/34/prepub

Acknowledgements

From the Centre for Research on Inner City Health, The Keenan Research Centre in the Li Ka Shing Knowledge Institute of St. Michael's Hospital. The authors gratefully acknowledge the support of the Ontario Ministry of Health and Long-Term Care.

The views expressed in this manuscript are the views of the authors and do not necessarily reflect the views of the Ontario Ministry of Health and Long-Term Care. The authors are also grateful to the reviewers for their thoughtful and constructive comments.

Author information

Authors and Affiliations

Corresponding author

Additional information

Competing interests

The author(s) declare that they have no competing interests.

Authors' contributions

RM performed simulation studies and drafted the paper. RM, FIM and RHG conceptualized the research and revised the manuscript for intellectual content. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Moineddin, R., Matheson, F.I. & Glazier, R.H. A simulation study of sample size for multilevel logistic regression models. BMC Med Res Methodol 7, 34 (2007). https://doi.org/10.1186/1471-2288-7-34

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/1471-2288-7-34