Abstract

Background

It is generally believed that exhaustive searches of bibliographic databases are needed for systematic reviews of health care interventions. The Cochrane Central Register of Controlled Trials (CENTRAL) has been built up by exhaustive searching. The CONSORT statement aims to encourage better reporting, and hence indexing, of randomised controlled trials (RCTs). Our aim was to assess whether developments in CENTRAL and the CONSORT statement mean that a simplified search strategy for identifying RCTs now suffices for systematic reviews of health care interventions.

Methods

RCTs included in new Cochrane reviews were identified. A brief RCT search strategy (BRSS), consisting of a search of CENTRAL followed by a search for variants of the word random across all fields (random$.af.) in MEDLINE and EMBASE, was devised and run. Any trials included in the meta-analyses but missed by the BRSS were identified. The meta-analyses were then re-run, with and without the missed RCTs, and the differences quantified. The proportion of trials with variants of the word random in the title or abstract was calculated for each year. The number of MEDLINE records retrieved by searching with "random$.af." was compared with the number retrieved by the highly sensitive search strategy (HSSS).

Results

The BRSS had a sensitivity of 96%. It found all journal RCTs in 47 of the 57 reviews. The missing RCTs made significant differences to a small proportion of the outcomes in only five reviews, and no important differences in conclusions resulted. In the post-CONSORT years, 1997–2003, the percentage of RCTs with random in the title or abstract was 85%, a mean increase of 17 percentage points (95% CI 8.3 to 25.9) compared with the seven pre-CONSORT years. Compared with the HSSS, the search using random$.af. reduced MEDLINE retrieval by 84%, thereby reducing the workload of checking retrievals.

Conclusion

A brief RCT search strategy is now sufficient to locate RCTs for systematic reviews in most cases. Exhaustive searching is no longer cost-effective, because in effect it has already been done for CENTRAL.

Background

Literature searching for systematic reviews of interventions in health care has been largely based on finding all randomized controlled trials (RCTs), as this study design is considered the gold standard. However, RCTs make up only a very small proportion of all the articles in bibliographic databases, so the problem for systematic reviewers has been to devise a search strategy that is sensitive enough to find all the RCTs, but specific enough not to bury them among a large number of unwanted retrievals that must be excluded manually.

Two developments have made searching for RCTs easier. The first is CENTRAL (the Cochrane Central Register of Controlled Trials), the world's most comprehensive database consisting exclusively of controlled clinical trials. It currently contains over 425,000 citations. Most of the trials in CENTRAL have been identified through systematic searches of MEDLINE and EMBASE; the first two phases of a three-phase highly sensitive search strategy (HSSS) have been used to search MEDLINE [1]. CENTRAL also includes citations to reports of controlled trials that are not indexed in MEDLINE or EMBASE, derived from searches of other bibliographic databases and from extensive hand-searching.

Because identification has relied solely on the titles and, where available, the abstracts, some relevant trials may not have been identified. Therefore, the Cochrane Handbook says "it may still be worthwhile for reviewers to search MEDLINE using the Cochrane highly sensitive search strategy and to obtain and check the full reports of possibly relevant citations" [2]. However, this strategy is complicated to run, and may require time-consuming screening of abstracts, and perhaps of full articles.

The second development is the CONSORT statement, introduced in 1996 [3] and since revised [4]. CONSORT comprises a 22-item checklist and a flow diagram to help improve the quality of reports of RCTs, and has been endorsed by prominent medical journals. There is evidence from a comparative before-and-after evaluation that the number of CONSORT checklist items included in reports of RCTs has increased over time [5].

Item 1 on the CONSORT checklist recommends that the title and abstract describe how participants were allocated to interventions (e.g., "random allocation", "randomized" or "randomly assigned"). This allows RCTs to be identified immediately, and should help ensure that a study is appropriately indexed as an RCT in bibliographic databases.

As the Cochrane Collaboration has already done exhaustive work to make CENTRAL as complete as possible, we wanted to examine whether this eliminates the need for individual reviewers to run the HSSS, and how effective a simplified search strategy would be as a replacement.

The aims were:

1. To determine the effect on the results of Cochrane reviews of using a brief RCT search strategy (BRSS).

2. To examine the change in use of variants of the word random in the title or abstract of journal articles, pre- and post-CONSORT.

Methods

All reviews new to the Cochrane Database of Systematic Reviews in the Cochrane Library 2004, issue 2 were identified. Those that stated that they considered only RCTs, and that found at least one RCT, were selected.

All trials included in each review were identified from the section 'References to studies included in this review'. Each trial was checked to determine whether it was indexed in CENTRAL (on Cochrane Library 2004, issue 2), and then MEDLINE. If not in either of these databases, EMBASE was checked.

The full bibliographic records of all trials in MEDLINE or EMBASE (searched via the OVID interface) were examined to determine whether random$ appeared in any field. (Random$.af. denotes a search for variants of random in all fields, where $ is the truncation symbol.)
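To make the search term concrete, the sketch below emulates how random$.af. behaves: a word-start match on the stem "random" with unlimited truncation, applied to every field of a record. This is an illustrative assumption, not OVID's actual implementation; the record layout, field names and the function `matches_random_af` are hypothetical.

```python
import re

# Emulation (assumption) of OVID's "random$.af.": "$" = unlimited truncation,
# ".af." = search all fields. A word must START with "random", so "randomly",
# "Randomized" and "randomisation" match; "nonrandomised" does not, because
# the stem is embedded inside the word rather than at its start.
RANDOM_TRUNCATED = re.compile(r"\brandom\w*", re.IGNORECASE)


def matches_random_af(record: dict[str, str]) -> bool:
    """True if any field of a (hypothetical) bibliographic record
    contains a word beginning with 'random'."""
    return any(RANDOM_TRUNCATED.search(text) for text in record.values())


# Hypothetical records with MEDLINE-like field names.
records = [
    {"title": "Drug A versus placebo", "abstract": "Patients were randomly assigned."},
    {"title": "Drug B in migraine", "publication_type": "Randomized Controlled Trial"},
    {"title": "A cohort study of drug C", "abstract": "We followed 200 patients."},
]
for rec in records:
    print(rec["title"], "->", matches_random_af(rec))
```

The second record illustrates why searching all fields can matter: in this invented example the word appears only in the publication type, which a title-and-abstract search would miss.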

The full papers of any trials that were not in CENTRAL, or that did not have random$ in any field of the bibliographic record, were obtained and checked to see whether they were actually RCTs. All non-English articles were translated, apart from those in Japanese and Chinese, for which translation resources were not available.

The impact of omitting the RCTs not found with the BRSS was quantified using Review Manager 4.2.7. The forest plots for the meta-analyses were reproduced both with and without data from the missing trials, and the results were compared to see whether omission would make any important difference. In theory, the differences could include:

a) Less data, or none at all, might remain for some outcomes.

b) There could be a different result: for example, no benefit over the comparator where the full analysis showed one, or vice versa.

c) There could be the same result, but with a different effect size.

d) There could be the same result and effect size, but with a wider confidence interval, and possibly loss of statistical significance.
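The with-and-without comparison can be illustrated with a fixed-effect, inverse-variance pooling of log odds ratios. This is a hypothetical sketch: Review Manager offers several pooling methods, the trial data below are invented, and the last trial merely plays the role of a trial missed by the search. In this example, dropping that trial widens the confidence interval until it includes an odds ratio of 1, which is consequence d) above.

```python
import math

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def pool_fixed_effect(log_ors, ses):
    """Fixed-effect inverse-variance pooling of log odds ratios.
    Returns (pooled OR, lower 95% limit, upper 95% limit)."""
    weights = [1.0 / se ** 2 for se in ses]
    total_w = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, log_ors)) / total_w
    se_pooled = math.sqrt(1.0 / total_w)
    return (math.exp(pooled),
            math.exp(pooled - Z_95 * se_pooled),
            math.exp(pooled + Z_95 * se_pooled))


def is_significant(log_ors, ses):
    """True if the pooled 95% CI excludes OR = 1."""
    _, lower, upper = pool_fixed_effect(log_ors, ses)
    return upper < 1.0 or lower > 1.0


# Invented data: log OR and its SE per trial; the last trial is the "missed" one.
log_ors = [-0.20, -0.10, -0.30, -0.45]
ses = [0.20, 0.25, 0.30, 0.20]

for label, ys, ss in [("All trials", log_ors, ses),
                      ("Without missed trial", log_ors[:-1], ses[:-1])]:
    odds_ratio, lo, hi = pool_fixed_effect(ys, ss)
    print(f"{label}: OR {odds_ratio:.2f} (95% CI {lo:.2f} to {hi:.2f}),"
          f" significant: {is_significant(ys, ss)}")
```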

In summary, the BRSS would involve: 1) searching CENTRAL, and 2) supplementing that with a search of MEDLINE and EMBASE, using a search of 'random$.af.' to pick up trials not in CENTRAL.
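The two-step rule can be written as a small decision function. This is a hypothetical sketch: the `IncludedTrial` fields stand for the checks described in the Methods (presence in CENTRAL, MEDLINE and EMBASE, and whether random$ appears in any field), and `found_by_brss` reports whether the BRSS would have retrieved a given included trial.

```python
from dataclasses import dataclass


@dataclass
class IncludedTrial:
    """Hypothetical record of the checks made for one included trial."""
    trial_id: str
    in_central: bool
    in_medline: bool
    in_embase: bool
    random_in_any_field: bool  # outcome of the random$.af. check


def found_by_brss(trial: IncludedTrial) -> bool:
    # Step 1: anything indexed in CENTRAL is retrieved.
    if trial.in_central:
        return True
    # Step 2: otherwise the trial must be in MEDLINE or EMBASE AND
    # its record must contain random$ in some field.
    return (trial.in_medline or trial.in_embase) and trial.random_in_any_field


trials = [
    IncludedTrial("T1", in_central=True, in_medline=True, in_embase=False, random_in_any_field=False),
    IncludedTrial("T2", in_central=False, in_medline=False, in_embase=True, random_in_any_field=True),
    IncludedTrial("T3", in_central=False, in_medline=True, in_embase=False, random_in_any_field=False),
]
missed = [t.trial_id for t in trials if not found_by_brss(t)]
print("Missed by BRSS:", missed)  # prints: Missed by BRSS: ['T3']
```

Trials that the function rejects, such as T3, correspond to the "missing" RCTs whose omission was then tested in the meta-analyses.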

Results

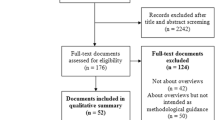

There were 78 new reviews, of which 57 met the inclusion criteria. They included a total of 920 trials, of which 79% (725) were journal articles. The remaining 21% were from the grey literature, 80% of these being conference abstracts.

Twenty-one reviews were excluded from our study: 14 because they did not find any RCTs that met their inclusion criteria, and seven because they included other study designs in addition to RCTs.

Determination of the proportion of journal articles found using the BRSS

It was found that 93.3% (677) of the 725 journal articles included in the systematic reviews were in CENTRAL. It was assumed that these were all RCTs, but this was not checked due to the large numbers involved (and it was not relevant to the aims of the study). This left 48 journal articles not indexed in CENTRAL.

The full texts of all 48 articles were obtained and checked, with non-English articles translated (apart from the four in Japanese and five in Chinese). Forty were RCTs and the remaining eight used non-RCT study designs (the untranslated articles were assumed to be RCTs). Eleven of the 40 RCTs were found by searching MEDLINE or EMBASE with 'random$.af.'. Therefore, 29 of the 717 RCTs (4%) would not have been found with the BRSS.
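The sensitivity figure follows directly from these counts. The short calculation below reproduces it; like the text, it treats the 677 articles in CENTRAL, and the nine untranslated articles among the 40, as RCTs.

```python
# Counts reported in the Results.
in_central = 677           # journal articles in CENTRAL, assumed to be RCTs
rcts_not_in_central = 40   # includes the 9 untranslated articles assumed to be RCTs
found_by_random_af = 11    # of those 40, retrieved by random$.af.

total_rcts = in_central + rcts_not_in_central   # 717
found = in_central + found_by_random_af         # 688
missed = total_rcts - found                     # 29

sensitivity = found / total_rcts
print(f"Sensitivity {sensitivity:.1%}; missed {missed} ({missed / total_rcts:.1%})")
# prints: Sensitivity 96.0%; missed 29 (4.0%)
```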

Details of the 29 RCTs not found by the BRSS

The 29 trials were distributed over 12 reviews. Ten were in MEDLINE and 11 in EMBASE only. Twelve were in languages other than English.

In two reviews [6, 7], each with one missing trial, the data used in the meta-analyses were also available in other papers that were in CENTRAL and included in the reviews. Zhang 1990 [8] was confirmed (by the authors of the review) to be the same trial as Chang 1996 [9], and the data in the Stensrud trial [10] were also reported in another paper by Stensrud [11].

This leaves 27 missing journal articles in 10 reviews, each of which contains at least one trial with data not found by the BRSS. Table 1 shows the details of these trials and the impact of excluding them from the reviews.

Only one review did not do a meta-analysis [12]. The nine remaining reviews did a total of 129 meta-analyses of various outcomes. In five of the 10 reviews, the missing trials made no significant difference: there were no clinically significant changes in effect size, and no change in whether results were statistically significant. Hence, omitting the missing trials made some difference in only five reviews.

The impact of the 'missing trials' being excluded from the reviews

Two consequences of missing data were found:

1) In three reviews [13–15], the missing trials were the only ones providing data for seven (of a total of 35) outcomes, so no data would have been available for these seven outcomes. In a fourth review [16], omitting one trial would remove 92% of the patients for two of 28 outcomes, but this did not change the significance or direction of the result.

2) In three reviews [15–17], three of 74 outcomes lost statistical significance because of wider confidence intervals; one of these three was not a clinical outcome.

Comparison of retrieval of HSSS and random$.af. in MEDLINE

For the period 1966 to October 2004, the HSSS retrieved 2,505,742 records in MEDLINE, compared with 399,208 records for 'random$.af.'. Searching with 'random$.af.' therefore retrieved only 16% as many records as the HSSS.
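The 84% reduction in MEDLINE retrieval is the complement of this ratio; the calculation below uses only the two counts reported above.

```python
hsss_records = 2_505_742       # MEDLINE records retrieved by the HSSS, 1966 to Oct 2004
random_af_records = 399_208    # records retrieved by random$.af. over the same period

ratio = random_af_records / hsss_records
print(f"random$.af. retrieves {ratio:.1%} of the HSSS total, a reduction of {1 - ratio:.1%}")
# prints: random$.af. retrieves 15.9% of the HSSS total, a reduction of 84.1%
```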

CONSORT and the change over time in the proportion of RCTs with random$ in title or abstract

Using the 725 journal articles in our sample, we compared the frequency of random$ in the title or abstract between pre-CONSORT trials (published up to 1996) and post-CONSORT trials (published from 1997 onwards). Figure 1 shows the change in the proportion of RCTs with 'random$' in the title or abstract, with a three-year moving-average trendline added.
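A three-year moving average smooths year-to-year noise in the annual proportions. The sketch below assumes a trailing window (each point averages a year and the two years before it); whether the published trendline was trailing or centred is an assumption, and the yearly proportions are invented for illustration.

```python
def moving_average(values, window=3):
    """Trailing moving average: output i averages values[i - window + 1 .. i]."""
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [sum(values[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(values))]


# Invented annual proportions of RCTs with random$ in the title or abstract.
years = [1994, 1995, 1996, 1997, 1998, 1999, 2000]
proportions = [0.60, 0.66, 0.70, 0.78, 0.82, 0.86, 0.88]

window = 3
trend = moving_average(proportions, window)
# The first smoothed value belongs to the window-th year.
for year, value in zip(years[window - 1:], trend):
    print(year, round(value, 3))
```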

In the post-CONSORT years, 1997–2003, the term appeared in 85% of titles or abstracts, compared with 68% in the seven years before CONSORT (1990–96); the mean difference was 17 percentage points (95% CI 8.3 to 25.9; p = 0.001). Over a longer pre-CONSORT period, 1980 to 1996, the proportion was similar, at 62%.
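The method used to obtain this interval is not stated. One common approach, assumed here purely for illustration, is a pooled-variance two-sample t interval comparing the seven annual proportions before CONSORT with the seven after it (12 degrees of freedom). The annual values below are invented, so the output will not match the reported figures, and `mean_difference_ci` is a hypothetical helper.

```python
import math
from statistics import mean, variance

T_975_DF12 = 2.1788  # two-sided 95% critical value of Student's t with 12 df


def mean_difference_ci(post, pre, t_crit):
    """Difference in means (post - pre) with a pooled-variance t interval.
    t_crit must correspond to len(post) + len(pre) - 2 degrees of freedom."""
    n1, n2 = len(post), len(pre)
    diff = mean(post) - mean(pre)
    # statistics.variance uses the n - 1 (sample) denominator.
    pooled_var = ((n1 - 1) * variance(post) + (n2 - 1) * variance(pre)) / (n1 + n2 - 2)
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return diff, diff - t_crit * se, diff + t_crit * se


# Invented annual proportions (seven years on each side of CONSORT).
pre_consort = [0.62, 0.70, 0.65, 0.72, 0.66, 0.69, 0.72]
post_consort = [0.80, 0.83, 0.88, 0.84, 0.86, 0.87, 0.87]

diff, lower, upper = mean_difference_ci(post_consort, pre_consort, T_975_DF12)
print(f"Mean difference {diff * 100:.1f} percentage points "
      f"(95% CI {lower * 100:.1f} to {upper * 100:.1f})")
```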

Discussion

Using the BRSS, rather than the much more exhaustive HSSS, to retrieve RCTs in journal articles for Cochrane reviews affected only a very small percentage of the outcomes in a few reviews, and made no important difference to the conclusions of these reviews. The most affected review had four of its five included trials published in Chinese [14].

The CONSORT statement appears to be associated with a significant increase in the frequency of 'random' in the titles and abstracts of journal articles. It is uncertain whether this is directly due to CONSORT, or whether CONSORT simply accelerated a pre-existing trend and raised awareness among authors and editors of the need for better reporting of RCTs. Either way, this improved description of RCTs by authors should make it more likely that RCTs are indexed with the appropriate publication type in MEDLINE, and should also increase the sensitivity of the BRSS.

The BRSS had a sensitivity of 96% for RCTs in journal articles. Compared to the HSSS, the BRSS reduced the MEDLINE retrieval by 84%. This would represent a major time and cost saving in manual screening.

The strengths of this study are, first, that we used Cochrane reviews, which approximate the 'gold standard' in searching because exhaustive searches are required. Hence we can be fairly certain that we started with as comprehensive a set of included trials as possible. Second, we quantified the impact of omitting trials not found with the simplified search.

A weakness of this study was that we could not check whether the RCTs would have been in CENTRAL at the time the reviewers did their searching, and hence could not exclude the possibility that some RCTs were first identified by the reviewers and then passed to CENTRAL. However, given the extensive searching routinely done for CENTRAL, it is highly likely that most RCTs would be identified sooner or later, and would therefore be included in a subsequent update of the review.

A range of subject areas was included, which helps with generalisability, though it may decrease power in any one subject area. However, a recent study on identifying high-quality RCTs in pain relief gives general support to our findings [18]. It investigated the efficiency of the search strategy DBRCT.af. ("double-blind", "random", or variations of these terms) in MEDLINE and EMBASE, and found it to have a sensitivity of 97%.

This study focused only on a simplified search strategy for retrieving RCTs in journal articles, since these made up the large majority (79%) of the references used in Cochrane reviews, and are the most important source in terms of the quality and quantity of assessable data. By contrast, grey literature, which consists mostly of meeting abstracts, usually gives very limited data. However, CENTRAL includes many grey literature trials, and these will be identified by the BRSS.

There are currently over 2200 Cochrane reviews, all of which will need to be maintained. Authors may be more willing to update their reviews if they can be confident that a simple search will identify RCTs comprehensively. A simplified search may also be useful for reviews done on a tight timescale, or for clinicians who want a rapid but reliable answer to a question. There is a case for the 'not quite perfect but rapid, easy and almost complete' search.

In practice, some of the few trials missed by the BRSS were small or of poor quality. As Egger and colleagues have reported [19], the last few studies found by exhaustive searching may be of poor quality, and so could introduce bias.

The issue is whether the marginal benefits of exhaustive searching justify the extra costs. When the inclusion criteria demand only RCTs, this study suggests that, in the era of CENTRAL and CONSORT, exhaustive searching is no longer cost-effective.

References

Dickersin K, Manheimer E, Wieland S, Robinson KA, Lefebvre C, McDonald S: Development of the Cochrane Collaboration's CENTRAL Register of controlled clinical trials. Eval Health Prof. 2002, 25: 38-64. 10.1177/0163278702025001004.

Alderson P, Green S, Higgins JP, editors: Cochrane Reviewers' Handbook 4.2.2 [updated March 2004]. In: The Cochrane Library, Issue 2, 2004; Section 5. 2004, Chichester, UK, John Wiley & Sons, Ltd

Begg C, Cho M, Eastwood S, Horton R, Moher D, Olkin I, Pitkin R, Rennie D, Schulz KF, Simel D, Stroup DF: Improving the quality of reporting of randomized controlled trials. The CONSORT statement. JAMA. 1996, 276: 637-639. 10.1001/jama.276.8.637.

Moher D, Schulz KF, Altman D, CONSORT Group (Consolidated Standards of Reporting Trials): The CONSORT statement: revised recommendations for improving the quality of reports of parallel-group randomized trials. JAMA. 2001, 285: 1987-1991. 10.1001/jama.285.15.1987.

Moher D, Jones A, Lepage L: Use of the CONSORT statement and quality of reports of randomized trials: a comparative before-and-after evaluation. JAMA. 2001, 285: 1992-1995. 10.1001/jama.285.15.1992.

Linde K, Rossnagel K: Propranolol for migraine prophylaxis. Cochrane Database Syst Rev. 2004, 2: CD003225-

Badger C, Preston N, Seers K, Mortimer P: Benzo-pyrones for reducing and controlling lymphoedema of the limbs. Cochrane Database Syst Rev. 2004, 2: CD003140-

Zhang D: Benzo-pyrones in the treatment of chronic lymphoedema of the arms and legs. Zhonghua Yi Xue Za Zhi. 1990, 70: 655-657.

Chang TS, Gan JL, Fu KD, Huang WY: The use of 5,6 benzo-[alpha]-pyrone (coumarin) and heating by microwaves in the treatment of chronic lymphedema of the legs. Lymphology. 1996, 29: 106-111.

Stensrud P, Sjaastad O: Comparative trial of Tenormin (atenolol) and Inderal (propranolol) in migraine. Headache. 1980, 20: 204-207. 10.1111/j.1526-4610.1980.h2004006.x.

Stensrud P, Sjaastad O: Comparative trial of Tenormin (atenolol) and Inderal (propranolol) in migraine. Ups J Med Sci Suppl. 1980, 31: 37-40.

Schroeder K, Fahey T, Ebrahim S: Interventions for improving adherence to treatment in patients with high blood pressure in ambulatory settings. Cochrane Database Syst Rev. 2004, 2: CD004804-

Marshall M, Lockwood A: Early Intervention for psychosis. Cochrane Database Syst Rev. 2004, 2: CD004718-

Wu HM, Tang JL, Sha ZH, Cao L, Li YP: Interventions for preventing infection in nephrotic syndrome. Cochrane Database Syst Rev. 2004, 2: CD003964-

Koning S, Verhagen AP, Suijlekom-Smit LW, Morris A, Butler CC, van der Wouden JC: Interventions for impetigo. Cochrane Database Syst Rev. 2004, 2: CD003261-

Rees K, Bennett P, West R, Davey SG, Ebrahim S: Psychological interventions for coronary heart disease. Cochrane Database Syst Rev. 2004, 2: CD002902-

Allen SJ, Okoko B, Martinez E, Gregorio G, Dans LF: Probiotics for treating infectious diarrhoea. Cochrane Database Syst Rev. 2004, 2: CD003048-

Chow TK, To E, Goodchild CS, McNeil JJ: A simple, fast, easy method to identify the evidence base in pain-relief research: validation of a computer search strategy used alone to identify quality randomized controlled trials. Anesth Analg. 2004, 98: 1557-1565. 10.1213/01.ANE.0000114071.78448.2D.

Egger M, Juni P, Bartlett C, Holenstein F, Sterne J: How important are comprehensive literature searches and the assessment of trial quality in systematic reviews? Empirical study. Health Technol Assess. 2003, 7: 1-76.

Badger C, Seers K, Preston N, Mortimer P: Antibiotics / anti-inflammatories for reducing acute inflammatory episodes in lymphoedema of the limbs. Cochrane Database Syst Rev. 2004, CD003143-

Kasseroller R: Erysipelprophylaxe beim sekundaren lymphoedem mit selen. Der Allgemeinartzt. 1996, 3: 244-247.

Dretzke J, Toff WD, Lip GY, Raftery J, Fry-Smith A, Taylor R: Dual chamber versus single chamber ventricular pacemakers for sick sinus syndrome and atrioventricular block. Cochrane Database Syst Rev. 2004, 2: CD003710-

Davis MJ, Mundin HA, Mews GC, Cope GD: Functional benefits of physiologic compared with ventricular pacing in complete heart block. Clin Prog Electrophysiol Pacing. 1985, 3: 457-460.

Linszen D, Dingemans PM, Lenior ME, Scholte WF, Goldstein M: Early family and individual interventions and relapse in recent-onset schizophrenia and related disorders. Ital J Psychiatry Behav Sci. 1998, 8: 77-84.

Linszen DH, Dingemans PMAJ, Scholte WF, Lenior ME, Goldstein M: Early recognition, intensive intervention and other protective and risk factors for psychotic relapse in patients with first psychotic episodes in schizophrenia. Int Clin Psychopharmacol. 1998, 13: S7-S12.

Arata J, Kanzaki H, Kanamoto A, Okawara A, Kato N, Kumakiri M, Shimizu T, Ishibashi Y, Nogita T, Iozumi K, Harada S, Nakanishi H, Hyang YS, Okouchi H, Takano S, Ohara K, Ota T, Shishiba T, Nakabayashi Y: A double blind comparative study of cefdinir and cefaclor in skin and skin structure infections. Chemotherapy. 1989, 37: 1016-1042.

Arata J, Yamamoto Y, Tamaki H, Ookawara A, Fukaya T, Ishibashi Y, Shimozuma M, Iozumi T, Kukita A, Kimura Y, Takahashi H, Sasaki J, Nishiwaki M, Urushibata O, Tomizawa T, Eto H, Kurihara S, Morohashi M, Seki T: Double-blind study of lomefloxacin vs. norfloxacin in the treatment of skin and soft tissue infections. Chemotherapy. 1989, 37: 482-503.

Bass JW, Chan DS, Creamer KM, Thompson MW, Malone FJ, Becker TM, Marks SN: Comparison of oral cephalexin, topical mupirocin and topical bacitracin for treatment of impetigo. Pediatr Infect Dis J. 1997, 16: 708-710. 10.1097/00006454-199707000-00013.

Koranyi KI, Burech DL, Haynes RE: Evaluation of bacitracin ointment in the treatment of impetigo. Ohio State Medical Journal. 1976, 72: 368-370.

Moraes-Barbosa AD: Comparative study between topical 2% sodium fusidate and oral association of chloranphenicol/neomycin/bacitracin in the treatment of staphylococcic impetigo in new-born. Arq Bras Med. 1986, 60: 509-511.

Park SW, Wang HY, Sung HS: A study for the isolation of the causative organism, antimicrobial susceptibility tests and therapeutic aspects in patients with impetigo. Korean J Dermatol. 1993, 31: 312-319.

Pruksachatkunakorn C, Vaniyapongs T, Pruksakorn S: Impetigo: an assessment of etiology and appropriate therapy in infants and children. J Med Assoc Thai. 1993, 76: 222-229.

Sutton JB: Efficacy and acceptability of fusidic acid cream and mupirocin ointment in facial impetigo. Curr Ther Res. 1992, 51: 673-678.

Tamayo L, De la Luz OM, Sosa de Martinez MC: Rifamycin and mupirocin in the treatment of impetigo. Dermatol Rev Mex. 1991, 35: 99-103.

Gabriel M, Gagnon JP, Bryan CK: Improved patients compliance through use of a daily drug reminder chart. Am J Public Health. 1977, 67: 968-969.

Hamilton GA, Roberts SJ, Johnson JM, Tropp JR, Anthony-Odgren D, Johnson BF: Increasing adherence in patients with primary hypertension: an intervention. Health Value. 1993, 17: 3-11.

Kerr JA: Adherence and self-care. Heart Lung. 1985, 14: 24-31.

Morisky DE, DeMuth NM, Field-Fass M, Green LW, Levine DM: Evaluation of family health education to build social support for long-terms control of high blood pressure. Health Education Quarterly. 1985, 12: 35-50.

Rehder TL, McCoy LK, Blackwell B, Whitehead W, Robinson A: Improving medication compliance by counseling and special prescription container. Am J Hosp Pharm. 1980, 37: 379-385.

Dou ZY, Wang JY, Liu YP: Preventive efficiency of low-dose IVIgc on infection in nephrotic synrome. Chin J Biologicals. 2000, 13: 160-

Li RH, Peng ZP, Wei YL, Liu CH: Clinical observation on Chinese medicinal herbs combined with predisone for reducing the risks of infection in children with nephrotic syndrome. Inf J Chin Med. 2000, 7: 60-61.

Zhang YJ, Wang Y, Yang ZW, Li XT: Clinical investigation of thymosin for preventing infection in children with primary nephrotic syndrome. Chin J Contemp Pediatr. 2000, 2: 197-198.

Tong LZ, Mi LZ: Preventive efficiency of IVIgG on secondary nosocomial in nephrotic syndrome. Mod Rehabil. 1998, 2: 236-

Sugita T, Togawa M: Efficacy of Lactobacillus preparation biolactis powder in children with rotavirus enteritis. Jpn J Pediatr. 1994, 47: 2755-2762.

Gallacher JE, Hopkinson CA, Bennett P, Burr ML, Elwood PC: Effect of stress management on angina. Psychol Health. 1997, 12: 523-532.

Mitsibounas DN, Tsouma-Hadjis ED, Rotas VR, Sideris DA: Effect of group psychosocial intervention on coronary risk factors. Psychother Psychosom. 1992, 58: 97-102.

Siebenhofer A, Plank J, Berghold A, Narath M, Gfrerer R, Pieber TR: Short acting insulin analogues versus regular human insulin in patients with diabetes mellitus. Cochrane Database Syst Rev. 2004, 2: CD003287-

Iwamoto Y, Akanuma Y, Niimi H, Sasaki N, Tajima N, Kawamori R: Comparison between insulin aspart and soluble human insulin in type 1 diabetes (IDDM) patients treated with basal-bolus insulin therapy - Phase III clinical trial in Japan. J Japan Diab Soc. 2001, 44: 799-811.

Duhmke RM, Cornblath DD, Hollingshead JR: Tramadol for neuropathic pain. Cochrane Database Syst Rev. 2004, 2: CD003726-

Leppert W: Analgesic efficacy and side effects of oral tramadol and morphine administered orally in the treatment of cancer pain. Nowotwory. 2001, 51: 257-266.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/5/23/prepub

Acknowledgements

We thank Mark Deakin and Lynda Bain for help with data extraction.

Additional information

Competing interests

The author(s) declare that they have no competing interests.

Authors' contributions

PR conceived and designed the study, and analysed the data. NW helped with interpretation and with drafting the paper. Both authors approved the final version.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Royle, P., Waugh, N. A simplified search strategy for identifying randomised controlled trials for systematic reviews of health care interventions: a comparison with more exhaustive strategies. BMC Med Res Methodol 5, 23 (2005). https://doi.org/10.1186/1471-2288-5-23

DOI: https://doi.org/10.1186/1471-2288-5-23