Abstract

Background

A discrete choice experiment (DCE) is a preference survey which asks participants to make a choice among product portfolios comparing the key product characteristics by performing several choice tasks. Analyzing DCE data needs to account for within-participant correlation because choices from the same participant are likely to be similar. In this study, we empirically compared some commonly-used statistical methods for analyzing DCE data while accounting for within-participant correlation based on a survey of patient preference for colorectal cancer (CRC) screening tests conducted in Hamilton, Ontario, Canada in 2002.

Methods

A two-stage DCE design was used to investigate the impact of six attributes on participants' preferences for CRC screening tests and willingness to undertake the test. We compared six models for clustered binary outcomes (logistic and probit regressions using cluster-robust standard error (SE), random-effects and generalized estimating equation approaches) and three models for clustered nominal outcomes (multinomial logistic and probit regressions with cluster-robust SE and random-effects multinomial logistic model). We also fitted a bivariate probit model with cluster-robust SE treating the choices from two stages as two correlated binary outcomes. The rank of relative importance between attributes and the estimates of the β coefficients within attributes were used to assess the model robustness.

Results

In total, 468 participants, each completing 10 choice tasks, were analyzed. Similar results were reported for the rank of relative importance and β coefficients across models for stage-one data on evaluating participants' preferences for the test. The six attributes ranked from high to low as follows: cost, specificity, process, sensitivity, preparation and pain. However, the results differed across models for stage-two data on evaluating participants' willingness to undertake the tests. Little within-patient correlation (ICC ≈ 0) was found in stage-one data, but substantial within-patient correlation existed (ICC = 0.659) in stage-two data.

Conclusions

When the clustering effect in DCE data was small, results remained robust across statistical models. However, results varied when the clustering effect was larger. Therefore, it is important to assess the robustness of the estimates via sensitivity analysis using different models for analyzing clustered data from DCE studies.

Background

With increased emphasis on the role of patients in healthcare decision making, discrete choice experimental (DCE) designs are more often used to elicit patient preferences among proposed health services programs [1, 2]. DCE is an attribute-based design drawn from Lancaster's economic theory of consumer behaviour [3] and the statistical principles of the design of experiments [4]. This method measures consumer preference according to McFadden's random utility (benefit) maximisation (RUM) framework amongst a choice set which contains two or more alternatives of products or goods varying along several characteristics (attributes) of interest. In the early 1980s, Louviere, Hensher and Woodworth [5, 6] introduced DCE into marketing research, and since then DCE has been rapidly adopted by researchers in other areas such as transportation, environment and social science. Its applications in health research emerged in the early 1990s, and it has been increasingly used to evaluate patient preferences for currently available and newly-proposed health services or programs in health economics and policy-making related topics. For example, in the health economics related research area, 34 published studies used DCE design in the period from 1990 to 2000, and 114 DCE design studies were published in the period from 2001 to 2008 [7].

In the short history of using DCE in health research, there have been several reviews [7–9] and debates about methodological and design issues, challenges and future development [10–12]. In generating a DCE study, three major formats of the choice design have frequently been used: i) a forced choice between two alternatives, ii) a choice among three or more alternatives with an opt-out option, and iii) a two-stage choice process which first forces participants to choose one of the alternatives and then provides an opt-out option that allows participants to say no to all proposed products [13]. Despite the rapid developments in design aspects [12, 14], less attention has been paid to statistical analysis and model selection issues. Lancaster and Louviere [15] and Ryan et al. [13] discussed several statistical models used for DCE including the multinomial logistic model (MNL), multinomial probit model (MNP), and mixed logit model (MIXL). However, these studies did not provide detailed comparisons amongst competing models, or a clear indication of how best to deal with model selection issues. Another aspect related to the analysis of DCE data is adjustment for clustering effects. For example, in a DCE survey, it is common to ask participants to respond to several choice tasks in one survey. Each choice task has the same format but different attribute combinations. Naturally, the choices made by the same person would be expected to be more similar than the choices of different persons, leading to within-participant correlation of responses. This within-subject correlation caused by the clustering effects or repeated observations needs to be accounted for in the analysis [16]. It is often measured using the intra-class correlation coefficient (ICC), where ICC = 0 indicates no intra-person correlation and ICC = 1 indicates perfect intra-person correlation.

In this paper, we empirically compared some commonly-used statistical models which also account for the clustering effects in DCE analysis. We assessed the robustness (consistency and discrepancy) of the models on ranking of the relative importance between the attributes and the estimates of the β coefficients within each level of the attributes.

The data we used were taken from the preference survey on colorectal cancer (CRC) screening tests conducted in Hamilton, Ontario, Canada in 2002 [17]. This project used a two-stage choice design. Thus, the data structure allowed us to investigate the statistical models for analyzing binary, nominal and bivariate outcomes for DCE data.

Methods

Overview of the CRC screening project

The Canadian Cancer Society reported in 2011 that CRC is the fourth most commonly diagnosed cancer and the second leading cause of cancer death in Canada [18]. According to the same report, the estimates of new cases of CRC and CRC-related deaths in 2011 were 22,200 (50 per 100,000 persons) and 8,900 (20 per 100,000 persons), respectively. Although CRC has a high incidence rate, patients have a better chance of successful treatment if the diagnosis is made earlier. Although a population-based CRC screening program is highly recommended for people over 50 years of age [19, 20], the uptake rate in North America is only about 50% [21]. Therefore, better understanding of patient preferences for screening tests may be the key to the successful implementation and uptake of CRC screening programs. This survey was the first conducted in Canada to evaluate patient preferences for various CRC screening tests to identify the key attributes and levels that may influence CRC screening test uptake.

Traditional CRC screening modalities such as fecal occult blood testing (FOBT), flexible sigmoidoscopy (SIG), colonoscopy (COL) and double-contrast barium enema (DCBE) vary in their process, accuracy, comfort and cost [22]. In this survey, five important attributes (features) of the screening tests were identified through review of the literature, consultation with clinical specialists and patient focus groups. They were: process (4 levels), pain (2 levels), preparation (3 levels), specificity (3 levels) and sensitivity (3 levels). In addition, cost (4 levels) was included due to its potential influence on the uptake (Table 1). To reduce the burden on respondents of making choices among the 864 (4 × 2 × 3 × 3 × 3 × 4) unique combinations of a full factorial design, we used a fractional factorial design. In this design, 40 choice tasks were divided into four blocks to create a subset of 10 choice tasks of the attribute combinations for each survey participant to evaluate. The original design was developed using the SAS Optex procedure and optimized several measures of efficiency: 1) level balance; 2) orthogonality; and 3) D-efficiency [17, 23]. This design ensured the ability to estimate the main effects of the attributes while minimizing the number of combinations. No prior information on the ranking of attributes was available from the literature at the time the study was designed. The survey used the pair-wise binary two-stage response design [24] with the choice between two choice sets of the attributes at different levels as the first step and the addition of an opt-out option as the second step (Table 2). This design maximized the information gained through the questionnaire to understand patient preferences on the CRC screening tests and the factors affecting the uptake rate. However, the analysis presented challenges. First, the answers were likely to cluster within subjects because each subject made two sequential choices for each of ten choice tasks. Therefore, a statistical model adjusting for within-subject correlation for repeated measurements was needed. Second, in the original paper, the analysis was done using the bivariate probit model, but the analysis could be approached using different methods: treating the responses at the two stages as independent responses, as sequential and correlated bivariate responses, or as a single response with three levels (Test A, Test B or No screening).
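As a quick check of the design arithmetic above, the following sketch enumerates the full factorial design and splits 40 tasks into four blocks. The attribute names and level counts come from the text. The integer level codes are placeholders (an assumption, since the actual level labels appear only in Table 1), and the block split merely mirrors the layout described, not the optimized design produced by the SAS Optex procedure.

```python
from itertools import product

# Number of levels per attribute, as stated in the text.
LEVELS = {
    "process": 4,
    "pain": 2,
    "preparation": 3,
    "specificity": 3,
    "sensitivity": 3,
    "cost": 4,
}


def full_factorial(levels):
    """Enumerate every combination of attribute levels (placeholder integer codes)."""
    names = list(levels)
    ranges = [range(levels[name]) for name in names]
    return [dict(zip(names, combo)) for combo in product(*ranges)]


def split_into_blocks(n_tasks, n_blocks):
    """Assign task indices to equally sized blocks (illustrative, not an optimized design)."""
    size = n_tasks // n_blocks
    return [list(range(b * size, (b + 1) * size)) for b in range(n_blocks)]


profiles = full_factorial(LEVELS)
blocks = split_into_blocks(40, 4)
print(len(profiles))             # number of unique profiles in the full factorial
print([len(b) for b in blocks])  # number of choice tasks per block
```

The count of enumerated profiles matches the 864 combinations quoted above, which illustrates why a fractional design with 10 tasks per participant was needed.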

Outcomes

Given the data structure of the two-stage design, we took three analytic approaches. 1) Analyze the two-stage sequential choices of each choice task separately, i.e. as binary outcomes: a) subject preferences on the screening modalities, which only included patient responses at the first stage, and b) subject willingness to participate in the screening program, which only included subjects' responses at the second stage. 2) Treat the two-stage data as three parallel choice options, Test A, Test B and "opt-out", i.e. as nominal data. 3) Treat the two-stage data as two correlated binary choice sets, i.e. as bivariate outcomes. Figure 1 presents the data structure of the original design and these three analysis approaches.

Random utility theory

As mentioned above, the DCE design is generally based on random utility theory [25], which expresses the utility (benefit) $U_{in}$ of an alternative $i$ in a choice set $C_n$ (as perceived by individual $n$) as the sum of two parts: 1) an explainable component specified as a function of the attributes of the alternatives, $V(X_{in}, \beta)$; and 2) an unexplainable component (random variation), $\varepsilon_{in}$.

The individual $n$ will choose alternative $i$ over the other alternatives if and only if this alternative yields the highest utility. The relationship between the utility function and the $k$ observed attributes of the alternatives can be assumed to follow a linear-in-parameters function.

The model specification for DCE data varies according to the distribution assumed for the error term $\varepsilon_{in}$.
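For reference, the decomposition, choice rule and linear-in-parameters form described in this subsection can be written out as follows. This is a sketch in standard random utility notation. The symbols $x_{ink}$ (the value of attribute $k$ for alternative $i$ as faced by individual $n$), $V_{in}$ (shorthand for $V(X_{in}, \beta)$) and $P_n(i)$ (the probability that individual $n$ chooses alternative $i$) are introduced here for illustration and are assumptions about notation.

```latex
\begin{align}
U_{in} &= V(X_{in}, \beta) + \varepsilon_{in}, \\
n \text{ chooses } i &\iff U_{in} \ge U_{jn} \quad \text{for all } j \in C_n, \\
V_{in} = V(X_{in}, \beta) &= \beta_1 x_{in1} + \beta_2 x_{in2} + \dots + \beta_k x_{ink}, \\
P_n(i) &= \Pr\left( \varepsilon_{jn} - \varepsilon_{in} \le V_{in} - V_{jn} \ \text{for all } j \in C_n,\ j \ne i \right).
\end{align}
```

The last line shows where the error distribution enters: IID extreme value errors give the logit family of models, while normally distributed errors give the probit family.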

Statistical methods

The statistical models discussed in this paper were organized according to the type of outcomes: i) logistic and probit models for binary outcomes, ii) multinomial logistic and probit models for nominal outcomes, and iii) bivariate probit model for bivariate binary outcomes. We provide some details on how the different statistical techniques account for the within-cluster correlation in analyzing clustered DCE data.

For the binary type of outcomes, we examined six statistical models which have the capacity to account for the within-patient correlation [26, 27]: logistic regression with clustered robust standard error, random-effects logistic regression, logistic regression using generalized estimating equations (GEE), probit regression with clustered robust standard error, random-effects probit regression, and probit regression using GEE. Below are some brief descriptions of the methods.

Standard logistic regression and standard probit regression

Both standard logistic and probit regressions assume that the observations are independent. However, in our dataset, each subject completed ten choice tasks, i.e. each subject had ten observations (choice tasks) which formed a cluster or can be considered repeated measurements. Normally, observations in the same cluster are more similar (correlated) than observations from different clusters. Therefore, adjusting for the correlation within the cluster is necessary. We used three methods to adjust for the within-cluster correlation.

Clustered robust standard error

In this method, the independence assumption is relaxed for observations within the same cluster, while observations from different clusters are still assumed to be independent. The total variance is empirically estimated using the Huber-White (also called sandwich) standard error [28]. This method takes the intra-class correlation into account only through the standard errors, and the degrees of freedom are still based on the number of observations, not the number of clusters [29]. Therefore, this method adjusts only the standard errors, and hence the confidence intervals, while the point estimates are left unchanged.
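The point that the cluster-robust approach changes the standard error but not the point estimate can be illustrated with a deliberately simplified case. The sketch below uses an intercept-only linear model (the estimate is just the mean response), not the logistic or probit models fitted in this study, and applies no small-sample correction. The function name and the toy data are hypothetical.

```python
from math import sqrt


def naive_and_cluster_robust_se(clusters):
    """Return (mean, naive SE, cluster-robust SE) for an intercept-only model.

    `clusters` is a list of lists; each inner list holds one participant's
    0/1 responses. No small-sample correction is applied (an assumption).
    """
    values = [y for cluster in clusters for y in cluster]
    n = len(values)
    mean = sum(values) / n  # point estimate: identical under both SE types

    # Naive SE treats all n observations as independent.
    naive_var = sum((y - mean) ** 2 for y in values) / (n - 1) / n

    # Sandwich SE: sum the residuals within each cluster first, then square.
    cluster_scores = [sum(y - mean for y in cluster) for cluster in clusters]
    robust_var = sum(s ** 2 for s in cluster_scores) / n ** 2

    return mean, sqrt(naive_var), sqrt(robust_var)


# Hypothetical data: four participants, five tasks each, perfectly consistent answers.
toy = [[1] * 5, [0] * 5, [1] * 5, [0] * 5]
mean, naive_se, robust_se = naive_and_cluster_robust_se(toy)
print(mean, round(naive_se, 3), round(robust_se, 3))
```

With strongly clustered responses such as these, the robust standard error is noticeably larger than the naive one, while the estimated mean is the same in both cases.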

Random-effects method

In this method, the total variance has two components: between-cluster variance and within-cluster variance. We assume that, at the cluster level, data follow a normal distribution with mean zero and between-cluster variance τ²; and that within each cluster, data vary according to some within-cluster variance [30]. This method takes both types of variance into account when estimating the total variance, and the degrees of freedom are calculated based on the number of clusters [31]. Therefore, the point estimates and their corresponding variances are adjusted for intra-cluster correlation. For the covariance structure, we assumed equal variances for the random effects and a common pairwise covariance [32]. This structure corresponds to the exchangeable correlation structure specified for the GEE method, which we describe below. The key difference between the random-effects method and the other methods discussed here is that the random-effects method estimates parameters conditional on the cluster, that is, for subjects within a cluster or for clusters sharing the same random effect. Therefore, the random-effects estimate is often called a subject-specific effect [33].
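The link between the two variance components and the ICC can be sketched for random-intercept binary models. On the latent scale, the within-cluster variance is fixed by the link function (π²/3 for logistic errors, 1 for standard normal errors), so the ICC depends only on the between-cluster variance τ². The function names are hypothetical, and whether the ICC values reported in this paper were computed on this latent scale is an assumption.

```python
from math import pi


def latent_icc(tau_sq, link="logit"):
    """Latent-scale ICC for a random-intercept binary model.

    The within-cluster (residual) variance is fixed by the link:
    pi^2 / 3 for the logistic distribution, 1 for the standard normal.
    """
    residual = {"logit": pi ** 2 / 3, "probit": 1.0}[link]
    return tau_sq / (tau_sq + residual)


def tau_sq_from_icc(icc, link="logit"):
    """Invert latent_icc: the between-cluster variance implied by a given ICC."""
    residual = {"logit": pi ** 2 / 3, "probit": 1.0}[link]
    return icc * residual / (1.0 - icc)


print(latent_icc(0.0))                   # no between-cluster variance
print(round(tau_sq_from_icc(0.659), 2))  # variance implied by an ICC of 0.659 on the logit scale
```

Under this definition, an ICC near zero corresponds to a between-cluster variance near zero, in which case the random-effects model collapses toward the standard model.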

GEE method

This method allows a working correlation matrix to be specified to adjust for the within-cluster correlation. We assumed that there was no ordering effect among the observations in each cluster, allowing us to use an exchangeable correlation matrix [34]. As in the random-effects method, the degrees of freedom are based on the number of clusters, which in turn adjusts the estimate of the confidence interval [35]. Unlike the random-effects method, the GEE approach estimates the regression parameters averaged over the clusters (the so-called population-average model) [36].
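An exchangeable working correlation matrix has a simple form: every pair of observations from the same participant shares one common correlation. A minimal sketch for a cluster of ten choice tasks follows. The function name and the value of the correlation are hypothetical, and in a real GEE fit the correlation is estimated from the data rather than supplied.

```python
def exchangeable_corr(cluster_size, rho):
    """Working correlation matrix with 1 on the diagonal and rho elsewhere."""
    return [
        [1.0 if i == j else rho for j in range(cluster_size)]
        for i in range(cluster_size)
    ]


# Ten choice tasks per participant; rho = 0.3 is a hypothetical value.
R = exchangeable_corr(10, 0.3)
print(len(R), R[0][0], R[0][1], R[9][2])
```

Because the matrix does not depend on the order of the tasks, it encodes the no-ordering-effect assumption stated above.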

For the nominal type of outcomes, we used three statistical models [37]: multinomial logistic model with clustered robust standard error, random-effects multinomial logistic model, and multinomial probit model with clustered robust standard error. We also fitted a bivariate probit model in which the choices from two stages were treated as two binary outcomes [38].

Multinomial logistic model

McFadden's conditional logit model (CLM), also called the multinomial logistic (MNL) model, was the pioneering and most commonly used model in the early DCE studies [39]. The key assumption of this model is that the error terms $\varepsilon_{in}$ are independent and identically distributed (IID) [13], which leads to the independence of irrelevant alternatives (IIA) property [40]. Another assumption for this model is that the error term has an extreme value distribution with mean 0 and variance π²/6 [37]. To take the intra-class correlation into account, the clustered robust SE was used.
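The IIA property can be made concrete with a small numerical sketch. Under IID extreme value errors, the choice probabilities take the closed form exp(V_i) / Σ_j exp(V_j), so the odds between any two alternatives do not depend on which other alternatives are offered. The utility values below are hypothetical and are not estimates from this study.

```python
from math import exp


def mnl_probabilities(utilities):
    """Choice probabilities under IID extreme-value errors (softmax of V)."""
    m = max(utilities.values())  # subtract the max for numerical stability
    weights = {alt: exp(v - m) for alt, v in utilities.items()}
    total = sum(weights.values())
    return {alt: w / total for alt, w in weights.items()}


# Hypothetical systematic utilities for the three response options.
three_way = mnl_probabilities({"Test A": 0.8, "Test B": 0.3, "No screening": -0.5})
two_way = mnl_probabilities({"Test A": 0.8, "Test B": 0.3})

# IIA: the odds of Test A versus Test B do not depend on the third option.
odds_three = three_way["Test A"] / three_way["Test B"]
odds_two = two_way["Test A"] / two_way["Test B"]
print(round(odds_three, 6), round(odds_two, 6))
```

The two printed odds agree, which is exactly the restriction that models such as the multinomial probit are designed to relax.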

Random-effects multinomial logistic model

Similar to the random-effects models used for analyzing binary outcomes, this model takes two levels of variance, between-cluster variance and within-cluster variance, into account for clustered or longitudinal nominal responses [41, 42].

Multinomial probit model

The multinomial probit (MNP) model, a heteroscedastic model, is considered to be one of the most robust, flexible and general models in DCE, especially when correlation (heteroscedasticity) between alternatives is present [43]. The model assumes a normally distributed error term. The benefit of using the MNP model is that the IIA assumption, which is a strict requirement for the MNL model, can be relaxed to some extent [37]. The main concern in using this model is that its estimation requires Monte Carlo simulation rather than analytical maximization, which can impose a computational burden. Again, the clustered robust SE was used to incorporate the intra-class correlation.

Bivariate probit model

In this model, we assume that the choices at the two stages (stage 1: choice between screening tests; stage 2: choice between participation and opt-out) are not independent. That is, a subject's choice as to whether or not to participate in the screening program is assumed to depend on the subject's preference for the screening modalities [44]. By fitting this model, two types of correlation can be taken into account: the correlation between the outcomes from stage 1 and stage 2, incorporated through the bivariate nature of the model itself, and the intra-class correlation, incorporated through use of the cluster robust SE.
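The data-generating idea behind a bivariate probit model can be sketched by simulation: each stage has its own latent utility, and the two normally distributed error terms are correlated. The code below only simulates such data to show how error correlation produces dependence between the two binary outcomes. It does not fit the model, and the function names, parameter values and seed are all hypothetical.

```python
import random
from math import sqrt


def simulate_bivariate_probit(n, v1, v2, rho, seed=0):
    """Draw n pairs of binary outcomes from correlated latent normal utilities.

    y1 = 1 if v1 + e1 > 0 and y2 = 1 if v2 + e2 > 0, where (e1, e2) are
    standard normal with correlation rho.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        z1 = rng.gauss(0.0, 1.0)
        z2 = rng.gauss(0.0, 1.0)
        e1 = z1
        e2 = rho * z1 + sqrt(1.0 - rho ** 2) * z2  # induces corr(e1, e2) = rho
        pairs.append((int(v1 + e1 > 0), int(v2 + e2 > 0)))
    return pairs


def agreement_rate(pairs):
    """Share of draws in which the two binary outcomes coincide."""
    return sum(y1 == y2 for y1, y2 in pairs) / len(pairs)


# Hypothetical values: zero systematic utility, with and without error correlation.
correlated = agreement_rate(simulate_bivariate_probit(20000, 0.0, 0.0, rho=0.8))
independent = agreement_rate(simulate_bivariate_probit(20000, 0.0, 0.0, rho=0.0))
print(correlated, independent)
```

With uncorrelated errors the two outcomes agree about half the time, while a positive error correlation pushes the agreement rate well above that, which is the dependence the bivariate model is meant to capture.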

To assess the necessity of accounting for the intra-class correlation for analyzing clustered correlated DCE data, we also presented the results from the above models using simple standard error (SE)--which does not take clustering into account. They are the standard logistic, probit, multinomial logistic, multinomial probit and bivariate probit models.

We compared results from the above models on the following criteria: rank on the relative importance of the attributes, and magnitude, direction and significance of the estimates of the β coefficients within each level of the attributes, which were obtained by regressing preference onto the difference in attributes between the two choices. The ranking criterion was measured by the percent change between the log-likelihood value of the full model and the value after removing one specific attribute from the model [45]. To evaluate the significance of the estimates of the β coefficients within each attribute, the criterion for statistical significance was set at alpha = 0.05. All statistical models were fitted using STATA 10.2 (College Station TX) and the figures were plotted using PASW Statistics 19 (SPSS: An IBM Company).
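The ranking criterion described above can be sketched as a small helper. The exact formula used in [45] is not reproduced in this paper, so the percent change computed here (absolute change relative to the full-model log-likelihood) is an assumption, and the log-likelihood values are hypothetical rather than results from the study.

```python
def rank_by_loglik_change(full_loglik, reduced_logliks):
    """Rank attributes by percent change in log-likelihood when each is dropped.

    `reduced_logliks` maps an attribute name to the log-likelihood of the
    model refitted without that attribute.
    """
    changes = {
        name: abs(reduced - full_loglik) / abs(full_loglik) * 100.0
        for name, reduced in reduced_logliks.items()
    }
    ranking = sorted(changes, key=changes.get, reverse=True)
    return ranking, changes


# Hypothetical log-likelihood values, not results from the study.
ranking, changes = rank_by_loglik_change(
    -3000.0,
    {"cost": -3150.0, "specificity": -3090.0, "pain": -3003.0},
)
print(ranking)
```

An attribute whose removal changes the log-likelihood the most is ranked as the most important. Because this criterion uses only the log-likelihood, it is unaffected by the choice between simple and cluster-robust standard errors.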

Results

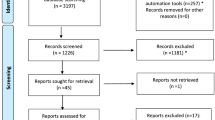

A random sample of 1,170 patients was selected from a roster of 9,959 patients aged 40-60 years from the Hamilton Primary Care Network. After excluding the patients who did not meet the inclusion criteria, questionnaires were mailed to 1,049 patients. Of these, 547 were returned and 485 had complete data. Among the patients with complete data, we excluded 17 patients who did not pass the rationality test, which consisted of two warm-up choice tasks. In these warm-up tasks, one alternative dominated the other by possessing all favourable attribute levels, and respondents who did not choose the dominant alternative were considered to have failed the rationality test. Finally, we analyzed the data for 468 patients (Figure 2) from four blocks with block sizes of 105, 124, 120 and 119 respectively.

The mean age of the subjects was 50.8 years (standard deviation, 5.95 years), which was similar to the recommended age to start CRC screening [46]. Of the 468 included subjects, about 48% were female, 12% had family history of CRC and two patients (0.2%) had been diagnosed with CRC. The detailed demographic characteristics are presented in Table 3.

For the two-point outcomes (binary), the rank of the attributes on the choice of Test A and Test B was consistent across models. From most important to least important, they ranked as follows: cost, specificity, process, sensitivity, preparation and pain (Figure 3). With the exception of the random-effects logistic and probit models, the ranking (from most important to least important) of the six attributes for assessing participation or opt-out (stage-two) was as follows: cost, sensitivity, preparation, process, specificity and pain. The ranking from random-effects models was: cost, sensitivity, process, specificity, preparation and pain (Figure 4). For the three-point outcomes (nominal and bivariate) in which the choices of Test A, Test B and opt-out were estimated simultaneously, the attributes were ranked consistently: cost, sensitivity, specificity, process, preparation and pain (Figure 5). Compared with the models using simple SE, using clustered robust SE to incorporate intra-class correlation did not have any effect on the calculated relative importance of attributes.

When looking at how certain levels of each attribute affected the choice between Test A and Test B (stage-one), the estimates of the β coefficients were similar in magnitude and direction across different statistical models. The most preferred screening test had the following features: stool sample, no preparation, 100% specificity, 70% sensitivity, without pain and with an associated cost of $50. The least preferred screening test had the combination of colonoscopy, special diet for preparation, 80% specificity, 90% sensitivity, with mild pain and no associated cost (Table 4 and Table 5).

When assessing the impact of certain levels of each attribute on patient choice between participation and opt-out (stage-two), the β coefficient estimates for 90% sensitivity and no preparation showed a significantly positive effect on uptake, and this was consistent across all models. For other attributes and levels, results appeared similar within each of three groups of models: the random-effects and GEE logistic models and the random-effects and GEE probit models (Table 6); MNL with clustered robust SE, MNL random-effects and MNP with clustered robust SE (Table 7); and logistic with clustered robust SE, probit with clustered robust SE and bivariate probit (Table 6 and Table 7). The following two examples show that the estimates across models could differ in magnitude and direction. The magnitude of the estimated effect of 90% sensitivity varied by model, but the direction was similar across all models. When comparing the cost of $50 to no cost, the logistic and probit random-effects and GEE models reported that participants preferred no cost. MNL with clustered robust SE, MNL random-effects and MNP with clustered robust SE reported that participants preferred the $50 cost. For other models, no statistically significant differences were found (Figure 6). We also found that, unlike the results from the stage-one data (Table 4 and Table 5), for the stage-two data there was a noticeable difference between the β coefficient estimates from the models with and without incorporating the intra-class correlation (Table 6 and Table 7).

When assessing the clustering effect, we found that intra-class correlation was small among the stage-one data (ICC ≈ 0) and relatively large among the stage-two data (ICC = 0.659). For this survey, it appears that many patients had predetermined their participation in CRC screening. For example, among the 468 participants included in the analyses, 48% always chose to undertake the screening program and 15% always chose no participation regardless of how the screening modalities varied at the first stage. Although Test A and Test B were generic terms for combinations of the different levels of the six attributes and they were randomly assigned to appear first or second in a choice task, we found that 24% more participants chose Test A over Test B. These design limitations had some impact on our interpretation of the analysis results.

Discussion

We applied six statistical models to binary outcomes, three models to nominal multinomial outcomes and one model to bivariate binary outcomes to estimate the ranking of key attributes of CRC screening tests using data from a DCE survey conducted in Hamilton, Ontario, Canada in 2002. We used three methods to adjust for the within-cluster correlations: clustered robust standard error, random-effects, and GEE methods. The results showed consistent answers for estimating subject preference for CRC screening tests, both on ranking the importance of the attributes and identifying the significant factors influencing subject choice between testing modalities. For estimating subject willingness to participate in or undertake CRC screening (i.e. incorporating the "opt-out" option), models disagreed both on ranking the importance of the attributes and identifying the significant factors (i.e. attributes and levels) affecting whether or not subjects would participate.

Overall, our analyses showed that participants preferred a CRC screening test with the following characteristics: stool sample, no preparation, 100% specificity, 70% sensitivity and without pain. The CRC test with such a combination of attribute levels would be the FOBT test [18]. Thus, our findings appear to be consistent with the results from Nelson and Schwartz's survey in 2004 [47] which showed FOBT to be the most preferred option for CRC screening. In that survey, they also reviewed 12 previous studies, all of which showed FOBT to be a preferred choice by most patients.

The reason for the consistency in estimating the choice between screening tests and the discrepancy in estimating the choice between participation and "opt-out" might be due to the models' ability to adjust for the within-participant (cluster) correlation. When the within-cluster correlation is small (choice between Test A and Test B), the assumption of an independently and identically distributed error term $\varepsilon_{in}$ holds. Therefore, it might not be necessary to take the clustering effects into account and thus the estimates are similar across statistical models. However, when intra-class correlation is present, the analysis needs to account for both the within-cluster variance and between-cluster variance [48].

To the best of our knowledge, this is the first empirical study to compare different methods to address the within-participant correlation in the analysis of DCE data. However, many authors have emphasized the importance of adjusting for clustering in analysis of clustered data or repeated measurements for binary outcomes [49, 50]. When intra-class correlations are present in clustered or longitudinal data, the random-effects and GEE models are two commonly recommended approaches. Although they are estimating different parameters (the estimates from random-effects model are interpreted for the observations in the same cluster; the estimates from GEE model are interpreted as the mean across entire sample), the results from these two models are similar most of the time [41, 51]. Some researchers generally prefer random-effects model when the results from these two approaches disagree. However, some researchers argue that the random-effects model could provide biased results due to unverifiable assumptions about the data distribution [52].

Compared with the models for analyzing correlated binary data, statistical software seldom has ready-to-use statistical models developed for multinomial outcomes or multivariate outcomes. The multinomial probit model is routinely used to deal with correlation between alternatives [53], but it does not take intra-class or intra-respondent correlation into account. Robust standard error can be specified for multinomial logistic or probit and bivariate logistic models to adjust the estimate of standard error, but this would not correct the bias related to point estimates (coefficients). A simulation study has shown that the bias and the inconsistency for estimating the within-cluster correlation increase with the size of the cluster [54]. The newly developed generalized linear latent and mixed model (gllamm) procedure in STATA has the ability to run a random-effects multinomial logistic model [55] to address the intra-class correlation issue, but this model has yet to be evaluated for performance (i.e. whether or not it yields unbiased estimates). Some researchers have suggested using Bayesian hierarchical random-effects logistic and probit regression for clustered or panel data [56]. Although the Bayesian approach allows the flexibility to specify random effects, it requires considerable skill in programming.

This study has some limitations. First, this study is an empirical comparison of the analytic models and therefore we cannot know which model performs the best. Such an analysis would require simulation studies to assess the performance of the models in terms of bias, precision, and coverage. Second, some estimates of the cost attribute in our study were inexplicable. For the cost associated with the test, participants' preference had a non-linear order: $50, $0, $500 and $250. This could be a result of violation of the model assumptions or of model misspecification. Most DCE analyses assume a linear utility function, but some recent studies have shown that this assumption may not be true for price-related attributes. A study of MP3 players found that the utility function of the price and storage size had W-shaped curves rather than smooth linear trends [57]. A local travel mode study also found that the preference for time savings followed a non-linear utility function [58]. Another reason which may cause inaccurate results in our study is the use of the two-stage design. The two-stage design had the advantage of maximizing the information gained by forcing participants to make a choice at the first stage, but it also gave us some artificial information. Third, many respondents in this survey seemed to have predetermined their participation in CRC screening before seeing the questionnaire. This may have caused an unusually high within-cluster correlation when choosing between participation and opt-out. We also suspect that the predetermination might cause the ordering effect [59] when choosing the preferred screening tests. When individuals are forced to make a choice between products which they have decided that they do not want, the answer might not resemble the truth. Therefore, the results need to be interpreted cautiously, and replication from similar studies is needed to better understand participant preferences for CRC screening and the willingness to undertake the screening program.

Conclusion

Responses from the same participant are likely to be more similar than the responses between participants in DCE data, leading to possible intra-class or intra-participant correlation. Therefore, it is important to investigate the size of the intra-class correlation before fitting any statistical model. We found that when within-cluster correlation is very small, all models gave consistent results both on the attribute rankings and on the coefficient estimates. Therefore, the simplest logistic regression and multinomial logistic regression are recommended for their computational ease. The multinomial probit model may be the preferred method of analysis if we assume the existence of correlation between alternatives.

When within-cluster correlation is high, sensitivity analyses are needed to examine the consistency of the results. Instead of making generalized inferences according to the estimate from any single statistical model, results from the sensitivity analyses based on different models can provide some insight about the robustness of the findings.

Our study empirically compared some commonly used statistical models that take intra-class correlation into account when analyzing DCE data. To fully understand the necessity of accounting for intra-class correlation in DCE data, particularly when analyzing nominal outcomes, simulation studies are needed.

Conflict of interest

The authors declare that they have no competing interests.

References

Longo MF, Cohen DR, Hood K, Edwards A, Robling M, Elwyn G, Russell IT: Involving patients in primary care consultations: assessing preferences using discrete choice experiments. Br J Gen Pract. 2006, 56 (522): 35-42.

Ryan M, Major K, Skatun D: Using discrete choice experiments to go beyond clinical outcomes when evaluating clinical practice. J Eval Clin Pract. 2005, 11 (4): 328-338. 10.1111/j.1365-2753.2005.00539.x.

Lancaster KJ: A new approach to consumer theory. J Polit Econ. 1966, 74 (2): 132-157. 10.1086/259131.

Montgomery DC: Design and analysis of experiments. 2000, New York: Wiley, 5

Louviere J, Hensher D: On the design and analysis of simulated choice or allocation experiments in travel choice modelling. Transp Res Rec. 1982, 890: 11-17.

Louviere J, Woodworth G: Design and analysis of simulated consumer choice or allocation experiments: an approach based on aggregate data. J Mark Res. 1983, 20: 350-367. 10.2307/3151440.

de Bekker-Grob EW, Ryan M, Gerard K: Discrete choice experiments in health economics: a review of the literature. Health Econ. 2010, doi:10.1002/hec.1697

Ryan M, Gerard K: Using discrete choice experiments to value health care programs: current practice and future research reflections. Appl Health Econ Health Policy. 2003, 2 (1): 55-64.

Marshall DA, Bridges JFP, Hauber B, Cameron RA, Donnalley L, Fyie KA, Johnson FR: Conjoint analysis applications in health--how are studies being designed and reported? an update on current practice in the published literature between 2005 and 2008. The Patient: Patient-Centered Outcomes Research. 2010, 3: 249-256. 10.2165/11539650-000000000-00000.

Louviere JJ, Lancsar E: Choice experiments in health: the good, the bad, the ugly and toward a brighter future. Health Econ Policy Law. 2009, 4 (Pt 4): 527-546.

Bryan S, Dolan P: Discrete choice experiments in health economics. For better or for worse?. Eur J Health Econ. 2004, 5 (3): 199-202. 10.1007/s10198-004-0241-6.

Louviere JJ, Pihlens D, Carson R: Design of discrete choice experiments: a discussion of issues that matter in future applied research. Journal of Choice Modelling. 2010, 4: 1-8.

Ryan M, Gerard K, Amaya-Amaya M: Using discrete choice experiments to value health and health care. 2008, Dordrecht, The Netherlands: Springer

Bridges JFP, Hauber B, Marshall DA, Lloyd A, Prosser LA, Regier DA, Johnson FR, Mauskopf J: Conjoint analysis applications in health--a checklist: a report of the ISPOR good research practices for conjoint Analysis task force. Value Health. 2011, 14: 403-413. 10.1016/j.jval.2010.11.013.

Lancsar E, Louviere J: Conducting discrete choice experiments to inform healthcare decision making: a user's guide. PharmacoEconomics. 2008, 26 (8): 661-677. 10.2165/00019053-200826080-00004.

Mehndiratta SR, Hansen M: Analysis of discrete choice data with repeated observations: comparison of three Techniques in intercity travel Case. Transp Res Rec. 1997, 1607: 69-10.3141/1607-10.

Marshall DA, Johnson FR, Phillips KA, Marshall JK, Thabane L, Kulin NA: Measuring patient preferences for colorectal cancer screening using a choice-format survey. Value Health. 2007, 10 (5): 415-430. 10.1111/j.1524-4733.2007.00196.x.

Colorectal Cancer Association of Canada. [http://www.colorectal-cancer.ca/en/just-the-facts/colorectal/] and [http://www.colorectal-cancer.ca/en/screening/fobt-and-fit/]

Anonymous From the Centers for Disease Control and Prevention: Colorectal cancer test use among persons aged > or = 50 years-United States, 2001. JAMA. 2003, 289 (19): 2492-2493.

Walsh JM, Terdiman JP: Colorectal cancer screening: scientific review. JAMA. 2003, 289 (10): 1288-1296. 10.1001/jama.289.10.1288.

Slomski A: Expert panel offers advice to improve screening rates for colorectal cancer. JAMA. 2010, 303 (14): 1356-1357. 10.1001/jama.2010.360.

Labianca R, Merelli B: Screening and diagnosis for colorectal cancer: present and future. Tumori. 2010, 96 (6): 889-901.

Kuhfeld WF: Discrete choice (SAS Technical Papers: Marketing research, MR2010F). (Date of last access: January 7, 2012), [http://support.sas.com/techsup/technote/mr2010f.pdf]

Street DJ, Burgess L: Optimal and near-optimal pairs for the estimation of effects in 2-level choice experiments. Journal of Statistics Planning and Inference. 2004, 118: 185-199. 10.1016/S0378-3758(02)00399-3.

Louviere J, Flynn F, Carson R: Discrete choice experiments are not conjoint analysis. Journal of Choice Modelling. 2010, 2 (2): 57-72.

Neuhaus JM: Statistical methods for longitudinal and clustered designs with binary responses. Stat Methods Med Res. 1992, 1 (3): 249-273. 10.1177/096228029200100303.

Pendergast JF, Gange SJ, Newton MA, Lindstrom MJ, Palta M, Fisher MR: A survey of methods for analyzing clustered binary response data. Int Stat Rev. 1998, 64: 89-118.

Huber JS, Ervin LH: Using heteroscedastic consistent standard errors in the linear regression model. Am Stat. 2000, 54: 795-806.

Rogers W: Regression standard errors in clustered samples. Stata Technical Bulletin. 1994, 3: 19-23.

Larsen K, Petersen JH, Budtz-Jørgensen E, Endahl L: Interpreting parameters in the logistic regression model with random effects. Biometrics. 2000, 56: 909-914. 10.1111/j.0006-341X.2000.00909.x.

Hedeker D, Gibbons RD, Flay BR: Random-effects regression models for clustered data with an example from smoking prevention research. J Consult Clin Psychol. 1994, 62 (4): 757-765.

Stata online help. [http://www.stata.com/help.cgi?xtmelogit]

Rabe-Hesketh S, Skrondal A: Multilevel and longitudinal modeling using Stata. 2008, USA: A Stata Press Publication, 2

Shults J, Sun W, Tu X, Kim H, Amsterdam J, Hilbe JM, Ten-Have T: A comparison of several approaches for choosing between working correlation structures in generalized estimating equation analysis of longitudinal binary data. Stat Med. 2009, 28 (18): 2338-2355. 10.1002/sim.3622.

Hanley JA, Negassa A, Edwardes MD, Forrester JE: Statistical analysis of correlated data using generalized estimating equations: an orientation. Am J Epidemiol. 2003, 157 (4): 364-375. 10.1093/aje/kwf215.

Ballinger GA: Using generalized estimating equations for longitudinal data analysis. Organ Res Methods. 2004, 7: 127-150. 10.1177/1094428104263672.

Long JS, Freese J: Regression models for categorical dependent variables using STATA. 2006, Texas, USA: Stata Press, 2

Chib S, Greenberg E: Analysis of multivariate probit models. Biometrika. 1998, 85: 347-361. 10.1093/biomet/85.2.347.

Pizzo E, Pezzoli A, Stockbrugger R, Bracci E, Vagnoni E, Gullini S: Screenee perception and health-related quality of life in colorectal cancer screening: a review. Value Health. 2011, 14 (1): 152-159. 10.1016/j.jval.2010.10.018.

Cheng S, Long J: Testing for IIA in the multinomial logit model. Sociological Methods Research. 2007, 35 (4): 583-600. 10.1177/0049124106292361.

Crouchley R, Ganjali M: A comparison of GEE and random effects models for distinguishing heterogeneity, nonstationarity and state dependence in a collection of short binary event series. Stat Model. 2002, 2: 39-62. 10.1191/1471082x02st022oa.

Hedeker D: A mixed-effects multinomial logistic regression model. Stat Med. 2003, 22 (9): 1433-1446. 10.1002/sim.1522.

Daganzo C: Multinomial probit: the theory and its application to demand forecasting. 1979, New York: Academic

Kaplan D, Venezky RL: Literacy and voting behavior: a bivariate probit model with sample selection. Soc Sci Res. 1994, 23: 350-367. 10.1006/ssre.1994.1014.

Watt DJ, Kayis B, Willey K: The relative importance of tender evaluation and contractor selection criteria. International Journal of Project Management. 2010, 28: 51-60. 10.1016/j.ijproman.2009.04.003.

Heitman SJ, Hilsden RJ, Au F, Dowden S, Manns BJ: Colorectal cancer screening for average-risk North Americans: an economic evaluation. PLoS Med. 2010, 7 (11): e1000370. 10.1371/journal.pmed.1000370.

Nelson RL, Schwartz A: A survey of individual preference for colorectal cancer screening technique. BMC Cancer. 2004, 4: 76. 10.1186/1471-2407-4-76.

Campbell MJ, Donner A, Klar N: Developments in cluster randomized trials and Statistics in Medicine. Stat Med. 2007, 26 (1): 2-19. 10.1002/sim.2731.

Ma J, Thabane L, Kaczorowski J, Chambers L, Dolovich L, Karwalajtys T, Levitt C: Comparison of Bayesian and classical methods in the analysis of cluster randomized controlled trials with a binary outcome: the Community Hypertension Assessment Trial (CHAT). BMC Med Res Methodol. 2009, 9: 37. 10.1186/1471-2288-9-37.

Schukken YH, Grohn YT, McDermott B, McDermott JJ: Analysis of correlated discrete observations: background, examples and solutions. Prev Vet Med. 2003, 59 (4): 223-240. 10.1016/S0167-5877(03)00101-6.

Neuhaus JM, Kalbfleisch JD, Hauck WW: A comparison of cluster-specific and population-averaged approaches for analyzing correlated binary data. Int Stat Rev. 1991, 59: 25-35. 10.2307/1403572.

Hubbard AE, Ahern J, Fleischer NL, Van der Laan M, Lippman SA, Jewell N, Bruckner T, Satariano WA: To GEE or not to GEE: comparing population average and mixed models for estimating the associations between neighborhood risk factors and health. Epidemiology. 2010, 21 (4): 467-474. 10.1097/EDE.0b013e3181caeb90.

Munizaga MA, Heydecker BG, de Dios Ortúzar J: Representation of heteroskedasticity in discrete choice models. Transp Res. 2000, 34: 219-240. 10.1016/S0191-2615(99)00022-3.

Peters TJ, Richards SH, Bankhead CR, Ades AE, Sterne JA: Comparison of methods for analysing cluster randomized trials: an example involving a factorial design. Int J Epidemiol. 2003, 32 (5): 840-846. 10.1093/ije/dyg228.

Heil SF: A review of multilevel and longitudinal modeling using stata. J Educ Behav Stat. 2009, 34: 559-560. 10.3102/1076998609341365.

Burda M, Harding M, Hausman J: A Bayesian mixed logit-probit model for multinomial choice. J Econ. 2008, 147: 232-246.

Ferguson S, Olewnik A, Cormier P: Proceedings of the exploring marketing to engineering information mapping in mass customization: a presentation of ideas, challenges and resulting questions: August 28-31; Washington, DC, USA. 2011, USA: ASME

Kato H: Proceedings of the non-linearity of utility function and value of travel time savings: empirical analysis of inter-regional non-business travel mode choice of Japan: September 18-20; Strasbourg. 2006, European Transport Conference: France

Kjaer T, Bech M, Gyrd-Hansen D, Hart-Hansen K: Ordering effect and price sensitivity in discrete choice experiments: need we worry?. Health Econ. 2006, 15 (11): 1217-1228. 10.1002/hec.1117.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/12/15/prepub

Acknowledgements

The original study was funded by a research grant from the Canadian Institutes for Health Research (MOB-53116) and the Cancer Research Foundation of America. We thank the reviewers for their insightful comments and suggestions that led to improvements in the manuscript.

Additional information

Authors' contributions

JC (chengj2@mcmaster.ca) conducted the literature review, performed the statistical analyses and composed the draft of the manuscript. LT (thabanl@mcmaster.ca) designed the original study, oversaw the statistical analysis and revised the manuscript. EP (pullena@mcmaster.ca) assisted in planning the statistical analyses and revised the manuscript. DAM (damarsha@ucalgary.ca) and JKM (marshllj@mcmaster.ca) designed the original study and revised the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access. This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Cheng, J., Pullenayegum, E., Marshall, D.A. et al. An empirical comparison of methods for analyzing correlated data from a discrete choice survey to elicit patient preference for colorectal cancer screening. BMC Med Res Methodol 12, 15 (2012). https://doi.org/10.1186/1471-2288-12-15

DOI: https://doi.org/10.1186/1471-2288-12-15