Abstract

Background

Accurate monitoring of health conditions and behaviours, and health service usage in the population, using an effective and economical method is important for planning and evaluation. This study examines the reliability of questions asked in a telephone survey by conducting a test/retest analysis of a range of questions covering demographic variables, health risk factors and self-reported chronic conditions among people aged 16 years and over.

Methods

A Computer Assisted Telephone Interviewing (CATI) survey on health issues of South Australians was re-administered to a random sub-sample of 154 respondents 13 to 35 days (mean 17) after the original survey. Test/retest reliability of the questions was assessed using Cohen’s kappa and intraclass correlation coefficients.

Results

Demographic questions (age, gender, number of adults and children in the household, country of birth) showed extremely high reliability (0.97 to 1.00). Health service use (ICC = 0.90, 95% CI 0.86-0.93) and overall health status (Kappa = 0.60, 95% CI 0.46-0.75) displayed moderate agreement. Questions relating to self-reported risk factors such as smoking (Kappa = 0.81, 95% CI 0.72-0.89) and alcohol drinking (ICC = 0.75, 95% CI 0.63-0.83) behaviour showed good to excellent agreement, while questions relating to self-reported risk factors such as time spent walking for physical activity (ICC = 0.47, 95% CI 0.27-0.61), fruit (weighted Kappa = 0.60, 95% CI 0.45-0.76) and vegetable consumption (weighted Kappa = 0.50, 95% CI 0.32-0.69) showed only moderate agreement. Self-reported chronic conditions displayed substantial to almost perfect agreement (0.72 to 1.00) with the exception of moderate agreement for heart disease (Kappa = 0.82, 95% CI 0.57-0.99).

Conclusion

These results show the questions assessed to be reliable in South Australia for estimating health conditions and monitoring health related behaviours using a CATI survey.

Background

Telephone interviews are an effective and economical way to monitor health behaviours in the population. Data used in the planning and monitoring of health services and disease prevalence in populations should be as accurate as possible and assessing the reliability of questions is one way that accuracy or precision of the questions can be assessed and bias minimised. The aim of reliability testing is to make sure the responses to questions provide similar results and this is especially important if the questions are used in an on-going monitoring or surveillance system.

Several papers have addressed the reliability of questions in telephone health survey questionnaires conducted by the Behavioral Risk Factor Surveillance System (BRFSS) in the United States [1–6]. The reliability tests of the BRFSS questionnaires have addressed a range of demographic variables and health risk factors, as well as specific issues such as ethnic minorities and women's health [6]. Demographic variables were found to have the highest reproducibility along with self-reported health, with health risk factors and ‘poor’ health days slightly less reliable although still at an acceptable level [1–5]. Knowledge or attitudinal variables were found to have lower reliability [2].

There remains a paucity of published reliability studies of Australian telephone health survey questionnaires. We previously published a reliability study of a telephone survey of South Australians (SA) in 1997 [7], and reported comparable findings to the BRFSS. Demographic questions showed the highest reproducibility; questions regarding health risk factors, such as smoking and alcohol consumption, showed substantial to almost perfect agreement; and chronic condition variables were substantially reproducible where prevalence estimates were not close to zero. In addition, Brown et al [8] assessed the reliability and validity of the Active Australia physical activity questionnaire and other physical activity measures and reported moderate to high levels of agreement. Nutrition questions administered by self-completion have also been tested, with the General Nutrition Knowledge Questionnaire having high test-retest reliability [9]. Other relevant Australian studies have focused on children [10–13], older people [14, 15], and on specific patient types (eg cataract) [16].

The South Australian Monitoring and Surveillance System (SAMSS) [17] is a chronic disease and risk factor surveillance system operated by the South Australian Department of Health. The aim of this study is to examine the reliability of questions asked in the SAMSS by conducting a test/retest analysis of a range of questions covering demographic variables, health risk factors and chronic condition variables among people aged 16 years and over.

Methods

Study design

SAMSS is designed to systematically monitor the trends of diseases, health related problems, risk factors and other health services issues for all ages over time for the SA health system. This is a telephone monitoring system using the Computer Assisted Telephone Interview (CATI) method to detect emerging trends, to assist in the planning and evaluation of health policies and programs, and to assess progress in primary prevention activities. Data collected includes demographics, health risk and protective factors and chronic conditions.

Interviews are conducted on a minimum of 600 randomly selected people (of all ages) each month. All households in SA with a telephone connected and the telephone number listed in the Electronic White Pages (EWP) are eligible for selection in the sample. A letter introducing the survey is sent to the selected household and the person with the last birthday is chosen for interview. There are no replacements for non-respondents. Up to ten call backs are made to the household to interview the selected persons. Interviews are conducted by trained health interviewers.

Data are weighted by area (metropolitan/rural), age, gender and probability of selection in the household to the most recent SA population data so that the results are representative of the SA population. For participants aged less than 16 years, data are collected from an adult in the household, who has been nominated by a household member as the most appropriate person to answer questions on the child’s behalf.

Test/retest study design

For this test/retest study, respondents were eligible to participate if they agreed to be re-contacted to obtain further information regarding a health issue or in the event of a serious public health problem (n = 567) and were aged 16 years and over (n = 551). The retest was conducted on a random sample of the eligible participants from the November 2009 SAMSS survey (n = 495). Based on the literature [3, 7, 18] and costs, at least a third of the eligible sample was to be approached for re-interview to obtain a sample size of at least 150 interviews. Call-back interviews started 14 days after the first interview, with a mean of 16.8 days (range 13 to 35 days). The same contact intensity that was used for the first interview was used for the second.

For the test/retest study, a selection of questions was asked of respondents (Table 1). Demographic variables included age, sex, country of birth [19], number of people living in the household aged 16 years and over, and aged 15 years or less. Health-related variables included current health status (SF1), risk and protective behaviours (body mass index [20] derived from height and weight, smoking status, alcohol consumption [21], nutrition intake, walking), and self-reported conditions (ever had high blood pressure or cholesterol, cardiovascular disease, asthma, osteoporosis, arthritis or diabetes).

The initial interviews took an average of 19 minutes (range 3 to 42 minutes) and each test/retest interview took an average of 5 minutes (range 3 to 11 minutes).

Data analyses

Response rates were calculated for both surveys. Those who participated in the test/retest study were compared to the initial sample of SAMSS participants aged 16 years and over. For each categorical variable, the observed agreement (percentage of respondents who agree with themselves) and expected agreement (percentage of respondents who would be expected to agree with themselves by chance) were calculated. Cohen's kappa statistic (κ) [22, 23] was used to calculate reliability for categorical variables; weighted kappa (κw) [24], with a user-defined weighting system, was used for ordinal variables (eg. alcohol risk); and Intraclass Correlation Coefficients (ICC) were used for continuous variables (eg. age, BMI). 95% confidence intervals (CI) were calculated for each measure of agreement.

Kappa is a measure of agreement beyond that expected by chance, calculated as (observed agreement - chance agreement)/(1 - chance agreement). The kappa statistic is affected by prevalence (very low or very high) and by skewed data, both of which can produce low values of kappa. Agreement results for continuous variables based on the ICC should also be interpreted with caution: the ICC is a function of the range of the continuous variable being assessed, and a larger range increases the ICC independently of the actual differences between the measures being compared.

Reliability values of less than 0.20 were considered ‘poor’ agreement, between 0.21 and 0.40 ‘fair’, between 0.41 and 0.60 ‘moderate’, between 0.61 and 0.80 ‘good’ and between 0.81 and 1.00 ‘excellent’ agreement [25]. Data from the CATI system were analysed in SPSS version 17.0 [26] and Stata (Version 9.0) [27].
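The kappa calculation and the agreement bands described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the SPSS or Stata routines used in the study: the function names `cohen_kappa` and `agreement_band` and the example table are hypothetical, and treating a value of exactly 0.20 as ‘poor’ is an assumption, since the text leaves that boundary open.

```python
from typing import List


def cohen_kappa(table: List[List[int]]) -> float:
    """Cohen's kappa for a square test-by-retest contingency table.

    table[i][j] is the number of respondents who gave category i at the
    first interview and category j at the retest.
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    # Observed agreement: share of respondents on the diagonal.
    p_o = sum(table[i][i] for i in range(k)) / n
    # Chance agreement: products of matching marginal proportions.
    row_tot = [sum(table[i]) for i in range(k)]
    col_tot = [sum(table[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_tot[i] * col_tot[i] for i in range(k)) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


def agreement_band(value: float) -> str:
    """Map a reliability value to the bands quoted in the text."""
    if value <= 0.20:  # assumption: exactly 0.20 is counted as 'poor'
        return "poor"
    if value <= 0.40:
        return "fair"
    if value <= 0.60:
        return "moderate"
    if value <= 0.80:
        return "good"
    return "excellent"


# Hypothetical yes/no item answered by 100 respondents at both interviews.
example = [[40, 10],
           [5, 45]]
kappa = cohen_kappa(example)
print(f"kappa = {kappa:.2f} ({agreement_band(kappa)})")  # kappa = 0.70 (good)
```

In this made-up table the observed agreement is 85% and the chance agreement is 50%, so kappa is (0.85 - 0.50)/(1 - 0.50) = 0.70.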

Ethical approval for the project was obtained from University of Adelaide (ethics approval number H-182-2009). All participants gave informed consent.

Results

Overall n = 626 respondents participated in SAMSS in November 2009 (response rate = 64.9%), with n = 495 (89.8%) of the people aged 16 years and over (n = 551) willing to be recontacted. Every third participant was selected to be recontacted (n = 165). In total 154 (93.3%) of those who were selected to be recontacted were reinterviewed.

Overall, 57.8% of participants in the retest study were female and the average age was 58 years (range 16 to 93). Table 2 displays the demographic differences between those who were reinterviewed (n = 154) and the remainder (n = 397): those who did not want to be recontacted, those who were eligible but not selected, and those who were eligible and selected but not reinterviewed.

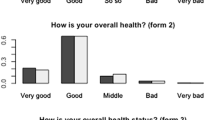

Table 3 presents estimates of reliability for all assessed variables. In general, reproducibility of demographic characteristics was extremely high: age (ICC 1.00), gender (κ 1.00), country of birth (κ 0.98), number of people in household aged 16+ (ICC 0.97) and number of people in household aged less than 16 years (ICC 0.99). Health service use displayed excellent agreement with a correlation coefficient of 0.90. Overall health status displayed moderate agreement beyond chance (κw 0.60) but the observed agreement was 85.8%, which indicated that the kappa statistic was affected by the low proportion reporting ‘poor’ health.

Overall health conditions displayed excellent observed agreements (92% to 100%) and good to excellent agreement beyond chance. Data on diabetes showed the highest reliability with a kappa value of 1.00. Reliability was lowest for heart disease (κ 0.53) but had excellent observed agreement (96.8%) which would be due to the low prevalence of the condition (3.9% and 3.2%). Similarly other health conditions had kappa values (good to excellent agreement beyond chance) between 0.72 (asthma) and 0.80 (arthritis), and had excellent observed agreements. Again, the low prevalence of cardiovascular diseases (heart attack, angina and stroke) and osteoporosis affected the kappa statistic when the observed and expected agreements were excellent.
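The effect of low prevalence on kappa can be illustrated with a small Python sketch. The cell counts below are not taken from the study's tables: they are one set of whole numbers for n = 154 that happens to reproduce the reported heart disease prevalences (3.9% and 3.2%) and observed agreement (96.8%). The second table is purely hypothetical and keeps the same five discordant answers but places them in a condition with roughly 50% prevalence. The function name `kappa_2x2` is likewise an assumption.

```python
def kappa_2x2(both_yes, yes_no, no_yes, both_no):
    """Return (observed agreement, kappa) for a yes/no test-retest table."""
    n = both_yes + yes_no + no_yes + both_no
    p_o = (both_yes + both_no) / n
    yes_first = (both_yes + yes_no) / n    # prevalence at first interview
    yes_retest = (both_yes + no_yes) / n   # prevalence at retest
    # Chance agreement from the two sets of marginal proportions.
    p_e = yes_first * yes_retest + (1 - yes_first) * (1 - yes_retest)
    return p_o, (p_o - p_e) / (1 - p_e)


# Illustrative counts consistent with the reported margins (assumed cells).
low_prevalence = kappa_2x2(both_yes=3, yes_no=3, no_yes=2, both_no=146)
# Hypothetical condition with the same 5 discordant answers, ~50% prevalence.
balanced = kappa_2x2(both_yes=75, yes_no=3, no_yes=2, both_no=74)

for label, (p_o, kappa) in [("low prevalence", low_prevalence),
                            ("balanced", balanced)]:
    print(f"{label}: observed agreement {p_o:.3f}, kappa {kappa:.2f}")
# low prevalence: observed agreement 0.968, kappa 0.53
# balanced: observed agreement 0.968, kappa 0.94
```

With identical observed agreement, kappa falls from about 0.94 to about 0.53 solely because chance agreement is already about 93% when almost everyone answers 'no' at both interviews.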

Overall health risk factors displayed fair to excellent agreement with the highest being a correlation coefficient value of 0.98 for BMI and lowest being a correlation coefficient of 0.47 for the total time (minutes) walking per week. Overall protective factors displayed fair to moderate agreement beyond chance in serves of vegetables per day (κw 0.50) and serves of fruit per day (κw 0.60).
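For ordinal items such as serves of fruit or vegetables per day, weighted kappa gives partial credit to near-misses. The sketch below is a minimal illustration that assumes linear agreement weights; the study used a user-defined weighting system that is not specified here, so the weights, the function name `weighted_kappa` and the 3x3 table of counts are all hypothetical.

```python
def weighted_kappa(table, weights=None):
    """Weighted kappa for a square table of ordered categories.

    weights[i][j] is the credit (0 to 1) given when the first answer is
    category i and the retest answer is category j. If omitted, linear
    weights are assumed: 1 on the diagonal, falling with distance.
    """
    k = len(table)
    n = sum(sum(row) for row in table)
    if weights is None:
        weights = [[1 - abs(i - j) / (k - 1) for j in range(k)]
                   for i in range(k)]
    row = [sum(table[i]) / n for i in range(k)]
    col = [sum(table[i][j] for i in range(k)) / n for j in range(k)]
    # Weighted observed and chance-expected agreement.
    p_o = sum(weights[i][j] * table[i][j] / n
              for i in range(k) for j in range(k))
    p_e = sum(weights[i][j] * row[i] * col[j]
              for i in range(k) for j in range(k))
    return (p_o - p_e) / (1 - p_e)


# Hypothetical counts: three ordered categories of serves per day,
# first interview (rows) by retest (columns).
serves = [[20, 5, 0],
          [4, 30, 6],
          [0, 5, 30]]
print(f"weighted kappa = {weighted_kappa(serves):.2f}")
```

Because every disagreement in this made-up table is between adjacent categories, the weighted value is higher than the unweighted kappa computed from the same counts.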

Discussion

SAMSS has contributed to the monitoring of departmental issues, key risk factors and population trends in priority chronic disease and related areas, thereby guiding investments, identifying target groups, providing important program and policy information, and assessing outcomes. Potential bias from differing probabilities of selection in the sample is addressed by weighting by age, gender, probability of selection in the household, and area of residence to the most recent estimates of residential population, derived from census data by the Australian Bureau of Statistics. Additional bias may result if the questions are not reliable, that is, if the questionnaire does not always produce the same response when repeatedly administered to the same respondents. This study, undertaken to assess this source of bias, found substantial to almost perfect reliability for the SAMSS questions that were tested. These findings are consistent with published literature on BRFSS survey questions [1–6] and a previous reliability study of health survey questions asked in South Australia [7].

The high level of reliability for demographic variables reflects survey administration protocols which ensured that the respondent was in fact the original respondent, resulting in excellent agreement beyond chance on sex and age. The small variation in household composition would be expected in a random sample of the population over such a short time frame. This finding is consistent with our previous reliability study [7] and a BRFSS study [3].

It is reasonable to expect some variation between surveys for behaviour-related variables such as physical activity, smoking or alcohol consumption, with a change between surveys leading to a lower level of agreement for these variables. This retest was performed a minimum of 13 days following the initial survey (mean 16.8; SD 3.6). This interval was long enough that respondents were not expected to remember the answers they gave to the first survey, yet short enough that answers were unlikely to change significantly, making it possible to consider the two surveys independent. Despite this reasoning, real changes could have occurred between interviews, which would weaken the reliability estimates. Alternatively, differences could be the result of social desirability and the subjective need to report knowledge rather than actual behaviour. This could explain the lower kappa scores for smoking status (κ 0.81), smoking situation at home (κ 0.59) and alcohol consumption (reliability values from 0.66 to 0.75). Our result for smoking status, which had an observed agreement of 89.0%, is similar to our previous study (κ 0.92) and to other studies with kappa values for current smoking of 0.85 [3], 0.90 [4] and 0.83 [5]. Smoking situation at home had moderate agreement beyond chance (κ 0.59) but the observed agreement (96.1%) was excellent. This low kappa value would be due to the responses to the categories being close to 0% or 100%, which affects the kappa statistic. The results for alcohol consumption had moderate agreement beyond chance, which was similar to our previous finding [7]. Similar to smoking, the risk of harm to health due to alcohol consumption had a weighted kappa value of 0.66 but high observed agreement of 95.0%. This suggests that smoking status and alcohol consumption are relatively reliable, given the excellent observed agreement despite the moderate kappa values.

The total time estimated walking had the lowest level of agreement (ICC 0.47), which was similar to another Australian study which had a reliability score of 0.56 [28], our previous study (κ = 0.54, 95% CI 0.37-0.70) and another BRFSS study [4] with kappa values of 0.54 for sedentary lifestyle and 0.56 for inactivity. The fair agreement could be due to a real change in total time walking between the two interviews or to poor recall of the actual time spent walking. It should be noted that outliers can greatly influence the ICC, producing low values, and that converting the values to categories can result in higher reliability values [29].
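The sensitivity of the ICC to outliers can be shown with a short Python sketch. The form of ICC used in the study is not stated, so a one-way random-effects ICC for two measurements per respondent is assumed here; the function name `icc_oneway` and the walking times (minutes per week) are hypothetical.

```python
def icc_oneway(pairs):
    """One-way random-effects ICC for (test, retest) pairs.

    Assumed form: (MSB - MSW) / (MSB + (k - 1) * MSW) with k = 2
    measurements per respondent.
    """
    n, k = len(pairs), 2
    grand = sum(a + b for a, b in pairs) / (n * k)
    means = [(a + b) / 2 for a, b in pairs]
    # Between-respondent and within-respondent sums of squares.
    ss_between = k * sum((m - grand) ** 2 for m in means)
    ss_within = sum((a - m) ** 2 + (b - m) ** 2
                    for (a, b), m in zip(pairs, means))
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))
    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Hypothetical walking times: answers at first interview and at retest.
consistent = [(60, 70), (120, 110), (0, 10), (200, 180), (90, 90), (30, 45)]
# The same respondents plus one person who reports 30 and then 600 minutes.
with_outlier = consistent + [(30, 600)]

icc_consistent = icc_oneway(consistent)
icc_outlier = icc_oneway(with_outlier)
print(f"ICC without outlier: {icc_consistent:.2f}")
print(f"ICC with one outlier: {icc_outlier:.2f}")
```

A single respondent with very different answers at the two interviews is enough to take the ICC from close to 1 to close to 0 in this small made-up sample, which is the kind of influence described above.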

Similarly, questions relating to self-reported risk factors such as fruit and vegetable consumption showed only fair to moderate agreement beyond chance, which could be due to changes in behaviour following the first interview. However, in both cases the number of serves appeared to decrease following the first survey. It is also possible that participants modified their answers the second time by providing socially desirable or knowledge-based answers as a result of a major advertising campaign, Go for 2&5®, that was conducted in SA and other states to promote the recommended 2 serves of fruit and 5 serves of vegetables per day. Alternatively, participants may not be able to recall all of the fruit or vegetables consumed per day or to quantify fruit and vegetables into serve sizes.

Somewhat unexpected is the excellent reliability for height, weight and BMI (ICC ranging from 0.97 to 1.00), and the excellent observed agreement (97.9%) and agreement beyond chance in the derived BMI categories (κw 0.93). These results are consistent with our previous study, in which the weighted kappa value for the BMI categories was 0.89 (95% CI 0.84-0.92) [7], and a BRFSS study which reported excellent reliability for height, weight and BMI (Pearson’s r 0.84 to 0.94). We have previously addressed the reliability of self-reported height and weight when compared to clinic measurements [30], but this study has shown that BMI, as a broad measurement of adiposity, is reliable when collecting self-reported data over the telephone.

The questions relating to chronic condition variables, and high blood pressure and cholesterol, displayed excellent observed agreement and good to excellent agreement beyond chance, with the exception of heart disease. As stated previously, the low prevalence of heart disease affects the kappa statistic even though the observed and expected agreements were shown to be excellent. Hence the questions used to obtain prevalence estimates on health conditions, and high blood pressure and cholesterol, are reliable in telephone surveys. Health service use displayed excellent agreement, although one would expect some variation over the time period of this study. Overall health status had moderate agreement beyond chance (κ 0.60) but good observed agreement (85.8%). The kappa value is lower than in a BRFSS study (κ 0.75). The low kappa value found in this study could be partly because the respondent’s health could have changed between the two time periods and partly because of the low proportion reporting ‘poor’ health, which affects the kappa statistic.

Weaknesses of the study include the possible bias from a willingness to participate, although comparison with the SAMSS November sample does not indicate this to be the case. In addition, the retest data were not weighted, so any prevalence estimates should be used with the utmost caution. Although telephones are connected to a large number of Australian households, not all are listed in the EWP (mobile-only households and silent numbers). In 2008 in South Australia, 9% of households were mobile-only and 69% of households did not have their landline or mobile number listed in the EWP [31]. Previous work undertaken in 1999 has shown that inclusion of unlisted landline numbers in the sampling did not impact on the health estimates [32]. However, mobile-only households are increasing in South Australia, following international trends, and a very small proportion (7%) elect to have their mobile number listed in the EWP [31]. Presently, the effect of excluding this group from the current sampling frame may be small in relation to the health estimates obtained using the EWP. However, the characteristics of people living in mobile-only households are distinctly different and the rise in the proportion of mobile-only households is not uniform across all groups in the community. Given these sampling issues, there is potential bias in the results obtained in this study.

The response rate of nearly 65% is moderately acceptable for this type of survey but the potential for survey non-response bias is acknowledged. Response rates are declining in surveys based on all forms of interviewing [33] as people have become more active in protecting their privacy. The growth of telemarketing has disillusioned the community and diminished the success of legitimate social science research by means of telephone-based surveys.

Conclusion

This study has shown that there is a high level of reliability associated with the 20 questions routinely asked in a regular SA risk factor and chronic disease surveillance system. Although high reliability levels were apparent, it should be remembered that this does not equate to high validity. Further studies are required to establish the validity of some of the questions asked in telephone surveys such as SAMSS. Nevertheless, we conclude that these questions provide a reliable tool for assessing these indicators using the telephone as the data collection method.

Abbreviations

- SA: South Australia
- SAMSS: South Australian Monitoring and Surveillance System
- CATI: Computer Assisted Telephone Interviewing
- EWP: Electronic White Pages
- BMI: Body mass index
- BRFSS: Behavioral Risk Factor Surveillance System
- ICC: Intraclass Correlation Coefficients
- SD: Standard deviation
- CI: Confidence intervals
- κ: Cohen's kappa statistic
- SPSS: Statistical Package for Social Sciences

References

Andresen EM, Catlin TK, Wyrwich KW, Jackson Thompson J: Retest reliability of surveillance questions on health related quality of life. J Epidemiol Community Health. 2003, 57: 339-343. 10.1136/jech.57.5.339.

Brownson RC, Jackson-Thompson J, Wilkerson JC, Kiani F: Reliability of information on chronic disease risk factors collected in the Missouri Behavioral Risk Factor Surveillance System. Epidemiology. 1994, 5: 545-549.

Shea S, Stein AD, Lantigua R, Basch CE: Reliability of the Behavioral Risk Factor Survey in a triethnic population. Am J Epidemiol. 1991, 133: 489-500.

Stein AD, Courval JM, Lederman RI, Shea S: Reproducibility of responses to telephone interviews: demographic predictors of discordance in risk factor status. Am J Epidemiol. 1995, 141: 1097-1106.

Stein AD, Lederman RI, Shea S: The Behavioral Risk Factor Surveillance System questionnaire: its reliability in a statewide sample. Am J Public Health. 1993, 83 (12): 1768-1772.

Stein AD, Lederman RI, Shea S: Reproducibility of the women's module of the Behavioral Risk Factor Surveillance System questionnaire. Ann Epidemiol. 1996, 6: 47-52. 10.1016/1047-2797(95)00092-5.

Starr GJ, Dal Grande E, Taylor AW, Wilson DH: Reliability of self-reported behavioural health risk factors in a South Australian telephone survey. Aust N Z J Public Health. 1999, 23 (5): 528-530.

Brown WJ, Burton NW, Marshall AL, Miller YD: Reliability and validity of a modified self-administered version of the Active Australia physical activity survey in a sample of mid-age women. Aust N Z J Public Health. 2008, 32 (6): 535-541. 10.1111/j.1753-6405.2008.00305.x.

Hendrie GA, Cox DN, Coveney J: Validation of the general nutrition knowledge questionnaire in an Australian community sample. Nutr Diet. 2008, 65 (1): 72-77. 10.1111/j.1747-0080.2007.00218.x.

Gwynn JD, Flood VM, D’Este CA, Attia JR, Turner N, Cochrane J, Wiggers JH: The reliability and validity of a short FFQ among Australian Aboriginal and Torres Strait islanders and non-indigenous rural children. Public Health Nutr. 2011, 14 (3): 388-401. 10.1017/S1368980010001928.

Halim M, Vincent H, Saini B, Hameen-Anttila K, Vainio K, Moles R: Validating the children’s medicine use questionnaire (CMUQ) in Australia. Pharm World Sci. 2010, 32 (1): 81-89. 10.1007/s11096-009-9346-4.

Watson JF, Collins CE, Sibbritt DW, Dibley MJ, Garg ML: Reproducibility and comparative validity of a food frequency questionnaire for Australian children and adolescents. Int J Behav Nutr Phys Act. 2009, 6: 62. 10.1186/1479-5868-6-62.

Ambrosini GL, de Klerk NH, O’Sullivan TA, Beilin LJ, Oddy WH: The reliability of a food frequency questionnaire for use among adolescents. Eur J Clin Nutr. 2009, 63 (10): 1251-1259. 10.1038/ejcn.2009.44.

Master S, Giles L, Halbert J, Crotty M: Development and testing of a questionnaire to measure older people’s experience of the Transition Care Program in Australia. Australas J Ageing. 2010, 29 (4): 172-178. 10.1111/j.1741-6612.2010.00443.x.

Cyarto EV, Marshall AL, Dickinson RK, Brown WJ: Measurement properties of the CHAMPS physical activity questionnaire in a sample of older Australians. J Sci Med Sport. 2006, 9 (4): 319-326. 10.1016/j.jsams.2006.03.001.

Gothwal VK, Wright TA, Lamoureux EL, Lundstrom M, Pesudovs K: Catquest questionnaire: re-validation in an Australian cataract population. Clin Exp Ophthalmol. 2009, 37 (8): 785-794. 10.1111/j.1442-9071.2009.02133.x.

Taylor AW, Dal Grande E: Chronic disease and risk factor surveillance using the SA Monitoring and Surveillance System (SAMSS)–history, results and future challenges. Public Health Bull. 2008, 5 (3): 17-21.

Barr M, Raphael B, Taylor M, et al: Pandemic influenza in Australia: using telephone surveys to measure perceptions of threat and willingness to comply. BMC Infect Dis. 2008, 8 (1): 117. 10.1186/1471-2334-8-117.

Australian Bureau of Statistics: Standard Australian Classification of Countries (SACC), 1998 Revision 2.03, Catalogue number 1269.0. 2007, Canberra: Australian Bureau of Statistics

WHO (World Health Organization): Obesity: Preventing and Managing the Global Epidemic. 2000, Geneva: WHO

National Health and Medical Research Council: Australian Alcohol Guidelines: Health Risks and Benefits. 2001, Canberra: NHMRC

Altman DG: Practical Statistics for Medical Research. 1991, Chapman & Hall, London

Cohen J: A coefficient of agreement for nominal scales. Educ Psychol Meas. 1960, 20 (1): 37-46. 10.1177/001316446002000104.

Cohen J: Weighted kappa: nominal scale agreement with provision for scaled disagreement or partial credit. Psychol Bull. 1968, 70 (4): 213-220.

Fleiss JL, Cohen J: The equivalence of weighted kappa and the intraclass correlation coefficient as measures of reliability. Educ Psychol Meas. 1973, 33: 613-619. 10.1177/001316447303300309.

SPSS for Windows: Version 17.0. 2008, Chicago, Illinois, USA

StataCorp: Stata Statistical Software: Release 9.0. 2011, College Station, TX: StataCorp LP

Brown WJ, Trost SG, Bauman A, Mummery K, Owen N: Test-retest reliability of four physical activity measures used in population surveys. J Sci Med Sport. 2004, 7 (2): 205-215. 10.1016/S1440-2440(04)80010-0.

Evenson KR, McGinn AP: Test-retest reliability of adult surveillance measures for physical activity and inactivity. Am J Prev Med. 2005, 28 (5): 470-478. 10.1016/j.amepre.2005.02.005.

Taylor AW, Dal Grande E, Gill TK, Chittleborough CR, Wilson DH, Adams RA, Grant JF, Phillips P, Appleton S, Ruffin RE: How valid are self-reported height and weight? A comparison between CATI self-report and clinic measurements using a large representative cohort study. Aust N Z J Public Health. 2006, 30: 238-246. 10.1111/j.1467-842X.2006.tb00864.x.

Dal Grande E, Taylor AW: Sampling and coverage issues of telephone surveys used for collecting health information in Australia: results from a face-to-face survey from 1999 to 2008. BMC Med Res Methodol. 2010, 10: 77. 10.1186/1471-2288-10-77.

Wilson DH, Starr GJ, Taylor AW, Dal Grande E: Random digit dialling and electronic white pages samples compared: demographic profiles and health estimates. Aust N Z J Public Health. 1999, 23 (6): 627-633. 10.1111/j.1467-842X.1999.tb01549.x.

Groves RM: Nonresponse rates and nonresponse bias in household surveys. Public Opin Q. 2006, 70 (5): 646-675. 10.1093/poq/nfl033.

Pre-publication history

The pre-publication history for this paper can be accessed here: http://www.biomedcentral.com/1471-2288/12/108/prepub

Acknowledgement

SAMSS is owned by SA Health, South Australia, Australia. All collected source data are maintained and managed by Population Research and Outcomes Studies, University of Adelaide. The opinions expressed in this work are those of the authors and may not represent the position or policy of SA Health.

Competing interests

The authors declared that they have no competing interests.

Authors’ contribution

EDG, Participated in the design and co-ordination of the study, performed statistical analyses, drafted the manuscript. SF, Participated in the design and co-ordination of the study and involved in the drafting of the manuscript. AWT, Participated in the design and co-ordination of the study, drafted the manuscript. All authors read and approved the final manuscript.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Dal Grande, E., Fullerton, S. & Taylor, A.W. Reliability of self-reported health risk factors and chronic conditions questions collected using the telephone in South Australia, Australia. BMC Med Res Methodol 12, 108 (2012). https://doi.org/10.1186/1471-2288-12-108

DOI: https://doi.org/10.1186/1471-2288-12-108