Abstract

Background

Many supervised learning algorithms have been applied in deriving gene signatures for patient stratification from gene expression data. However, transferring the multi-gene signatures from one analytical platform to another without loss of classification accuracy is a major challenge. Here, we compared three unsupervised data discretization methods--Equal-width binning, Equal-frequency binning, and k-means clustering--in accurately classifying the four known subtypes of glioblastoma multiforme (GBM) when the classification algorithms were trained on the isoform-level gene expression profiles from exon-array platform and tested on the corresponding profiles from RNA-seq data.

Results

We applied an integrated machine learning framework that involves three sequential steps: feature selection, data discretization, and classification. For models trained and tested on exon-array data, the addition of the data discretization step led to robust and accurate predictive models with fewer variables in the final models. For models trained on exon-array data and tested on RNA-seq data, the addition of the data discretization step dramatically improved the classification accuracies, with Equal-frequency binning showing the largest improvement: more than 90% accuracy for all models with features chosen by Random Forest based feature selection. Overall, the SVM classifier coupled with Equal-frequency binning achieved the best accuracy (> 95%). Without data discretization, however, the best accuracy achieved was only 73.6%.

Conclusions

The classification algorithms, trained and tested on data from the same platform, yielded similar accuracies in predicting the four GBM subgroups. However, when dealing with cross-platform data, from exon-array to RNA-seq, the classifiers yielded stable models with highest classification accuracies on data transformed by Equal frequency binning. The approach presented here is generally applicable to other cancer types for classification and identification of molecular subgroups by integrating data across different gene expression platforms.

Background

Molecular understanding of tumor heterogeneity is key to personalized medicine and effective cancer treatments. Numerous studies have identified molecularly distinct cancer subtypes among individual patients of the same histopathological type by performing a high-throughput gene expression analysis of the patient tumor samples [1]. While microarray-based gene expression estimates are often not precise or quantitative enough for applications in the clinical setting, the expression profile data from microarrays are the basis for the widely used OncotypeDX (Genomic Health, Redwood City, CA) test, which predicts the risk of recurrence in patients with early stage breast cancer [2]. The OncotypeDX assay analyzes the expression of 21 genes by RT-qPCR to provide a recurrence score that is unique to each patient [3, 4]. More recently, the development of next-generation sequencing (NGS) based techniques, RNA-Seq [5], is enabling gene expression analysis to yield a much greater resolution for accurate identification of different isoforms. While several genome-wide expression profiling studies have dramatically improved our collective understanding of cancer biology and led to numerous clinical advancements [6, 7], the use of genomics based molecular diagnostics, such as OncotypeDX [8–12], in clinical practice still remains largely unmet for majority of human cancers [13].

A crucial step in the translation of gene signatures derived from high-throughput platforms is validation in a clinical setting, using robust and quantitative assay platforms (e.g., RT-qPCR based assay) without loss of classification accuracy [14]. A major bottleneck in translating prognostic or molecular subtyping statistical models is the lack of adaptability of the derived models from one analytical platform to another. In other words, assuming that we have gene expression data for a set of tumor samples (with known subtype/class labels) from two different analytical platforms, "can a statistical model derived on data from one platform (e.g., microarray/exon-array) accurately predict the class labels using data from another platform (e.g., RNA-Seq) for the same patient samples?" While several normalization strategies, such as locally weighted scatter plot smoothing (loess) [15, 16] and rank and quantile normalization methods [17–19], have been successfully applied to eliminate systematic errors in data from the same platform, these methods are not appropriate for normalization of data from different profiling platforms (microarray, RNA-Seq and RT-qPCR) because of the differences in data scale and magnitude. In such cases, researchers usually accept the normalized data in the original analyses, and harvest the list of differentially expressed (significantly up or down) genes from each study by rank ordering. Then, the genes are prioritized by comparing the lists of up- and down-regulated (or rank ordered) genes between studies, rather than comparing individual expression values. However, these pre-processing methods are not useful in developing platform-independent statistical models.

Data discretization is a popular data pre-processing step used in supervised learning for creating the training sets. Data discretization transforms continuous values of feature variables to discrete ones [21, 22]. It can significantly impact the performance of classification algorithms in the analysis of high-dimensional data [23]. Different data discretization methods have been developed that can be categorized as: (1) supervised vs. unsupervised methods depending on the availability of class labels; (2) global vs. local methods considering all or only one feature to discretize; and (3) static vs. dynamic methods based on interdependency between attributes. Many discretization techniques have been applied to analyze gene expression data, for example, to devise a new approach to explore gene regulatory networks [24], and as a pre-processing step to improve classification accuracy using microarray data [25]. While the previous studies have used the discretization method as a pre-processing step to design and apply the statistical models on data from one platform (e.g., microarray), our goal in this study is to evaluate three unsupervised data discretization methods--equal width (Equal-W) binning, equal frequency (Equal-F) binning, and k-means clustering--in combination with different feature selection and machine learning methods for deriving the most accurate classification model from one platform (e.g., exon-array), and apply it to data from another platform (e.g., RNA-seq) for molecular subtype prediction of a future cancer patient.

Feature selection algorithms seek a subset of relevant features to use in model construction in order to simplify the models and reduce over-fitting. Wrapper, filter, and embedded methods are the three main categories that have been widely used in biomedical research to deal with a large feature space [26, 27]. Briefly, a wrapper algorithm uses a predictive model to score each candidate feature subset: each new subset is used to train a model, which is then tested on a held-out set. A filter algorithm scores a feature subset with a direct measure instead of an error rate estimate. An embedded algorithm, such as Recursive Feature Elimination (RFE), integrates feature selection into the model construction process. In this study, we adopted two advanced feature selection algorithms based on SVM and RF, and one filter method using the coefficient of variation (CV), a statistical measure used to find highly variable genes.

Using a subset of most important genes (variables/features) screened by the variable selection methods, numerous classification methods have been applied to tackle disease sample classification problems. For example, SVM was applied for characterizing functional roles of genes in yeast genome and cancer tissues [28, 29], RF for classifying cancer patients and predicting drug response for cancer cell lines [30–32], NB (naïve Bayes) for classification on prostate cancer [33, 34], and PAM (Prediction Analysis of Microarrays) for molecular classification of brain tumor and heart disease [35, 36]. These studies, however, focused largely on the data from one platform such as microarray, although cross-platform data analysis would help find robust gene signatures. Recently, we developed PIGExClass [20], platform-independent isoform-level gene expression based classification system, that captures and transfers gene signatures from one analytical platform to another through data discretization. PIGExClass is an integrative system that consists of data discretization, feature selection, and classification. The application of PIGExClass has led to the development of a novel molecular classifier (or gene panel) for diagnosis of GBM subtypes [20]. Motivated by the importance of data discretization step in PIGExClass algorithm, in this paper we evaluated the performance of three data discretization methods together with four popular machine learning algorithms to derive reliable platform-independent multi-class classification models; specifically, predicting the four known subtypes of GBM patient samples from the same platform as well as independent platforms.

Results

Data discretization retained the classification accuracy with fewer variables for data from the same platform

Because gene isoforms (variables) whose expression levels do not vary much across the samples are less useful for discriminating the four GBM subtypes, we selected the 2,000 isoforms with the highest variability across the samples, using the CV (coefficient of variation). To search for an optimal bin number k for the discretization, we explored various bin numbers, including the optimal bin number (k = 11) based on Dougherty's formula [37], and chose k = 10 as it consistently achieved good accuracy. We then applied two advanced feature selection algorithms, SVM-recursive feature elimination (SVM-RFE) [38] and RF based feature selection (RF_based_FS) [39], and prepared independent training and testing datasets by dividing the exon-array samples into two parts: three quarters (257 samples) for training and one quarter (85 samples) for testing. We describe below the classification performance for each variable selection method--CV, SVM-RFE and RF_based_FS.

First, we trained the classifiers with the features ranked by the CV, which represent high generic variability. Overall, the accuracy of the derived classifiers was within the range of 89.4-97.6% for fold-change (FC) data, i.e., data without discretization, and 91.3-97.6% for discretized data (Figure 1 and Table 1), suggesting that the discretization retained the classification accuracy of the respective models. More importantly, with Equal-W binning SVM achieved accuracy similar to that obtained without discretization while using only 500 features. For RF, NB and PAM, the classification accuracies and the number of variables used in the models did not differ significantly between the discretized and non-discretized data. We then trained the classifiers by considering only the top 100 features, a number that can be tested clinically by, for example, RT-PCR. We observed that SVM with k-means clustering yielded the best accuracy of 90.6% (Table 2).

Second, we evaluated the classification performance using the features ranked by SVM-RFE. Accuracy of the classifiers ranged from 83.5 to 97.6% for both FC and discretized data (Figure 2 and Table 1). Again, SVM showed similar accuracy between discretized and FC data, but required far fewer variables in the model that was trained on Equal-W binning data. Similarly, RF showed similar accuracy between discretized and non-discretized data, but the RF model trained on Equal-F binning data used only 400 variables in comparison to the 1,000 variables required for FC data. Interestingly, NB with Equal-F binning data not only improved the classification accuracy but also used far fewer variables (80 in comparison to 1,000) to achieve the higher accuracy. For PAM, the classification accuracy and number of variables in the models remained similar between FC and discretized data. Using the top 100 features, SVM still attained the best accuracy with Equal-F binning (Table 2).

Lastly, we used the features selected by RF_based_FS to assess the classifiers' performance. Accuracy of the classifiers did not fluctuate much, staying within the range of 92.9-98.8% for both non-discretized and discretized data (Figure 3 and Table 1). Overall, all the classifiers tested retained their highest accuracies, but with significantly fewer variables in the final models. While SVM achieved the best accuracy (98.8%) with FC, it retained comparable accuracy (96.4%) with just 70 variables in the model trained on Equal-W binning data, in comparison to 150 variables in the model trained on FC data. Similarly, both RF and NB models trained on Equal-W binning data achieved similar accuracy with fewer variables in comparison to FC data. Interestingly, the PAM model trained on Equal-W binned data slightly improved the accuracy with far fewer variables in comparison to FC data.

In summary, all the classifiers trained and tested on discretized data from the same platform used far fewer variables, yet retained high accuracies in comparison to the corresponding models that were trained on FC data. Overall, while SVM achieved the best accuracy, Equal-W discretization in combination with RF_based_FS helped build classification models with significantly fewer variables in the final models.

Data discretization improved cross-platform predictions

In order to evaluate the accuracy of classification models on data derived by different gene-expression platforms (exon-array and RNA-seq in this study), we trained the classifiers using the data from exon-array and tested on matched RNA-seq datasets for the same TCGA samples. First, we observed that the classification framework resulted in poor classification accuracies when the classification and feature selection algorithms were trained on FC data from exon-array data and tested on corresponding FC data from RNA-seq platform (Table 3). The best accuracy of 73.6% on FC data was achieved by RF with RF_based_FS with just 40 variables in the final model. However, with data discretization we observed significant improvements in the performance of the classification framework. Below, we report the classification performance in more detail based on testing of the models on data from 76 RNA-seq samples.

For CV based feature selection, the classification accuracy of the models trained on FC (without discretization) was rather poor and ranged from 27.6 to 69.7% (Figure 4 and Table 3). However, the accuracy of the models built on Equal-F binning data achieved higher and stable accuracy, ranging from 92.1 to 100%. Notably, the SVM classifier accomplished the best accuracy of 100% (700 features) with Equal-F binning followed by k-means with SVM (92.1% accuracy; 300 features). Equal-W binning improved the accuracy for RF (84.2% accuracy; 1000 features), but not for the other three classifiers. When using the top 100 features in the final model, RF with Equal-F binning correctly predicted 68 samples out of 76, achieving ~90% accuracy (Table 4).

Similarly, for SVM-RFE feature selection, the prediction accuracy of the models on FC data was quite low, within the range of 35.5-61.8%. While Equal-F binning improved the accuracy of all four classifiers, Equal-W binning improved the accuracy for SVM and RF only (Figure 5 and Table 3). Most notably, with Equal-F binning discretization, the SVM classifier achieved the highest accuracy using 1,000 features. For both Equal-W binning and k-means clustering discretization, RF achieved the best performance. Using the top 100 features, RF with Equal-F binning achieved 86.8% accuracy, which is 31.6 percentage points higher than the best accuracy with FC (55.2%).

For RF_based_FS, the classification accuracies were dramatically improved for the models trained on discretized data, with Equal-F binning showing the largest improvement: more than 90% accuracy for all the models (Figure 6 and Table 3). Models built using k-means based discretized data also showed significant improvement with fewer variables in the final models. Considering only the top 100 features, RF with Equal-F binning achieved 90.8% accuracy (69/76 samples), whereas RF with FC correctly predicted only 43 samples out of 76 (Table 4). We present the sensitivity and specificity measures for each classifier trained on the top ranking 100 features from exon-array data and tested on corresponding data from RNA-seq in Table 5.

In summary, we found that Equal-F binning based discretization performed best, followed by k-means clustering based data discretization. For cross-platform class label predictions, Equal-W binning improved accuracy only for RF and not for the other classifiers.

Discussion

The evaluation of the three unsupervised discretization methods using our integrated classification framework revealed that the addition of discretization step into the learning framework led to a large average increase in classification accuracy for all the classification models trained on data from one gene expression platform and tested on corresponding data from a different platform. Specifically, the best method, Equal-F binning, improves performance of all the classifiers and feature selection methods for cross-platform transfer of the derived models.

In machine learning, data discretization is primarily used as a data pre-processing step for various reasons, for example: (1) for classification methods that can handle only discrete variables, (2) for improving human interpretability, (3) for faster computation with a reduced level of data complexity, (4) for handling non-linear relations in the data, e.g., very highly and very lowly expressed genes are more relevant to cancer subtype, and (5) for harmonizing heterogeneous data. In this study, we showed that simple unsupervised discretization indeed improved the classification accuracy by harmonizing data that come in different scales and magnitudes from different gene expression platforms. The discretization step led to numerically comparable measures of gene expression between different platforms, and allowed the classification models (consisting of multiple transcript variables) to be transferred across platforms. However, the discretization methods applied in this study have some limitations. For example, Equal-W binning is sensitive to outliers that may skew the distribution [37]. The k-means discretization performed relatively well with the CV and RF based feature selection schemes. The known drawbacks of this clustering-based discretization, however, are that the initial cluster centroids are in general randomly assigned, that it is not robust to outliers, and that its classification accuracy is sensitive to the number of clusters. Equal-F binning performed best in this study and appears to be a feasible choice.
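One way to see why rank-based binning can harmonize platforms is the following idealized sketch in Python. It assumes, purely for illustration, that two platforms report the same samples on scales related by a strictly increasing transformation (here a hypothetical `math.exp` rescaling); real exon-array and RNA-seq measurements differ in more complicated ways. The function names and toy values are illustrative and are not taken from the study.

```python
import math

def equal_frequency_bins(values, k):
    """Assign bins 1..k so that each bin holds about the same number of values."""
    n = len(values)
    order = sorted(range(n), key=lambda i: values[i])
    bins = [0] * n
    for rank, idx in enumerate(order):
        bins[idx] = rank * k // n + 1
    return bins

def equal_width_bins(values, k):
    """Assign bins 1..k by cutting the range [min, max] into k equal intervals."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1] * len(values)
    width = (hi - lo) / k
    return [min(int((v - lo) / width) + 1, k) for v in values]

# Toy values for one sample on "platform A", and a hypothetical monotone
# rescaling of the same values standing in for "platform B".
platform_a = [0.5, 1.0, 1.5, 2.0, 4.0, 8.0]
platform_b = [math.exp(v) for v in platform_a]

ef_a, ef_b = equal_frequency_bins(platform_a, 3), equal_frequency_bins(platform_b, 3)
ew_a, ew_b = equal_width_bins(platform_a, 3), equal_width_bins(platform_b, 3)
print(ef_a == ef_b)  # rank-based bins depend only on the ordering of the values
print(ew_a == ew_b)  # range-based bins depend on the scale of the values
```

Under this assumption the Equal-F labels are identical on both scales, whereas the Equal-W labels change with the range of the data.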

The choice of classification algorithm is often important when dealing with a given dataset, as each algorithm has its own strengths and weaknesses. We experimented with four state-of-the-art machine learning approaches based on maximum margin, decision tree, probabilistic and clustering classification. While SVM achieved the best accuracy, the performance of RF was more consistent when tested with various numbers of features and data types. We used the linear kernel SVM because it is known to be less prone to overfitting than nonlinear kernels such as the radial basis function (RBF); intuitively, the RBF kernel could perform better when the data are not linearly separable or the feature and sample spaces are well balanced. PAM and NB also performed fairly well with the features chosen by RF_based_FS. NB is known to be robust to irrelevant features, but its performance degrades quickly when correlated features are added.

Conclusions

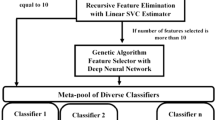

For training and testing the models on data from the same platform, all the classifiers built with features selected by RF_based_FS led to robust and accurate predictive models regardless of the data format. While the data discretization step did not significantly improve the accuracy of the classifiers, it significantly reduced the number of variables in the final models. For cross-platform training and testing of the classifiers, Equal-F binning outperformed FC, Equal-W binning and k-means clustering. With Equal-F binning, RF_based_FS identified important features more efficiently than the CV and SVM-RFE when fewer gene isoforms were considered in classification. Based on these encouraging results, we propose an integrative machine learning framework that involves feature selection, data discretization, and classification model building, with training and testing on independent platforms (Figure 7). We anticipate that the application of this machine-learning framework, which includes data discretization as a key step, will provide quantitative and reproducible stratification of cancer patients with prognostic significance, with the potential to improve precision therapy and the selection of drugs with subtype-specific efficacy. More importantly, the approach presented here is generally applicable to other cancer types for classification and identification of molecular subgroups.

Methods

Dataset

We obtained isoform-level gene expression estimates and molecular subtype information for 342 and 155 GBM samples profiled by Affymetrix exon-arrays and RNA-seq, respectively. The four molecular subgroups are neural (N), proneural (PN), mesenchymal (M) and classical (CL). Gene expression profiles for 76 (18 are N; 22 are PN; 16 are M; and 20 are CL subtype) samples were available from both RNA-seq and exon-array platforms. The common samples were used to assess classification performance for platform transition. We followed the data pre-processing procedure and obtained patients' GBM subtype information (class labels) from our recent study [14]; briefly, we downloaded the unprocessed Affymetrix exon-array dataset of 426 GBM samples and 10 normal brain samples from TCGA data portal (https://tcga-data.nci.nih.gov/tcga/); obtained the isoform expression of 114,930 transcript variants (equivalent to 35,612 genes) using the Multi-Mapping Bayesian Gene eXpression program [33]. The estimated expression values were then normalized across the samples, using the locally weighted scatterplot smoothing algorithm [34], a non-parametric regression method. To select 2,000 most variable transcripts, we applied Pearson's correlation coefficient with cutoff of > 0.8 followed by the CV. See [14] for more details.

Data type and transformation

We processed the gene expression data to estimate the FC values, and then applied three unsupervised discretization techniques--Equal-W binning, Equal-F binning, and k-means clustering--to the continuous FC data.

FC is a measure of a quantitative change of gene expression, defined by FC=log2 (T/N), where T is estimated expression values of a tumor sample and N is median expression of normal brain samples.
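The FC definition can be sketched in a few lines of Python. The function name and the toy numbers are illustrative assumptions; the check for positive values is also an addition, since a log ratio is undefined otherwise.

```python
import math
from statistics import median

def log2_fold_change(tumor_value, normal_values):
    """FC = log2(T / N), where N is the median expression of the normal samples."""
    n = median(normal_values)
    if tumor_value <= 0 or n <= 0:
        raise ValueError("expression values must be positive to take a log ratio")
    return math.log2(tumor_value / n)

# Toy example: tumor expression 8.0 against normal samples with median 2.0.
fc = log2_fold_change(8.0, [1.0, 2.0, 4.0])
print(fc)
```

A positive FC indicates higher expression in the tumor sample than in the normal reference, and a negative FC indicates lower expression.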

To determine the number of bins for discretization, Dougherty et al. [28] suggested a heuristic that sets the maximum number of bins to k = max (1, 2 log (l)), where l is the number of distinct values of the attribute. Boulle [29] proposed an algorithm to find an optimal bin number for Equal-F and Equal-W, and demonstrated that the optimal bin number performs similarly to the bin number k = 10, which is considered a default for most cases. While the former approach resulted in a maximum bin number of k = 11, we also evaluated a range of bin numbers, k = 2i for i={1, 2, ..., 10}.
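The heuristic can be evaluated directly. The sketch below assumes a natural logarithm and truncation to an integer, and uses l = 342 (the number of exon-array samples) as a stand-in for the number of distinct values. None of these choices is stated in the text; they are assumptions that happen to reproduce k = 11.

```python
import math

def max_bins(num_distinct_values):
    """k = max(1, 2 * log(l)); natural log and truncation are assumptions here."""
    return max(1, int(2 * math.log(num_distinct_values)))

# Assumption: l is on the order of the number of exon-array samples (342).
k = max_bins(342)
print(k)
```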

The Equal-W binning algorithm finds the maximum and minimum values, and then divides the range into a user-defined number of equal-width intervals, with the width defined as Equal-W=(max(GE(i))-min(GE(i)))/number of bins, where GE(i) is the isoform-level transcript expression of sample i. Then, continuous values are assigned to the corresponding bin numbers.
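A minimal Python sketch of equal-width binning follows. The function name, the 1-based bin labels, and the handling of the maximum value (assigned to the last bin) are illustrative choices rather than details taken from the study.

```python
def equal_width_bins(values, k):
    """Map each value to a bin in 1..k using k equal-width intervals over [min, max]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values identical: the range has zero width, so use a single bin.
        return [1] * len(values)
    width = (hi - lo) / k
    # The maximum would fall just past the last interval, so clamp it to bin k.
    return [min(int((v - lo) / width) + 1, k) for v in values]

bins = equal_width_bins([0.0, 1.0, 2.5, 9.0, 10.0], k=5)
print(bins)
```

Because the interval width depends on the extreme values, a single outlier can push most observations into one or two bins.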

The Equal-F binning algorithm sorts all continuous values in ascending order, and then divides the sorted values into a user-defined number of intervals so that every interval contains the same number of values, defined as Equal-F=sort(GE(i))/number of bins.
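Equal-frequency binning can be sketched as a rank-based assignment. The function name and the tie handling (ties keep their original order, so equal values may land in adjacent bins) are assumptions made for this illustration.

```python
def equal_frequency_bins(values, k):
    """Map each value to a bin in 1..k so that bins hold (nearly) equal counts."""
    n = len(values)
    # Indices of the values in ascending order; sorted() is stable for ties.
    order = sorted(range(n), key=lambda i: values[i])
    bins = [0] * n
    for rank, idx in enumerate(order):
        bins[idx] = rank * k // n + 1
    return bins

bins = equal_frequency_bins([5.0, 1.0, 3.0, 2.0, 6.0, 4.0], k=3)
print(bins)
```

The bin labels depend only on the ordering of the values, not on their magnitudes.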

K-means clustering algorithm calculates distance-based similarity to cluster the continuous variables. With the user-defined number of clusters, the algorithm iteratively finds centroids until no data point is reassigned to the updated centroids.
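A one-dimensional k-means discretization can be sketched as follows. To keep the example reproducible, the initial centroids are taken from evenly spaced order statistics rather than chosen at random; this initialization, the function name, and the relabeling of clusters by centroid value are assumptions of the sketch.

```python
def kmeans_1d_bins(values, k, max_iter=100):
    """Cluster scalar values into k groups and return bin labels 1..k."""
    srt = sorted(values)
    n = len(srt)
    # Deterministic initialization from evenly spaced order statistics.
    centroids = [srt[(2 * j + 1) * n // (2 * k)] for j in range(k)]
    assign = None
    for _ in range(max_iter):
        # Assignment step: each value goes to its nearest centroid.
        new_assign = [min(range(k), key=lambda j: abs(v - centroids[j])) for v in values]
        if new_assign == assign:
            break  # no data point was reassigned
        assign = new_assign
        # Update step: each centroid becomes the mean of its members.
        for j in range(k):
            members = [v for v, a in zip(values, assign) if a == j]
            if members:
                centroids[j] = sum(members) / len(members)
    # Relabel clusters so that bin numbers increase with centroid value.
    by_value = sorted(range(k), key=lambda j: centroids[j])
    label = {j: r + 1 for r, j in enumerate(by_value)}
    return [label[a] for a in assign]

bins = kmeans_1d_bins([1.0, 1.2, 0.8, 5.0, 5.2, 4.8, 9.0, 9.1, 8.9], k=3)
print(bins)
```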

Feature selection methods

To capture most significant features, we first applied Pearson's correlation to the normalized expression data with a cutoff value of 0.8. Second, we used the CV to assess the degree of variability for each transcript.

CV is defined as CV=σ /µ, where σ and µ are the standard deviation and mean, respectively. Based on the CV scores, we selected the top 2000 transcripts out of ~115,000. To refine the selected features further, we employed two advanced feature selection algorithms based on SVM and RF that iteratively evaluate each feature's contribution to the classification performance. We adopted the programs available in R packages 'mSVM-RFE' and 'varSelRF.'
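The CV-based filter can be sketched as follows. The transcript names are hypothetical, and the use of the population standard deviation is an assumption; the sketch also assumes a nonzero mean for every transcript.

```python
from statistics import mean, pstdev

def coefficient_of_variation(values):
    """CV = sigma / mu (population standard deviation; mean must be nonzero)."""
    return pstdev(values) / mean(values)

def top_variable_features(expr, n_top):
    """Return the n_top feature names with the highest CV across samples."""
    scores = {name: coefficient_of_variation(vals) for name, vals in expr.items()}
    return sorted(scores, key=scores.get, reverse=True)[:n_top]

# Hypothetical expression values for three transcripts across four samples.
expr = {
    "tx_a": [10.0, 10.0, 10.0, 10.0],  # no variation
    "tx_b": [5.0, 15.0, 5.0, 15.0],    # high variation
    "tx_c": [9.0, 11.0, 9.0, 11.0],    # low variation
}
selected = top_variable_features(expr, n_top=2)
print(selected)
```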

SVM-RFE is a feature search algorithm that measures each feature's importance by iteratively eliminating one feature at a time [13]. The ranking criterion is derived from the coefficients of the weight vector w of the binary classification problem (in the standard formulation, the squared coefficient $w_i^2$ for feature i); features with the largest weights are the most informative. Thus, the procedure of SVM-RFE consists of training the SVM classifier, computing the ranking criterion for all features, and eliminating the feature with the lowest ranking criterion. This process is repeated until a small subset of features remains.
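The elimination loop can be sketched independently of any particular SVM implementation. In the sketch below, `fit_weights` is a hypothetical callable standing in for "train a linear SVM on the remaining features and return one weight per feature", and squared weights are used as the ranking criterion, as in the standard SVM-RFE formulation. It is not the 'mSVM-RFE' implementation used in the study.

```python
def recursive_feature_elimination(features, fit_weights, n_keep):
    """Drop the lowest-ranked feature one at a time until n_keep remain."""
    remaining = list(features)
    eliminated = []
    while len(remaining) > n_keep:
        weights = fit_weights(remaining)  # retrain on the surviving features
        worst = min(remaining, key=lambda f: weights[f] ** 2)
        remaining.remove(worst)
        eliminated.append(worst)
    return remaining, eliminated

# Stub in place of SVM training: fixed, made-up weights for four features.
toy_weights = {"g1": 0.9, "g2": -0.1, "g3": 0.4, "g4": -0.7}
kept, dropped = recursive_feature_elimination(
    ["g1", "g2", "g3", "g4"],
    fit_weights=lambda feats: {f: toy_weights[f] for f in feats},
    n_keep=2,
)
print(kept, dropped)
```

In a real run the weights change after each retraining, which is why the ranking is recomputed inside the loop.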

The RF_based_FS method uses both a backward elimination strategy and the importance spectrum to search for a set of important variables [31]. Concisely, multiple random forests are iteratively constructed to search for the set of variables that yields the smallest out-of-bag (OOB) error rate. The main advantage of this method is that it returns a very small set of genes while retaining high accuracy.

Classification methods

We considered the four classification methods--SVM, RF, NB, and PAM--to compare the performance on platform transition using the 76 GBM samples.

SVM is primarily a two-class classifier that constructs a hyperplane to separate the data with maximum margin [35, 36]. For multiclass classification problems, two techniques are widely used: one is to build one-versus-all classifiers and choose the class that yields the maximum margin for test examples; the other is to build a set of one-versus-one classifiers. For C > 2 classes, C (C−1)/2 binary classifiers are trained and the appropriate class is determined by majority voting. In this study, we used the latter approach with a linear kernel, as the number of features is larger than the number of samples.
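The one-versus-one scheme can be illustrated for the four GBM subtypes. The pairwise outcomes below are made up for the example, and the tie-breaking rule (the first class to reach the top count wins) is an assumption of the sketch.

```python
from collections import Counter
from itertools import combinations

classes = ["N", "PN", "M", "CL"]
# One binary classifier per unordered pair of classes: C(C-1)/2 of them.
pairs = list(combinations(classes, 2))

def ovo_predict(pairwise_winners):
    """Majority vote over the winners reported by the pairwise classifiers."""
    votes = Counter(pairwise_winners.values())
    return votes.most_common(1)[0][0]

# Hypothetical outcomes of the six pairwise classifiers for one test sample.
winners = {
    ("N", "PN"): "PN", ("N", "M"): "N", ("N", "CL"): "CL",
    ("PN", "M"): "PN", ("PN", "CL"): "PN", ("M", "CL"): "M",
}
predicted = ovo_predict(winners)
print(len(pairs), predicted)
```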

RF is an ensemble learning method that builds decision trees with binary splits [37]. Each tree is grown randomly in two steps. First, a subset of predictors is chosen at random from all the predictors. Second, a bootstrap sample of the data is randomly drawn with replacement from the original sample. For each RF tree, the observations not included in its bootstrap sample (out-of-bag observations) are used to estimate the classification accuracy.

NB is a simple probabilistic classification method grounded in Bayes' theorem for calculating conditional probabilities, with an independence assumption [38]. For a given instance (example), the NB classifier calculates the probability that the instance belongs to a certain class. The basic underlying assumption is that the features $(x_1, \ldots, x_n)$ of an instance $X$ are conditionally independent given the class $C$. The likelihood of the instance under class $C$ is $P(X \mid C) = P(x_1, \ldots, x_n \mid C)$, and conditional independence allows it to be written as a product of simpler probabilities, $P(X \mid C) = \prod_{i=1}^{n} P(x_i \mid C)$.
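For discretized features, the product of per-feature probabilities can be estimated from bin counts. The sketch below is a generic categorical naive Bayes, not the implementation used in the study; the class prior term and the Laplace smoothing constant `alpha` are standard additions assumed here, and the toy data are made up.

```python
import math
from collections import Counter

def train_nb(X, y, n_bins, alpha=1.0):
    """Count, per class and feature, how often each bin value occurs."""
    class_counts = Counter(y)
    counts = {c: [Counter() for _ in X[0]] for c in class_counts}
    for row, c in zip(X, y):
        for j, b in enumerate(row):
            counts[c][j][b] += 1
    return class_counts, counts, n_bins, alpha

def predict_nb(model, row):
    """Pick the class maximizing log P(C) + sum_i log P(x_i | C)."""
    class_counts, counts, n_bins, alpha = model
    total = sum(class_counts.values())
    best, best_score = None, -math.inf
    for c, nc in class_counts.items():
        score = math.log(nc / total)
        for j, b in enumerate(row):
            # Smoothed estimate of P(x_j = b | C = c).
            score += math.log((counts[c][j][b] + alpha) / (nc + alpha * n_bins))
        if score > best_score:
            best, best_score = c, score
    return best

# Toy training set: two discretized features (bins 1..3), two subtypes.
X = [[1, 1], [1, 2], [3, 3], [3, 2]]
y = ["PN", "PN", "M", "M"]
model = train_nb(X, y, n_bins=3)
print(predict_nb(model, [1, 1]), predict_nb(model, [3, 3]))
```

Working with sums of logarithms avoids numerical underflow when the product runs over many features.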

PAM is a sample classification method that applies the nearest shrunken centroid approach, here to transcript-variant expression data [26]. Briefly, the method computes a standardized centroid for each class. Then, it shrinks each class centroid toward the overall centroid for all classes by a user-defined threshold, which effectively removes genes whose class centroids are shrunk all the way to the overall centroid. A new sample is assigned to the class with the nearest shrunken centroid, based on the sample's gene expression profile.
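The shrinkage step can be illustrated with soft thresholding. This is a deliberately simplified sketch: it omits the per-gene standardization by within-class standard deviation that the full PAM method applies, and the function names, centroid values, and threshold are made up for the example.

```python
def soft_threshold(d, delta):
    """Move d toward zero by delta, stopping at zero."""
    magnitude = max(abs(d) - delta, 0.0)
    return magnitude if d >= 0 else -magnitude

def shrunken_centroids(class_centroids, overall_centroid, delta):
    """Shrink each class centroid toward the overall centroid, gene by gene."""
    return {
        c: [o + soft_threshold(x - o, delta) for x, o in zip(cen, overall_centroid)]
        for c, cen in class_centroids.items()
    }

def nearest_centroid(sample, centroids):
    """Assign the sample to the class whose centroid is closest (squared distance)."""
    def sq_dist(c):
        return sum((s - x) ** 2 for s, x in zip(sample, centroids[c]))
    return min(centroids, key=sq_dist)

# Two genes: the first separates the classes, the second barely differs.
centroids = {"PN": [2.0, 0.1], "M": [-2.0, -0.1]}
shrunk = shrunken_centroids(centroids, overall_centroid=[0.0, 0.0], delta=0.5)
print(shrunk)
print(nearest_centroid([1.0, 5.0], shrunk))
```

After shrinkage the second gene has the same value in both class centroids, so it no longer contributes to the classification.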

Accuracy

We estimated the overall classification accuracy as the number of correct predictions divided by the total number of test samples: ACC=(number of correct predictions)/(total number of test samples). In addition, sensitivity (Sn) and specificity (Sp) for each subgroup (one GBM subgroup vs. the rest of the GBM subgroups together) are calculated as $Sn_i = tp_i / (tp_i + fn_i)$ and $Sp_i = tn_i / (tn_i + fp_i)$, where $tp_i$, $tn_i$, $fp_i$, and $fn_i$ are the numbers of true positives, true negatives, false positives, and false negatives for class $C_i$, respectively.
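These measures can be computed from paired lists of true and predicted labels. The sketch below uses the standard one-vs-rest definitions of sensitivity and specificity; the function name and the toy labels are illustrative.

```python
def evaluate(y_true, y_pred, classes):
    """Return overall accuracy and per-class (sensitivity, specificity)."""
    pairs = list(zip(y_true, y_pred))
    acc = sum(t == p for t, p in pairs) / len(pairs)
    per_class = {}
    for c in classes:
        # One-vs-rest counts for class c.
        tp = sum(t == c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        tn = sum(t != c and p != c for t, p in pairs)
        sn = tp / (tp + fn) if tp + fn else float("nan")
        sp = tn / (tn + fp) if tn + fp else float("nan")
        per_class[c] = (sn, sp)
    return acc, per_class

# Toy labels for six samples; one N sample is misclassified as PN.
y_true = ["N", "N", "PN", "PN", "M", "CL"]
y_pred = ["N", "PN", "PN", "PN", "M", "CL"]
acc, per_class = evaluate(y_true, y_pred, ["N", "PN", "M", "CL"])
print(acc, per_class)
```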

References

Fodor SP, Read JL, Pirrung MC, Stryer L, Lu AT, Solas D: Light-directed, spatially addressable parallel chemical synthesis. Science. 1991, 251 (4995): 767-773.

Fayyad U, Irani K: Multi-interval discretization of continuous-valued attributes for classification learning. In Proceedings of the 13th International Joint Conference on Artificial Intelligence. 1993, 1022-1029.

Morin R, Bainbridge M, Fejes A, Hirst M, Krzywinski M, Pugh T, McDonald H, Varhol R, Jones S, Marra M: Profiling the HeLa S3 transcriptome using randomly primed cDNA and massively parallel short-read sequencing. BioTechniques. 2008, 45 (1): 81-94.

Maher CA, Kumar-Sinha C, Cao X, Kalyana-Sundaram S, Han B, Jing X, Sam L, Barrette T, Palanisamy N, Chinnaiyan AM: Transcriptome sequencing to detect gene fusions in cancer. Nature. 2009, 458 (7234): 97-101.

Chu Y, Corey DR: RNA sequencing: platform selection, experimental design, and data interpretation. Nucleic acid therapeutics. 2012, 22 (4): 271-274.

Yi Y, Li C, Miller C, George AL: Strategy for encoding and comparison of gene expression signatures. Genome biology. 2007, 8 (7): R133.

Cancer Genome Atlas Research N: Comprehensive genomic characterization defines human glioblastoma genes and core pathways. Nature. 2008, 455 (7216): 1061-1068.

Ohgaki H, Kleihues P: Population-based studies on incidence, survival rates, and genetic alterations in astrocytic and oligodendroglial gliomas. Journal of neuropathology and experimental neurology. 2005, 64 (6): 479-489.

Sotiriou C, Piccart MJ: Taking gene-expression profiling to the clinic: when will molecular signatures become relevant to patient care?. Nature reviews Cancer. 2007, 7 (7): 545-553.

Pusztai L: Chips to bedside: incorporation of microarray data into clinical practice. Clinical cancer research : an official journal of the American Association for Cancer Research. 2006, 12 (24): 7209-7214.

Subramanian J, Simon R: What should physicians look for in evaluating prognostic gene-expression signatures?. Nature reviews Clinical oncology. 2010, 7 (6): 327-334.

Statnikov A, Aliferis CF, Tsamardinos I, Hardin D, Levy S: A comprehensive evaluation of multicategory classification methods for microarray gene expression cancer diagnosis. Bioinformatics. 2005, 21 (5): 631-643.

Guyon I, Elisseeff A: An introduction to variable and feature selection. Journal of Machine Learning Research. 2003, 3: 1157-1182.

Pal S, Bi Y, Macyszyn L, Showe LC, O'Rourke DM, Davuluri RV: Isoform-level gene signature improves prognostic stratification and accurately classifies glioblastoma subtypes. Nucleic acids research. 2014.

Dougherty J, Kohavi R, Sahami M: Supervised and unsupervised discretization of continuous features. Proceedings of the 12th International Conference. 1995, 194-202.

Li Y, Liu L, Bai X, Cai H, Ji W, Guo D, Zhu Y: Comparative study of discretization methods of microarray data for inferring transcriptional regulatory networks. BMC bioinformatics. 2010, 11: 520-

Hu H, Li J, Plank A, Wang H, Daggard G: Comparative Study of Classification Methods for Microarray Data Analysis. In Proceedings of the Fifth Australasian Conference on Data Mining and Analystics. 2006, 33-37.

Kohavi R, John GH: Wrappers for feature subset selection. Artificial Intelligence. 1997, 97 (1-2): 273-324.

Brown MP, Grundy WN, Lin D, Cristianini N, Sugnet CW, Furey TS, Ares M, Haussler D: Knowledge-based analysis of microarray gene expression data by using support vector machines. Proceedings of the National Academy of Sciences of the United States of America. 2000, 97 (1): 262-267.

Furey TS, Cristianini N, Duffy N, Bednarski DW, Schummer M, Haussler D: Support vector machine classification and validation of cancer tissue samples using microarray expression data. Bioinformatics. 2000, 16 (10): 906-914.

Diaz-Uriarte R, Alvarez de Andres S: Gene selection and classification of microarray data using random forest. BMC bioinformatics. 2006, 7: 3-

Riddick G, Song H, Ahn S, Walling J, Borges-Rivera D, Zhang W, Fine HA: Predicting in vitro drug sensitivity using Random Forests. Bioinformatics. 2011, 27 (2): 220-224.

Zhang H, Yu CY, Singer B: Cell and tumor classification using gene expression data: construction of forests. Proceedings of the National Academy of Sciences of the United States of America. 2003, 100 (7): 4168-4172.

Demichelis F, Magni P, Piergiorgi P, Rubin MA, Bellazzi R: A hierarchical Naive Bayes Model for handling sample heterogeneity in classification problems: an application to tissue microarrays. BMC bioinformatics. 2006, 7: 514-

Helman P, Veroff R, Atlas SR, Willman C: A Bayesian network classification methodology for gene expression data. Journal of computational biology : a journal of computational molecular cell biology. 2004, 11 (4): 581-615.

Tibshirani R, Hastie T, Narasimhan B, Chu G: Diagnosis of multiple cancer types by shrunken centroids of gene expression. Proceedings of the National Academy of Sciences of the United States of America. 2002, 99 (10): 6567-6572.

Northcott PA, Korshunov A, Witt H, Hielscher T, Eberhart CG, Mack S, Bouffet E, Clifford SC, Hawkins CE, French P, et al: Medulloblastoma comprises four distinct molecular variants. Journal of clinical oncology : official journal of the American Society of Clinical Oncology. 2011, 29 (11): 1408-1414.

Dougherty J, Kohavi R, Sahami M: Supervised and unsupervised discretization of continuous features. Machine Learning: Proceedings of the Twelfth International Conference. 1995, 194-202.

Boulle M: Optimal bin number for equal frequency discretization. Intell Data Anal. 2005, 175-188. 9

Guyon I, Weston J, Barnhill S, Vapnik V: Gene selection for cancer classification using support vector machines. Machine learning. 2002, 46: 389-422.

Diaz-Uriarte R: GeneSrF and varSelRF: a web-based tool and R package for gene selection and classification using random forest. BMC bioinformatics. 2007, 8: 328-

de Jonge HJ, Fehrmann RS, de Bont ES, Hofstra RM, Gerbens F, Kamps WA, de Vries EG, van der Zee AG, te Meerman GJ, ter Elst A: Evidence based selection of housekeeping genes. PloS one. 2007, 2 (9): e898-

Turro E, Lewin A, Rose A, Dallman MJ, Richardson S: MMBGX: a method for estimating expression at the isoform level and detecting differential splicing using whole-transcript Affymetrix arrays. Nucleic acids research. 2010, 38 (1): e4-

Workman C, Jensen LJ, Jarmer H, Berka R, Gautier L, Nielser HB, Saxild HH, Nielsen C, Brunak S, Knudsen S: A new non-linear normalization method for reducing variability in DNA microarray experiments. Genome biology. 2002, 3 (9): research0048-

Schölkopf B, Burges CJC, Smola AJ: Advances in Kernel Methods. The MIT Press. 1998

Vapnik V: The Nature of Statistical Learning Theory. Springer. 1999

Breiman L: Random Forests. Machine Learning. 2001, 45: 5-32.

Mitchell TM: Machine Learning. McGraw-Hill. 1997

Acknowledgements

The work was supported in part by the National Library of Medicine of the National Institutes of Health (NIH) under Award Number R01LM011297.

Declarations

The publication costs for this article were funded by the NIH-NLM grant R01LM011297.

This article has been published as part of BMC Genomics Volume 16 Supplement 11, 2015: Selected articles from the Fourth IEEE International Conference on Computational Advances in Bio and medical Sciences (ICCABS 2014): Genomics. The full contents of the supplement are available online at http://www.biomedcentral.com/bmcgenomics/supplements/16/S11.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

SJ participated in the design of the project, performed the bioinformatics and statistical analysis, and drafted the manuscript. YB and RVD conceived the idea and participated in the design of the project. SJ, YB, and RVD revised the manuscript. All authors read and approved the final manuscript.

About this article

Cite this article

Jung, S., Bi, Y. & Davuluri, R.V. Evaluation of data discretization methods to derive platform independent isoform expression signatures for multi-class tumor subtyping. BMC Genomics 16 (Suppl 11), S3 (2015). https://doi.org/10.1186/1471-2164-16-S11-S3
