Abstract

Background

Monoculture, multi-cropping and wider use of highly resistant cultivars have been proposed as mechanisms to explain the elevated rate of evolution of plant pathogens in agricultural ecosystems. We used a mark-release-recapture experiment with the wheat pathogen Phaeosphaeria nodorum to evaluate the impact of two of these mechanisms on the evolution of a pathogen population. Nine P. nodorum isolates marked with ten microsatellite markers and one minisatellite were released onto five replicated host populations to initiate epidemics of Stagonospora nodorum leaf blotch. The experiment was carried out over two consecutive host growing seasons and two pathogen collections were made during each season.

Results

A total of 637 pathogen isolates matching the marked inoculants were recovered from inoculated plots over two years. Genetic diversity in the host populations affected the evolution of the corresponding P. nodorum populations. In the cultivar mixture the relative frequencies of inoculants did not change over the course of the experiment and the pathogen exhibited a low variation in selection coefficients.

Conclusions

Our results support the hypothesis that increasing genetic heterogeneity in host populations may retard the rate of evolution in associated pathogen populations. Our experiment also provides indirect evidence of fitness costs associated with host specialization in P. nodorum as indicated by differential selection during the pathogenic and saprophytic phases.


Background

The evolution of pathogens is widely believed to be one of the major challenges facing agriculture and medicine [1, 2]. Experimental studies focused on the evolution of pathogens, including the emergence of virulence and pathogen adaptation to changing agricultural and medical practices, can provide critical information for more effective management of infectious diseases. In medicine, infectious diseases are mitigated mainly through the application of antimicrobial substances such as antibiotics. While pesticides such as fungicides are widely utilized in agricultural ecosystems, host resistance imposes far fewer environmental costs and is a more cost-efficient approach to controlling plant diseases. In both agriculture and medicine, the efficacy of host resistance and antimicrobials usually decays over time as a result of the continuous evolution and adaptation of pathogens.

Plant pathogens are thought to evolve faster in agricultural ecosystems than in natural ecosystems [3–6]. Wild relatives are the primary sources of host resistance bred into cultivated crops. The disease resistance genes carried by these wild relatives of modern crop plants have coexisted with their pathogens for many thousands or millions of years in natural ecosystems. However, when these resistance genes are introgressed into modern crops and deployed in agricultural ecosystems, their value in controlling infectious diseases usually does not last for more than 10 years [7]. Temporal analysis of population dynamics is also consistent with the hypothesis of rapid pathogen evolution in agricultural ecosystems. For example, US-1 was the predominant genotype in Phytophthora infestans populations around the world until the 1990s [8], but this genotype has rarely been recovered since 2000. In the UK, a single P. infestans genotype called Blue-13-A2 was first detected in southern England in 2003 at a very low frequency. By 2007, this genotype was detected in all populations sampled across the UK and accounted for more than half of 1000 isolates assayed (J. Zhan & D. Cooke, unpublished data). These P. infestans examples illustrate how pathogen populations can experience rapid turnover as new genotypes with greater fitness emerge, spread, out-compete and replace earlier genotypes.

The evolution of pathogens can be influenced by the type of resistance, the amount of diversity found in host populations and the type of cropping system [9–12]. Modern agriculture is dominated by species monocultures grown at a high density. In these agricultural ecosystems, it is common for a single host cultivar or genotype carrying a major resistance gene to be grown over a large area. The limited genetic diversity in the host populations coupled with intensive use of major resistance genes can lead to rapid shifts in associated pathogen populations. A mutant with higher fitness that emerges in a pathogen population as a result of a single mutation event can quickly increase in frequency through strong directional selection and spread across entire fields or regions through natural or human-mediated migration.

Multi-cropping, where the same annual crop is grown in the same field more than once during the same year, is another common practice in modern agriculture, especially in countries experiencing a shortage of arable land. This practice may further accelerate the evolution of plant pathogens because locally adapted pathogen genotypes with a high parasitic fitness can steadily increase in frequency due to the year-round availability of the living host (i.e. a "green bridge" allows the parasitic phase of the pathogen life cycle to occur continuously).

It is hypothesized that the evolution of plant pathogens in agricultural ecosystems can be retarded by increasing genetic diversity of the host populations, by using partial resistance encoded by several genes and by avoiding multi-cropping systems. Increasing genetic diversity in host populations by mixing plants carrying different major resistance genes (e.g. cultivar mixtures or multilines) is thought to be an ecologically and evolutionarily sound approach to control plant diseases, particularly for airborne pathogens of cereals [13]. Increasing host diversity by using cultivar mixtures will impose disruptive selection on pathogen populations, i.e. pathotypes that are favored on one host will have lower fitness on the other hosts in the mixture [14–16], impeding their ability to evolve towards higher virulence, here defined as the damage a pathogen causes to its host [17]. On the other hand, because many fungal pathogens have large effective population sizes [18] and exhibit a mixture of sexual and asexual reproduction [19, 20], they can rapidly obtain new pathogenicity factors through mutation or new combinations of pathogenicity factors through recombination and then maintain the novel combinations of pathogenicity factors through asexual reproduction. Thus extensive use of cultivar mixtures could lead to the development of complex races [21, 22] that would be able to infect a large number of host genotypes carrying different major resistance genes.

Though less efficient, partial resistance is thought to offer a more durable method to control plant diseases than major-gene resistance because it works against all pathogen strains and selects equally against all pathotypes [23, 24]. Partial resistance mediated by multiple genes is generally inherited as a quantitative trait [16, 25, 26], where each gene makes a minor but additive contribution to the overall resistance [27]. But selection can increase the frequencies of genes encoding higher virulence in pathogen populations infecting partially resistant hosts and reduce the effectiveness of quantitative resistance [9, 19, 28–31] though possibly at a slower pace compared to major resistance genes [32].

In contrast to multi-cropping, in single cropping systems an annual crop is grown for only 6-9 months of the year or different crops are rotated annually, forcing pathogens to undergo a saprophytic phase in their life cycle in which different strains not only compete with each other but also with other microbial species for nutrients and habitats. Pathogen genotypes that have a high parasitic fitness on living hosts may have a low saprophytic fitness on the dead host biomass. This trade-off could delay the emergence of highly parasitic pathogen strains in agricultural ecosystems characterized by single cropping and regular crop rotations.

Much of our knowledge of pathogen evolution in agricultural ecosystems is drawn from theory or through historical inference from population surveys [33–35]. Experimental tests of pathogen evolution are limited and usually are conducted in controlled environments under laboratory or greenhouse conditions. Here we describe a test of pathogen evolution in a replicated experiment using sensitive molecular markers that could differentiate among pathogen isolates released into an unregulated field setting. This experimental evolution approach based on a mark-release-recapture strategy has now been successfully applied to understand the evolution of cereal pathogens including Mycosphaerella graminicola [20, 32, 36, 37], Rhynchosporium secalis [37] and Phaeosphaeria nodorum [38]. In this study, we used this approach to investigate the evolution of the wheat pathogen Phaeosphaeria nodorum. The experiment was conducted over two years using five replicated host populations differing in levels of resistance and diversity. In the first year, the pathogen populations were introduced into each host population by artificial inoculation of the hosts with nine P. nodorum strains tagged with molecular genetic markers and mixed in equal proportions. In the second year, the pathogen populations were established using the infected straw and plant debris saved from the first year's experiment. During the experiment, two fungal collections were made in each of the two years. The recovered pathogen populations were assayed for their molecular markers so that frequencies of the inoculated isolates could be compared across hosts and sampling times (see Figure 1). With this experimental design, we were able to determine the effects of host diversity and resistance on the evolution of corresponding pathogen populations. The experimental design also allowed us to detect selection operating during both parasitic and saprophytic phases of the pathogen life cycle. 
The specific objectives of this experiment were to: i) infer the rate of pathogen evolution in an agricultural system; ii) determine the effect of host resistance on competition among genotypes in P. nodorum populations; iii) determine the effect of cultivar mixtures on clonal competition in P. nodorum populations; and iv) compare selection during the parasitic and saprophytic phases of the P. nodorum life cycle. Our previous data analyses indicated that isolates recovered from the experiment included the asexual progeny of the inoculated genotypes, airborne immigrants from outside of the experimental plots and recombinants arising from crosses between the inoculants and/or immigrants [39]. The results presented here consider only the effects of host selection and clonal competition among the asexual progeny of the inoculated genotypes. Evolutionary changes in the pathogen populations attributed to recombination and immigration were considered in a separate publication [39].

The heterothallic loculoascomycete Phaeosphaeria nodorum (Berk.) Castellani and Germano (syn. Septoria nodorum Berk.), the teleomorph form of Stagonospora nodorum (E. Müller) Hedjaroude (syn. Leptosphaeria nodorum E. Müller), causes Stagonospora nodorum leaf and glume blotch on wheat (Triticum aestivum L.). The pathogen can undergo both sexual and asexual reproduction (see Figure 2 for the life cycle) and has the ability to infect all above-ground plant parts during the parasitic phase [40–43]. The pathogen overwinters during its saprophytic phase on infected stubble [44] and can survive for several months [45] on wheat straw until the parasitic phase of the disease cycle is re-initiated. The primary inoculum includes infected seeds as well as pycnidiospores and ascospores. Asexual pycnidiospores are dispersed over short distances by rain-splash while sexual ascospores are wind-dispersed, therefore having the potential for long distance movement [46–49]. Ascospore-producing perithecia of P. nodorum can be formed during the host-free period in infested stubble on the soil surface [50, 51] and during the growing season on infected plants [39].

Results

Recovery of inoculants

A total of 637 isolates matching the multilocus haplotypes of the inoculants were recovered from the inoculated plots. In addition to the inoculants, a large number of isolates (550) sampled over the course of this experiment were novel isolates with multilocus haplotypes that did not match the nine inoculants. The frequency of these novel haplotypes increased steadily over the course of the experiment. The majority of the novel genotypes were detected only once and the most frequent one was detected five times from two adjacent plots in 2005B.

Variation in genotype frequencies among inoculants

Contingency χ2 tests indicated that there were highly significant (P < 0.001) differences in genotype frequencies among the nine inoculants in the pathogen collections sampled from the different host treatments. Isolate SN99CH2.12a became well established and increased in frequency from 2004A to 2005A on all host treatments but decreased in frequency from 2005A to 2005B on four of five hosts (Figure 3). Isolate SN99CH2.09a established well at the beginning of the 2004 season on all host treatments. Its frequency increased from less than 20% in 2004A to about 35% in 2005B on the partially resistant cultivar Runal and increased from about 10% in 2004A to nearly 45% in 2005B on the partially resistant cultivar Tamaro. Isolate C1 established well on all host treatments. Its frequency steadily increased from less than 5% in 2004A to nearly 40% in 2005B on cultivar Levis but gradually decreased from 15% in 2004A to 0% in 2005B on the mixture. The frequency of isolate SN99CH2.04 increased on all host treatments except the cultivar mixture during the growing seasons (from 2004A to 2004B and from 2005A to 2005B) but decreased in frequency during the saprophytic phase (i.e. between 2004B and 2005A, Figure 3).
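The contingency χ2 test used above can be illustrated with a minimal sketch. The counts below are hypothetical and are not data from this experiment; the function name is also illustrative. The sketch only computes the Pearson statistic and its degrees of freedom for a table of isolate counts (columns) by host treatment (rows).

```python
from typing import Sequence, Tuple


def chi_square_contingency(table: Sequence[Sequence[int]]) -> Tuple[float, int]:
    """Return (Pearson chi-square statistic, degrees of freedom) for an r x c count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under the null hypothesis of equal genotype
            # frequencies across host treatments.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, dof


# Hypothetical counts: two host treatments (rows) by three isolates (columns).
counts = [
    [20, 10, 10],
    [10, 20, 10],
]
stat, dof = chi_square_contingency(counts)
print(f"chi2 = {stat:.3f}, df = {dof}")
```

The statistic is then compared with the χ2 distribution on the stated degrees of freedom to obtain a P value.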

Frequencies and their 95% confidence intervals of nine Phaeosphaeria nodorum isolates recovered from different host treatments during the 2004 and 2005 growing seasons. Only the last part of each isolate name (See Table 1 for full names) is shown in the figure. A) Levis; B) Runal; C) 1:1 Mixture of Runal and Tamaro; D) Tamaro and E) Tirone.

Comparisons of genotype frequency among P. nodorum populations from different hosts

No significant differences in genotype frequency were detected between the pathogen populations collected from any pair of host treatments in 2004A (Table 1). But significant differences (pre-Bonferroni correction) were detected between two pairs of pathogen populations each in 2004B (Tirone-Runal and Tirone-Tamaro, Table 1) and 2005A (Levis-Tirone and Levis-Runal, Table 2). Pair-wise comparison was not conducted for the 2005B collection because of the small sample size. Following a Bonferroni correction for multiple comparisons, the hypothesis of no difference in population genetic structure among P. nodorum populations sampled from different hosts was rejected for the 2004B and 2005A collections. When all populations from the same time point were considered simultaneously using a multi-population comparison, significant differences in genotype frequencies were detected in collections 2004B, 2005A and 2005B but not in collection 2004A (Table 3). There was a clear pattern of increasing differences in genotype frequencies among pathogen populations sampled from different hosts over time (Table 3).
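As a rough illustration of the Bonferroni correction mentioned above, the sketch below counts the pair-wise comparisons among five host treatments and derives the per-test threshold. It assumes a family-wise significance level of 0.05 and that the family consists of all host pairs at one sampling time; neither assumption is stated in this section.

```python
from itertools import combinations

hosts = ["Levis", "Runal", "Mixture", "Tamaro", "Tirone"]

# All unordered pairs of host treatments at a single sampling time.
pairs = list(combinations(hosts, 2))

alpha = 0.05  # assumed family-wise error rate
adjusted_alpha = alpha / len(pairs)  # Bonferroni per-comparison threshold


def significant_after_bonferroni(p_value: float, n_tests: int, alpha: float = 0.05) -> bool:
    """Return True if p_value passes the Bonferroni-adjusted threshold."""
    return p_value < alpha / n_tests


print(len(pairs), adjusted_alpha)
```

Under these assumptions a comparison with P = 0.01 would count as significant before the correction but not after it.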

Changes in genotype frequencies among P. nodorum populations over time

Between 2004A and 2004B, significant changes in genotype frequencies prior to Bonferroni correction were observed in P. nodorum populations collected from Runal, Tamaro, and Tirone but not from Levis (Table 4). Significant changes prior to Bonferroni correction also occurred in the populations collected from Tamaro, Tirone and Levis between 2004B and 2005A (i.e. during the saprophytic phase) as well as between 2004A and 2005A. The changes in genotype frequencies were not significant in the populations sampled from the host mixture in any pair-wise comparisons over the two-year experiment. Significant differences in genotype frequency were detected in pathogen populations sampled from all host populations except the mixture when pathogen populations from three collections of the same host were considered together in a multi-population comparison (Table 4 Column 5). When data from different host treatments at the same sampling time were pooled to form a single population, all comparisons in genotype frequencies were significant both before and after Bonferroni correction (Table 5).

Selection coefficients

Significant differences in selection coefficients were found on all treatments except the cultivar mixture. The average selection coefficients of the five most common isolates ranged from 0.03 to 0.83 across cultivars (Table 6). Isolates differed in their degree of adaptation to the different cultivars as indicated by the significant cultivar-by-isolate interaction in the analysis of variance for selection coefficients (Table 7). For example, isolate SN99CH2.04a displayed the highest fitness on cultivar Runal and SN99CH2.09a displayed the highest fitness on cultivar Tamaro. SN99CH3.20a, which was the only inoculated isolate carrying the ToxA gene, had selection coefficients ranging from 0.29 on Tirone to 0.80 on Runal. All isolates exhibited similar fitness on the mixture as indicated by no significant difference in their selection coefficients.
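For readers unfamiliar with selection coefficients, the sketch below shows one common way to estimate them for asexually reproducing (haploid) genotypes from frequency changes relative to a reference genotype. This is an assumed textbook model and not necessarily the estimator used in this study; the function names and the frequencies in the example are hypothetical.

```python
def relative_fitness(p0: float, pt: float, ref0: float, reft: float,
                     generations: float) -> float:
    """Per-generation fitness of a genotype relative to a reference genotype.

    Assumes discrete generations and constant relative fitness w, so that
    (pt / reft) = (p0 / ref0) * w ** generations.
    """
    ratio_start = p0 / ref0
    ratio_end = pt / reft
    return (ratio_end / ratio_start) ** (1.0 / generations)


def selection_coefficient(p0: float, pt: float, ref0: float, reft: float,
                          generations: float) -> float:
    """Selection coefficient s = 1 - w against the genotype, relative to the reference."""
    return 1.0 - relative_fitness(p0, pt, ref0, reft, generations)


# Hypothetical frequencies: the focal isolate falls from 0.2 to 0.1 while the
# reference isolate rises from 0.2 to 0.4 over two asexual generations.
s = selection_coefficient(p0=0.2, pt=0.1, ref0=0.2, reft=0.4, generations=2)
print(round(s, 3))
```

With the fittest isolate as the reference, s lies between 0 and 1, and larger values indicate stronger selection against the focal isolate.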

Discussion

Rapid change in the composition of P. nodorum populations

Because the epidemics were initiated by artificially inoculating the five host treatments with the same P. nodorum population (i.e. the mixture of nine marked strains in equal proportions), our null hypotheses were that the frequencies of the nine released isolates would be nearly equal in different host populations and that the genetic composition of these populations would not change over time. Instead, we observed significant differences in the frequencies of the released isolates and the majority of P. nodorum populations sampled from the five host treatments changed significantly over time. The differences in genotype frequency among the inoculated strains within a host population and among host populations sampled from different points in time could be due to random genetic drift or natural selection, but we believe that the observed differences in this case should be attributed mainly to selection. We have two lines of evidence supporting this hypothesis. 1) If genetic drift were the main factor, we would expect random changes in genotype frequencies among the P. nodorum populations sampled from different hosts. Instead, we found that the temporal dynamics of the P. nodorum populations were strongly affected by the corresponding host populations, as indicated by significant changes in genotype frequencies both at local (pair-wise comparisons, Table 4 columns 2-4) and global (multiple population comparison, Table 4 last column) levels of comparison. Selection coefficients were also strongly affected by host genotypes (Table 6). 2) The differences in genetic composition among pathogen populations from different hosts increased over time. Greater differences in genotype frequencies were observed among P. nodorum populations sampled from different hosts at late stages of the experiment compared to early stages of the experiment (Tables 3 and 5).

Phaeosphaeria nodorum requires 2-3 weeks to complete a cycle of asexual reproduction and the discharge of its pycnidiospores requires rain [45, 52–54]. The time intervals between the first collection and second collection were 28 days in the 2003-2004 experiment and 60 days in the 2004-2005 experiment, respectively. Using the meteorological data provided by the local weather station, we estimate that only one generation of asexual reproduction occurred between the first collection and the second collection in 2004 while two asexual generations occurred between the first collection and the second collection in 2005. The significant changes in population composition observed in our experiments indicate strong competition among pathogen genotypes and rapid adaptation to particular hosts, consistent with the hypothesis of rapid pathogen evolution in agricultural ecosystems. Elevated rates of pathogen evolution in agriculture have also been supported empirically for other plant-pathogen interactions. In Mycosphaerella graminicola, pathogen populations were collected three times during a single growing season from a susceptible host and rapid directional increases/decreases in genotype frequency were observed for all marked isolates across all replicates [32]. Sequence analyses of plant cell wall degrading enzymes in M. graminicola [6] provide further evidence that the evolution of pathogens is accelerated in agricultural ecosystems. Montarry et al. [11] also detected a rapid change of population composition in the potato pathogen Phytophthora infestans.
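The generation estimates above can be checked against the bounds set by time alone. The sketch below uses only the 2-3 week cycle length and the two sampling intervals given in the text; the function name is illustrative, and the calculation deliberately ignores rainfall, which the estimates in the text take into account.

```python
def generation_bounds(interval_days: int,
                      min_cycle_days: int = 14,
                      max_cycle_days: int = 21) -> tuple:
    """Return (fewest, most) complete asexual cycles that fit in the interval.

    The bounds consider elapsed time only; spore discharge also requires rain,
    so the realised number of generations can be lower than the upper bound.
    """
    fewest = interval_days // max_cycle_days
    most = interval_days // min_cycle_days
    return fewest, most


for label, days in [("2004 (28 days)", 28), ("2005 (60 days)", 60)]:
    print(label, generation_bounds(days))
```

The estimates of one generation in 2004 and two in 2005 fall at the lower end of these ranges, as expected when rain events limit spore dispersal.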

The only strain carrying ToxA, SN99CH3.20a, began at a relatively high frequency (17-27%) in each host treatment but was always present at a lower frequency (0-13%) by the final 2005B sample, with an average decrease in frequency of 17%. By comparison, the two other strains (SNCH3.08a and SNCH3.10a) that had an overall decrease in frequency on each host treatment between the first and the final collections showed a decrease averaging less than 2%. A fitness cost associated with ToxA was proposed earlier to explain the observed differences in frequencies of ToxA positive strains among geographical P. nodorum populations [55]. However, this experiment was not designed to determine whether there is a fitness cost associated with carrying ToxA and it is not clear whether any of the Swiss wheat cultivars used in this experiment carry the corresponding toxin sensitivity allele Tsn1. Therefore, we cannot conclude that the decrease in frequency of SN99CH3.20a reflected a fitness cost associated with ToxA.

The effect of host diversity and partial resistance on the evolution of P. nodorum

Both theoretical and empirical studies have demonstrated that increasing genetic diversity in host populations through deployment of cultivar mixtures offers a promising approach to control plant diseases, with the advantages of lower input costs and a reduction in ecological damage compared to use of fungicides while also providing greater yield stability [12, 22, 56–58]. Investigations of the effect of cultivar mixtures on the evolution of pathogens have been mainly theoretical [12, 33, 34]. It was hypothesized that increasing genetic diversity in host populations would retard the rate at which pathogens evolve [14, 15, 21, 59] because heterogeneity in the host population would lead to divergent selection pressure on the pathogen population [17]. Several theoretical investigations support the hypothesis that increasing genetic diversity in host populations by using cultivar mixtures will delay the emergence of virulence against major resistance genes [22, 33, 34]. For quantitative resistance, mixing two cultivars in any proportions may reduce the final virulence attained by the pathogen population and prolong the time needed to reach the equilibrium point of highest virulence [12].

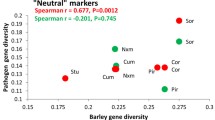

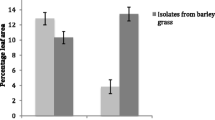

We determined the effect of host diversity on the population dynamics of P. nodorum by directly monitoring changes in frequencies of marked strains in a replicated field experiment. Our results support the hypothesis that increasing genetic diversity in host populations through deployment of cultivar mixtures can slow down the rate of evolution in pathogen populations. Multi-population comparisons indicated that the genetic structure of P. nodorum populations sampled from the host mixture did not change significantly over two years (Table 4 last column) and displayed the lowest variation in selection coefficients (Table 6). Although this experiment included only one host mixture, a similar evolutionary pattern was observed in field experiments involving other plant pathogens including Mycosphaerella graminicola on wheat [32] and Rhynchosporium secalis and Blumeria hordei on barley [38, 60].

It was postulated that partial resistance would retard the evolution of pathogens and thus increase the durability of resistance [24, 61]. A theoretical study indicated that virulence of pathogens would evolve slowly in the presence of partially resistant hosts [9]. We tested this hypothesis in our experiments by comparing the changes in frequency of marked pathogen strains competing on susceptible and partially resistant cultivars. In a similar experiment conducted using the wheat-Mycosphaerella graminicola pathosystem, we found that the pathogen populations sampled from a partially resistant cultivar exhibited less change in genetic structure over time and smaller selection coefficients than those from a susceptible cultivar [32], consistent with the theoretical expectation. Results from this experiment also support the hypothesis. We detected fewer significant changes in P. nodorum populations sampled from the partially resistant cultivars Tamaro and Runal than from the susceptible cultivar Tirone and resistant cultivar Levis (Table 4). Multi-population comparisons revealed that the changes in genetic structure of the P. nodorum populations sampled from the two partially resistant cultivars were significant at the 5% level over the two years of the experiment, while the differences were significant at the 1% level on the resistant and susceptible cultivars. This result suggests that directional selection also occurs in pathogen populations infecting partially resistant cultivars, albeit at a slower pace, leading to the erosion of resistance [2]. The adaptation to partial resistance was also observed in field experiments with the barley scald pathogen Rhynchosporium secalis [38] and the potato late blight pathogen Phytophthora infestans [19]. Some theoretical analyses of host-pathogen co-evolution suggest that hosts with partial resistance can select for increased virulence in pathogen populations [8]. Our findings agree with these predictions.

Differential selection on P. nodorum strains during parasitic and saprophytic phases

It is hypothesized that saprophytic and parasitic phases of pathogen life cycles may select for different pathogen traits. During the parasitic phase, pathogen strains with a high capacity to exploit the host may have a selective advantage if they are able to produce greater numbers of viable offspring compared to strains with a lower capacity. But the traits that are favored during the parasitic phase of the life cycle may be selected against during saprophytic phases of the life cycle when living hosts are not available [7, 23]. The trade-offs that occur between parasitic and saprophytic phases of the life cycle may prevent or delay the emergence of high levels of pathogen virulence.

We found some evidence for differential selection between parasitic and saprophytic phases of the life cycle in the P. nodorum populations sampled from Runal, suggesting a fitness cost associated with high virulence during the saprophytic phase [10, 62]. On Runal, a significant difference in P. nodorum genetic structure was found between 2004A and 2004B but not between 2004A and 2005A, suggesting that selection occurring during the parasitic phase might be offset by selection that occurred during the saprophytic phase. The frequency distribution of isolate SN99CH2.04 also suggested that differential selection might occur between parasitic and saprophytic phases of the pathogen life cycle. This isolate increased in frequency on four of the five treatments (the mixture was the exception) during the parasitic phase of the disease cycle but decreased in frequency during the saprophytic phase on all treatments except the cultivar mixture (Figure 3). This finding indicates that this strain may exhibit higher relative fitness during the parasitic phase on the majority of living host tissue but lower competitive ability during the saprophytic phase on the dead host tissue. Using the same experimental approach, Abang et al. [38] also found that some isolates increased in frequency during the parasitic phase but decreased in frequency during the saprophytic phase in the barley pathogen Rhynchosporium secalis.

The lack of evidence for differential selection between parasitic and saprophytic phases in other hosts and isolates may be partially attributed to our sampling strategy. The 2005A collection was made from infected plants several months after the application of the wheat stubble inoculum. Thus at least one cycle of parasitic competition had likely occurred among the pathogen strains before this collection was made. If there was differential selection between the parasitic and saprophytic phases, selection for traits involved in establishment and reproduction during the initiation of the 2005A epidemics may have partially offset selection for traits involved in saprophytic competition. Further experiments with an additional population sample drawn at the beginning of the second cycle of the parasitic phase will be necessary to confirm this hypothesis.

Only isolates derived from asexual reproduction of the nine inoculants were used to calculate selection coefficients and determine the effects of host genotypes on the population genetic structure of P. nodorum. Isolates having genotypes different from the nine inoculated strains (called novel isolates) were excluded. Because the contribution of mutation to the formation of new genotypes is expected to be trivial within the time scale of this experiment, we believe these novel isolates originated either via immigration from outside of the experimental plots or by recombination among inoculants and/or immigrants within the experimental plots (details in [39]). The current paper focuses on the influence of host genotypes and diversity on clonal competition and we believe that excluding these novel isolates did not affect our interpretations.

We used both pair-wise and multiple population comparisons to evaluate the effects of evolutionary time (different sampling points) and host genotypes on the population dynamics of P. nodorum. In the multiple population comparisons, pathogen populations from different sampling points or hosts were considered simultaneously in a single analysis. This approach is useful to determine the overall pattern of evolutionary change in pathogen populations over hosts (e.g. last column in Table 4) but cannot be used to determine the sequential change in population structure over time within a host, for example whether the population genetic structure between the first (2004A) and the second (2004B) collection differs more than that between the first and the last (2005B) collection. For the latter case, we adopted pair-wise comparisons that included a Bonferroni correction. We believe that combining these approaches was necessary to achieve a comprehensive analysis of the data.

Conclusions

Understanding the evolutionary response of pathogen populations to host diversity and environmental changes (such as over-wintering or over-summering between growing seasons) is important for disease management. Many studies on host-pathogen interactions have focused on the development of mathematical models [12, 33, 63] to predict pathogen evolution in response to different strategies of resistance gene deployment [13, 61, 64–66]. Very few empirical studies have been conducted to test these theoretical models in agricultural ecosystems. Here, we present empirical evidence that strong selection occurs during both parasitic and saprophytic phases of the disease cycle. Evolution during the parasitic phase occurred most slowly on the cultivar mixture. The same result was also reported in similar experiments conducted with the wheat pathogen Mycosphaerella graminicola [32] and the barley pathogen Rhynchosporium secalis [38], suggesting that the observed pattern of evolution may be applicable for other splash-dispersed pathogens on cereals.

Methods

Experimental design

A two-year mark-release-recapture experiment was conducted at the Agroscope Changins-Wädenswil research center in Changins, Switzerland during the 2003-2004 winter wheat growing season on field allotment-34-North and the 2004-2005 winter wheat growing season on field allotment-35-North. Both fields had been under permanent meadow for at least three years prior to the mark-release-recapture experiment. Four commercial Swiss wheat cultivars, namely Levis, Runal, Tamaro and Tirone, were used in these experiments. The varieties differed in quantitative resistance to P. nodorum leaf infection according to disease assessments conducted at Changins between 2001 and 2002 [67]. Cultivars Levis, Tamaro and Runal are partially resistant to P. nodorum leaf blotch with their levels of resistance decreasing in that order. Cultivar Tirone is susceptible to leaf blotch. Cultivar Levis is partially resistant on the leaves but not on glumes. The four cultivars and a 1:1 mixture of cultivars Runal and Tamaro (5 host treatments in total) were planted in a randomized complete block design (RCBD) with three replications. The field plots were 1.5 m in width and 4.5 m in length. Each wheat plot was surrounded by four equal-sized plots planted with the highly resistant triticale variety Tridel. The experiment was planted on 5 October 2003 in the first year and on 17 October 2004 in the second year using commercial seeds treated with the fungicide Coral (2.38% difenoconazole and 2.38% fludioxonil, 2 ml/kg seeds).

Nine P. nodorum isolates collected in 1999 from naturally infected wheat fields near Bern, Switzerland were chosen as inoculants for the 2003-2004 experiment. Each of the isolates had distinct multi-locus haplotypes when assayed with seven single-locus RFLP markers [48], ten polymorphic EST-derived microsatellite markers and one minisatellite marker [68]. Only one of the nine isolates (SN99CH3.20a) carried the ToxA gene [55] that encodes a host specific toxin. After completing the field experiments it was discovered that one isolate (SN99CH3.19a) had been replaced by a contaminant of unknown origin, hereafter called C1. Following removal from long-term storage at -80°C, the isolates were first grown on Yeast Maltose Agar (YMA, yeast 4 g l⁻¹, maltose 4 g l⁻¹, sucrose 4 g l⁻¹, agar 10 g l⁻¹) at 21°C for ten days and then transferred to 1000 ml flasks containing 300 g of sterilized wheat kernels (cultivar Arina) in a dark incubator at 4°C. Three months later the infected wheat kernels were harvested and ground to a powder using a gristmill. The powdered kernels were mixed with distilled water and the spore suspension was filtered through cheese cloth and glass-wool. The spore suspension from each isolate was adjusted to 10⁶ spores per ml using a hemacytometer and mixed in equal proportions. A surfactant (Tween 20) was added to the spore suspension at the rate of one drop per 50 ml. The aqueous spore suspension was applied onto disease-free wheat seedlings at growth stage 31 on 11 May 2004. Each field plot was sprayed with 500 ml of the calibrated spore suspension. To maximize the humidity and increase the probability of infection, inoculations were carried out in the late afternoon on a cloudy day and the inoculated seedlings were covered with a plastic tarp for 24 hours.

The source of primary inoculum in the 2004-2005 experiment was the infected straw and other plant debris saved from the first year's experiment. After harvesting the grain at the end of July 2004, the straw and other plant debris in each plot were collected and stored separately in burlap potato sacks for 3 months. The sacks were stored in a dry, dark and cool room to allow the development of the saprophytic phase without the risk of excessive moulding. At the beginning of tillering (Zadoks stages 13 to 21 [69]), the straw was applied onto the corresponding host plots.

A total of four fungal collections were made across the two growing seasons. The first collection, hereafter called 2004A, was made on 4 June 2004 from the third or fourth full leaf [60] at three weeks after the artificial inoculation. The second collection, hereafter called 2004B, was made on 2 July 2004 from flag leaves. The third collection, hereafter called 2005A, was made on 11 April 2005 from the second true leaf and the last collection, hereafter called 2005B, was made on 10 June 2005 from the third true leaf. For each collection, 30 to 40 leaves were collected from each inoculated plot at intervals of approximately 20 cm along transects within the inner rows of the field plots. In most cases, only one isolate was made from each infected leaf. Because many lesions did not contain pycnidia, the total number of isolations made was much lower than the number of wheat leaves collected. For some collections with very low levels of infection, two isolations were made from the same leaf. In these cases, isolations were made from clearly separated lesions to minimize the probability of sampling isolates from the same infection. Our earlier work showed that P. nodorum isolations made from different lesions within an infected leaf usually contain different genotypes, suggesting they originate from different infection events [70].

DNA extraction and microsatellite data collection

DNA was extracted from each isolate using the DNeasy Plant Mini DNA extraction kit (Qiagen GmbH, Germany) according to the specifications of the manufacturer. The genotype of each isolate was determined using the same ten EST-derived microsatellite markers (SN1, SN3, SN5, SN11, SN15, SN16, SN17, SN21, SN22, and SN23) and one minisatellite marker (SN8) used to mark the nine inoculants. Multiplexed polymerase chain reactions (PCR) were carried out with fluorescently labeled primers using the same conditions described previously [68]. The PCR products were first cooled on ice for 2 min and then separated on an ABI PRISM 3100 sequencer according to the manufacturer's instructions (Applied Biosystems). Fragment sizes were estimated and alleles were assigned using the program GENESCAN 3.7 (Applied Biosystems).

Data analysis

The multilocus haplotype (MLHT) for each isolate was formed by joining the alleles at each of the 11 marker loci. Isolates with the same MLHT were considered to be clones, the products of asexual reproduction for a particular genotype. Because fungal collections were found to consist of both inoculated and novel isolates (novel isolates are defined as isolates having MLHTs different from any of the nine inoculated isolates [39]), only isolates derived from asexual reproduction of the nine inoculants were used to calculate selection coefficients and determine the effects of host genotypes on the population genetic structure of P. nodorum. The novel isolates, which originated either via immigration from outside of the experimental fields or by recombination among inoculated isolates and immigrants, were considered in a separate publication [39].
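The haplotype matching described above can be illustrated with a minimal Python sketch. It is not the authors' pipeline: the function names (`make_mlht`, `classify`), the isolate labels and all fragment sizes are hypothetical, and the sketch assumes that an isolate counts as a clone of an inoculant only when its alleles match at all 11 loci.

```python
from collections import Counter

# Marker names as listed in the text: ten microsatellites plus the minisatellite SN8.
LOCI = ["SN1", "SN3", "SN5", "SN8", "SN11", "SN15",
        "SN16", "SN17", "SN21", "SN22", "SN23"]


def make_mlht(alleles):
    """Join the allele (fragment size) at each locus into one hashable haplotype."""
    return tuple(alleles[locus] for locus in LOCI)


def classify(isolates, inoculants):
    """Count recovered isolates per inoculant; unmatched haplotypes are 'novel'."""
    lookup = {make_mlht(a): name for name, a in inoculants.items()}
    return Counter(lookup.get(make_mlht(a), "novel") for a in isolates)


# Hypothetical fragment sizes, for illustration only (not data from the study).
ref_a = {locus: 100 + i for i, locus in enumerate(LOCI)}
ref_b = dict(ref_a, SN5=150)    # differs from ref_a at one locus
novel = dict(ref_a, SN23=999)   # matches no inoculant
counts = classify([ref_a, ref_a, ref_b, novel], {"A": ref_a, "B": ref_b})
```

Only the counts assigned to inoculant names would then feed into the frequency comparisons and selection coefficient estimates; the `novel` class would be set aside.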

Pair-wise and multiple population comparisons in genotype frequencies were performed using contingency χ² tests to detect differences in pathogen populations sampled from different hosts or sampling times [71]. Pair-wise comparisons were corrected using a sequential Bonferroni procedure as described previously [72]. Multiple population comparisons were conducted by using all populations sampled from the same host across different sampling points simultaneously, generating a c × l contingency table, where c is the number of sampling points and l is the number of genotypes detected. The association between sampling time and differences in genotype frequency among populations was evaluated using simple linear correlation. In this analysis, differences in genotype frequency among populations were measured by $G_{ST}$ [73, 74].
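The three statistics named above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the software used in the study: the function names are hypothetical, the sequential Bonferroni step is written as the Holm-type step-down procedure, and $G_{ST}$ is computed with equal population weights by treating each clonal genotype as an allele at a single haploid locus. The χ² function assumes every genotype column contains at least one count.

```python
def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a c x l count table."""
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    n = sum(row_tot)
    stat = 0.0
    for r, row in enumerate(table):
        for c, observed in enumerate(row):
            expected = row_tot[r] * col_tot[c] / n
            stat += (observed - expected) ** 2 / expected
    return stat, (len(row_tot) - 1) * (len(col_tot) - 1)


def sequential_bonferroni(p_values, alpha=0.05):
    """Step-down correction: compare the k-th smallest p-value with alpha / (m - k + 1)."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        if p_values[i] > alpha / (m - rank):
            break  # once one test fails, every larger p-value fails too
        reject[i] = True
    return reject


def gst(freqs):
    """G_ST = (H_T - H_S) / H_T from per-population genotype frequency lists."""
    k = len(freqs)
    h_s = sum(1 - sum(p * p for p in pop) for pop in freqs) / k
    mean = [sum(col) / k for col in zip(*freqs)]
    h_t = 1 - sum(p * p for p in mean)
    return (h_t - h_s) / h_t


# Hypothetical counts: rows are sampling points, columns are genotypes.
stat, dof = chi_square([[10, 20], [20, 10]])
flags = sequential_bonferroni([0.01, 0.04, 0.03])
```

Converting the χ² statistic to a p-value requires the χ² distribution with `dof` degrees of freedom, which is left to a statistics package here.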

Selection coefficients of the inoculated isolates within each host treatment were estimated simultaneously by setting the coefficient of the most-fit isolate (the isolate with the greatest increase in frequency over the considered time period) to zero as described previously [32]. Let the initial frequencies of genotypes $G_1$, $G_2$, ..., $G_i$ be $p_{1,0}$, $p_{2,0}$, ..., $p_{i,0}$ and their selection coefficients per generation be $s_1$, $s_2$, ..., $s_i$, respectively. Then the average fitness for this population at generation t = 0 will be:

$$\bar{w}_0 = p_{1,0}(1 - s_1) + p_{2,0}(1 - s_2) + \dots + p_{i,0}(1 - s_i) \qquad (1)$$

And the frequency of genotypes $G_1$, $G_2$, ..., $G_i$ after one generation of selection will be:

$$p_{k,1} = \frac{p_{k,0}(1 - s_k)}{\bar{w}_0}, \quad k = 1, 2, \dots, i \qquad (2)$$

And the frequency of genotypes $G_1$, $G_2$, ..., $G_i$ after t generations of selection will be:

$$p_{k,t} = \frac{p_{k,0}(1 - s_k)^t}{\bar{w}_0 \bar{w}_1 \cdots \bar{w}_{t-1}}, \quad k = 1, 2, \dots, i \qquad (3)$$

Let $G_j$ be the most-fit genotype, i.e. $s_j = 0$, then the average fitness ($\bar{w}_{t-1}$) in the t-1 generation will be:

$$\bar{w}_{t-1} = \frac{p_{j,t-1}}{p_{j,t}}, \quad \text{so that} \quad \bar{w}_0 \bar{w}_1 \cdots \bar{w}_{t-1} = \frac{p_{j,0}}{p_{j,t}} \qquad (4)$$

$s_1$, $s_2$, ..., $s_i$ can be obtained by substituting equation 4 into equation 3, leading to the general solution of:

$$s_k = 1 - \left( \frac{p_{k,t} \, p_{j,0}}{p_{k,0} \, p_{j,t}} \right)^{1/t} \qquad (5)$$

This estimate of the selection coefficient measures the overall fitness of a genotype relative to the most-fit isolate in a population during the entire life cycle of the pathogen, taking into account its ability to infect, colonize, reproduce and spread [32]. For example, a genotype with a selection coefficient of 0.30 has 30% lower fitness than the most-fit genotype. The selection coefficient differs from selection intensity, a term used mainly in breeding and quantitative genetics to quantify a potential evolutionary gain after selecting a pool of parents from a variable population. To make more robust estimates, selection coefficients were estimated for each host treatment by pooling together data from different replications using only five of the nine inoculants for the 2004A and 2004B collections. Isolate SN99CH3.23a was not recaptured during the course of the experiment and the other three inoculants were recovered at frequencies too low to make meaningful estimates of selection coefficients. Selection coefficients were not estimated for the 2005 collections due to small sample sizes (9-18 isolates/host treatment) remaining after the novel isolates were excluded from 2005B. The means and standard deviations of the selection coefficients were generated based on 100 resamples of the original genotype frequencies using the Excel add-in PopTools 2.7 (CSIRO, Australia). Tukey's honestly significant difference test, implemented in SYSTAT, was used to compare selection coefficients among the five inoculants.
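The estimation and resampling steps can be sketched in Python as follows. This is a hedged illustration rather than the PopTools workflow used in the study. It assumes the per-generation coefficient of genotype k relative to the most-fit genotype j is s_k = 1 - [(p_k,t / p_k,0) / (p_j,t / p_j,0)]^(1/t), and that each resample is a multinomial draw of the original sample size at both sampling points. The function names, the genotype counts and the number of generations are all hypothetical.

```python
import random
import statistics


def selection_coefficients(start, end, generations):
    """Per-generation s for each genotype relative to the most-fit one (s = 0).

    start, end: dicts mapping genotype -> count at the two sampling points.
    """
    n0, nt = sum(start.values()), sum(end.values())
    ratio = {g: (end[g] / nt) / (start[g] / n0) for g in start}
    best = max(ratio.values())  # most-fit genotype: greatest relative increase
    return {g: 1 - (r / best) ** (1 / generations) for g, r in ratio.items()}


def resample(counts, rng):
    """Multinomial resample of the same size from observed genotype counts."""
    genotypes = list(counts)
    weights = [counts[g] for g in genotypes]
    draws = rng.choices(genotypes, weights=weights, k=sum(weights))
    return {g: draws.count(g) for g in genotypes}


def bootstrap(start, end, generations, n_resamples=100, seed=1):
    """Mean and standard deviation of s for each genotype over the resamples."""
    rng = random.Random(seed)
    runs = []
    while len(runs) < n_resamples:
        s0, s1 = resample(start, rng), resample(end, rng)
        if min(s0.values()) == 0:
            continue  # a genotype absent at the start leaves the ratio undefined
        runs.append(selection_coefficients(s0, s1, generations))
    return {g: (statistics.mean([r[g] for r in runs]),
                statistics.stdev([r[g] for r in runs])) for g in start}


# Hypothetical counts for three genotypes; two generations is an assumption.
start = {"A": 20, "B": 20, "C": 20}
end = {"A": 30, "B": 20, "C": 10}
s = selection_coefficients(start, end, generations=2)
boot = bootstrap(start, end, generations=2)
```

In this toy example genotype A shows the greatest relative increase, so its coefficient is zero and the coefficients of B and C are expressed relative to it.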

References

Anderson JB: Evolution of antifungal-drug resistance: mechanisms and pathogen fitness. Nature Rev Microbio. 2005, 3: 547-556.

McDonald BA, Linde C: Pathogen population genetics, evolutionary potential and durable resistance. Annu Rev Phytopathol. 2002, 40: 359-379.

Stahl EA, Bishop JG: Plant-pathogen arms races at the molecular level. Curr Opinion Plant Biol. 2000, 3: 299-304.

Laine AL: Evolution of host resistance: looking for coevolutionary hotspots at small spatial scales. Proc R Soc B. 2006, 273: 267-273.

Stukenbrock EH, McDonald BA: The origins of plant pathogens in agro-ecosystems. Annu Rev Phytopathol. 2008, 46: 75-100.

Brunner PC, Keller N, McDonald BA: Wheat domestication accelerated evolution and triggered positive selection in the β-Xylosidase enzyme of Mycosphaerella graminicola. PLoS One. 2009, 4: e7884.

Kiyosawa S: Genetic and epidemiological modeling of breakdown of plant disease resistance. Annu Rev Phytopathol. 1982, 20: 93-117.

Goodwin SB, Cohen BA, Fry WE: Panglobal distribution of a single clonal lineage of the Irish potato famine fungus. Proc Natl Acad Sci USA. 1994, 91: 11591-11595.

Gandon S, Michalakis Y: Evolution of parasite virulence against qualitative and quantitative host resistance. Proc R Soc Lond B. 2000, 267: 985-990.

Bergelson J, Dwyer G, Emerson JJ: Models and data on plant-enemy coevolution. Annu Rev Genet. 2001, 35: 469-499.

Montarry J, Corbiere R, Lesueur S, Glais I, Andrivon D: Does selection by resistant hosts trigger local adaptation in plant-pathogen systems?. J Evol Biol. 2006, 19: 522-531.

Marshall B, Newton AC, Zhan J: Quantitative evolution of aggressiveness of powdery mildew under two-cultivar barley mixtures. Plant Pathol. 2009, 58: 378-388.

Mundt CC: Use of multiline cultivars and cultivar mixtures for disease management. Annu Rev Phytopathol. 2002, 40: 381-410.

Burdon JJ: Disease and Plant Population Biology. 1987, Cambridge University Press, Cambridge

Dileone JA, Mundt CC: Effect of wheat cultivar mixtures on populations of Puccinia striiformis races. Plant Pathol. 1994, 43: 917-930.

Higashi T: Genetic studies on field resistance of rice to blast disease. Bull Tohoku Natl Agri Exp Stn. 1995, 90: 19-75.

Ebert D, Hamilton WD: Sex against virulence: the coevolution of parasitic diseases. Trends Ecol Evol. 1996, 11: 79-82.

Zhan J, Mundt CC, McDonald BA: Using RFLPs to assess temporal variation and estimate the number of ascospores that initiate epidemics in field populations of Mycosphaerella graminicola. Phytopathology. 2001, 91: 1011-1017.

Andrivon D, Pilet F, Montarry J, Hafidi M, Corbiere R, Achbani EH, Pelle R, Ellisseche D: Adaptation of Phytophthora infestans to partial resistance in potato: evidence from French and Moroccan populations. Phytopathology. 2007, 97: 338-343.

Zhan J, Mundt CC, McDonald BA: Sexual reproduction facilitates the adaptation of parasites to antagonistic host environment: evidence from field experiment with wheat-Mycosphaerella graminicola system. Intl J Parasitol. 2007, 37: 861-870.

Parlevliet JE: Stabilizing selection in crop pathosystems: an empty concept or reality?. Euphytica. 1981, 30: 259-269.

Lannou C, Mundt CC: Evolution of a pathogen population in host mixtures: simple race - complex race competition. Plant Pathol. 1996, 45: 440-453.

Vanderplank JE: Disease Resistance in Plants. 1968, Academic Press, New York

Simons MD: Polygenic resistance to plant disease and its use in breeding resistant cultivars. J Environ Qual. 1972, 1: 232-240.

Robinson RA: Plant Pathosystems. 1976, Springer, Berlin, Germany

Maruyama K, Kikuchi F, Yokoo M: Gene analysis of field resistance to rice blast (Pyricularia oryzae) in Rikuto Norin Mochi 4 and its use for breeding. Bull Natl Inst Agr Sci. 1983, 35: 1-31.

Parlevliet JE: What is durable resistance: a general outline. Durability of Disease Resistance. Edited by: Jacobs T, Parlevliet JE. 1993, Kluwer Academic Publishers, London

Clifford BC, Clothier RB: Physiologic specialization of Puccinia hordei on barley hosts with non-hypersensitive resistance. Trans Br Mycol Soc. 1974, 63: 421-430.

Kolmer JA, Leonard KJ: Genetic selection and adaptation of Cochliobolus heterostrophus to corn hosts with partial resistance. Phytopathology. 1986, 76: 774-777.

Pink DAC, Lot H, Johnson R: Novel pathotypes of lettuce mosaic virus-Breakdown of a durable resistance?. Euphytica. 1992, 63: 169-174.

Schouten HJ, Beniers JE: Durability of resistance to Globodera pallida. I. Changes in pathogenicity, virulence, and aggressiveness during reproduction on partially resistant potato cultivars. Phytopathology. 1997, 87: 862-867.

Zhan J, Mundt CC, Hoffer ME, McDonald BA: Local adaptation and effect of host genotype on the rate of pathogen evolution: an experimental test in a plant pathosystem. J Evol Biol. 2002, 15: 634-647.

Lannou C: Intrapathotype diversity for aggressiveness and pathogen evolution in cultivar mixtures. Phytopathology. 2001, 91: 500-510.

Segarra J: Stable polymorphisms in a two-locus gene-for-gene system. Phytopathology. 2005, 95: 728-736.

Goss EM, Larsen M, Chastagner GA, Givens DR, Grunwald NJ: Population genetic analysis infers migration pathway of Phytophthora ramorum in US nurseries. PLoS Pathog. 2009, 5: e1000583.

Zhan J, Mundt CC, McDonald BA: Measuring immigration and sexual reproduction in field populations of Mycosphaerella graminicola. Phytopathology. 1998, 88: 1330-1337.

Zhan J, Mundt CC, McDonald BA: Estimating rates of recombination and migration in populations of plant pathogens--a reply. Phytopathology. 2000, 90: 324-326.

Abang MM, Baum M, Ceccarelli S, Grando S, Linde C, Yahyaoui A, Zhan J, McDonald BA: Differential selection on Rhynchosporium secalis during the parasitic and saprophytic phases in the barley scald disease cycle. Phytopathology. 2006, 96: 1214-1222.

Sommerhalder RJ, McDonald BA, Mascher F, Zhan J: Sexual recombinants make a significant contribution to epidemics caused by the wheat pathogen Phaeosphaeria nodorum. Phytopathology. 2010, 100: 855-862.

Bennett RS, Milgroom MG, Sainudiin R, Cunfer BM, Bergstrom GC: Relative contribution of seed-transmitted inoculum to foliar populations of Phaeosphaeria nodorum. Phytopathology. 2007, 97: 584-591.

Eyal Z: The Septoria tritici and Stagonospora nodorum blotch diseases of wheat. Eur J Plant Pathol. 1999, 105: 629-641.

Cowger C, Silva-Rojas HV: Frequency of Phaeosphaeria nodorum, the sexual stage of Stagonospora nodorum, on winter wheat in North Carolina. Phytopathology. 2006, 96: 860-866.

Sommerhalder RJ, McDonald BA, Zhan J: The frequencies and spatial distribution of mating types in Stagonospora nodorum are consistent with recurring sexual reproduction. Phytopathology. 2006, 96: 234-239.

Shaner G: Effect of environment on fungal leaf blights of small grains. Annu Rev Phytopathol. 1981, 19: 263-296.

Bathgate JA, Loughman R: Ascospores are a source of inoculum of Phaeosphaeria nodorum, P. avenaria f. sp. avenaria and Mycosphaerella graminicola in Western Australia. Aust Plant Path. 2001, 30: 317-322.

Griffiths E, Ao HC: Dispersal of Septoria nodorum spores and spread of glume blotch in the field. Trans Br Mycol Soc. 1976, 67: 413-418.

Brennan RM, Fitt BDL, Taylor GS, Colhoun J: Dispersal of Septoria nodorum pycnidiospores by simulated raindrops in still air. Phytopathology. 1985, 112: 281-290.

Keller SM, McDermott JM, Pettway RE, Wolfe MS, McDonald BA: Gene flow and sexual reproduction in the wheat glume blotch pathogen Phaeosphaeria nodorum, (anamorph Stagonospora nodorum). Phytopathology. 1997, 87: 353-358.

Arseniuk E, Góral T, Scharen AL: Seasonal patterns of spore dispersal of Phaeosphaeria spp. and Stagonospora spp. Plant Dis. 1998, 82: 187-194.

Weber GF: Septoria diseases of wheat. Phytopathology. 1922, 12: 537-585.

von Wechmar MB: Investigation on the survival of Septoria nodorum Berk. on crop residues. S African J Agri Sci. 1966, 9: 93-100.

Shearer BL, Zadoks JC: The latent period of Septoria nodorum in wheat. 1. The effect of temperature and moisture treatments under controlled conditions. Neth J Plant Pathol. 1972, 78: 231-241.

Shearer BL, Zadoks JC: The latent period of Septoria nodorum in wheat. 2. The effect of temperature and moisture treatments under field conditions. Neth J Plant Pathol. 1973, 80: 48-60.

Solomon PS, Lowe RGT, Tan KC, Waters ODC, Oliver RP: Stagonospora nodorum: cause of stagonospora nodorum blotch of wheat. Mol Plant Pathol. 2006, 7: 147-156.

Stukenbrock EH, McDonald BA: Geographic variation and positive diversifying selection in the host specific toxin SnToxA. Mol Plant Pathol. 2007, 8: 321-332.

Zhu YY, Chen HR, Fan JH, Wang YY, Li Y, Chen JB, Fan JX, Yang SS, Hu LP, Leung H, Mew TW, Teng PS, Wang ZH, Mundt CC: Genetic diversity and disease control in rice. Nature. 2000, 406: 718-722.

Woldeamlak A, Grando S, Maatougui M, Ceccarelli S: Hanfets, a barley and wheat mixture in Eritrea: yield, stability and farmer preferences. Field Crops Res. 2008, 109: 50-56.

Frankow-Lindberg BE, Halling M, Hoglind M, Forkman J: Yield and stability of yield of single- and multi-clover grass-clover swards in two contrasting temperate environments. Grass Forage Sci. 2009, 64: 236-245.

Huang R, Kranz J, Welz HG: Selection of pathotypes of Erysiphe graminis f.sp. hordei in pure and mixed stands of spring barley. Plant Pathol. 1994, 43: 458-470.

Chin KM, Wolfe MS: Selection on Erysiphe graminis in pure and mixed stands of barley. Plant Pathol. 1984, 33: 535-546.

Parlevliet JE: Durability of resistance against fungal, bacterial and viral pathogens; present situation. Euphytica. 2002, 124: 147-156.

Bahri B, Kaltz O, Leconte M, de Vallavieille-Pope C, Enjalbert J: Tracking costs of virulence in natural populations of the wheat pathogen, Puccinia striiformis f.sp. tritici. BMC Evol Biol. 2009, 9: 26.

Barrett JA: Pathogen evolution in multilines and variety mixtures. Zeitschrift für Pflanzenkrankheiten und Pflanzenschutz. 1980, 87: 383-396.

Paillard S, Goldringer I, Enjalbert J, Trottet M, David J, de Vallavieille-Pope C, Brabant P: Evolution of resistance against powdery mildew in winter wheat populations conducted under dynamic management. II. Adult plant resistance. Theor Appl Genet. 2000, 101: 457-462.

De Meaux J, Mitchell-Olds T: Evolution of plant resistance at the molecular level: ecological context of species interactions. Heredity. 2003, 91: 345-352.

Long J, Holland B, Munkvold GP, Jannink JL: Response to selection for partial resistance to crown rust in oat. Crop Sci. 2006, 46: 1260-1265.

Collaud JF, Schwärzel R, Bertossa M, Menzi M, Anders M: Variétés de céréales recommandées par l'interprofession pour la récolte 2003. Revue suisse d'agriculture. 2002, 34: (insert)

Stukenbrock EH, Banke S, Zala M, McDonald BA, Oliver RP: Isolation and characterization of EST-derived microsatellite loci from the fungal wheat pathogen Stagonospora nodorum. Mol Ecol Notes. 2005, 5: 931-933.

Zadoks JC, Chang TT, Konzak CF: A decimal code for the growth stages of cereals. Weed Res. 1974, 14: 415-421.

McDonald BA, Miles J, Nelson LR, Pettway RE: Genetic variability in nuclear DNA in field populations of Stagonospora nodorum. Phytopathology. 1994, 84: 250-255.

Everitt BS: The Analysis of Contingency Tables. 1977, Wiley, New York

Rice WR: Analyzing tables of statistical tests. Evolution. 1989, 43: 223-225.

Nei M: F-statistics and analysis of gene diversity in subdivided populations. Ann Hum Genet. 1977, 41: 225-233.

Selander RK, Caugant DA, Ochman H, Musser JM, Gilmour MN, Whittam TS: Methods of multilocus enzyme electrophoresis for bacterial population genetics and systematics. Appl Environ Microbiol. 1986, 51: 873-884.

Acknowledgements

This research was supported by the Swiss Federal Institute of Technology Grant TH-49a/02-1t. SSR data were collected using facilities of the Genetic Diversity Center at ETH Zurich. We thank S. Kellenberger for the field work, D. Gobbin, M. Lutz and P. Zaffarano for help with the data analysis, and V. Martinez, C. Phan, S. Seeholzer, and M. Zala for technical assistance.

Authors' contributions

RJS inoculated field plots, isolated fungal strains, conducted molecular assays, contributed to analysis of data and manuscript preparation; BAM contributed to the experimental design and coordination of the project, interpretation of the results and manuscript preparation (including Figures 1 and 2); FM prepared pathogen inoculum, carried out field experiments and participated in sample collections; JZ conceived the study, contributed to the experimental design and coordination of the project, interpretation of the results and led the statistical analysis of data and manuscript preparation. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License ( https://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Cite this article

Sommerhalder, R.J., McDonald, B.A., Mascher, F. et al. Effect of hosts on competition among clones and evidence of differential selection between pathogenic and saprophytic phases in experimental populations of the wheat pathogen Phaeosphaeria nodorum. BMC Evol Biol 11, 188 (2011). https://doi.org/10.1186/1471-2148-11-188
