Abstract

In this article, we are concerned with a nondifferentiable minimax fractional programming problem. We derive a sufficient condition for an optimal solution to the problem and then establish weak, strong, and strict converse duality theorems for the problem and its dual problem under B-(p, r)-invexity assumptions. Examples are given to show that B-(p, r)-invex functions are generalizations of (p, r)-invex and convex functions.

AMS Subject Classification: 90C32; 90C46; 49J35.


1 Introduction

The mathematical programming problem in which the objective function is a ratio of two numerical functions is called a fractional programming problem. Fractional programming is used in various fields of study, most extensively in business and economics, particularly in situations where financial resources are scarce. Fractional programming problems arise in multiobjective programming [1, 2], game theory [3], and goal programming [4]. Problems of this type have been the subject of considerable interest in the past few years.

The necessary and sufficient conditions for generalized minimax programming were first developed by Schmitendorf [5]. Tanimoto [6] applied these optimality conditions to define a dual problem and derived duality theorems. Bector and Bhatia [7] relaxed the convexity assumptions in the sufficient optimality condition in [5], employed the optimality conditions to construct several dual models involving pseudo-convex and quasi-convex functions, and derived weak and strong duality theorems. Yadav and Mukherjee [8] employed the optimality conditions to construct two dual problems and derived duality theorems for differentiable fractional minimax programming. Chandra and Kumar [9] pointed out that the formulation of Yadav and Mukherjee [8] has some omissions and inconsistencies; they constructed two modified dual problems and proved duality theorems for differentiable fractional minimax programming.

Lai et al. [10] established necessary and sufficient optimality conditions for nondifferentiable minimax fractional problems with generalized convexity, applied these optimality conditions to construct a parametric dual model, and discussed duality theorems. Lai and Lee [11] obtained duality theorems for two parameter-free dual models of a nondifferentiable minimax fractional problem under generalized convexity assumptions.

Convexity plays an important role in deriving sufficient conditions and duality results for nonlinear programming problems. Hanson [12] introduced the concept of invexity and established Karush-Kuhn-Tucker type sufficient optimality conditions for nonlinear programming problems. These functions were named invex by Craven [13]. Generalized invexity and duality for multiobjective programming problems are discussed in [14], and generalized invexity in separable Hilbert spaces is studied by Soleimani-damaneh [15]. Soleimani-damaneh [16] provides a family of linear infinite or linear semi-infinite problems that characterize the optimality of nonlinear optimization problems. Recently, Antczak [17] proved optimality conditions for a class of generalized fractional minimax programming problems involving B-(p, r)-invex functions and established duality theorems for various duality models.

In this article, motivated by Lai et al. [10], Lai and Lee [11], and Antczak [17], we discuss sufficient optimality conditions and duality theorems for a nondifferentiable minimax fractional programming problem with B-(p, r)-invexity. This article is organized as follows. In Section 2, we give some preliminaries, including an example of a function that is (-1, 1)-invex but not convex and an example of a function that is B-(1, 1)-invex but not (p, r)-invex. In Section 3, we establish the sufficient optimality conditions. Duality results are presented in Section 4.

2 Notations and preliminaries

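Definition 1. Let f : X → ℝ (where $X\subseteq\mathbb{R}^n$) be a differentiable function, and let p, r be arbitrary real numbers. Then f is said to be (p, r)-invex (strictly (p, r)-invex) with respect to η at u ∈ X on X if there exists a function $\eta: X\times X\to\mathbb{R}^n$ such that, for all x ∈ X, the inequalities

$$\frac{1}{r}e^{rf(x)}\ \ge\ (>)\ \frac{1}{r}e^{rf(u)}\left[1+\frac{r}{p}\nabla f(u)\left(e^{p\eta(x,u)}-\mathbf{1}\right)\right]\quad (p\neq 0,\ r\neq 0),$$

$$\frac{1}{r}e^{rf(x)}\ \ge\ (>)\ \frac{1}{r}e^{rf(u)}\left[1+r\nabla f(u)\eta(x,u)\right]\quad (p=0,\ r\neq 0),$$

$$f(x)-f(u)\ \ge\ (>)\ \frac{1}{p}\nabla f(u)\left(e^{p\eta(x,u)}-\mathbf{1}\right)\quad (p\neq 0,\ r=0),$$

$$f(x)-f(u)\ \ge\ (>)\ \nabla f(u)\eta(x,u)\quad (p=0,\ r=0)$$

hold, where the strict inequalities (>) are required for x ≠ u in the strict case.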
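The four cases of Definition 1 can be tested numerically on a finite grid. The following Python sketch is restricted to the scalar case n = 1. The helper names `pr_invex_holds` and `pr_invex_on_grid`, the grid, and the test functions are illustrative assumptions. A finite check of this kind can only refute (p, r)-invexity; it cannot prove it on all of X.

```python
import math

def pr_invex_holds(f, df, eta, x, u, p, r, rtol=1e-9):
    """Check the Definition 1 inequality at one pair (x, u), scalar case n = 1.

    f is the function, df its derivative and eta the kernel eta(x, u); the branch
    (p, r zero or nonzero) is selected exactly as in Definition 1.
    """
    g, e = df(u), eta(x, u)
    if r != 0:
        lhs = math.exp(r * f(x)) / r
        inner = (r / p) * g * (math.exp(p * e) - 1) if p != 0 else r * g * e
        rhs = math.exp(r * f(u)) / r * (1 + inner)
    else:
        lhs = f(x) - f(u)
        rhs = g * (math.exp(p * e) - 1) / p if p != 0 else g * e
    # small relative tolerance so that exact equality survives rounding
    return lhs >= rhs - rtol * max(1.0, abs(rhs))

def pr_invex_on_grid(f, df, eta, points, p, r):
    """True if the inequality holds for every pair (x, u) taken from `points`."""
    return all(pr_invex_holds(f, df, eta, x, u, p, r) for x in points for u in points)

grid = [i / 10 for i in range(-20, 21)]
eta = lambda x, u: x - u
print(pr_invex_on_grid(lambda x: x * x, lambda x: 2 * x, eta, grid, 0, 0))    # True: x^2 is convex
print(pr_invex_on_grid(lambda x: -x * x, lambda x: -2 * x, eta, grid, 0, 0))  # False: -x^2 is not
```

With p = r = 0 and η(x, u) = x − u, the inequality reduces to the usual gradient inequality for convexity. This is why the convex quadratic passes and its negative fails.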

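Definition 2 [17]. The differentiable function f : X → ℝ (where $X\subseteq\mathbb{R}^n$) is said to be (strictly) B-(p, r)-invex with respect to η and b at u ∈ X on X if there exist a function $\eta: X\times X\to\mathbb{R}^n$ and a function $b: X\times X\to\mathbb{R}_+$ such that, for all x ∈ X, the following inequalities

$$\frac{1}{r}b(x,u)\left(e^{r(f(x)-f(u))}-1\right)\ \ge\ (>)\ \frac{1}{p}\nabla f(u)\left(e^{p\eta(x,u)}-\mathbf{1}\right)\quad (p\neq 0,\ r\neq 0),$$

$$\frac{1}{r}b(x,u)\left(e^{r(f(x)-f(u))}-1\right)\ \ge\ (>)\ \nabla f(u)\eta(x,u)\quad (p=0,\ r\neq 0),$$

$$b(x,u)\left(f(x)-f(u)\right)\ \ge\ (>)\ \frac{1}{p}\nabla f(u)\left(e^{p\eta(x,u)}-\mathbf{1}\right)\quad (p\neq 0,\ r=0),$$

$$b(x,u)\left(f(x)-f(u)\right)\ \ge\ (>)\ \nabla f(u)\eta(x,u)\quad (p=0,\ r=0)$$

hold, with strict inequalities (>) for x ≠ u in the strict case. f is said to be (strictly) B-(p, r)-invex with respect to η and b on X if it is (strictly) B-(p, r)-invex with respect to the same η and b at each u ∈ X on X.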

Remark 1 [17]. It should be pointed out that the exponentials appearing on the right-hand sides of the inequalities above are understood to be taken componentwise, and $\mathbf{1}=(1,1,\ldots,1)\in\mathbb{R}^n$.
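Definition 2 can be tested numerically in the same way for vector arguments, using the componentwise exponential described in Remark 1. The following Python sketch makes several illustrative assumptions: the helper names, the quadratic test function, the grid in ℝ², the choice η(x, u) = x − u, and the constant weight b ≡ 1. As before, a check at finitely many points can only refute the property.

```python
import math

def b_pr_invex_holds(f, grad, eta, b, x, u, p, r, rtol=1e-9):
    """Check the Definition 2 inequality at one pair (x, u) of points in R^n.

    grad(u) and eta(x, u) return length-n sequences; as in Remark 1, exp(p * eta)
    is taken componentwise and the vector of ones is subtracted. b(x, u) >= 0.
    """
    g, e = grad(u), eta(x, u)
    if p != 0:
        rhs = sum(gi * (math.exp(p * ei) - 1.0) for gi, ei in zip(g, e)) / p
    else:
        rhs = sum(gi * ei for gi, ei in zip(g, e))
    diff = f(x) - f(u)
    lhs = b(x, u) * (math.exp(r * diff) - 1.0) / r if r != 0 else b(x, u) * diff
    return lhs >= rhs - rtol * max(1.0, abs(rhs))

def b_pr_invex_on_points(f, grad, eta, b, points, p, r):
    """True if the inequality holds for every pair (x, u) taken from `points`."""
    return all(b_pr_invex_holds(f, grad, eta, b, x, u, p, r)
               for x in points for u in points)

# Illustrative data (assumptions): a convex quadratic on a 9 x 9 grid in R^2,
# eta(x, u) = x - u and the constant weight b(x, u) = 1.
pts = [(i / 4, j / 4) for i in range(-4, 5) for j in range(-4, 5)]
f = lambda x: x[0] ** 2 + x[1] ** 2
grad = lambda u: (2 * u[0], 2 * u[1])
eta = lambda x, u: (x[0] - u[0], x[1] - u[1])
one = lambda x, u: 1.0
print(b_pr_invex_on_points(f, grad, eta, one, pts, 0, 1))  # True
```

For p = 0 and r = 1, the check passes because e^Δ − 1 ≥ Δ and, by convexity, Δ = f(x) − f(u) ≥ ∇f(u)(x − u).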

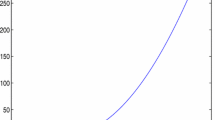

Example 1. Let X = [8.75, 9.15] ⊂ R. Consider the function f : X → R defined by

Let η : X × X → R be given by

To prove that f is (-1, 1)-invex, we have to show that

Now, consider

as can be seen from Figure 1.

Hence, f is (-1, 1)-invex.

Further, for x = 8.8 and u = 9.1, we have

Thus f is not a convex function on X.

Example 2. Let X = [0.25, 0.45] ⊂ R. Consider the function f : X → R defined by

Let η : X × X → R and b : X × X → R+ be given by

and

respectively.

The function f defined above is B-(1, 1)-invex as

as can be seen from Figure 2.

However, it is not (p, r)-invex for any $p,r\in(-10^{17},10^{17})$, as

(for x = 0.4 and u = 0.42)

< 0 as can be seen from Figure 3.

Hence f is B-(1, 1)-invex but not (p, r)-invex.

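In this article, we consider the following nondifferentiable minimax fractional programming problem:

$$\text{(FP)}\qquad \min_{x}\ \sup_{y\in Y}\ \frac{l(x,y)+(x^{T}Dx)^{1/2}}{m(x,y)-(x^{T}Ex)^{1/2}}\quad\text{subject to}\quad g(x)\le 0,$$

where $Y$ is a compact subset of $\mathbb{R}^m$, $l(\cdot,\cdot):\mathbb{R}^n\times\mathbb{R}^m\to\mathbb{R}$ and $m(\cdot,\cdot):\mathbb{R}^n\times\mathbb{R}^m\to\mathbb{R}$ are $C^1$ functions on $\mathbb{R}^n\times\mathbb{R}^m$, and $g(\cdot):\mathbb{R}^n\to\mathbb{R}^p$ is a $C^1$ function on $\mathbb{R}^n$. D and E are n × n positive semidefinite matrices.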

Let S = {x ∈ X : g(x) ≤ 0} denote the set of all feasible solutions of (FP).
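For intuition about (FP), a brute-force approximation can be computed when n = 1 and Y is replaced by a finite grid. The Python sketch below does this for a hypothetical instance chosen only for illustration:

- l(x, y) = (x − y)²
- m(x, y) = 2 + y
- D = [1], E = [0.25]
- g(x) = (x − 1, −x), so that S = [0, 1]
- Y = [0, 1]

This is not a solution method from this article, and grid search only approximates the min-sup value.

```python
import math

def phi(l, m, D, E, x, y):
    """phi(x, y) = (l(x, y) + (x^T D x)^{1/2}) / (m(x, y) - (x^T E x)^{1/2})."""
    n = len(x)
    xDx = sum(x[i] * D[i][j] * x[j] for i in range(n) for j in range(n))
    xEx = sum(x[i] * E[i][j] * x[j] for i in range(n) for j in range(n))
    den = m(x, y) - math.sqrt(max(xEx, 0.0))   # clip rounding below zero
    if den <= 0:
        raise ValueError("the denominator must be positive on S x Y")
    return (l(x, y) + math.sqrt(max(xDx, 0.0))) / den

def solve_fp_on_grid(l, m, g, D, E, X_grid, Y_grid):
    """Brute-force (FP): minimise max_{y in Y_grid} phi(x, y) over feasible x in X_grid."""
    best_x, best_val = None, math.inf
    for x in X_grid:
        if any(gh > 0 for gh in g(x)):   # infeasible: g(x) <= 0 fails
            continue
        val = max(phi(l, m, D, E, x, y) for y in Y_grid)
        if val < best_val:
            best_x, best_val = x, val
    return best_x, best_val

# Hypothetical instance with n = 1; the denominator is >= 1.5 on S x Y.
l = lambda x, y: (x[0] - y) ** 2
m = lambda x, y: 2.0 + y
g = lambda x: [x[0] - 1.0, -x[0]]               # S = [0, 1]
D, E = [[1.0]], [[0.25]]
X_grid = [[i / 100] for i in range(-50, 151)]  # includes infeasible points
Y_grid = [j / 20 for j in range(21)]           # finite stand-in for Y = [0, 1]
x_best, v_best = solve_fp_on_grid(l, m, g, D, E, X_grid, Y_grid)
print(x_best, v_best)
```

Infeasible grid points are skipped before φ is evaluated. This mirrors the restriction of the minimisation in (FP) to the feasible set S.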

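Any point x ∈ S is called a feasible point of (FP). For each $(x,y)\in\mathbb{R}^n\times\mathbb{R}^m$, we define

$$\phi(x,y)=\frac{l(x,y)+(x^{T}Dx)^{1/2}}{m(x,y)-(x^{T}Ex)^{1/2}},$$

such that for each $(x,y)\in S\times Y$,

$$l(x,y)+(x^{T}Dx)^{1/2}\ge 0\quad\text{and}\quad m(x,y)-(x^{T}Ex)^{1/2}>0.$$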

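For each x ∈ S, we define

$$H(x)=\{h\in H:\ g_h(x)=0\},$$

where $H=\{1,2,\ldots,p\}$,

$$Y(x)=\left\{y\in Y:\ \phi(x,y)=\sup_{z\in Y}\phi(x,z)\right\},$$

and

$$K(x)=\left\{(s,t,\bar y)\in\mathbb{N}\times\mathbb{R}^s_+\times\mathbb{R}^{ms}:\ 1\le s\le n+1,\ t=(t_1,\ldots,t_s)\in\mathbb{R}^s_+\ \text{with}\ \sum_{i=1}^{s}t_i=1,\ \bar y=(\bar y_1,\ldots,\bar y_s)\ \text{with}\ \bar y_i\in Y(x),\ i=1,\ldots,s\right\}.$$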

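Since l and m are continuously differentiable and Y is compact in $\mathbb{R}^m$, it follows that for each x* ∈ S, Y(x*) ≠ ∅, and for any $\bar y_i\in Y(x^*)$, we have a positive constant

$$k_o=\phi(x^*,\bar y_i)=\frac{l(x^*,\bar y_i)+(x^{*T}Dx^*)^{1/2}}{m(x^*,\bar y_i)-(x^{*T}Ex^*)^{1/2}}.$$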
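When Y is replaced by a finite grid, the set Y(x*) and the constant k_o can be approximated directly from their definitions. The Python sketch below uses a hypothetical helper name. Its first example is the illustrative choice φ(x*, y) = ((0.35 − y)² + 0.35)/(1.825 + y) on Y = [0, 1]. Its second example shows that Y(x*) may contain several points, which is why K(x) allows s > 1.

```python
def maximizers_and_k0(phi_at_x, Y_grid, tol=1e-12):
    """Approximate Y(x*) and k_o by evaluating y -> phi(x*, y) on a finite grid.

    Returns (Y_star, k0): k0 is the largest value on the grid and Y_star lists every
    grid point attaining it up to `tol`, so phi(x*, y) = k0 (approximately) on Y_star.
    """
    values = [phi_at_x(y) for y in Y_grid]
    k0 = max(values)
    Y_star = [y for y, v in zip(Y_grid, values) if v >= k0 - tol]
    return Y_star, k0

Y_grid = [j / 20 for j in range(21)]   # finite stand-in for the compact set Y = [0, 1]
# Hypothetical phi(x*, y) at x* = 0.35
Y_star, k0 = maximizers_and_k0(lambda y: ((0.35 - y) ** 2 + 0.35) / (1.825 + y), Y_grid)
print(Y_star)   # [1.0]
# A tie: y -> (y - 0.5)^2 is maximal at both endpoints, so Y(x*) has two points here.
print(maximizers_and_k0(lambda y: (y - 0.5) ** 2, Y_grid)[0])   # [0.0, 1.0]
```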

2.1 Generalized Schwarz inequality

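Let A be a positive semidefinite matrix of order n. Then, for all $x,w\in\mathbb{R}^n$,

$$x^{T}Aw\le (x^{T}Ax)^{1/2}(w^{T}Aw)^{1/2}. \tag{1}$$

Equality holds if, for some λ ≥ 0,

$$Ax=\lambda Aw.$$

Evidently, if $w^{T}Aw\le 1$, we have

$$x^{T}Aw\le (x^{T}Ax)^{1/2}.$$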
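The generalized Schwarz inequality can also be checked numerically. The Python sketch below draws random positive semidefinite matrices A = BᵀB. These are deliberately rank-deficient, since D and E are assumed only to be semidefinite. The sketch then confirms that the gap (xᵀAx)^{1/2}(wᵀAw)^{1/2} − xᵀAw is nonnegative up to rounding. The helper names, dimensions, and random seed are arbitrary choices for illustration.

```python
import math
import random

def bilinear(A, x, w):
    """x^T A w for a square matrix A stored as a list of rows."""
    n = len(x)
    return sum(x[i] * A[i][j] * w[j] for i in range(n) for j in range(n))

def random_psd(n, k, rng):
    """Return A = B^T B for a random k x n matrix B; A is PSD with rank at most k."""
    B = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(k)]
    return [[sum(B[t][i] * B[t][j] for t in range(k)) for j in range(n)]
            for i in range(n)]

def schwarz_gap(A, x, w):
    """(x^T A x)^{1/2} (w^T A w)^{1/2} - x^T A w, which the inequality says is >= 0."""
    xx = max(bilinear(A, x, x), 0.0)   # clip tiny negative rounding errors
    ww = max(bilinear(A, w, w), 0.0)
    return math.sqrt(xx) * math.sqrt(ww) - bilinear(A, x, w)

rng = random.Random(0)
gaps = []
for _ in range(200):
    A = random_psd(4, 2, rng)          # singular PSD, as D and E may be
    x = [rng.uniform(-1.0, 1.0) for _ in range(4)]
    w = [rng.uniform(-1.0, 1.0) for _ in range(4)]
    gaps.append(schwarz_gap(A, x, w))
print(min(gaps) >= -1e-9)   # True (up to rounding)
```

Taking x = λw with λ ≥ 0 gives Ax = λAw, and the gap then vanishes up to rounding. This is the equality case stated above.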

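If the functions l, g, and m in problem (FP) are continuously differentiable with respect to $x\in\mathbb{R}^n$, then Lai et al. [10] derived the following necessary conditions for optimality of (FP).

Theorem 1 (Necessary conditions). If x* is a solution of (FP) satisfying $x^{*T}Dx^*>0$, $x^{*T}Ex^*>0$, and $\nabla g_h(x^*)$, $h\in H(x^*)$, are linearly independent, then there exist $(s,t^*,\bar y)\in K(x^*)$, $k_o\in\mathbb{R}_+$, $w,v\in\mathbb{R}^n$ and $\mu^*\in\mathbb{R}^p_+$ such that

$$\sum_{i=1}^{s}t_i^*\left\{\nabla l(x^*,\bar y_i)+Dw-k_o\left(\nabla m(x^*,\bar y_i)-Ev\right)\right\}+\nabla\sum_{h=1}^{p}\mu_h^*g_h(x^*)=0, \tag{2}$$

$$l(x^*,\bar y_i)+(x^{*T}Dx^*)^{1/2}-k_o\left(m(x^*,\bar y_i)-(x^{*T}Ex^*)^{1/2}\right)=0,\quad i=1,2,\ldots,s, \tag{3}$$

$$\sum_{h=1}^{p}\mu_h^*g_h(x^*)=0, \tag{4}$$

$$t_i^*\ge 0,\quad \sum_{i=1}^{s}t_i^*=1, \tag{5}$$

$$w^{T}Dw\le 1,\quad v^{T}Ev\le 1,\quad (x^{*T}Dx^*)^{1/2}=x^{*T}Dw,\quad (x^{*T}Ex^*)^{1/2}=x^{*T}Ev. \tag{6}$$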
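The following Python sketch illustrates the structure of the stationarity condition in Theorem 1. It evaluates the left-hand side

Σᵢ tᵢ{∇l(x*, ȳᵢ) + Dw − k_o(∇m(x*, ȳᵢ) − Ev)} + Σₕ μₕ∇gₕ(x*)

from gradients supplied by the caller. A zero vector, up to rounding, means the condition is satisfied. The function name, the data layout, and the one-dimensional numbers are assumptions made for illustration. Computing the gradients themselves is left to the caller.

```python
def stationarity_residual(t, grad_l, grad_m, Dw, Ev, k0, mu, grad_g):
    """Left-hand side of the stationarity condition of Theorem 1 as a list of n floats.

    t[i], grad_l[i], grad_m[i]: weight t_i and the x-gradients of l and m at (x*, y_i);
    Dw, Ev: the vectors D w and E v; k0: the constant k_o;
    mu[h], grad_g[h]: multiplier mu_h and the gradient of g_h at x*.
    """
    n = len(Dw)
    res = [0.0] * n
    for ti, gl, gm in zip(t, grad_l, grad_m):
        for j in range(n):
            res[j] += ti * (gl[j] + Dw[j] - k0 * (gm[j] - Ev[j]))
    for mh, gg in zip(mu, grad_g):
        for j in range(n):
            res[j] += mh * gg[j]
    return res

# Hypothetical one-dimensional data: t = (1), grad l = 2, grad m = 1, Dw = 0.5, Ev = 0,
# k_o = 1, and one constraint with grad g = -1 and multiplier 1.5.
residual = stationarity_residual([1.0], [[2.0]], [[1.0]], [0.5], [0.0], 1.0, [1.5], [[-1.0]])
print(residual)  # [0.0]
```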

Remark 2. All the theorems in this article will be proved only in the case p ≠ 0, r ≠ 0. The proofs in the other cases are easier, as can be seen from the form of the inequalities in Definition 2. Moreover, without loss of generality, we shall assume that r > 0.
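The proofs below repeatedly use one elementary fact. For b > 0 and any r ≠ 0, the factor (1/r)b(e^{rΔ} − 1) on the left-hand side of Definition 2 has the same sign as Δ, and it equals bΔ when r = 0. The short Python check below illustrates this numerically. The helper names are hypothetical, and a finite check is not a proof.

```python
import math

def b_r_term(b, delta, r):
    """The factor b*(e^{r*delta} - 1)/r from Definition 2 (b*delta when r == 0)."""
    if r == 0:
        return b * delta
    return b * math.expm1(r * delta) / r   # expm1 keeps accuracy for small r*delta

def sign(v):
    return (v > 0) - (v < 0)

# For b > 0 the factor has the sign of delta, whatever the sign of r.
checks = [sign(b_r_term(1.0, d, r)) == sign(d)
          for r in (-2.0, -0.5, 0.0, 0.5, 2.0)
          for d in (-1.0, -0.1, 0.0, 0.1, 1.0)]
print(all(checks))  # True
```

The factor is also increasing in Δ for b > 0. This is why a strict inequality between function values carries over to a strict inequality in Definition 2.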

3 Sufficient conditions

Under smoothness assumptions, such as differentiability together with convexity or generalized convexity, optimality conditions for these problems have been studied in the past few years. The intrinsic presence of nonsmoothness (the necessity to deal with nondifferentiable functions, sets with nonsmooth boundaries, and set-valued mappings) is one of the most characteristic features of modern variational analysis (see [18, 19]). Recently, nonsmooth optimization problems have been studied by several authors [20–23]. Optimality conditions for approximate solutions in multiobjective optimization problems have been studied by Gao et al. [24], and for the nondifferentiable multiobjective case by Kim et al. [25]. We now prove a sufficient condition for optimality of (FP) under B-(p, r)-invexity assumptions.

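Theorem 2 (Sufficient condition). Let x* be a feasible solution of (FP), and suppose there exist a positive integer s, 1 ≤ s ≤ n + 1, $t^*\in\mathbb{R}^s_+$, $\bar y_i\in Y(x^*)$ (i = 1, 2, ..., s), $k_o\in\mathbb{R}_+$, $w,v\in\mathbb{R}^n$ and $\mu^*\in\mathbb{R}^p_+$ satisfying the relations (2)–(6). Assume that

(i) $\sum_{i=1}^{s}t_i^*\{l(\cdot,\bar y_i)+(\cdot)^{T}Dw-k_o(m(\cdot,\bar y_i)-(\cdot)^{T}Ev)\}$ is B-(p, r)-invex at x* on S with respect to η and b satisfying b(x, x*) > 0 for all x ∈ S,

(ii) $\sum_{h=1}^{p}\mu_h^*g_h(\cdot)$ is $B_g$-(p, r)-invex at x* on S with respect to the same function η and with respect to a function $b_g$, not necessarily equal to b.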

Then x* is an optimal solution of (FP).

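Proof. Suppose to the contrary that x* is not an optimal solution of (FP). Then there exists an $\bar x\in S$ such that

$$\sup_{y\in Y}\phi(\bar x,y)<\sup_{y\in Y}\phi(x^*,y).$$

We note that

$$\sup_{y\in Y}\phi(x^*,y)=\phi(x^*,\bar y_i)=k_o$$

for $\bar y_i\in Y(x^*)$, i = 1, 2, ..., s, and

$$\phi(\bar x,\bar y_i)\le\sup_{y\in Y}\phi(\bar x,y).$$

Thus, we have

$$\phi(\bar x,\bar y_i)<k_o,\quad i=1,2,\ldots,s.$$

Since $m(\bar x,\bar y_i)-(\bar x^{T}E\bar x)^{1/2}>0$, it follows that

$$l(\bar x,\bar y_i)+(\bar x^{T}D\bar x)^{1/2}-k_o\left(m(\bar x,\bar y_i)-(\bar x^{T}E\bar x)^{1/2}\right)<0,\quad i=1,2,\ldots,s. \tag{7}$$

From (1), (3), (5), (6) and (7), we obtain

$$\sum_{i=1}^{s}t_i^*\left\{l(\bar x,\bar y_i)+\bar x^{T}Dw-k_o\left(m(\bar x,\bar y_i)-\bar x^{T}Ev\right)\right\}<0=\sum_{i=1}^{s}t_i^*\left\{l(x^*,\bar y_i)+x^{*T}Dw-k_o\left(m(x^*,\bar y_i)-x^{*T}Ev\right)\right\}.$$

Writing $\Phi(\cdot)=\sum_{i=1}^{s}t_i^*\{l(\cdot,\bar y_i)+(\cdot)^{T}Dw-k_o(m(\cdot,\bar y_i)-(\cdot)^{T}Ev)\}$, it follows that

$$\Phi(\bar x)-\Phi(x^*)<0. \tag{8}$$

As Φ is B-(p, r)-invex at x* on S with respect to η and b, we have

$$\frac{1}{r}b(x,x^*)\left(e^{r(\Phi(x)-\Phi(x^*))}-1\right)\ge\frac{1}{p}\nabla\Phi(x^*)\left(e^{p\eta(x,x^*)}-\mathbf{1}\right)$$

for all x ∈ S, and so for $x=\bar x$. Using (8) and $b(\bar x,x^*)>0$ (recall r > 0) together with the inequality above, we get

$$\frac{1}{p}\sum_{i=1}^{s}t_i^*\left\{\nabla l(x^*,\bar y_i)+Dw-k_o\left(\nabla m(x^*,\bar y_i)-Ev\right)\right\}\left(e^{p\eta(\bar x,x^*)}-\mathbf{1}\right)<0. \tag{9}$$

From the feasibility of $\bar x$ together with $\mu_h^*\ge 0$, h ∈ H, we have

$$\sum_{h=1}^{p}\mu_h^*g_h(\bar x)\le 0. \tag{10}$$

By $B_g$-(p, r)-invexity of $\sum_{h=1}^{p}\mu_h^*g_h(\cdot)$ at x* on S with respect to the same function η and with respect to the function $b_g$, we have

$$\frac{1}{r}b_g(x,x^*)\left(e^{r\left(\sum_{h=1}^{p}\mu_h^*g_h(x)-\sum_{h=1}^{p}\mu_h^*g_h(x^*)\right)}-1\right)\ge\frac{1}{p}\sum_{h=1}^{p}\mu_h^*\nabla g_h(x^*)\left(e^{p\eta(x,x^*)}-\mathbf{1}\right).$$

Since $b_g(x,x^*)\ge 0$ for all x ∈ S, by (4) and (10) we obtain

$$\frac{1}{p}\sum_{h=1}^{p}\mu_h^*\nabla g_h(x^*)\left(e^{p\eta(\bar x,x^*)}-\mathbf{1}\right)\le 0. \tag{11}$$

By adding the inequalities (9) and (11), we have

$$\frac{1}{p}\left[\sum_{i=1}^{s}t_i^*\left\{\nabla l(x^*,\bar y_i)+Dw-k_o\left(\nabla m(x^*,\bar y_i)-Ev\right)\right\}+\sum_{h=1}^{p}\mu_h^*\nabla g_h(x^*)\right]\left(e^{p\eta(\bar x,x^*)}-\mathbf{1}\right)<0,$$

which contradicts (2). Hence the result. □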

4 Duality results

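In this section, we consider the following dual to (FP):

$$\text{(FD)}\qquad \max_{(s,t,\bar y)\in K(z)}\ \sup_{(z,\mu,k,w,v)\in H_1(s,t,\bar y)} k,$$

where $H_1(s,t,\bar y)$ denotes the set of all $(z,\mu,k,w,v)\in\mathbb{R}^n\times\mathbb{R}^p_+\times\mathbb{R}_+\times\mathbb{R}^n\times\mathbb{R}^n$ satisfying

$$\sum_{i=1}^{s}t_i\left\{\nabla l(z,\bar y_i)+Dw-k\left(\nabla m(z,\bar y_i)-Ev\right)\right\}+\nabla\sum_{h=1}^{p}\mu_hg_h(z)=0, \tag{12}$$

$$\sum_{i=1}^{s}t_i\left\{l(z,\bar y_i)+z^{T}Dw-k\left(m(z,\bar y_i)-z^{T}Ev\right)\right\}\ge 0, \tag{13}$$

$$\sum_{h=1}^{p}\mu_hg_h(z)\ge 0, \tag{14}$$

$$(s,t,\bar y)\in K(z), \tag{15}$$

$$w^{T}Dw\le 1,\quad v^{T}Ev\le 1. \tag{16}$$

If, for a triplet $(s,t,\bar y)\in K(z)$, the set $H_1(s,t,\bar y)=\emptyset$, then we define the supremum over it to be −∞. For convenience, we let

$$\psi(\cdot)=\sum_{i=1}^{s}t_i\left\{l(\cdot,\bar y_i)+(\cdot)^{T}Dw-k\left(m(\cdot,\bar y_i)-(\cdot)^{T}Ev\right)\right\}.$$

Let $S_{FD}$ denote the set of all feasible solutions of problem (FD). Moreover, let $S_1$ denote

$$S_1=\left\{z\in\mathbb{R}^n:\ (z,\mu,k,w,v,s,t,\bar y)\in S_{FD}\right\}.$$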

Now we derive the following weak, strong, and strict converse duality theorems.

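Theorem 3 (Weak duality). Let x be a feasible solution of (FP) and let $(a,\mu,k,w,v,s,t,\bar y)$ be a feasible solution of (FD). Assume that

(i) $\sum_{i=1}^{s}t_i\{l(\cdot,\bar y_i)+(\cdot)^{T}Dw-k(m(\cdot,\bar y_i)-(\cdot)^{T}Ev)\}$ is B-(p, r)-invex at a on $S\cup S_1$ with respect to η and b satisfying b(x, a) > 0,

(ii) $\sum_{h=1}^{p}\mu_hg_h(\cdot)$ is $B_g$-(p, r)-invex at a on $S\cup S_1$ with respect to the same function η and with respect to a function $b_g$, not necessarily equal to b.

Then,

$$\sup_{y\in Y}\phi(x,y)\ge k. \tag{17}$$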

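Proof. Suppose to the contrary that

$$\sup_{y\in Y}\phi(x,y)<k.$$

Then, since $m(x,\bar y_i)-(x^{T}Ex)^{1/2}>0$, we have

$$l(x,\bar y_i)+(x^{T}Dx)^{1/2}-k\left(m(x,\bar y_i)-(x^{T}Ex)^{1/2}\right)<0,\quad i=1,2,\ldots,s.$$

It follows from (5) that

$$t_i\left[l(x,\bar y_i)+(x^{T}Dx)^{1/2}-k\left(m(x,\bar y_i)-(x^{T}Ex)^{1/2}\right)\right]\le 0,\quad i=1,2,\ldots,s, \tag{18}$$

with at least one strict inequality, since $t=(t_1,t_2,\ldots,t_s)\neq 0$.

From (1), (13), (16) and (18), we have

$$\sum_{i=1}^{s}t_i\left\{l(x,\bar y_i)+x^{T}Dw-k\left(m(x,\bar y_i)-x^{T}Ev\right)\right\}<0\le\sum_{i=1}^{s}t_i\left\{l(a,\bar y_i)+a^{T}Dw-k\left(m(a,\bar y_i)-a^{T}Ev\right)\right\}.$$

Hence, with $\psi(\cdot)=\sum_{i=1}^{s}t_i\{l(\cdot,\bar y_i)+(\cdot)^{T}Dw-k(m(\cdot,\bar y_i)-(\cdot)^{T}Ev)\}$,

$$\psi(x)-\psi(a)<0. \tag{19}$$

Since ψ is B-(p, r)-invex at a on $S\cup S_1$ with respect to η and b, we have

$$\frac{1}{r}b(x,a)\left(e^{r(\psi(x)-\psi(a))}-1\right)\ge\frac{1}{p}\nabla\psi(a)\left(e^{p\eta(x,a)}-\mathbf{1}\right).$$

From (19) and b(x, a) > 0 together with the inequality above, we get

$$\frac{1}{p}\sum_{i=1}^{s}t_i\left\{\nabla l(a,\bar y_i)+Dw-k\left(\nabla m(a,\bar y_i)-Ev\right)\right\}\left(e^{p\eta(x,a)}-\mathbf{1}\right)<0. \tag{20}$$

Using the feasibility of x together with $\mu_h\ge 0$, h ∈ H, we obtain

$$\sum_{h=1}^{p}\mu_hg_h(x)\le 0. \tag{21}$$

From hypothesis (ii), we have

$$\frac{1}{r}b_g(x,a)\left(e^{r\left(\sum_{h=1}^{p}\mu_hg_h(x)-\sum_{h=1}^{p}\mu_hg_h(a)\right)}-1\right)\ge\frac{1}{p}\sum_{h=1}^{p}\mu_h\nabla g_h(a)\left(e^{p\eta(x,a)}-\mathbf{1}\right).$$

As $b_g(x,a)\ge 0$, by (14) and (21) we obtain

$$\frac{1}{p}\sum_{h=1}^{p}\mu_h\nabla g_h(a)\left(e^{p\eta(x,a)}-\mathbf{1}\right)\le 0. \tag{22}$$

Thus, by (20) and (22), we obtain the inequality

$$\frac{1}{p}\left[\sum_{i=1}^{s}t_i\left\{\nabla l(a,\bar y_i)+Dw-k\left(\nabla m(a,\bar y_i)-Ev\right)\right\}+\sum_{h=1}^{p}\mu_h\nabla g_h(a)\right]\left(e^{p\eta(x,a)}-\mathbf{1}\right)<0,$$

which contradicts (12). Hence (17) holds. □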

Theorem 4 (Strong duality). Let x* be an optimal solution of (FP), and let $\nabla g_h(x^*)$, $h\in H(x^*)$, be linearly independent. Then there exist $(s^*,t^*,\bar y^*)\in K(x^*)$ and $(\mu^*,k^*,w^*,v^*)$ such that $(x^*,\mu^*,k^*,w^*,v^*,s^*,t^*,\bar y^*)$ is a feasible solution of (FD). Further, if the hypotheses of the weak duality theorem are satisfied for all feasible solutions of (FD), then $(x^*,\mu^*,k^*,w^*,v^*,s^*,t^*,\bar y^*)$ is an optimal solution of (FD), and the two problems have the same optimal values.

Proof. If x* is an optimal solution of (FP) and $\nabla g_h(x^*)$, $h\in H(x^*)$, are linearly independent, then by Theorem 1 there exist $(s^*,t^*,\bar y^*)\in K(x^*)$ and $(\mu^*,k^*,w^*,v^*)$ such that $(x^*,\mu^*,k^*,w^*,v^*,s^*,t^*,\bar y^*)$ is feasible for (FD), and problems (FP) and (FD) have the same objective values, that is,

$$\sup_{y\in Y}\phi(x^*,y)=k^*.$$

The optimality of this feasible solution for (FD) thus follows from Theorem 3. □

Theorem 5 (Strict converse duality). Let x* and $(a,\mu,k,w,v,s,t,\bar y)$ be optimal solutions of (FP) and (FD), respectively, and let $\nabla g_h(x^*)$, $h\in H(x^*)$, be linearly independent. Suppose that $\sum_{i=1}^{s}t_i\{l(\cdot,\bar y_i)+(\cdot)^{T}Dw-k(m(\cdot,\bar y_i)-(\cdot)^{T}Ev)\}$ is strictly B-(p, r)-invex at a on $S\cup S_1$ with respect to η and b satisfying b(x, a) > 0 for all x ∈ S. Furthermore, assume that $\sum_{h=1}^{p}\mu_hg_h(\cdot)$ is $B_g$-(p, r)-invex at a on $S\cup S_1$ with respect to the same function η and with respect to a function $b_g$, not necessarily equal to b. Then $a=x^*$, that is, a is an optimal point in (FP) and $\sup_{y\in Y}\phi(a,y)=k$.

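Proof. We shall assume that $a\neq x^*$ and reach a contradiction. From the strong duality theorem (Theorem 4), it follows that

$$\sup_{y\in Y}\phi(x^*,y)=k. \tag{23}$$

By feasibility of x* together with $\mu_h\ge 0$, h ∈ H, we obtain

$$\sum_{h=1}^{p}\mu_hg_h(x^*)\le 0. \tag{24}$$

By assumption, $\sum_{h=1}^{p}\mu_hg_h(\cdot)$ is $B_g$-(p, r)-invex at a on $S\cup S_1$ with respect to η and $b_g$. Then, by Definition 2, there exists a function $b_g$ such that $b_g(x,a)\ge 0$ for all x ∈ S and $a\in S_1$. Hence, by (14) and (24),

$$\frac{1}{r}b_g(x^*,a)\left(e^{r\left(\sum_{h=1}^{p}\mu_hg_h(x^*)-\sum_{h=1}^{p}\mu_hg_h(a)\right)}-1\right)\le 0.$$

Then, from Definition 2, we get

$$\frac{1}{p}\sum_{h=1}^{p}\mu_h\nabla g_h(a)\left(e^{p\eta(x^*,a)}-\mathbf{1}\right)\le 0. \tag{25}$$

Therefore, by (12) and (25), we obtain the inequality

$$\frac{1}{p}\sum_{i=1}^{s}t_i\left\{\nabla l(a,\bar y_i)+Dw-k\left(\nabla m(a,\bar y_i)-Ev\right)\right\}\left(e^{p\eta(x^*,a)}-\mathbf{1}\right)\ge 0.$$

Write $\psi(\cdot)=\sum_{i=1}^{s}t_i\{l(\cdot,\bar y_i)+(\cdot)^{T}Dw-k(m(\cdot,\bar y_i)-(\cdot)^{T}Ev)\}$. Since ψ is strictly B-(p, r)-invex with respect to η and b at a on $S\cup S_1$ and $x^*\neq a$, the definition of strict B-(p, r)-invexity and the above inequality give

$$\frac{1}{r}b(x^*,a)\left(e^{r(\psi(x^*)-\psi(a))}-1\right)>0.$$

From the hypothesis $b(x^*,a)>0$ and the above inequality, we get $\psi(x^*)>\psi(a)$, that is,

$$\sum_{i=1}^{s}t_i\left\{l(x^*,\bar y_i)+x^{*T}Dw-k\left(m(x^*,\bar y_i)-x^{*T}Ev\right)\right\}>\sum_{i=1}^{s}t_i\left\{l(a,\bar y_i)+a^{T}Dw-k\left(m(a,\bar y_i)-a^{T}Ev\right)\right\}.$$

Therefore, by (13),

$$\sum_{i=1}^{s}t_i\left\{l(x^*,\bar y_i)+x^{*T}Dw-k\left(m(x^*,\bar y_i)-x^{*T}Ev\right)\right\}>0.$$

Since $t_i\ge 0$, i = 1, 2, ..., s, there exists $i^*$ such that

$$l(x^*,\bar y_{i^*})+x^{*T}Dw-k\left(m(x^*,\bar y_{i^*})-x^{*T}Ev\right)>0.$$

Hence, using (1) and (16), we obtain the following inequality

$$\sup_{y\in Y}\phi(x^*,y)\ge\phi(x^*,\bar y_{i^*})=\frac{l(x^*,\bar y_{i^*})+(x^{*T}Dx^*)^{1/2}}{m(x^*,\bar y_{i^*})-(x^{*T}Ex^*)^{1/2}}>k,$$

which contradicts (23). Hence the result. □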

5 Concluding remarks

It is not clear whether duality in nondifferentiable minimax fractional programming with B-(p, r)-invexity can be further extended to the second-order case.

References

Gulati TR, Ahmad I: Efficiency and duality in multiobjective fractional programming. Opsearch 1990, 32: 31–43.

Weir T: A dual for multiobjective fractional programming. J Inf Optim Sci 1986, 7: 261–269.

Chandra S, Craven BD, Mond B: Generalized fractional programming duality: a ratio game approach. J Aust Math Soc B 1986, 28: 170–180. 10.1017/S0334270000005282

Charnes A, Cooper WW: Goal programming and multiobjective optimization, Part I. Eur J Oper Res 1977, 1: 39–54. 10.1016/S0377-2217(77)81007-2

Schmitendorf WE: Necessary conditions and sufficient optimality conditions for static minimax problems. J Math Anal Appl 1977, 57: 683–693. 10.1016/0022-247X(77)90255-4

Tanimoto S: Duality for a class of nondifferentiable mathematical programming problems. J Math Anal Appl 1981, 79: 283–294.

Bector CR, Bhatia BL: Sufficient optimality and duality for a minimax problems. Utilitas Mathematica 1985, 27: 229–247.

Yadav SR, Mukherjee RN: Duality for fractional minimax programming problems. J Aust Math Soc B 1990, 31: 484–492. 10.1017/S0334270000006809

Chandra S, Kumar V: Duality in fractional minimax programming. J Aust Math Soc A 1995, 58: 376–386. 10.1017/S1446788700038362

Lai HC, Liu JC, Tanaka K: Necessary and sufficient conditions for minimax fractional programming. J Math Anal Appl 1999, 230: 311–328. 10.1006/jmaa.1998.6204

Lai HC, Lee JC: On duality theorems for a nondifferentiable minimax fractional programming. J Comput Appl Math 2002, 146: 115–126. 10.1016/S0377-0427(02)00422-3

Hanson MA: On sufficiency of the Kuhn-Tucker conditions. J Math Anal Appl 1981, 80: 545–550. 10.1016/0022-247X(81)90123-2

Craven BD: Invex functions and constrained local minima. Bull Aust Math Soc 1981, 24: 357–366. 10.1017/S0004972700004895

Aghezzaf B, Hachimi M: Generalized invexity and duality in multiobjective programming problems. J Global Optim 2000, 18: 91–101. 10.1023/A:1008321026317

Soleimani-damaneh M: Generalized invexity in separable Hilbert spaces. Topology 2009, 48: 66–79. 10.1016/j.top.2009.11.004

Soleimani-damaneh M: Infinite (semi-infinite) problems to characterize the optimality of nonlinear optimization problems. Eur J Oper Res 2008, 188: 49–56. 10.1016/j.ejor.2007.04.026

Antczak T: Generalized fractional minimax programming with B-(p, r)-invexity. Comput Math Appl 2008, 56: 1505–1525. 10.1016/j.camwa.2008.02.039

Mordukhovich BS: Variational Analysis and Generalized Differentiation, I: Basic Theory. Volume 330. Springer, Grundlehren Series (Fundamental Principles of Mathematical Sciences); 2006.

Mordukhovich BS: Variational Analysis and Generalized Differentiation, II: Applications. Volume 331. Springer, Grundlehren Series (Fundamental Principles of Mathematical Sciences); 2006.

Agarwal RP, Ahmad I, Husain Z, Jayswal A: Optimality and duality in nonsmooth multiobjective optimization involving V-type I invex functions. J Inequal Appl 2010, 2010: Article ID 898626. 14

Kim DS, Lee HJ: Optimality conditions and duality in nonsmooth multiobjective programs. J Inequal Appl 2010, 2010: Article ID 939537. 12

Soleimani-damaneh M: Nonsmooth optimization using Mordukhovich's subdifferential. SIAM J Control Optim 2010, 48: 3403–3432. 10.1137/070710664

Soleimani-damaneh M, Nieto JJ: Nonsmooth multiple-objective optimization in separable Hilbert spaces. Nonlinear Anal 2009, 71: 4553–4558. 10.1016/j.na.2009.03.013

Gao Y, Yang X, Lee HWJ: Optimality conditions for approximate solutions in multiobjective optimization problems. J Inequal Appl 2010, 2010: Article ID 620928. 17

Kim HJ, Seo YY, Kim DS: Optimality conditions in nondifferentiable G-invex multiobjective programming. J Inequal Appl 2010, 2010: Article ID 172059. 13

Acknowledgements

Izhar Ahmad thanks the King Fahd University of Petroleum and Minerals for the support under the Fast Track Project no. FT100023. Ravi P. Agarwal gratefully acknowledges the support provided by the King Fahd University of Petroleum and Minerals to carry out this research. The authors wish to thank the referees for their several valuable suggestions which have considerably improved the presentation of this article.


6 Competing interests

The authors declare that they have no competing interests.

7 Authors' contributions

All authors contributed equally and significantly to writing this paper. All authors read and approved the final manuscript.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Ahmad, I., Gupta, S., Kailey, N. et al. Duality in nondifferentiable minimax fractional programming with B-(p, r)- invexity. J Inequal Appl 2011, 75 (2011). https://doi.org/10.1186/1029-242X-2011-75


DOI: https://doi.org/10.1186/1029-242X-2011-75