Abstract

Teaching and learning as well as administrative processes are experiencing intensive change with the rise of artificial intelligence (AI) technologies and their diverse application opportunities in higher education. Accordingly, scientific interest in the topic in general, and in specific focal points, has risen as well. However, there is no structured overview of AI in teaching and administration processes in higher education institutions that makes it possible to identify major research topics and trends, concretize peculiarities, and develop recommendations for further action. To close this gap, this study systematizes the current scientific discourse on AI in teaching and administration in higher education institutions. It identifies (1) an imbalance in research on AI in educational and administrative contexts, (2) an imbalance in disciplines and a lack of interdisciplinary research, (3) inequalities in cross-national research activities, and (4) neglected research topics and paths. The study thereby contributes a comparative analysis of AI usage in administration and in teaching and learning processes, a systematization of the state of research, an identification of research gaps, and further research paths on AI in higher education institutions.

1 Introduction

The digitization of business, societal, and educational processes, as well as global events, have influenced the dynamics, application, and development of educational technology (EdTech). For instance, the COVID pandemic created a new and urgent situation for education, forcing a shift towards EdTech [1]. Simultaneously, the amount of scientific literature on artificial intelligence (AI) in education has increased rapidly since its emergence, enhancing both theoretical understanding and practical usage. Opportunities for the application of AI are manifold, especially when integrated into EdTech. EdTech can be summarized as all measures that aim to facilitate learning and improve learning performance through the creation, use, and management of appropriate technological processes and resources [2]. Gao et al. [3], among others, distinguish EdTech into pedagogical and operational technologies. While the first category is directly included in teaching–learning processes, whereby the use of EdTech creates learning environments that place the learner at the center of the learning experience, the second category—operational technology—basically refers to administrative or operational parts of teaching–learning processes.

Today, AI can be utilized in diverse ways to deliver effective learning experiences [4]. For example, AI can guide and support a learning process (CoLearn) or be used to personalize learning journeys (e.g., via provision of content-led, personalized experiences so that students can learn at their own pace and, of equal importance, with their own intentions and goals). These advances have led to a growing awareness of the advantages of AI and a changing demand for support in education. Individualized learning can be supported by tools with AI technology that enhance the learning experience, reflection on learning, and theory development [5]. Furthermore, these tools allow for the analysis of vast data sets of instructional behavior collected from databases, containing elements of learning, affect, motivation, or social interaction [5,6,7]. Opportunities for fostering staff, student, or stakeholder participation in general can be leveraged by AI-based instruments, such as dialogue-based tutoring systems, explorative learning environments, or intelligent tutoring systems [8]. The use of AI influences the teaching and learning processes. However, administrative processes in higher education institutions (HEI) are also increasingly enriched and supported by applications in management systems, proctoring, grading, student information systems, library services, and disability support [9, 10]. AI can be used to develop adaptive, inclusive, flexible, personalized, and effective learning environments that complement traditional education and training formats. In addition, AI technology promises to provide deeper insights into learners’ learning behaviors, reaction times, and emotions [6, 8, 11].

We refer to AI in the context of higher education as “computing systems that are able to engage in human-like processes such as learning, adapting, synthesizing, self-correction, and the use of data for complex processing tasks” [12]. With this definition, we focus on both learning and administrative tasks in educational institutions, particularly in the specific context of HEI. The application of AI in education has been researched for more than 50 years, and this research is increasingly penetrating different areas of education: as the subject of learning matter, as a research field, or as an enabler for changing the way work, administration, and teaching and learning processes are conducted in HEIs. Given the diversity and topicality of the subject, a large amount of research on AI in HEI has been published during the last decade. Hinojo-Lucena et al. [13] conducted a bibliometric analysis on AI in HEI and observed that the literature on this subject is at an incipient stage and that the research on AI application in HEI has not been consolidated. Zawacki-Richter et al. [14] provided an overview of the research on AI applications in higher education through a systematic review and showed that most of the disciplines involved in AI in education research come from computer science and STEM. They further synthesized four areas of AI application in academic support services and institutional and administrative services: (1) profiling and prediction, (2) assessment and evaluation, (3) adaptive systems and personalization, and (4) intelligent tutoring systems. Finally, they noted a lack of critical reflection on the respective challenges and risks. Kebritchi et al. [15] identified issues for teaching in higher education and systematized them in the following categories: (1) issues related to online learners, (2) instructors, and (3) content development.
They concluded that HEI should provide professional development for instructors, trainings for learners, and technical support for content development. Scanlon [16] explored the development of educational technology research over the last 50 years by investigating influences and current trends. She identified personalization, social learning, learning design, machine learning (ML), and data-driven improvement as major issues in current technology-enhanced learning, which are rooted in early works of the field. However, there is no structured and systemized overview of AI in teaching and administration processes in HEI that allows for the characterization of the current discourse in research output—that is, identifying major research topics and trends, concretizing peculiarities/abnormalities, and developing recommendations for further action. The aim of this paper is to close this gap. Therefore, the following research question is stated:

How is the current scientific discourse on AI in teaching and administration in HEI characterized?

To answer this question, we investigate the scientific response to the challenge of integrating AI in EdTech in the specific context of HEI. To construct an overview, this research uses a bibliometric analysis to present a statistical analysis of the current (2011–2021) scientific research records on AI usage in HEI. The analysis focuses on research output, collaborations between researchers from different countries, the particular research topics, teaching, and administrative processes. On this basis, trends and neglected/under-investigated areas will be unveiled. The results of this study are therefore quantitative (bibliometric analysis) as well as conceptual, in that they present research avenues for the fortification of AI research and usage in EdTech in the context of HEI.

The remainder of this paper is structured as follows. The following section introduces the underlying methodology of this research. In Sect. 3, results are presented for the learning and administration spheres. In Sect. 4, a research agenda is provided on the basis of the previously presented results. Results and the research agenda are discussed and conclusions are drawn in Sect. 5.

2 Methodology

2.1 Literature identification

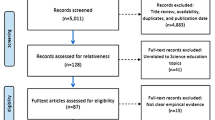

A broad search query was developed to ensure a representative coverage of the existing research fields (Table 1). The search query combines different keywords from the field of AI and ML with keywords from the field of higher education and the focal areas of teaching and administration. For the query, the database Web of Science Core Collection was used, which covers a large number of publications from different research areas and a variety of top-ranked journals and conferences relevant to the fields of AI and processes in HEI. We set a focus on the areas of Business, Computer Science, Education, Engineering, Social Science, and Psychology when querying.

A further refinement of the literature basket was conducted using different inclusion and exclusion criteria (Table 2). Thereby, the only further constraint in the query was date of publication, which was set between 01.01.2011 and 15.09.2021, since relevant content was expected to be published in this time range.

The query was conducted in September 2021 and resulted in 2333 hits for query 1 and 2289 hits for query 2. These initial hits underwent a prescreening of titles, keywords, and abstracts to assess the relevance of each hit according to the inclusion and exclusion criteria; each hit was prescreened by two researchers. If the researchers disagreed, a third researcher checked the data and added their judgement. The hit in question was then discussed until consensus was reached. Prescreening of the query 1 data set led to 1590 removals, leaving 743 hits. Prescreening of the query 2 data set led to 2236 removals, leaving 53 hits. These remaining hits were used as the data source for further analysis.
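The screening counts reported above can be checked with a few lines of arithmetic. The following is a minimal sketch: the numbers are taken from the text, while the dictionary layout and the function name `remaining_hits` are illustrative assumptions and not part of the original workflow.

```python
# Screening funnel using the counts reported in the text.
# The data layout and the function name are illustrative assumptions.
QUERIES = {
    "query 1": {"initial": 2333, "removed": 1590},
    "query 2": {"initial": 2289, "removed": 2236},
}


def remaining_hits(initial: int, removed: int) -> int:
    """Return the number of records left after prescreening."""
    if removed > initial:
        raise ValueError("cannot remove more records than were retrieved")
    return initial - removed


for name, counts in QUERIES.items():
    left = remaining_hits(counts["initial"], counts["removed"])
    share = left / counts["initial"]
    print(f"{name}: {left} of {counts['initial']} retained ({share:.1%})")
```

Running the sketch reproduces the 743 and 53 retained hits and shows how much more selective the prescreening was for query 2 than for query 1.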

2.2 Bibliometric analysis

Based on the Web of Science Analysis Results Tool and the R package “bibliometrix” [17], a bibliometric analysis was performed to screen and classify the field. This includes the analysis of scientific research records of countries and collaborations and the analysis of the most frequently used keywords in order to group topics and themes and identify trends through factorial analysis. The bibliometric analysis focuses on creating conceptual maps of keywords, synonyms, and related concepts through factorial analysis, providing collaboration network maps of countries or organizations, categorizing the keywords, synonyms, and related concepts, and then relating these groupings to countries or organizations (e.g., in a three-fields plot) [18]. The number of data sets in each section corresponds to the total number of published articles. To analyze the data sets more deeply, we used RStudio. We created a co-occurrence network of the keywords from the data sets. We did not use the keywords from the articles, as they were often too generic. Instead, we used the most frequently occurring keywords of the data sets and designed a co-occurrence network. The co-occurrence network analysis is based on Kamada and Kawai’s [19] network layout. The clustering follows the Louvain method [20]; isolated nodes are deleted, and a minimum of two edges to a node was set as the default criterion for a node to be incorporated into the network.
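To make the network construction more concrete, the following is a minimal Python sketch of how a keyword co-occurrence network can be built and pruned. It is not the bibliometrix implementation used in the study. The function names and the sample records are hypothetical, the "minimum of two edges" criterion is read here as a minimum node degree of two (one possible interpretation), and the Louvain clustering and Kamada-Kawai layout steps are deliberately left out.

```python
from collections import Counter
from itertools import combinations


def cooccurrence_edges(records):
    """Count how often each unordered keyword pair appears in the same record."""
    edges = Counter()
    for keywords in records:
        unique = sorted({k.strip().lower() for k in keywords if k.strip()})
        for a, b in combinations(unique, 2):
            edges[(a, b)] += 1
    return edges


def prune_by_degree(edges, min_degree=2):
    """Keep only edges whose two endpoints each have at least `min_degree` neighbours.

    Single pass: degrees are computed once on the unpruned network.
    Assumption: this is one reading of the "minimum of two edges" criterion.
    """
    degree = Counter()
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    keep = {node for node, d in degree.items() if d >= min_degree}
    return {pair: w for pair, w in edges.items() if pair[0] in keep and pair[1] in keep}


# Hypothetical keyword lists, one per publication (not taken from the data set).
records = [
    ["performance", "students", "engagement"],
    ["performance", "students", "online"],
    ["analytics", "big data"],
    ["performance", "engagement"],
]

edges = cooccurrence_edges(records)
network = prune_by_degree(edges, min_degree=2)
```

Keywords that never co-occur with another keyword produce no edges and are therefore dropped implicitly, which corresponds to the removal of isolated nodes. In the sample, the pair analytics and big data is removed because each of the two nodes has only one neighbour.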

3 Results

First, we present the results on AI for teaching in HEI, and afterwards, the results on AI for administration in HEI. Descriptive information regarding the development of the number of publications, involved countries, and collaborations, as well as topics, their development over time, and trends, is presented in turn.

3.1 AI for teaching in HEI

The development of the publications per year (Fig. 1) shows the growing importance of AI in the context of teaching in higher education within the research landscape. In the ten-year period studied, the number of published papers per year increased more than tenfold: in 2011, fewer than 20 papers including these terms were published in the database, while in 2021 (until September), more than 200 papers were published. The number of papers in the field increased in every year studied.

Chinese institutions are leading the research effort on the topic of AI in teaching and learning in HEI (Fig. 2). They strongly dominate the sector, followed by the USA, Spain, and Australia. Other countries with a minimum of 10 publications in this area are England, Taiwan, Canada, Scotland, Germany, Brazil, the Netherlands, Italy, Saudi Arabia, Japan, Chile, Greece, Malaysia, Russia, France, and India.

Strong collaborations between institutions from the United States and those from Australia, Canada, China, and Europe in general can be identified. Furthermore, strong collaboration between Australia and England specifically, but also Europe generally, can be seen on the country collaboration map (Fig. 3). The analysis shows that there are almost no collaborations between African countries and other countries. South American countries collaborate mainly with European countries. Researchers from institutions in New Zealand and Russia do not collaborate on this topic with scientists based abroad.

Figure 4 shows the 50 most used words from the KeyWords Plus analysis. The size of the individual words indicates the frequency of use. In addition to more general keywords, such as education or students, there is also a more specific term, performance, among the three most frequently used keywords. With regard to the word performance, we can also identify an accumulation of other typical narratives of the scientific discourse, such as impact, outcomes, self-efficacy, support, and success, among the 50 most frequent words targeting the effectiveness or promise of AI-based technologies in teaching. Semantically, a higher frequency of technical terms, such as learning analytics, model, big data, system, and algorithm, can be identified. In contrast, there are no terms from the field of pedagogy among the 50 most frequently used terms. This could indicate that the discourse is situated more in the field of computer science/informatics. Word duplications like student(s) and system(s) are not deliberate; they arise because the statistical software extracts keywords without merging singular and plural forms.
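The singular/plural duplication described above could be avoided by normalizing keywords before counting. The following is a minimal sketch under stated assumptions: the explicit `CANONICAL` mapping, the function name, and the sample terms are hypothetical, and no such step was applied in the analysis reported here. An explicit mapping is used instead of automatic suffix stripping because a naive rule would also truncate terms such as analytics.

```python
from collections import Counter

# Hypothetical mapping of variant forms to one canonical keyword.
CANONICAL = {
    "students": "student",
    "systems": "system",
}


def merged_frequencies(terms, canonical=CANONICAL):
    """Count keywords after mapping known variants to their canonical form."""
    normalized = (t.strip().lower() for t in terms)
    return Counter(canonical.get(t, t) for t in normalized)


# Hypothetical keyword occurrences, for illustration only.
terms = ["students", "student", "Systems", "system", "analytics", "success"]
counts = merged_frequencies(terms)
```

With this normalization, student and system are each counted once per occurrence regardless of grammatical number, while terms not listed in the mapping are left unchanged.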

Figure 5 shows the correlations between three fields of analysis—the KeyWords Plus analysis, the countries, and the specific affiliations of the authors. On the one hand, it can be directly visually derived that aspects such as performance, motivation, design, engagement, and model are particularly represented in the body of literature. Also, general keywords, such as students and education, can be identified. Publications from China and Australia in particular address a wide range of the keywords. These countries also host most of the affiliations that contribute to the topic investigated. Although they are slightly fewer, strong diverse keywords can also be found in the contributions from the USA, UK, and Spain. Leading by number of contributions are the University of Edinburgh, University of Iowa, and Taipei Medical University.

Considering the co-occurrences of keywords, five different clusters can be identified (Fig. 6). The largest cluster (green) of keyword co-occurrences comprises aspects such as performance, students, online, participation, engagement, higher education, and achievements. The size of a presented keyword correlates with its frequency of occurrence. The lilac cluster relates the teacher keyword to aspects such as learning analytics, technology in general, acceptance, perceptions, support, and language. Another cluster (red) centers on the aspect of analytics. Co-occurring keywords are big data, success, patterns, and networks. Another cluster of keywords (blue) comprises education, design, system, impact, and knowledge. The fifth cluster (orange) consists of co-occurring keywords such as model, framework, motivation, student, classroom, beliefs, self-efficacy, and feedback.

Figure 7 shows the development of the 10 most used keywords in the period from 2011 to 2021 for the selected publication strand. Generally, the frequency of keywords has increased significantly as the number of publications has increased, with the largest increase occurring in 2017. The words performance, model, and impact show the greatest annual growth. The keywords design and higher education have also increased, especially since 2017, although at a more moderate rate than the three aforementioned keywords. According to keyword occurrences, the focus on students has decreased since its peak in the middle of 2019. The same applies to technology, learning analytics, and online, which have decreased since the beginning of 2020. The keywords AI and machine learning, on the other hand, are not among the 10 most used keywords.

Figure 8 illustrates the trending topics in the publications according to the frequency of their use and with a chronological assignment on the axis of the years studied. We focus on the last five years, since the number of papers increased rapidly in this period. Motivation was used most frequently, with a peak in 2019. In addition to the terms university, curriculum, and classroom, which are emblematic of the context of this study, technology also appears among the trending terms. It must be noted that the general technological progress is reflected in the terms used: in 2017, the most frequently used technical term is computer; in 2018, it is networks; in 2020, many papers report on science, classroom, skills, technologies (a much broader term), and simulation as a concrete application. In 2021, many papers referenced recognition in general, which was the most frequently used term.

3.2 AI for administration in HEI

There was a steady increase in the number of publications per year with a focus on the usage of AI in HEI administration (Fig. 9). As with the teaching string, in a 10-year period, the number of publications increased tenfold. There was an especially marked increase in 2018, and from then onwards, the numbers increased significantly, although they remained at a moderate level. It should be noted, however, that from 2011 to 2017 the number of publications on AI in HEI administration processes was very low or nonexistent.

Figure 10 shows the countries with the largest output of papers on AI and HEI administration. Three countries produced about 30% of the papers; these were China, Spain, and the USA. Of the European countries, Greece, England, and France led in terms of quantitative output. South America participates relatively significantly in this publication context compared to African and other Asian countries (China excluded).

As shown in the country collaboration map (Fig. 11), there is only one collaboration, which is between Italy and Romania. There are no other cross-national cooperation activities with a focus on the usage of AI in administration in the HEI context. A possible reason for this may be the low overall number of publications on this specific topic.

Figure 12 shows the 50 most frequently used words from the KeyWords Plus analysis, where the size of each word in the figure is representative of the frequency of use. Education is the most used word. Of the technology-linked words, learning analytics is the leader in the administrative field, followed by system and online, which occur slightly less frequently. Of the terms describing the context of use, emphasis is on performance and environment, followed by participation. Terms that are distant from the topic, such as customer churn prediction, citizenship, and assurance, are also represented, even though they are used relatively rarely.

Considering the relationship between KeyWords Plus results, countries, and author affiliation, the USA seems to focus mainly on performance and prediction, while Spain has the broadest variety of different keywords (Fig. 13). Generally, there is a broad variety (e.g., the keyword system is addressed by research institutions from many different countries). Furthermore, it must be noted that there are some missing values in the data. For example, the University of La Frontera in Chile is not related to specific keywords.

Figure 12 shows the 50 most frequently used keywords in the field of AI in HEI administration. In order to understand in more detail the relationships between the identified keywords, we have created a structure that represents the co-occurrence network between the 10 most used keywords (Fig. 14). By analyzing the individual keywords that are of higher relevance in the research topic of AI in HEI administration, the co-occurrence network becomes more detailed, and we can identify three different clusters (blue, red, and green). The clusters show possible causalities between the keywords. Interestingly, the blue and green clusters show overlaps. Thematically, both clusters can be located in the area of data-based analysis or evaluation of learning performance. For the red cluster, we cannot analyze the relationships in more detail based on the structure of the co-occurrence network.

Figure 15 illustrates the variations in the use of the most-used terms in the context of administration. In 2012, all the terms are used quite infrequently. Similar to the development in the context of teaching, the use of the terms increases strongly from 2017. One exception is participation, which increased until 2017 and has been stable since then. All the other terms (learning analytics, online, system, environment, education, and performance) increased constantly, with performance showing the steepest increase.

As there were either no or not enough articles focusing on AI in HEI administration in the previous years, trend topics can first be identified from 2018 onwards (Fig. 16). The chosen logarithmic frequency shows performance as a trend topic in 2018. Environment and system emerge as trend topics in the following year.

4 Future research paths

This study applied bibliometric analysis as a comprehensive and structured tool to organize and analyze the research on AI in administration and teaching in HEI. Among other things, this allowed us to find gaps in the literature and identify areas that have not been adequately studied but warrant further attention [21]. Below, we explain the findings that emerge directly from our literature review and point to topics that shape the current discourse on AI in HEI but have not been adequately addressed in the literature thus far.

4.1 Imbalance in research on AI in educational and administrative contexts

The most important finding of this analysis is the imbalance in research on the two different contexts. The research output on the application of AI in the teaching and learning contexts is 10 times larger than that on the application of AI in administrative HEI processes at the time of the most significant number of publications in 2021 (see Figs. 1, 9). This imbalance needs to be ameliorated in further research, as theoretical and empirical work is the foundation for the future implementation of AI in HEI processes [22]. Studies highlight the potential of AI and educational data mining for administrative operations (e.g., student admissions) [10], answering service requests or inquiries from students to the secretariat [23], future course selection and appropriate student administration [24], student retention and increasing student enrollment [25], administrative support (e.g., assignment submission, course registration, examination schedule, scoring, graduation) [26], and supporting administrative staff by answering students’ FAQs [27].

Furthermore, there is a minimal number of collaborations in research on AI in administrative processes; only Italy and Romania have collaborated (Fig. 11). Thus, research cooperation should be intensified to enable cross-organizational benefits and experience sharing.

In addition to this identified need for more research in the administrative domain, the results point to growing research interest in both fields and research needs that affect both application contexts. These are discussed below.

4.2 Imbalance in disciplines and lack of interdisciplinary research

In both administration and teaching, the lack of diversity in the applied research approaches is evident. This can be deduced from the result of the keyword analysis (Figs. 4, 12). Most papers are predominantly technical, and non-technology-related research is underrepresented. Further interdisciplinary research could provide insights from other disciplines. In general, the bias in study results has resulted in a lack of validity, as well as bias effects and vertical scoping problems. The importance of interdisciplinary research in the context of digitization, and particularly in the development and implementation of AI technologies, is undisputed [28]. Essential insights from philosophy, for example, could increasingly bring to the fore the limitations of using AI as a substitute for a human teacher [29], as well as provide insights from ethical and epistemological perspectives [30]. Also important are psychological and cognitive aspects and issues, such as acceptance and decision making. These themes are already apparent (see Figs. 4, 12), and their importance will grow in the future. Interdisciplinary studies that link computer science with humanities and social sciences will shape research on explainable and ethical AI to address challenges such as transparency and trust, enabling testing of AI systems for regulatory reasons, and adapting AI systems in response to unexpected behavior [31]. The interdependencies and the dynamic market and technological developments in the fields of AI for admin and teaching in HEI cause a fundamental methodological change resulting from the interdisciplinary perspective. Conceptual development and determination of appropriate characteristics of AI for admin and education in HEI are necessary. Furthermore, innovative and cross-disciplinary approaches are required to address this issue [16].

The imbalance in the diverse disciplines that focus on research on AI in university educational processes also leads to an imbalance in the keywords used to describe the literature. A strong focus is on primary topics (e.g., technology and students) in the classroom context, neglecting important accompanying issues, such as teachers or competencies in the digital world. The cluster analysis of keywords (Fig. 6) similarly shows that in the fifth cluster, the identified outcomes are quantitatively less than those in other clusters, and psychological (motivation, beliefs, self-efficacy) or pedagogical (feedback) keywords are not explored in direct relation to terms like big data or learning analytics. Therefore, there is a risk that the complexity of teaching and learning processes is neglected, limiting the generalizability of the results. At the same time, these competencies are relevant, especially during and after the COVID crisis [32,33,34]. Although the development of the technology is very important and research-intensive, learning remains a human-centered process. For this reason, there should not simply be a transfer of the real world into the virtual world, but the specifics of the interaction between people and technology should also be taken into account. In the literature, factors such as learner characteristics [35, 36] and teacher competencies [37] have already been investigated, which are crucial for its long-term use and acceptance. Further research should therefore include all aspects and stakeholders of teaching and learning processes.

4.3 Inequalities in cross-national research

The data reveal inequalities in collaboration across countries and institutions (Figs. 2, 11). The literature analyzed is heavily dominated by certain countries that lead the discourse on the use of AI in higher education teaching, which implies the risk of research monopolies and thus bias effects. Moreover, studies on cultural aspects of AI application seem promising to mitigate this problem and create different cultural approaches to AI use. We point out the need to establish platforms and formats for the exchange of ideas and experiences and the promotion and funding of international research collaborations. Furthermore, regulations on handling data are often the decisive factor for the further development of AI applications that require large amounts of real data. Regulations at the national level are important in this regard. Since ensuring access to personal data is particularly critical, there is a need for research on ways to enable secure, ethical, and socially acceptable access to data [9, 10, 38].

4.4 Neglected research topics and paths

To our surprise, the analysis results showed that essential topics are neglected in the papers studied. These include AI-related ethics, fairness, and privacy issues [39,40,41]. This is consistent with the findings of other extensive studies [31]. In the university sector, the issues include how to connect sustainability and AI systems, discrimination against students, and overall transparency. Reconsidering the data and analyzing the respective shares of sustainability-, discrimination-, and transparency-related aspects in the articles reveals that these aspects are underrepresented. In the teaching data set, roughly one percent of the articles are concerned with sustainability-related aspects, and less than one percent each are concerned with discrimination- or transparency-related aspects (Fig. 17). In the data set resulting from the admin search string, none of these topics are explicitly mentioned in the abstracts of the articles. Thus, we call for more research on these societally relevant topics in the context of AI in HEI.
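Shares of this kind can be derived by counting the abstracts that mention a topic at least once. The following is a minimal sketch of such a count. The search stems, the function name, and the sample abstracts are assumptions made for illustration, since the exact search terms behind the reported shares are not given in the text.

```python
import re

# Assumed search stems; the original search terms are not given in the text.
TOPIC_PATTERNS = {
    "sustainability": re.compile(r"\bsustainab", re.IGNORECASE),
    "discrimination": re.compile(r"\bdiscriminat", re.IGNORECASE),
    "transparency": re.compile(r"\btransparen", re.IGNORECASE),
}


def topic_shares(abstracts):
    """Return, per topic, the fraction of abstracts that mention it at least once."""
    total = len(abstracts)
    if total == 0:
        return {topic: 0.0 for topic in TOPIC_PATTERNS}
    return {
        topic: sum(1 for text in abstracts if pattern.search(text)) / total
        for topic, pattern in TOPIC_PATTERNS.items()
    }


# Hypothetical abstracts, for illustration only.
abstracts = [
    "We predict student performance with a neural network.",
    "Transparent models can support sustainable campus planning.",
    "A chatbot answers enrolment questions.",
    "Learning analytics dashboards and engagement.",
]
shares = topic_shares(abstracts)
```

Stem matching of this kind is only a rough proxy. In a machine learning corpus, the stem for discrimination would, for example, also match technical terms such as discriminative model, so manual checking of the matches would likely be needed.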

Furthermore, comparative studies are largely lacking, both between countries and between the two sectors (education and administration). Studies addressing different target groups in the context of AI in administration and teaching in higher education institutions are needed, as comparison or generalization of existing results is impossible or possible only to a limited extent. Comparison of research results on AI in universities, in general, is difficult, as the complexity of AI applications for higher education administration is increased by institutional or context-specific characteristics (and by technological developments and market dynamics). Therefore, embedded case study approaches that consider a wide range of common AI applications for higher education administration are needed. By comparing such cases, (at least) the internal validity of corresponding studies can be improved.

There is a lack of long-term observations of AI-enabled teaching in HEI [42]. Short-term observations do not lead to a holistic understanding of the long-term effects of the use of AI for teaching in HEI. Therefore, it seems necessary to investigate the usage and effects of AI on students, their learning behavior and competencies, the lecturer, applied didactical approaches, and the teaching and learning content. This would allow for a better understanding of the framework conditions and of how AI can be leveraged to enhance the teaching and learning experience.

5 Discussion and conclusions

In this study, a bibliometric analysis of the body of literature on AI in administrative and teaching processes in HEI was presented. On this basis, future research paths were derived. This study identified (1) an imbalance in research on AI in educational and administrative contexts, (2) an imbalance in disciplines and a lack of interdisciplinary research, (3) inequalities in cross-national research activities, and (4) neglected research topics and paths. Specifically, it reveals that the emphasis of AI research in the context of EdTech in HEI lies on the teaching aspect. The number of outputs on AI in administrative processes is less than one-tenth that of AI in teaching processes (53 in comparison to 743 final database hits). This may roughly represent the ratio of expenditure and staffing of HEI in these areas. However, the two areas also need to be considered separately, given the potential of AI in, e.g., learner profiling, tracking patterns in student outcomes, or staffing of courses.

The number of publications has increased significantly since 2017. On the one hand, this goes hand in hand with a general increase in the number of research outputs due to the increasing number of outlets [43, 44]. On the other hand, research activities have increased due to specific funding schemes that explicitly address AI research, such as the Artificial Intelligence Funding Initiative of the German Research Foundation and, from the European Union, the Horizon Europe scheme, the European Research Council, and the European Innovation Council. Furthermore, the COVID crisis forced the usage of EdTech and thereby increased the research opportunities in this area.

A very low number of African institutions conduct research on AI in HEI. In both sectors, China and North American countries have produced the main share of contributions. This is not surprising, since China has in recent years attributed very high importance to AI research and provides governmental support [45], with attention to AI in education [46, 47]. The analyzed papers show different tendencies in the use of AI, especially towards the evaluation of teaching and performance, including prediction (e.g., [48,49,50]). The use of AI for learning analytics (e.g., [51,52,53]) and the development and refinement of algorithms for AI applications in teaching (e.g., [54,55,56,57]) have also been intensively researched. North American and Spanish researchers are also very active on both topics. The word growth of trending topics rises with the number of publications on the respective topic. The word performance leads in both subsets. Technical aspects, such as performance, framework, model, and learning analytics in general, are in focus, while soft aspects, such as skills and acceptance, do not seem to be in primary focus.

Surprisingly, neither AI nor machine learning appears among the keywords in the teaching data subset. In the administration subset, they are present but rarely mentioned. This might be explained by the recent inception of the explicit hype on AI in research. Technology, students, and online higher education are the keywords that have decreased at a rising rate since 2017. It seems that they are being replaced by the technical terms model and impact, which have increased exponentially.
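To illustrate how such keyword trends can be traced, the following minimal sketch computes the cumulative number of papers per year that list a given keyword. It is written in plain Python and is not the tooling used in this study; the function name `cumulative_keyword_growth` and the sample records are hypothetical, and the sketch assumes that each record is a pair of publication year and keyword list.

```python
from collections import Counter, defaultdict
from itertools import accumulate


def cumulative_keyword_growth(records, keywords):
    """Return the sorted years and, per keyword, the cumulative paper count.

    records:  iterable of (year, list_of_keywords) pairs, one per paper
    keywords: keywords to trace (matched case-insensitively)
    Years without any record are skipped rather than filled with zeros.
    """
    yearly = defaultdict(Counter)
    for year, paper_keywords in records:
        # A set ensures that each paper counts at most once per keyword.
        yearly[year].update({kw.lower() for kw in paper_keywords})

    years = sorted(yearly)
    growth = {
        kw: list(accumulate(yearly[year][kw.lower()] for year in years))
        for kw in keywords
    }
    return years, growth


# Hypothetical sample data, for illustration only.
sample_records = [
    (2017, ["Model", "students"]),
    (2018, ["model", "impact"]),
    (2019, ["model", "impact", "performance"]),
]
years, growth = cumulative_keyword_growth(
    sample_records, ["model", "impact", "students"]
)
for keyword, counts in growth.items():
    print(keyword, dict(zip(years, counts)))
```

A keyword whose cumulative curve flattens (here, students) is one that is no longer being added by new papers, whereas a steepening curve indicates growing use.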

This research has some limitations. First, the decision to rely solely on WoS as the database for data extraction limits the results. Although adopting a narrow focus is common practice and consistent with recent work [18, 31] (see also [58]), future research should collect data from different databases. Second, only inter-country collaborations, and no intra-country collaborations, were investigated. Furthermore, although applying inclusion and exclusion criteria and mutually discussing questionable hits limits the risk of bias, bias and subjectivity cannot be excluded entirely. The research paths are primarily based on quantitative measures of the research output, namely the number of keywords describing the actual content. Assuming that the keywords describe the content of the papers correctly, this approach suffices. However, it must be questioned whether this relationship holds for every item. Furthermore, only contributions in English were collected for the data set. Considering, for example, the European countries, French and Spanish researchers contribute a large share of research, but some of this is likely published in French or Spanish. Therefore, it cannot be ensured that all relevant research results are included in the data set underlying this study.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Code availability

Not applicable.

Notes

For this purpose, we have analyzed the abstracts of the articles in the respective data set. For sustainability-related aspects, we have searched the abstracts for “sustainability” and “sustainable”. The keywords for discrimination-related aspects were “discrimination” and “discriminate”, and those for transparency-related aspects were “transparency” and “transparent”.
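The abstract screening described in this note can be sketched as follows. This is a minimal illustration, not the procedure actually used: it assumes case-insensitive whole-word matching (the note does not state how matches were determined), and the names `ASPECT_TERMS` and `count_abstracts_by_aspect` as well as the sample abstracts are hypothetical.

```python
import re

# Search terms per aspect, taken from the note above.
ASPECT_TERMS = {
    "sustainability": ("sustainability", "sustainable"),
    "discrimination": ("discrimination", "discriminate"),
    "transparency": ("transparency", "transparent"),
}


def count_abstracts_by_aspect(abstracts):
    """Count, per aspect, the abstracts containing at least one search term.

    Assumption: terms are matched as whole words, ignoring case, so
    "discriminates" or "discriminatory" would not be counted.
    """
    patterns = {
        aspect: re.compile(
            r"\b(?:" + "|".join(re.escape(term) for term in terms) + r")\b",
            re.IGNORECASE,
        )
        for aspect, terms in ASPECT_TERMS.items()
    }
    return {
        aspect: sum(1 for abstract in abstracts if pattern.search(abstract))
        for aspect, pattern in patterns.items()
    }


# Hypothetical abstracts, for illustration only.
sample_abstracts = [
    "Sustainable AI use in universities.",
    "Transparent and non-discriminatory grading.",
    "Chatbots for enrolment.",
]
counts = count_abstracts_by_aspect(sample_abstracts)
print(counts)
```

Whether matching is done on whole words or on substrings changes the counts (for example, for "non-discriminatory"), so this choice should be reported together with the results.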

References

Renz A, Krishnaraja S, Schildhauer T. A new dynamic for EdTech in the age of pandemics. In: ISPIM Virtual. 2020. https://www.researchgate.net/publication/342077840_A_new_dynamic_for_EdTech_in_the_age_of_pandemics. Accessed 15 Jul 2022.

Robinson R, Molenda M, Rezabek L. Facilitating Learning. In: Januszewski A, Molenda M, editors. Educational technology - a definition with commentary. 2nd ed. New York: Routledge; 2013. p. 27–60.

Gao PP, Nagel A, Biedermann H. Categorization of educational technologies as related to pedagogical practices. In: Tirri K, Toom A, editors. Pedagogy in basic and higher education. London: IntechOpen; 2020. p. 1–15.

Dietmar J. Three Ways Edtech Platforms Can Use AI To Deliver Effective Learning Experiences. In: Forbes. 2021. https://www.forbes.com/sites/forbestechcouncil/2021/07/19/three-ways-edtech-platforms-can-use-ai-to-deliver-effective-learning-experiences/. Accessed 17 May 2022.

Vainshtein IV, Shershneva VA, Esin RV, Noskov MV. Individualisation of education in terms of e-learning: experience and prospects. J Sib Fed U. 2019;12:1753–70.

Luckin R, Holmes W, Griffiths M, Forcier LB. Intelligence unleashed: an argument for AI in education. London: Pearson; 2016.

Woolf BP, Lane HC, Chaudhri VK, Kolodner JL. AI grand challenges for education. AI Mag. 2013;34:66–84.

Fadel C, Holmes W, Bialik M. Artificial intelligence in education: Promises and implications for teaching and learning. Boston: Center for Curriculum Redesign; 2019.

Berens J, Schneider K, Görtz S, Oster S, Burghoff J. Early detection of students at risk–predicting student dropouts using administrative student data and machine learning methods. SSRN J. 2018. https://doi.org/10.2139/ssrn.3275433.

Marcinkowski F, Kieslich K, Starke C, Lünich M. Implications of AI (un-) fairness in higher education admissions: the effects of perceived AI (un-) fairness on exit, voice and organizational reputation. In: Proceedings of the 2020 conference on fairness, accountability, and transparency. 2020. https://doi.org/10.1145/3351095.3372867.

Renz A, Krishnaraja S, Gronau E. Demystification of artificial intelligence in education—how much AI is really in the educational technology. Int J Learn Anal Artif Intell Educ. 2020;2:4–30.

Popenici SA, Kerr S. Exploring the impact of artificial intelligence on teaching and learning in higher education. Res Pract Technol Enhanc Learn. 2017;12:1–13.

Hinojo-Lucena F-J, Aznar-Díaz I, Cáceres-Reche M-P, Romero-Rodríguez J-M. Artificial intelligence in higher education: a bibliometric study on its impact in the scientific literature. Educ Sci. 2019;9:51–9.

Zawacki-Richter O, Marín VI, Bond M, Gouverneur F. Systematic review of research on artificial intelligence applications in higher education–where are the educators? Int J Educ Technol High Educ. 2019;16:1–27.

Kebritchi M, Lipschuetz A, Santiague L. Issues and challenges for teaching successful online courses in higher education: a literature review. J Educ Technol Syst. 2017;46:4–29.

Scanlon E. Educational technology research: contexts, complexity and challenges. J Interact Media Educ. 2021. https://doi.org/10.5334/jime.580.

Aria M, Cuccurullo C. bibliometrix: an R-tool for comprehensive science mapping analysis. J Informetr. 2017;11:959–75.

Radanliev P, De Roure D, Walton R. Data mining and analysis of scientific research data records on Covid-19 mortality, immunity, and vaccine development-In the first wave of the Covid-19 pandemic. Diabetes Metab Syndr Clin Res Rev. 2020;14:1121–32.

Kamada T, Kawai S. An algorithm for drawing general undirected graphs. Inf Process Lett. 1989;31:7–15.

Blondel VD, Guillaume J-L, Lambiotte R, Lefebvre E. Fast unfolding of communities in large networks. J Stat Mech Theory Exp. 2008. https://doi.org/10.1088/1742-5468/2008/10/p10008.

Donthu N, Kumar S, Mukherjee D, Pandey N, Lim WM. How to conduct a bibliometric analysis: an overview and guidelines. J Bus Res. 2021;133:285–96.

Zhai X, et al. A review of artificial intelligence (AI) in education from 2010 to 2020. Complexity. 2021. https://doi.org/10.1155/2021/8812542.

Keller B, Baleis J, Starke C, Marcinkowski F. Machine learning and artificial intelligence in higher education: a state-of-the-art report on the German University landscape. In: Working Paper Series: Fairness in Artificial Intelligence Reasoning – Working Paper No. 1. 2019. https://www.phil-fak.uni-duesseldorf.de/kmw/professur-i-prof-dr-frank-marcinkowski/working-paper. Accessed 17 May 2022.

Ognjanovic I, Gasevic D, Dawson S. Using institutional data to predict student course selections in higher education. Internet High Educ. 2016;29:49–62.

Bhatnagar H. Artificial intelligence-a new horizon in Indian higher education. J Learn Teach Digit Age. 2020;5:30–4.

Hien HT, Cuong P-N, Nam LNH, Nhung HLTK, Thang LD. Intelligent assistants in higher-education environments: the FIT-EBot, a chatbot for administrative and learning support. In: Proceedings of the ninth international symposium on information and communication technology. 2018. https://doi.org/10.1145/3287921.3287937.

Ranoliya BR, Raghuwanshi N, Singh S. Chatbot for university related FAQs. In: 2017 International Conference on Advances in Computing, Communications and Informatics (ICACCI). 2017. https://doi.org/10.1109/ICACCI.2017.8126057.

Whittaker M, et al. AI now report 2018. New York: AI Now Institute at New York University; 2018.

Cope B, Kalantzis M, Searsmith D. Artificial intelligence for education: knowledge and its assessment in AI-enabled learning ecologies. Educ Philos Theory. 2021;53:1229–45.

Kornilaev L. Kant’s doctrine of education and the problem of artificial intelligence. J Philos Educ. 2021. https://doi.org/10.1111/1467-9752.12608.

Mariani MM, Perez-Vega R, Wirtz J. AI in marketing, consumer research and psychology: a systematic literature review and research agenda. Psychol Mark. 2022;39:755–76.

Kalimullina O, Tarman B, Stepanova I. Education in the context of digitalization and culture: evolution of the teacher’s role, pre-pandemic overview. J Ethn Cult Stud. 2021;8:226–38.

López-Belmonte J, Pozo-Sánchez S, Fuentes-Cabrera A, Trujillo-Torres J-M. Analytical competences of teachers in big data in the era of digitalized learning. Educ Sci. 2019. https://doi.org/10.3390/educsci9030177.

Popova OI, Gagarina NM, Karkh DA. Digitalization of educational processes in universities: achievements and problems. Adv Soc Sci Educ Humanit Res. 2020. https://doi.org/10.2991/assehr.k.200509.131.

Vladova G, Ullrich A, Bender B, Gronau N. Students’ acceptance of technology-mediated teaching–how it was influenced during the COVID-19 pandemic in 2020: a study from Germany. Front Psychol. 2021. https://doi.org/10.3389/fpsyg.2021.636086.

Scheel L, Vladova G, Ullrich A. The influence of digital competences, self-organization, and independent learning abilities on students’ acceptance of digital learning. Int J Educ Technol High Educ. 2022. https://doi.org/10.1186/s41239-022-00350-w.

Vladova G, Scheel L, Ullrich A. Acceptance of digital learning in higher education-What role do teachers’ competencies play? In: Proceedings of the European Conference on Information Systems. 2022. https://aisel.aisnet.org/ecis2022_rp/168

de Almeida PGR, dos Santos CD, Farias JS. Artificial intelligence regulation: a framework for governance. Ethics Inf Technol. 2021;23:505–25.

Belk R. Ethical issues in service robotics and artificial intelligence. Serv Ind J. 2021;41:860–76.

Breidbach CF, Maglio P. Accountable algorithms? The ethical implications of data-driven business models. J Serv Manag. 2020. https://doi.org/10.1108/JOSM-03-2019-0073.

Rahwan I, et al. Machine behaviour. Nature. 2019;568:477–86.

Renz A, Hilbig R. Prerequisites for artificial intelligence in further education: identification of drivers, barriers, and business models of educational technology companies. Int J Educ Technol High Educ. 2020;17:1–21.

Fire M, Guestrin C. Over-optimization of academic publishing metrics: observing Goodhart’s Law in action. GigaScience. 2019. https://doi.org/10.1093/gigascience/giz053.

Ware M, Mabe M. The STM report: An overview of scientific and scholarly journal publishing. In: DigitalCommons@University of Nebraska - Lincoln. 2015. https://digitalcommons.unl.edu/cgi/viewcontent.cgi?article=1008&context=scholcom. Accessed 13 Jun 2022.

Roberts H, Cowls J, Morley J, Taddeo M, Wang V, Floridi L. The Chinese approach to artificial intelligence: an analysis of policy, ethics, and regulation. AI Soc. 2021;36:59–77.

Hao K. China has started a grand experiment in AI education. It could reshape how the world learns. MIT Technol Rev. 2019; https://www.technologyreview.com/2019/08/02/131198/china-squirrel-has-started-a-grand-experiment-in-ai-education-it-could-reshape-how-the/. Accessed 13 Jun 2022.

Kwong T, Wong E, Yue K. Bringing abstract academic integrity and ethical concepts into real-life situations. Technol Knowl Learn. 2017;22:353–68.

Hai-tao P, et al. Predicting academic performance of students in Chinese-foreign cooperation in running schools with graph convolutional network. Neural Comput Appl. 2021;33:637–45.

Fang C. Intelligent online English teaching system based on SVM algorithm and complex network. J Intell Fuzzy Syst. 2021;40:2709–19.

Qian Y, Li C-X, Zou X-G, Feng X-B, Xiao M-H, Ding Y-Q. Research on predicting learning achievement in a flipped classroom based on MOOCs by big data analysis. Comput Appl Eng Educ. 2022;30:222–34.

Wang S, Wang H. Knowledge analytics: a constituent of educational analytics. Int J Bus Anal. 2020;7:14–23.

Li M, An Z, Ren M. Student-centred webcast+ home-based learning model and investigation during the covid-19 epidemic. Intel Artif. 2020;23:51–65.

Ma N, Xin S, Du J-Y. A peer coaching-based professional development approach to improving the learning participation and learning design skills of in-service teachers. J Educ Technol Soc. 2018;21:291–304.

Li Y. Feature extraction and learning effect analysis for MOOCs users based on data mining. Int J Emerg Technol Learn IJET. 2018;13:108–20.

Zhang X, Liu Q, Wang D, Zhao L, Gu N, Maybank S. Self-taught semisupervised dictionary learning with nonnegative constraint. IEEE Trans Ind Inform. 2019;16:532–43.

Yang AC, Chen IY, Flanagan B, Ogata H. Automatic generation of cloze items for repeated testing to improve reading comprehension. Educ Technol Soc. 2021;24:147–58.

Fu J, Zhang H. Personality trait detection based on ASM localization and deep learning. Sci Program. 2021. https://doi.org/10.1155/2021/5675917.

Gusenbauer M, Haddaway NR. Which academic search systems are suitable for systematic reviews or meta-analyses? Evaluating retrieval qualities of Google Scholar, PubMed, and 26 other resources. Res Synth Methods. 2020;11:181–217.

Acknowledgements

Not applicable.

Funding

Open Access enabled and organized by Project DEAL and funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) – Projektnummer 491466077. This work has been partly funded by the Federal Ministry of Education and Research of Germany (BMBF) under Grant no. 16DII127 (“Deutsches Internet-Institut”).

Author information

Contributions

All authors contributed to the conceptualization of the manuscript. AU prepared the introduction which GV and AR edited. FE and AU developed the methodological design and prepared the methodology section. FE conducted the data analysis. All authors prepared the result section. AU, GV, AR developed and refined the research agenda. AR reviewed and edited the manuscript. AU and GV revised the manuscript before submission and in the revision phase. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ullrich, A., Vladova, G., Eigelshoven, F. et al. Data mining of scientific research on artificial intelligence in teaching and administration in higher education institutions: a bibliometrics analysis and recommendation for future research. Discov Artif Intell 2, 16 (2022). https://doi.org/10.1007/s44163-022-00031-7

DOI: https://doi.org/10.1007/s44163-022-00031-7