Abstract

Problems related to patient scheduling and queueing in emergency departments are receiving increasing attention, both in theory (in operations research and in emergency and healthcare services research) and in practice. This paper aims to provide an extensive review of studies addressing queueing-related problems explicitly related to emergency departments. We have reviewed 229 articles and books spanning seven decades and have sought to organize the information they contain in a manner that is accessible and useful to researchers seeking knowledge of specific aspects of such problems. We begin by presenting a historical overview of applications of queueing theory to healthcare-related problems. We subsequently elaborate on managerial approaches used to enhance efficiency in emergency departments. These approaches include bed management, fast-track, dynamic resource allocation, grouping/prioritization of patients, and triage approaches. Finally, we discuss scientific methodologies used to analyze and optimize these approaches: algorithms, priority models, queueing models, simulation, and statistical approaches.


1 Introduction

In recent decades, healthcare systems, and emergency departments (EDs) in particular, have been facing a massive increase in demand, which has created a continuous need to improve and optimize operational processes and quality control methods. Along with this trend, healthcare managers have begun to encounter logistical and operational problems that previously were not a significant concern. Many of these problems relate to the deployment of resources and the planning of patient flow through hospital departments: How should we schedule nurse and physician shifts to maximize the quality of care that patients receive? How can we optimize the workflow of staff members faced with large numbers of incoming patients? How should we direct the patient flow in emergency and routine scenarios?

Queueing theory and models can address questions such as these systematically, thereby providing managers with efficient solutions and tools for maintaining and improving performance. In 2013, Lakshmi and Sivakumar [1] produced a comprehensive literature review on the application of queueing theory in healthcare. In recent years, however, attention has increasingly focused on problems that are specific to the ED, along with an urgent need to solve them. The ED is the first station that patients encounter when entering the hospital system and is therefore characterized by high demand. Every patient must be treated and correctly assigned to a specific department (whether or not they are hospitalized), while the ED's professional staff must deal with a wide variety of medical conditions and injuries, high levels of uncertainty, and, as a result, high stress levels. In 2018, Hu et al. [2] reviewed relevant articles published since 1970 to examine the contributions of queueing theory (QT) to modeling EDs and to assess the strengths and limitations of this application. More recently, in 2020, Ortíz-Barrios and Alfaro-Saíz [3] undertook a systematic review of methodological approaches to support process improvement in EDs. They reviewed scholarly articles published between 1993 and 2019 and categorized the selected papers according to the leading ED problems they address.

In our present work, we aim to provide researchers and healthcare managers with a clear summary of the current managerial approaches used for solving queueing-related problems in EDs and the scientific methodologies used for investigating these problems.

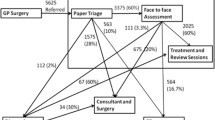

Figure 1 shows a diagram of the ED process flow and the queueing-related issues that arise along it. The figure presents the patient flow through the healthcare system and emphasizes the need for coordination between interacting departments. For each phase of the process, the figure indicates the corresponding queueing theory aspect.

The present paper conducts a systematic literature review to answer the primary question of which theories and models can provide efficient solutions and tools to maintain and improve ED performance. Answering this question allows us to identify and classify the scope and topics of the current state of the art, its gaps and limitations, its managerial implications, and future research directions.

Ultimately, our review is intended to serve as an efficient guide for navigating the extensive research literature on the diverse aspects of queueing applications in EDs.

2 Methodology

The main purpose of the study was to answer the following research questions: What are the applications of queueing theory to healthcare, and especially to problems related to EDs? Which managerial approaches help improve the efficiency of EDs? Which scientific methodologies are used to analyze and optimize these organizational methods? Because we aimed to find relevant, high-quality literature that fits these purposes and topics, we used a systematic method for collecting potential literature. The papers were first retrieved by identifying search keywords and exploring various electronic journal databases, such as Google Scholar, PubMed, Science Direct, JSTOR, ProQuest, Emerald, Elsevier, SpringerLink, and Wiley. To collect as much data as possible, we searched these databases both manually and with a Python script for applications of queueing problems, healthcare, operations research, simulations, and modeling. The main keywords and their frequency of occurrence are presented in Fig. 2, where the size and boldness of each word indicate how frequently it occurs in the published papers. This gives the reader a broad view of the frequency of queueing-related topics in the ED environment.

The search process yielded many references, including refereed journal articles, conference proceedings, dissertations, unpublished works, and books, which we systematically examined for inclusion in the review. Figure 3 shows the distribution over the years of the published articles on ED queueing problems that were considered for this literature review.

As shown in Fig. 3, the number of studies has grown significantly, mainly in the last decade, as the fields involved have expanded, which made a thorough verification of the studies necessary. We therefore checked and studied each paper manually to verify its quality and relevance to the literature review and excluded works that did not meet these criteria. Eventually, we reviewed 229 peer-reviewed works, papers, and books from the last seven decades dealing with queueing theory or related healthcare and operations research topics. We identified keywords corresponding to research methodologies such as discrete-event simulation (DES), algorithms, queueing models, and priority models; keywords corresponding to ED operational concerns such as overcrowding, patient flow, length of stay (LOS), and waiting time; and general keywords such as hospital and emergency department. In selecting studies for inclusion in this review, we sought to cover both the origins of research on ED queueing and state-of-the-art research and methods. Hence, we read each study, recorded its data (authors, title, date, and keywords), and summarized its contents in a Microsoft Excel 365 table. We subsequently grouped the studies by topic to organize the information in a straightforward manner that would enable researchers to access the specific information they need. Papers were excluded if they were irrelevant to the study, and we only included articles that were written in English and focused on queueing methods. Our study method was as follows: we performed a general literature survey covering all the leading publications and conference proceedings, searching with keywords such as queueing, emergency department, scheduling, discrete-event simulation, length of stay, waiting time, patient flow, hospitals, and others.

The data were arranged in a final Microsoft Excel 2016 table that includes the title, authors, journal, date of publication, number of citations, keywords (given by the authors of the papers or by us), and a summary. A review of this initial survey was conducted to fill possible gaps and make it more comprehensive. In the last step, we searched for the DOI (Digital Object Identifier) assigned to each publication, which provides a persistent link to its location on the web and allows searchers to access it quickly. Since the DOI is assigned by the publisher only when the article is published, activating each of the links also served as a final update of the data previously collected from the electronic databases mentioned above.

This review is organized as follows. We first present a historical survey of early research (1950s–1980s) on queueing-related problems in healthcare systems in general and in EDs specifically. We then discuss practical methods for managing patient flow and workflow in EDs that relate to queueing theory. Next, we present scientific methods applied to the investigation of queueing-related problems in EDs.

3 Early Applications of Queueing Theory in Research of Healthcare Systems

Research related to queueing in healthcare systems dates back to the early 1950s, beginning with the works by Bailey [4, 5] who examined queues and appointment systems in hospital outpatient departments, focusing on patient waiting times as an outcome of interest. Bailey was the first to reduce the patient flow problem to a form that could be modeled using queueing theory and to use this approach to evaluate appointment systems and emergency bed allocations. In 1952, Welch and Bailey [6] carried out a study on appointment systems (an extension was published by Welch in 1964, after his death [7]). They offered recommendations to reduce patient waiting time while ensuring that medical staff’s time would not be wasted. The researchers’ suggestions, which took into account the punctuality of the patients and medical staff, included dividing appointment intervals into periods equal to the average time spent on each patient and scheduling two patients to arrive at the clinic’s opening time.
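
The appointment rule described above, often called the Bailey–Welch rule, can be sketched in a few lines of Python. This is a minimal illustration under assumptions that are ours rather than Welch and Bailey's: a single doctor, punctual patients, no no-shows, and patients seen in appointment order. The function names `bailey_welch_schedule` and `simulate_session` are hypothetical.

```python
import random


def bailey_welch_schedule(n_patients: int, mean_consult: float) -> list[float]:
    """Appointment times (minutes after opening) under the Bailey-Welch rule:
    two patients booked at opening, then one per mean consultation time."""
    if n_patients <= 0:
        return []
    if n_patients == 1:
        return [0.0]
    times = [0.0, 0.0]  # two patients called for the clinic's opening time
    # Remaining slots are spaced one mean consultation time apart.
    times += [k * mean_consult for k in range(1, n_patients - 1)]
    return times


def simulate_session(appointments, service_times):
    """Single doctor, punctual patients seen in appointment order.

    Returns (total patient waiting time, total doctor idle time)."""
    doctor_free = 0.0
    total_wait = total_idle = 0.0
    for arrival, service in zip(appointments, service_times):
        start = max(arrival, doctor_free)
        total_wait += start - arrival      # patient waits if the doctor is busy
        total_idle += start - doctor_free  # doctor waits if the patient is not there yet
        doctor_free = start + service
    return total_wait, total_idle


if __name__ == "__main__":
    rng = random.Random(42)
    n, mean = 12, 10.0
    # Hypothetical exponential consultation times with a 10-minute mean.
    services = [rng.expovariate(1 / mean) for _ in range(n)]
    one_per_slot = [k * mean for k in range(n)]
    for name, schedule in [("Bailey-Welch", bailey_welch_schedule(n, mean)),
                           ("one per slot", one_per_slot)]:
        wait, idle = simulate_session(schedule, services)
        print(f"{name}: total wait {wait:.1f} min, doctor idle {idle:.1f} min")
```

Comparing total patient waiting time with total doctor idle time under the two schedules illustrates the trade-off the rule targets: booking a second patient at opening keeps the doctor busy from the start, at the cost of some extra patient waiting.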

In 1969, Bell and Allen [8] used queueing theory to investigate emergency ambulance service planning subject to the constraint of meeting 95% or 99% of the demand. A 1969 study by Taylor et al. [9] was the first to adopt a pure queueing theory approach to examine emergency services. Specifically, the authors gathered 8 weeks of data regarding emergency anesthetic services in a hospital in Northampton. They used a queueing theory approach to make predictions regarding the outcomes (in terms of patient waiting times) of various alternative scenarios (e.g., one versus two anesthetists on-call). They highlighted the potential benefits of this prediction approach for decision-making regarding the number of staff members on-call and the allocation of duties among them.
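
The kind of comparison Taylor et al. made (one versus two anesthetists on call) can be illustrated with the standard M/M/c (Erlang C) formulas. The sketch below assumes Poisson call arrivals and exponentially distributed service times; these assumptions and the example rates are ours, and the cited study's actual model may differ.

```python
import math


def erlang_c(c: int, a: float) -> float:
    """Probability that an arriving request must wait in an M/M/c queue.

    c -- number of servers (e.g., anesthetists on call)
    a -- offered load lambda / mu (arrival rate times mean service time)
    """
    if a >= c:
        return 1.0  # unstable system: every request eventually waits
    partial = sum(a**k / math.factorial(k) for k in range(c))
    last = a**c / math.factorial(c) * c / (c - a)
    return last / (partial + last)


def mean_wait(c: int, lam: float, mu: float) -> float:
    """Mean time in queue (before service starts) in an M/M/c system."""
    if lam >= c * mu:
        return math.inf
    return erlang_c(c, lam / mu) / (c * mu - lam)


if __name__ == "__main__":
    # Hypothetical figures: 0.4 calls per hour, 1.5 h mean duration per call.
    lam, mu = 0.4, 1 / 1.5
    for c in (1, 2):
        print(f"{c} on call: P(wait) = {erlang_c(c, lam / mu):.3f}, "
              f"mean wait = {mean_wait(c, lam, mu):.2f} h")
```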

In the 1970s, the foundations for most current research on queueing in healthcare systems began to be established. During this time, studies began to apply queueing theory to diverse healthcare-related problems while considering patient waiting time as the primary index for quality of care. For example, Haussmann [10] modeled patient care processes in a burn unit and, in line with prior studies on queueing in healthcare systems, used empirical data to define the model’s parameters. He used the model to predict how changes in staffing or inpatient load would affect the quality of nursing care (as measured by waiting time). Gupta et al. [11] considered the allocation of staff to messenger services in a hospital. Milliken et al. [12] developed a queueing theory model to predict the utilization of hospital delivery rooms. Keller and Laughhunn [13] proposed an application of queueing theory to a congestion problem in an outpatient clinic, and Cooper and Corcoran [14] and McClain [15] addressed bed planning models in hospitals. Larson [16] designed efficient algorithms for facility location and redistricting in urban ambulance services. Moore [17] used queueing theory to address dissatisfaction caused by waiting times for healthcare services in Dallas, TX, and Scott et al. [18] addressed reducing the response time of ambulance systems.

Notably, preliminary simulation- and algorithm-based studies of ED queueing were published during this period as well. In 1976, Collings and Stoneman [19] were the first to present a general queueing approach that can be applied in an ED environment. They studied the M/M/∞ queue with varying arrival and departure rates in a hospital ICU (intensive care unit) and showed that their model is well suited to the ED environment, where it is important to meet nearly all demands immediately. The authors showed that hourly variation in patient arrival rates to the unit is unlikely to significantly affect the number of occupied beds. In 1978, Ladany and Turban [20] developed a pioneering simulation model for operational planning and staffing of EDs, which they used to identify optimal numbers of service stations and their staffing patterns under various conditions. The authors suggested that their simulation framework could serve as a tool for planning, budgeting, decision-making, and managerial control since it provided decision-makers with the capacity to make predictions about costs, patient waiting times, and facility idleness.
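
Why hourly fluctuations in arrivals barely move bed occupancy can be seen from the mean m(t) of an M_t/M/∞ queue, which satisfies m'(t) = λ(t) − μ m(t), where μ is the reciprocal of the mean length of stay. The sketch below integrates this equation with Euler steps for a sinusoidal daily arrival pattern. The sinusoidal profile, exponential lengths of stay, and parameter values are illustrative assumptions, not Collings and Stoneman's data. When stays last days rather than hours, the daily swing in occupancy is a small fraction of the swing in arrivals.

```python
import math


def mean_occupancy_path(mean_rate, amplitude, mean_los_h, days=20, dt=0.01):
    """Euler integration of m'(t) = lambda(t) - mu * m(t) for an M_t/M/inf queue.

    mean_rate  -- average arrivals per hour
    amplitude  -- relative daily swing of the arrival rate (0.5 = +/-50%)
    mean_los_h -- mean length of stay in hours (mu = 1 / mean_los_h)
    """
    mu = 1.0 / mean_los_h
    m = mean_rate / mu  # start at the time-average occupancy
    path = []
    for i in range(int(round(days * 24 / dt))):
        t = i * dt
        lam = mean_rate * (1 + amplitude * math.sin(2 * math.pi * t / 24))
        m += dt * (lam - mu * m)
        path.append(m)
    return path


def relative_swing(path, dt=0.01):
    """Half the peak-to-trough range over the last simulated day,
    relative to the midpoint of that range."""
    last_day = path[-int(round(24 / dt)):]
    high, low = max(last_day), min(last_day)
    return (high - low) / (high + low)


if __name__ == "__main__":
    for los in (1.0, 72.0):  # hypothetical 1-hour vs 3-day mean stays
        swing = relative_swing(mean_occupancy_path(2.0, 0.5, los))
        print(f"mean LOS {los:>4} h: occupancy swings +/-{swing:.1%} "
              f"(arrivals swing +/-50%)")
```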

By the 1980s, queueing theory had become a well-established approach to various problems encountered in healthcare settings. Researchers and managers, such as Kao and Tung [21] and Worthington [22, 23], began to build on this knowledge to solve straightforward queueing problems (e.g., optimizing the number of staff members allocated to a department, given data on the typical rate of incoming patients) and to devise new means of addressing queueing-related challenges in practice. In parallel, researchers began to develop increasingly sophisticated methodologies to understand these challenges and identify means of improving healthcare systems’ capacity to handle them. We address these developments in what follows, with a specific focus on EDs.

4 Practical Approaches to Managing ED Queueing

As noted above, in recent decades, researchers and practitioners have developed creative new methods to improve ED performance. Figure 4 presents the distribution of such managerial studies over the years; the number of studies has increased sharply, reflecting growing interest in this research and recognition of its importance. Extensive research has sought to provide guidance on how such practices should be implemented and to identify which infrastructure changes ED managers might need to initiate in their departments. In what follows, we review these methods, addressing their history, their development, and current research opportunities. Specifically, we focus on the following techniques: triage approaches, bed management, dynamic resource allocation, the grouping of patients and prioritization of specific groups, and fast-track.

4.1 Triage Approaches

The word “triage” comes from the French word “trier,” which means “to sort.” The medical usage of the term dates back to the 1800s, when medical officers on the battlefield realized that sorting wounded soldiers according to their medical needs (unsalvageable; in need of immediate attention; less urgent) could improve the efficiency of their treatment. The formal concept of triage was first introduced in EDs in the late 1950s and early 1960s, when patient volumes increased significantly. During this time, triage was carried out by triage nurses, whose competence had to be tested.

Brillman et al. [24] and Gilboy et al. [25] were among the first to point out the problematic nature of this type of triage. Brillman et al. examined agreement among observers regarding the need for ED care and the ability to predict, at triage, the need for hospital admission. They used a crossover design in which each patient underwent three types of triage: nurse-guided triage first and computer-guided triage second (or vice versa), followed by physician triage. The authors found significant variability among physicians, nurses, and the computer program in triage decisions. Gilboy et al. emphasized the need for standardization and quality to ensure reliable, reproducible triage nurse decisions. Other researchers also began to question the reliability of triage methods: Wuertz et al. [26] and Fernandes et al. [27], for example, measured inter-rater and intra-rater agreement within existing ED triage systems. Wuertz et al., who based their study on five standardized patient complaint scenarios, concluded that triage assessments by experienced personnel are inconsistent, both between and within raters. These results challenged the reliability of current ED triage practices. Fernandes et al. conducted an experimental study using standardized patient scenarios with emergency triage nurses and attending emergency physicians. They found fair intra-rater agreement among the nurses rating the severity of patient conditions and consensus in the inter-rater assessment (between nurses and physicians) of triage classification.
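
Inter-rater agreement of the kind measured in these studies is commonly summarized with Cohen's kappa, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each rater's category frequencies. The following is a minimal unweighted version; the cited studies may have used other statistics (for example, weighted kappa, which is often preferred for ordinal triage scales), and the example ratings are invented.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters classifying the same cases."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    # p_o: observed proportion of cases on which the raters agree.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: chance agreement from each rater's marginal category frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    if p_e == 1.0:
        return 1.0  # both raters used one identical category for every case
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Invented triage levels (1 = most urgent) for ten standardized scenarios.
    nurse = [2, 3, 3, 4, 2, 5, 3, 1, 4, 3]
    physician = [2, 3, 4, 4, 3, 5, 3, 1, 3, 3]
    print(f"kappa = {cohen_kappa(nurse, physician):.2f}")
```

On the widely used Landis and Koch scale, kappa values between 0.21 and 0.40 are labeled "fair" agreement.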

In light of these concerns, subsequent studies sought to improve the triage process by combining nurse and doctor triage, an approach referred to as team triage. Subash et al. [28] studied a scenario of 3 h of combined doctor and nurse triage over a period of 8 days. They found that this type of triage led to earlier medical assessment than nurse triage alone and that the benefit persisted for the rest of the day, even after standard nurse triage had resumed. The authors concluded that team triage has the potential to improve ED efficiency substantially. In 2006, Choi et al. [29] evaluated how stationing a senior ED doctor in triage, instead of in a separate consultation room, influenced the waiting time and processing time of an ED without extra staff. The approach, called Triage Rapid Initial Assessment by a doctor (TRIAD), was implemented in an ED for seven shifts of 9 h each, and the authors found that it substantially reduced the ED's waiting time and processing time. Two systematic literature reviews of triage-related interventions were conducted: Wiler et al. [30] addressed triage strategies that may improve front-end operations in EDs, and Oredsson et al. [31] showed that team triage is likely to result in shorter waiting times and shorter LOS and, most likely, in fewer patients leaving without being seen, compared with triage processes that do not involve physicians. Burström et al. [32] compared the performance of three triage models (physician-led team triage, nurse first/emergency physician second, and nurse first/junior physician second) used in three Swedish EDs and observed that physician-led team triage seemed advantageous in terms of efficiency and quality indicators compared with the other two models. In a subsequent study, Burström et al. [33] compared efficiency and quality measures before and after a nurse triage model in an ED was replaced with physician-led team triage (implemented during the daytime and early evenings on weekdays). Physician-led triage was shown to improve the efficiency and quality of EDs.

Additional recent studies concerning team triage methods include the work of Traub et al. [34], who proposed that the mechanism by which team triage improves ED throughput is that of Rapid Medical Assessment (RMA). Namely, a physician’s presence enables patient medical needs to be assessed and processed more quickly. The authors observed that implementing an RMA system in the ED of their institution (the Mayo Clinic) improved patient LOS but did not reduce the number of patients left without being seen. Lauks et al. [35] introduced the concept of the Medical Team Evaluation (MTE), encompassing team triage, quick registration, redesign of triage rooms, and electronic medical records. The authors carried out an observational study investigating how the implementation of such a method affects door-to-doctor (waiting) time and ED LOS. Notably, the authors observed an improvement in LOS only among the least urgent patients. Jarvis [36] performed a literature review to identify evidence-based strategies to reduce the amount of time spent by patients in the ED. The author noted that the use of doctor triage, rapid assessment, streaming, and the co-location of a primary care clinician in the ED have all been shown to improve patient flow.

Several studies based on queueing principles have sought to evaluate and compare various triage approaches. Connelly and Bair [37], for example, showed that DES could be used to assess the efficacy of triage operations and used the approach to compare two triage methods. Ruohonen et al. [38] used a simulation model to evaluate a team triage method (in their study, the triage team comprised a nurse and a doctor, in addition to a receptionist). He et al. [39] also proposed a simulation approach to examine different triage strategies.
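
A first-come-first-served multi-server simulation is the simplest building block of the DES models mentioned above. The sketch below keeps a min-heap of the times at which each triage station next becomes free and assigns each arriving patient to the earliest available station. Treating team triage as simply an extra station is a deliberate simplification of ours (the cited studies model richer processes), and the arrival and service rates are hypothetical.

```python
import heapq
import random


def fcfs_waits(arrivals, services, servers):
    """Waiting times in a first-come-first-served queue with `servers` identical
    stations. `arrivals` must be sorted; services[i] belongs to patient i."""
    free_at = [0.0] * servers  # min-heap of times at which each station frees up
    waits = []
    for arrive, service in zip(arrivals, services):
        earliest = heapq.heappop(free_at)  # station that frees up first
        start = max(arrive, earliest)
        waits.append(start - arrive)
        heapq.heappush(free_at, start + service)
    return waits


def poisson_arrivals(rate, n, rng):
    """n arrival times of a Poisson process with the given hourly rate."""
    t, times = 0.0, []
    for _ in range(n):
        t += rng.expovariate(rate)
        times.append(t)
    return times


if __name__ == "__main__":
    rng = random.Random(7)
    # Hypothetical: 5 patients/h, 0.18 h (10.8 min) mean triage time.
    arrivals = poisson_arrivals(5.0, 2000, rng)
    services = [rng.expovariate(1 / 0.18) for _ in arrivals]
    for c in (1, 2):
        w = fcfs_waits(arrivals, services, c)
        print(f"{c} triage station(s): mean wait {60 * sum(w) / len(w):.1f} min")
```

Using the same arrival and service streams for both configurations (common random numbers) makes the comparison between one and two stations less noisy than independent runs would be.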

Finally, several studies have examined specific characteristics of the triage process to identify potential areas for improvement. Farrohknia et al. [40] evaluated the reliability of several triage scales designed as decision support systems to guide the triage nurse to a correct decision. Similarly, Claudio et al. [41] evaluated the usefulness of the ESI (Emergency Severity Index) as a clinical decision support method for prioritizing triage patients, and, more recently, Hinson et al. [42] examined how its accuracy affects nurse triage. Olofsson et al. [43] investigated how staff behavior during triage influences elderly ED patients’ feelings and satisfaction. O’Connor et al. [44] evaluated the effect of ED crowding on triage destination and waiting times. In 2018, Gardner et al. [45] studied a revised triage approach that identified eligible patients at triage based on complaint and illness severity and reallocated a nurse practitioner (NP) to the triage area of an urban ED. This process was shown to improve ED throughput and reduce the number of patients who left without being seen.
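
To make the idea of a triage scale as a decision-support algorithm concrete, the sketch below encodes a simplified version of the ESI (version 4) decision points for adults: immediate life-saving intervention (level 1), high-risk presentation (level 2), number of expected resources (levels 3–5), and danger-zone vital signs for patients expected to need two or more resources. In the actual algorithm, danger-zone vitals only prompt the nurse to consider level 2; upgrading automatically, as done here, is our simplification, and pediatric thresholds differ. The `Patient` fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    needs_lifesaving_intervention: bool
    high_risk: bool          # high-risk situation, confusion/lethargy, or severe pain/distress
    expected_resources: int  # distinct ED resources expected (labs, imaging, IV fluids, ...)
    heart_rate: float        # beats per minute
    resp_rate: float         # breaths per minute
    spo2: float              # oxygen saturation, percent


def esi_level(p: Patient) -> int:
    """Simplified adult ESI v4 decision points (1 = most urgent, 5 = least)."""
    if p.needs_lifesaving_intervention:  # decision point A
        return 1
    if p.high_risk:                      # decision point B
        return 2
    if p.expected_resources == 0:        # decision point C
        return 5
    if p.expected_resources == 1:
        return 4
    # Decision point D: adult danger-zone vitals. The real algorithm says
    # "consider ESI 2"; this sketch upgrades automatically.
    if p.heart_rate > 100 or p.resp_rate > 20 or p.spo2 < 92:
        return 2
    return 3


if __name__ == "__main__":
    # Hypothetical patient: needs labs and imaging, tachycardic.
    print(esi_level(Patient(False, False, 2, 118, 18, 97)))
```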

A more recent paper by Hodgson and Traub [46] deals with diverse variations of patient assignment systems, including provider-in-triage/team triage, fast-tracks/vertical pathways, and rotational patient assignment. The authors discussed the theory behind such system variations and reviewed potential benefits of specific models of patient assignment found in the current literature.

It follows from the above review, and is shown in Fig. 5, that triage approaches in healthcare, and more specifically in EDs, have been studied and applied over the years, with a massive increase in the most recent decade. This growth reflects technological improvements over those years, from both academic and managerial points of view. As noted above, early medical triage methods were rather simple and even intuitive; the breakthrough in the field occurred in the 1990s with studies of the usefulness and reliability of triage methods. Since the first decade of the twenty-first century, additional methods have been developed thanks to increasing computational capacity and the availability of data in hospital systems. Large amounts of information are continuously gathered in these systems and are now available not only to medical decision-makers but also for academic use. Because online data are more available to triage staff than ever before, real-time decisions that considerably improve the ED triage process are now possible. Future technological developments are also expected to make this process increasingly effective and to increase its importance in resolving queueing problems in EDs.

4.2 Bed Management

EDs have always dealt with bed management problems, well before the problem was formally defined. Broadly, bed management refers to the allocation of beds to incoming patients in a manner that ensures that enough beds are available for emergency patients while not “wasting” bed space, i.e., leaving too many beds empty for too long. Traditionally, bed management decisions have been the responsibility of ED staff (sometimes, but not always, formally referred to as bed managers): the shift manager, triage staff, or the nurses/physicians who received patients. Until recently, these decisions were not necessarily made systematically but rather were based on the decision-maker’s experience.

In 1994, Green and Armstrong [47] formally defined the problem of bed management as “keeping a balance between flexibility for admitting emergency patients and high bed occupancy” and outlined the bed manager’s responsibilities. Boaden et al. [48] adopted a similar definition of the problem while emphasizing that a bed manager’s decisions should take into account operational (immediate) considerations and strategic factors that affect the flow of patients. They also identified several key criteria that bed managers must fulfill to be effective in their roles: For example, the bed manager should be able to deal with crisis scenarios and resource shortages. To do so, the bed manager should have knowledge about the specific ED and its work methods, as well as familiarity with the patients in it; he or she should be able to exercise authority over the staff and patients and should possess the ability to obtain relevant information and send data to other parts of the hospital if needed (for example, information relating to occupancy in other departments).

Several field studies published in the 2000s have shown that effective bed management can indeed improve ED efficiency. Proudlove et al. [49] argued that efficient bed management can play a key role in resolving overcrowding in EDs and accident departments. In their work, the authors provided a clear scheme of the patient’s “journey” through the hospital and bed management’s role in that journey. In 2008, a study by Howell et al. [50] empirically showed that “active” bed management, in which a designated bed manager assesses bed availability in various departments and “reshuffles” bed assignments as needed, can substantially reduce patient waiting times. A study by Ben-Tovim et al. [51] showed that applying the managerial approach of “lean thinking” to hospital processes such as bed management enabled a hospital in Australia to cope with increasing demand. Notably, the approaches adopted in these studies were based not on queueing theory principles but rather on managerial expertise.

The study of bed management from a queueing theory perspective is still at a relatively early stage of development, suggesting that there is a great deal of room for researchers to improve and optimize bed management procedures. The first queueing theory studies to address bed management were published in the 1980s and early 1990s. These included the papers by Kao and Tung [21] and Worthington [22, 23], which addressed waiting lists for healthcare services, in which patients effectively queued for available beds.

In 2001, Mackay [52] proposed quantitative models for bed occupancy management and planning (the bed occupancy management and planning system and Sorensen’s multi-phased bed modeling) and elaborated on his experiences applying these models to healthcare systems in Australia. Mackay noted that these models could provide healthcare planners and managers with important information about patient flows and bed numbers suitable for different resource planning strategies. However, they cannot yet replace the information obtained through the day-to-day process of bed management. In 2002, Gorunescu et al. [53, 54] published two of the best-known papers combining queueing theory and bed management. In the first paper [53], they used an M/PH/c queueing model to describe patient movement through a hospital department and proposed an optimization approach to improve the use of hospital resources. Their model was based on the premise of balancing the cost of empty beds against the cost of turning patients away. In their second paper, Gorunescu et al. [54] examined how changes in admission rates, LOS, and bed allocation affect bed occupancy. They found that 10–15% of the beds should stay empty to ensure high responsiveness and cost-effectiveness.
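
The trade-off underlying Gorunescu et al.'s optimization, the cost of empty beds versus the cost of turning patients away, can be sketched with the Erlang loss (M/G/c/c) model, in which a patient who finds all beds occupied is turned away. This is a simpler stand-in for their M/PH/c model, chosen because the Erlang B blocking probability depends on the length-of-stay distribution only through its mean. The cost parameters and rates below are hypothetical, and the output is not meant to reproduce their 10–15% figure.

```python
def erlang_b(beds: int, load: float) -> float:
    """Blocking probability of an M/G/c/c loss system (Erlang B recursion).
    load = admissions per day * mean LOS in days (offered load in beds)."""
    b = 1.0
    for k in range(1, beds + 1):
        b = load * b / (k + load * b)
    return b


def daily_cost(beds, arrivals_per_day, mean_los_days, cost_empty_bed, cost_turned_away):
    """Expected cost per day: idle beds plus patients turned away."""
    load = arrivals_per_day * mean_los_days
    blocked = erlang_b(beds, load)
    occupied = load * (1 - blocked)  # mean number of busy beds
    return (cost_empty_bed * (beds - occupied)
            + cost_turned_away * arrivals_per_day * blocked)


def best_bed_count(arrivals_per_day, mean_los_days, cost_empty_bed,
                   cost_turned_away, max_beds=200):
    """Bed count in 1..max_beds that minimizes the expected daily cost."""
    return min(range(1, max_beds + 1),
               key=lambda c: daily_cost(c, arrivals_per_day, mean_los_days,
                                        cost_empty_bed, cost_turned_away))


if __name__ == "__main__":
    # Hypothetical: 5 admissions/day, 4-day mean stay, turning a patient
    # away costs as much as 20 idle bed-days.
    lam, los = 5.0, 4.0
    c = best_bed_count(lam, los, cost_empty_bed=1.0, cost_turned_away=20.0)
    blocked = erlang_b(c, lam * los)
    empty_share = 1 - lam * los * (1 - blocked) / c
    print(f"{c} beds, blocking {blocked:.3f}, "
          f"{empty_share:.1%} of beds empty on average")
```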

In the last 10 years, bed management studies using operations research and queueing theory have gained momentum. In 2012, Hall [55] devoted a chapter of his Handbook of Healthcare System Scheduling to an overview of bed management from an operations research perspective. He defined the need for beds as a function of the population’s health and age, technology (e.g., the capacity to serve patients in an outpatient setting rather than hospitalize them), the efficiency and quality of serving patients, and, finally, efficiency in bed management (e.g., the capacity to coordinate and predict patient flow). The purpose of bed management, according to Hall, is to reduce the time during which beds are unoccupied and thus unproductive. He noted the importance of acknowledging that not all beds are alike and provided a classification of hospital beds according to their availability and purpose (adult/young care, surgical, etc.). Finally, he proposed several indices for measuring the performance of bed management processes, e.g., throughput per bed, waiting time for beds, and occupancy level.
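
Hall's indices can be computed directly from bed records. The sketch below assumes a hypothetical record format (bed request, admission, and discharge times in hours from the start of the reporting period) and one plausible definition of each index; Hall's own definitions may differ in detail.

```python
from dataclasses import dataclass


@dataclass
class Stay:
    bed_requested: float  # hours from the start of the reporting period
    admitted: float
    discharged: float


def bed_indices(stays, n_beds, period_hours):
    """Occupancy level, throughput per bed, and mean wait for a bed
    over the interval [0, period_hours]."""
    # Bed-hours actually occupied inside the period (stays clipped to it).
    occupied = sum(max(0.0, min(s.discharged, period_hours) - max(s.admitted, 0.0))
                   for s in stays)
    discharges = sum(1 for s in stays if 0.0 <= s.discharged <= period_hours)
    admitted = [s for s in stays if 0.0 <= s.admitted <= period_hours]
    waits = [s.admitted - s.bed_requested for s in admitted]
    return {
        "occupancy": occupied / (n_beds * period_hours),
        "throughput_per_bed": discharges / n_beds,
        "mean_wait_for_bed": sum(waits) / len(waits) if waits else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical 24-hour log for a three-bed unit.
    log = [Stay(0.0, 0.5, 9.0), Stay(1.0, 3.0, 20.0), Stay(8.0, 9.5, 30.0)]
    print(bed_indices(log, n_beds=3, period_hours=24.0))
```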

Recent operations-focused modeling studies of bed management include the work of Mackay et al. [56], who built a simulation model aimed at facilitating collaborative decision-making in hospitals with regard to patient flow and bed management. Tsai and Lin [57] proposed a multi-attribute value theory application for bed management using preference-based decision rules. Building on previous research in queueing theory, they developed and solved a mathematical programming model that used empirical data from EDs and took patient preferences into account. Their simulations suggested that bed assignment quality in the ED can be significantly improved in terms of resource utilization and patient satisfaction. More recently, Wu et al. [58] performed a simulation study of bed allocation aimed at reducing blocking probability in EDs, using a case study in China. They demonstrated that blocking probabilities can be significantly reduced under different priority assignments and total numbers of beds.

Wargon et al. [59] proposed a queueing model and a DES approach to optimize bed management in the ED, with the ultimate aim of reducing transfers from the ED to other institutions. Like Mackay et al. [56], they suggested that simulation is a powerful tool for bed management decision-making. Their model’s key benefit is its simplicity, which makes it very flexible and easy for ED staff to adapt to their needs. In 2015, Belciug and Gorunescu [60] proposed a complex “what-if analysis” of resource allocation by creating a framework that integrated (1) an M/PH/c/N queueing system (where N is the maximum number of patients in the ED); (2) a compartmental model; and (3) an evolution-based optimization approach. The efficiency of combining queueing theory with evolutionary optimization was demonstrated on the task of optimizing patient management and healthcare costs. They illustrated their model’s applicability using data from a geriatric department of a hospital in London, UK. Most recently, Folake et al. [61] analyzed the use of queueing models in healthcare with an emphasis on the accident and emergency department (AED) of a city hospital. They determined the optimal number of beds and the corresponding performance measures for improving patient flow.
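
The queueing component of such a framework can be illustrated with an M/M/c/N model, that is, the M/PH/c/N system with exponential rather than phase-type lengths of stay. The sketch computes the birth-death stationary distribution and derives the blocking probability, mean queue length, mean wait (via Little's law), and bed utilization. It omits Belciug and Gorunescu's compartmental model and evolutionary optimization entirely, and the example parameters are hypothetical.

```python
import math


def mmcn_metrics(lam, mu, c, n):
    """Steady-state measures of an M/M/c/N queue: c beds, at most n patients
    in the system, Poisson arrivals (rate lam) and exponential stays (rate mu)."""
    if n < c:
        raise ValueError("capacity n must be at least the number of beds c")
    a = lam / mu
    # Unnormalized birth-death probabilities for k = 0..n patients present.
    weights = [a**k / math.factorial(k) if k <= c
               else a**k / (math.factorial(c) * c ** (k - c))
               for k in range(n + 1)]
    total = sum(weights)
    p = [w / total for w in weights]
    blocking = p[n]  # an arrival finds the system full and is turned away
    mean_queue = sum((k - c) * pk for k, pk in enumerate(p) if k > c)
    accepted_rate = lam * (1 - blocking)
    return {
        "blocking": blocking,
        "mean_queue": mean_queue,
        "mean_wait": mean_queue / accepted_rate,  # Little's law
        "bed_utilization": sum(min(k, c) * pk for k, pk in enumerate(p)) / c,
    }


if __name__ == "__main__":
    # Hypothetical: 3 admissions/day, 10-day mean stay, 35 beds, room for 5 waiting.
    for name, value in mmcn_metrics(3.0, 0.1, 35, 40).items():
        print(f"{name}: {value:.4f}")
```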

As noted above and shown in Fig. 6, bed management was already being addressed before its formal definition in the 1990s, and interest in it has increased steadily since then. From 1980 through the first decade of the current millennium, researchers showed its importance in reducing crowding and waiting times and in improving the utilization of hospital resources. At the same time, a new stream of literature emerged to support this task: queueing models for bed management. Using theoretical models of increasing complexity, researchers modeled various real-life case studies and optimized them analytically, heuristically, and through simulation. These models remain in use, but because existing resources wear out and new or improved resources are introduced into hospitals, the models can still be improved and adapted to real-life scenarios. This could be done, for example, by dividing resources into servers and sub-servers (e.g., distinguishing beds from armchairs), and by applying the models to specific EDs with different settings.

4.3 Dynamic Resource Allocation

About two decades ago, researchers and practitioners began to propose flexibility in resources such as the number of beds (and the number of patients occupying them), nurses, physicians, and even patients as a means of reducing crowding in hospital departments. Baschung et al. [62], for example, described a “floating bed” concept, in which individual clinical departments have only a minimum number of fixed beds and the remaining beds are shared flexibly among departments. The method was implemented successfully in a Swiss hospital; however, the authors did not specify how it influenced ED processes. Notably, no further practical application of this specific method has been reported.

In 2005, Bard and Purnomo [63] designed a branch-and-price methodology for dynamic nurse scheduling based on fixed shifts and floating nurses assigned according to need. Their computational results showed that the daily adjustment problem can be solved efficiently for up to 200 nurses. Another example of the “floating nurse” method can be found in a 2012 study by Wang et al. [64]. The authors conducted a simulation-based study at a large community hospital and showed, among other findings, that adding a floating nurse can reduce LOS in the ED by 33%. Zlotnik et al. [65] used support vector regression and M5P trees to forecast ED visits and to derive dynamic nurse staffing allocations. Their results showed that ED visits can potentially be managed with dynamic nurse staff allocation, reducing the risk of understaffing and saving direct personnel costs. Bornemann-Shepherd et al. [66] aimed to improve safety as well as patient and staff satisfaction. They found a significant improvement in satisfaction after creating an inpatient unit within the ED, staffed by floating nurses from the hospitalization departments whenever ED crowding required it.

Tan et al. [67] considered another use of flexible resources: the dynamic allocation of physicians in the ED. They proposed an approach driven by both online and historical data and showed by simulation that it allows the ED to better cope with demand and the required service levels. In 2017, Bakker and Tsui [68] proposed a method for dynamically allocating specialists for efficient patient scheduling, using a data-driven approach and a DES. The authors showed that their method improves both patient service quality and waiting times without changing resource capacity. Addressing more conventional resources, Luscombe and Kozan [69] proposed a dynamic scheduling framework that provides real-time support for managing resources in the ED. The authors used parallel-machine and flexible job-shop formulations to schedule beds and allocate treatment-task resources dynamically. Their heuristic solution method was implemented as a dynamic algorithm that receives information about patient arrivals and treatment tasks and incorporates the new data into an efficient resource allocation schedule.
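The parallel-machine view of beds can be illustrated with a much simpler rule than the heuristic of Luscombe and Kozan [69]. The Python sketch below assumes identical beds and known treatment durations, and assigns each arriving patient, in arrival order, to the bed that becomes free earliest (a greedy list-scheduling rule, with ties broken by bed index). The function name and the example data are hypothetical.

```python
import heapq


def assign_beds(patients, n_beds):
    """Greedy online assignment of patients to identical parallel beds.

    patients: iterable of (patient_id, arrival_time, treatment_time).
    Returns a dict patient_id -> (bed, start_time, finish_time).
    """
    free_at = [(0.0, bed) for bed in range(n_beds)]  # (time bed frees up, bed id)
    heapq.heapify(free_at)
    schedule = {}
    for pid, arrival, duration in sorted(patients, key=lambda p: p[1]):
        bed_free, bed = heapq.heappop(free_at)  # bed that becomes available first
        start = max(arrival, bed_free)          # wait if no bed is free yet
        finish = start + duration
        schedule[pid] = (bed, start, finish)
        heapq.heappush(free_at, (finish, bed))
    return schedule
```

Because each decision uses only the patients seen so far, the rule can be run online as new arrivals are recorded. A real ED scheduler would also need to handle bed types, task precedence, and uncertain durations, which this sketch ignores.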

In 2015, Elalouf and Wachtel [70] proposed a novel definition of dynamic resource allocation, studying the use of dynamic patient allocation for the benefit of the ED and its patients. In this article, the authors proposed an algorithmic approach for optimizing the implementation of the so-called floating patient method and assessed the approach using empirical observations. They showed that the approach improved the balance of work rate and crowding between the ED and the other departments. In subsequent work, Elalouf and Wachtel [71] incorporated the method into an algorithm aimed at optimizing the scheduling of patient examinations, subject to a constraint on the maximal LOS allowed in the ED. In 2017, they analyzed a holistic approach to the dynamic patient allocation method that considered crowding in both the ED and other hospital departments [72]. They also considered how much information is available about the patient’s condition, along with other factors such as the condition’s severity and the effect of crowding on treatment time. They suggested that their tool can enhance decision-makers’ capacity to balance crowding in the ED with crowding in other hospitalization departments.

Unlike other fields, dynamic resource allocation is relatively new in EDs; research in this area has gained momentum only in the last decade. Although the field had not previously been studied in depth, and little research literature was therefore available, the term “floating,” applied to nurses and beds, was already familiar to ED teams and used in practice. The resources considered earlier were beds and nurses, as these were available and flexible enough for allocation. In the last decade, additional resources have been taken into account, as the need for limited-budget solutions has arisen and the quantity and availability of online data from hospital information systems have steadily increased. Thanks to this greater availability of information, these resources can now be managed in the same way as in other industries to improve the efficiency of the ED queueing system. As shown in Fig. 7, the sharp growth in the number of publications on dynamic allocation in EDs began in the second decade of the present century, driven by the increased availability of data and the possibility of “floating” physicians, specialists, and even patients themselves for the benefit of the system and patients. Future research may address these new areas and seek the best strategies for assigning such resources.

4.4 Grouping of Patients and Prioritization of Specific Groups

The concept of grouping and prioritizing patients in the hospital is not a new one. It entails giving shift managers/ED managers the ability to group patients with similar conditions together or prioritize specific treatments when resources are limited. The most widely known patient classification system is the diagnosis-related groups (DRG) system. This classification system was first introduced by McGuire [73] and has undergone considerable modifications over the years. Sanderson and Mountney [74] reviewed the concepts on which patient groups are based in order to identify means of better adapting healthcare grouping designs to the needs of the population. They emphasized the potential of appropriate group design to facilitate data analysis and, consequently, contribute to effective planning and management of healthcare resources. King et al. [75] applied lean thinking concepts to show the impact of streaming on waiting times and total length of stay in the ED. El-Darzi et al. [76] proposed a novel grouping methodology based on the premise that identifying groups of patients with common characteristics and detecting the workload associated with each group might help predict resource needs and improve resource utilization. The methodology involved grouping patients according to their LOS by fitting Gaussian mixture models to empirical LOS observations. Gorunescu et al. [77] further developed this method and used surgical data to assess the models they proposed. Xu et al. [78] proposed a method for grouping patients based on the medical procedures they require and compared several grouping techniques applied to real ED data.
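The LOS-based grouping idea of El-Darzi et al. [76] can be sketched as fitting a one-dimensional Gaussian mixture by expectation-maximization (EM) and then assigning each patient to the component with the highest posterior probability. The Python code below is a generic EM implementation written for illustration, not the authors’ code. The number of components, the deterministic quantile-based initialization, and the variance floor are assumptions.

```python
import math


def fit_gmm_1d(data, k=2, iters=500, tol=1e-9):
    """Fit a k-component 1-D Gaussian mixture to data by EM."""
    xs = sorted(data)
    n = len(xs)
    mean_all = sum(xs) / n
    var_all = sum((x - mean_all) ** 2 for x in xs) / n
    # Assumed initialization: component means at evenly spaced quantiles.
    means = [xs[int((j + 0.5) * n / k)] for j in range(k)]
    variances = [var_all] * k
    weights = [1.0 / k] * k
    prev_ll = -math.inf
    for _ in range(iters):
        # E-step: posterior probability (responsibility) of each component.
        resp, ll = [], 0.0
        for x in xs:
            dens = [w * math.exp(-(x - m) ** 2 / (2 * v)) / math.sqrt(2 * math.pi * v)
                    for w, m, v in zip(weights, means, variances)]
            total = max(sum(dens), 1e-300)  # guard against underflow
            ll += math.log(total)
            resp.append([d / total for d in dens])
        # M-step: re-estimate mixing weights, means, and variances.
        for j in range(k):
            nj = max(sum(r[j] for r in resp), 1e-12)
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            variances[j] = max(
                sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj, 1e-6)
        if ll - prev_ll < tol:  # log-likelihood has stopped improving
            break
        prev_ll = ll
    return weights, means, variances


def assign_group(x, weights, means, variances):
    """Index of the mixture component most likely to have generated x."""
    scores = [math.log(w) - 0.5 * math.log(2 * math.pi * v) - (x - m) ** 2 / (2 * v)
              for w, m, v in zip(weights, means, variances)]
    return scores.index(max(scores))
```

Each fitted component can then be read as a patient group with its own typical LOS, and its weight estimates the share of patients in that group. Choosing the number of components would normally be guided by a model-selection criterion, which the sketch omits.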

In recent years, a group at Pennsylvania State University has produced extensive research on methodologies for prioritizing ED patients, though not with a queueing-related focus. Claudio and Okudan [79], for example, used a hypothetical case study to investigate the use of multi-attribute utility theory in the prioritization of ED patients. Their method addressed cases in which several patients who present with the same acuity level but different vital signs must be prioritized, so that acuity alone does not settle the order of treatment. Fields et al. [80] subsequently studied the situation in which multiple decision-makers (e.g., nurses and other staff members) disagree on the priority rankings of different patients, known as the rank aggregation problem. Ashour and Okudan Kremer [81] developed an algorithm based on a management philosophy called Group Technology (GT), which is grounded in the premise that knowledge about groups leads to efficient problem-solving. The authors applied the algorithm to triage analysis in an ED system. In a subsequent study [82], they extended this idea to develop a Dynamic Grouping and Prioritization (DGP) algorithm that identifies the appropriate criteria for grouping patients and prioritizes them according to patient- and system-related information. In simulation, this approach outperformed a baseline grouping and prioritization method (based on the ESI) in terms of patients’ average LOS in the ED.
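A weighted additive utility function is the simplest form of multi-attribute utility theory and illustrates the general idea behind the approach of Claudio and Okudan [79], though not their elicited model. Among patients with the same acuity level, each vital sign is mapped to a score between 0 and 1, and the weighted sum determines the order of treatment. In the Python sketch below, the chosen vital signs, their normal and critical values, the linear single-attribute functions, and the weights are all hypothetical; in practice they would be elicited from clinicians.

```python
# Hypothetical attributes: (vital sign, normal value, critical value, weight).
# The weights sum to 1, as required for an additive utility model.
ATTRIBUTES = [
    ("heart_rate", 80.0, 150.0, 0.3),
    ("resp_rate", 16.0, 35.0, 0.3),
    ("spo2", 98.0, 85.0, 0.4),
]


def attribute_urgency(value, normal, critical):
    """Single-attribute score in [0, 1]: 0 at the normal value, 1 at or beyond critical."""
    score = (value - normal) / (critical - normal)
    return min(1.0, max(0.0, score))


def urgency(vitals):
    """Weighted additive aggregation of the single-attribute scores."""
    return sum(weight * attribute_urgency(vitals[name], normal, critical)
               for name, normal, critical, weight in ATTRIBUTES)


def prioritize(patients):
    """Order patients with the same triage level by decreasing urgency."""
    return sorted(patients, key=lambda p: urgency(p["vitals"]), reverse=True)
```

The linear scores penalize deviations in one direction only (for example, a low heart rate scores 0), a limitation a clinical model would need to address.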

Recently, several grouping and prioritization methods have emerged that explicitly address queueing problems in the ED. Tan et al. [83] proposed using a dynamic-priority queue to dispatch patients to consultations with doctors. They tested the proposed model using a simulation and observed that it effectively reduced patient LOS and improved patient flow. In 2019, Ding et al. [84] analyzed the patient routing behaviors of ED decision-makers in four EDs in metropolitan Vancouver. They proposed a general discrete choice framework, consistent with the queueing literature, as a tool for analyzing prioritization behaviors in multi-class queues. They observed that decision-makers in all four EDs applied a delay-dependent (dynamic) prioritization approach across different triage levels. In the same year, Zhang et al. [85] proposed a new patient queueing model with priority weights to optimize ED management and analyzed the impact of prioritization on the outpatient queueing system of an ED with limited medical resources.
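Delay-dependent prioritization of the kind Ding et al. [84] observed can be expressed with a linear accumulating-priority rule. Each waiting patient’s priority grows in proportion to the time waited, at a rate that is higher for more urgent triage levels, and a free clinician takes the patient with the highest accumulated priority. The Python sketch below is an illustration only: the accrual rates per triage level are hypothetical, and it is not the discrete choice model estimated by Ding et al.

```python
# Hypothetical priority accrual rates per triage level (1 = most urgent).
ACCRUAL_RATE = {1: 8.0, 2: 4.0, 3: 2.0, 4: 1.0, 5: 0.5}


def next_patient(waiting, now):
    """Select the waiting patient with the highest accumulated priority.

    waiting: list of (patient_id, triage_level, arrival_time).
    Priority grows linearly with time waited, faster for urgent levels.
    """
    def accumulated(patient):
        _, level, arrival = patient
        return ACCRUAL_RATE[level] * (now - arrival)

    return max(waiting, key=accumulated)
```

Suppose a triage-level-4 patient arrived at time 0 and a level-2 patient at time 50. At time 60, the long-waiting level-4 patient is selected (priority 60 versus 40), whereas at time 100 the level-2 patient is selected (200 versus 100). Delay dependence therefore keeps low-acuity patients from waiting indefinitely while still favoring urgent ones.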

Since it was first formally introduced, the concept of grouping patients has changed drastically. Although it is well established that efficient patient grouping can improve patient care and workload management in the ED, the optimal way to apply the concept has yet to be found. Since Gilboy et al. [25] developed the ESI in 1999, bringing the idea of grouping to EDs broadly, the number of studies addressing grouping and prioritization methods has increased significantly, particularly in recent years, as shown in Fig. 8. Grouping criteria have evolved from those of the early ESI, namely patient characteristics such as symptoms and risk situation, and now commonly involve the required medical procedures. Other streams of research have addressed the prioritization of patient groups using hospital-wide information systems and the real-life cases that can arise from such use. From the perspective of queueing theory, the field is still open for improvement, as it can readily be combined with other managerial methods under various definitions and models.

4.5 Fast-Track

The fast-track method is one of the best-known and most commonly used methods for directing patient flow in EDs. In effect, its premise is similar to that of the grouping and prioritization approach discussed above. It entails treating acutely ill patients separately from minimally ill patients in the ED, so that the latter can be seen and discharged rapidly. The fast-track method originated in EDs themselves during the 1980s. Researchers in medicine and management began to write about it in the late 1980s and 1990s, studying its usefulness and exploring means of improving its implementation. Meislin et al. [86], for example, tested a fast-track method for 10 weeks in a teaching hospital and showed that it decreased patient waiting times (compared with the hospital’s previous system) and increased patient satisfaction. In 1992, Wright et al. [87] reviewed a 1-year implementation of this method and reported high satisfaction levels among patients and medical staff. Cardello [88] studied the introduction of nurse practitioners to help reduce waiting times for ambulatory nonurgent cases in a fast-track program. Fernandes et al. [89] applied a managerial approach called Continuous Quality Improvement (CQI) to improve fast-track care in a hospital ED and observed that the approach reduced patient LOS. In further work [90], they explored the outcomes of their hospital’s fast-track approach and observed that (1) reducing patient LOS was associated with a decrease in the number of ED patients who left without seeing a physician, and (2) many patients leaving the ED without being seen had been classified as urgent (as opposed to nonurgent) at presentation. Cooke et al. [91] investigated the influence of a fast-track on waiting times in accident and emergency (A&E) departments in the UK. They found that it decreased the number of patients enduring long waits without delaying the care of those with more severe injuries.

Subsequent field studies on the implementation of the fast-track method include the work of Sanchez et al. [92], who studied the effect of opening a fast-track area in the ED of a US hospital. They concluded that the opening of the fast-track area improved ED effectiveness, as measured by a decrease in waiting times and LOS, without leading to deterioration in the quality of care provided, as measured by rates of mortality and revisits. Darrab et al. [93] examined the use of a fast-track method in a Canadian hospital; they showed that fast-tracking lower-acuity patients reduced overall LOS and the left-without-being-seen rate without affecting higher-acuity patients in the ED. A study by Nash et al. [94] evaluated the efficacy of a newly developed fast-track area in a university-affiliated ED and concluded that patients did move more quickly through the department after the fast-track unit was added. Combs et al. [95] evaluated the outcomes of adopting a fast-track approach in an Australian hospital. They determined that the fast-track reduced congestion in the ED waiting area and improved staff morale. Considine et al. [96], who also studied a fast-track program in Australia, concluded that the fast-track decreased LOS in the ED for non-admitted patients without compromising waiting times and LOS for other ED patients. Oredsson et al. [31], discussed above, carried out a systematic review of studies on interventions aimed at improving flow processes in EDs. They showed that introducing a fast-track for patients with less severe symptoms consistently results in shorter waiting times, shorter LOS, and fewer patients leaving without being seen.

Recent field studies evaluating adjustments to fast-track processes include a controlled study by White et al. [97], who showed that a Lean-based reorganization of fast-track process flow improved fast-track ED performance (in terms of LOS, percent of patients discharged within 1 h, and room use), without adding expense. Manno et al. [98] examined a fast-track system in which lower-acuity patients were streamed to specialized care areas and determined that they were seen quickly by specialists and safely discharged or admitted to the hospital without diverting resources from patients with high-acuity illness or injury.

During the 1990s, owing to advances in technology and processing power, many researchers began to use simulation models (discussed in detail in the section on scientific modeling) to evaluate the fast-track method and seek ways of improving its implementation. Kraitsik and Bossmeyer [99], for example, developed a simulation to evaluate the establishment of a fast-track lane adjacent to the main ED and a large-capacity lab to improve patient flow. McGuire [100] carried out a simulation study for improving patient flow in the ED of a specific hospital that had implemented a fast-track system. The simulations yielded several recommendations, including adding a registration clerk during peak hours, adding a holding area for waiting patients, extending the fast-track lane hours, and using more senior physicians instead of residents in the fast-track area. Garcia et al. [101] used a simulation approach to determine whether a specific hospital would benefit from implementing a fast-track method; they found that a fast-track system using a minimal number of resources would indeed greatly reduce patient waiting times. Kirtland et al. [102] examined 11 alternatives to improve patient flow in an ED in Maryland and found that the use of a fast-track method, combined with treating patients in the same place in which they wait and using point-of-care lab testing, could substantially reduce the average patient’s LOS. In the early 2000s, Sinreich and Marmor [103,104,105] developed simulation tools to assist ED decision-makers; these tools divided patients into categories, one of which was a “fast-track” category, and used these categories to predict patient LOS (see “Sect. 5.3” for additional details). Marmor et al. [106] also developed a methodology for ED design to help ED managers and used it to compare triage and fast-track operational models in different hospitals.
La and Jewkes [107] used DES to model an ED’s fast-track system and determined an optimal fast-track strategy to improve performance measures. They used real data to evaluate the effectiveness of several fast-track strategies within a hospital ED and showed practical implications for reducing patient wait times in EDs.

Additional queueing theory-based approaches to the analysis of fast-track applications include the works of Roche and Cochran in 2007 [108] and 2009 [109], who developed a queueing network model of a scenario in which acute patients are treated in specific areas of the ED and “fast-tracked” patients are flexible in terms of where in the ED they can be treated. Combined with operations research methods, their model can be used to derive recommendations for increasing ED utilization and minimizing the number of “walk-aways,” i.e., patients who leave without receiving treatment. Recently, Fitzgerald et al. [110] used a queue-based Monte Carlo analysis to support decision-making on implementing an ED fast-track. They extended the simple queueing model with a DES that enabled them to calculate waiting times. Their results indicated that implementing a fast-track can reduce patient waiting times without increasing demand for nursing resources.
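The kind of question studied by Fitzgerald et al. [110] can be illustrated with a small Monte Carlo experiment. The Python sketch below compares a pooled ED, in which three clinicians serve all patients first-come first-served, with a configuration in which one of the three is dedicated to a fast-track for low-acuity patients. All rates, the 60/40 acuity mix, and the exponential distributions are hypothetical. The sketch omits nursing and the other resources that a realistic model would include.

```python
import heapq
import random
from statistics import mean


def fcfs_waits(arrivals, services, n_servers):
    """Queueing delays in a first-come first-served multi-server queue.

    Patients, taken in arrival order, each go to the server that frees up first.
    """
    free_at = [0.0] * n_servers  # a list of equal values is already a valid heap
    waits = []
    for t, s in zip(arrivals, services):
        earliest = heapq.heappop(free_at)
        start = max(t, earliest)
        waits.append(start - t)
        heapq.heappush(free_at, start + s)
    return waits


def simulate(fast_track, n_patients=20000, seed=1):
    """Mean wait (hours) of low- and high-acuity patients under one configuration."""
    rng = random.Random(seed)
    t, patients = 0.0, []  # (arrival time, is_low_acuity, treatment time)
    for _ in range(n_patients):
        t += rng.expovariate(2.5)            # hypothetical: 2.5 arrivals per hour
        low = rng.random() < 0.6             # hypothetical: 60% low acuity
        mean_service = 0.3 if low else 1.5   # hypothetical mean treatment times
        patients.append((t, low, rng.expovariate(1 / mean_service)))
    if fast_track:
        low_p = [p for p in patients if p[1]]
        high_p = [p for p in patients if not p[1]]
        low_w = fcfs_waits([p[0] for p in low_p], [p[2] for p in low_p], 1)
        high_w = fcfs_waits([p[0] for p in high_p], [p[2] for p in high_p], 2)
    else:
        waits = fcfs_waits([p[0] for p in patients], [p[2] for p in patients], 3)
        low_w = [w for w, p in zip(waits, patients) if p[1]]
        high_w = [w for w, p in zip(waits, patients) if not p[1]]
    return mean(low_w), mean(high_w)
```

Calling `simulate(False)` and `simulate(True)` with the same seed feeds both configurations an identical patient stream (common random numbers). Differences in the returned mean waits therefore reflect the configuration rather than sampling noise in the arrivals. Whether the fast-track helps depends on the assumed mix and rates.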

In conclusion, the fast-track is one of the most intuitive methods for reducing the average LOS in the ED. It has therefore been studied extensively since the late 1980s, as shown in Fig. 9, and has proved very effective in terms of both LOS and patient satisfaction. Two major streams of literature on this method are clearly recognizable. The first comprises field studies, which are the most common because the fast-track method is applied in many places around the world and in many settings. The second is simulation-based research, which has grown sharply since the early 2000s with the increasing availability of data from hospital information systems. Although case studies of specific implementations are still common, most current research in the field is based on simulations whose effectiveness depends on the data available at the specific hospital or ED.

5 Scientific Modeling Methods

This section focuses on the diverse scientific modeling methods and approaches used to investigate ED applications of queueing-related problems in operations research. In 2010, Eitel et al. [111] described the ED as a service business and then discussed specific methods for improving ED quality and flow, such as triage, bedside registration, Lean and Six Sigma management methods, statistical forecasting, queueing systems, discrete-event simulation modeling, and others. In 2011, Wiler et al. [112] reviewed and compared modeling approaches for describing, and at times predicting, ED patient load and crowding, and evaluated their limitations. Saghafian et al. [113] reviewed many papers to demonstrate the contribution of operations research and management science to ED patient flow problems. More recently, Palmer et al. [114] performed a systematic literature review of operations research methods for modeling patient flow and outcomes within community healthcare. Ahsan et al. [115] reviewed various analytical models used to optimize ED resources for the improvement of patient flow and highlighted their benefits and limitations; the techniques they reviewed include agent-based modeling and simulation, discrete-event simulation, queueing models, simulation optimization, and mathematical modeling. In the present work, we build on the classification of Wiler et al. [112], presenting a similar one and expanding it to accommodate additional approaches and more recent research. The methods are presented in the following order: queueing, priority, and simulation models; statistical methods; and additional algorithms and computational methods.

The total number of relevant articles reviewed in this section is shown as a function of the year of publication in Fig. 10. Its growth in recent years underscores the interest in, and importance of, further research on and development of such modeling methods. Continuing technological development and the increasing availability of data, as mentioned in “Sect. 4.1,” are expected to change the way EDs (and healthcare systems) work and may therefore change the models needed.

5.1 Queueing Models

Computational queueing models arise directly from general queueing theory. Leading textbooks on the fundamentals of queueing theory and modeling include those of Gross et al. [116] and Bhat [117]. According to Green’s chapter (“Queueing Theory and Modeling”) [118] in the Handbook of Healthcare Delivery Systems: “A queueing model is a mathematical description of a queueing system which makes some specific assumptions about the probabilistic nature of the arrival and service processes, the number and type of servers, and the queue discipline and organization.” A basic queueing system is defined in the same chapter as “a service system where ‘customers’ arrive at a bank of ‘servers’ and require some service from one of them.” As noted in “Sect. 3” above, one of the first prominent works to be published in this field was Keller and Laughhunn’s [13] discussion of a queueing model addressing physician capacity in an outpatient clinic and its influence on clinic efficiency. A good review of queueing models in the field of healthcare may be found in the above chapter by Green [118]. Fomundam and Herrmann [119] also reviewed queueing models in healthcare systems, focusing on waiting time and utilization analysis, system design, and appointment systems.

Mehandiratta [120] later analyzed the theory and applications of queueing theory in healthcare organizations around the world and the benefits obtained from them. Palvannan and Teow [121] applied queueing theory to healthcare, using queueing models in two hospitals to estimate and analyze service capacity. Additional papers, such as that of Bain et al. [122] and more recent ones by Cho et al. [123], Bittencourt et al. [124], and Lin et al. [125], address the application of queueing theory and modeling to healthcare in general.

This area has been studied continually since the 1990s and has received particular attention during the last two decades, as shown in Fig. 11. Even before that, some works addressed queueing models specifically in the ED environment. One of the first was presented by Collings and Stoneman [19], who used an M/M/∞ queue with varying arrival and departure rates to study the influence of patient arrival rates on bed occupancy levels. However, the most significant works on ED queueing models began to appear around the beginning of the twenty-first century. Gorunescu et al. [53, 54], for example, used a queueing approach to model bed occupancy in EDs as well as in other hospital departments. In 2004, McManus et al. [126] constructed a queueing theory-based model for resource allocation in various hospital activities, including EDs, and validated it in an intensive care unit. Green et al. [127] evaluated the effectiveness of a queueing model in identifying ED staffing patterns that reduce the fraction of patients who leave the ED without being seen. De Bruin et al. [128] used a queueing model to analyze congestion in the flow of emergency cardiac patients and to allocate numbers of beds given a required service level (operationalized as the percentage of refused admissions). Creemers et al. [129] showed how queueing models can quantify the relationship between capacity utilization, waiting time, and patient service, thus enabling decision-makers to improve the performance of healthcare systems, including EDs. Mayhew and Smith [130] used a queueing model to analyze patient flow in accident and emergency departments and to evaluate the feasibility of government-imposed targets for treatment completion times. Laskowski et al. [131] used both agent-based and queueing models to investigate patient access and patient flow through EDs, with the aim of identifying means of reducing waiting times.
Further examples of the application of queueing models to ED systems and their improvement in the first decade of the twenty-first century can be found in the publications of Morton and Bevan [132], Au et al. [133], and Tseytlin [134].
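The M/M/∞ model with time-varying rates used by Collings and Stoneman [19] admits a simple description of mean bed occupancy. If arrivals follow a Poisson process with rate λ(t) and each patient stays an exponential time with rate μ, the expected occupancy m(t) satisfies m′(t) = λ(t) − μ·m(t). For a system that starts empty, the occupancy at time t is Poisson distributed with mean m(t). The Python sketch below integrates this equation with Euler’s method. The constant departure rate μ and the sinusoidal daily arrival pattern are assumptions for illustration; Collings and Stoneman also allowed departure rates to vary.

```python
import math


def mean_occupancy(arrival_rate, mu, t_end, dt=0.01, m0=0.0):
    """Euler integration of m'(t) = arrival_rate(t) - mu * m(t) (M_t/M/infinity queue).

    Returns a list of (time, expected number of occupied beds) pairs.
    """
    m, t = m0, 0.0
    path = [(t, m)]
    for _ in range(int(round(t_end / dt))):
        m += dt * (arrival_rate(t) - mu * m)
        t += dt
        path.append((t, m))
    return path


def daily_arrivals(t):
    """Hypothetical arrival rate (patients per hour) that peaks once every 24 hours."""
    return 5.0 + 2.0 * math.sin(2 * math.pi * t / 24.0)
```

For example, `mean_occupancy(daily_arrivals, mu=0.5, t_end=240.0)` traces the expected number of occupied beds over ten days with a mean stay of 2 hours, and the occupancy peak lags the arrival peak. With a constant arrival rate λ, the solution settles at λ/μ.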

More recently, Tan et al. [83] presented a hospital ED case in which the queueing process can be modeled as a time-varying M/M/s queue with re-entrant patients, and tested this model using simulation. Hagen et al. [135] dealt more specifically with priority queueing models applied to ICUs and EDs; these models deserve special attention and are discussed in a separate subsection. Wiler et al. [136] derived an ED patient flow model based on queueing theory that predicts the effects of various patient crowding scenarios on the number of patients who leave the ED without being seen. In a paper that also builds on earlier work by Yom-Tov from 2010 [138], Yom-Tov and Mandelbaum [137] analyzed a queueing model called Erlang-R, which helps answer questions such as how many servers (physicians/nurses) are required to achieve predetermined service levels. Batt and Terwiesch [139] reviewed a number of queueing models and focused on the so-called Erlang-A model when studying queue abandonment from an ED. Vass and Szabo [140] applied an M/M/3 queueing model to ED patient data collected over 1 year to evaluate the ED’s performance. Additional publications by Alavi-Moghaddam et al. [141], Cantero and Redondo [142], Mohseni [143], and Huang et al. [144] deal to some extent with the potential contribution of queueing theory and models to improving ED performance and are not discussed further here.

Rotich [145] used an M/M/s queueing model to determine the optimal waiting and service costs in a hospital ICU emergency service. Xu and Chan [146] used queueing models that accurately predict the number of patient arrivals to the ED in order to reduce ED waiting times via diversion, and analyzed the quality of those models. Jáuregui et al. [147] analyzed the emergency service of a public hospital in Mexico by applying the concepts and relations of queueing theory. They evaluated the minimum number of doctors needed to satisfy current and future emergency service demand under different scenarios and offered recommendations to the managerial staff. Recently, Hu et al. [2] evaluated the contributions of queueing theory to modeling EDs and assessed the strengths and limitations of this application. They concluded that queueing models tend to oversimplify operations but that combining them with simulation (discussed further in the subsection on simulation models) should be a powerful approach. Joseph [148] reviewed and analyzed the use and benefits of applying queueing theory and modeling to ED resources and operations in general.
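Staffing questions of the kind studied by Rotich [145] and Jáuregui et al. [147] are commonly answered with the Erlang C formula for the M/M/s queue. The Python sketch below computes the probability of waiting via the numerically stable Erlang B recursion, and the mean wait W_q = C(s, a)/(sμ − λ), where a = λ/μ is the offered load. It then finds the smallest number of physicians that meets a mean-wait target. It also finds the number that minimizes an assumed linear cost of staff plus patients waiting, using L_q = λW_q from Little’s law. The cost coefficients and example rates are hypothetical, and the cited studies’ exact cost models may differ.

```python
import math


def erlang_b(c, a):
    """Erlang B blocking probability via the stable recursion B_k = aB/(k + aB)."""
    b = 1.0
    for k in range(1, c + 1):
        b = a * b / (k + a * b)
    return b


def erlang_c(c, a):
    """Probability that an arrival must wait in an M/M/c queue with offered load a."""
    if a >= c:
        return 1.0
    b = erlang_b(c, a)
    rho = a / c
    return b / (1 - rho * (1 - b))


def mean_wait(lam, mu, c):
    """Mean time in queue W_q for M/M/c; infinite if the queue is unstable."""
    a = lam / mu
    if a >= c:
        return math.inf
    return erlang_c(c, a) / (c * mu - lam)


def min_servers(lam, mu, max_wait):
    """Smallest number of servers whose mean queueing delay is at most max_wait."""
    c = math.floor(lam / mu) + 1
    while mean_wait(lam, mu, c) > max_wait:
        c += 1
    return c


def cheapest_servers(lam, mu, server_cost, wait_cost, max_c=100):
    """Server count minimizing c * server_cost + wait_cost * L_q, with L_q = lam * W_q."""
    start = math.floor(lam / mu) + 1
    return min(range(start, max_c + 1),
               key=lambda c: c * server_cost + wait_cost * lam * mean_wait(lam, mu, c))
```

For instance, with 6 arrivals per hour and a mean treatment time of 40 minutes (μ = 1.5 per hour), `min_servers(6.0, 1.5, 0.25)` returns 6 physicians for a 15-minute mean-wait target. With the hypothetical costs `cheapest_servers(6.0, 1.5, 100.0, 50.0)`, the cheapest option is 5.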

Additional noteworthy queueing models include priority queueing models such as those of Siddharthan et al. [149] and more recent ones in the 2000s; they will be discussed separately in the following subsection.

5.2 Priority Models

Priority models (sometimes called priority queueing models) are a family of goal-oriented queueing models that deserve special mention. Here we address models that use pure queueing-theory formulations and definitions, as distinct from the grouping and prioritization field that arose in parallel (see “Sect. 4.4”). As shown in Fig. 12, this field is relatively new in the ED environment.

Green [150] referred to the triage system in an ED as a classic example of a priority queue. In their review of methodologies for modeling ED patient flow (discussed above), Wiler et al. [112] suggested that models based on priority principles are appropriate for ED settings, where acuity, in addition to the time of presentation, determines the order in which patients are served (i.e., the sickest patients are prioritized over less-acute patients). As early as 1996, Siddharthan et al. [149] classified patients into emergency and non-emergency care and provided evidence that non‐emergency patients contribute to lengthy delays in the ED for all classes of patients. They proposed a priority queueing model to reduce average waiting times. Two additional works by Au-Yeung et al. are of interest. In the first, from 2006 [151], they used simulation modeling to provide insights into the effects of prioritizing different classes of patients in a real accident and emergency unit in London, UK. In the second, from 2007 [152], they developed an Approximate Generating Function Analysis (AGFA) technique, tested it with different patient-handling priority schemes, and compared the AGFA moments with the results of a discrete-event simulation.
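For a single server, the mean waiting times in a non-preemptive priority queue have a classical closed form (Cobham’s formula), which shows how priority classes redistribute delay. Take classes k = 1, …, K, ordered from highest to lowest priority, with arrival rates λ_i and service-time moments E[S_i] and E[S_i²]. Let σ_k = Σ_{i≤k} λ_i E[S_i]. The mean wait of class k is then W_k = W_0 / ((1 − σ_{k−1})(1 − σ_k)), with W_0 = Σ_i λ_i E[S_i²]/2. The Python sketch below evaluates this formula. Treating the ED as a single server is a simplifying assumption, and this is not the specific model of Siddharthan et al. [149].

```python
def priority_waits(classes):
    """Mean queueing delay per class in a non-preemptive priority M/G/1 queue.

    classes: list of (arrival_rate, mean_service, second_moment_service),
             ordered from highest to lowest priority.
    """
    if sum(lam * es for lam, es, _ in classes) >= 1:
        raise ValueError("total utilization must be below 1")
    w0 = sum(lam * es2 for lam, _, es2 in classes) / 2  # mean residual work
    waits, sigma_prev = [], 0.0
    for lam, es, _ in classes:
        sigma = sigma_prev + lam * es  # load from this class and all higher ones
        waits.append(w0 / ((1 - sigma_prev) * (1 - sigma)))
        sigma_prev = sigma
    return waits
```

Consider two classes with identical exponential treatment times (mean 1 hour, so E[S²] = 2) and arrival rates of 0.2 and 0.5 per hour. The urgent class waits 0.875 hours on average and the non-urgent class 0.7/0.24 ≈ 2.92 hours. The arrival-weighted average equals the FCFS M/M/1 value 0.7/0.3 ≈ 2.33 hours: prioritization redistributes delay rather than removing it.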

Additional examples of studies using priority queueing models include the work of Laskowski et al. [131], who developed a framework of multiple-priority queue systems as a means of forecasting patient waiting times. Tan et al. [83] developed a dynamic-priority queue model in which the priority levels assigned to waiting patients in the ED change with staff availability. The authors modeled the queueing process as a time-varying M/M/s queue with re-entrant patients and tested it using simulation. Hagen et al. [135] used a priority queueing model to analyze the differences between prioritizing admissions by expected LOS and by patient severity. An interesting conclusion of this work was that prioritizing patients by severity considerably reduced delays for critical cases but increased the average waiting time across all patients. Lin et al. [153] developed a priority queueing model to describe the flow and waiting times of patients waiting for ED service and subsequently for hospitalization in an inpatient unit. The model assumed that emergency patients are characterized by different priority levels and incorporated priority-specific waiting-time targets.

More recently, De Boeck et al. [154] addressed the challenging task physicians face in prioritizing between boarding patients and patients currently under treatment in the ED. Their case study showed that system performance is optimized by a policy that gives priority to patients currently under treatment in the ED. In 2020, Hou and Zhao [155] presented a practical approach to estimating the waiting times of multi-class patients and applied it to reduce the waiting time of high-priority patients. Using a case study from an ED, they found that the proposed approach can efficiently prioritize patient flows to decrease waiting times.

5.3 Simulation Models

Simulation is a very popular approach for describing complex processes, and the ED environment is no exception. As Fig. 13 shows, this area has been well studied since the 1990s, with massive growth in the 2000s owing to the increasing availability of data and computational power. The figure shows a peak in the number of such studies at the end of the first decade of the present century. One possible explanation is that simulation has been used very effectively since then, which has somewhat reduced the demand for further theoretical research. A vast number of ED studies using computer simulations have been published over the years; we therefore review in detail only the most important ones.

Ladany and Turban [20] were among the first to use a queueing simulation model to provide a framework for the planning and staffing of an ED. They emphasized the benefits of a simulation approach as a means for ED managers to obtain information about costs, patient waiting times, and facility idleness. Saunders et al. [156] used simulation software to develop a computer simulation model of ED operations. The authors varied selected input data systematically to determine the effects of various (simulated) factors on patient throughput time, queue sizes, and resource utilization rates. Lopez-Valcarcel and Perez [157] argued that simulation is an effective tool for analyzing the complex problem of scheduling in the ED and that the results can be used to improve its quality of care and efficiency. In the same year, McGuire [100] also used simulation software to identify means of reducing patient LOS in EDs. Nearly a decade later, Samaha et al. [158] published a similar model, based on empirical data, that proved very helpful in the ED of their hospital. Ruohonen et al. [38] used a simulation approach (discussed previously in Sect. 4.1) to demonstrate the capacity of a team triage method to improve ED operations.

The increasing availability of powerful computers has led to the development and extensive use of discrete-event simulation (DES), a computerized simulation approach in which discontinuous systems are modeled as networks of interdependent discrete events. DES has proven effective for representing queueing systems in general and ED systems in particular, and it has become a preferred simulation method over the years. Indeed, a literature review by Günal and Pidd [159] noted that the number of studies applying DES to EDs has increased since the beginning of the twenty-first century.
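The core mechanism of DES, a time-ordered list of future events from which the simulation clock jumps directly to the next event, can be shown in a few lines. The sketch below simulates a single FCFS queue served by interchangeable physicians; it assumes Poisson arrivals and exponential treatment times and ignores triage, boarding, and shift changes, and all names are illustrative. Because these assumptions coincide with the M/M/s model, its output can be checked against the closed-form queueing result.

```python
import heapq
import random
from collections import deque

def simulate_ed(lam: float, mu: float, servers: int, n_patients: int, seed: int = 1) -> float:
    """Minimal discrete-event simulation of one FCFS queue with `servers` physicians.

    Returns the mean time patients wait between arrival and start of treatment.
    """
    rng = random.Random(seed)
    # Future event list: (event time, tie-breaking sequence number, event kind).
    events = [(rng.expovariate(lam), 0, "arrival")]
    seq = 1
    queue = deque()   # arrival times of patients waiting for a physician
    free = servers    # number of idle physicians
    arrivals = served = 0
    total_wait = 0.0
    while events:
        now, _, kind = heapq.heappop(events)  # advance the clock to the next event
        if kind == "arrival":
            arrivals += 1
            queue.append(now)
            if arrivals < n_patients:  # schedule the next arrival
                heapq.heappush(events, (now + rng.expovariate(lam), seq, "arrival"))
                seq += 1
        else:  # "departure": a physician finishes and becomes idle
            free += 1
        # Start treatment for waiting patients while physicians are idle.
        while free > 0 and queue:
            total_wait += now - queue.popleft()
            served += 1
            free -= 1
            heapq.heappush(events, (now + rng.expovariate(mu), seq, "departure"))
            seq += 1
    return total_wait / served

# Hypothetical rates per hour: with one physician this is an M/M/1 queue whose
# theoretical mean wait is rho / (mu - lam) = 0.5 / 0.5 = 1 hour.
print(simulate_ed(lam=0.5, mu=1.0, servers=1, n_patients=100_000))
```

The value of DES in the studies reviewed here comes from replacing these simplifying assumptions with empirical distributions, multiple patient routes, and resource rules that have no closed-form counterpart.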

Jun et al. [160] reviewed applications of DES modeling to healthcare clinics and systems (including EDs), primarily in the areas of patient flow and resource allocation. Connelly and Bair [37] explored the potential of DES methods as a tool for analyzing ED operations and illustrated this potential by comparing the efficacy of two alternative triage methods (as discussed above in Sect. 4.1). Komashie and Mousavi [161] also developed a DES approach to model the operations of an ED and used their model to provide ED managers of a London hospital with new insights and recommendations for system improvement. Duguay and Fatah [162] used Arena software to carry out a DES study of a Canadian ED in order to derive recommendations for reducing patient waiting times and improving overall service delivery and system throughput. Hoot et al. [163] drew on theoretical studies to develop a DES of patient flow and used it to forecast overcrowding in an ED in real time; they subsequently used observational data to validate the model’s forecasts [164]. Bair et al. [165] used a DES model of ED patient flow to investigate the effect of inpatient boarding on ED efficiency, measured by the US National Emergency Department Overcrowding Scale (NEDOCS) score and the rate of patients who leave without being seen. The results of their analysis helped to quantify the impact of patient boarding on ED crowding. Other papers applying DES to ED queueing problems were published in the same period, among them those of Brailsford and Hilton [166], Davies [167], Kolker [168], Khare et al. [169], and Fletcher and Worthington [170]. Between 2009 and 2011, Marmor et al. [171], Tseytlin [133], Marmor [172], and Zeltyn et al. [173], all members of the same research group, published works that develop DES modeling techniques and apply them to decision support for ED issues such as staffing.
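For readers unfamiliar with the NEDOCS score used as an outcome by Bair et al., the sketch below computes it from its linear formula. The coefficients are reproduced as they are commonly reported for the original NEDOCS instrument and should be checked against that source before any real use; the function and parameter names are our own, and the example inputs are hypothetical.

```python
def nedocs(total_patients: int, ed_beds: int, admits_in_ed: int, hospital_beds: int,
           respirators_in_ed: int, longest_admit_hours: float,
           last_bed_wait_hours: float) -> float:
    """NEDOCS crowding score (coefficients as commonly reported; verify before use)."""
    return (
        -20.0
        + 85.8 * total_patients / ed_beds       # ED census relative to ED beds
        + 600.0 * admits_in_ed / hospital_beds  # admitted patients held in the ED vs. hospital size
        + 13.4 * respirators_in_ed              # patients on respirators in the ED
        + 0.93 * longest_admit_hours            # longest wait of an admitted patient
        + 5.64 * last_bed_wait_hours            # wait of the last patient placed in a bed
    )

# Hypothetical snapshot: 50 patients in 40 ED beds, 10 boarders, 400-bed hospital.
print(nedocs(50, 40, 10, 400, 1, 6.0, 2.0))  # ≈ 132.51
```

Boarding enters this score through several terms at once (the number of admitted patients in the ED, the total ED census, and the longest admit time), which makes it sensitive to the boarding effects that Bair et al. studied.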

Lim et al. [174] used DES to model interactions between physicians and the “delegates” they supervise (such as residents, nurse practitioners, and assistants). They compared their model with a simulation model that did not incorporate such interactions and showed that the former produced recommendations yielding substantially better outcomes (e.g., in terms of patient LOS) than the latter. In the same year, La et al. [107] used DES to model an ED’s “fast-track” system to derive recommendations for improving performance measures. Hurwitz et al. [175] developed an event-driven, flexible simulation platform to quantify and manage ED crowding. In a further work [176], they demonstrated the ability of such a simulation model to recreate and predict site-specific patient flow in two very different EDs. El-Rifai et al. [177] focused on human resources organization in an ED and developed a stochastic optimization model for ED shift scheduling. They then evaluated the resulting personnel schedules with a DES model and performed numerical analyses with data from a French hospital to compare different personnel scheduling strategies. Ahalt et al. [178] used DES to address ED crowding, which occurs when the identified need for emergency services exceeds the resources available for patient care in the ED, the hospital, or both. Using data from a large academic hospital, they evaluated and compared three metrics commonly used in practice as indicators of future crowding. More recently, Gulhane [179] used DES to manage hospital queues and concluded that the simulation helped resolve the hospital’s queueing issues and led to modifications in its queueing. Castanheira-Pinto et al. [180] presented a methodology, based on DES, to assist the design process of an ED and tested it on the ED of a hospital in Portugal. The methodology proved very useful in determining an optimized operation for complex and non-linear systems.

Although DES has proven to be a beneficial simulation approach in ED contexts, several other simulation approaches have been used successfully in these settings and warrant mention here. Some of them are discussed by Mohiuddin et al. [181], who undertook a systematic review of the different computer simulation methods and their contribution to the analysis of patient flow within EDs in the UK. One of these is the system dynamics (SD) approach, which is used to understand the behavior of complex healthcare systems over time by capturing aggregate (rather than individual) patient flows. Brailsford et al. [182], for example, applied an SD simulation to a large, complex emergency care system in the UK city of Nottingham and used it to identify potential bottlenecks and points that might benefit from intervention. Thorwarth et al. [183] used a simulation model, built with structured modeling methods, to investigate flexible workload management in an ED. They used the results to derive a generalized analytic expression describing settings that lead to an unstable queueing system and degraded service quality, thus providing decision-makers with a tool for identifying and preventing such conditions.
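System dynamics replaces individual patients with aggregate stocks linked by flow rates that are integrated over time. The sketch below is a minimal stock-and-flow model with three stocks (patients waiting, in treatment, and boarding), solved with Euler steps. The structure, the first-order delays, the assumption that boarders keep occupying ED beds, and all parameter values are illustrative assumptions, not the model of Brailsford et al.

```python
def run_sd(hours: float, dt: float, arrivals_per_hour: float, capacity: float,
           tau_start: float, tau_treat: float, p_admit: float, tau_board: float):
    """Euler integration of a three-stock ED model; returns final (waiting, treating, boarding)."""
    waiting = treating = boarding = 0.0  # stocks, in patients
    for _ in range(int(round(hours / dt))):
        # Boarders keep occupying ED beds, so they reduce the beds free for new treatment.
        free_beds = max(capacity - treating - boarding, 0.0)
        starts = min(waiting, free_beds) / tau_start  # flow: waiting -> treatment
        completions = treating / tau_treat            # flow out of treatment
        board_exits = boarding / tau_board            # flow: boarding -> inpatient ward
        waiting += dt * (arrivals_per_hour - starts)
        treating += dt * (starts - completions)
        # A fraction p_admit of completions start boarding; the rest are discharged.
        boarding += dt * (p_admit * completions - board_exits)
    return waiting, treating, boarding

# Hypothetical ED: 10 arrivals/h, 40 beds, 2 h treatment, 20% admitted, 4 h boarding.
print(run_sd(200, 0.01, 10.0, 40, 0.25, 2.0, 0.2, 4.0))  # close to (2.5, 20.0, 8.0)
```

The equilibrium follows from flow balance: treatment starts must equal arrivals (10/h), so 10 × 2 = 20 patients are in treatment, 0.2 × 10 × 4 = 8 are boarding, and 10 × 0.25 = 2.5 are waiting. With 30 beds instead of 40, boarders and patients in treatment leave too few free beds for starts to keep pace with 10 arrivals per hour, and the waiting stock grows without bound: a bottleneck of the kind SD models are used to expose.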

In 2010, Van Sambeek et al. [184] carried out a literature review of decision-making models, classified by healthcare problem, and showed that 46% of the studies used computer simulation. Paul et al. [185] performed a systematic review of the ED simulation literature from 1970 to 2006 to highlight these studies’ contributions to the understanding of ED overcrowding and to discuss how simulation could be better used as a tool to address this problem. Their review did not include a more recent simulation technique: agent-based simulation (ABS), commonly used to model complex systems composed of interacting, autonomous “agents.” Laskowski and Mukhi [186] used this approach to simulate the performance of a stand-alone ED and of multiple interacting EDs across a Canadian city. Jones and Evans [187] used an agent-based approach to model the influence of ED physician scheduling on patient waiting times. Liu et al. [188] identified ABS modeling as an excellent tool for dealing with complex systems such as an ED and introduced a generalized agent-based computational model for simulating ED performance. More recently, Yousefi and Ferreira [189] combined a pure agent-based simulation with a group decision-making technique to improve the performance of an ED.
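The defining feature of ABS is that each entity carries its own state and decision rules. The toy, time-stepped sketch below illustrates this for an ED: patient agents have an acuity level and a personal patience threshold and leave without being seen once it is exceeded, while physician agents each pick the most urgent waiting patient when free. Every rule and parameter (one-minute steps, at most one arrival per minute, the uniform ranges for acuity, patience, and treatment time) is a hypothetical assumption chosen for illustration; published ABS models of EDs are considerably richer.

```python
import random
from dataclasses import dataclass
from typing import List

@dataclass
class Patient:
    acuity: int    # 1 = most urgent, 5 = least urgent (hypothetical 5-level scale)
    patience: int  # minutes this patient will wait before leaving unseen
    waited: int = 0

@dataclass
class Physician:
    busy_for: int = 0  # minutes remaining with the current patient

def run_abs(minutes: int, arrival_prob: float, n_physicians: int, seed: int = 0) -> dict:
    rng = random.Random(seed)
    waiting: List[Patient] = []
    doctors = [Physician() for _ in range(n_physicians)]
    stats = {"arrived": 0, "seen": 0, "left": 0}
    for _ in range(minutes):
        # Arrival: at most one new patient agent per minute.
        if rng.random() < arrival_prob:
            waiting.append(Patient(acuity=rng.randint(1, 5), patience=rng.randint(30, 240)))
            stats["arrived"] += 1
        # Physician agents: a free physician takes the most urgent patient,
        # breaking ties in favor of the one who has waited longest.
        for doc in doctors:
            if doc.busy_for > 0:
                doc.busy_for -= 1
            elif waiting:
                idx = min(range(len(waiting)),
                          key=lambda i: (waiting[i].acuity, -waiting[i].waited))
                waiting.pop(idx)
                doc.busy_for = rng.randint(10, 60)
                stats["seen"] += 1
        # Patient agents: each one waits another minute or leaves if out of patience.
        remaining = []
        for p in waiting:
            p.waited += 1
            if p.waited > p.patience:
                stats["left"] += 1
            else:
                remaining.append(p)
        waiting = remaining
    stats["waiting"] = len(waiting)
    return stats

# One simulated day with two physicians and about 9 arrivals per hour.
print(run_abs(24 * 60, 0.15, 2, seed=3))
```

Unlike the queue-based sketches, nothing here is specified as an aggregate rate: leaving without being seen emerges from individual patience thresholds interacting with the physicians' selection rule.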

Additional simulation studies of ED queueing use multiple simulation methods or combine simulation with a simple queueing model. The recent review by Hu et al. [2] provides a detailed discussion of such combinations and their advantages. To avoid model complexity and the need for extensive data collection, Ceglowski et al. [190] described a novel simulation approach that combines data mining with discrete-event simulation, providing insight into the complex relationship between patient urgency, treatment and disposal, and the occurrence of queues for treatment in the ED. Saghafian et al. [191] separated patients (and resources) into different streams and used various analytic and simulation models to show that this streaming policy can improve ED performance in some situations. Uriarte et al. [192] combined DES with multi-objective optimization to help decision-makers reduce ED patient LOS and waiting times. Fitzgerald et al. [110] supplemented DES with a queue-based simulation to demonstrate the impact of implementing a fast-track method in an ED on patient waiting times and service quality.
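Multi-objective approaches such as that of Uriarte et al. search for ED configurations that trade off several measures at once, for example mean LOS against mean waiting time. Their optimization procedure is not reproduced here; the sketch below shows only the Pareto-dominance filter underlying such trade-off analyses, applied to hypothetical (LOS, waiting time) results from simulated scenarios, where lower is better for both.

```python
from typing import Dict, List, Tuple

def pareto_front(results: Dict[str, Tuple[float, float]]) -> List[str]:
    """Return scenario names not dominated on (mean LOS, mean wait); lower is better."""
    front = []
    for name, (los, wait) in results.items():
        # A scenario is dominated if another is no worse on both objectives
        # and differs on at least one of them.
        dominated = any(
            o_los <= los and o_wait <= wait and (o_los, o_wait) != (los, wait)
            for other, (o_los, o_wait) in results.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front

# Hypothetical simulated scenarios: (mean LOS in hours, mean wait in hours).
scenarios = {"A": (5.0, 1.0), "B": (4.0, 2.0), "C": (5.5, 1.5)}
print(pareto_front(scenarios))  # ['A', 'B']  (C is worse than A on both measures)
```

Scenarios on the front represent genuine trade-offs; choosing among them is left to decision-makers, which is where such methods support rather than replace managerial judgment.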

Finally, a more recent literature review of simulation modeling applied to EDs by Salmon et al. [193], covering all English-language papers published since 2000, extended earlier reviews of ED simulation models such as the systematic review by Paul et al. [185]. They showed that most of the studies are academic, are based essentially on DES, and focus on capacity, process, and workforce issues at an operational level.

5.4 Statistical Methods (Regression Analysis and Forecasting)

Statistical methods provide robust mathematical tools for forecasting crowding in EDs. As with other methods applied to the ED environment, interest in this field has grown considerably during the present century, as shown in Fig. 14. As noted in a review by Wargon et al. [194], two of the most commonly used mathematical approaches for predicting patient attendance at EDs or walk-in clinics are regression analysis and time series analysis. (The authors noted that these models tend to perform well, with errors ranging from 4.2 to 14.4%.) Regression and time series analyses applied to ED issues are discussed comprehensively by Wiler et al. [112]. Jones et al. [195] give a thorough description of regression techniques for use in EDs, which we do not expand on here.
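As a minimal illustration of regression-based attendance forecasting, the sketch below regresses daily attendance on day-of-week indicator variables (with only indicators and no intercept, the least-squares coefficients reduce to the mean attendance for each weekday) and scores the forecast with the mean absolute percentage error (MAPE), one common way of expressing percentage forecast errors. The model form, the assumption that the series starts on a fixed weekday, and the data are hypothetical; the cited reviews describe far richer regression and time series models.

```python
from typing import List, Sequence

def fit_day_of_week(daily_counts: Sequence[float]) -> List[float]:
    """OLS on seven day-of-week indicators (no intercept): one mean per weekday.

    Assumes daily_counts[0] falls on weekday 0 and covers at least one full week.
    """
    sums, counts = [0.0] * 7, [0] * 7
    for i, y in enumerate(daily_counts):
        sums[i % 7] += y
        counts[i % 7] += 1
    return [s / c for s, c in zip(sums, counts)]

def forecast(coefs: List[float], start_index: int, horizon: int) -> List[float]:
    """Forecast `horizon` days starting at day number `start_index` of the series."""
    return [coefs[(start_index + h) % 7] for h in range(horizon)]

def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error, in percent (actual values must be nonzero)."""
    return 100.0 * sum(abs(a - p) / a for a, p in zip(actual, predicted)) / len(actual)

# Hypothetical history: four identical weeks of daily ED attendance.
history = [120, 100, 95, 98, 102, 110, 130] * 4
coefs = fit_day_of_week(history)
pred = forecast(coefs, start_index=len(history), horizon=7)
err = mape([126, 100, 95, 98, 102, 110, 130], pred)  # ≈ 0.68 (only day 1 is off, by 6 of 126)
print(err)
```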